Deep Learning Fundamentals

Preliminaries

Installation

Data Manipulation

Operators

import torch

x = torch.arange(12)  # a 1-D tensor holding 0..11

print(x.shape)

print(x.numel())  # numel: total number of elements

X = x.reshape(3, 4)

# torch.zeros((2, 3, 4))

# torch.ones((2,3,4))

# torch.randn(3, 4)  # random samples from the standard normal distribution

# torch.tensor([[2, 2], [3, 3]])

torch.exp(x)

x = torch.tensor([1.0, 2, 4, 8]) # x.shape = y.shape

y = torch.tensor([2, 2, 2, 2])

x + y, x - y, x * y, x / y, x ** y  # ** is the exponentiation operator

X = torch.arange(12, dtype=torch.float32).reshape(3, 4)

Y = torch.tensor([[2.0, 1, 4, 3], [1, 2, 3, 4], [4, 3, 2, 1]])

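torch.cat((X, Y), dim=0)  # concatenate along rows (axis 0): the result is 6 x 4

torch.cat((X, Y), dim=1)  # concatenate along columns (axis 1): the result is 3 x 8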

(X > Y)

X.sum(), X.mean()

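Broadcasting

Compare the two shapes axis by axis, starting from the last axis. Every pair of sizes must satisfy one of the following two conditions:

- the two sizes are equal

- one of the sizes is 1

a = torch.randn((3, 4, 5))

b = torch.randn((3, 1, 5))

# a + b  # broadcastable; the result has shape (3, 4, 5)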
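The rule above can be sketched without PyTorch. This is a minimal pure-Python illustration, not the library's implementation; the helper name `broadcast_shape` is made up for this note, and a missing leading axis is treated as size 1.

```python
from itertools import zip_longest

def broadcast_shape(shape_a, shape_b):
    """Return the broadcast result shape, or raise ValueError if incompatible."""
    result = []
    # Walk both shapes from the last axis backwards; a missing axis counts as 1.
    for da, db in zip_longest(reversed(shape_a), reversed(shape_b), fillvalue=1):
        if da == db or da == 1 or db == 1:
            # Keep the size that is not 1 (or the shared size when they are equal).
            result.append(db if da == 1 else da)
        else:
            raise ValueError(f"cannot broadcast {shape_a} with {shape_b}")
    return tuple(reversed(result))

print(broadcast_shape((3, 4, 5), (3, 1, 5)))  # (3, 4, 5)
```

Shapes such as (2, 3, 4) and (2, 4) fail the check because, counted from the end, 3 is compared with 2.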

Indexing and Slicing

X[-1], X[1:3]

X[0:2, :] = 12

X

Saving Memory

before = id(Y)

Y += X

print(id(Y) == before)

Y = Y + X

print(id(Y) == before)

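Reassigning with Y = Y + X allocates new memory for the result, which can be undesirable for two reasons:

- We do not want to allocate memory unnecessarily all the time. In machine learning we may have hundreds of megabytes of parameters and update all of them several times per second, so we usually want to perform these updates in place.

- If we do not update in place, other references still point to the old memory location, and parts of our code may inadvertently use stale parameters.

Z = torch.zeros_like(Y)  # same shape and dtype as Y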

print('id(Z):', id(Z))

Z[:] = X + Y

print('id(Z):', id(Z))

Z = X + Y

print('id(Z):', id(Z))

A = X.numpy()

B = torch.tensor(A)  # builds a new tensor by copying the data in A

C = B.numpy()

type(A), type(B), type(C)

a = torch.tensor([3.5])

a, a.item(), float(a), int(a)

Data Preprocessing

Reading the Dataset

import os

os.makedirs(os.path.join("..", 'data'), exist_ok=True)

data_file = os.path.join("..", 'data', 'house_tiny.csv')

with open(data_file, 'w') as f:
    f.write('NumRooms,Alley,Price\n')  # column names
    f.write('NA,Pave,127500\n')  # each row is one data example
    f.write('2,NA,106000\n')
    f.write('4,NA,178100\n')
    f.write('NA,NA,140000\n')

import pandas as pd

data = pd.read_csv(data_file)

data

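Handling Missing Values

inputs, outputs = data.iloc[:, 0:2], data.iloc[:, 2]

# numeric_only=True skips the string column Alley; recent pandas versions raise a TypeError without it
inputs = inputs.fillna(inputs.mean(numeric_only=True))

inputs = pd.get_dummies(inputs,
                        prefix="转换",
                        prefix_sep="*",
                        columns=["Alley"],
                        dummy_na=True)  # dummy_na defaults to False; True adds an indicator column for missing values, False ignores them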

print(type(inputs.values))

inputs

import torch

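# to_numpy(dtype=float) also converts the boolean dummy columns that newer pandas versions produce
X, y = torch.tensor(inputs.to_numpy(dtype=float)), torch.tensor(outputs.values)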

X, y

data.drop(data.columns[data.isnull().sum().argmax()], axis=1)  # argmax: integer position of the column with the most missing values

data.drop(data.isnull().sum().idxmax(), axis=1)  # idxmax: label of the column with the most missing values

Linear Algebra

Eigenvector: a vector whose direction is not changed by A

Difficult parts: https://courses.d2l.ai/zh-v2/assets/pdfs/part-0_6.pdf

https://courses.d2l.ai/zh-v2/assets/pdfs/part-0_7.pdf

Scalars, Vectors, Matrices

import torch

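# scalars

x = torch.tensor(3.0)

y = torch.tensor(2.0)

x + y, x * y, x / y, x ** y

# vectors

x = torch.arange(4)

print(x[3])  # a scalar (0-dimensional tensor)

len(x), x.shape

"""

The dimension of a vector or an axis refers to its length, i.e. the number of elements along it.

The dimension of a tensor refers to the number of axes it has. In this sense, the dimensionality of a given axis of a tensor is the length of that axis.

"""

# matrices

A = torch.arange(20).reshape(5, 4)

Tensors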

X = torch.arange(24).reshape(2, 3, 4)

# addition

Y = X.clone()

X, X + Y, X * Y, 3 * X  # X * Y is the elementwise (Hadamard) product

Reduction

A = torch.arange(24, dtype=torch.float32).reshape(2, 3, 4)

A, A.sum()

A_sum_axis2 = A.sum(axis=2)

A_sum_axis2, A_sum_axis2.shape

A_sum_axis1 = A.sum(axis=1)

A_sum_axis1, A_sum_axis1.shape, A.sum(axis=[0, 1, 2])

# A.mean(), A.sum() / A.numel()

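A.mean(axis=0), A.sum(axis=0) / A.shape[0]  # reducing over axis=i removes axis i

Non-Reduction Sum

Sometimes it is useful to keep the number of axes unchanged when computing a sum or mean.

A = torch.arange(20, dtype=torch.float32).reshape(5, 4)

sum_A = A.sum(axis=1, keepdim=True)  # shape (5, 1)

A / sum_A  # relies on broadcasting; useful later for standardization and normalization

A.cumsum(axis=0)  # cumulative sum down the rows (along axis 0)

Dot Products and Matrix-Vector Products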

# dot product

x = torch.arange(4, dtype=torch.float32)

y = torch.ones(4, dtype=torch.float32)

x, y, torch.dot(x, y), sum(x * y)

# mv: matrix-vector product

A.shape, x.shape, torch.mv(A, x)

# mm: matrix-matrix product

B = torch.ones(4, 3)

torch.mm(A, B)

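Norms

Norms are among the most useful operators in linear algebra. Informally, the norm of a vector tells us how big the vector is. The notion of size considered here concerns the magnitude of the components, not the dimensionality.

# L2 norm

u = torch.tensor([3.0, -4.0])

print(torch.norm(u))

# L1 norm

print(torch.abs(u).sum())

# Frobenius norm: the L2 norm of a matrix viewed as a flattened vector

torch.norm(torch.ones((4, 9)))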
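As a cross-check of the three norms above, here is a small pure-Python sketch that recomputes them from their definitions. The helper names are hypothetical and exist only for this note; PyTorch is not needed to run it.

```python
import math

def l1_norm(v):
    """Sum of absolute values."""
    return sum(abs(x) for x in v)

def l2_norm(v):
    """Square root of the sum of squares."""
    return math.sqrt(sum(x * x for x in v))

def frobenius_norm(m):
    """L2 norm of a matrix treated as one long vector."""
    return math.sqrt(sum(x * x for row in m for x in row))

u = [3.0, -4.0]
print(l1_norm(u), l2_norm(u))  # 7.0 5.0

ones = [[1.0] * 9 for _ in range(4)]
print(frobenius_norm(ones))  # 6.0
```

The 4 x 9 matrix of ones has 36 entries, so its Frobenius norm is the square root of 36.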

"""

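In deep learning we are often trying to solve optimization problems:

maximize the probability assigned to observed data;

minimize the distance between predictions and the ground-truth observations.

We represent items (such as words, products, or news articles) as vectors so that the distance between similar items is minimized

and the distance between dissimilar items is maximized.

Objectives, perhaps the most important component of a deep learning algorithm (besides the data), are often expressed as norms.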

"""

A = torch.arange(24, dtype=torch.float32).reshape(2, 3, 4)

A / A.sum(axis=1, keepdim=True)

# A / A.sum(axis=1)  # fails: shapes (2, 3, 4) and (2, 4) are not broadcastable

torch.linalg.norm(A)

B = torch.arange(24, dtype=torch.float32)

torch.norm(B)

Automatic Differentiation

Backpropagation

import torch

x = torch.arange(4.0)

x.requires_grad_(True)  # equivalent to x = torch.arange(4.0, requires_grad=True)

x.grad

y = 2 * torch.dot(x, x)

y.backward()

x.grad

x.grad.zero_()

y = x.sum()

y.backward()

x.grad

# Did not fully understand this at first (2023-01-20 10:52:13)

# Resolved (2023-01-20 11:01:13)

# https://blog.csdn.net/qq_38800089/article/details/118271097

# backpropagation for non-scalar variables

x.grad.zero_()

y = x * x

# equivalent to y.backward(torch.ones(len(x)))

y.sum().backward()

print(x.grad)

x.grad.zero_()

y = x * x

y.backward(torch.tensor([0.1, 0.2, 0.3, 0.4]))

x.grad
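What the `gradient` argument of `backward` does for a non-scalar `y` can be sketched by hand: it computes the vector-Jacobian product. The pure-Python function below is only an illustration for the special case y = x * x used above; the name `vjp_square` is made up for this note.

```python
def vjp_square(x, v):
    """For y_i = x_i**2 the Jacobian is diag(2 * x_i), so v^T J = [2 * x_i * v_i]."""
    return [2.0 * xi * vi for xi, vi in zip(x, v)]

x = [0.0, 1.0, 2.0, 3.0]

# A vector of ones reproduces the gradient of y.sum().
print(vjp_square(x, [1.0] * 4))  # [0.0, 2.0, 4.0, 6.0]

# Any other vector weights each component of y before summing.
weighted = vjp_square(x, [0.1, 0.2, 0.3, 0.4])
print(weighted)
```

With weights 0.1 to 0.4 the result is approximately [0, 0.4, 1.2, 2.4], which should match `x.grad` after `y.backward(torch.tensor([0.1, 0.2, 0.3, 0.4]))`.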

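Detaching Computation

# detach() returns a new tensor that is separated from the current computational graph.

# It still shares storage with the original variable; the only difference is that requires_grad is False,

# so no gradient is ever computed for it and it has no grad.

# y = x

# z = y * x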

x.grad.zero_()

y = x * x

u = y.detach()

z = u * x

z.sum().backward()

x.grad == u

x.grad.zero_()

y.sum().backward()

x.grad == 2 * x

Gradients of Python Control Flow

def f(a):
    b = a * 2
    while b.norm() < 1000:
        b = b * 2
    if b.sum() > 0:
        c = b
    else:
        c = 100 * b
    return c

a = torch.randn(size=(), requires_grad=True)

d = f(a)

d.backward()

a.grad == d / a
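Why `a.grad == d / a` holds: for a fixed input, f only multiplies a by constants, so f(a) = k * a for some k that depends on which branches were taken. The sketch below is a scalar pure-Python version of f (using `abs` in place of `norm`, an assumption for the scalar case) and checks the claim with a finite difference.

```python
def f(a):
    """Scalar version of the control-flow function: piecewise linear in a."""
    b = a * 2
    while abs(b) < 1000:
        b = b * 2
    return b if b > 0 else 100 * b

def numeric_grad(a, h=1e-6):
    """Central finite-difference estimate of df/da."""
    return (f(a + h) - f(a - h)) / (2 * h)

for a in (0.3, -0.3):
    print(a, numeric_grad(a), f(a) / a)
```

Note that a = 0 would loop forever, both here and in the tensor version, because doubling zero never reaches 1000.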

# In the control-flow example we computed the derivative of d with respect to a. What happens if a is changed to a random vector or matrix?

def f(a):
    b = a * 2
    while b.norm() < 1000:
        b = b * 2
    if b.sum() > 0:
        c = b
    else:
        c = 100 * b
    return c

a = torch.randn((2, 4), requires_grad=True, dtype=torch.float32)

d = f(a)

d.backward(torch.ones((2, 4)))  # d is no longer a scalar, so a gradient argument is required

# d.sum().backward()

a.grad

%matplotlib inline

import numpy as np

from matplotlib_inline import backend_inline

from d2l import torch as d2l

def use_svg_display():  #@save
    """Display plots in Jupyter using the SVG format."""
    backend_inline.set_matplotlib_formats('svg')

def set_figsize(figsize=(3.5, 2.5)):  #@save
    """Set the matplotlib figure size."""
    use_svg_display()
    d2l.plt.rcParams['figure.figsize'] = figsize

def set_axes(axes, xlabel, ylabel, xlim, ylim, xscale, yscale, legend):
    """Configure the matplotlib axes."""
    axes.set_xlabel(xlabel)
    axes.set_ylabel(ylabel)
    axes.set_xscale(xscale)
    axes.set_yscale(yscale)
    axes.set_xlim(xlim)
    axes.set_ylim(ylim)
    if legend:
        axes.legend(legend)
    axes.grid()

#@save
def plot(X, Y=None, xlabel=None, ylabel=None, legend=None, xlim=None,
         ylim=None, xscale='linear', yscale='linear',
         fmts=('-', 'm--', 'g-.', 'r:'), figsize=(3.5, 2.5), axes=None):
    """Plot data points."""
    if legend is None:
        legend = []
    set_figsize(figsize)
    axes = axes if axes else d2l.plt.gca()

    # Return True if X (a tensor or a list) has a single axis
    def has_one_axis(X):
        return (hasattr(X, "ndim") and X.ndim == 1 or isinstance(X, list)
                and not hasattr(X[0], "__len__"))

    if has_one_axis(X):
        X = [X]
    if Y is None:
        X, Y = [[]] * len(X), X
    elif has_one_axis(Y):
        Y = [Y]
    if len(X) != len(Y):
        X = X * len(Y)
    axes.cla()
    for x, y, fmt in zip(X, Y, fmts):
        if len(x):
            axes.plot(x, y, fmt)
        else:
            axes.plot(y, fmt)
    set_axes(axes, xlabel, ylabel, xlim, ylim, xscale, yscale, legend)

x = torch.arange(-1, 4, 0.1, requires_grad=True)

y = sum(torch.sin(x))

z = torch.sin(x)

y.backward()

x.grad

plot(x.detach().numpy(), [z.detach().numpy(), x.grad.detach().numpy()],

'x', 'f(x)', legend=['sin x ', 'grad'])

z.detach().numpy()

Probability

%matplotlib inline

import torch

from torch.distributions import multinomial

from d2l import torch as d2l

fair_probs = torch.ones([6]) / 6

# Store the results as 32-bit floats so they can be divided

counts = multinomial.Multinomial(1000, fair_probs).sample()

counts / 1000  # relative frequencies as the estimates

counts = multinomial.Multinomial(10, fair_probs).sample((500,))

cum_counts = counts.cumsum(dim=0)

estimates = cum_counts / cum_counts.sum(dim=1, keepdims=True)

d2l.set_figsize((6, 4.5))

for i in range(6):
    d2l.plt.plot(estimates[:, i].numpy(),
                 label=("P(die=" + str(i + 1) + ")"))

d2l.plt.axhline(y=0.167, color='black', linestyle='dashed')

d2l.plt.gca().set_xlabel('Groups of experiments')

d2l.plt.gca().set_ylabel('Estimated probability')

d2l.plt.legend();
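The same experiment can be reproduced without PyTorch. This is a minimal sketch using only the standard library; the seed and the number of rolls are arbitrary choices made for this note, so the exact estimates are not stated here.

```python
import random

rng = random.Random(0)  # fixed seed so the run is repeatable
n = 60000               # number of die rolls

counts = [0] * 6
for _ in range(n):
    counts[rng.randrange(6)] += 1  # each face has probability 1/6

# Relative frequencies estimate the probabilities and approach 1/6 as n grows.
estimates = [c / n for c in counts]
print(estimates)
```

With this many rolls every estimate should sit close to 0.167, the dashed line in the plot above.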

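2.6.5. Exercises: https://zh.d2l.ai/chapter_preliminaries/probability.html#id14

4. Markov chains: https://www.bilibili.com/video/BV19b4y127oZ/

Linear Neural Networks

A batch size that is too small gives noisy gradient estimates, which can actually benefit deep neural networks.

Newton's method: in machine learning and deep learning the second derivatives are expensive to compute, and the assumed model is not necessarily correct.

Linear Regression

Vectorization for Speed

# Vectorized code often yields order-of-magnitude speedups.

# In addition, we push more of the mathematics into the library and write fewer computations ourselves, reducing the chance of errors.

%matplotlib inline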

import math

import time

import numpy as np

import torch

from d2l import torch as d2l

n = 10000

a = torch.ones([n])

b = torch.ones([n])

class Timer:  #@save
    """Record multiple running times."""
    def __init__(self):
        self.times = []
        self.start()

    def start(self):
        """Start the timer."""
        self.tik = time.time()

    def stop(self):
        """Stop the timer and record the elapsed time in a list."""
        self.times.append(time.time() - self.tik)
        return self.times[-1]

    def avg(self):
        """Return the average time."""
        return sum(self.times) / len(self.times)

    def sum(self):
        """Return the sum of the times."""
        return sum(self.times)

    def cumsum(self):
        """Return the accumulated times."""
        return np.array(self.times).cumsum().tolist()

c = torch.zeros(n)

timer = Timer()

for i in range(n):
    c[i] = a[i] + b[i]

print(f'{timer.stop():.5f} sec')

timer.start()

d = a + b

print(f'{timer.stop():.5f} sec')

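Normal Distribution and Squared Loss (skipped)

Exercises

There are many of these I could not solve.

Linear Regression from Scratch

%matplotlib inline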

import random

import torch

from d2l import torch as d2l

def synthetic_data(w, b, num_examples):  #@save
    """Generate y = Xw + b + noise."""
    X = torch.normal(0, 1, (num_examples, len(w)))
    y = torch.matmul(X, w) + b
    y += torch.normal(0, 0.01, y.shape)
    return X, y.reshape((-1, 1))

true_w = torch.tensor([2, -3.4])

true_b = 4.2

features, labels = synthetic_data(true_w, true_b, 1000)

print('features:', features[0], '\nlabel:', labels[0])

d2l.set_figsize()

d2l.plt.scatter(features[:, 0].detach().numpy(), labels.detach().numpy(), 1);

Defining the Model

# read the data in minibatches
def data_iter(batch_size, features, labels):
    num_examples = len(features)
    indices = list(range(num_examples))
    random.shuffle(indices)  # visit the examples in random order
    for i in range(0, num_examples, batch_size):
        batch_indices = torch.tensor(
            indices[i: min(i + batch_size, num_examples)])
        yield features[batch_indices], labels[batch_indices]

# model
def linereg(X, w, b):
    return torch.matmul(X, w) + b

# loss
def squared_loss(y_pre, y):
    """Squared loss."""
    return (y_pre - y) ** 2 / 2
    # return (y_pre - y.reshape(y_pre.shape)) ** 2 / 2

# optimizer
def sgd(params, lr, batch_size):
    """Minibatch stochastic gradient descent."""
    with torch.no_grad():  # tensors computed inside this block have requires_grad=False
        for param in params:
            param -= lr * param.grad / batch_size
            param.grad.zero_()

# parameters
batch_size = 10
w = torch.normal(0, 0.01, size=(2, 1), requires_grad=True)
b = torch.zeros(1, requires_grad=True)

# training
lr = 0.03  # learning rate
num_epochs = 3
net = linereg
loss = squared_loss

for epoch in range(num_epochs):
    for X, y in data_iter(batch_size, features, labels):
        l = loss(net(X, w, b), y)
        l.sum().backward()
        sgd([w, b], lr, batch_size)
    with torch.no_grad():
        train_l = loss(net(features, w, b), labels)
        print(f'epoch {epoch + 1}, loss {float(train_l.mean()):f}')
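To see the update rule without autograd, here is a one-feature pure-Python sketch of the same minibatch SGD loop. It is an illustration under simplifying assumptions: a single weight, noise-free labels, and hand-derived gradients; the learning rate, batch size, and epoch count mirror the values above.

```python
import random

rng = random.Random(0)
true_w, true_b = 2.0, 4.2
xs = [rng.gauss(0, 1) for _ in range(1000)]
ys = [true_w * x + true_b for x in xs]  # noise-free labels (assumption of this sketch)

w, b, lr, batch_size = 0.0, 0.0, 0.03, 10
for epoch in range(3):
    indices = list(range(len(xs)))
    rng.shuffle(indices)  # new random order each epoch
    for start in range(0, len(indices), batch_size):
        batch = indices[start:start + batch_size]
        # Gradients of the batch mean of (w*x + b - y)**2 / 2
        grad_w = sum((w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
        grad_b = sum((w * xs[i] + b - ys[i]) for i in batch) / len(batch)
        w -= lr * grad_w
        b -= lr * grad_b
    print(f"epoch {epoch + 1}: w={w:.4f}, b={b:.4f}")
```

Dividing the summed gradient by the batch size here plays the same role as `param.grad / batch_size` in `sgd`.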

Concise Implementation of Linear Regression

import numpy as np

import torch

from torch.utils import data

from d2l import torch as d2l

true_w = torch.tensor([2, -3.4])

true_b = 4.2

features, labels = d2l.synthetic_data(true_w, true_b, 1000)

def load_array(data_arrays, batch_size, is_train=True):
    """Construct a PyTorch data iterator.

    :param data_arrays: tensors passed on to TensorDataset
    :param batch_size: number of examples per minibatch
    :param is_train: whether the data iterator should shuffle the data in each epoch
    :return: a DataLoader over the dataset
    """
    dataset = data.TensorDataset(*data_arrays)
    return data.DataLoader(dataset, batch_size, shuffle=is_train)

batch_size = 10

data_iter = load_array((features, labels), batch_size)

next(iter(data_iter))

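# define the model

"""

The Sequential class chains multiple layers together. Given input data, a Sequential instance passes it to the first layer,

then uses the output of the first layer as the input to the second layer, and so on.

In the example below our model contains only one layer, so Sequential is not really needed.

However, since almost all later models are multilayer, using Sequential here gets you familiar with the "standard pipeline".

"""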

from torch import nn

net = nn.Sequential(nn.Linear(2, 1))

"""

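Just as we specified the input and output sizes when constructing nn.Linear, we can now access the parameters directly to set their initial values.

We select the first layer of the network with net[0] and then use the weight.data and bias.data attributes to access the parameters.

We can also use the in-place methods normal_ and fill_ to overwrite the parameter values.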

"""

net[0].weight.data.normal_(0, 0.01)

net[0].bias.data.fill_(0)

"""

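The mean squared error is computed with the MSELoss class, also known as the squared L2 norm. By default it returns the average loss over all examples.

https://pytorch.org/docs/stable/nn.html#loss-functions

"""

# loss = nn.MSELoss(reduction='mean')  # 'mean' is already the default

loss = nn.SmoothL1Loss(reduction='mean')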

"""

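Minibatch stochastic gradient descent is a standard tool for optimizing neural networks, and PyTorch implements many variants of it in the optim module.

When we instantiate an SGD instance, we specify the parameters to optimize

(available from our model via net.parameters()) together with a dictionary of the hyperparameters the optimization algorithm needs.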

"""

trainer = torch.optim.SGD(net.parameters(), lr=0.03)

num_epochs = 3

for epoch in range(num_epochs):
    for X, y in data_iter:
        l = loss(net(X), y)
        # print(l)
        trainer.zero_grad()
        l.backward()
        trainer.step()
    l = loss(net(features), labels)
    print(f'epoch {epoch + 1}, loss {l:f}')

SmoothL1 is less sensitive to outliers than MSE and, in some cases, prevents exploding gradients.
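The difference shows up directly in the per-element formulas. The sketch below writes the Smooth L1 loss as documented for `nn.SmoothL1Loss` with its default `beta=1.0` and compares it with the squared error; the function names are made up for this note.

```python
def smooth_l1(diff, beta=1.0):
    """Quadratic near zero, linear for large errors."""
    d = abs(diff)
    return 0.5 * d * d / beta if d < beta else d - 0.5 * beta

def squared_error(diff):
    """Per-element squared error, as averaged by MSE."""
    return diff * diff

for diff in (0.5, 1.0, 10.0):
    print(diff, smooth_l1(diff), squared_error(diff))
```

For an error of 10 the squared error is 100 while Smooth L1 is 9.5, and the Smooth L1 gradient magnitude never exceeds 1, which is why a single outlier cannot dominate the update.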

# inspect the model's parameters and gradients
for name, parms in net.named_parameters():
    print('name:', name)
    print('para:', parms)
    print('requires_grad:', parms.requires_grad)
    print('grad_value:', parms.grad)

Classification: Softmax

The exercises are quite hardcore

https://zh.d2l.ai/chapter_linear-networks/softmax-regression.html

LogSumExp avoids overflow
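A minimal pure-Python sketch of the LogSumExp trick, assuming a single row of logits; the function names are illustrative. Subtracting the maximum before exponentiating keeps every exponent at or below zero, so `exp` cannot overflow.

```python
import math

def naive_softmax(logits):
    exps = [math.exp(z) for z in logits]  # overflows for large logits
    total = sum(exps)
    return [e / total for e in exps]

def log_sum_exp(logits):
    m = max(logits)
    # log(sum(exp(z))) = m + log(sum(exp(z - m)))
    return m + math.log(sum(math.exp(z - m) for z in logits))

def stable_softmax(logits):
    lse = log_sum_exp(logits)
    return [math.exp(z - lse) for z in logits]

try:
    naive_softmax([1000.0, 1000.0])
    naive_overflowed = False
except OverflowError:
    naive_overflowed = True

print(naive_overflowed)
print(stable_softmax([1000.0, 1000.0]))
```

The naive version fails on logits of 1000, while the stable version returns probabilities of about 0.5 each.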

Image Classification Dataset

%matplotlib inline

import torch

import torchvision

from torch.utils import data

from torchvision import transforms

from d2l import torch as d2l

d2l.use_svg_display()

# ToTensor converts the image data from PIL type to 32-bit floating point

# and divides by 255 so that all pixel values lie between 0 and 1

trans = transforms.ToTensor()

mnist_train = torchvision.datasets.FashionMNIST(

root="../data", train=True, transform=trans, download=True)

mnist_test = torchvision.datasets.FashionMNIST(

root="../data", train=False, transform=trans, download=True)

len(mnist_train), len(mnist_test)

def get_fashion_mnist_labels(labels):  #@save
    """Return the text labels of the Fashion-MNIST dataset."""
    text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
                   'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
    return [text_labels[int(i)] for i in labels]

def show_images(imgs, num_rows, num_cols, titles=None, scale=1.5):  #@save
    """Plot a list of images."""
    figsize = (num_cols * scale, num_rows * scale)
    _, axes = d2l.plt.subplots(num_rows, num_cols, figsize=figsize)
    axes = axes.flatten()
    for i, (ax, img) in enumerate(zip(axes, imgs)):
        if torch.is_tensor(img):
            # image tensor
            ax.imshow(img.numpy())
        else:
            # PIL image
            ax.imshow(img)
        ax.axes.get_xaxis().set_visible(False)
        ax.axes.get_yaxis().set_visible(False)
        if titles:
            ax.set_title(titles[i])
    return axes

X, y = next(iter(data.DataLoader(mnist_train, batch_size=18)))

show_images(X.reshape(18, 28, 28), 2, 9, titles=get_fashion_mnist_labels(y));

batch_size = 256

def get_dataloader_workers():  # honestly not very useful
    """Use 4 processes to read the data."""
    return 4

train_iter = data.DataLoader(mnist_train, batch_size, shuffle=True,
                             num_workers=get_dataloader_workers())

timer = d2l.Timer()
for X, y in train_iter:
    continue
f'{timer.stop():.2f} sec'

def load_data_fashion_mnist(batch_size, resize=None):  #@save
    """Download the Fashion-MNIST dataset and load it into memory."""
    trans = [transforms.ToTensor()]
    if resize:
        trans.insert(0, transforms.Resize(resize))
    trans = transforms.Compose(trans)
    mnist_train = torchvision.datasets.FashionMNIST(
        root="../data", train=True, transform=trans, download=True)
    mnist_test = torchvision.datasets.FashionMNIST(
        root="../data", train=False, transform=trans, download=True)
    return (data.DataLoader(mnist_train, batch_size, shuffle=True,
                            num_workers=get_dataloader_workers()),
            data.DataLoader(mnist_test, batch_size, shuffle=False,
                            num_workers=get_dataloader_workers()))

train_iter, test_iter = load_data_fashion_mnist(32, resize=64)
for X, y in train_iter:
    print(X.shape, X.dtype, y.shape, y.dtype)
    break

Softmax Regression from Scratch

import torch

from IPython import display

from d2l import torch as d2l

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

num_inputs = 784

num_outputs = 10

W = torch.normal(0, 1, size=(num_inputs, num_outputs), requires_grad=True)

b = torch.zeros(num_outputs, requires_grad=True)

# Many functions here return one value per example instead of the mean, because the last batch may have a different size;
# the mean is therefore taken once at the end.

def softmax(X):
    # exp overflows for large inputs and underflows for very negative ones
    X_exp = torch.exp(X)
    return X_exp / X_exp.sum(1, keepdim=True)  # broadcasting

def LogSumExp(X):
    b = X.max(axis=1, keepdim=True)[0]
    return b + torch.log(torch.exp(X - b).sum(axis=1, keepdim=True))

def softmax_plus(X):
    # numerically stable softmax
    return torch.exp(X - LogSumExp(X))

# def net(X):
#     """
#     torch.matmul multiplies tensors, and the inputs may be high-dimensional.
#     When both inputs are 2-D it is ordinary matrix multiplication, the same as torch.mm.
#     """
#     return softmax(torch.matmul(X, W) + b)

def net(X):
    return softmax(torch.matmul(X.reshape((-1, W.shape[0])), W) + b)

# def cross_entropy(y_pre, y):  # one-hot labels
#     # log(0) overflows, so the indexed form below is preferable
#     return -(y * torch.log(y_pre)).sum(1, keepdim=0)

def cross_entropy(y_pre, y):  # integer class labels
    # indexing with (range(len(y_pre)), y) picks y_pre[i, y[i]] for each row i
    return - torch.log(y_pre[range(len(y_pre)), y])
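The indexing in `cross_entropy` can be spelled out with plain lists. This is a sketch with made-up probabilities for two examples and three classes; it only illustrates which entry is selected for each row.

```python
import math

y_hat = [[0.1, 0.3, 0.6],   # predicted class probabilities, one row per example
         [0.3, 0.2, 0.5]]
y = [0, 2]                  # integer class labels

def cross_entropy(y_hat, y):
    """Pick the probability of the true class in each row and take -log."""
    return [-math.log(row[label]) for row, label in zip(y_hat, y)]

losses = cross_entropy(y_hat, y)
print(losses)
```

The first example contributes -log(0.1) and the second -log(0.5), i.e. roughly 2.30 and 0.69.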

def accuracy(y_pre, y):
    # the comparison yields a tensor, so convert the count to a float
    return float((y_pre.argmax(axis=1) == y).int().sum())

class Accumulator:
    """
    Accumulate sums over n variables.

    Accumulator is a utility class for summing several variables.
    In the evaluate_accuracy function below we create 2 variables in the Accumulator instance,
    storing the number of correct predictions and the total number of predictions.
    Both accumulate as we iterate over the dataset.
    """
    def __init__(self, n):
        self.data = [0.0] * n

    def add(self, *args):
        self.data = [a + float(b) for a, b in zip(self.data, args)]

    def reset(self):
        self.data = [0.0] * len(self.data)

    def __getitem__(self, idx):
        return self.data[idx]

def evaluate_accuracy(net, data_iter):  #@save
    """Compute the accuracy of the model on the given dataset."""
    if isinstance(net, torch.nn.Module):
        net.eval()  # set the model to evaluation mode
    metric = Accumulator(2)  # number of correct predictions, number of predictions
    with torch.no_grad():
        for X, y in data_iter:
            # print(accuracy(net(X), y),"++++", accuracy_(net(X), y))
            metric.add(accuracy(net(X), y), y.numel())
    return metric[0] / metric[1]

# x = torch.tensor([
#     [1, -1, 100, 0],
#     [4, 3, 2, 1]
# ])
# print(softmax_plus(x))
# softmax(x)

def train_epoch_ch3(net, train_iter, loss, updater):  #@save
    """Train the model for one epoch (defined in Chapter 3)."""
    # set the model to training mode
    if isinstance(net, torch.nn.Module):
        net.train()
    # sum of training loss, sum of training accuracy, number of examples
    metric = Accumulator(3)
    for X, y in train_iter:
        # compute the gradients and update the parameters
        y_hat = net(X)
        l = loss(y_hat, y)
        if isinstance(updater, torch.optim.Optimizer):
            # use PyTorch's built-in optimizer and loss function
            updater.zero_grad()
            l.mean().backward()
            updater.step()  # update the parameters from the stored gradients
        else:
            # use the custom optimizer and loss function
            l.sum().backward()
            updater(X.shape[0])
        metric.add(float(l.sum()), accuracy(y_hat, y), y.numel())
    # return the training loss and training accuracy
    return metric[0] / metric[2], metric[1] / metric[2]

class Animator:  #@save
    """Plot data in an animation (no need to understand this in detail)."""
    def __init__(self, xlabel=None, ylabel=None, legend=None, xlim=None,
                 ylim=None, xscale='linear', yscale='linear',
                 fmts=('-', 'm--', 'g-.', 'r:'), nrows=1, ncols=1,
                 figsize=(3.5, 2.5)):
        # draw multiple lines incrementally
        if legend is None:
            legend = []
        d2l.use_svg_display()
        self.fig, self.axes = d2l.plt.subplots(nrows, ncols, figsize=figsize)
        if nrows * ncols == 1:
            self.axes = [self.axes, ]
        # use a lambda function to capture the arguments
        self.config_axes = lambda: d2l.set_axes(
            self.axes[0], xlabel, ylabel, xlim, ylim, xscale, yscale, legend)
        self.X, self.Y, self.fmts = None, None, fmts

    def add(self, x, y):
        # add multiple data points to the figure
        if not hasattr(y, "__len__"):
            y = [y]
        n = len(y)
        if not hasattr(x, "__len__"):
            x = [x] * n
        if not self.X:
            self.X = [[] for _ in range(n)]
        if not self.Y:
            self.Y = [[] for _ in range(n)]
        for i, (a, b) in enumerate(zip(x, y)):
            if a is not None and b is not None:
                self.X[i].append(a)
                self.Y[i].append(b)
        self.axes[0].cla()
        for x, y, fmt in zip(self.X, self.Y, self.fmts):
            self.axes[0].plot(x, y, fmt)
        self.config_axes()
        display.display(self.fig)
        display.clear_output(wait=True)

def train_ch3(net, train_iter, test_iter, loss, num_epochs, updater):  #@save
    """Train a model (defined in Chapter 3)."""
    animator = Animator(xlabel='epoch', xlim=[1, num_epochs], ylim=[-0.1, 1],
                        legend=['train loss', 'train acc', 'test acc'])
    for epoch in range(num_epochs):
        train_metrics = train_epoch_ch3(net, train_iter, loss, updater)
        test_acc = evaluate_accuracy(net, test_iter)
        animator.add(epoch + 1, train_metrics + (test_acc,))
    train_loss, train_acc = train_metrics

lr = 0.1

def updater(batch_size):
    return d2l.sgd([W, b], lr, batch_size)

num_epochs = 10

# train_ch3(net, train_iter, test_iter, cross_entropy, num_epochs, updater)

Concise Implementation of Softmax Regression

import torch

from torch import nn

from d2l import torch as d2l

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

net = nn.Sequential(nn.Flatten(), nn.Linear(784, 10))  # Flatten turns each 1 x 28 x 28 image into a vector of 784 values

def init_weights(m):
    if type(m) == nn.Linear:
        nn.init.normal_(m.weight, std=0.01)

net.apply(init_weights)  # apply the initializer to every layer of the model

# loss
loss = nn.CrossEntropyLoss(reduction='none')

trainer = torch.optim.SGD(net.parameters(), lr=0.1, weight_decay=0.01)  # weight_decay adds L2 regularization

num_epochs = 10

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)

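Multilayer Perceptrons

Understanding activation functions in one article (Sigmoid/ReLU/LeakyReLU/PReLU/ELU)

The vanishing gradient problem and how to choose an activation function

An overview of weight initialization in neural networks: from the basics to Kaiming

Why add an activation function: without an activation function, or with a linear one, the output of a multilayer perceptron is still linear in the input. For two layers, o = W2 (W1 x) = (W2 W1) x, so a nonlinear activation function is needed.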
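The collapse of stacked linear layers can be checked numerically. The sketch below uses small integer matrices chosen arbitrarily for this note (biases are omitted for brevity) and plain-list helpers instead of tensors.

```python
def matmul(A, B):
    """Matrix product of two lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def matvec(A, x):
    """Matrix-vector product."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def relu(v):
    return [max(0, t) for t in v]

W1 = [[1, 2], [3, 4], [5, 6]]  # 2 inputs -> 3 hidden units
W2 = [[1, 0, -1], [2, 1, 0]]   # 3 hidden units -> 2 outputs
x = [1, -2]

two_layers = matvec(W2, matvec(W1, x))       # no activation in between
collapsed = matvec(matmul(W2, W1), x)        # a single equivalent linear layer
with_relu = matvec(W2, relu(matvec(W1, x)))  # nonlinearity breaks the equivalence

print(two_layers, collapsed, with_relu)  # [4, -11] [4, -11] [0, 0]
```

Without the activation the two layers are exactly one linear layer with weights W2 W1; inserting ReLU gives a different function.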

Choosing the number of layers and units per layer: start simple and add complexity gradually.

%matplotlib inline

import torch

from d2l import torch as d2l

x = torch.arange(-8.0, 8.0, 0.1, requires_grad=True)

y = torch.relu(x)

fig, axs = d2l.plt.subplots(2, 1)

d2l.plot(x.detach(), y.detach(), 'x', 'relu(x)', figsize=(4, 5), axes=axs[0])

y.backward(torch.ones_like(x), retain_graph=True)

d2l.plot(x.detach(), x.grad, 'x', 'grad of relu', figsize=(5, 2.5), axes=axs[1])

fig, axs = d2l.plt.subplots(2, 1)

y = torch.sigmoid(x)

d2l.plot(x.detach(), y.detach(), 'x', 'sigmoid(x)', figsize=(5, 6), axes=axs[0])

x.grad.data.zero_()

y.backward(torch.ones_like(x), retain_graph=True)

d2l.plot(x.detach(), x.grad, 'x', 'grad of sigmoid', figsize=(5, 5), axes=axs[1])

fig, axs = d2l.plt.subplots(2, 1)

y = torch.tanh(x)

d2l.plot(x.detach(), y.detach(), 'x', 'tanh(x)', figsize=(5, 5), axes=axs[0])

# clear the previous gradients

x.grad.data.zero_()

y.backward(torch.ones_like(x), retain_graph=True)

d2l.plot(x.detach(), x.grad, 'x', 'grad of tanh', figsize=(5, 2.5), axes=axs[1])

Multilayer Perceptrons from Scratch

import torch

from torch import nn

from d2l import torch as d2l

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

# parameters
num_inputs, num_outputs, num_hiddens = 784, 10, 256

W1 = nn.Parameter(torch.randn(
    num_inputs, num_hiddens, requires_grad=True) * 0.01)
b1 = nn.Parameter(torch.zeros(
    num_hiddens, requires_grad=True))
W2 = nn.Parameter(torch.randn(
    num_hiddens, num_outputs, requires_grad=True) * 0.01)
b2 = nn.Parameter(torch.zeros(num_outputs), requires_grad=True)

params = [W1, b1, W2, b2]

# activation function
def relu(X):
    return torch.max(X, torch.zeros_like(X))

# model
def net(X):
    X = X.reshape((-1, num_inputs))
    # H = torch.matmul(X, W1) + b1
    H = relu(X @ W1 + b1)  # "@" is matrix multiplication
    return (H @ W2 + b2)

# loss function
loss = nn.CrossEntropyLoss(reduction='none')  # do not change to 'mean': train_epoch_ch3 already calls l.mean() before backward

num_epochs, lr = 10, 0.1

updater = torch.optim.SGD(params, lr=lr, weight_decay=0.01)

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, updater)

Concise Implementation of Multilayer Perceptrons

import torch

from torch import nn

from d2l import torch as d2l

net = nn.Sequential(
    nn.Flatten(),
    nn.Linear(784, 128),
    nn.ReLU(),
    # nn.Sigmoid(),
    nn.Linear(128, 10)
)

def init_weight(m):
    if type(m) == nn.Linear:
        nn.init.normal_(m.weight, std=0.01)  # the choice of variance matters; covered later

net.apply(init_weight)

batch_size, lr, num_epochs = 256, 0.1, 10

loss = nn.CrossEntropyLoss(reduction='none')

trainer = torch.optim.SGD(net.parameters(), lr=lr, weight_decay=0.01)

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)

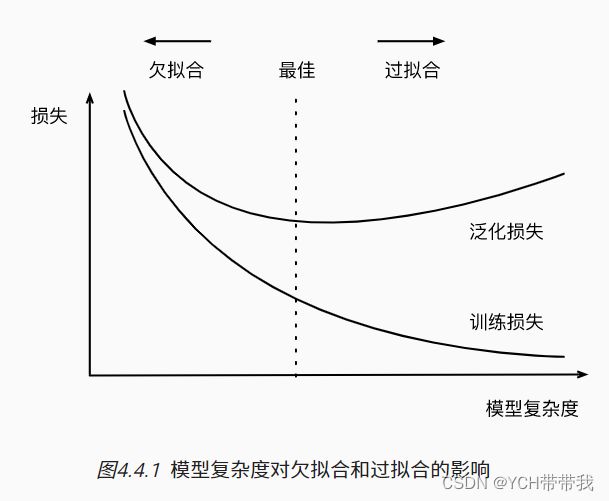

Model Selection, Underfitting, and Overfitting

import math

import numpy as np

import torch

from torch import nn

from d2l import torch as d2l

# strategy for generating the dataset
max_degree = 20  # maximum degree of the polynomial
n_train, n_test = 100, 100  # training and test set sizes
true_w = np.zeros(max_degree)  # allocate plenty of space
true_w[0:4] = np.array([5, 1.2, -3.4, 5.6])

features = np.random.normal(size=(n_train + n_test, 1))
np.random.shuffle(features)
# https://numpy.org/doc/stable/reference/generated/numpy.power.html
poly_features = np.power(features, np.arange(max_degree).reshape(1, -1))
for i in range(max_degree):
    # gamma(n) = (n-1)!, so each monomial x^i is rescaled by 1/i!
    poly_features[:, i] /= math.gamma(i + 1)
# shape of labels: (n_train + n_test,)
labels = np.dot(poly_features, true_w)
labels += np.random.normal(scale=0.1, size=labels.shape)

# convert the NumPy ndarrays to tensors
true_w, features, poly_features, labels = [torch.tensor(x, dtype=torch.float32)
                                           for x in [true_w, features, poly_features, labels]]

# Look at the first 2 examples; the first value of each row of poly_features is the constant feature corresponding to the bias.
features[:2], poly_features[:2, :], labels[:2]

def evaluate_loss(net, data_inter, loss):
    metric = d2l.Accumulator(2)  # sum of losses, number of examples
    for X, y in data_inter:
        y_pre = net(X)
        l = loss(y_pre, y.reshape(y_pre.shape))
        metric.add(l.sum(), len(l))
    return metric[0] / metric[1]

def load_array(data_arrays, batch_size, is_train=True):
    dataset = torch.utils.data.TensorDataset(*data_arrays)  # pairs up the tensors, similar to zip
    return torch.utils.data.DataLoader(dataset, batch_size, shuffle=is_train)

def train(train_features, test_features, train_labels, test_labels, num_epochs=400):
    inputs_shape = train_features.shape[-1]
    loss = torch.nn.MSELoss(reduction="none")
    net = torch.nn.Sequential(torch.nn.Linear(inputs_shape, 1, bias=False))
    batch_size = min(10, train_labels.shape[0])
    train_iter = load_array((train_features, train_labels.reshape(-1, 1)), batch_size)
    test_iter = load_array((test_features, test_labels.reshape(-1, 1)), batch_size, is_train=False)
    trainer = torch.optim.SGD(net.parameters(), lr=0.01)
    animator = d2l.Animator(xlabel='epoch', ylabel='loss', yscale='log',
                            xlim=[1, num_epochs], ylim=[1e-3, 1e2],
                            legend=['train', 'test'])
    for epoch in range(num_epochs):
        d2l.train_epoch_ch3(net, train_iter, loss, trainer)
        if epoch == 0 or (epoch + 1) % 20 == 0:
            animator.add(epoch + 1, (evaluate_loss(net, train_iter, loss),
                                     evaluate_loss(net, test_iter, loss)))
    print('weight:', net[0].weight.data.numpy())

# Third-order polynomial fitting (normal)
# Select the first 4 dimensions of the polynomial features, i.e. 1, x, x^2/2!, x^3/3!
train(poly_features[:n_train, :4], poly_features[n_train:, :4],
      labels[:n_train], labels[n_train:])

# Select the first 2 dimensions of the polynomial features, i.e. 1 and x
train(poly_features[:n_train, :2], poly_features[n_train:, :2],
      labels[:n_train], labels[n_train:])

# Select all dimensions of the polynomial features
train(poly_features[:n_train, :], poly_features[n_train:, :],
      labels[:n_train], labels[n_train:], num_epochs=1500)

Weight Decay

%matplotlib inline

import torch

from torch import nn

from d2l import torch as d2l

def synthetic_data(w, b, num_examples):
    """Generate y = Xw + b + noise.

    Defined in :numref:`sec_utils`"""
    X = d2l.normal(0, 1, (num_examples, len(w)))
    y = d2l.matmul(X, w) + b
    y += d2l.normal(0, 0.01, y.shape)
    return X, d2l.reshape(y, (-1, 1))

def load_array(data_arrays, batch_size, is_train=True):
    dataset = torch.utils.data.TensorDataset(*data_arrays)  # pairs up the tensors, similar to zip
    return torch.utils.data.DataLoader(dataset, batch_size, shuffle=is_train)

n_train, n_test, num_inputs, batch_size = 20, 100, 200, 5
true_w, true_b = torch.ones((num_inputs, 1)) * 0.01, 0.05
train_data = synthetic_data(true_w, true_b, n_train)
train_iter = load_array(train_data, batch_size)
test_data = synthetic_data(true_w, true_b, n_test)
test_iter = load_array(test_data, batch_size, is_train=False)

def init_params():
    w = torch.normal(0, 1, size=(num_inputs, 1), requires_grad=True)
    b = torch.zeros(1, requires_grad=True)
    return [w, b]

def l2_penalty(w):
    return torch.sum(w.pow(2)) / 2

def linreg(X, w, b):
    return torch.matmul(X, w) + b

def sgd(params, lr, batch_size):
    with torch.no_grad():
        for param in params:
            param -= lr * param.grad / batch_size
            param.grad.zero_()

def squared_loss(y_hat, y):
    return (y_hat - d2l.reshape(y, y_hat.shape)) ** 2 / 2

def train(lambd, nrows=1, ncols=1):
    w, b = init_params()
    net, loss = lambda X: linreg(X, w, b), squared_loss
    num_epochs, lr = 100, 0.003
    animator = d2l.Animator(xlabel='epochs', ylabel='loss-lambda=' + str(lambd), yscale='log',
                            xlim=[5, num_epochs], legend=['train', 'test'], nrows=nrows, ncols=ncols,
                            )
    for epoch in range(num_epochs):
        for X, y in train_iter:
            # add the L2 norm penalty term;
            # broadcasting turns l2_penalty(w) into a vector of length batch_size
            l = loss(net(X), y) + lambd * l2_penalty(w)
            l.sum().backward()
            sgd([w, b], lr, batch_size)
        if (epoch + 1) % 5 == 0:
            animator.add(epoch + 1, (d2l.evaluate_loss(net, train_iter, loss),
                                     d2l.evaluate_loss(net, test_iter, loss)))
    print('L2 norm of w:', torch.norm(w).item())

train(lambd=0)

train(lambd=3)

def train_concise(wd):
    net = nn.Sequential(nn.Linear(num_inputs, 1))
    for param in net.parameters():
        param.data.normal_()
    loss = nn.MSELoss(reduction="none")
    num_epochs, lr = 100, 0.003
    # the bias parameter is not decayed
    trainer = torch.optim.SGD([
        {"params": net[0].weight, 'weight_decay': wd},
        {"params": net[0].bias}], lr=lr
    )
    animator = d2l.Animator(xlabel='epochs', ylabel='loss', yscale='log',
                            xlim=[5, num_epochs], legend=['train', 'test'])
    for epoch in range(num_epochs):
        for X, y in train_iter:
            trainer.zero_grad()
            l = loss(net(X), y)
            l.mean().backward()
            trainer.step()
        if (epoch + 1) % 5 == 0:
            animator.add(epoch + 1,
                         (d2l.evaluate_loss(net, train_iter, loss),
                          d2l.evaluate_loss(net, test_iter, loss)))
    print('L2 norm of w:', net[0].weight.norm().item())

train_concise(0), train_concise(3)
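The from-scratch version adds a penalty to the loss while the concise version passes `weight_decay` to the optimizer. For plain SGD the two give the same update, which the following pure-Python sketch checks on made-up numbers; the function names and values are illustrative only.

```python
def step_with_penalty(w, grad_loss, lr, lambd):
    """SGD step on loss + lambd * sum(w_i**2) / 2; the penalty gradient is lambd * w_i."""
    return [wi - lr * (gi + lambd * wi) for wi, gi in zip(w, grad_loss)]

def step_with_decay(w, grad_loss, lr, lambd):
    """Shrink the weights by (1 - lr * lambd), then take the usual gradient step."""
    return [(1 - lr * lambd) * wi - lr * gi for wi, gi in zip(w, grad_loss)]

w = [0.5, -1.0, 2.0]
grad_loss = [0.1, 0.2, -0.3]  # gradient of the data loss only (made-up values)
lr, lambd = 0.003, 3.0

penalized = step_with_penalty(w, grad_loss, lr, lambd)
decayed = step_with_decay(w, grad_loss, lr, lambd)
print(penalized)
print(decayed)
```

Both lists agree up to floating-point rounding, which is why the technique is called weight decay: each step multiplies the weights by a factor slightly below 1.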

Dropout

import torch
from torch import nn
from d2l import torch as d2l

num_inputs, num_outputs, num_hiddens1, num_hiddens2 = 784, 10, 256, 256

dropput1, dropour2 = 0.2, 0.5

def dropout_layer(X, dropout):
    assert 0 <= dropout <= 1
    # in this case every element is dropped
    if dropout == 1:
        return torch.zeros_like(X)
    # in this case every element is kept
    if dropout == 0:
        return X
    mask = (torch.rand(X.shape) > dropout).float()  # False -> 0, True -> 1
    return mask * X / (1.0 - dropout)

class Net(nn.Module):
    def __init__(self, num_inputs, num_outputs, num_hiddens1, num_hiddens2, is_training=True):
        super(Net, self).__init__()
        self.num_inputs = num_inputs
        self.training = is_training
        self.lin1 = nn.Linear(num_inputs, num_hiddens1)
        self.lin2 = nn.Linear(num_hiddens1, num_hiddens2)
        self.lin3 = nn.Linear(num_hiddens2, num_outputs)
        self.relu = nn.ReLU()

    def forward(self, X):
        H1 = self.relu(self.lin1(X.reshape((-1, self.num_inputs))))
        # apply dropout only while training
        if self.training:
            H1 = dropout_layer(H1, dropput1)
        H2 = self.relu(self.lin2(H1))
        if self.training:
            H2 = dropout_layer(H2, dropour2)
        out = self.lin3(H2)
        return out

net = Net(num_inputs, num_outputs, num_hiddens1, num_hiddens2)

num_epochs, lr, batch_size = 10, 0.5, 256

loss = nn.CrossEntropyLoss(reduction='none')

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

trainer = torch.optim.SGD(net.parameters(), lr=lr)

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)
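Dividing the surviving activations by 1 - p keeps their expected value unchanged, which is why no rescaling is needed at test time. The pure-Python sketch below mirrors `dropout_layer` on a list of ones and checks the mean empirically; the seed and sample size are arbitrary choices for this note.

```python
import random

def dropout(values, p, rng):
    """Zero each value with probability p and scale the survivors by 1 / (1 - p)."""
    assert 0 <= p <= 1
    if p == 1:
        return [0.0 for _ in values]
    return [v / (1.0 - p) if rng.random() > p else 0.0 for v in values]

rng = random.Random(0)
x = [1.0] * 100000
out = dropout(x, 0.5, rng)

mean_out = sum(out) / len(out)
print(mean_out)  # close to 1.0, the mean of the input
```

With p = 0.5 every output is either 0 or 2, and about half of each occur, so the average stays near 1.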

Concise Implementation

dropput1, dropour2 = 0.2, 0.5

net = nn.Sequential(
    nn.Flatten(),
    nn.Linear(784, 256),
    nn.ReLU(),
    nn.Dropout(dropput1),
    nn.Linear(256, 256),
    nn.ReLU(),
    nn.Dropout(dropour2),
    nn.Linear(256, 10)  # Fashion-MNIST has 10 classes
)

def init_weights(m):
    if type(m) == nn.Linear:
        nn.init.normal_(m.weight, std=0.01)

net.apply(init_weights)

trainer = torch.optim.SGD(net.parameters(), lr=lr)

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)

dropput1, dropour2 = 0.5, 0.2  # swapped

net = nn.Sequential(
    nn.Flatten(),
    nn.Linear(784, 256),
    nn.ReLU(),
    nn.Dropout(dropput1),
    nn.Linear(256, 256),
    nn.ReLU(),
    nn.Dropout(dropour2),
    nn.Linear(256, 10)  # Fashion-MNIST has 10 classes
)

trainer = torch.optim.SGD(net.parameters(), lr=lr)

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)

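- What happens if you change the dropout probabilities of the first and second layers? In particular, what happens if you swap them?

My guess: the first layer of a neural network tends to extract lower-level information that contains more noise, so with a larger dropout rate more of what gets discarded is useless anyway.

- Using the model in this section as an example, compare the effects of dropout and weight decay. What happens when dropout and weight decay are used together? Are the results additive? Are there diminishing returns (or worse)? Do they cancel each other out?

Dropout adds noise to improve robustness; weight decay shrinks the parameter values to prevent overfitting.

- What happens if we apply dropout to the individual weights of the weight matrix rather than to the activations?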

数值稳定性和模型初始化

在此之前sigmoid函数学习可能导致梯度消失时和ReLU梯度爆炸参考了一下知识

梯度消失问题与如何选择激活函数

神经网络中的权重初始化一览:从基础到Kaiming

保证梯度在合理范围内:

- 将乘法变加法 ResNet LSTM

- 归一化 - 梯度归一化, 梯度裁剪

- 合理的权重初始和激活函数

目标让每一个方差是一个常数:

- 将每层的输出和梯度都看作随机变量

- 让他们的均值(常为0)和方差都保持一致

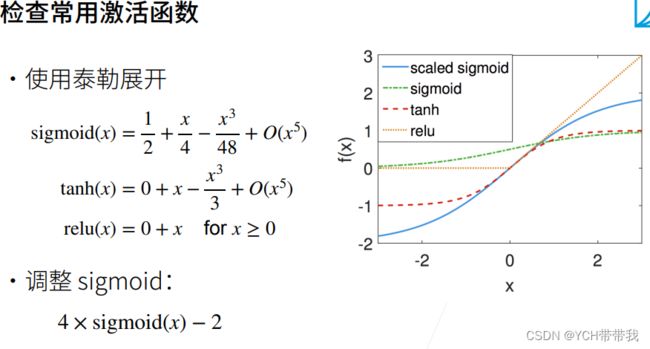

Xavier初始化尽量使每一层初始化参数方差相等

对于常用激活函数来讲,sigmoid在0点小范围内均值为1/2(泰勒展开),需要调整,而relu和tanh比较好
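As a rough numerical illustration of the Xavier idea, the sketch below samples weights from U(-a, a) with a = sqrt(6 / (fan_in + fan_out)) and compares their empirical variance with the target 2 / (fan_in + fan_out). The bound is the commonly cited Xavier (Glorot) uniform formula, which these notes do not spell out, so treat it as an assumption; the layer sizes and seed are arbitrary.

```python
import math
import random

def xavier_uniform_bound(fan_in, fan_out):
    """Half-width a of the uniform distribution U(-a, a)."""
    return math.sqrt(6.0 / (fan_in + fan_out))

fan_in, fan_out = 256, 128
a = xavier_uniform_bound(fan_in, fan_out)

rng = random.Random(0)
weights = [rng.uniform(-a, a) for _ in range(fan_in * fan_out)]

# The mean is zero by symmetry, so the variance is the mean of the squares.
sample_var = sum(w * w for w in weights) / len(weights)
target_var = 2.0 / (fan_in + fan_out)  # a**2 / 3 for U(-a, a)
print(sample_var, target_var)
```

The target variance is a compromise between 1 / fan_in, which preserves the variance of the forward outputs, and 1 / fan_out, which preserves the variance of the backward gradients.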

Vanishing and Exploding Gradients

%matplotlib inline

import torch

from d2l import torch as d2l

x = torch.arange(-8.0, 8.0, 0.1, requires_grad=True)

y = torch.sigmoid(x)

y.backward(torch.ones_like(x))

d2l.plot(x.detach().numpy(), [y.detach().numpy(), x.grad.numpy()],

legend=['sigmoid', 'gradient'], figsize=(4.5, 2.5))

Parameter Initialization

A summary of parameter initialization methods in PyTorch