Towards Real-Time Multi-Object Tracking(JDE)

本文我们接着对多目标追踪(MOT)领域常见的模型和算法进行学习,这次的主角是JDE,JDE可不是一个模型的名字,而是一类追踪算法的总称,全称叫做

Jointly learns the Detector and Embedding model (JDE)

什么意思呢?我们之前讨论的一些多目标追踪模型,比如SORT和DeepSORT,都是2015-2018年常见的MOT范式,也就是tracking by detection 。

该类范式因为通俗易懂,且表现出了不俗的追踪精度,在2015年到2018年,一度成为MOT的主流范式。该范式首先通过检测器(detector)检测出画面中物体所在的检测框,然后根据物体检测框移动的规律(运动特征)和检测框中物体的外观特征(通常通过一个ReID网络抽取一个低维的向量,叫做embedding向量)来进行前后帧同一物体的匹配,从而实现多目标追踪。

该类范式将MOT分为了两步,即

- 物体检测

- 特征提取与物体关联

该类方法检测与特征提取是分开的,所以又被称为SDE

Separate Detection and Embedding (SDE)

SDE存在的最大缺点就是速度慢,因为将物体检测和(外观)特征提取分开,检测速度自然就下去了。

那么作者考虑到这点不足,提出了JDE范式。论文和代码链接如下:

论文:Towards Real-Time Multi-Object Tracking

代码:Zhongdao/Towards-Realtime-MOT

1 JDE产生背景

其实,作者有对比了前面方法:

1)、SDE(Separate Detection and Embedding)就是两阶段法。检测器和Reid模块是独立开来的,先检测后识别(感觉思想和Fast RCNN很像),这些方法需要分为两部分操作,两部分不相互干扰,精度高,但是耗时长。

2)、作者这里的two-stage实际上应该也是一种端到端的方法,只是不是利用检测的最后的结果,而是利用两阶段检测法中FPN生成的框来做Embedding,这样做可以贡献部分特征,减少计算量。但是二阶段法不来就不是很快,所以整体速度依然达不到实时。

2 JDE的网络结构和损失函数

既然作者提到该方法是基于One-stage检测器学习到物体的embedding的(代码中采用的是经典的YOLO V3模型)。那么JDE范式就应该在检测器的输出(head),多输出一个分支用来学习物体的embedding的。

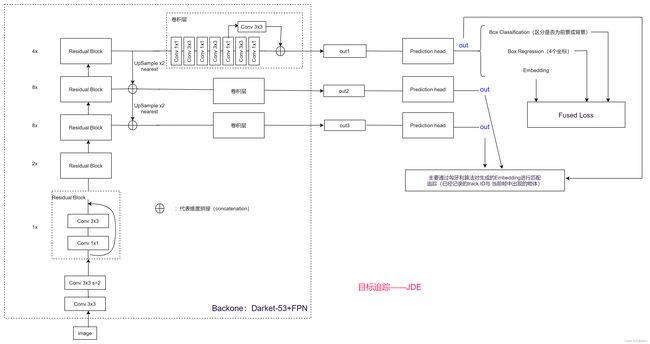

果不其然,作者就是采用了这种思路。原论文中给出结构图如下

这个结构图勾勒了作者大致的想法,在Prediction head中多出来了一个分支用于输出embedding。然后使用一个**多任务学习(multi-task learning)**的思路设置损失函数。看的时候觉得如此简单,但是深思了下,发现问题没有这么简单。

我根据源码自己又画了一个图:

第一个问题就是embedding要怎么学出来?

我们知道,理想情况下同一物体在不同的帧中,被同一跟踪标签锁定(即拥有同一track ID)。我们知道的信息就只有他们的标签索引(同一物体的track ID一致,不同物体的track ID不一样)。

那么网络在训练的过程中,应该需要对embedding进行转化,转化为足够强的语义信息,也就是这个embedding可以轻松的区分检测出来的目标属于哪个track ID的物体,那么这种就需要借鉴物体分类的思路了(将每个track ID当作一个类别),所以作者引入了全连接层将embedding信息转化为track ID分类信息。

第二个问题就是这个全连结层的输出节点到底是多少?

因为刚才我们提到,要将每个track ID当作一个类别,但是这个track ID的数量十分庞大,甚至不可计数。这个输出节点应该如何设置呢?看了一圈代码,代码中设置了14455个输出节点,设置依据为训练集的总的Track ID数量。

那么实际上作者的结构图是进行了省略的,我们不妨手动补全。

注意:在Test的时候实际上是没有Embedding到14455的映射的,prediction head几乎没起什么作用

这样的话就没什么大的疑问了。有关YOLO V3的结构,熟悉目标检测的都不会陌生,我们这里忽略FPN网络的结构定义,直接看predicition head的代码部分,确定上面的分析是合理的。

该predicition head的代码定义在model.py文件下的 YOLOLayer类中。定义如下:

class YOLOLayer(nn.Module):

def __init__(self, anchors, nC, nID, nE, img_size, yolo_layer):

super(YOLOLayer, self).__init__()

self.layer = yolo_layer

nA = len(anchors)

self.anchors = torch.FloatTensor(anchors)

self.nA = nA # number of anchors (4)

print('nA',nA)

self.nC = nC # number of classes (1)

print('nC', nC)

self.nID = nID # number of identities, 14455

print('nID', nID)

self.img_size = 0

self.emb_dim = nE # 512

print('nE', nE)

self.shift = [1, 3, 5]

self.SmoothL1Loss = nn.SmoothL1Loss() # for bounding box regression

self.SoftmaxLoss = nn.CrossEntropyLoss(ignore_index=-1) # foreground and background classification

self.CrossEntropyLoss = nn.CrossEntropyLoss()

self.IDLoss = nn.CrossEntropyLoss(ignore_index=-1) # loss of embedding

self.s_c = nn.Parameter(-4.15*torch.ones(1)) # -4.15

self.s_r = nn.Parameter(-4.85*torch.ones(1)) # -4.85

self.s_id = nn.Parameter(-2.3*torch.ones(1)) # -2.3

self.emb_scale = math.sqrt(2) * math.log(self.nID-1) if self.nID>1 else 1

作者简单定义了一些参数和损失函数类型。我已经将一些参数的设定值标注在后面,便于大家理解。作者定义了四个损失函数,但是只用到了三个,分别为

- self.SmoothL1Loss 用于检测框的回归

- self.SoftmaxLoss 用于前景和背景分类

- self.IDLoss 用于计算embedding的损失

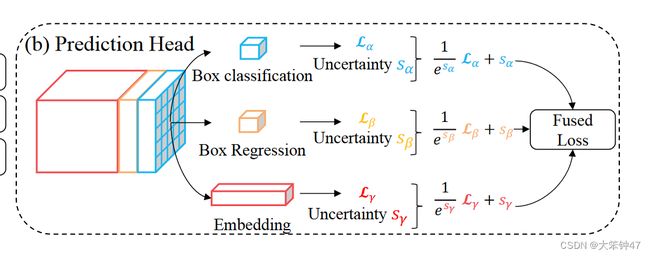

当然了,作者在论文中提到,JDE是一个多任务的学习,所以在计算损失函数的时候,需要采用任务独立不确定性(task-independent uncertainty)的自动学习方案进行以上三个损失函数的求和,有关参数在上述代码中已经定义,分别为

- self.s_c

- self.s_r

- self.s_id

这在上图中也有体现。该部分的核心代码如下

def forward(self, p_cat, img_size, targets=None, classifier=None, test_emb=False):

p, p_emb = p_cat[:, :24, ...], p_cat[:, 24:, ...]

nB, nGh, nGw = p.shape[0], p.shape[-2], p.shape[-1]

if self.img_size != img_size:

create_grids(self, img_size, nGh, nGw)

if p.is_cuda:

self.grid_xy = self.grid_xy.cuda()

self.anchor_wh = self.anchor_wh.cuda()

p = p.view(nB, self.nA, self.nC + 5, nGh, nGw).permute(0, 1, 3, 4, 2).contiguous() # prediction

p_emb = p_emb.permute(0,2,3,1).contiguous()

p_box = p[..., :4]

p_conf = p[..., 4:6].permute(0, 4, 1, 2, 3) # Conf

# Training

if targets is not None:

if test_emb:

tconf, tbox, tids = build_targets_max(targets, self.anchor_vec.cuda(), self.nA, self.nC, nGh, nGw)

else:

tconf, tbox, tids = build_targets_thres(targets, self.anchor_vec.cuda(), self.nA, self.nC, nGh, nGw)

tconf, tbox, tids = tconf.cuda(), tbox.cuda(), tids.cuda()

mask = tconf > 0

# Compute losses

nT = sum([len(x) for x in targets]) # number of targets

nM = mask.sum().float() # number of anchors (assigned to targets)

nP = torch.ones_like(mask).sum().float()

if nM > 0:

lbox = self.SmoothL1Loss(p_box[mask], tbox[mask])

else:

FT = torch.cuda.FloatTensor if p_conf.is_cuda else torch.FloatTensor

lbox, lconf = FT([0]), FT([0])

lconf = self.SoftmaxLoss(p_conf, tconf)

lid = torch.Tensor(1).fill_(0).squeeze().cuda()

emb_mask,_ = mask.max(1)

# For convenience we use max(1) to decide the id, TODO: more reseanable strategy

tids,_ = tids.max(1)

tids = tids[emb_mask]

embedding = p_emb[emb_mask].contiguous()

embedding = self.emb_scale * F.normalize(embedding)

nI = emb_mask.sum().float()

if test_emb:

if np.prod(embedding.shape)==0 or np.prod(tids.shape) == 0:

return torch.zeros(0, self.emb_dim+1).cuda()

emb_and_gt = torch.cat([embedding, tids.float()], dim=1)

return emb_and_gt

if len(embedding) > 1:

logits = classifier(embedding).contiguous()

lid = self.IDLoss(logits, tids.squeeze())

# Sum loss components

loss = torch.exp(-self.s_r)*lbox + torch.exp(-self.s_c)*lconf + torch.exp(-self.s_id)*lid + \

(self.s_r + self.s_c + self.s_id)

loss *= 0.5

return loss, loss.item(), lbox.item(), lconf.item(), lid.item(), nT

else:

p_conf = torch.softmax(p_conf, dim=1)[:,1,...].unsqueeze(-1)

p_emb = F.normalize(p_emb.unsqueeze(1).repeat(1,self.nA,1,1,1).contiguous(), dim=-1)

#p_emb_up = F.normalize(shift_tensor_vertically(p_emb, -self.shift[self.layer]), dim=-1)

#p_emb_down = F.normalize(shift_tensor_vertically(p_emb, self.shift[self.layer]), dim=-1)

p_cls = torch.zeros(nB,self.nA,nGh,nGw,1).cuda() # Temp

p = torch.cat([p_box, p_conf, p_cls, p_emb], dim=-1)

#p = torch.cat([p_box, p_conf, p_cls, p_emb, p_emb_up, p_emb_down], dim=-1)

p[..., :4] = decode_delta_map(p[..., :4], self.anchor_vec.to(p))

p[..., :4] *= self.stride

return p.view(nB, -1, p.shape[-1])

稍微有点冗长,我们来一一分析。

(1)前向预测信息的划分

首先作者将输出特征图划分为三部分,分别为

- 包含embedding信息的p_emb

- 包含检测框位置信息的p_box

- 包含前景背景分类置信度的p_conf

p_emb = p_emb.permute(0,2,3,1).contiguous()

p_box = p[..., :4]

p_conf = p[..., 4:6].permute(0, 4, 1, 2, 3) # Conf

这对应上图中的

(2)监督信息的划分

其次,作者从真实的标签信息中抽取各自的监督信息,分别为

# Training

if targets is not None:

if test_emb:

tconf, tbox, tids = build_targets_max(targets, self.anchor_vec.cuda(), self.nA, self.nC, nGh, nGw)

else:

tconf, tbox, tids = build_targets_thres(targets, self.anchor_vec.cuda(), self.nA, self.nC, nGh, nGw)

tconf, tbox, tids = tconf.cuda(), tbox.cuda(), tids.cuda()

mask = tconf > 0

因为我们知道,想要高效地进行损失函数求解,最好将监督信息对应到网络输出格式。其中tconf=1代表的是anchor中与GroundTruth目标物体最接近的框。注意在Test的时候,是需要对网络输出的框进行NMS(非极大值抑制)来避免重复框的。

(3)检测框回归损失和前景背景分类损失

接着作者计算检测框回归损失和前景背景分类损失。

# Compute losses

nT = sum([len(x) for x in targets]) # number of targets

nM = mask.sum().float() # number of anchors (assigned to targets)

nP = torch.ones_like(mask).sum().float()

if nM > 0:

lbox = self.SmoothL1Loss(p_box[mask], tbox[mask])

else:

FT = torch.cuda.FloatTensor if p_conf.is_cuda else torch.FloatTensor

lbox, lconf = FT([0]), FT([0])

lconf = self.SoftmaxLoss(p_conf, tconf)

lid = torch.Tensor(1).fill_(0).squeeze().cuda()

emb_mask,_ = mask.max(1)

这里值得注意的是mask的设置。作者将被分配为目标(targets)的anchor设置mask,那么只考虑被分配为targets的anchor对应位置上的值来计算损失函数。

(4)embedding损失的计算

接着作者计算embedding损失,值得注意的是,在该过程中作者采用了前面提到的全连结层来获得embedding的高级语义信息(track ID)。然后使用常见用于分类任务的交叉熵损失函数。

具体代码如下:

# For convenience we use max(1) to decide the id, TODO: more reseanable strategy

tids,_ = tids.max(1)

tids = tids[emb_mask]

embedding = p_emb[emb_mask].contiguous()

embedding = self.emb_scale * F.normalize(embedding)

nI = emb_mask.sum().float()

if test_emb:

if np.prod(embedding.shape)==0 or np.prod(tids.shape) == 0:

return torch.zeros(0, self.emb_dim+1).cuda()

emb_and_gt = torch.cat([embedding, tids.float()], dim=1)

return emb_and_gt

if len(embedding) > 1:

logits = classifier(embedding).contiguous()

lid = self.IDLoss(logits, tids.squeeze())

(5)总损失函数

最后,作者按照公式

对上面的三个损失进行求和。实现代码如下

loss = torch.exp(-self.s_r)*lbox + torch.exp(-self.s_c)*lconf + torch.exp(-self.s_id)*lid + \

(self.s_r + self.s_c + self.s_id)

loss *= 0.5

至此,有关predicition head的部分就讲解结束了。JDE的主体部分就介绍完了,其他细节,大家可以看一下原论文和代码,进行探索。

3 匹配

1.如何匹配

匹配是根据prediction head输出的embedding来进行匹配的。

2.变量说明

Tracks:表示一个被记录的物体(instance,同一个人)

Detections:表示当前帧中检测的是前景(比如人)的物体(会有对应的边框坐标和embedding)

不同状态的Tracks会有四个序列来分别进行存储:

-

Activated:表示当前帧中出现某个Tracks记录的人,则Tracks状态变为Activated

-

Refined:处于Lost状态的Tracks记录的人出现在当前帧

-

Lost:处于Lost状态的Tracks,但是并没有被删除(Remove)

-

Removed(删除):剔除序列的Tracks

3.匹配细则

当下一帧来到时,如何在处于Activated状态中的Tracks找到与其匹配的detection:

-

对处于Activated的Tracks,使用卡尔曼滤波预测物体当前帧的位置。

-

通过余弦相似性来计算Activated Tracks与Detections之间的appearance affinity matrix AE;通过马氏距离来计算Activated Tracks与Detections之间的motion affinity matrix AM。综合AE和AM得到最终的Cost Matrix,通过使用匈牙利算法来根据cost matrix进行Track与detection间的最佳匹配。

-

对于匹配到的且处于Activated 状态的Tracks,状态依旧处于Activated;对于新的detections,则新建一个处于Activated的Track;对于处于Lost状态的Tracks将重新处于Refined状态。

-

对于匹配失败的Track,则采用IOU距离度量指标重新进行匹配。

-

在IOU距离匹配下:匹配成功的Tracks处于Activated状态,失败的处于Lost状态。

关于匹配的详细代码:

class JDETracker(object):

def __init__(self, opt, frame_rate=30):

self.opt = opt

self.model = Darknet(opt.cfg, nID=14455)

# load_darknet_weights(self.model, opt.weights)

self.model.load_state_dict(torch.load(opt.weights, map_location='cpu')['model'], strict=False)

self.model.cuda().eval()

self.tracked_stracks = [] # type: list[STrack]

self.lost_stracks = [] # type: list[STrack]

self.removed_stracks = [] # type: list[STrack]

self.frame_id = 0

self.det_thresh = opt.conf_thres

self.buffer_size = int(frame_rate / 30.0 * opt.track_buffer)

self.max_time_lost = self.buffer_size

self.kalman_filter = KalmanFilter()

def update(self, im_blob, img0):

"""

Processes the image frame and finds bounding box(detections).

Associates the detection with corresponding tracklets and also handles lost, removed, refound and active tracklets

Parameters

----------

im_blob : torch.float32

Tensor of shape depending upon the size of image. By default, shape of this tensor is [1, 3, 608, 1088]

img0 : ndarray

ndarray of shape depending on the input image sequence. By default, shape is [608, 1080, 3]

Returns

-------

output_stracks : list of Strack(instances)

The list contains information regarding the online_tracklets for the recieved image tensor.

"""

# 定义不同的序列存放不同的frame

self.frame_id += 1

activated_starcks = [] # for storing active tracks, for the current frame

refind_stracks = [] # Lost Tracks whose detections are obtained in the current frame

lost_stracks = [] # The tracks which are not obtained in the current frame but are not removed.(Lost for some time lesser than the threshold for removing)

removed_stracks = []

t1 = time.time()

''' Step 1: Network forward, get detections & embeddings'''

with torch.no_grad():

pred = self.model(im_blob)

# pred is tensor of all the proposals (default number of proposals: 54264). Proposals have information associated with the bounding box and embeddings

pred = pred[pred[:, :, 4] > self.opt.conf_thres]

# pred now has lesser number of proposals. Proposals rejected on basis of object confidence score

if len(pred) > 0:

dets = non_max_suppression(pred.unsqueeze(0), self.opt.conf_thres, self.opt.nms_thres)[0].cpu()

# Final proposals are obtained in dets. Information of bounding box and embeddings also included

# Next step changes the detection scales

scale_coords(self.opt.img_size, dets[:, :4], img0.shape).round()

'''Detections is list of (x1, y1, x2, y2, object_conf, class_score, class_pred)'''

# class_pred is the embeddings.

detections = [STrack(STrack.tlbr_to_tlwh(tlbrs[:4]), tlbrs[4], f.numpy(), 30) for

(tlbrs, f) in zip(dets[:, :5], dets[:, 6:])]

else:

detections = []

t2 = time.time()

# print('Forward: {} s'.format(t2-t1))

''' Add newly detected tracklets to tracked_stracks'''

unconfirmed = []

tracked_stracks = [] # type: list[STrack]

for track in self.tracked_stracks:

if not track.is_activated:

# previous tracks which are not active in the current frame are added in unconfirmed list

unconfirmed.append(track)

# print("Should not be here, in unconfirmed")

else:

# Active tracks are added to the local list 'tracked_stracks'

tracked_stracks.append(track)

''' Step 2: First association, with embedding'''

# Combining currently tracked_stracks and lost_stracks

strack_pool = joint_stracks(tracked_stracks, self.lost_stracks)

# Predict the current location with KF 运用卡尔曼滤波进行motion state的更新

STrack.multi_predict(strack_pool, self.kalman_filter)

# appearance affinity matrix

dists = matching.embedding_distance(strack_pool, detections)

# dists = matching.gate_cost_matrix(self.kalman_filter, dists, strack_pool, detections)

# motion affinity matrix

dists = matching.fuse_motion(self.kalman_filter, dists, strack_pool, detections)

# The dists is the list of distances of the detection with the tracks in strack_pool

# 匈牙利算法做匹配

matches, u_track, u_detection = matching.linear_assignment(dists, thresh=0.7)

# The matches is the array for corresponding matches of the detection with the corresponding strack_pool

for itracked, idet in matches:

# itracked is the id of the track and idet is the detection

track = strack_pool[itracked]

det = detections[idet]

if track.state == TrackState.Tracked:

# If the track is active, add the detection to the track

track.update(detections[idet], self.frame_id)

activated_starcks.append(track)

else:

# We have obtained a detection from a track which is not active, hence put the track in refind_stracks list

track.re_activate(det, self.frame_id, new_id=False)

refind_stracks.append(track)

# None of the steps below happen if there are no undetected tracks.

''' Step 3: Second association, with IOU'''

detections = [detections[i] for i in u_detection]

# detections is now a list of the unmatched detections

r_tracked_stracks = [] # This is container for stracks which were tracked till the

# previous frame but no detection was found for it in the current frame

for i in u_track:

if strack_pool[i].state == TrackState.Tracked:

r_tracked_stracks.append(strack_pool[i])

dists = matching.iou_distance(r_tracked_stracks, detections)

matches, u_track, u_detection = matching.linear_assignment(dists, thresh=0.5)

# matches is the list of detections which matched with corresponding tracks by IOU distance method

for itracked, idet in matches:

track = r_tracked_stracks[itracked]

det = detections[idet]

if track.state == TrackState.Tracked:

track.update(det, self.frame_id)

activated_starcks.append(track)

else:

track.re_activate(det, self.frame_id, new_id=False)

refind_stracks.append(track)

# Same process done for some unmatched detections, but now considering IOU_distance as measure

for it in u_track:

track = r_tracked_stracks[it]

if not track.state == TrackState.Lost:

track.mark_lost()

lost_stracks.append(track)

# If no detections are obtained for tracks (u_track), the tracks are added to lost_tracks list and are marked lost

'''Deal with unconfirmed tracks, usually tracks with only one beginning frame'''

detections = [detections[i] for i in u_detection]

dists = matching.iou_distance(unconfirmed, detections)

matches, u_unconfirmed, u_detection = matching.linear_assignment(dists, thresh=0.7)

for itracked, idet in matches:

unconfirmed[itracked].update(detections[idet], self.frame_id)

activated_starcks.append(unconfirmed[itracked])

# The tracks which are yet not matched

for it in u_unconfirmed:

track = unconfirmed[it]

track.mark_removed()

removed_stracks.append(track)

# after all these confirmation steps, if a new detection is found, it is initialized for a new track

""" Step 4: Init new stracks"""

for inew in u_detection:

track = detections[inew]

if track.score < self.det_thresh:

continue

track.activate(self.kalman_filter, self.frame_id)

activated_starcks.append(track)

""" Step 5: Update state"""

# If the tracks are lost for more frames than the threshold number, the tracks are removed.

for track in self.lost_stracks:

if self.frame_id - track.end_frame > self.max_time_lost:

track.mark_removed()

removed_stracks.append(track)

# print('Remained match {} s'.format(t4-t3))

# Update the self.tracked_stracks and self.lost_stracks using the updates in this step.

self.tracked_stracks = [t for t in self.tracked_stracks if t.state == TrackState.Tracked]

self.tracked_stracks = joint_stracks(self.tracked_stracks, activated_starcks)

self.tracked_stracks = joint_stracks(self.tracked_stracks, refind_stracks)

# self.lost_stracks = [t for t in self.lost_stracks if t.state == TrackState.Lost] # type: list[STrack]

self.lost_stracks = sub_stracks(self.lost_stracks, self.tracked_stracks)

self.lost_stracks.extend(lost_stracks)

self.lost_stracks = sub_stracks(self.lost_stracks, self.removed_stracks)

self.removed_stracks.extend(removed_stracks)

self.tracked_stracks, self.lost_stracks = remove_duplicate_stracks(self.tracked_stracks, self.lost_stracks)

# get scores of lost tracks

output_stracks = [track for track in self.tracked_stracks if track.is_activated]

logger.debug('===========Frame {}=========='.format(self.frame_id))

logger.debug('Activated: {}'.format([track.track_id for track in activated_starcks]))

logger.debug('Refind: {}'.format([track.track_id for track in refind_stracks]))

logger.debug('Lost: {}'.format([track.track_id for track in lost_stracks]))

logger.debug('Removed: {}'.format([track.track_id for track in removed_stracks]))

# print('Final {} s'.format(t5-t4))

return output_stracks

上述代码中的step与匹配细则相对应。

4 总结

tracking by detection是非常常见的MOT范式,但是目前MOT领域为了平衡追踪速度和精度,慢慢放弃了这种范式,转而投入将检测与embedding匹配进行结合的范式研究中。本文介绍的JDE就是一个网络同时输出图像画面中的检测框位置和检测框内物体的embedding,从而加速MOT的速度。

但是值得注意的是,JDE只是同时输出了检测框和embedding信息。后面还是要通过卡尔曼滤波和匈牙利算法进行目标的匹配。总的来说,还是分为检测和匹配双阶段。

5 参考

参考代码:

[1]https://github.com/Zhongdao/Towards-Realtime-MOT

[2]https://zhuanlan.zhihu.com/p/243290960