A Kubernetes-Based Solution for a Large E-commerce Website in an Intranet Environment (Part 1)

I. Environment Overview

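1. All systems are minimal installs of Rocky Linux 8.6. Every server is on the internal network. Only manager has two NICs (one with Internet access), and it provides the internal yum repository, DNS resolution, time synchronization and similar services.

2. A highly available k8s 1.24.6 cluster (on containerd) with 3 control-plane nodes and 2 worker nodes.

3. A Rancher platform to manage the k8s cluster (note: the rancher host is a minimal CentOS 7.9 install).

4. MySQL 8.0.31 set up as MGR (MySQL Group Replication).

5. Ceph Quincy, deployed with cephadm.

6. Images are stored in a Harbor 2.6.0 registry.

7. The e-commerce project uses the LNMP stack.

8. PHP and Nginx share the same PVC, whose PV is carved out of CephFS.

9. Prometheus monitors the e-commerce platform, and Grafana visualizes the monitoring data.

10. An EFK + Logstash + Kafka log collection platform.

11. Upgrade k8s to 1.25.2 and back up etcd.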

The plan is as follows:

| No. | Hostname | IP address | Resources (RAM / disks) | Role | Notes |
|---|---|---|---|---|---|
| 1 | master1 | 192.168.8.81 | 8G /sda 60G | K8S control-plane node | |
| 2 | master2 | 192.168.8.82 | 8G /sda 60G | K8S control-plane node | |
| 3 | master3 | 192.168.8.83 | 8G /sda 60G | K8S control-plane node | |
| 4 | node1 | 192.168.8.84 | 8G /sda 60G | K8S worker node | |
| 5 | node2 | 192.168.8.85 | 8G /sda 60G | K8S worker node | |
| 6 | master | 192.168.8.88 | 8G /sda 60G | K8S VIP | |
| 7 | harbor1 | 192.168.8.91 | 8G /sda 60G | Harbor private registry | |
| 8 | harbor2 | 192.168.8.92 | 8G /sda 60G | Harbor private registry | |
| 9 | rancher | 192.168.8.96 | 8G /sda 60G | Rancher management platform | centos7.9 |
| 10 | mysqla | 192.168.8.51 | 8G /sda 60G | MySQL database | |
| 11 | mysqlb | 192.168.8.52 | 8G /sda 60G | MySQL database | |
| 12 | mysqlc | 192.168.8.53 | 8G /sda 60G | MySQL database | |
| 13 | mysql | 192.168.8.55 | 8G /sda 60G | MySQL database VIP | |
| 14 | cepha | 192.168.8.61 | 8G /sda 60G, /sdb 20G, /sdc 20G | Ceph cluster | |
| 15 | cephb | 192.168.8.62 | 8G /sda 60G, /sdb 20G, /sdc 20G | Ceph cluster | |
| 16 | cephc | 192.168.8.63 | 8G /sda 60G, /sdb 20G, /sdc 20G | Ceph cluster | |
| 17 | nfs | 192.168.8.100 | 8G /sda 60G | NFS share | |
| 18 | manager | 192.168.8.80 | 8G /sda 60G | yum repository, DNS, NTP, etc. | |

II. Base Environment Setup

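1. Install Rocky Linux 8.6 (minimal install)

Set the network adapter to host-only mode.

Partition the disk manually.

On every server, set the DNS server to 192.168.8.80 and the gateway to 192.168.8.1.

Disable SELinux:

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

2. Install base packages and chrony (manager)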

Configure the firewall:

firewall-cmd --add-service=http --add-service=ntp --add-service=dns --permanent

firewall-cmd --reload

Install base packages:

yum install vim net-tools bash-completion wget -y

Install chrony:

yum install chrony -y

sed -i 's/2.pool.ntp.org/ntp.aliyun.com/g' /etc/chrony.conf

echo 'allow 192.168.8.0/24' >> /etc/chrony.conf

systemctl enable --now chronyd

systemctl status chronyd

chronyc sources
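The sed above only works if the stock chrony.conf contains the literal string 2.pool.ntp.org. The echo also appends a duplicate allow line each time the step is rerun. Below is a more defensive sketch (the function name configure_chrony is hypothetical). It comments out every pool/server line except the wanted upstream. It then appends the upstream and the optional allow rule only if they are missing, so rerunning it is harmless. It edits whatever path it is given, so you can try it on a copy first.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): idempotently point chrony at one upstream.
# Usage: configure_chrony <chrony.conf> <upstream-host> [allow-cidr]
configure_chrony() {
    local conf="$1" upstream="$2" allow="${3:-}" tmp

    # Comment out active pool/server lines that do not use the wanted upstream.
    tmp="$(mktemp)" || return 1
    awk -v up="$upstream" '
        ($1 == "pool" || $1 == "server") && $2 != up { print "#" $0; next }
        { print }
    ' "$conf" > "$tmp" && cat "$tmp" > "$conf"
    rm -f "$tmp"

    # Append the upstream only if no active pool/server line already names it.
    if ! awk -v up="$upstream" '
        ($1 == "pool" || $1 == "server") && $2 == up { found = 1 }
        END { exit !found }
    ' "$conf"; then
        echo "server $upstream iburst" >> "$conf"
    fi

    # Server role only: allow clients from the given subnet, once.
    if [ -n "$allow" ] && ! grep -qxF "allow $allow" "$conf"; then
        echo "allow $allow" >> "$conf"
    fi
}
```

Usage:

- On manager: configure_chrony /etc/chrony.conf ntp.aliyun.com 192.168.8.0/24
- On the internal servers in step 7: configure_chrony /etc/chrony.conf manager

In both cases, restart chronyd afterwards (systemctl restart chronyd).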

3. Configure yum repositories on the manager server

yum install httpd -y

systemctl enable --now httpd

mkdir /var/www/html/k8s

mkdir /var/www/html/ceph

mkdir /var/www/html/epel

mkdir /var/www/html/docker

dnf install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

cat << EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

cat > /etc/yum.repos.d/ceph.repo << EOF
[ceph-noarch]
name=ceph-noarch
baseurl=https://mirrors.aliyun.com/ceph/rpm-quincy/el8/noarch/
enabled=1
gpgcheck=0

[ceph-x86_64]
name=ceph-x86_64
baseurl=https://mirrors.aliyun.com/ceph/rpm-quincy/el8/x86_64/
enabled=1
gpgcheck=0

[ceph-source]
name=ceph-source
baseurl=https://mirrors.aliyun.com/ceph/rpm-quincy/el8/SRPMS/
enabled=1
gpgcheck=0
EOF

4. Build the yum repository server (manager)

mount /dev/sr0 /mnt/

cp -r /mnt/* /var/www/html/

cd /var/www/html/

mv AppStream appstream

mv BaseOS baseos

dnf install -y kubelet-1.24.6 kubeadm-1.24.6 kubectl-1.24.6 --downloadonly --destdir /var/www/html/k8s/

dnf install -y kubelet kubeadm kubectl --downloadonly --destdir /var/www/html/k8s/

dnf install -y docker-ce --downloadonly --destdir /var/www/html/docker/

dnf install -y cephadm --downloadonly --destdir /var/www/html/ceph/

dnf install -y ceph-common --downloadonly --destdir /var/www/html/ceph/

dnf install -y perl --downloadonly --destdir /var/www/html/epel/

yum install createrepo -y

createrepo /var/www/html/k8s

createrepo /var/www/html/docker

createrepo /var/www/html/ceph

createrepo /var/www/html/epel

systemctl restart httpd

Verify in a browser that the repository is served: http://192.168.8.80/appstream/

5. Install dnsmasq (manager)

yum install dnsmasq -y

echo 'listen-address=192.168.8.80' >> /etc/dnsmasq.conf

cat >> /etc/hosts << EOF

192.168.8.80 manager

192.168.8.81 master1

192.168.8.82 master2

192.168.8.83 master3

192.168.8.84 node1

192.168.8.85 node2

192.168.8.88 master

192.168.8.91 harbor1

192.168.8.92 harbor2

192.168.8.96 rancher

192.168.8.51 mysqla

192.168.8.52 mysqlb

192.168.8.53 mysqlc

192.168.8.55 mysql

192.168.8.61 cepha

192.168.8.62 cephb

192.168.8.63 cephc

192.168.8.100 nfs

EOF

systemctl enable --now dnsmasq
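By default, dnsmasq answers queries from /etc/hosts. That is why appending the plan to /etc/hosts is enough to make every hostname resolvable through manager. dnsmasq re-reads the file on restart or on SIGHUP. Because cat >> appends blindly, rerunning the step duplicates the entries. Below is a minimal sketch of an idempotent alternative, using the hypothetical helper name add_host_entries. It reads "IP NAME" pairs on stdin and appends only names that are not already mapped in the target file.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): append "IP NAME" pairs from stdin to a hosts
# file, skipping names that already appear on a non-comment line.
add_host_entries() {
    local hosts_file="$1" ip name
    while read -r ip name; do
        if [ -z "$ip" ] || [ -z "$name" ]; then
            continue                      # skip blank or incomplete lines
        fi
        if awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$hosts_file"; then
            continue                      # name is already mapped
        fi
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    done
}
```

Usage: feed it the same list as the heredoc, for example printf '192.168.8.81 master1\n192.168.8.82 master2\n' | add_host_entries /etc/hosts. Then run systemctl restart dnsmasq.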

6. Install Docker

7. Configure the yum repositories and NTP on the internal servers

Run on every server except manager (192.168.8.80):

rm -rf /etc/yum.repos.d/*

cat > /etc/yum.repos.d/base.repo << EOF
[appstream]
name=appstream
baseurl=http://manager/appstream
enabled=1
gpgcheck=0

[baseos]
name=baseos
baseurl=http://manager/baseos
enabled=1
gpgcheck=0

[k8s]
name=k8s
baseurl=http://manager/k8s
enabled=1
gpgcheck=0

[docker]
name=docker
baseurl=http://manager/docker
enabled=1
gpgcheck=0

[ceph]
name=ceph
baseurl=http://manager/ceph
enabled=1
gpgcheck=0

[epel]
name=epel
baseurl=http://manager/epel
enabled=1
gpgcheck=0

EOF
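The six stanzas differ only in their ID and directory, so they can also be generated. Below is a minimal sketch, with the hypothetical function name write_local_repo. It writes one stanza per directory name and points each one at the matching directory under http://manager/, as laid out in step 4. It uses enabled=1, which is the documented repo key.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): generate a .repo file for the local mirror.
# Usage: write_local_repo <output-file> <repo-dir>...
write_local_repo() {
    local out="$1" name
    shift
    : > "$out"                            # create or truncate the file
    for name in "$@"; do
        printf '[%s]\nname=%s\nbaseurl=http://manager/%s\nenabled=1\ngpgcheck=0\n\n' \
            "$name" "$name" "$name" >> "$out"
    done
}
```

Usage: write_local_repo /etc/yum.repos.d/base.repo appstream baseos k8s docker ceph epel, followed by dnf makecache.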

yum install -y wget bash-completion vim net-tools chrony

sed -i 's/2.pool.ntp.org/manager/g' /etc/chrony.conf

systemctl enable --now chronyd

chronyc sources

Shut down all servers and take a snapshot of each.

III. Set Up the Harbor Private Registry (harbor1, harbor2)

1. Install Docker

yum install -y docker-ce

systemctl start docker && systemctl enable docker

2. Adjust kernel parameters

modprobe br_netfilter

echo "modprobe br_netfilter" >> /etc/profile

cat > /etc/sysctl.d/docker.conf <

3. Install docker-compose

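Upload docker-compose-linux-x86_64 and the Harbor offline installer to /root. The harbor hosts have no Internet access, so download both on a host that does (for example manager):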

wget https://github.com/goharbor/harbor/releases/download/v2.6.0/harbor-offline-installer-v2.6.0.tgz

wget https://github.com/docker/compose/releases/download/v2.11.0/docker-compose-linux-x86_64

mv docker-compose-linux-x86_64 /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

4. Generate the CA certificate

mkdir /data/ssl -p

cd /data/ssl/

openssl genrsa -out ca.key 2048

openssl req -new -x509 -days 365 -key ca.key -out ca.pem

Country Name (2 letter code) [XX]: CN

State or Province Name (full name) []:xinjiang

Locality Name (eg, city) [Default City]:urumqi

Organization Name (eg, company) [Default Company Ltd]:myhub

Organizational Unit Name (eg, section) []:CA

Common Name (eg, your name or your server's hostname) []:harbor1

Email Address []:

5. Generate the server certificate for the registry domain

openssl genrsa -out myhub.key 2048

openssl req -new -key myhub.key -out myhub.csr

Country Name (2 letter code) [XX]:CN

State or Province Name (full name) []:xinjiang

Locality Name (eg, city) [Default City]:urumqi

Organization Name (eg, company) [Default Company Ltd]:myhub

Organizational Unit Name (eg, section) []:CA

Common Name (eg, your name or your server's hostname) []:myhub

Email Address []:

Please enter the following 'extra' attributes

to be sent with your certificate request

A challenge password []:

An optional company name []:

openssl x509 -req -in myhub.csr -CA ca.pem -CAkey ca.key -CAcreateserial -out myhub.pem -days 365
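The certificate signed above carries only CN=myhub. Current TLS clients, including the Go-based Docker daemon and containerd, ignore the CN. Instead they match the host name or IP they connect to against the subjectAltName (SAN) extension. Clients here reach the registry as 192.168.8.91, so verification cannot succeed with this certificate. That is why the later steps rely on insecure-registries and insecure_skip_verify. Below is a minimal sketch of an extension file that would add SANs. The helper name write_san_ext and the list of names and IPs are assumptions.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): write an X.509 v3 extension file whose
# subjectAltName lists every name/IP that clients use to reach Harbor.
# Usage: write_san_ext <output-file> <name-or-ipv4>...
write_san_ext() {
    local out="$1" entry sans="" ipv4_re='^[0-9]+(\.[0-9]+){3}$'
    shift
    for entry in "$@"; do
        if [[ $entry =~ $ipv4_re ]]; then
            sans+="${sans:+,}IP:$entry"       # dotted quad -> IP entry
        else
            sans+="${sans:+,}DNS:$entry"      # anything else -> DNS entry
        fi
    done
    printf '%s\n' \
        'basicConstraints = CA:FALSE' \
        'keyUsage = digitalSignature, keyEncipherment' \
        'extendedKeyUsage = serverAuth' \
        "subjectAltName = $sans" > "$out"
}
```

Usage:

1. Run write_san_ext san.ext harbor1 harbor2 myhub 192.168.8.91 192.168.8.92.
2. Re-sign the certificate with one extra option: openssl x509 -req -in myhub.csr -CA ca.pem -CAkey ca.key -CAcreateserial -out myhub.pem -days 365 -extfile san.ext.
3. Make clients trust ca.pem. For Docker, copy it to /etc/docker/certs.d/192.168.8.91/ca.crt.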

openssl x509 -noout -text -in myhub.pem

6. Install Harbor

mkdir /data/install -p

ll /data/ssl

cd

mv harbor-offline-installer-v2.6.0.tgz /data/install/

cd /data/install/

tar -xvf harbor-offline-installer-v2.6.0.tgz

cd harbor

cp harbor.yml.tmpl harbor.yml

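vim harbor.yml

Set the following values. On harbor2, use hostname: harbor2. The certificate and private_key keys are under the https: section.

hostname: harbor1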

certificate: /data/ssl/myhub.pem

private_key: /data/ssl/myhub.key

harbor_admin_password: password
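You can also apply these four settings non-interactively. Below is a minimal sketch, assuming the stock harbor.yml.tmpl layout: top-level hostname: and harbor_admin_password: keys, with certificate: and private_key: indented under https:. The helper name set_harbor_config is hypothetical. The values are substituted into sed expressions, so any value containing | or & would need escaping.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): set hostname, TLS paths and admin password
# in harbor.yml in place, keeping the original indentation of each key.
# Usage: set_harbor_config <harbor.yml> <hostname> <cert> <key> <password>
set_harbor_config() {
    local yml="$1" host="$2" cert="$3" key="$4" password="$5"
    sed -i \
        -e "s|^hostname:.*|hostname: ${host}|" \
        -e "s|^\([[:space:]]*\)certificate:.*|\1certificate: ${cert}|" \
        -e "s|^\([[:space:]]*\)private_key:.*|\1private_key: ${key}|" \
        -e "s|^harbor_admin_password:.*|harbor_admin_password: ${password}|" \
        "$yml"
}
```

Usage: on harbor1, run set_harbor_config harbor.yml harbor1 /data/ssl/myhub.pem /data/ssl/myhub.key password. On harbor2, pass harbor2 as the hostname.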

docker load -i harbor.v2.6.0.tar.gz

./install.sh

7. Stop Harbor

cd /data/install/harbor

docker-compose stop

8. Start Harbor

cd /data/install/harbor

docker-compose start

firewall-cmd --add-service=http --add-service=https --permanent; firewall-cmd --reload

Then open http://192.168.8.91 and http://192.168.8.92 in a browser.

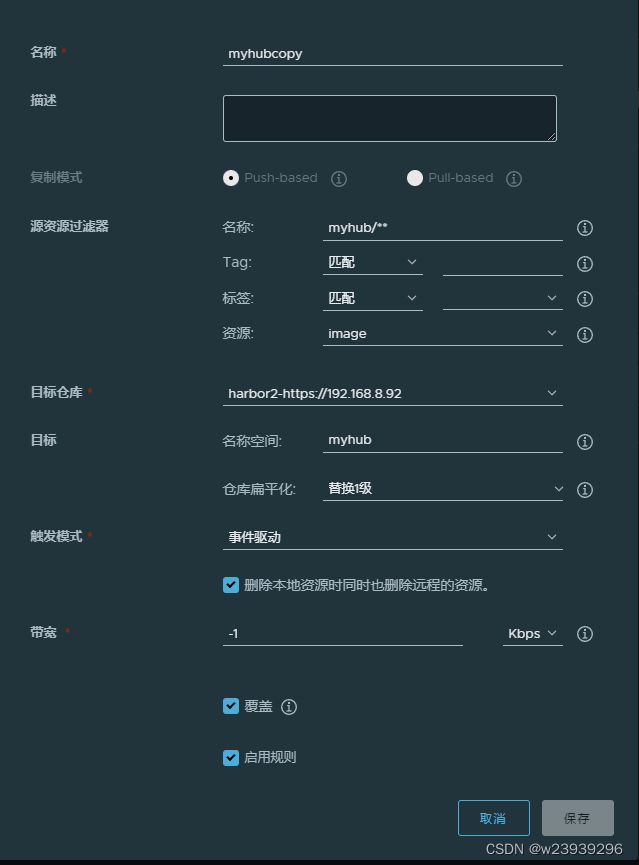

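9. Configure automatic image replication

On http://192.168.8.91, create a project named myhub.

Add a registry endpoint for harbor2 (192.168.8.92).

Create a replication rule.

10. Test from 192.168.8.80 (manager)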

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["http://hub-mirror.c.163.com","https://0x3urqgf.mirror.aliyuncs.com"],

"insecure-registries": [ "192.168.8.91","harbor1" ]

}

EOF

systemctl daemon-reload

systemctl restart docker

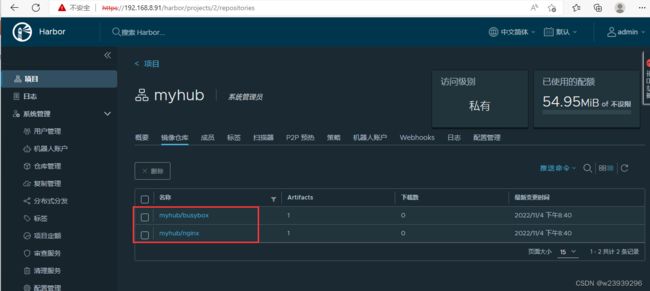

docker login 192.168.8.91

Push images to the registry:

docker pull nginx

docker pull busybox

docker tag busybox:latest 192.168.8.91/myhub/busybox:latest

docker tag nginx:latest 192.168.8.91/myhub/nginx:latest

docker push 192.168.8.91/myhub/busybox:latest

docker push 192.168.8.91/myhub/nginx:latest

Log in to http://192.168.8.91 and http://192.168.8.92 to verify.

The images have been replicated automatically.

IV. Install the Highly Available k8s Cluster (master1-3, node1-2)

1. Adjust kernel parameters (run on all five nodes)

modprobe br_netfilter

lsmod | grep br_netfilter
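The contents of /etc/sysctl.d/k8s.conf are not shown here, and the modprobe commands in steps 1 and 2 do not survive a reboot. Below is a minimal sketch of files that would cover both. It assumes the sysctls listed in the Kubernetes container-runtime prerequisites (bridge-nf-call-iptables, bridge-nf-call-ip6tables, ip_forward), plus the IPVS modules loaded in step 2. The helper name write_k8s_kernel_conf is hypothetical. It takes a root directory so that you can try it outside /.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): persist the kernel modules and sysctls that
# kubeadm/containerd networking expects.
# Usage: write_k8s_kernel_conf [root-dir]   (default: /)
write_k8s_kernel_conf() {
    local root="${1:-/}"
    mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"

    # Loaded at boot by systemd-modules-load.
    cat > "$root/etc/modules-load.d/k8s.conf" <<'EOF'
overlay
br_netfilter
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack
EOF

    # Bridged pod traffic must traverse iptables, and the node must route.
    cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-iptables  = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward                 = 1
EOF
}
```

Usage: write_k8s_kernel_conf /, then systemctl restart systemd-modules-load && sysctl --system.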

cat > /etc/sysctl.d/k8s.conf <

2. Enable IPVS (run on all five nodes)

lsmod|grep ip_vs

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

lsmod|grep ip_vs

modprobe br_netfilter

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

echo 1 > /proc/sys/net/ipv4/ip_forward

3. Install containerd (run on all five nodes)

dnf install -y device-mapper-persistent-data lvm2 ipvsadm iproute-tc

dnf install -y containerd

containerd config default > /etc/containerd/config.toml

Edit the configuration file:

sed -i 's#registry.k8s.io#192.168.8.91/myhub#g' /etc/containerd/config.toml
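Two other values in the generated config.toml commonly need attention:

- Cgroup driver: kubeadm 1.22 and later default the kubelet to the systemd cgroup driver, while containerd config default emits SystemdCgroup = false. The two should agree.
- Sandbox image: after the sed above, sandbox_image points to the pause image under 192.168.8.91/myhub with whatever tag this containerd version defaults to (often 3.6). Step 5 pushes only pause:3.7.

Below is a minimal sketch that patches both, assuming the stock key names. patch_containerd_config is a hypothetical name. It also rewrites the older k8s.gcr.io registry name, which some containerd releases still use.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): point containerd at the private registry,
# enable the systemd cgroup driver and pin the sandbox (pause) image.
# Usage: patch_containerd_config <config.toml> <registry/project> <pause-tag>
patch_containerd_config() {
    local conf="$1" registry="$2" pause_tag="$3"
    sed -i \
        -e "s#registry\.k8s\.io#${registry}#g" \
        -e "s#k8s\.gcr\.io#${registry}#g" \
        -e 's#SystemdCgroup = false#SystemdCgroup = true#' \
        -e "s#^\([[:space:]]*sandbox_image = \).*#\1\"${registry}/pause:${pause_tag}\"#" \
        "$conf"
}
```

Usage: patch_containerd_config /etc/containerd/config.toml 192.168.8.91/myhub 3.7, then restart containerd.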

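vim /etc/containerd/config.toml

The generated file already contains the [plugins."io.containerd.grpc.v1.cri".registry] section and its mirrors/configs sub-tables. Edit them in place so that they contain the following, because declaring the same TOML table twice makes the file invalid: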

[plugins."io.containerd.grpc.v1.cri".registry]
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."192.168.8.91"]
      endpoint = ["https://192.168.8.91"]
  [plugins."io.containerd.grpc.v1.cri".registry.configs]
    [plugins."io.containerd.grpc.v1.cri".registry.configs."192.168.8.91".tls]
      insecure_skip_verify = true
    [plugins."io.containerd.grpc.v1.cri".registry.configs."192.168.8.91".auth]
      username = "admin"
      password = "password"

Start containerd:

systemctl daemon-reload

systemctl enable --now containerd.service

systemctl status containerd.service

crictl config runtime-endpoint unix:///run/containerd/containerd.sock

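crictl config image-endpoint unix:///run/containerd/containerd.sock

crictl is provided by the cri-tools package, which is installed as a kubeadm dependency in step 4. If crictl is not found yet, run the two crictl commands after step 4.

4. Install kubelet, kubeadm and kubectl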

Run on the three master nodes:

firewall-cmd --permanent --add-port=6443/tcp

firewall-cmd --permanent --add-port=2379-2380/tcp

firewall-cmd --permanent --add-port=10250-10252/tcp

firewall-cmd --permanent --add-port=16443/tcp

firewall-cmd --permanent --add-port=80/tcp

firewall-cmd --reload

dnf install -y kubelet-1.24.6 kubeadm-1.24.6 kubectl-1.24.6

systemctl enable kubelet

Run on node1 and node2:

firewall-cmd --permanent --add-port=6443/tcp

firewall-cmd --permanent --add-port=2379-2380/tcp

firewall-cmd --permanent --add-port=10250-10252/tcp

firewall-cmd --permanent --add-port=16443/tcp

firewall-cmd --permanent --add-port=80/tcp

firewall-cmd --reload

dnf install -y kubelet-1.24.6 kubeadm-1.24.6

systemctl enable kubelet

5. Pull the images and push them to Harbor (manager)

kubeadm config images list

docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.24.6

docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.24.6

docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.24.6

docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.24.6

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.7

docker pull registry.aliyuncs.com/google_containers/etcd:3.5.3-0

docker pull registry.aliyuncs.com/google_containers/coredns:v1.8.6

docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.24.6 192.168.8.91/myhub/kube-apiserver:v1.24.6
docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.24.6 192.168.8.91/myhub/kube-proxy:v1.24.6
docker tag registry.aliyuncs.com/google_containers/kube-scheduler:v1.24.6 192.168.8.91/myhub/kube-scheduler:v1.24.6
docker tag registry.aliyuncs.com/google_containers/kube-controller-manager:v1.24.6 192.168.8.91/myhub/kube-controller-manager:v1.24.6
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.7 192.168.8.91/myhub/pause:3.7
docker tag registry.aliyuncs.com/google_containers/etcd:3.5.3-0 192.168.8.91/myhub/etcd:3.5.3-0
docker tag registry.aliyuncs.com/google_containers/coredns:v1.8.6 192.168.8.91/myhub/coredns:v1.8.6

docker push 192.168.8.91/myhub/kube-apiserver:v1.24.6
docker push 192.168.8.91/myhub/kube-proxy:v1.24.6
docker push 192.168.8.91/myhub/kube-scheduler:v1.24.6
docker push 192.168.8.91/myhub/kube-controller-manager:v1.24.6
docker push 192.168.8.91/myhub/pause:3.7
docker push 192.168.8.91/myhub/etcd:3.5.3-0
docker push 192.168.8.91/myhub/coredns:v1.8.6

docker pull docker.io/calico/cni:v3.24.4

docker pull docker.io/calico/node:v3.24.4

docker pull docker.io/calico/kube-controllers:v3.24.4

docker tag docker.io/calico/cni:v3.24.4 192.168.8.91/myhub/cni:v3.24.4

docker tag docker.io/calico/node:v3.24.4 192.168.8.91/myhub/node:v3.24.4

docker tag docker.io/calico/kube-controllers:v3.24.4 192.168.8.91/myhub/kube-controllers:v3.24.4

docker push 192.168.8.91/myhub/cni:v3.24.4

docker push 192.168.8.91/myhub/node:v3.24.4

docker push 192.168.8.91/myhub/kube-controllers:v3.24.4
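Each image above follows the same pull, tag, push pattern. The target keeps only the final name:tag part of the source and places it under 192.168.8.91/myhub. Below is a minimal loop sketch. mirror_images and run are hypothetical names, and DRY_RUN=1 only prints the docker commands. It assumes docker login 192.168.8.91 has already been done.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): mirror upstream images into a Harbor project.
# With DRY_RUN=1 the commands are printed instead of executed.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: mirror_images <registry/project> <source-image>...
mirror_images() {
    local target_prefix="$1" src dst
    shift
    for src in "$@"; do
        dst="${target_prefix}/${src##*/}"    # keep only the final "name:tag"
        run docker pull "$src"        || return 1
        run docker tag  "$src" "$dst" || return 1
        run docker push "$dst"        || return 1
    done
}
```

Usage: mirror_images 192.168.8.91/myhub registry.aliyuncs.com/google_containers/kube-apiserver:v1.24.6 docker.io/calico/cni:v3.24.4, listing the remaining images the same way.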

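mkdir -p /var/www/html/packages

wget -O /var/www/html/packages/calico.yaml https://docs.projectcalico.org/manifests/calico.yaml

Check that the image tags in the downloaded manifest match the v3.24.4 images pushed above.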

6. Install keepalived + nginx

1) On all three masters:

yum install nginx keepalived nginx-mod-stream -y

Write /etc/nginx/nginx.conf. Quote the heredoc delimiter ('EOF') so that the shell does not expand nginx variables such as $remote_addr:

cat << 'EOF' > /etc/nginx/nginx.conf
user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.
include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

stream {
    log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
    access_log /var/log/nginx/k8s-access.log main;

    upstream k8s-apiserver {
        server 192.168.8.81:6443 weight=5 max_fails=3 fail_timeout=30s;
        server 192.168.8.82:6443 weight=5 max_fails=3 fail_timeout=30s;
        server 192.168.8.83:6443 weight=5 max_fails=3 fail_timeout=30s;
    }

    server {
        listen 16443;
        proxy_pass k8s-apiserver;
    }
}

http {
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log /var/log/nginx/access.log main;
    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 65;
    types_hash_max_size 2048;
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    server {
        listen 80 default_server;
        server_name _;
        location / {
        }
    }
}
EOF

2) keepalived configuration (master1)

cat << EOF > /etc/keepalived/keepalived.conf

global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id NGINX_MASTER
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state MASTER
    interface ens160    # change to the actual NIC name
    virtual_router_id 80
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass password
    }
    virtual_ipaddress {
        192.168.8.88/24
    }
    track_script {
        check_nginx
    }
}

EOF
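The three keepalived.conf files differ only in state and priority. VRRP gives the VIP to the node with the highest priority (master1, 100). If check_nginx.sh stops keepalived on that node, the VIP moves to the next highest (master2 with 80, then master3 with 50). Below is a minimal sketch of a generator for the per-node file. render_keepalived_conf is a hypothetical name. The interface and VIP defaults are taken from this section, and the unused notification settings are omitted.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): print a keepalived.conf for one master.
# Usage: render_keepalived_conf <MASTER|BACKUP> <priority> [iface] [vip/prefix]
render_keepalived_conf() {
    local state="$1" priority="$2" iface="${3:-ens160}" vip="${4:-192.168.8.88/24}"
    cat <<EOF
global_defs {
    router_id NGINX_${state}
}
vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 80
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass password
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        check_nginx
    }
}
EOF
}
```

Usage: on master2, render_keepalived_conf BACKUP 80 > /etc/keepalived/keepalived.conf. On master3, use BACKUP 50.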

cat << 'EOF' > /etc/keepalived/check_nginx.sh
#!/bin/bash
# If nginx is down, try to start it; if it still is not running,
# stop keepalived so that the VIP fails over to another master.
counter=$(ps -C nginx --no-header | wc -l)
if [ "$counter" -eq 0 ]; then
    systemctl start nginx
    sleep 2
    counter=$(ps -C nginx --no-header | wc -l)
    if [ "$counter" -eq 0 ]; then
        systemctl stop keepalived
    fi
fi
EOF

chmod +x /etc/keepalived/check_nginx.sh

firewall-cmd --add-rich-rule='rule protocol value="vrrp" accept' --permanent

firewall-cmd --reload

systemctl enable keepalived.service

systemctl enable nginx.service

systemctl start nginx.service

systemctl start keepalived.service

3) keepalived configuration (master2)

cat << EOF > /etc/keepalived/keepalived.conf

global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id NGINX_BACKUP
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens160    # change to the actual NIC name
    virtual_router_id 80
    priority 80
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass password
    }
    virtual_ipaddress {
        192.168.8.88/24
    }
    track_script {
        check_nginx
    }
}

EOF

cat << 'EOF' > /etc/keepalived/check_nginx.sh
#!/bin/bash
# If nginx is down, try to start it; if it still is not running,
# stop keepalived so that the VIP fails over to another master.
counter=$(ps -C nginx --no-header | wc -l)
if [ "$counter" -eq 0 ]; then
    systemctl start nginx
    sleep 2
    counter=$(ps -C nginx --no-header | wc -l)
    if [ "$counter" -eq 0 ]; then
        systemctl stop keepalived
    fi
fi
EOF

chmod +x /etc/keepalived/check_nginx.sh

firewall-cmd --add-rich-rule='rule protocol value="vrrp" accept' --permanent

firewall-cmd --reload

systemctl enable keepalived.service

systemctl enable nginx.service

systemctl start nginx.service

systemctl start keepalived.service

4) keepalived configuration (master3)

cat << EOF > /etc/keepalived/keepalived.conf

global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id NGINX_BACKUP
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens160    # change to the actual NIC name
    virtual_router_id 80
    priority 50
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass password
    }
    virtual_ipaddress {
        192.168.8.88/24
    }
    track_script {
        check_nginx
    }
}

EOF

cat << 'EOF' > /etc/keepalived/check_nginx.sh
#!/bin/bash
# If nginx is down, try to start it; if it still is not running,
# stop keepalived so that the VIP fails over to another master.
counter=$(ps -C nginx --no-header | wc -l)
if [ "$counter" -eq 0 ]; then
    systemctl start nginx
    sleep 2
    counter=$(ps -C nginx --no-header | wc -l)
    if [ "$counter" -eq 0 ]; then
        systemctl stop keepalived
    fi
fi
EOF

chmod +x /etc/keepalived/check_nginx.sh

firewall-cmd --add-rich-rule='rule protocol value="vrrp" accept' --permanent

firewall-cmd --reload

systemctl enable keepalived.service

systemctl enable nginx.service

systemctl start nginx.service

systemctl start keepalived.service

5) Test

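On master1, check that the VIP 192.168.8.88 is bound, then stop keepalived:

ip addr

systemctl stop keepalived

On master2, run ip addr. The VIP should now have moved to master2.

Back on master1, start keepalived again. Because master1 has the highest priority, it takes the VIP back:

systemctl start keepalived

ip addr

7. Initialize the k8s cluster (on master1)

Create the configuration file: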
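The contents of kubeadm-config.yaml are not shown here. Below is a minimal sketch of such a file, written by a hypothetical helper write_kubeadm_config. It rests on these assumptions:

- controlPlaneEndpoint is the keepalived VIP plus the nginx stream port (192.168.8.88:16443), which matches the join commands later on.
- imageRepository is the Harbor project the images were pushed to.
- kube-proxy uses IPVS, since the IPVS modules were loaded.
- The kubelet uses the systemd cgroup driver.
- podSubnet is 10.10.0.0/16. This is a guess based on the pod IP seen in the test in step 12. Whatever pod CIDR you choose must not overlap 192.168.8.0/24 and must match Calico's IP pool.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): write a kubeadm v1beta3 config for master1.
# Usage: write_kubeadm_config <output-file> [advertise-address]
write_kubeadm_config() {
    local out="$1" advertise="${2:-192.168.8.81}"
    cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: ${advertise}
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///run/containerd/containerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.24.6
controlPlaneEndpoint: 192.168.8.88:16443   # keepalived VIP + nginx stream port
imageRepository: 192.168.8.91/myhub        # Harbor project used in step 5
networking:
  podSubnet: 10.10.0.0/16                  # ASSUMPTION: must match Calico's pool
  serviceSubnet: 10.96.0.0/12              # kubeadm's default
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}
```

Usage: write_kubeadm_config kubeadm-config.yaml. Then run kubeadm config images list --config kubeadm-config.yaml to confirm that every image resolves to 192.168.8.91/myhub.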

cat > kubeadm-config.yaml <

Initialize the cluster:

kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=SystemVerification | tee $HOME/k8s.txt

Then run:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Enable kubectl command completion:

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

8. Install the network plugin (Calico)

wget http://192.168.8.80/packages/calico.yaml

sed -i 's#docker.io/calico#192.168.8.91/myhub#g' calico.yaml

kubectl apply -f calico.yaml
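The sed above only redirects the images. The pod IP pool matters too. In calico.yaml, the CALICO_IPV4POOL_CIDR variable is commented out with the example value 192.168.0.0/16, which would overlap this network's 192.168.8.0/24. The pool should fall within the cluster's pod subnet, and these edits must be made before running kubectl apply.

Below is a sketch that makes both edits and then lists any image that does not yet point at Harbor. patch_calico_manifest and foreign_images are hypothetical names. The sketch assumes the commented lines look exactly as they do in the stock manifest. The 10.10.0.0/16 value used below is an assumption and should match kubeadm's podSubnet.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers) for preparing calico.yaml offline.
# Usage: patch_calico_manifest <calico.yaml> <registry/project> <pod-cidr>
patch_calico_manifest() {
    local manifest="$1" registry="$2" pod_cidr="$3"
    sed -i \
        -e "s#docker\.io/calico/#${registry}/#g" \
        -e 's@^\([[:space:]]*\)# - name: CALICO_IPV4POOL_CIDR@\1- name: CALICO_IPV4POOL_CIDR@' \
        -e "s@^\([[:space:]]*\)#   value: \"192\.168\.0\.0/16\"@\1  value: \"${pod_cidr}\"@" \
        "$manifest"
}

# Print every "image:" value that does not start with <registry/project>/.
# Usage: foreign_images <manifest> <registry/project>
foreign_images() {
    local manifest="$1" registry="$2"
    awk -v r="$registry/" '$1 == "image:" && index($2, r) != 1 { print $2 }' "$manifest"
}
```

Usage:

1. Run patch_calico_manifest calico.yaml 192.168.8.91/myhub 10.10.0.0/16.
2. Check that foreign_images calico.yaml 192.168.8.91/myhub prints nothing.
3. Run kubectl apply -f calico.yaml.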

Watch the pods until all of them are Running:

kubectl get pods -n kube-system -w

kubectl get nodes

9. Join master2 and master3 to the cluster

On master2 and master3, create the target directories:

mkdir -p /etc/kubernetes/pki/etcd

mkdir -p ~/.kube/master1
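The scp commands in this step copy the same eight files from master1 to each additional control-plane node: the cluster CA, the service-account key pair, the front-proxy CA and the etcd CA. They can therefore be written as a loop. Below is a minimal sketch with the hypothetical names copy_control_plane_certs and run. DRY_RUN=1 only prints the commands.

Alternatively, kubeadm can distribute these files itself. kubeadm init phase upload-certs --upload-certs prints a certificate key, which you then pass to kubeadm join together with --control-plane --certificate-key.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): copy the shared control-plane PKI files
# from this node to the given hosts. With DRY_RUN=1 only print the commands.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: copy_control_plane_certs <host>...
copy_control_plane_certs() {
    local pki=/etc/kubernetes/pki host f dest
    local files=(ca.crt ca.key sa.key sa.pub
                 front-proxy-ca.crt front-proxy-ca.key
                 etcd/ca.crt etcd/ca.key)
    for host in "$@"; do
        for f in "${files[@]}"; do
            # Files under etcd/ go into the etcd/ subdirectory on the target.
            case "$f" in
                */*) dest="$pki/${f%/*}/" ;;
                *)   dest="$pki/" ;;
            esac
            run scp "$pki/$f" "$host:$dest" || return 1
        done
    done
}
```

Usage: copy_control_plane_certs master2 master3 (on master1, after the target directories exist).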

On master1, copy the shared CA and service-account keys to master2 and master3:

scp /etc/kubernetes/pki/ca.crt master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/ca.key master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.key master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.pub master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.crt master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.key master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/etcd/ca.crt master2:/etc/kubernetes/pki/etcd/

scp /etc/kubernetes/pki/etcd/ca.key master2:/etc/kubernetes/pki/etcd/

scp /etc/kubernetes/pki/ca.crt master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/ca.key master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.key master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.pub master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.crt master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.key master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/etcd/ca.crt master3:/etc/kubernetes/pki/etcd/

scp /etc/kubernetes/pki/etcd/ca.key master3:/etc/kubernetes/pki/etcd/

Still on master1, print the join command:

kubeadm token create --print-join-command

On master2 and master3, run the printed join command with --control-plane added:

kubeadm join 192.168.8.88:16443 --token 8682o7.gl9oij7g1hbmymmc --discovery-token-ca-cert-hash \
    sha256:eb8beb55fd9a315e1d2cb675a36d762641e2f2caabf12a4cb1b40d32a40d2311 \
    --control-plane --ignore-preflight-errors=SystemVerification

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

10. Join the worker nodes

Run on both worker nodes:

kubeadm join 192.168.8.88:16443 --token 8682o7.gl9oij7g1hbmymmc --discovery-token-ca-cert-hash \
    sha256:eb8beb55fd9a315e1d2cb675a36d762641e2f2caabf12a4cb1b40d32a40d2311

firewall-cmd --permanent --zone=trusted --change-interface=tunl0

firewall-cmd --permanent --add-port=30000-32767/tcp

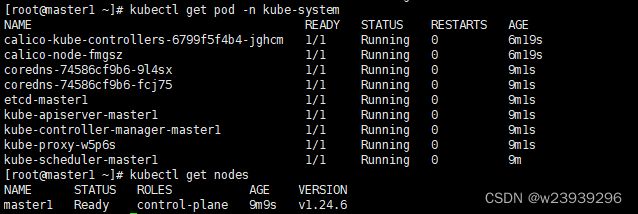

firewall-cmd --reload

11. Verification

On master1, check the cluster and wait until all pods are Running:

kubectl get nodes

kubectl get componentstatuses

kubectl cluster-info

kubectl -n kube-system get pod
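To check node readiness from a script rather than by eye, a small filter over kubectl get nodes --no-headers is enough. Below is a minimal sketch with the hypothetical name all_nodes_ready. It behaves as follows:

- Only an exact Ready status counts as healthy, so a cordoned Ready,SchedulingDisabled node is reported.
- Each node that is not Ready is printed.
- Empty input is treated as a failure.

kubectl wait --for=condition=Ready nodes --all --timeout=300s is a built-in alternative.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): read `kubectl get nodes --no-headers` output
# on stdin (STATUS is column 2); succeed only if every node is exactly Ready.
all_nodes_ready() {
    awk '
        { total++; if ($2 != "Ready") { bad++; print "not ready: " $1 } }
        END { exit (total == 0 || bad > 0) }
    '
}
```

Usage: kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"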

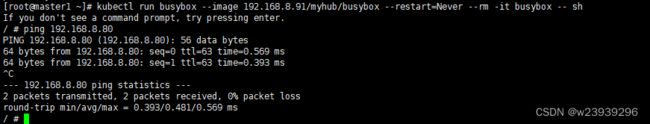

12. Testing


1) Test the network

kubectl run busybox --image=192.168.8.91/myhub/busybox --restart=Never --rm -it -- sh

2) Test a pod

cat > mypod.yaml << EOF
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: 192.168.8.91/myhub/nginx
    ports:
    - containerPort: 80
EOF

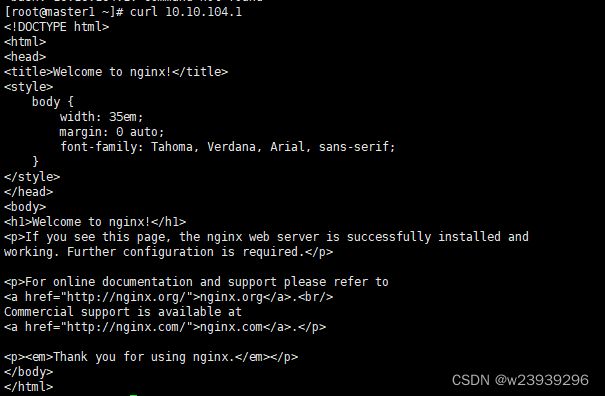

kubectl apply -f mypod.yaml

kubectl get pods -o wide

Then curl the pod IP reported by the previous command, for example:

curl 10.10.104.1

13. Deploy ingress

On manager:

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1 192.168.8.91/myhub/kube-webhook-certgen:v1.1.1
docker push 192.168.8.91/myhub/kube-webhook-certgen:v1.1.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.1.1
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.1.1 192.168.8.91/myhub/nginx-ingress-controller:v1.1.1
docker push 192.168.8.91/myhub/nginx-ingress-controller:v1.1.1

On master1:

cat > deploy.yaml << 'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
---
# Source: ingress-nginx/templates/controller-serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
automountServiceAccountToken: true
---

# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
  allow-snippet-annotations: 'true'
---

# Source: ingress-nginx/templates/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
      - namespaces
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---

# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---

# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
---

# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---

# Source: ingress-nginx/templates/controller-service-webhook.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  type: ClusterIP
  ports:
    - name: https-webhook
      port: 443
      targetPort: webhook
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---

# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  ipFamilyPolicy: SingleStack
  ipFamilies:
    - IPv4
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
      appProtocol: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---

# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
        - name: controller
          image: 192.168.8.91/myhub/nginx-ingress-controller:v1.1.1
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --controller-class=k8s.io/ingress-nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission
---

# Source: ingress-nginx/templates/controller-ingressclass.yaml
# We don't support namespaced ingressClass yet
# So a ClusterRole and a ClusterRoleBinding is required
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: nginx
  namespace: ingress-nginx
spec:
  controller: k8s.io/ingress-nginx
---

# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
# before changing this value, check the required kubernetes version
# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    matchPolicy: Equivalent
    rules:
      - apiGroups:
          - networking.k8s.io
        apiVersions:
          - v1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions:
      - v1
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /networking/v1/ingresses
---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update
---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - ''
    resources:
      - secrets
    verbs:
      - get
      - create

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-create
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-create
      labels:
        helm.sh/chart: ingress-nginx-4.0.10
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 1.1.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: create
          image: 192.168.8.91/myhub/kube-webhook-certgen:v1.1.1
          imagePullPolicy: IfNotPresent
          args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
            - --namespace=$(POD_NAMESPACE)
            - --secret-name=ingress-nginx-admission
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          securityContext:
            allowPrivilegeEscalation: false
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-patch
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-patch
      labels:
        helm.sh/chart: ingress-nginx-4.0.10
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 1.1.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: patch
          image: 192.168.8.91/myhub/kube-webhook-certgen:v1.1.1
          imagePullPolicy: IfNotPresent
          args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=$(POD_NAMESPACE)
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          securityContext:
            allowPrivilegeEscalation: false
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000

EOF

kubectl apply -f deploy.yaml
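The controller pod needs some time to pull its image and pass its readiness probe, so `kubectl get pods` may at first show it as not ready. A minimal sketch that blocks until it is Ready, assuming kubectl is configured on this host. The selector matches the `app.kubernetes.io/component: controller` label that ingress-nginx puts on its controller pods; `wait_ingress_ready` and its 180s default timeout are illustrative choices, not part of the original steps:

```shell
#!/usr/bin/env bash
# Wait until the ingress-nginx controller pod(s) report the Ready condition.
# $1: optional timeout in kubectl duration syntax (default 180s, an arbitrary choice).
wait_ingress_ready() {
  local timeout="${1:-180s}"
  kubectl wait --namespace ingress-nginx \
    --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller \
    --timeout="$timeout"
}
```

Usage: `wait_ingress_ready && kubectl get pods -n ingress-nginx`. The admission Job pods are not matched by the selector, since they carry `component: admission-webhook` and run to completion rather than becoming Ready.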

kubectl get pods -n ingress-nginx

2) Access by domain name

Create the deployments

kubectl create deployment nginx1 --image=192.168.8.91/myhub/nginx

kubectl create deployment nginx2 --image=192.168.8.91/myhub/nginx

Create the services

kubectl expose deployment nginx1 --port=8080 --target-port=80

kubectl expose deployment nginx2 --port=8081 --target-port=80

Change the content of each nginx index page

kubectl exec -it deploy/nginx1 -- /bin/bash

echo 'mynginx1' > /usr/share/nginx/html/index.html

exit

kubectl exec -it deploy/nginx2 -- /bin/bash

echo 'mynginx2' > /usr/share/nginx/html/index.html

exit

cat > myingress.yaml << EOF

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: myingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx
  rules:
    - host: nginx1.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx1
                port:
                  number: 8080
    - host: nginx2.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx2
                port:
                  number: 8081

EOF

kubectl apply -f myingress.yaml

kubectl get ingress

On manager:

cat >> /etc/hosts << EOF

192.168.8.85 nginx1.example.com nginx2.example.com

EOF
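The /etc/hosts entry only serves to put the right `Host` header on each request; curl can also set that header directly, which is handy on a client where you would rather not edit /etc/hosts. A sketch assuming, as the hosts entry implies, that the ingress controller answers plain HTTP on port 80 of 192.168.8.85; `check_host` and `INGRESS_IP` are hypothetical names:

```shell
#!/usr/bin/env bash
# Request "/" from the ingress node with a given Host header and compare the body.
# Assumption: the controller serves plain HTTP on port 80 of INGRESS_IP.
INGRESS_IP="${INGRESS_IP:-192.168.8.85}"

check_host() {
  local host="$1" expected="$2" body
  body=$(curl -s --max-time 5 -H "Host: ${host}" "http://${INGRESS_IP}/") || return 1
  if [ "$body" = "$expected" ]; then
    echo "OK   ${host} -> ${body}"
  else
    echo "FAIL ${host}: expected '${expected}', got '${body}'"
    return 1
  fi
}
```

Usage: `check_host nginx1.example.com mynginx1 && check_host nginx2.example.com mynginx2`.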

curl nginx1.example.com

curl nginx2.example.com

V. Install Ceph

1. Install cephadm (cepha)

systemctl disable firewalld.service

systemctl stop firewalld

dnf -y install docker-ce

systemctl daemon-reload

systemctl start docker && systemctl enable docker.service

cat > /etc/docker/daemon.json << EOF

{

"insecure-registries": [ "192.168.8.91","harbor1" ]

}

EOF

systemctl daemon-reload

systemctl restart docker

docker login 192.168.8.91

dnf -y install cephadm

dnf -y install ceph-common

2. Download the images (manager)

docker pull quay.io/ceph/ceph:v17

docker pull quay.io/ceph/haproxy:2.3

docker pull quay.io/prometheus/prometheus:v2.33.4

docker pull quay.io/prometheus/node-exporter:v1.3.1

docker pull quay.io/prometheus/alertmanager:v0.23.0

docker pull quay.io/ceph/ceph-grafana:8.3.5

docker pull quay.io/ceph/keepalived:2.1.5

docker pull docker.io/maxwo/snmp-notifier:v1.2.1

docker pull docker.io/grafana/promtail:2.4.0

docker pull docker.io/grafana/loki:2.4.0

docker tag quay.io/ceph/ceph:v17 192.168.8.91/myhub/ceph/ceph:v17

docker push 192.168.8.91/myhub/ceph/ceph:v17

docker save -o /var/www/html/packages/ceph.tar quay.io/ceph/ceph:v17 \
quay.io/ceph/haproxy:2.3 quay.io/ceph/keepalived:2.1.5 \
quay.io/prometheus/node-exporter:v1.3.1 quay.io/prometheus/prometheus:v2.33.4 \
quay.io/ceph/ceph-grafana:8.3.5 docker.io/maxwo/snmp-notifier:v1.2.1 \
docker.io/grafana/promtail:2.4.0 docker.io/grafana/loki:2.4.0 \
quay.io/prometheus/alertmanager:v0.23.0
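Above, only `ceph:v17` is retagged and pushed to Harbor, while the other images travel in ceph.tar. The retag rule is mechanical (drop the upstream registry host, prefix `192.168.8.91/myhub`), so if you prefer to keep all of them in Harbor a loop can apply it. This is a sketch assuming the `myhub` project exists and `docker login 192.168.8.91` has succeeded; `local_tag`, `mirror_images` and `DRY_RUN` are illustrative names, and pointing cephadm's monitoring images at the Harbor names is a separate step not covered here:

```shell
#!/usr/bin/env bash
# Mirror upstream images into the private Harbor project used in this guide.
REGISTRY="192.168.8.91/myhub"

IMAGES=(
  quay.io/ceph/ceph:v17
  quay.io/ceph/haproxy:2.3
  quay.io/ceph/keepalived:2.1.5
  quay.io/ceph/ceph-grafana:8.3.5
  quay.io/prometheus/prometheus:v2.33.4
  quay.io/prometheus/node-exporter:v1.3.1
  quay.io/prometheus/alertmanager:v0.23.0
  docker.io/maxwo/snmp-notifier:v1.2.1
  docker.io/grafana/promtail:2.4.0
  docker.io/grafana/loki:2.4.0
)

# Drop the registry host: quay.io/ceph/ceph:v17 -> 192.168.8.91/myhub/ceph/ceph:v17
local_tag() {
  echo "${REGISTRY}/${1#*/}"
}

# Pull, retag and push each image; with DRY_RUN=1 the docker commands are only printed.
mirror_images() {
  local img dst run=""
  [ "${DRY_RUN:-0}" = 1 ] && run=echo
  for img in "${IMAGES[@]}"; do
    dst=$(local_tag "$img")
    $run docker pull "$img" || return 1
    $run docker tag "$img" "$dst" || return 1
    $run docker push "$dst" || return 1
  done
}
```

`DRY_RUN=1 mirror_images` prints the 30 docker commands for review; `mirror_images` runs them and stops at the first failure.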

chmod 644 /var/www/html/packages/ceph.tar

3. Initialize the cluster

sed -i 's#quay.io/ceph/ceph:v17#192.168.8.91/myhub/ceph/ceph:v17#g' /usr/sbin/cephadm

wget http://manager/packages/ceph.tar

docker load -i ceph.tar

cephadm bootstrap --mon-ip 192.168.8.61 --cluster-network 192.168.8.0/24

The cluster initialized successfully:

sudo /usr/sbin/cephadm shell --fsid 5307d468-5cf9-11ed-9f4f-000c298a5a85 \
-c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

exit

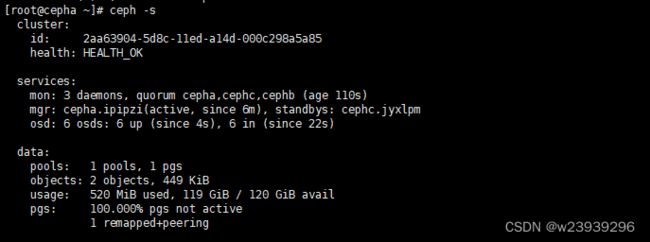

ceph -s

ceph version

Install ceph-common (cephb, cephc)

systemctl disable firewalld.service

systemctl stop firewalld

dnf -y install docker-ce

systemctl daemon-reload

systemctl start docker && systemctl enable docker.service

cat > /etc/docker/daemon.json << EOF

{

"insecure-registries": [ "192.168.8.91","harbor1" ]

}

EOF

systemctl daemon-reload

systemctl restart docker

docker login 192.168.8.91

wget http://manager/packages/ceph.tar

docker load -i ceph.tar

dnf -y install ceph-common

On cepha:

ceph -s

docker ps

The key file:

cat /etc/ceph/ceph.client.admin.keyring

[client.admin]

key = AQDH2RljgCNXBRAAKaOpbXMB6hD9G3uVC9KRYQ==

caps mds = "allow *"

caps mgr = "allow *"

caps mon = "allow *"

caps osd = "allow *"

Add cephb and cephc to the cluster

ssh-copy-id -f -i /etc/ceph/ceph.pub cephb

ssh-copy-id -f -i /etc/ceph/ceph.pub cephc

ceph orch host add cephb 192.168.8.62

ceph orch host add cephc 192.168.8.63

ceph orch host ls

Add labels

ceph orch host label add cephb _admin

ceph orch host label add cephc _admin

ceph -s

The mon and mgr daemons are deployed automatically once the hosts are added.

Add OSDs

ceph orch daemon add osd cepha:/dev/sdb,/dev/sdc

ceph orch daemon add osd cephb:/dev/sdb,/dev/sdc

ceph orch daemon add osd cephc:/dev/sdb,/dev/sdc
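Right after the OSDs are created, placement groups may need a little time to settle, so `ceph -s` may not report HEALTH_OK immediately. A polling sketch; `wait_health_ok` is a hypothetical helper, and its defaults (60 attempts, 10 seconds apart) are arbitrary:

```shell
#!/usr/bin/env bash
# Poll `ceph health` until the cluster reports HEALTH_OK or the attempts run out.
# $1: number of attempts (default 60), $2: seconds between attempts (default 10).
wait_health_ok() {
  local attempts="${1:-60}" interval="${2:-10}" status i
  for ((i = 1; i <= attempts; i++)); do
    status=$(ceph health 2>/dev/null)
    # `ceph health` prints HEALTH_OK, or HEALTH_WARN/HEALTH_ERR followed by details
    if [ "${status%% *}" = "HEALTH_OK" ]; then
      echo "cluster healthy after ${i} check(s)"
      return 0
    fi
    sleep "$interval"
  done
  echo "still not healthy: ${status}" >&2
  return 1
}
```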

ceph -s

View the web dashboard at https://192.168.8.61:8443

4. Connect Ceph to k8s

Create the CephFS file system

ceph fs volume create k8sfs --placement=3

ceph fs ls

ceph -s

ceph fs status

ceph fs volume ls

Install ceph-common on the k8s worker nodes (node1, node2)

yum install ceph-common -y

scp 192.168.8.61:/etc/ceph/* /etc/ceph/

cat /etc/ceph/ceph.client.admin.keyring |grep key|awk -F" " '{print $3}' > /etc/ceph/admin.secret

mkdir /data

mount -t ceph 192.168.8.61:6789:/ /data -o name=admin,secretfile=/etc/ceph/admin.secret

df -h | grep /data

cat >> /etc/fstab << EOF

192.168.8.61:6789:/ /data ceph name=admin,secretfile=/etc/ceph/admin.secret,_netdev 0 0

EOF

mount -a

On node1:

cd /data/

mkdir k8sdata

chmod 0777 k8sdata/

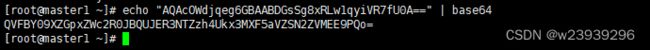

cat /etc/ceph/admin.secret

On master1:

echo -n "AQAcOWdjqeg6GBAABDGsSg8xRLw1qyiVR7fU0A==" | base64   # -n: do not encode a trailing newline

cat > cephfs-secret.yaml << EOF
apiVersion: v1
kind: Secret
metadata:
  name: cephfs-secret
data:
  key: QVFBY09XZGpxZWc2R0JBQUJER3NTZzh4Ukx3MXF5aVZSN2ZVMEE9PQ==

EOF

kubectl apply -f cephfs-secret.yaml
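The Secret's `data.key` field should be the base64 encoding of the key string alone; encoding a trailing newline along with it is a common slip, and `printf '%s'` avoids it. Alternatively, `kubectl create secret generic` performs the encoding itself. A sketch with hypothetical helper names; the key is passed as an argument because /etc/ceph/admin.secret lives on node1 while kubectl runs on master1:

```shell
#!/usr/bin/env bash
# Encode a Ceph key for the data.key field of a Secret, without a trailing newline.
secret_b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Create cephfs-secret directly; kubectl base64-encodes the literal value itself.
# $1: the client.admin key (the content of /etc/ceph/admin.secret on node1).
create_cephfs_secret() {
  kubectl create secret generic cephfs-secret --from-literal=key="$1"
}
```

Use `create_cephfs_secret` instead of applying cephfs-secret.yaml, not in addition to it, since a Secret with that name would already exist.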

cat > cephfs-pv.yaml << EOF

apiVersion: v1
kind: PersistentVolume
metadata:
  name: cephfs-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  cephfs:
    monitors:
      - 192.168.8.61:6789
    path: /k8sdata
    user: admin
    readOnly: false
    secretRef:
      name: cephfs-secret
  # Recycle is only supported for NFS and hostPath volumes; keep the data on release
  persistentVolumeReclaimPolicy: Retain

EOF

cat > cephfs-pvc.yaml << EOF

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: cephfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  volumeName: cephfs-pv
  resources:
    requests:
      storage: 1Gi

EOF

kubectl apply -f cephfs-pv.yaml

kubectl get pv

kubectl apply -f cephfs-pvc.yaml

kubectl get pvc

Create test pod 1

cat > cephfs-pod-1.yaml << EOF

apiVersion: v1
kind: Pod
metadata:
  name: cephfs-pod-1
spec:
  containers:
    - image: 192.168.8.91/myhub/nginx
      name: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: test-v1
          mountPath: /usr/share/nginx/html
  volumes:
    - name: test-v1
      persistentVolumeClaim:
        claimName: cephfs-pvc

EOF

kubectl apply -f cephfs-pod-1.yaml

kubectl get pods -o wide

kubectl exec -it cephfs-pod-1 -- /bin/bash

echo 'mycephfs-nginx' > /usr/share/nginx/html/index.html

exit

curl 10.10.104.4   # IP of cephfs-pod-1 from kubectl get pods -o wide

Create test pod 2

cat > cephfs-pod-2.yaml << EOF

apiVersion: v1
kind: Pod
metadata:
  name: cephfs-pod-2
spec:
  containers:
    - image: 192.168.8.91/myhub/nginx
      name: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: test-v2
          mountPath: /usr/share/nginx/html
  volumes:
    - name: test-v2
      persistentVolumeClaim:
        claimName: cephfs-pvc

EOF

kubectl apply -f cephfs-pod-2.yaml

kubectl get pods -o wide

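curl 10.10.166.130   # IP of cephfs-pod-2

Pod 2 returns the page written from pod 1, which shows that the two pods share the k8sdata directory.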

kubectl delete pods cephfs-pod-1

kubectl delete pods cephfs-pod-2

VI. Install MySQL (MGR)

1. Configure the firewall on all three hosts (mysqla, mysqlb, mysqlc)

firewall-cmd --permanent --add-port=3306/tcp

firewall-cmd --permanent --add-port=33060/tcp

firewall-cmd --permanent --add-port=33061/tcp

firewall-cmd --permanent --add-port=13306/tcp

firewall-cmd --reload

2. Install MySQL

Download the packages

mysql-commercial-common-8.0.31-1.1.el8.x86_64.rpm

mysql-commercial-icu-data-files-8.0.31-1.1.el8.x86_64.rpm

mysql-commercial-libs-8.0.31-1.1.el8.x86_64.rpm

mysql-commercial-server-8.0.31-1.1.el8.x86_64.rpm

mysql-commercial-client-plugins-8.0.31-1.1.el8.x86_64.rpm

mysql-commercial-client-8.0.31-1.1.el8.x86_64.rpm

mysql-commercial-backup-8.0.31-1.1.el8.x86_64.rpm

Install the prerequisite package and the MySQL packages

yum install -y perl

rpm -ivh mysql-commercial-common-8.0.31-1.1.el8.x86_64.rpm

rpm -ivh mysql-commercial-icu-data-files-8.0.31-1.1.el8.x86_64.rpm

rpm -ivh mysql-commercial-client-plugins-8.0.31-1.1.el8.x86_64.rpm

rpm -ivh mysql-commercial-libs-8.0.31-1.1.el8.x86_64.rpm

rpm -ivh mysql-commercial-client-8.0.31-1.1.el8.x86_64.rpm

rpm -ivh mysql-commercial-server-8.0.31-1.1.el8.x86_64.rpm

rpm -ivh mysql-commercial-backup-8.0.31-1.1.el8.x86_64.rpm

3. Start mysqld

systemctl start mysqld

systemctl enable mysqld
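On first start, mysqld logs a line of the form `A temporary password is generated for root@localhost: <password>`, with the password as the last field. A small sketch that extracts it, assuming the log path of this RPM install; `mysql_temp_password` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Print the temporary root password mysqld logged on its first start.
# $1: optional log file (defaults to /var/log/mysqld.log, the path used above).
mysql_temp_password() {
  local log="${1:-/var/log/mysqld.log}"
  # The password is the last whitespace-separated field of the newest matching line.
  grep 'temporary password' "$log" | tail -n 1 | awk '{print $NF}'
}
```

Usage: `mysql -uroot -p"$(mysql_temp_password)"`.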

grep password /var/log/mysqld.log

Log in with the temporary password from the log (yours will differ) and change it:

mysql -uroot -p'V&qksQCh>7!B'

alter user root@localhost identified by 'Admin@123';

exit

4. Install the plugin and configure the replication user

On mysqla, mysqlb and mysqlc:

mysql -uroot -pAdmin@123

install plugin group_replication soname 'group_replication.so';

show plugins;

set sql_log_bin=0;

create user repl@'192.168.8.%' identified by 'Admin@123';

grant replication slave on *.* to repl@'192.168.8.%';

set sql_log_bin=1;

exit

5. Configure MGR

mysqla:

cat >> /etc/my.cnf << EOF

server_id=51

gtid_mode=on

binlog_checksum=NONE

enforce-gtid-consistency=on

disabled_storage_engines='MyISAM,BLACKHOLE,FEDERATED,ARCHIVE,MEMORY'

log_bin=binlog

log_slave_updates=ON

binlog_format=ROW

master_info_repository=TABLE

relay_log_info_repository=TABLE

transaction_write_set_extraction=XXHASH64

loose_group_replication_recovery_use_ssl=on

group_replication_group_name='5de7369a-031f-4006-adee-ef023fc3b591'

group_replication_start_on_boot=off

group_replication_local_address='192.168.8.51:33061'

group_replication_group_seeds='192.168.8.51:33061,192.168.8.52:33061,192.168.8.53:33061'

group_replication_bootstrap_group=off

EOF
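The three configuration blocks in this step differ only in `server_id` and `group_replication_local_address`. A hypothetical helper that prints the block for one member; it uses the `loose_` prefix throughout, as the mysqlb and mysqlc blocks do, so mysqld still starts even if the plugin is not loaded yet. The group name is the one used in this guide; generate your own with uuidgen:

```shell
#!/usr/bin/env bash
# Print the MGR settings for one member; $1 = server_id, $2 = member IP.
GROUP_NAME="5de7369a-031f-4006-adee-ef023fc3b591"   # replace with your own uuidgen output
SEEDS="192.168.8.51:33061,192.168.8.52:33061,192.168.8.53:33061"

mgr_cnf() {
  local id="$1" ip="$2"
  cat << EOF
server_id=${id}
gtid_mode=on
binlog_checksum=NONE
enforce-gtid-consistency=on
disabled_storage_engines='MyISAM,BLACKHOLE,FEDERATED,ARCHIVE,MEMORY'
log_bin=binlog
log_slave_updates=ON
binlog_format=ROW
master_info_repository=TABLE
relay_log_info_repository=TABLE
transaction_write_set_extraction=XXHASH64
loose_group_replication_recovery_use_ssl=on
loose_group_replication_group_name='${GROUP_NAME}'
loose_group_replication_start_on_boot=off
loose_group_replication_local_address='${ip}:33061'
loose_group_replication_group_seeds='${SEEDS}'
loose_group_replication_bootstrap_group=off
EOF
}
```

On mysqlb, for example: `mgr_cnf 52 192.168.8.52 >> /etc/my.cnf`.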

systemctl restart mysqld

The group_name value can be generated with uuidgen.

mysql -uroot -pAdmin@123

change master to

master_user='repl',

master_password='Admin@123'

for channel 'group_replication_recovery';

set global group_replication_bootstrap_group=on;

start group_replication;

set global group_replication_bootstrap_group=off;

select * from performance_schema.replication_group_members;

exit

Add mysqlb

cat >> /etc/my.cnf << EOF

server_id=52

gtid_mode=on

binlog_checksum=NONE

enforce-gtid-consistency=on

disabled_storage_engines='MyISAM,BLACKHOLE,FEDERATED,ARCHIVE,MEMORY'

log_bin=binlog

log_slave_updates=ON

binlog_format=ROW

master_info_repository=TABLE

relay_log_info_repository=TABLE

transaction_write_set_extraction=XXHASH64

loose_group_replication_recovery_use_ssl=on

loose_group_replication_group_name='5de7369a-031f-4006-adee-ef023fc3b591'

loose_group_replication_start_on_boot=off

loose_group_replication_local_address='192.168.8.52:33061'

loose_group_replication_group_seeds='192.168.8.51:33061,192.168.8.52:33061,192.168.8.53:33061'

loose_group_replication_bootstrap_group=off

EOF

systemctl restart mysqld

mysql -uroot -pAdmin@123

change master to

master_user='repl',

master_password='Admin@123'

for channel 'group_replication_recovery';

start group_replication;

exit

Add mysqlc

cat >> /etc/my.cnf << EOF

server_id=53

gtid_mode=on

binlog_checksum=NONE

enforce-gtid-consistency=on

disabled_storage_engines='MyISAM,BLACKHOLE,FEDERATED,ARCHIVE,MEMORY'

log_bin=binlog

log_slave_updates=ON

binlog_format=ROW

master_info_repository=TABLE

relay_log_info_repository=TABLE

transaction_write_set_extraction=XXHASH64

loose_group_replication_recovery_use_ssl=on

loose_group_replication_group_name='5de7369a-031f-4006-adee-ef023fc3b591'

loose_group_replication_start_on_boot=off

loose_group_replication_local_address='192.168.8.53:33061'

loose_group_replication_group_seeds='192.168.8.51:33061,192.168.8.52:33061,192.168.8.53:33061'

loose_group_replication_bootstrap_group=off

EOF

systemctl restart mysqld

mysql -uroot -pAdmin@123

change master to

master_user='repl',

master_password='Admin@123'

for channel 'group_replication_recovery';

start group_replication;

exit

6. Modify the configuration file

On mysqla, mysqlb and mysqlc:

Change group_replication_start_on_boot=off to group_replication_start_on_boot=on and remove the loose_ prefix:

sed -i 's#group_replication_start_on_boot=off#group_replication_start_on_boot=on#g' /etc/my.cnf

sed -i 's#loose_group#group#g' /etc/my.cnf

systemctl restart mysqld

7. Verify

mysqla

mysql -uroot -pAdmin@123

select * from performance_schema.replication_group_members;

show variables like 'group_replication%';

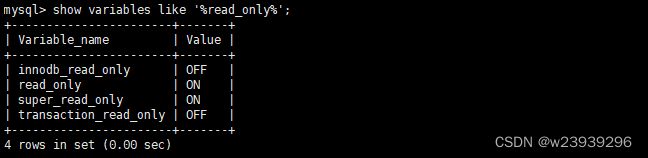

show variables like '%read_only%';

8. Switch to multi-primary mode

mysqla (primary node):

mysql -uroot -pAdmin@123

stop group_replication;

set global group_replication_single_primary_mode=off;

set global group_replication_enforce_update_everywhere_checks=on;

set global group_replication_bootstrap_group=on;

start group_replication;

set global group_replication_bootstrap_group=off;

mysqlb and mysqlc (secondary nodes):

mysql -uroot -pAdmin@123

stop group_replication;

set global group_replication_single_primary_mode=off;

set global group_replication_enforce_update_everywhere_checks=on;

start group_replication;

Verify

select * from performance_schema.replication_group_members;

show variables like '%read_only%';

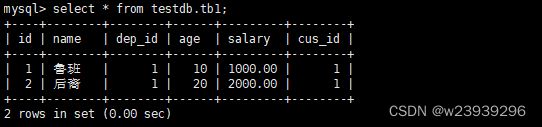
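As an alternative to stopping and re-bootstrapping the group, MySQL 8.0.13 and later provide the `group_replication_switch_to_multi_primary_mode()` function, which changes the mode while the group stays online when run on any one member. Because `group_replication_enforce_update_everywhere_checks` can only be changed while Group Replication is stopped, this route does not turn that check on. A sketch using the local root login from this guide; the shell function names are hypothetical:

```shell
#!/usr/bin/env bash
# Run on any one group member (local socket login as root, as in this guide).
# Requires every member to run MySQL 8.0.13 or later.
switch_to_multi_primary() {
  mysql -uroot -pAdmin@123 -e "SELECT group_replication_switch_to_multi_primary_mode();"
}

# Print host, state and role of each member; after the switch all should be ONLINE and PRIMARY.
show_members() {
  mysql -uroot -pAdmin@123 -N -e \
    "SELECT MEMBER_HOST, MEMBER_STATE, MEMBER_ROLE FROM performance_schema.replication_group_members;"
}
```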

create database testdb;

create user myuser@'192.168.8.%' identified by 'Admin@123';

grant all on testdb.* to myuser@'192.168.8.%';

use testdb

DROP TABLE IF EXISTS `tb1`;

CREATE TABLE `tb1` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`name` varchar(20) DEFAULT NULL,

`dep_id` int(11) DEFAULT NULL,

`age` int(11) DEFAULT NULL,

`salary` decimal(10,2) DEFAULT NULL,

`cus_id` int(11) DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=109 DEFAULT CHARSET=utf8;

INSERT INTO `tb1` VALUES ('1', '鲁班', '1', '10', '1000.00', '1');

INSERT INTO `tb1` VALUES ('2', '后裔', '1', '20', '2000.00', '1');

select * from testdb.tb1;

9. Install keepalived + nginx for MGR high availability

1) (Run on all three MySQL hosts)

yum install nginx keepalived nginx-mod-stream -y

cat << 'EOF' > /etc/nginx/nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream mysql-server {

server 192.168.8.51:3306 weight=5 max_fails=3 fail_timeout=30s;

server 192.168.8.52:3306 weight=5 max_fails=3 fail_timeout=30s;

server 192.168.8.53:3306 weight=5 max_fails=3 fail_timeout=30s;

}

server {

listen 13306;

proxy_pass mysql-server;

}

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

server {

listen 80 default_server;

server_name _;

location / {

}

}

}

EOF

2) keepalived configuration (mysqla)

cat << EOF > /etc/keepalived/keepalived.conf

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

interface ens160 # change to your actual NIC name

virtual_router_id 80

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass password

}

virtual_ipaddress {

192.168.8.55/24

}

track_script {

check_nginx

}

}

EOF

cat << 'EOF' > /etc/keepalived/check_nginx.sh

#!/bin/bash

counter=`ps -C nginx --no-header | wc -l`

if [ $counter -eq 0 ]; then

service nginx start

sleep 2

counter=`ps -C nginx --no-header | wc -l`

if [ $counter -eq 0 ]; then

service keepalived stop

fi

fi

EOF

chmod +x /etc/keepalived/check_nginx.sh

firewall-cmd --add-rich-rule='rule protocol value="vrrp" accept' --permanent

firewall-cmd --reload

systemctl enable keepalived.service

systemctl enable nginx.service

systemctl start nginx.service

systemctl start keepalived.service

3) keepalived configuration (mysqlb)

cat << EOF > /etc/keepalived/keepalived.conf

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

interface ens160 # change to your actual NIC name

virtual_router_id 80

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass password

}

virtual_ipaddress {

192.168.8.55/24

}

track_script {

check_nginx

}

}

EOF

cat << 'EOF' > /etc/keepalived/check_nginx.sh

#!/bin/bash

counter=`ps -C nginx --no-header | wc -l`

if [ $counter -eq 0 ]; then

service nginx start

sleep 2

counter=`ps -C nginx --no-header | wc -l`

if [ $counter -eq 0 ]; then

service keepalived stop

fi

fi

EOF

chmod +x /etc/keepalived/check_nginx.sh

firewall-cmd --add-rich-rule='rule protocol value="vrrp" accept' --permanent

firewall-cmd --reload

systemctl enable keepalived.service

systemctl enable nginx.service

systemctl start nginx.service

systemctl start keepalived.service

4) keepalived configuration (mysqlc)

cat << EOF > /etc/keepalived/keepalived.conf

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

interface ens160 # change to your actual NIC name

virtual_router_id 80

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass password

}

virtual_ipaddress {

192.168.8.55/24

}

track_script {

check_nginx

}

}

EOF

cat << 'EOF' > /etc/keepalived/check_nginx.sh

#!/bin/bash

counter=`ps -C nginx --no-header | wc -l`

if [ $counter -eq 0 ]; then

service nginx start

sleep 2

counter=`ps -C nginx --no-header | wc -l`

if [ $counter -eq 0 ]; then

service keepalived stop

fi

fi

EOF

chmod +x /etc/keepalived/check_nginx.sh

firewall-cmd --add-rich-rule='rule protocol value="vrrp" accept' --permanent

firewall-cmd --reload

systemctl enable keepalived.service

systemctl enable nginx.service

systemctl start nginx.service

systemctl start keepalived.service

10. Test MySQL reads and writes (manager)

yum install mysql -y

mysql -umyuser -pAdmin@123 -h192.168.8.55 -P13306

select * from testdb.tb1;
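The same check can be scripted. Because nginx spreads connections over the three members and MGR applies transactions from other members asynchronously under its default (EVENTUAL) consistency level, a read issued right after a write may land on a member that has not applied it yet, so the sketch retries the read a few times. It assumes the `myuser` account and `testdb.tb1` table created earlier; `vip_rw_test` and `RW_RETRIES` are hypothetical names:

```shell
#!/usr/bin/env bash
# Write one row through the VIP, then read it back (possibly from another member).
VIP="192.168.8.55"
PORT=13306

vip_rw_test() {
  local name="vip-$$" count i
  mysql -umyuser -pAdmin@123 -h"$VIP" -P"$PORT" -e \
    "INSERT INTO testdb.tb1 (name, dep_id, age, salary, cus_id) VALUES ('${name}', 1, 30, 3000.00, 1);" || return 1
  # Retry the read: the row may not yet be applied on the member nginx picks.
  for ((i = 1; i <= ${RW_RETRIES:-5}; i++)); do
    count=$(mysql -umyuser -pAdmin@123 -h"$VIP" -P"$PORT" -N -e \
      "SELECT COUNT(*) FROM testdb.tb1 WHERE name = '${name}';") || return 1
    if [ "$count" = "1" ]; then
      echo "row ${name} written and read back via ${VIP}:${PORT}"
      return 0
    fi
    sleep 1
  done
  return 1
}
```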