Web Scraping Study Notes (An Experiment Scraping Eastmoney with Python)

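Reference articles and videos: "Scraping in Practice | Scraping Eastmoney Stock Data" (简说Python's blog, CSDN); "A Hands-On Guide to Getting Stock Data from Eastmoney" (bilibili); "[Python Scraping Case Study] How to Scrape Stock Market Data with Python and Visualize It" (bilibili); "Scraping Douban's 250 Top-Rated Movies with a Python Scraper" (bilibili)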

The work is split into 3 steps:

1. Fetch the page

2. Parse the data item by item

3. Save the data

1. Fetch the page

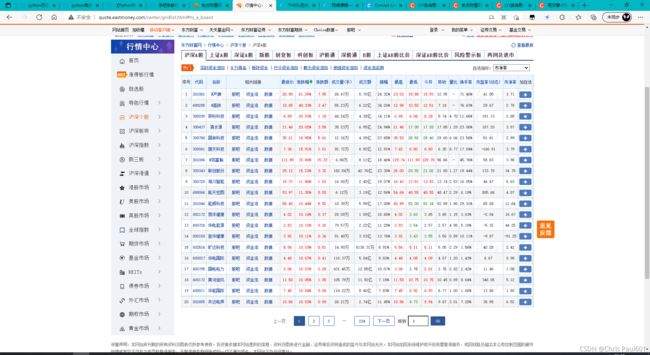

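Open the site and find the data you need.

Quote Center: a fast, comprehensive domestic quote system for stocks, funds, futures, US stocks, Hong Kong stocks, forex, gold and bonds, Eastmoney (eastmoney.com)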

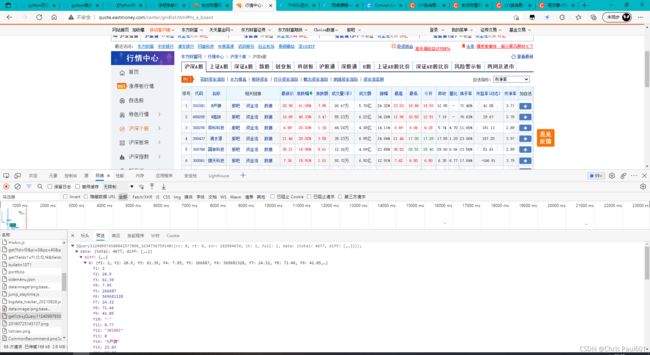

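Press F12 to open the developer tools, select the Network tab, then refresh the page and locate the request that carries the data.

Start building the request with requests (the site "Convert curl command syntax to Python requests, Ansible URI, browser fetch, MATLAB, Node.js, R, PHP, Strest, Go, Dart, Java, JSON, Elixir, and Rust code" can generate this code quickly).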

import requests

cookies = {

'qgqp_b_id': '02d480cce140d4a420a0df6b307a945c',

'cowCookie': 'true',

'em_hq_fls': 'js',

'intellpositionL': '1168.61px',

'HAList': 'a-sz-300059-%u4E1C%u65B9%u8D22%u5BCC%2Ca-sz-000001-%u5E73%u5B89%u94F6%u884C',

'st_si': '07441051579204',

'st_asi': 'delete',

'st_pvi': '34234318767565',

'st_sp': '2021-09-28%2010%3A43%3A13',

'st_inirUrl': 'http%3A%2F%2Fdata.eastmoney.com%2F',

'st_sn': '31',

'st_psi': '20211020210419860-113300300813-5631892871',

'intellpositionT': '1007.88px',

}

headers = {

'Connection': 'keep-alive',

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36 Edg/94.0.992.50',

'DNT': '1',

'Accept': '*/*',

'Referer': 'http://quote.eastmoney.com/',

'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',

}

params = (

('cb', 'jQuery112404825022376475756_1634735261901'),

('pn', '1'),

('pz', '20'),

('po', '1'),

('np', '1'),

('ut', 'bd1d9ddb04089700cf9c27f6f7426281'),

('fltt', '2'),

('invt', '2'),

('fid', 'f3'),

('fs', 'm:0 t:6,m:0 t:80,m:1 t:2,m:1 t:23'),

('fields', 'f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152'),

('_', '1634735261902'),

)

response = requests.get('http://54.push2.eastmoney.com/api/qt/clist/get', headers=headers, params=params, cookies=cookies, verify=False)

#NB. Original query string below. It seems impossible to parse and

#reproduce query strings 100% accurately so the one below is given

#in case the reproduced version is not "correct".

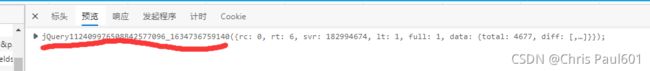

# response = requests.get('http://54.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112404825022376475756_1634735261901&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1634735261902', headers=headers, cookies=cookies, verify=False)

However, this only returns the first page of data. Inspecting the request shows that pn in params is the page number, so a for loop is needed to fetch the data for every page.
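To see what actually changes from one page to the next, the query string can be built offline for two page numbers. This is only a sketch: `page_params` is a hypothetical helper, it carries a reduced subset of the parameters shown above, and reading `pz` as the page size is an assumption based on the 20 rows per page seen in these notes.

```python
from urllib.parse import urlencode


def page_params(page, page_size=20):
    """Hypothetical helper: a reduced parameter set for one page."""
    return (
        ('pn', str(page)),       # page number, the only value that changes
        ('pz', str(page_size)),  # assumed to be the page size
        ('fid', 'f3'),
    )


# Build the query string for pages 1 and 2 without sending any request.
queries = [urlencode(page_params(page)) for page in (1, 2)]
for query in queries:
    print(query)
```

Only the `pn` value differs between the two printed strings, which is why looping over the page number is enough.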

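for page in range(1, 50):

    # Query parameters for one page (pages 1 through 49); pn carries the page number.

    params = (

        ('cb', 'jQuery1124031167968836399784_1615878909521'),

        ('pn', str(page)),

        ('pz', '20'),

        ('po', '1'),

        ('np', '1'),

        ('ut', 'bd1d9ddb04089700cf9c27f6f7426281'),

        ('fltt', '2'),

        ('invt', '2'),

        ('fid', 'f3'),

        ('fs', 'm:0 t:6,m:0 t:13,m:0 t:80,m:1 t:2,m:1 t:23'),

        ('fields', 'f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152'),

    )

    # Send the request for this page; without this call the loop only rebuilds params.

    response = requests.get('http://54.push2.eastmoney.com/api/qt/clist/get', headers=headers, params=params, cookies=cookies, verify=False)

2. Parse the data item by item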

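Because the response carries an extra leading segment in front of the data (the callback name from the cb parameter, which wraps the JSON), the data is treated as a string and the needed fields are extracted with regular expressions.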
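The extra leading segment is the JSONP callback wrapper, so an alternative to regular expressions is to strip the wrapper and parse what remains as JSON. The following is a minimal sketch of that idea, not the method used in these notes: `strip_jsonp` is a hypothetical helper, and the sample string is made up for illustration because the real response layout is not shown here.

```python
import json
import re


def strip_jsonp(text):
    """Return the parsed JSON payload from a JSONP string like callback({...});"""
    # Capture everything between the first "(" and the last ")".
    match = re.search(r'^[^(]*\((.*)\)\s*;?\s*$', text, re.S)
    if match is None:
        raise ValueError('text does not look like a JSONP response')
    return json.loads(match.group(1))


# Made-up sample; a real response would come from response.text.
sample = 'jQuery112404825022376475756_1634735261901({"f12":"300059","f14":"demo","f2":33.5});'
payload = strip_jsonp(sample)
print(payload['f12'], payload['f2'])
```

Parsing the payload keeps each value's type (strings stay strings, numbers stay numbers), whereas the regular expressions return every match as raw text.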

import re

# Each call returns one list per field, in the order the stocks appear on the page.

daimas = re.findall('"f12":(.*?),',response.text)  # stock code

names = re.findall('"f14":"(.*?)"',response.text)  # name

zuixinjias = re.findall('"f2":(.*?),',response.text)  # latest price

zhangdiefus = re.findall('"f3":(.*?),',response.text)  # change (%)

zhangdiees = re.findall('"f4":(.*?),',response.text)  # change (amount)

chengjiaoliangs = re.findall('"f5":(.*?),',response.text)  # volume

chengjiaoes = re.findall('"f6":(.*?),',response.text)  # turnover

zhenfus = re.findall('"f7":(.*?),',response.text)  # amplitude

zuigaos = re.findall('"f15":(.*?),',response.text)  # high

zuidis = re.findall('"f16":(.*?),',response.text)  # low

jinkais = re.findall('"f17":(.*?),',response.text)  # open

zuoshous = re.findall('"f18":(.*?),',response.text)  # previous close

liangbis = re.findall('"f10":(.*?),',response.text)  # volume ratio

huanshoulvs = re.findall('"f8":(.*?),',response.text)  # turnover rate

shiyinglvs = re.findall('"f9":(.*?),',response.text)  # P/E ratio

3. Save the data

Store the data and save it to an Excel file.

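    # This loop runs inside the page loop from step 1, after the regular-expression extraction.

    # len(daimas) replaces the fixed 20 so that a short last page does not raise IndexError.

    for i in range(len(daimas)):

        sheet.append([daimas[i],names[i],zuixinjias[i],zhangdiefus[i],zhangdiees[i],

                      chengjiaoliangs[i],chengjiaoes[i],zhenfus[i],zuigaos[i],zuidis[i],

                      jinkais[i],zuoshous[i],liangbis[i],huanshoulvs[i],shiyinglvs[i]])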

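import openpyxl

# Create the workbook and write the header row before any data rows are appended.

wb = openpyxl.Workbook()

sheet = wb.active

sheet['A1'] = '代码'  # stock code

sheet['B1'] = '名称'  # name

sheet['C1'] = '最新价'  # latest price

sheet['D1'] = '涨跌幅'  # change (%)

sheet['E1'] = '涨跌额'  # change (amount)

sheet['F1'] = '成交量'  # volume

sheet['G1'] = '成交额'  # turnover

sheet['H1'] = '振幅'  # amplitude

sheet['I1'] = '最高'  # high

sheet['J1'] = '最低'  # low

sheet['K1'] = '今开'  # open

sheet['L1'] = '昨收'  # previous close

sheet['M1'] = '量比'  # volume ratio

sheet['N1'] = '换手率'  # turnover rate

sheet['O1'] = '市盈率'  # P/E ratio

# main() is not defined in these notes; presumably it wraps the page loop, the parsing and the row-append loop.

main()

# Save the workbook as "stock data".xlsx.

wb.save('股票数据.xlsx')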
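The row-by-row indexing used when appending to the sheet can also be written with `zip`, which pairs up the parallel lists and stops at the shortest one. This is a sketch under assumptions: `build_rows` is a hypothetical helper, only three of the fifteen columns are shown, and the sample values are made up to stand in for the lists returned by `re.findall`.

```python
def build_rows(*columns):
    """Combine parallel column lists into row tuples, stopping at the shortest list."""
    return list(zip(*columns))


# Made-up sample values standing in for the extracted lists.
daimas = ['300059', '000001']
names = ['demo A', 'demo B']
zuixinjias = ['33.5', '19.2']

rows = build_rows(daimas, names, zuixinjias)
for row in rows:
    print(row)  # each row could be passed to sheet.append(row)
```

Because `zip` stops at the shortest list, a page with fewer than 20 stocks yields fewer rows instead of an IndexError.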