Hudi concurrent read and write: problems encountered and open questions

1. Create a Kafka table

%flink.ssql

DROP TABLE IF EXISTS logtail; -- create the Kafka table

CREATE TABLE logtail (

order_state_tag int

......................

) WITH (

'connector' = 'kafka',

'topic' = 'ods.rds_core.plateform_stable.assure_orders',

'properties.bootstrap.servers' = 'dev-ct6-dc-worker01:9092,dev-ct6-dc-worker02:9092,dev-ct6-dc-worker03:9092',

'properties.group.id' = 'testGroup2',

'format' = 'canal-json',

'scan.startup.mode' = 'earliest-offset'

)

2. Create the Hudi table

%flink.ssql

drop table if exists hudi_order_ods_test ;

CREATE TABLE hudi_order_ods_test(

order_number varchar ,

order_key varchar ,

ts TIMESTAMP(3),

PRIMARY KEY(order_number) NOT ENFORCED

)

WITH (

'connector' = 'hudi',

'path' = 'hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test',

'table.type' = 'MERGE_ON_READ',

'read.streaming.enabled'= 'true',

'write.tasks'= '1',

'compaction.tasks'= '1',

'read.streaming.check-interval'= '30',

'write.insert.drop.duplicates' = 'true'

);

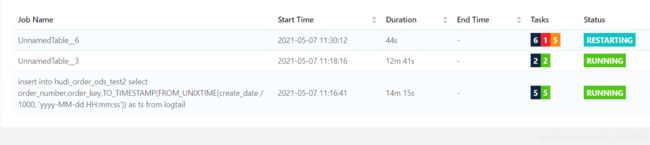

3. Read and write at the same time

%flink.ssql(type=update)

insert into hudi_order_ods_test select order_number,order_key,TO_TIMESTAMP(FROM_UNIXTIME(create_date / 1000, 'yyyy-MM-dd HH:mm:ss')) as ts from logtail;

%flink.ssql(type=update)

-- select count(distinct(order_number)) from hudi_order_ods_test4;

select * from hudi_order_ods_test;
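The `INSERT` statement in this step derives `ts` with `TO_TIMESTAMP(FROM_UNIXTIME(create_date / 1000, 'yyyy-MM-dd HH:mm:ss'))`. As a minimal sketch of what that expression computes, assuming `create_date` holds epoch milliseconds (its definition is elided in the Kafka DDL), the Python below reproduces the conversion. The function name `epoch_ms_to_ts` and the sample value are illustrative only. UTC is fixed here for reproducibility, whereas Flink formats the string in the session time zone.

```python
from datetime import datetime, timezone


def epoch_ms_to_ts(epoch_ms: int, tz=timezone.utc) -> str:
    """Mimic FROM_UNIXTIME(epoch_ms / 1000, 'yyyy-MM-dd HH:mm:ss')."""
    # Keep whole seconds only; the format pattern has no millisecond field.
    seconds = epoch_ms // 1000
    return datetime.fromtimestamp(seconds, tz=tz).strftime("%Y-%m-%d %H:%M:%S")


if __name__ == "__main__":
    # Hypothetical sample value, not taken from the Kafka topic.
    print(epoch_ms_to_ts(1620370875806))  # 2021-05-07 07:01:15 (UTC)
```

Because the pattern stops at seconds, the `TIMESTAMP(3)` column `ts` never carries a non-zero millisecond part, even though the source value has millisecond precision.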

4. Stop the read job, then start it again

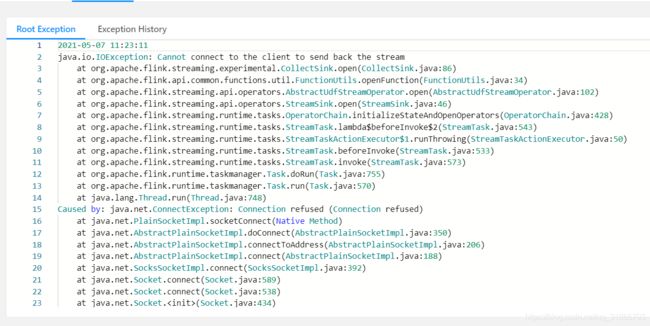

The read job reports an error:

5. Check the logs

2021-05-07 15:01:15,806 INFO org.apache.hudi.common.table.view.HoodieTableFileSystemView [] - Adding file-groups for partition :, #FileGroups=2

2021-05-07 15:01:15,806 INFO org.apache.hudi.common.table.view.AbstractTableFileSystemView [] - addFilesToView: NumFiles=14, NumFileGroups=2, FileGroupsCreationTime=1, StoreTimeTaken=0

2021-05-07 15:01:15,806 INFO org.apache.hudi.common.table.view.AbstractTableFileSystemView [] - Time to load partition () =5

2021-05-07 15:01:15,806 INFO org.apache.hudi.common.table.view.AbstractTableFileSystemView [] - Pending Compaction instant for (FileSlice {fileGroupId=HoodieFileGroupId{partitionPath='', fileId='61a65cf3-ae91-4e77-af19-26d9c1cea230'}, baseCommitTime=20210507150056, baseFile='HoodieBaseFile{fullPath=hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test/61a65cf3-ae91-4e77-af19-26d9c1cea230_0-1-0_20210507150056.parquet, fileLen=439367, BootstrapBaseFile=null}', logFiles='[HoodieLogFile{pathStr='hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test/.61a65cf3-ae91-4e77-af19-26d9c1cea230_20210507150056.log.1_0-1-0', fileLen=1885}]'}) is :Optional.empty

2021-05-07 15:01:15,807 INFO org.apache.flink.runtime.taskmanager.Task [] - split_reader -> SinkConversionToTuple2 (2/4)#239 (0478013d0ec2a918eada606c0c21421b) switched from CANCELING to CANCELED.

2021-05-07 15:01:15,807 INFO org.apache.flink.runtime.taskmanager.Task [] - Freeing task resources for split_reader -> SinkConversionToTuple2 (2/4)#239 (0478013d0ec2a918eada606c0c21421b).

2021-05-07 15:01:15,807 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Un-registering task and sending final execution state CANCELED to JobManager for task split_reader -> SinkConversionToTuple2 (1/4)#239 acf040250b4fa87d6ae4b8b2ac06df5d.

2021-05-07 15:01:15,808 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Un-registering task and sending final execution state CANCELED to JobManager for task split_reader -> SinkConversionToTuple2 (3/4)#239 626f50defe9f75cb4523619fd09c72f7.

2021-05-07 15:01:15,808 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Un-registering task and sending final execution state CANCELED to JobManager for task split_reader -> SinkConversionToTuple2 (2/4)#239 0478013d0ec2a918eada606c0c21421b.

2021-05-07 15:01:15,813 INFO org.apache.hudi.table.MarkerFiles [] - Creating Marker Path=hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test/.hoodie/.temp/20210507150106/61a65cf3-ae91-4e77-af19-26d9c1cea230_0-1-0_20210507150056.parquet.marker.APPEND

2021-05-07 15:01:15,820 INFO org.apache.hudi.common.table.log.HoodieLogFormat$WriterBuilder [] - Building HoodieLogFormat Writer

2021-05-07 15:01:15,820 INFO org.apache.hudi.common.table.log.HoodieLogFormat$WriterBuilder [] - HoodieLogFile on path hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test/.61a65cf3-ae91-4e77-af19-26d9c1cea230_20210507150056.log.1_0-1-0

2021-05-07 15:01:15,822 INFO org.apache.hudi.common.table.log.HoodieLogFormatWriter [] - HoodieLogFile{pathStr='hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test/.61a65cf3-ae91-4e77-af19-26d9c1cea230_20210507150056.log.1_0-1-0', fileLen=1885} exists. Appending to existing file

2021-05-07 15:01:15,865 INFO org.apache.hudi.io.HoodieAppendHandle [] - AppendHandle for partitionPath filePath .61a65cf3-ae91-4e77-af19-26d9c1cea230_20210507150056.log.1_0-1-0, took 85 ms.

2021-05-07 15:01:15,880 INFO org.apache.hudi.common.fs.FSUtils [] - Hadoop Configuration: fs.defaultFS: [hdfs://bi-524:8020], Config:[Configuration: core-default.xml, core-site.xml, mapred-default.xml, mapred-site.xml, yarn-default.xml, yarn-site.xml, hdfs-default.xml, hdfs-site.xml, /etc/hadoop/conf.cloudera.hdfs/core-site.xml, /etc/hadoop/conf.cloudera.hdfs/hdfs-site.xml], FileSystem: [DFS[DFSClient[clientName=DFSClient_NONMAPREDUCE_1220740860_94, ugi=OpsUser (auth:SIMPLE)]]]

2021-05-07 15:01:15,881 ERROR org.apache.hudi.source.StreamReadMonitoringFunction [] - Get write status of path: hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test/.61a65cf3-ae91-4e77-af19-26d9c1cea230_20210507145735.log.1_0-1-0 error

2021-05-07 15:01:15,882 INFO org.apache.flink.runtime.taskmanager.Task [] - Source: streaming_source (1/1)#239 (21419e6d2a499d0d6702212854e2d83d) switched from CANCELING to CANCELED.

2021-05-07 15:01:15,882 INFO org.apache.flink.runtime.taskmanager.Task [] - Freeing task resources for Source: streaming_source (1/1)#239 (21419e6d2a499d0d6702212854e2d83d).

2021-05-07 15:01:15,882 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Un-registering task and sending final execution state CANCELED to JobManager for task Source: streaming_source (1/1)#239 21419e6d2a499d0d6702212854e2d83d.

2021-05-07 15:01:15,891 INFO org.apache.hudi.common.table.HoodieTableMetaClient [] - Loading HoodieTableMetaClient from hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test

2021-05-07 15:01:15,892 INFO org.apache.hudi.io.FlinkAppendHandle [] - Closing the file 61a65cf3-ae91-4e77-af19-26d9c1cea230 as we are done with all the records 0

2021-05-07 15:01:15,892 INFO org.apache.hudi.common.table.HoodieTableMetaClient [] - Loading HoodieTableMetaClient from hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test

2021-05-07 15:01:15,893 INFO org.apache.hudi.common.fs.FSUtils [] - Hadoop Configuration: fs.defaultFS: [hdfs://bi-524:8020], Config:[Configuration: core-default.xml, core-site.xml, mapred-default.xml, mapred-site.xml, yarn-default.xml, yarn-site.xml, hdfs-default.xml, hdfs-site.xml, /etc/hadoop/conf.cloudera.hdfs/core-site.xml, /etc/hadoop/conf.cloudera.hdfs/hdfs-site.xml], FileSystem: [DFS[DFSClient[clientName=DFSClient_NONMAPREDUCE_1220740860_94, ugi=OpsUser (auth:SIMPLE)]]]

2021-05-07 15:01:15,894 INFO org.apache.hudi.client.HoodieFlinkWriteClient [] - Cleaner has been spawned already. Waiting for it to finish

2021-05-07 15:01:15,894 INFO org.apache.hudi.client.AsyncCleanerService [] - Waiting for async cleaner to finish

2021-05-07 15:01:15,894 INFO org.apache.hudi.client.HoodieFlinkWriteClient [] - Cleaner has finished

2021-05-07 15:01:15,894 INFO org.apache.hudi.sink.CleanFunction [] - Executor executes action [wait for cleaning finish] success!

2021-05-07 15:01:15,894 INFO org.apache.hudi.common.table.HoodieTableConfig [] - Loading table properties from hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test/.hoodie/hoodie.properties

2021-05-07 15:01:15,896 INFO org.apache.hudi.common.table.HoodieTableMetaClient [] - Finished Loading Table of type MERGE_ON_READ(version=1, baseFileFormat=PARQUET) from hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test

2021-05-07 15:01:15,896 INFO org.apache.hudi.common.table.HoodieTableMetaClient [] - Loading Active commit timeline for hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test

2021-05-07 15:01:15,899 INFO org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Loaded instants [[20210507144916__rollback__COMPLETED], [20210507145145__clean__COMPLETED], [20210507145235__clean__COMPLETED], [20210507145325__clean__COMPLETED], [20210507145415__clean__COMPLETED], [20210507145505__clean__COMPLETED], [20210507145555__clean__COMPLETED], [20210507145645__clean__COMPLETED], [20210507145735__clean__COMPLETED], [20210507145735__commit__COMPLETED], [20210507145736__deltacommit__COMPLETED], [20210507145746__deltacommit__COMPLETED], [20210507145756__deltacommit__COMPLETED], [20210507145806__deltacommit__COMPLETED], [20210507145816__deltacommit__COMPLETED], [20210507145825__clean__COMPLETED], [20210507145825__commit__COMPLETED], [20210507145826__deltacommit__COMPLETED], [20210507145836__deltacommit__COMPLETED], [20210507145847__deltacommit__COMPLETED], [20210507145856__deltacommit__COMPLETED], [20210507145906__deltacommit__COMPLETED], [20210507145915__clean__COMPLETED], [20210507145915__commit__COMPLETED], [20210507145916__deltacommit__COMPLETED], [20210507145926__deltacommit__COMPLETED], [20210507145937__deltacommit__COMPLETED], [20210507145946__deltacommit__COMPLETED], [20210507145956__deltacommit__COMPLETED], [20210507150005__clean__COMPLETED], [20210507150005__commit__COMPLETED], [20210507150006__deltacommit__COMPLETED], [20210507150016__deltacommit__COMPLETED], [20210507150027__deltacommit__COMPLETED], [20210507150036__deltacommit__COMPLETED], [20210507150047__deltacommit__COMPLETED], [20210507150055__clean__COMPLETED], [20210507150056__commit__COMPLETED], [20210507150057__deltacommit__COMPLETED], [==>20210507150106__deltacommit__INFLIGHT]]

2021-05-07 15:01:15,900 INFO org.apache.hudi.common.table.view.FileSystemViewManager [] - Creating View Manager with storage type :REMOTE_FIRST

2021-05-07 15:01:15,900 INFO org.apache.hudi.common.table.view.FileSystemViewManager [] - Creating remote first table view

2021-05-07 15:01:15,900 INFO org.apache.flink.streaming.api.operators.AbstractStreamOperator [] - No compaction plan for checkpoint 72

2021-05-07 15:01:15,913 INFO org.apache.hudi.common.fs.FSUtils [] - Hadoop Configuration: fs.defaultFS: [hdfs://bi-524:8020], Config:[Configuration: core-default.xml, core-site.xml, mapred-default.xml, mapred-site.xml, yarn-default.xml, yarn-site.xml, hdfs-default.xml, hdfs-site.xml, /etc/hadoop/conf.cloudera.hdfs/core-site.xml, /etc/hadoop/conf.cloudera.hdfs/hdfs-site.xml], FileSystem: [DFS[DFSClient[clientName=DFSClient_NONMAPREDUCE_1220740860_94, ugi=OpsUser (auth:SIMPLE)]]]

2021-05-07 15:01:15,914 INFO org.apache.hudi.common.table.HoodieTableConfig [] - Loading table properties from hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test/.hoodie/hoodie.properties

2021-05-07 15:01:15,915 INFO org.apache.hudi.common.table.HoodieTableMetaClient [] - Finished Loading Table of type MERGE_ON_READ(version=1, baseFileFormat=PARQUET) from hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test

2021-05-07 15:01:15,915 INFO org.apache.hudi.common.table.HoodieTableMetaClient [] - Loading Active commit timeline for hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test

2021-05-07 15:01:15,918 INFO org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Loaded instants [[20210507144916__rollback__COMPLETED], [20210507145145__clean__COMPLETED], [20210507145235__clean__COMPLETED], [20210507145325__clean__COMPLETED], [20210507145415__clean__COMPLETED], [20210507145505__clean__COMPLETED], [20210507145555__clean__COMPLETED], [20210507145645__clean__COMPLETED], [20210507145735__clean__COMPLETED], [20210507145735__commit__COMPLETED], [20210507145736__deltacommit__COMPLETED], [20210507145746__deltacommit__COMPLETED], [20210507145756__deltacommit__COMPLETED], [20210507145806__deltacommit__COMPLETED], [20210507145816__deltacommit__COMPLETED], [20210507145825__clean__COMPLETED], [20210507145825__commit__COMPLETED], [20210507145826__deltacommit__COMPLETED], [20210507145836__deltacommit__COMPLETED], [20210507145847__deltacommit__COMPLETED], [20210507145856__deltacommit__COMPLETED], [20210507145906__deltacommit__COMPLETED], [20210507145915__clean__COMPLETED], [20210507145915__commit__COMPLETED], [20210507145916__deltacommit__COMPLETED], [20210507145926__deltacommit__COMPLETED], [20210507145937__deltacommit__COMPLETED], [20210507145946__deltacommit__COMPLETED], [20210507145956__deltacommit__COMPLETED], [20210507150005__clean__COMPLETED], [20210507150005__commit__COMPLETED], [20210507150006__deltacommit__COMPLETED], [20210507150016__deltacommit__COMPLETED], [20210507150027__deltacommit__COMPLETED], [20210507150036__deltacommit__COMPLETED], [20210507150047__deltacommit__COMPLETED], [20210507150055__clean__COMPLETED], [20210507150056__commit__COMPLETED], [20210507150057__deltacommit__COMPLETED], [20210507150106__deltacommit__COMPLETED]]

2021-05-07 15:01:15,918 INFO org.apache.hudi.common.table.view.FileSystemViewManager [] - Creating View Manager with storage type :MEMORY

2021-05-07 15:01:15,918 INFO org.apache.hudi.common.table.view.FileSystemViewManager [] - Creating in-memory based Table View
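The two `Loaded instants` lines above differ only in their last entry: `20210507150106__deltacommit` is `INFLIGHT` in the first and `COMPLETED` in the second. A minimal Python sketch for pulling the non-completed instants out of such a log line follows. It assumes the `[timestamp__action__STATE]` layout seen in this log, and the function name `pending_instants` is made up for illustration; it is not part of Hudi.

```python
import re

# Matches entries such as [20210507150106__deltacommit__INFLIGHT],
# optionally prefixed with the "==>" marker seen in the log.
INSTANT_RE = re.compile(r"\[(?:==>)?(\d{14})__([a-z]+)__([A-Z]+)\]")


def pending_instants(log_line: str):
    """Return (timestamp, action, state) for every instant that is not COMPLETED."""
    return [m.groups() for m in INSTANT_RE.finditer(log_line)
            if m.group(3) != "COMPLETED"]


if __name__ == "__main__":
    # Shortened copy of the first "Loaded instants" line in the log.
    sample = ("Loaded instants [[20210507150056__commit__COMPLETED], "
              "[20210507150057__deltacommit__COMPLETED], "
              "[==>20210507150106__deltacommit__INFLIGHT]]")
    print(pending_instants(sample))
```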

2021-05-07 15:01:16,802 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 9d70513c6b98da8f4a1d3c3df16cbc50.

2021-05-07 15:01:16,803 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task split_reader -> SinkConversionToTuple2 (1/4)#240 (cd4f4161b6076b5e6027ccb7fe3d6cd0), deploy into slot with allocation id 9d70513c6b98da8f4a1d3c3df16cbc50.

2021-05-07 15:01:16,804 INFO org.apache.flink.runtime.taskmanager.Task [] - split_reader -> SinkConversionToTuple2 (1/4)#240 (cd4f4161b6076b5e6027ccb7fe3d6cd0) switched from CREATED to DEPLOYING.

2021-05-07 15:01:16,804 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task split_reader -> SinkConversionToTuple2 (1/4)#240 (cd4f4161b6076b5e6027ccb7fe3d6cd0) [DEPLOYING].

2021-05-07 15:01:16,804 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: split_reader -> SinkConversionToTuple2 (1/4)#240 (cd4f4161b6076b5e6027ccb7fe3d6cd0) [DEPLOYING].

2021-05-07 15:01:16,804 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot a6eea6ac244fafa405ea346a9641a283.

2021-05-07 15:01:16,804 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task split_reader -> SinkConversionToTuple2 (2/4)#240 (60beee17803679dd046dcc89da86f304), deploy into slot with allocation id a6eea6ac244fafa405ea346a9641a283.

2021-05-07 15:01:16,804 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - Using job/cluster config to configure application-defined state backend: File State Backend (checkpoints: 'hdfs:/flink/checkpoints', savepoints: 'null', asynchronous: TRUE, fileStateThreshold: 20480)

2021-05-07 15:01:16,805 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - Using application-defined state backend: File State Backend (checkpoints: 'hdfs:/flink/checkpoints', savepoints: 'null', asynchronous: TRUE, fileStateThreshold: 20480)

2021-05-07 15:01:16,805 INFO org.apache.flink.runtime.taskmanager.Task [] - split_reader -> SinkConversionToTuple2 (1/4)#240 (cd4f4161b6076b5e6027ccb7fe3d6cd0) switched from DEPLOYING to RUNNING.

2021-05-07 15:01:16,805 INFO org.apache.flink.runtime.taskmanager.Task [] - split_reader -> SinkConversionToTuple2 (2/4)#240 (60beee17803679dd046dcc89da86f304) switched from CREATED to DEPLOYING.

2021-05-07 15:01:16,805 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task split_reader -> SinkConversionToTuple2 (2/4)#240 (60beee17803679dd046dcc89da86f304) [DEPLOYING].

2021-05-07 15:01:16,805 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: split_reader -> SinkConversionToTuple2 (2/4)#240 (60beee17803679dd046dcc89da86f304) [DEPLOYING].

2021-05-07 15:01:16,805 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 9d70513c6b98da8f4a1d3c3df16cbc50.

2021-05-07 15:01:16,806 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - Using job/cluster config to configure application-defined state backend: File State Backend (checkpoints: 'hdfs:/flink/checkpoints', savepoints: 'null', asynchronous: TRUE, fileStateThreshold: 20480)

2021-05-07 15:01:16,806 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - Using application-defined state backend: File State Backend (checkpoints: 'hdfs:/flink/checkpoints', savepoints: 'null', asynchronous: TRUE, fileStateThreshold: 20480)

2021-05-07 15:01:16,806 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Source: streaming_source (1/1)#240 (ac5cc6182c4f6f2fba334b72e3d030b3), deploy into slot with allocation id 9d70513c6b98da8f4a1d3c3df16cbc50.

2021-05-07 15:01:16,806 INFO org.apache.flink.runtime.taskmanager.Task [] - split_reader -> SinkConversionToTuple2 (2/4)#240 (60beee17803679dd046dcc89da86f304) switched from DEPLOYING to RUNNING.

2021-05-07 15:01:16,806 INFO org.apache.flink.runtime.taskmanager.Task [] - Source: streaming_source (1/1)#240 (ac5cc6182c4f6f2fba334b72e3d030b3) switched from CREATED to DEPLOYING.

2021-05-07 15:01:16,806 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Source: streaming_source (1/1)#240 (ac5cc6182c4f6f2fba334b72e3d030b3) [DEPLOYING].

2021-05-07 15:01:16,806 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Source: streaming_source (1/1)#240 (ac5cc6182c4f6f2fba334b72e3d030b3) [DEPLOYING].

2021-05-07 15:01:16,806 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot cea2236d73314728d987bfe5e8c61605.

2021-05-07 15:01:16,807 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task split_reader -> SinkConversionToTuple2 (3/4)#240 (7c635644487dd020b1dfc2d6416ea6ab), deploy into slot with allocation id cea2236d73314728d987bfe5e8c61605.

2021-05-07 15:01:16,807 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - Using job/cluster config to configure application-defined state backend: File State Backend (checkpoints: 'hdfs:/flink/checkpoints', savepoints: 'null', asynchronous: TRUE, fileStateThreshold: 20480)

2021-05-07 15:01:16,807 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - Using application-defined state backend: File State Backend (checkpoints: 'hdfs:/flink/checkpoints', savepoints: 'null', asynchronous: TRUE, fileStateThreshold: 20480)

2021-05-07 15:01:16,808 INFO org.apache.flink.runtime.taskmanager.Task [] - split_reader -> SinkConversionToTuple2 (3/4)#240 (7c635644487dd020b1dfc2d6416ea6ab) switched from CREATED to DEPLOYING.

2021-05-07 15:01:16,808 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task split_reader -> SinkConversionToTuple2 (3/4)#240 (7c635644487dd020b1dfc2d6416ea6ab) [DEPLOYING].

2021-05-07 15:01:16,808 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: split_reader -> SinkConversionToTuple2 (3/4)#240 (7c635644487dd020b1dfc2d6416ea6ab) [DEPLOYING].

2021-05-07 15:01:16,808 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 9d70513c6b98da8f4a1d3c3df16cbc50.

2021-05-07 15:01:16,808 INFO org.apache.flink.runtime.taskmanager.Task [] - Source: streaming_source (1/1)#240 (ac5cc6182c4f6f2fba334b72e3d030b3) switched from DEPLOYING to RUNNING.

2021-05-07 15:01:16,809 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Sink: Zeppelin Flink Sql Stream Collect Sink 98d7f457-f5fa-4e8b-8f3f-9f2be85a1fc2 (1/1)#240 (2eb329672da8a5a63bef2a49b777c5e6), deploy into slot with allocation id 9d70513c6b98da8f4a1d3c3df16cbc50.

2021-05-07 15:01:16,809 INFO org.apache.flink.runtime.io.network.partition.consumer.SingleInputGate [] - Converting recovered input channels (1 channels)

2021-05-07 15:01:16,809 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - Using job/cluster config to configure application-defined state backend: File State Backend (checkpoints: 'hdfs:/flink/checkpoints', savepoints: 'null', asynchronous: TRUE, fileStateThreshold: 20480)

2021-05-07 15:01:16,809 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - Using application-defined state backend: File State Backend (checkpoints: 'hdfs:/flink/checkpoints', savepoints: 'null', asynchronous: TRUE, fileStateThreshold: 20480)

2021-05-07 15:01:16,809 INFO org.apache.flink.runtime.taskmanager.Task [] - Sink: Zeppelin Flink Sql Stream Collect Sink 98d7f457-f5fa-4e8b-8f3f-9f2be85a1fc2 (1/1)#240 (2eb329672da8a5a63bef2a49b777c5e6) switched from CREATED to DEPLOYING.

2021-05-07 15:01:16,809 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Sink: Zeppelin Flink Sql Stream Collect Sink 98d7f457-f5fa-4e8b-8f3f-9f2be85a1fc2 (1/1)#240 (2eb329672da8a5a63bef2a49b777c5e6) [DEPLOYING].

2021-05-07 15:01:16,809 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 7d1cd3f503bb01554b4aca9a8c2d84bf.

2021-05-07 15:01:16,810 INFO org.apache.flink.runtime.io.network.partition.consumer.SingleInputGate [] - Converting recovered input channels (1 channels)

2021-05-07 15:01:16,810 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task split_reader -> SinkConversionToTuple2 (4/4)#240 (556986f5978ba737cd65018176627bc2), deploy into slot with allocation id 7d1cd3f503bb01554b4aca9a8c2d84bf.

2021-05-07 15:01:16,809 INFO org.apache.flink.runtime.taskmanager.Task [] - split_reader -> SinkConversionToTuple2 (3/4)#240 (7c635644487dd020b1dfc2d6416ea6ab) switched from DEPLOYING to RUNNING.

2021-05-07 15:01:16,810 INFO org.apache.flink.runtime.taskmanager.Task [] - Registering task at network: Sink: Zeppelin Flink Sql Stream Collect Sink 98d7f457-f5fa-4e8b-8f3f-9f2be85a1fc2 (1/1)#240 (2eb329672da8a5a63bef2a49b777c5e6) [DEPLOYING].

2021-05-07 15:01:16,810 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - Using job/cluster config to configure application-defined state backend: File State Backend (checkpoints: 'hdfs:/flink/checkpoints', savepoints: 'null', asynchronous: TRUE, fileStateThreshold: 20480)

2021-05-07 15:01:16,810 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - Using application-defined state backend: File State Backend (checkpoints: 'hdfs:/flink/checkpoints', savepoints: 'null', asynchronous: TRUE, fileStateThreshold: 20480)

2021-05-07 15:01:16,810 INFO org.apache.flink.runtime.taskmanager.Task [] - Sink: Zeppelin Flink Sql Stream Collect Sink 98d7f457-f5fa-4e8b-8f3f-9f2be85a1fc2 (1/1)#240 (2eb329672da8a5a63bef2a49b777c5e6) switched from DEPLOYING to RUNNING.

2021-05-07 15:01:16,811 WARN org.apache.flink.metrics.MetricGroup [] - The operator name Sink: Zeppelin Flink Sql Stream Collect Sink 98d7f457-f5fa-4e8b-8f3f-9f2be85a1fc2 exceeded the 80 characters length limit and was truncated.

2021-05-07 15:01:16,812 WARN org.apache.flink.runtime.taskmanager.Task [] - Sink: Zeppelin Flink Sql Stream Collect Sink 98d7f457-f5fa-4e8b-8f3f-9f2be85a1fc2 (1/1)#240 (2eb329672da8a5a63bef2a49b777c5e6) switched from RUNNING to FAILED.

java.io.IOException: Cannot connect to the client to send back the stream

at org.apache.flink.streaming.experimental.CollectSink.open(CollectSink.java:86) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.api.common.functions.util.FunctionUtils.openFunction(FunctionUtils.java:34) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator.open(AbstractUdfStreamOperator.java:102) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.api.operators.StreamSink.open(StreamSink.java:46) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.OperatorChain.initializeStateAndOpenOperators(OperatorChain.java:428) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTask.lambda$beforeInvoke$2(StreamTask.java:543) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTaskActionExecutor$1.runThrowing(StreamTaskActionExecutor.java:50) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTask.beforeInvoke(StreamTask.java:533) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:573) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:755) [flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.runtime.taskmanager.Task.run(Task.java:570) [flink-dist_2.11-1.12.2.jar:1.12.2]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_162]

Caused by: java.net.ConnectException: Connection refused (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method) ~[?:1.8.0_162]

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350) ~[?:1.8.0_162]

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206) ~[?:1.8.0_162]

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188) ~[?:1.8.0_162]

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392) ~[?:1.8.0_162]

at java.net.Socket.connect(Socket.java:589) ~[?:1.8.0_162]

at java.net.Socket.connect(Socket.java:538) ~[?:1.8.0_162]

at java.net.Socket.<init>(Socket.java:434) ~[?:1.8.0_162]

at java.net.Socket.<init>(Socket.java:244) ~[?:1.8.0_162]

at org.apache.flink.streaming.experimental.CollectSink.open(CollectSink.java:82) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

... 11 more

6. Additional error output:

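An expert in the chat group says this is a dependency conflict:

It is a servlet-api conflict.

The conflicting class is probably ServletRequestWrapper.

The conflict needs to be resolved and the bundle rebuilt, then try again.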
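The `NoSuchMethodError` for `getContentLengthLong()` in the log below fits that diagnosis: the method was added to `ServletRequest` in Servlet 3.1, so an older servlet-api picked up first on the classpath would not have it. One way to look for the duplicate is to list every jar that ships `javax/servlet/ServletRequestWrapper.class`. The sketch below is only a starting point: the directory to scan and the helper name `find_class_in_jars` are assumptions, not something stated in the original report.

```python
import zipfile
from pathlib import Path


def find_class_in_jars(jar_dir, class_entry="javax/servlet/ServletRequestWrapper.class"):
    """Return the names of jars under jar_dir that contain class_entry."""
    hits = []
    for jar in sorted(Path(jar_dir).rglob("*.jar")):
        try:
            with zipfile.ZipFile(jar) as zf:
                if class_entry in zf.namelist():
                    hits.append(jar.name)
        except zipfile.BadZipFile:
            # Skip files that carry a .jar suffix but are not valid archives.
            continue
    return hits
```

For example, calling `find_class_in_jars("/opt/flink/lib")` (a hypothetical path) and getting more than one jar back would point at the candidates to exclude or shade before rebuilding.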

2021-05-07 15:01:15,783 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - Using job/cluster config to configure application-defined state backend: File State Backend (checkpoints: 'hdfs:/flink/checkpoints', savepoints: 'null', asynchronous: TRUE, fileStateThreshold: 20480)

2021-05-07 15:01:15,783 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - Using application-defined state backend: File State Backend (checkpoints: 'hdfs:/flink/checkpoints', savepoints: 'null', asynchronous: TRUE, fileStateThreshold: 20480)

2021-05-07 15:01:15,784 INFO org.apache.flink.runtime.taskmanager.Task [] - Sink: Zeppelin Flink Sql Stream Collect Sink 98d7f457-f5fa-4e8b-8f3f-9f2be85a1fc2 (1/1)#239 (25b1eac0559e303c5c9d405390cd20d8) switched from DEPLOYING to RUNNING.

2021-05-07 15:01:15,784 WARN org.apache.flink.metrics.MetricGroup [] - The operator name Sink: Zeppelin Flink Sql Stream Collect Sink 98d7f457-f5fa-4e8b-8f3f-9f2be85a1fc2 exceeded the 80 characters length limit and was truncated.

2021-05-07 15:01:15,786 INFO org.apache.hudi.common.table.view.FileSystemViewManager [] - Creating remote view for basePath hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test. Server=0.0.0.0:17598, Timeout=300

2021-05-07 15:01:15,786 INFO org.apache.flink.runtime.io.network.partition.consumer.SingleInputGate [] - Converting recovered input channels (1 channels)

2021-05-07 15:01:15,786 INFO org.apache.flink.runtime.io.network.partition.consumer.SingleInputGate [] - Converting recovered input channels (1 channels)

2021-05-07 15:01:15,786 INFO org.apache.hudi.common.table.view.FileSystemViewManager [] - Creating InMemory based view for basePath hdfs://bi-524:8020/tmp/hudi/hudi_order_ods_test

2021-05-07 15:01:15,793 INFO org.apache.hudi.common.table.view.AbstractTableFileSystemView [] - Took 0 ms to read 0 instants, 0 replaced file groups

2021-05-07 15:01:15,786 WARN org.apache.flink.runtime.taskmanager.Task [] - Sink: Zeppelin Flink Sql Stream Collect Sink 98d7f457-f5fa-4e8b-8f3f-9f2be85a1fc2 (1/1)#239 (25b1eac0559e303c5c9d405390cd20d8) switched from RUNNING to FAILED.

java.io.IOException: Cannot connect to the client to send back the stream

at org.apache.flink.streaming.experimental.CollectSink.open(CollectSink.java:86) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.api.common.functions.util.FunctionUtils.openFunction(FunctionUtils.java:34) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator.open(AbstractUdfStreamOperator.java:102) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.api.operators.StreamSink.open(StreamSink.java:46) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.OperatorChain.initializeStateAndOpenOperators(OperatorChain.java:428) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTask.lambda$beforeInvoke$2(StreamTask.java:543) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTaskActionExecutor$1.runThrowing(StreamTaskActionExecutor.java:50) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTask.beforeInvoke(StreamTask.java:533) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:573) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:755) [flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.runtime.taskmanager.Task.run(Task.java:570) [flink-dist_2.11-1.12.2.jar:1.12.2]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_162]

Caused by: java.net.ConnectException: Connection refused (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method) ~[?:1.8.0_162]

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350) ~[?:1.8.0_162]

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206) ~[?:1.8.0_162]

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188) ~[?:1.8.0_162]

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392) ~[?:1.8.0_162]

at java.net.Socket.connect(Socket.java:589) ~[?:1.8.0_162]

at java.net.Socket.connect(Socket.java:538) ~[?:1.8.0_162]

at java.net.Socket.<init>(Socket.java:434) ~[?:1.8.0_162]

at java.net.Socket.<init>(Socket.java:244) ~[?:1.8.0_162]

at org.apache.flink.streaming.experimental.CollectSink.open(CollectSink.java:82) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

... 11 more

2021-05-07 15:01:15,793 INFO org.apache.flink.runtime.taskmanager.Task [] - Freeing task resources for Sink: Zeppelin Flink Sql Stream Collect Sink 98d7f457-f5fa-4e8b-8f3f-9f2be85a1fc2 (1/1)#239 (25b1eac0559e303c5c9d405390cd20d8).

2021-05-07 15:01:15,794 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Un-registering task and sending final execution state FAILED to JobManager for task Sink: Zeppelin Flink Sql Stream Collect Sink 98d7f457-f5fa-4e8b-8f3f-9f2be85a1fc2 (1/1)#239 25b1eac0559e303c5c9d405390cd20d8.

2021-05-07 15:01:15,794 INFO org.apache.hudi.common.util.ClusteringUtils [] - Found 0 files in pending clustering operations

2021-05-07 15:01:15,795 INFO org.apache.hudi.common.table.view.RemoteHoodieTableFileSystemView [] - Sending request : (http://0.0.0.0:17598/v1/hoodie/view/slices/file/latest/?partition=&basepath=hdfs%3A%2F%2Fbi-524%3A8020%2Ftmp%2Fhudi%2Fhudi_order_ods_test&fileid=61a65cf3-ae91-4e77-af19-26d9c1cea230&lastinstantts=20210507150057&timelinehash=a61e57e82b6c2075384049565e0049510163b558cae52e5f6ddf181370ca44ee)

2021-05-07 15:01:15,798 ERROR io.javalin.Javalin [] - Exception occurred while servicing http-request

java.lang.NoSuchMethodError: io.javalin.core.CachedRequestWrapper.getContentLengthLong()J

at io.javalin.core.CachedRequestWrapper.<init>(CachedRequestWrapper.kt:22) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at io.javalin.core.JavalinServlet.service(JavalinServlet.kt:34) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at io.javalin.core.util.JettyServerUtil$initialize$httpHandler$1.doHandle(JettyServerUtil.kt:72) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:203) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.servlet.ServletHandler.doScope(ServletHandler.java:480) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.server.session.SessionHandler.doScope(SessionHandler.java:1668) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:201) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.server.handler.ContextHandler.doScope(ContextHandler.java:1247) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:144) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.server.handler.HandlerList.handle(HandlerList.java:61) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.server.handler.StatisticsHandler.handle(StatisticsHandler.java:174) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:132) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.server.Server.handle(Server.java:502) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.server.HttpChannel.handle(HttpChannel.java:370) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.server.HttpConnection.onFillable(HttpConnection.java:267) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.io.AbstractConnection$ReadCallback.succeeded(AbstractConnection.java:305) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.io.FillInterest.fillable(FillInterest.java:103) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.io.ChannelEndPoint$2.run(ChannelEndPoint.java:117) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.util.thread.strategy.EatWhatYouKill.runTask(EatWhatYouKill.java:333) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.util.thread.strategy.EatWhatYouKill.doProduce(EatWhatYouKill.java:310) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.util.thread.strategy.EatWhatYouKill.tryProduce(EatWhatYouKill.java:168) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.util.thread.strategy.EatWhatYouKill.run(EatWhatYouKill.java:126) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.util.thread.ReservedThreadExecutor$ReservedThread.run(ReservedThreadExecutor.java:366) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.util.thread.QueuedThreadPool.runJob(QueuedThreadPool.java:765) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.org.apache.jetty.util.thread.QueuedThreadPool$2.run(QueuedThreadPool.java:683) [hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_162]

2021-05-07 15:01:15,800 INFO org.apache.flink.runtime.taskmanager.Task [] - Attempting to cancel task split_reader -> SinkConversionToTuple2 (4/4)#239 (3e60d03785ac1060bb50e21fc291da1e).

2021-05-07 15:01:15,800 INFO org.apache.flink.runtime.taskmanager.Task [] - split_reader -> SinkConversionToTuple2 (4/4)#239 (3e60d03785ac1060bb50e21fc291da1e) switched from RUNNING to CANCELING.

2021-05-07 15:01:15,800 INFO org.apache.flink.runtime.taskmanager.Task [] - Triggering cancellation of task code split_reader -> SinkConversionToTuple2 (4/4)#239 (3e60d03785ac1060bb50e21fc291da1e).

2021-05-07 15:01:15,801 INFO org.apache.flink.runtime.taskmanager.Task [] - Attempting to cancel task Source: streaming_source (1/1)#239 (21419e6d2a499d0d6702212854e2d83d).

2021-05-07 15:01:15,801 INFO org.apache.flink.runtime.taskmanager.Task [] - Source: streaming_source (1/1)#239 (21419e6d2a499d0d6702212854e2d83d) switched from RUNNING to CANCELING.

2021-05-07 15:01:15,801 INFO org.apache.flink.runtime.taskmanager.Task [] - Triggering cancellation of task code Source: streaming_source (1/1)#239 (21419e6d2a499d0d6702212854e2d83d).

2021-05-07 15:01:15,800 ERROR org.apache.hudi.common.table.view.PriorityBasedFileSystemView [] - Got error running preferred function. Trying secondary

org.apache.hudi.exception.HoodieRemoteException: Server Error

at org.apache.hudi.common.table.view.RemoteHoodieTableFileSystemView.getLatestFileSlice(RemoteHoodieTableFileSystemView.java:297) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.common.table.view.PriorityBasedFileSystemView.execute(PriorityBasedFileSystemView.java:97) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.common.table.view.PriorityBasedFileSystemView.getLatestFileSlice(PriorityBasedFileSystemView.java:252) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.io.HoodieAppendHandle.init(HoodieAppendHandle.java:129) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.io.HoodieAppendHandle.doAppend(HoodieAppendHandle.java:328) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.table.action.commit.delta.BaseFlinkDeltaCommitActionExecutor.handleUpdate(BaseFlinkDeltaCommitActionExecutor.java:55) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.table.action.commit.BaseFlinkCommitActionExecutor.handleUpsertPartition(BaseFlinkCommitActionExecutor.java:194) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.table.action.commit.BaseFlinkCommitActionExecutor.execute(BaseFlinkCommitActionExecutor.java:110) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.table.action.commit.BaseFlinkCommitActionExecutor.execute(BaseFlinkCommitActionExecutor.java:72) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.table.action.commit.FlinkWriteHelper.write(FlinkWriteHelper.java:70) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.table.action.commit.delta.FlinkUpsertDeltaCommitActionExecutor.execute(FlinkUpsertDeltaCommitActionExecutor.java:49) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.table.HoodieFlinkMergeOnReadTable.upsert(HoodieFlinkMergeOnReadTable.java:60) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.client.HoodieFlinkWriteClient.upsert(HoodieFlinkWriteClient.java:146) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.sink.StreamWriteFunction.lambda$initWriteFunction$1(StreamWriteFunction.java:236) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.sink.StreamWriteFunction.lambda$flushRemaining$5(StreamWriteFunction.java:377) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at java.util.LinkedHashMap$LinkedValues.forEach(LinkedHashMap.java:608) ~[?:1.8.0_162]

at org.apache.hudi.sink.StreamWriteFunction.flushRemaining(StreamWriteFunction.java:371) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.sink.StreamWriteFunction.snapshotState(StreamWriteFunction.java:168) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.flink.streaming.util.functions.StreamingFunctionUtils.trySnapshotFunctionState(StreamingFunctionUtils.java:118) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.util.functions.StreamingFunctionUtils.snapshotFunctionState(StreamingFunctionUtils.java:99) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator.snapshotState(AbstractUdfStreamOperator.java:89) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.api.operators.StreamOperatorStateHandler.snapshotState(StreamOperatorStateHandler.java:205) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.api.operators.StreamOperatorStateHandler.snapshotState(StreamOperatorStateHandler.java:162) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.api.operators.AbstractStreamOperator.snapshotState(AbstractStreamOperator.java:371) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.SubtaskCheckpointCoordinatorImpl.checkpointStreamOperator(SubtaskCheckpointCoordinatorImpl.java:686) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.SubtaskCheckpointCoordinatorImpl.buildOperatorSnapshotFutures(SubtaskCheckpointCoordinatorImpl.java:607) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.SubtaskCheckpointCoordinatorImpl.takeSnapshotSync(SubtaskCheckpointCoordinatorImpl.java:572) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.SubtaskCheckpointCoordinatorImpl.checkpointState(SubtaskCheckpointCoordinatorImpl.java:298) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTask.lambda$performCheckpoint$9(StreamTask.java:1004) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTaskActionExecutor$1.runThrowing(StreamTaskActionExecutor.java:50) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTask.performCheckpoint(StreamTask.java:988) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTask.triggerCheckpointOnBarrier(StreamTask.java:947) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.io.CheckpointBarrierHandler.notifyCheckpoint(CheckpointBarrierHandler.java:115) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.io.SingleCheckpointBarrierHandler.processBarrier(SingleCheckpointBarrierHandler.java:156) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.io.CheckpointedInputGate.handleEvent(CheckpointedInputGate.java:180) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.io.CheckpointedInputGate.pollNext(CheckpointedInputGate.java:157) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.emitNext(StreamTaskNetworkInput.java:179) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:65) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:396) ~[flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:191) [flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:617) [flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:581) [flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:755) [flink-dist_2.11-1.12.2.jar:1.12.2]

at org.apache.flink.runtime.taskmanager.Task.run(Task.java:570) [flink-dist_2.11-1.12.2.jar:1.12.2]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_162]

Caused by: org.apache.http.client.HttpResponseException: Server Error

at org.apache.http.impl.client.AbstractResponseHandler.handleResponse(AbstractResponseHandler.java:69) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.http.client.fluent.Response.handleResponse(Response.java:90) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.http.client.fluent.Response.returnContent(Response.java:97) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.common.table.view.RemoteHoodieTableFileSystemView.executeRequest(RemoteHoodieTableFileSystemView.java:179) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

at org.apache.hudi.common.table.view.RemoteHoodieTableFileSystemView.getLatestFileSlice(RemoteHoodieTableFileSystemView.java:293) ~[hudi-flink-bundle_2.11-0.9.0-SNAPSHOT.jar:0.9.0-SNAPSHOT]

... 44 more
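The `NoSuchMethodError` for `getContentLengthLong()` in the log above deserves a closer look. That method was added to `ServletRequest` in Servlet 3.1, so one plausible cause (an assumption, not something this log confirms) is that an older servlet API jar is picked up on the Flink classpath ahead of the version Javalin expects. The sketch below uses plain reflection to report which jar a class was loaded from and whether it exposes a given method. The class name `ClasspathProbe` and its helpers are hypothetical, and the result only means something when it runs with the same classpath as the TaskManager.

```java
import java.lang.reflect.Method;
import java.security.CodeSource;

public class ClasspathProbe {

    /** Returns true if the named class can be loaded and has a public method with this name. */
    static boolean hasPublicMethod(String className, String methodName) {
        try {
            Class<?> cls = Class.forName(className);
            for (Method m : cls.getMethods()) {
                if (m.getName().equals(methodName)) {
                    return true;
                }
            }
            return false;
        } catch (ClassNotFoundException | LinkageError e) {
            // Class is missing or could not be linked: treat as "method not available".
            return false;
        }
    }

    /** Returns the jar or directory the class was loaded from, or a placeholder if unknown. */
    static String loadedFrom(String className) {
        try {
            CodeSource src = Class.forName(className).getProtectionDomain().getCodeSource();
            return src == null ? "(bootstrap or unknown)" : String.valueOf(src.getLocation());
        } catch (ClassNotFoundException | LinkageError e) {
            return "(not on classpath)";
        }
    }

    public static void main(String[] args) {
        // Assumption: the servlet API is the conflicting dependency.
        String cls = "javax.servlet.ServletRequest";
        System.out.println(cls + " loaded from: " + loadedFrom(cls));
        System.out.println("has getContentLengthLong: "
                + hasPublicMethod(cls, "getContentLengthLong"));
    }
}
```

If the method turns out to be missing, the printed location points at the jar to investigate. That would narrow the problem down to a dependency conflict rather than to concurrent reads and writes as such.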

2. Do multiple operators checkpoint at the same time?

Looking at the HDFS path:

3. Zeppelin reports an error when running the job

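Workarounds and troubleshooting steps tried so far:

1. Upgrade Zeppelin to 0.9.1

2. Set the Flink log level to DEBUG and monitor the logs

3. Set the parameter (I currently have it set to 8)

I am still testing whether a similar problem shows up again.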

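Result: none of this helped:

Checking the logs.

Reproducing the scenario:

So it may be that Hudi itself runs into a port or process conflict when reading and writing at the same time.

How should this kind of problem be solved?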
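One way to test the port-conflict hypothesis is to check, on the affected host, whether a given port can still be bound while the jobs are running. The following is only a minimal sketch: `PortProbe` is a hypothetical helper, and the default port 17598 is simply the one that appears in the `RemoteHoodieTableFileSystemView` request line of this particular log, not a fixed Hudi setting.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.ServerSocket;

public class PortProbe {

    /** Returns true if a listening socket can currently be bound to the port on all interfaces. */
    static boolean isPortFree(int port) {
        try (ServerSocket socket = new ServerSocket()) {
            // Disable address reuse so an existing listener is not silently shared.
            socket.setReuseAddress(false);
            socket.bind(new InetSocketAddress(port));
            return true;
        } catch (IOException e) {
            // Typically a BindException: the address is already in use.
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumption: 17598 is taken from the log above; pass another port as the first argument.
        int port = args.length > 0 ? Integer.parseInt(args[0]) : 17598;
        System.out.println("port " + port + (isPortFree(port) ? " is free" : " is in use"));
    }
}
```

Running it before and after starting the read job would show whether the two jobs compete for the same port. If the port stays free throughout, the conflict hypothesis becomes less likely.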

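Partial summary:

I am pausing my evaluation and use of Hudi for now. The problems encountered so far:

1. The community is fairly active, but most users are still at the evaluation stage and run into a lot of problems.

2. Hudi currently has many bugs, including version dependency conflicts and poor Hive compatibility (Hive 1.x is not supported, and 2.1 also has some problems, for example with field types and with only the Parquet format being supported). Hive 2.3+ is the best choice.

3. Runtime stability currently feels poor, and I am clearly not the only one who has noticed this.

4. The next Hudi release may come out in June, so for now it is best to wait.

5. Maybe I am just slow, but I still have not worked out how Hudi is meant to be used: dimension-table joins are not supported, and the documentation has nothing on table joins (the official site is not being updated either, which I have to complain about).

6. My original idea was to replace Hive, or at least part of what it does. For now that road still looks long, so I will keep watching.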