[3D Geometry Learning] Deep Learning on Meshes from Scratch, Part 1: Input (PyTorch)

This article is an entry in the New Star Program, Artificial Intelligence (PyTorch) track: https://bbs.csdn.net/topics/613989052

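Deep Learning on Meshes from Scratch, Part 1: Input

- Introduction
- 1. Overview
  - 1.1 Triangle meshes
  - 1.2 DataLoader
- 2. Core code
  - 2.1 Reading the mesh and computing features
  - 2.2 DataLoader
- 3. A simple mesh classification experiment
  - 3.1 Classification results
  - 3.2 Full code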

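Introduction

This article covers the following:

- Triangle meshes, and how to read them and compute their features
- The DataLoader in PyTorch
- A simple mesh classification experiment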

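1. Overview

1.1 Triangle meshes

A triangle mesh is a fairly popular way to represent a 3D model: the model is built from many triangles stitched together, as shown in the figure above.

- It can be viewed simply as a combination of vertices, edges, and faces. This article uses the faces as the basic elements for feature extraction.
- Compared with representations such as point clouds or voxels, it can capture richer detail with a small number of triangles.

For background, search Baidu or see reference 1: 一文搞懂三角网格(Triangle Mesh)

Deep learning on meshes requires converting this irregular representation into a regular matrix structure (for example, number of faces × number of features).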
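To make the "faces × features" layout concrete, here is a minimal NumPy sketch. The tetrahedron and the use of the face centroid as the per-face feature are illustrative assumptions only; they are not the features used later in this article.

```python
import numpy as np

# A tetrahedron: 4 vertices, 4 triangular faces (hypothetical toy mesh).
vs = np.array([[0.0, 0.0, 0.0],
               [1.0, 0.0, 0.0],
               [0.0, 1.0, 0.0],
               [0.0, 0.0, 1.0]])
# Each row holds the three vertex indices of one face.
faces = np.array([[0, 2, 1],
                  [0, 1, 3],
                  [0, 3, 2],
                  [1, 2, 3]])

# vs[faces] has shape (num_faces, 3 corners, 3 coords); averaging over the
# corners gives one centroid per face, i.e. a regular (faces x features) matrix.
face_features = vs[faces].mean(axis=1)
print(face_features.shape)  # (4, 3)
```

However irregular the connectivity is, the result is an ordinary 2D array with one row per face, which is the shape a network can consume.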

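1.2 DataLoader

To train or test a neural network, data has to be fed into it. You could write the code by hand to set the batch size, decide whether to shuffle the data after each epoch, load data in parallel, align samples of different sizes, and so on.

torch.utils.data.DataLoader is a convenient utility class that handles all of the above, and it can be subclassed or wrapped to customize or extend its behavior.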

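| Main parameter | Description |
|---|---|
| dataset | The dataset to load from (here, a custom dataset) |
| batch_size | Number of samples loaded per batch; default 1 |
| shuffle | Whether to shuffle the data order; default False |
| collate_fn | Merges a list of samples into one batch (typically tensors) to feed the network |

For more, see the official documentation or reference 2: torch.utils.data.DataLoader()官方文档翻译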
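The parameters in the table can be tried out on a toy dataset. This is a minimal sketch: ToyDataset and stack_collate are hypothetical names invented for the illustration, and the (5, 6) sample shape is arbitrary.

```python
import numpy as np
import torch
import torch.utils.data


class ToyDataset(torch.utils.data.Dataset):
    """Hypothetical dataset: 10 samples, each a (5, 6) feature matrix and a label."""

    def __len__(self):
        return 10

    def __getitem__(self, index):
        features = np.full((5, 6), index, dtype=np.float32)
        return {'face_features': features, 'label': index % 2}


def stack_collate(batch):
    # Merge a list of samples into one batch of tensors.
    features = torch.from_numpy(np.stack([d['face_features'] for d in batch]))
    labels = torch.tensor([d['label'] for d in batch], dtype=torch.long)
    return features, labels


loader = torch.utils.data.DataLoader(
    ToyDataset(),
    batch_size=4,     # samples per batch
    shuffle=False,    # keep the order fixed for this demo
    num_workers=0,    # load in the main process
    collate_fn=stack_collate)

batch_shapes = [tuple(features.shape) for features, _ in loader]
print(batch_shapes)  # [(4, 5, 6), (4, 5, 6), (2, 5, 6)]
```

With 10 samples and batch_size=4, the last batch holds the remaining 2 samples because drop_last defaults to False.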

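2. Core code

2.1 Reading the mesh and computing features

The dataset is SHREC'11; see 三角网格(Triangular Mesh)分类数据集 or MeshCNN.

- In an OBJ mesh file, the first block of lines, starting with v, holds the vertex coordinates; the later lines, starting with f, hold the faces, each given as vertex indices.
- Each face consists of three vertices (equivalently, three edges). Two faces that share an edge, that is, two vertices, are considered adjacent.
- The features used here are the six dihedral angles among a face and its neighboring faces: a face plus its three neighbors gives four faces, and pairing them up yields six dihedral angles (the orientation of the normals is not considered here). If only the three angles between the face and its neighbors were used as input, the two shapes on the left and right of the figure below would produce the same input, which is ambiguous.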
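Before the full listing, the OBJ parsing and adjacency rules described above can be sketched on a tiny example. The OBJ text below is a made-up unit square split into two triangles, and this simplified parser ignores the negative (relative) indices that the full listing handles.

```python
import numpy as np

# Hypothetical OBJ content: a unit square split into two triangles.
obj_text = """
v 0 0 0
v 1 0 0
v 1 1 0
v 0 1 0
f 1 2 3
f 1 3 4
"""

vs, faces = [], []
for line in obj_text.splitlines():
    parts = line.split()
    if not parts:
        continue
    if parts[0] == 'v':
        vs.append([float(x) for x in parts[1:4]])
    elif parts[0] == 'f':
        # OBJ indices are 1-based; for 'i/j/k' tokens keep only the vertex index.
        faces.append([int(tok.split('/')[0]) - 1 for tok in parts[1:4]])
vs, faces = np.asarray(vs), np.asarray(faces, dtype=int)

# Map every undirected edge to the faces that contain it.
edge_faces = {}
for face_id, face in enumerate(faces):
    for i in range(3):
        edge = tuple(sorted((int(face[i]), int(face[(i + 1) % 3]))))
        edge_faces.setdefault(edge, []).append(face_id)

# Two faces listed under the same edge are neighbours.
shared = {edge: ids for edge, ids in edge_faces.items() if len(ids) == 2}
print(shared)  # {(0, 2): [0, 1]}
```

Sorting the two vertex indices gives each edge a single canonical key, so both faces that touch the diagonal (0, 2) land in the same dictionary entry.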

```python
def __getitem__(self, index):
    path = self.paths[index][0]   # path to the mesh file
    label = self.paths[index][1]  # class label

    # Cache file
    filename, _ = os.path.splitext(path)
    prefix = os.path.basename(filename)
    cache = os.path.join(self.cache_dir, prefix + '.pkl')

    if os.path.exists(cache):  # cache exists: load it directly
        with open(cache, 'rb') as f:
            meta = pickle.load(f)
    else:  # no cache: read the OBJ file and compute everything needed
        mesh = Mesh()
        vs, faces = [], []
        with open(path) as f:
            for line in f:
                line = line.strip()
                splitted_line = line.split()
                if not splitted_line:
                    continue
                elif splitted_line[0] == 'v':
                    vs.append([float(v) for v in splitted_line[1:4]])
                elif splitted_line[0] == 'f':
                    face_vertex_ids = [int(c.split('/')[0]) for c in splitted_line[1:]]
                    assert len(face_vertex_ids) == 3
                    # OBJ indices are 1-based; negative indices are relative to the end
                    face_vertex_ids = [(ind - 1) if (ind >= 0) else (len(vs) + ind)
                                       for ind in face_vertex_ids]
                    faces.append(face_vertex_ids)
        vs = np.asarray(vs)
        faces = np.asarray(faces, dtype=int)

        # Face adjacency
        mesh_nb = [[] for _ in faces]  # one neighbor list per face
        edge_faces = dict()            # (v, v): [f1, f2], the faces containing each edge
        for face_id, face in enumerate(faces):
            edge_num = len(face)  # number of edges of this face
            for i in range(edge_num):  # walk the vertices of the face to build its edges
                edge = (face[i], face[(i + 1) % edge_num])  # (vertex id, vertex id)
                edge = tuple(sorted(list(edge)))            # canonical representation
                if edge not in edge_faces:
                    edge_faces[edge] = []
                edge_faces[edge].append(face_id)  # record the face that owns this edge
        for edge, face in edge_faces.items():  # (v, v): [f1, f2] means f1 and f2 are adjacent
            if len(face) == 2:  # the edge is shared by exactly two faces
                mesh_nb[face[1]].append(face[0])
                mesh_nb[face[0]].append(face[1])
        for i in range(len(mesh_nb)):
            # Faces with fewer than three neighbors (including isolated faces)
            # are padded with their own index.
            while len(mesh_nb[i]) < 3:
                mesh_nb[i].append(i)
        mesh_nb = np.array(mesh_nb, dtype=np.int64)

        # Face normals
        face_normals = np.cross(vs[faces[:, 1]] - vs[faces[:, 0]],
                                vs[faces[:, 2]] - vs[faces[:, 1]])
        face_areas = np.sqrt((face_normals ** 2).sum(axis=1))  # twice the face area
        face_error = np.where(face_areas == 0)                 # indices of zero-area faces
        face_areas_b = face_areas.copy()
        face_areas_b[face_error] = 1                           # avoid division by zero
        face_normals /= face_areas_b[:, np.newaxis]
        face_areas *= 0.5                                      # actual face area

        # Six dihedral angles per face
        face_dangle = mesh_nb.copy().astype(np.float64)
        nb_dangle = mesh_nb.copy().astype(np.float64)
        for a in range(len(mesh_nb)):  # for every face
            normal_a = face_normals[a]  # normal of the current face
            for b in range(len(mesh_nb[a])):  # for every neighbor
                normal_b = face_normals[mesh_nb[a][b]]  # normal of the neighbor
                tmp = np.sum(normal_a * normal_b).clip(-1, 1)  # keep arccos in its domain
                face_dangle[a][b] = np.arccos(tmp)
            # Angles between the three neighbors themselves
            nb_dangle[a][0] = np.arccos(
                np.sum((face_normals[mesh_nb[a][0]] * face_normals[mesh_nb[a][1]])).clip(-1, 1))
            nb_dangle[a][1] = np.arccos(
                np.sum((face_normals[mesh_nb[a][0]] * face_normals[mesh_nb[a][2]])).clip(-1, 1))
            nb_dangle[a][2] = np.arccos(
                np.sum((face_normals[mesh_nb[a][1]] * face_normals[mesh_nb[a][2]])).clip(-1, 1))
        dihedral_angle = np.concatenate([face_dangle, nb_dangle], axis=1)

        # Center the mesh at the middle of its bounding box
        xyz_min = np.min(vs[:, 0:3], axis=0)
        xyz_max = np.max(vs[:, 0:3], axis=0)
        xyz_move = xyz_min + (xyz_max - xyz_min) / 2
        vs[:, 0:3] = vs[:, 0:3] - xyz_move
        # Scale
        scale = np.max(vs[:, 0:3])
        vs[:, 0:3] = vs[:, 0:3] / scale

        # Face center coordinates
        xyz = []
        for i in range(3):
            xyz.append(vs[faces[:, i]])
        xyz = np.array(xyz)
        mean_xyz = xyz.sum(axis=0) / 3

        # Information to store
        mesh.vs = vs
        mesh.faces = faces
        mesh.xyz = mean_xyz.swapaxes(0, 1)
        mesh.mesh_nb = mesh_nb
        mesh.dihedral_angle = dihedral_angle
        meta = {'mesh': mesh, 'label': label}
        with open(cache, 'wb') as f:
            pickle.dump(meta, f)

    meta['face_features'] = meta['mesh'].dihedral_angle
    return meta
```
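The angle computation in the listing above boils down to the arccos of the dot product of two unit normals. Here is a small worked sketch on two triangles folded at a right angle along a shared edge; the vertex coordinates are made up for the illustration, and, as in the listing, the normal orientation is taken as given.

```python
import numpy as np

# Two triangles sharing the edge (0, 1): one in the xy-plane, one in the xz-plane.
vs = np.array([[0.0, 0.0, 0.0],
               [1.0, 0.0, 0.0],
               [0.0, 1.0, 0.0],
               [0.0, 0.0, 1.0]])
faces = np.array([[0, 1, 2],
                  [0, 1, 3]])

# Unnormalised normals via the cross product of two edge vectors.
normals = np.cross(vs[faces[:, 1]] - vs[faces[:, 0]],
                   vs[faces[:, 2]] - vs[faces[:, 1]])
lengths = np.linalg.norm(normals, axis=1)
lengths[lengths == 0] = 1.0          # guard against degenerate (zero-area) faces
normals = normals / lengths[:, None]

# Clip before arccos so rounding errors cannot leave the [-1, 1] domain.
cos_angle = np.clip(np.dot(normals[0], normals[1]), -1.0, 1.0)
angle = np.arccos(cos_angle)
print(np.degrees(angle))  # approximately 90
```

A face and its three neighbors make four faces, and four faces form 4 × 3 / 2 = 6 unordered pairs, which is where the six angles per face come from: three between the face and each neighbor, and three among the neighbors themselves.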

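2.2 DataLoader

- A custom DataLoader wrapper is defined that also stores the number of input features, the number of classes, and torch.device('cuda:0').
- When using torch.utils.data.DataLoader, remember to import torch.utils.data so that the editor can show its parameters.
- collate_fn has room for improvement: if the meshes in a batch have different numbers of faces, they can be zero-padded to form an aligned batch.
- yield can be thought of as a return that hands back one value and then resumes from the same point on the next iteration; for details see reference 3: python中yield的用法详解:最简单,最清晰的解释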
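As a quick illustration of the yield remark, here is a minimal generator sketch; count_up_to is a hypothetical function written only for this example.

```python
def count_up_to(n):
    """Generator: produces 1..n one value at a time."""
    i = 1
    while i <= n:
        yield i   # hand back i, then pause here until the next value is requested
        i += 1


values = list(count_up_to(3))
print(values)  # [1, 2, 3]
```

The __iter__ method of the wrapper class relies on the same mechanism: each pass through its loop hands one batch to the caller and then continues where it left off.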

```python
class DataLoader:
    def __init__(self, phase='train'):
        self.dataset = Shrec11(phase=phase)
        self.input_n = self.dataset.input_n  # number of input features
        self.class_n = self.dataset.class_n  # number of classes
        self.device = torch.device('cuda:0')  # or torch.device('cpu')
        self.dataloader = torch.utils.data.DataLoader(
            self.dataset,
            batch_size=16,
            shuffle=True,
            num_workers=0,
            collate_fn=collate_fn)

    def __len__(self):
        return len(self.dataset)

    def __iter__(self):
        for _, data_i in enumerate(self.dataloader):
            yield data_i


def collate_fn(batch):
    # Merge a list of samples into one batch (all meshes must have the same face count)
    mesh = [d['mesh'] for d in batch]
    label = [d['label'] for d in batch]
    feature = [d['face_features'] for d in batch]

    meta = dict()
    meta['mesh'] = mesh
    meta['label'] = np.array(label)
    meta['face_features'] = np.array(feature)
    return meta
```
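The zero-padding improvement mentioned for collate_fn could look roughly like the sketch below. pad_collate_fn and the extra mask entry are assumptions added for illustration; they are not part of the code in this article.

```python
import numpy as np


def pad_collate_fn(batch):
    """Hypothetical variant of collate_fn: zero-pad every mesh to the largest face count."""
    max_faces = max(d['face_features'].shape[0] for d in batch)
    feature_dim = batch[0]['face_features'].shape[1]

    features = np.zeros((len(batch), max_faces, feature_dim), dtype=np.float64)
    mask = np.zeros((len(batch), max_faces), dtype=bool)  # True marks real faces
    for i, d in enumerate(batch):
        n = d['face_features'].shape[0]
        features[i, :n] = d['face_features']
        mask[i, :n] = True

    return {'label': np.array([d['label'] for d in batch]),
            'face_features': features,
            'mask': mask}


# Two fake samples with 4 and 2 faces, 6 features each.
batch = [{'face_features': np.ones((4, 6)), 'label': 0},
         {'face_features': np.ones((2, 6)), 'label': 1}]
out = pad_collate_fn(batch)
print(out['face_features'].shape)  # (2, 4, 6)
```

One design point to keep in mind: a network that averages over all faces would also average over the padded rows, so the mask would likely be needed downstream to exclude them.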

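3. A simple mesh classification experiment

Classification network: composed mainly of several linear layers.

It is trained with the Adam optimizer, using cross-entropy as the loss function.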

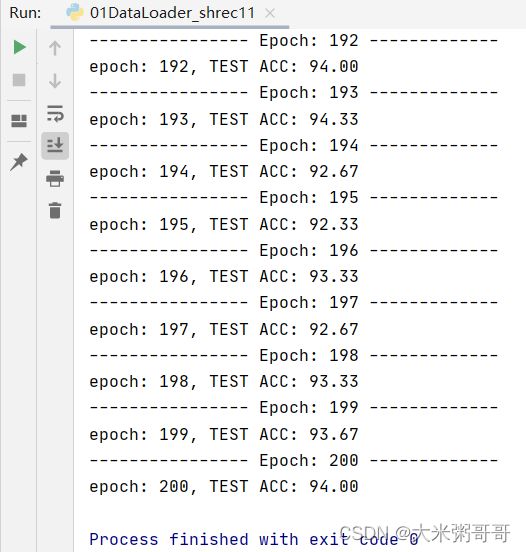
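To show how the tensor shapes flow through such a network, here is a reduced sketch of a single training step on random data. The sizes (4 meshes, 500 faces, 30 classes) and the one-layer per-face block are assumptions chosen for brevity; the network actually used is given in the full code.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes: 4 meshes, 500 faces each, 6 features per face, 30 classes.
batch_n, face_n, feat_n, class_n = 4, 500, 6, 30
x = torch.randn(batch_n, face_n, feat_n)
labels = torch.randint(0, class_n, (batch_n,))

# A kernel-size-1 Conv1d applies the same linear map to every face independently.
per_face = nn.Sequential(nn.Conv1d(feat_n, 128, 1, bias=False),
                         nn.BatchNorm1d(128),
                         nn.GELU())
pool = nn.AdaptiveAvgPool1d(1)       # average over all faces
head = nn.Linear(128, class_n)

params = list(per_face.parameters()) + list(head.parameters())
optimizer = torch.optim.Adam(params, lr=0.001)
loss_fun = nn.CrossEntropyLoss()

optimizer.zero_grad()
h = per_face(x.permute(0, 2, 1))     # (batch, 6, faces) -> (batch, 128, faces)
logits = head(pool(h).squeeze(-1))   # (batch, 128) -> (batch, class_n)
loss = loss_fun(logits, labels)
loss.backward()
optimizer.step()
print(logits.shape)  # torch.Size([4, 30])
```

Because every face goes through the same weights and the faces are then averaged, the output does not depend on the order in which the faces are listed.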

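3.1 Classification results

| Method | Classification accuracy (%) |
|---|---|
| This article | 94 (best of 1 run) |
| MeshCNN (paper) | 91 (average of 3 runs) |
| MeshCNN (reproduced) | 93 (best of 1 run) |
| MeshCNN (as reproduced in the MeshNet++ paper) | 93.4 |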

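Interestingly, a network thrown together without much thought is on par with MeshCNN.

Possible reasons:

- Part of the code is based on MeshCNN, so this work stands on its shoulders. Thanks to MeshCNN for being open source.
- The feature engineering works well, or put differently, this feature happens to suit classification on this dataset.
- It uses the GELU() activation function, a fairly recent one; deep learning really does move fast.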
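For reference, the exact form of GELU, which is what nn.GELU() computes with its default arguments, is:

$$
\mathrm{GELU}(x) = x\,\Phi(x) = \frac{x}{2}\left(1 + \operatorname{erf}\!\left(\frac{x}{\sqrt{2}}\right)\right)
$$

where $\Phi$ is the cumulative distribution function of the standard normal distribution.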

3.2 Full code

```python
import os
import torch
import torch.utils.data
import numpy as np
import pickle
import torch.nn as nn
import torch.nn.functional as F


class Mesh:
    def __init__(self):
        pass


class Shrec11:
    def __init__(self, phase='train'):
        self.root = './datasets/shrec_10/'
        self.dir = os.path.join(self.root)
        self.classes, self.class_to_idx = self.find_classes(self.dir)  # class names
        self.paths = self.make_dataset_by_class(self.dir, self.class_to_idx, phase)  # path of every mesh
        self.cache_dir = './results/cache'
        if not os.path.exists(self.cache_dir):
            os.makedirs(self.cache_dir)
        self.size = len(self.paths)

        # Input feature dimension and number of classes
        self.input_n = 6
        self.class_n = len(self.classes)

    def __getitem__(self, index):
        path = self.paths[index][0]   # path to the mesh file
        label = self.paths[index][1]  # class label

        # Cache file
        filename, _ = os.path.splitext(path)
        prefix = os.path.basename(filename)
        cache = os.path.join(self.cache_dir, prefix + '.pkl')

        if os.path.exists(cache):  # cache exists: load it directly
            with open(cache, 'rb') as f:
                meta = pickle.load(f)
        else:  # no cache: read the OBJ file and compute everything needed
            mesh = Mesh()
            vs, faces = [], []
            with open(path) as f:
                for line in f:
                    line = line.strip()
                    splitted_line = line.split()
                    if not splitted_line:
                        continue
                    elif splitted_line[0] == 'v':
                        vs.append([float(v) for v in splitted_line[1:4]])
                    elif splitted_line[0] == 'f':
                        face_vertex_ids = [int(c.split('/')[0]) for c in splitted_line[1:]]
                        assert len(face_vertex_ids) == 3
                        # OBJ indices are 1-based; negative indices are relative to the end
                        face_vertex_ids = [(ind - 1) if (ind >= 0) else (len(vs) + ind)
                                           for ind in face_vertex_ids]
                        faces.append(face_vertex_ids)
            vs = np.asarray(vs)
            faces = np.asarray(faces, dtype=int)

            # Face adjacency
            mesh_nb = [[] for _ in faces]  # one neighbor list per face
            edge_faces = dict()            # (v, v): [f1, f2], the faces containing each edge
            for face_id, face in enumerate(faces):
                edge_num = len(face)  # number of edges of this face
                for i in range(edge_num):  # walk the vertices of the face to build its edges
                    edge = (face[i], face[(i + 1) % edge_num])  # (vertex id, vertex id)
                    edge = tuple(sorted(list(edge)))            # canonical representation
                    if edge not in edge_faces:
                        edge_faces[edge] = []
                    edge_faces[edge].append(face_id)  # record the face that owns this edge
            for edge, face in edge_faces.items():  # (v, v): [f1, f2] means f1 and f2 are adjacent
                if len(face) == 2:  # the edge is shared by exactly two faces
                    mesh_nb[face[1]].append(face[0])
                    mesh_nb[face[0]].append(face[1])
            for i in range(len(mesh_nb)):
                # Faces with fewer than three neighbors (including isolated faces)
                # are padded with their own index.
                while len(mesh_nb[i]) < 3:
                    mesh_nb[i].append(i)
            mesh_nb = np.array(mesh_nb, dtype=np.int64)

            # Face normals
            face_normals = np.cross(vs[faces[:, 1]] - vs[faces[:, 0]],
                                    vs[faces[:, 2]] - vs[faces[:, 1]])
            face_areas = np.sqrt((face_normals ** 2).sum(axis=1))  # twice the face area
            face_error = np.where(face_areas == 0)                 # indices of zero-area faces
            face_areas_b = face_areas.copy()
            face_areas_b[face_error] = 1                           # avoid division by zero
            face_normals /= face_areas_b[:, np.newaxis]
            face_areas *= 0.5                                      # actual face area

            # Six dihedral angles per face
            face_dangle = mesh_nb.copy().astype(np.float64)
            nb_dangle = mesh_nb.copy().astype(np.float64)
            for a in range(len(mesh_nb)):  # for every face
                normal_a = face_normals[a]  # normal of the current face
                for b in range(len(mesh_nb[a])):  # for every neighbor
                    normal_b = face_normals[mesh_nb[a][b]]  # normal of the neighbor
                    tmp = np.sum(normal_a * normal_b).clip(-1, 1)  # keep arccos in its domain
                    face_dangle[a][b] = np.arccos(tmp)
                # Angles between the three neighbors themselves
                nb_dangle[a][0] = np.arccos(
                    np.sum((face_normals[mesh_nb[a][0]] * face_normals[mesh_nb[a][1]])).clip(-1, 1))
                nb_dangle[a][1] = np.arccos(
                    np.sum((face_normals[mesh_nb[a][0]] * face_normals[mesh_nb[a][2]])).clip(-1, 1))
                nb_dangle[a][2] = np.arccos(
                    np.sum((face_normals[mesh_nb[a][1]] * face_normals[mesh_nb[a][2]])).clip(-1, 1))
            dihedral_angle = np.concatenate([face_dangle, nb_dangle], axis=1)

            # Center the mesh at the middle of its bounding box
            xyz_min = np.min(vs[:, 0:3], axis=0)
            xyz_max = np.max(vs[:, 0:3], axis=0)
            xyz_move = xyz_min + (xyz_max - xyz_min) / 2
            vs[:, 0:3] = vs[:, 0:3] - xyz_move
            # Scale
            scale = np.max(vs[:, 0:3])
            vs[:, 0:3] = vs[:, 0:3] / scale

            # Face center coordinates
            xyz = []
            for i in range(3):
                xyz.append(vs[faces[:, i]])
            xyz = np.array(xyz)
            mean_xyz = xyz.sum(axis=0) / 3

            # Information to store
            mesh.vs = vs
            mesh.faces = faces
            mesh.xyz = mean_xyz.swapaxes(0, 1)
            mesh.mesh_nb = mesh_nb
            mesh.dihedral_angle = dihedral_angle
            meta = {'mesh': mesh, 'label': label}
            with open(cache, 'wb') as f:
                pickle.dump(meta, f)

        meta['face_features'] = meta['mesh'].dihedral_angle
        return meta

    def __len__(self):
        return self.size

    @staticmethod
    def find_classes(dirs):
        classes = [d for d in os.listdir(dirs) if os.path.isdir(os.path.join(dirs, d))]
        classes.sort()
        class_to_idx = {classes[i]: i for i in range(len(classes))}
        return classes, class_to_idx

    @staticmethod
    def make_dataset_by_class(dirs, class_to_idx, phase):
        meshes = []
        dirs = os.path.expanduser(dirs)
        for target in sorted(os.listdir(dirs)):
            d = os.path.join(dirs, target)
            if not os.path.isdir(d):
                continue
            for root, _, all_files in sorted(os.walk(d)):
                for file_name in sorted(all_files):
                    if root.count(phase) == 1:
                        path = os.path.join(root, file_name)
                        item = (path, class_to_idx[target])
                        meshes.append(item)
        return meshes


class DataLoader:
    def __init__(self, phase='train'):
        self.dataset = Shrec11(phase=phase)
        self.input_n = self.dataset.input_n  # number of input features
        self.class_n = self.dataset.class_n  # number of classes
        self.device = torch.device('cuda:0')  # or torch.device('cpu')
        self.dataloader = torch.utils.data.DataLoader(
            self.dataset,
            batch_size=16,
            shuffle=True,
            num_workers=0,
            collate_fn=collate_fn)

    def __len__(self):
        return len(self.dataset)

    def __iter__(self):
        for _, data_i in enumerate(self.dataloader):
            yield data_i


def collate_fn(batch):
    # Merge a list of samples into one batch (all meshes must have the same face count)
    mesh = [d['mesh'] for d in batch]
    label = [d['label'] for d in batch]
    feature = [d['face_features'] for d in batch]

    meta = dict()
    meta['mesh'] = mesh
    meta['label'] = np.array(label)
    meta['face_features'] = np.array(feature)
    return meta


class Net(nn.Module):
    def __init__(self, dim_in=6, class_n=30):
        super(Net, self).__init__()
        self.linear1 = nn.Conv1d(dim_in, 128, 1, bias=False)
        self.bn1 = nn.BatchNorm1d(128)
        self.linear2 = nn.Conv1d(128, 128, 1, bias=False)
        self.bn2 = nn.BatchNorm1d(128)
        self.linear3 = nn.Conv1d(128, 128, 1, bias=False)
        self.bn3 = nn.BatchNorm1d(128)
        self.gp = nn.AdaptiveAvgPool1d(1)
        self.linear4 = nn.Linear(128, 128)
        self.bn4 = nn.BatchNorm1d(128)
        self.linear5 = nn.Linear(128, class_n)
        self.act = nn.GELU()  # activation function

    def forward(self, x):
        x = x.permute(0, 2, 1).contiguous()  # (batch, faces, features) -> (batch, features, faces)
        x = self.act(self.bn1(self.linear1(x)))
        x = self.act(self.bn2(self.linear2(x)))
        x = self.act(self.bn3(self.linear3(x)))
        x = self.gp(x)  # global average pooling over faces
        x = x.squeeze(-1)
        x = self.act(self.bn4(self.linear4(x)))
        x = self.linear5(x)
        return x


if __name__ == '__main__':
    # Input
    data_train = DataLoader(phase='train')  # training set
    data_test = DataLoader(phase='test')    # test set
    dataset_size = len(data_train)          # number of training meshes
    print('#training meshes = %d' % dataset_size)

    # Network and optimizer
    net = Net(data_train.input_n, data_train.class_n)
    optimizer = torch.optim.Adam(net.parameters(), lr=0.001, betas=(0.9, 0.999))
    net = net.cuda(0)
    loss_fun = torch.nn.CrossEntropyLoss(ignore_index=-1)
    num_params = 0
    for param in net.parameters():
        num_params += param.numel()
    print('[Net] Total number of parameters : %.3f M' % (num_params / 1e6))
    print('-----------------------------------------------')

    # Training loop
    for epoch in range(1, 201):
        print('---------------- Epoch: %d -------------' % epoch)
        for i, data in enumerate(data_train):
            # Forward pass
            net.train(True)        # training mode
            optimizer.zero_grad()  # reset gradients
            face_features = torch.from_numpy(data['face_features']).float()
            face_features = face_features.to(data_train.device).requires_grad_(True)
            labels = torch.from_numpy(data['label']).long().to(data_train.device)
            out = net(face_features)  # feed the network

            # Backward pass
            loss = loss_fun(out, labels)
            loss.backward()
            optimizer.step()  # update parameters

        # Evaluation
        net.eval()
        acc = 0
        for i, data in enumerate(data_test):
            with torch.no_grad():
                # Forward pass
                face_features = torch.from_numpy(data['face_features']).float()
                face_features = face_features.to(data_test.device).requires_grad_(False)
                labels = torch.from_numpy(data['label']).long().to(data_test.device)
                out = net(face_features)

                # Count correct predictions
                pred_class = out.data.max(1)[1]
                correct = pred_class.eq(labels).sum().float()
                acc += correct
        acc = acc / len(data_test)  # len(data_test) is the number of test meshes
        print('epoch: %d, TEST ACC: %0.2f' % (epoch, acc * 100))
```

1. 一文搞懂三角网格(Triangle Mesh)
2. torch.utils.data.DataLoader()官方文档翻译
3. python中yield的用法详解:最简单,最清晰的解释