[Cloud Native · Kubernetes] Deploying a Kubernetes Cluster

[Cloud Native · Kubernetes] Setting Up a Harbor Registry

This post picks up where the previous one left off.

Running the script k8s_master_install.sh on the master node completes the deployment of the K8S cluster. The individual steps are described in (1)-(4) below.

5. Deploy the Kubernetes cluster

(1) Install kubeadm

Install kubeadm, kubectl, and kubelet on all nodes, then enable and start kubelet:

[root@master ~]# yum -y install kubeadm-1.18.1 kubectl-1.18.1 kubelet-1.18.1

[root@master ~]# systemctl enable kubelet && systemctl start kubelet

(2) Initialize the cluster

Initialize the cluster on the master node:

[root@master opt]# kubeadm init --kubernetes-version=1.18.1 --apiserver-advertise-address=192.168.100.10 --image-repository registry.aliyuncs.com/google_containers --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.18.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

..................

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.100.10:6443 --token cxtb79.mqg7drycn5s82hhc \

--discovery-token-ca-cert-hash sha256:d7465b10f81ecb32ca30459efc1e0efe4f22bfbddc0c17d9b691f611082f415c

After initialization completes, run the commands printed above to set up kubectl access:

[root@master opt]# mkdir -p $HOME/.kube

[root@master opt]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master opt]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

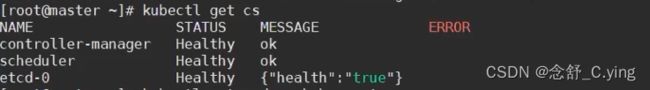

Check the cluster component status:

[root@master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

Check the node status:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 2m57s v1.18.1

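The master is in the NotReady state. This is expected, because no network plugin has been installed yet. Once the network plugin is installed, the node becomes Ready: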

[root@master ~]# kubectl apply -f yaml/kube-flannel.yaml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created
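The `--pod-network-cidr=10.244.0.0/16` value passed to `kubeadm init` needs to agree with the network range in the flannel configuration. The following is a minimal sketch for reading that range out of the manifest, assuming the local `yaml/kube-flannel.yaml` follows the upstream layout with a `"Network"` key inside `net-conf.json`. The helper name `flannel_network` is hypothetical.

```shell
# Print the value of the "Network" key found in a flannel manifest.
# ASSUMPTION: the manifest contains a line such as
#   "Network": "10.244.0.0/16",
# as the upstream kube-flannel manifest does.
flannel_network() {
    sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# Usage on the master node:
#   flannel_network yaml/kube-flannel.yaml
# Compare the printed range with the --pod-network-cidr used at init time.
```

If the two ranges differ, it is usually simpler to edit the manifest before applying it than to re-initialize the cluster.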

[root@master ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7ff77c879f-7vj79 1/1 Running 0 14m

kube-system coredns-7ff77c879f-nvclj 1/1 Running 0 14m

kube-system etcd-master 1/1 Running 0 14m

kube-system kube-apiserver-master 1/1 Running 0 14m

kube-system kube-controller-manager-master 1/1 Running 0 14m

kube-system kube-flannel-ds-d5p4g 1/1 Running 0 11m

kube-system kube-proxy-2gstw 1/1 Running 0 14m

kube-system kube-scheduler-master 1/1 Running 0 14m

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 17m v1.18.1
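Checking for nodes that are not yet Ready can also be scripted. This is a minimal sketch that assumes the column layout of `kubectl get nodes --no-headers` matches the output above (name first, status second). The helper name `not_ready_nodes` is hypothetical.

```shell
# Print the names of nodes whose STATUS column is not exactly "Ready".
# Reads `kubectl get nodes --no-headers` style lines from stdin.
# Note: a status such as "Ready,SchedulingDisabled" is also reported.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Example using the line captured before the network plugin was installed:
printf 'master NotReady master 2m57s v1.18.1\n' | not_ready_nodes   # prints: master

# Against a live cluster (assumes kubectl is configured as shown earlier):
#   kubectl get nodes --no-headers | not_ready_nodes
```

To block until a node is Ready instead of polling, `kubectl wait --for=condition=Ready node/master --timeout=300s` is another option.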

(3) Install the Dashboard

Create a self-signed certificate:

[root@master ~]# mkdir dashboard-certs

[root@master ~]# cd dashboard-certs/

[root@master ~]# kubectl create namespace kubernetes-dashboard

[root@master ~]# openssl genrsa -out dashboard.key 2048

Generating RSA private key, 2048 bit long modulus

......................................+++

...........................................................+++

e is 65537 (0x10001)

[root@master ~]# openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=dashboard-cert'

[root@master ~]# openssl x509 -req -days 36000 -in dashboard.csr -signkey dashboard.key -out dashboard.crt

Signature ok

subject=/CN=dashboard-cert

Getting Private key

[root@master ~]# kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kubernetes-dashboard
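It can be worth confirming the subject and expiry date of the generated certificate. The sketch below only wraps `openssl x509` with its standard `-noout -subject -enddate` options. The helper name `show_cert` is hypothetical.

```shell
# Print the subject and the notAfter (expiry) date of a PEM certificate.
show_cert() {
    openssl x509 -in "$1" -noout -subject -enddate
}

# Usage, in the directory that holds the generated certificate:
#   show_cert dashboard.crt
```

A `notAfter` date only a few weeks away suggests the validity period was not applied when the certificate was signed.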

Install the Dashboard:

[root@master ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

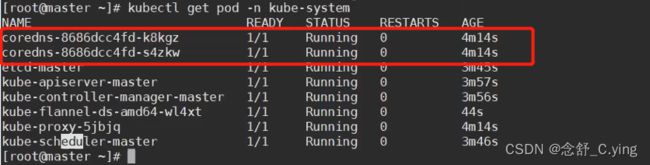

Check the status of the Pods and Services that belong to the Dashboard:

[root@master ~]# kubectl get pod,svc -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

pod/dashboard-metrics-scraper-6b4884c9d5-f7qxd 1/1 Running 0 62s

pod/kubernetes-dashboard-5585794759-2c6xt 1/1 Running 0 62s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dashboard-metrics-scraper ClusterIP 10.105.228.249 <none> 8000/TCP 62s

service/kubernetes-dashboard NodePort 10.98.134.7 <none> 443:30000/TCP 62s

Create the ServiceAccount and ClusterRoleBinding:

[root@master ~]# kubectl apply -f dashboard-adminuser.yaml

serviceaccount/dashboard-admin created

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin-bind-cluster-role created

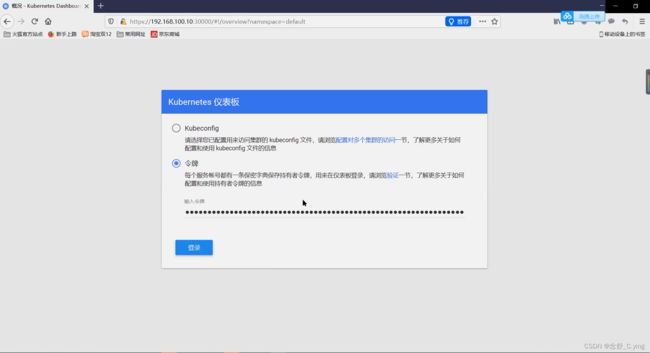

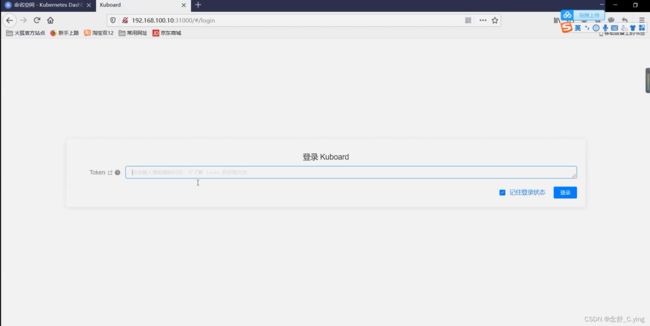
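For readers without the original file, a manifest like `dashboard-adminuser.yaml` might look like the sketch below. The object names and namespace come from the `kubectl` output in this post. Binding to the built-in `cluster-admin` ClusterRole is an assumption, and the helper name `write_adminuser_manifest` is hypothetical.

```shell
# Write a candidate dashboard-adminuser.yaml to the path given as $1.
# ASSUMPTION: the account is bound to the built-in cluster-admin ClusterRole;
# the real file used in this post may differ.
write_adminuser_manifest() {
    cat > "$1" <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin-bind-cluster-role
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin
  namespace: kubernetes-dashboard
EOF
}

# Usage:
#   write_adminuser_manifest dashboard-adminuser.yaml
#   kubectl apply -f dashboard-adminuser.yaml
```

Because `cluster-admin` grants full control of the cluster, a narrower role is worth considering outside a lab environment.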

Open the dashboard in a browser (https://IP:30000), where IP is the address of a cluster node.

Get the token:

# kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep dashboard-admin | awk '{print $1}')

Name: dashboard-admin-token-x9fnq

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: f780f22d-f620-4cdd-ad94-84bf593ca882

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkRWNXNrYWV6dFo4bUJrRHVZcmwtcTVpNzdFMDZYZjFYNzRzQlRyYmlVOGsifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4teDlmbnEiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiZjc4MGYyMmQtZjYyMC00Y2RkLWFkOTQtODRiZjU5M2NhODgyIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.h5BGk2yunmRcA8U60wIJh0kWpRLI1tZqS58BaDy137k1SYkvwG4rfG8MGnoDMAREWd9JIX43N4qpfbivIefeKIO_CZhYjv4blRefjAHo9c5ABChMc1lrZq9m_3Br_fr7GonsYulkaW6qYkCcQ0RK1TLlxntvLTi7gWMSes8w-y1ZumubL4YIrUh-y2OPoi2jJNevn4vygkgxtX5Y9LlxegVYJfeE_Sb9jV9ZL7e9kDqmBIYxm5PBJoPutjsTBmJf3IFrf6vUk6bBWtE6-nZgdf6FAGDd2W2-1YcidjITwgUvj68OfQ5tbB94EYlJhuoAGVajKxO14XaE9NH0_NZjqw

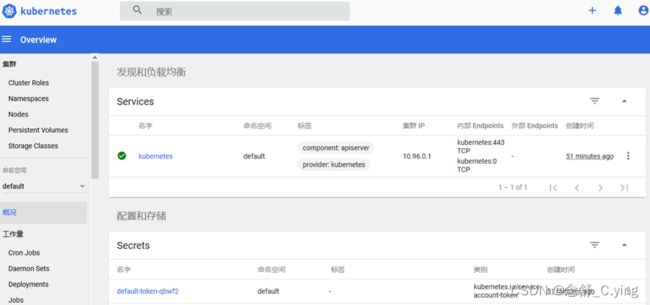

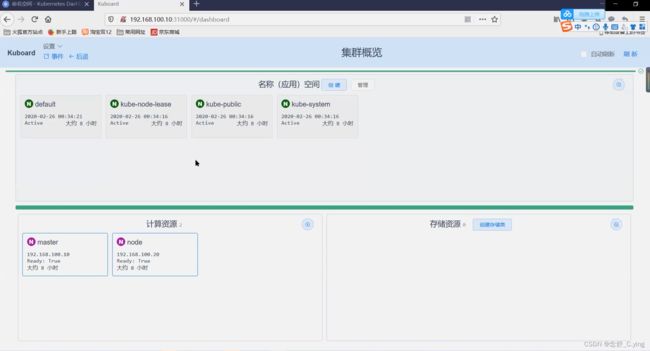
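The token is a JWT, so its middle section can be decoded to see which service account it belongs to. This is a minimal sketch that assumes GNU coreutils `base64 -d` is available. The helper name `jwt_payload` is hypothetical.

```shell
# Decode the payload (second dot-separated field) of a JWT and print the JSON.
# JWTs use unpadded base64url, so map the alphabet back to standard base64
# and restore the '=' padding before decoding.
jwt_payload() {
    payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
    case $(( ${#payload} % 4 )) in
        2) payload="${payload}==" ;;
        3) payload="${payload}=" ;;
    esac
    printf '%s' "$payload" | base64 -d
}

# Usage (paste the token value printed by `kubectl describe secret`):
#   jwt_payload "<token>"
```

Decoding only reads the claims and does not verify the signature, so it is a debugging aid rather than a validation step.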

After entering the token, you are taken to the Dashboard overview page.

(4) Remove the taint

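For security reasons, Kubernetes does not schedule Pods onto the master node in the default configuration. To use the master as a worker node as well, run the following command: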

# kubectl taint node master node-role.kubernetes.io/master-

node/master untainted
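To confirm whether the taint is still present on a node, its taint keys can be queried. This is a minimal sketch, assuming `kubectl` is configured on the master. The helper name `has_master_taint` is hypothetical.

```shell
# Succeed (exit 0) if the named node carries the
# node-role.kubernetes.io/master taint key, fail otherwise.
has_master_taint() {
    kubectl get node "$1" -o jsonpath='{.spec.taints[*].key}' \
        | tr ' ' '\n' | grep -qx 'node-role.kubernetes.io/master'
}

# Usage:
#   has_master_taint master && echo "master is still tainted"
#
# To put the taint back later:
#   kubectl taint node master node-role.kubernetes.io/master=:NoSchedule
```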

Alternatively, access the dashboard in a browser (http://IP:31000).

6. Join the worker node to the cluster

Running the script k8s_node_install.sh on the worker node joins it to the cluster. The individual steps are described in (1)-(2) below.

(1) Join the worker node to the cluster

On the worker node, run the join command that kubeadm init printed on the master:

# kubeadm join 192.168.100.10:6443 --token cxtb79.mqg7drycn5s82hhc --discovery-token-ca-cert-hash sha256:d7465b10f81ecb32ca30459efc1e0efe4f22bfbddc0c17d9b691f611082f415c
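Bootstrap tokens created by `kubeadm init` expire after 24 hours by default, so the join command above may no longer work on a later day. Running `kubeadm token create --print-join-command` on the master prints a fresh one. The CA certificate hash can also be recomputed by hand, as in this sketch, which assumes an RSA CA key (the kubeadm default) and the default path `/etc/kubernetes/pki/ca.crt`. The helper name `ca_cert_hash` is hypothetical.

```shell
# Print the SHA-256 hash of the public key in a CA certificate, which is the
# value expected after "sha256:" in --discovery-token-ca-cert-hash.
# ASSUMPTION: the CA uses an RSA key.
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Usage on the master node:
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```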

(2) Check the node information

On the master node, check the node status:

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 56m v1.18.1

node Ready <none> 60s v1.18.1

Stay tuned for the next post, and don't forget to show the author some support~

I'm 念舒_C.ying, and I look forward to your follow~