[3D Geometry Learning] Deep Learning on Meshes from Scratch, Part 4: Training and Testing (PyTorch)

This post is an entry in the New Star Program, Artificial Intelligence (PyTorch) track: https://bbs.csdn.net/topics/613989052


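- Introduction
- 1. Overview
- 2. Core Code
  - 2.1 Transformer on Meshes
  - 2.2 Model Initialization and Dynamic Learning-Rate Adjustment
  - 2.3 Setting Random Seeds and Visualizing with tensorboardX
- 3. Mesh Classification Experiment
  - 3.1 Classification Results
  - 3.2 Full Code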

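Introduction

This post covers the following:

- Improvements to the Transformer network from the previous post
- Adding model initialization and dynamic learning-rate adjustment
- Setting random seeds, printing the training time, and visualizing training with tensorboardX (shown in the figure above)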

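1. Overview

- The previous three posts covered the input features and the network architecture for meshes: computing per-face dihedral-angle features, the convolutional network, the Transformer network, and the activation, normalization, and loss functions used inside these networks.

Beyond these, another essential part of deep learning is how to actually train a network: for example, weight initialization (e.g. Kaiming initialization), learning-rate adjustment (e.g. annealing), visualizing the training loss to guide changes to the architecture or fine-tuning of hyperparameters (tensorboardX), and the choice of optimizer (e.g. Adam). This post gives a brief overview of these topics.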

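2. Core Code

2.1 Transformer on Meshes

- The positional embedding is now concatenated with the face features instead of being summed with them, and the result passes through two face convolution layers
- A linear layer was added at the end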

Transformer.py

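```python
import numpy as np
import torch
import torch.nn as nn

# FaceConv (Part 2 of this series) and SA (the self-attention block from Part 3)
# must be imported from the code of those posts; their module names are not
# given here.


class TriTransNet(nn.Module):
    def __init__(self, classes_n=30):
        super().__init__()
        # Two face convolutions; 9 input channels = face features + xyz position
        self.conv_1 = FaceConv(9, 128, 4)
        self.conv_2 = FaceConv(128, 128, 4)
        self.bn_1 = nn.BatchNorm1d(128)
        self.bn_2 = nn.BatchNorm1d(128)
        # Two stacked self-attention blocks
        self.sa1 = SA(128)
        self.sa2 = SA(128)
        # Global average pooling over faces, then a 3-layer classifier head
        self.gp = nn.AdaptiveAvgPool1d(1)
        self.linear1 = nn.Linear(256, 128, bias=False)
        self.bn1 = nn.BatchNorm1d(128)
        self.linear2 = nn.Linear(128, 64)
        self.bn2 = nn.BatchNorm1d(64)
        self.linear3 = nn.Linear(64, classes_n)
        self.act = nn.GELU()

    def forward(self, x, mesh):
        x = x.permute(0, 2, 1).contiguous()  # (B, N, C) -> (B, C, N)
        # Positional encoding (would be better computed in the DataLoader)
        pos = np.array([m.xyz for m in mesh])
        # Positions are fixed inputs, so they need no gradient
        pos = torch.from_numpy(pos).float().to(x.device)
        batch_size, _, N = x.size()
        x = torch.cat((x, pos), dim=1)  # concatenate, not sum
        x = self.act(self.bn_1(self.conv_1(x, mesh).squeeze(-1)))
        x = self.act(self.bn_2(self.conv_2(x, mesh).squeeze(-1)))
        x1 = self.sa1(x)
        x2 = self.sa2(x1)
        x = torch.cat((x1, x2), dim=1)  # (B, 256, N)
        x = self.gp(x)                  # (B, 256, 1)
        x = x.view(batch_size, -1)      # (B, 256)
        x = self.act(self.bn1(self.linear1(x)))
        x = self.act(self.bn2(self.linear2(x)))
        x = self.linear3(x)             # (B, classes_n) logits
        return x
```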
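Switching from summing to concatenating the positional embedding is what gives `conv_1` its 9 input channels. The sketch below only illustrates the shape bookkeeping. It assumes, inferred from 9 - 3, that each face carries 6 input features and that `m.xyz` stacks to a `(B, 3, N)` array; the tensors are random placeholders, and the `nn.Conv1d` projection in the sum variant is a hypothetical stand-in for a learned embedding, not necessarily what Part 3 used.

```python
import torch
import torch.nn as nn

B, C_feat, N = 2, 6, 500              # batch size, per-face features (assumed 6), faces
x = torch.randn(B, C_feat, N)         # face features after permute: (B, C, N)
pos = torch.randn(B, 3, N)            # per-face positions: (B, 3, N)

# Concatenation (this post): channels stack up, 6 + 3 = 9 -> FaceConv(9, 128, 4)
x_cat = torch.cat((x, pos), dim=1)

# Sum-style variant: pos must first be projected to the feature width
pos_embed = nn.Conv1d(3, C_feat, kernel_size=1)   # hypothetical learned embedding
x_sum = x + pos_embed(pos)

print(x_cat.shape, x_sum.shape)
```

With concatenation the raw coordinates stay in their own channels and the first face convolution learns how to mix them with the features; with a sum, that mixing is fixed to addition after a projection.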

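2.2 Model Initialization and Dynamic Learning-Rate Adjustment

- There are many strategies for initializing a network: from pretrained weights, random, constant, and so on. See [1] for the initialization functions implemented in PyTorch `nn.init` (uniform, normal, constant, Xavier, He initialization).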

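```python
import torch


def init_func(m):
    # Draw the weights of every Conv*/Linear* layer from N(0, 0.02);
    # biases and normalization layers keep their default initialization
    className = m.__class__.__name__
    if hasattr(m, 'weight') and (className.find('Conv') != -1 or className.find('Linear') != -1):
        torch.nn.init.normal_(m.weight.data, 0.0, 0.02)
```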

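The function above initializes the weights of the linear and convolutional layers with `normal_`, using mean 0 and standard deviation 0.02; it is applied to every submodule with `net.apply(init_func)`.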
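A quick way to check what the hook touches is to apply it to a toy network and inspect the statistics. The sketch below does that and, since the overview mentions Kaiming initialization, also shows a hypothetical He-initialization variant. `kaiming_init_func` is an illustrative name, not part of the original code, and `nonlinearity='relu'` is an approximation because `nn.init` has no gain entry for GELU.

```python
import torch
import torch.nn as nn


def init_func(m):
    # As in the post: N(0, 0.02) for the weights of Conv*/Linear* layers only
    class_name = m.__class__.__name__
    if hasattr(m, 'weight') and ('Conv' in class_name or 'Linear' in class_name):
        nn.init.normal_(m.weight, 0.0, 0.02)


def kaiming_init_func(m):
    # Hypothetical alternative: He/Kaiming normal weights (fan_in mode), zero biases
    if isinstance(m, (nn.Conv1d, nn.Linear)):
        nn.init.kaiming_normal_(m.weight, nonlinearity='relu')
        if m.bias is not None:
            nn.init.zeros_(m.bias)


torch.manual_seed(0)
net = nn.Sequential(nn.Linear(256, 128), nn.BatchNorm1d(128), nn.Linear(128, 64))
net.apply(init_func)
print(net[0].weight.std().item())   # close to 0.02
print(net[1].weight.unique())       # BatchNorm weights untouched (all ones)

lin = nn.Linear(256, 128)
kaiming_init_func(lin)
print(lin.weight.std().item())      # close to sqrt(2 / 256), about 0.088
```

One design difference: the original matches on the class *name*, so custom layers such as `FaceConv` are also caught if they expose a `weight` attribute, whereas the `isinstance` check above only sees the listed built-in layer types.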

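- The learning rate stays constant for a number of epochs and then decays linearly; the optimizer is Adam. See [2] for a comprehensive overview of the learning-rate schedules in `lr_scheduler`.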

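```python
import torch
from torch.optim import lr_scheduler


def get_scheduler(optimizer, epoch_max):
    # Multiplicative factor on the base lr: 1.0 for roughly the first half of
    # training, then a linear decay down to 0
    def lambda_rule(epoch):
        lr_l = 1.0 - max(0, epoch + 1 + 1 - epoch_max / 2) / float(epoch_max / 2 + 1)
        return max(0.0, lr_l)  # clamp so the lr never becomes negative
    scheduler = lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda_rule)
    return scheduler


# Adam optimizer (created inside Model.__init__, see the full code in section 3.2)
optimizer = torch.optim.Adam(self.net.parameters(), lr=0.001, betas=(0.9, 0.999))
```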
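Because the lambda is evaluated on the scheduler's own step counter, it helps to see which learning rate each epoch actually trains with. The small simulation below assumes `epoch_max=200`, Adam at 0.001, and one `scheduler.step()` per epoch after training (as `Model.update_learning_rate` does in the full code); a single dummy parameter stands in for the network.

```python
import torch
from torch.optim import lr_scheduler


def get_scheduler(optimizer, epoch_max):
    def lambda_rule(epoch):
        lr_l = 1.0 - max(0, epoch + 1 + 1 - epoch_max / 2) / float(epoch_max / 2 + 1)
        return max(0.0, lr_l)
    return lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda_rule)


param = torch.nn.Parameter(torch.zeros(1))           # dummy stand-in for the network
optimizer = torch.optim.Adam([param], lr=0.001, betas=(0.9, 0.999))
scheduler = get_scheduler(optimizer, epoch_max=200)

lrs = []  # lrs[e - 1] is the learning rate used during epoch e
for epoch in range(1, 201):
    lrs.append(optimizer.param_groups[0]['lr'])
    optimizer.step()   # stands in for one epoch of training (no grads, so a no-op)
    scheduler.step()   # same position as Model.update_learning_rate()

for e in (1, 99, 100, 150, 200):
    print(e, lrs[e - 1])
```

Under these assumptions the rate stays at 0.001 through epoch 99, starts decaying in epoch 100 (0.001 × 100/101), and epoch 200 trains with a rate of exactly 0. Without the clamp, the factor after the final step would be 1 - 102/101, i.e. slightly negative.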

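2.3 Setting Random Seeds and Visualizing with tensorboardX

- Set random seeds to make experiments easier to reproduce. Exact, bit-for-bit reproduction is still hard to achieve; for details see [3] ([PyTorch] results cannot be reproduced).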

```python
import numpy as np
import random
import torch

random.seed(0)
np.random.seed(0)
torch.manual_seed(0)
torch.cuda.manual_seed(0)
torch.cuda.manual_seed_all(0)
```

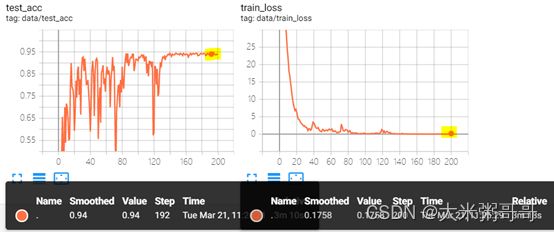
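The seeding lines can be wrapped in a helper that also pins cuDNN's algorithm selection, one of the common sources of run-to-run differences on GPU. This is a sketch: `set_seed` is an illustrative name, and even with these flags some CUDA operations remain nondeterministic. PyTorch also offers the stricter switch `torch.use_deterministic_algorithms(True)`, which raises an error for operations that have no deterministic implementation.

```python
import random

import numpy as np
import torch


def set_seed(seed=0, deterministic=True):
    """Seed Python, NumPy and PyTorch (CPU and all GPUs)."""
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)           # seeds the RNG on all devices
    torch.cuda.manual_seed_all(seed)  # explicit; safe to call without a GPU
    if deterministic:
        # Trade some speed for repeatable cuDNN convolution algorithm choices
        torch.backends.cudnn.deterministic = True
        torch.backends.cudnn.benchmark = False


set_seed(0)
a = (random.random(), np.random.rand(), torch.rand(1).item())
set_seed(0)
b = (random.random(), np.random.rand(), torch.rand(1).item())
print(a == b)  # True: same seed, same random draws
```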

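- Visualizing with tensorboardX

```python
from tensorboardX import SummaryWriter

# First create the writer (note: `comment` is only used when log_dir is not given)
SW = SummaryWriter(log_dir='runs', comment='cls')

# Then add scalars; each tag is drawn as one curve
SW.add_scalar('data/train_loss', loss, epoch)
SW.add_scalar('data/test_acc', acc, epoch)
```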

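Then run `tensorboard --logdir [path]` in a terminal, where [path] is the `log_dir` passed to SummaryWriter (here `runs`), i.e. the directory that holds the generated event files whose names start with `events`, as shown below: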


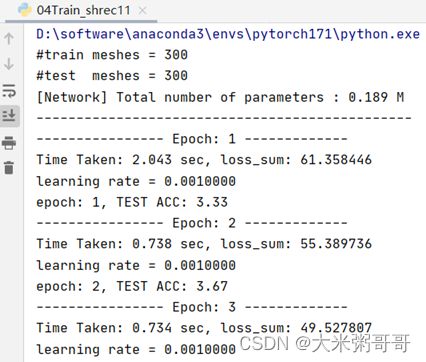

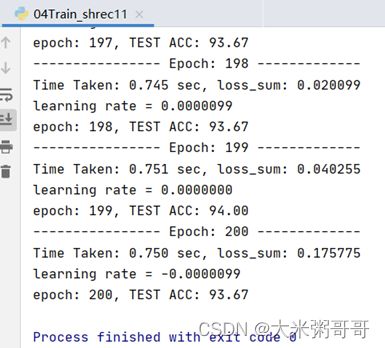

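3. Mesh Classification Experiment

The dataset is SHREC'11; see the post on Triangular Mesh classification datasets for details.

3.1 Classification Results

Compared with [4] (Deep Learning on Meshes from Scratch, Part 3: Transformer), accuracy improves by about ten percentage points, from 84% to 94%.

3.2 Full Code

For the DataLoader code, see [5] (Deep Learning on Meshes from Scratch, Part 1: Input (PyTorch)).

For the FaceConv code, see [6] (Deep Learning on Meshes from Scratch, Part 2: Convolutional Networks (CNN)).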

Model.py

```python
import torch
from torch.optim import lr_scheduler

from Transformer import TriTransNet


def init_func(m):
    # N(0, 0.02) initialization for the weights of convolution and linear layers
    className = m.__class__.__name__
    if hasattr(m, 'weight') and (className.find('Conv') != -1 or className.find('Linear') != -1):
        torch.nn.init.normal_(m.weight.data, 0.0, 0.02)


def get_scheduler(optimizer, epoch_max):
    # Constant lr for roughly the first half of training, then linear decay to 0
    def lambda_rule(epoch):
        lr_l = 1.0 - max(0, epoch + 1 + 1 - epoch_max / 2) / float(epoch_max / 2 + 1)
        return max(0.0, lr_l)  # never let the factor go negative
    scheduler = lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda_rule)
    return scheduler


class Model:
    def __init__(self):
        self.device = torch.device('cuda:0')  # or torch.device('cpu')
        self.loss_fun = torch.nn.CrossEntropyLoss(ignore_index=-1)
        self.loss = None

        # Network: move it to the device and initialize it before building the optimizer
        self.net = TriTransNet().to(self.device)
        self.net.apply(init_func)  # custom initialization

        self.optimizer = torch.optim.Adam(self.net.parameters(), lr=0.001, betas=(0.9, 0.999))
        self.scheduler = get_scheduler(self.optimizer, epoch_max=200)

        # Print the number of network parameters
        num_params = sum(param.numel() for param in self.net.parameters())
        print('[Network] Total number of parameters : %.3f M' % (num_params / 1e6))
        print('-----------------------------------------------')

    def train(self, data):
        self.net.train(True)
        self.optimizer.zero_grad()  # clear gradients

        # Forward pass (the inputs need no gradients, only the weights do)
        face_features = torch.from_numpy(data['face_features']).float().to(self.device)
        labels = torch.from_numpy(data['label']).long().to(self.device)
        mesh = data['mesh']
        out = self.net(face_features, mesh)

        # Backward pass
        loss = self.loss_fun(out, labels)
        loss.backward()
        self.loss = loss.item()  # keep only the scalar value
        self.optimizer.step()  # update the parameters

    def test(self, data):
        self.net.eval()
        with torch.no_grad():
            # Forward pass
            face_features = torch.from_numpy(data['face_features']).float().to(self.device)
            labels = torch.from_numpy(data['label']).long().to(self.device)
            mesh = data['mesh']
            out = self.net(face_features, mesh)
            # Count the correct predictions in this batch
            pred_class = out.argmax(dim=1)
            correct = pred_class.eq(labels).sum().item()
        return correct

    def update_learning_rate(self):
        self.scheduler.step()
        lr = self.optimizer.param_groups[0]['lr']
        print('learning rate = %.7f' % lr)
```

Train_shrec11.py

```python
import os
import random
import sys
import time

import numpy as np
import torch
from tensorboardX import SummaryWriter

sys.path.append(os.path.join(os.path.dirname(__file__), "../../"))

from DataLoader_shrec11 import DataLoader, Mesh
from Model import Model

if __name__ == '__main__':
    # 0. Set seeds to make runs as reproducible as possible
    random.seed(0)
    np.random.seed(0)
    torch.manual_seed(0)
    torch.cuda.manual_seed(0)
    torch.cuda.manual_seed_all(0)

    # 1. Load the data
    data_train = DataLoader(phase='train')  # the phase argument selects the split
    data_test = DataLoader(phase='test')
    print('#train meshes = %d' % len(data_train))  # number of training meshes
    print('#test meshes = %d' % len(data_test))  # number of test meshes

    # 2. Train
    model = Model()  # build the model
    SW = SummaryWriter(log_dir='runs', comment='cls')  # records the training curves
    for epoch in range(1, 201):
        print('---------------- Epoch: %d -------------' % epoch)
        epoch_start_time = time.time()
        loss = 0
        for data in data_train:
            model.train(data)
            loss += model.loss
        print('Time Taken: %.3f sec, loss_sum: %.6f' % ((time.time() - epoch_start_time), loss))
        model.update_learning_rate()

        # Evaluate the updated model on the test set
        acc = 0
        for data in data_test:
            acc += model.test(data)
        acc = acc / len(data_test)
        print('epoch: %d, TEST ACC: %0.2f' % (epoch, acc * 100))

        # Log the curves
        SW.add_scalar('data/train_loss', loss, epoch)
        SW.add_scalar('data/test_acc', acc, epoch)
    SW.close()  # flush the remaining events to disk
```
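To check the structure of the loop (train step, scheduler step, evaluation under `no_grad`, accuracy as correct/total) without the SHREC'11 data, FaceConv, or SA, here is a minimal dry run. Everything in it is a hypothetical stand-in: random 9-dimensional vectors instead of meshes, a small MLP instead of TriTransNet, and only 4 epochs, so the accuracy will hover around chance (1/30).

```python
import torch
import torch.nn as nn
from torch.optim import lr_scheduler

torch.manual_seed(0)
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

# Hypothetical stand-in data: random 9-dim "meshes", 30 classes
num_classes, n_train, n_test, batch = 30, 240, 60, 16
X_train, y_train = torch.randn(n_train, 9), torch.randint(0, num_classes, (n_train,))
X_test, y_test = torch.randn(n_test, 9), torch.randint(0, num_classes, (n_test,))


def batches(X, y, size):
    for i in range(0, len(X), size):
        yield X[i:i + size].to(device), y[i:i + size].to(device)


net = nn.Sequential(nn.Linear(9, 64), nn.BatchNorm1d(64), nn.GELU(),
                    nn.Linear(64, num_classes)).to(device)
optimizer = torch.optim.Adam(net.parameters(), lr=0.001, betas=(0.9, 0.999))
epoch_max = 4
scheduler = lr_scheduler.LambdaLR(optimizer, lambda e: max(
    0.0, 1.0 - max(0, e + 1 + 1 - epoch_max / 2) / float(epoch_max / 2 + 1)))
loss_fun = nn.CrossEntropyLoss()

for epoch in range(1, epoch_max + 1):
    net.train()
    loss_sum = 0.0
    for xb, yb in batches(X_train, y_train, batch):
        optimizer.zero_grad()
        loss = loss_fun(net(xb), yb)
        loss.backward()
        optimizer.step()
        loss_sum += loss.item()
    scheduler.step()

    net.eval()
    correct = 0
    with torch.no_grad():
        for xb, yb in batches(X_test, y_test, batch):
            correct += (net(xb).argmax(dim=1) == yb).sum().item()
    acc = correct / n_test
    print('epoch: %d, loss_sum: %.4f, TEST ACC: %.2f' % (epoch, loss_sum, acc * 100))
```

Swapping the stand-ins for the real `DataLoader` and `Model` should leave the loop itself unchanged.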

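[1] Initialization functions implemented in PyTorch nn.init: uniform, normal, const, Xavier, He initialization

[2] The most complete guide to learning-rate schedules: lr_scheduler

[3] [PyTorch] Results cannot be reproduced

[4] Deep Learning on Meshes from Scratch, Part 3: Transformer

[5] Deep Learning on Meshes from Scratch, Part 1: Input (PyTorch)

[6] Deep Learning on Meshes from Scratch, Part 2: Convolutional Networks (CNN)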