[The 11th "Teddy Cup" Data Mining Challenge] Teddy Cup Problem C: crawler-collected data (source code + data)

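[The 11th "Teddy Cup" Data Mining Challenge, Problem C: Building a two-way recommendation system for recruitment and job seeking on the Teddy referral platform (data collection)]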

Problem:

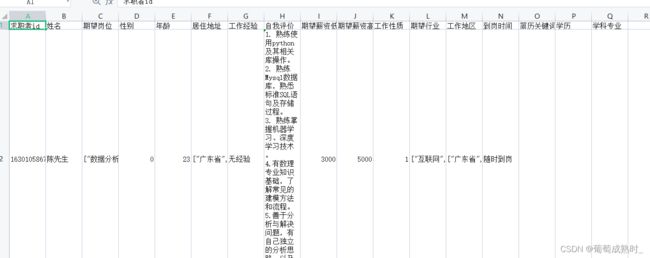

Data details:

根据人才id获取详细数据(10897条).csv (detailed records fetched by talent ID; 10,897 rows):

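Crawler design:

- First, fetch all IDs and save them to a txt file.

- Then build a URL from each ID in the txt file and request its detail page.

- Extract the data from the detail page.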
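The three steps above can be sketched roughly as follows. This is a minimal illustration, not the author's code: `DETAIL_URL_TEMPLATE`, `read_ids`, `build_detail_urls`, and `fetch_details` are hypothetical names, and the real detail-page URL is not given in this post. The `fetch` argument stands in for the HTTP call (for example, a small wrapper around `requests.get(url, headers=headers).text`), and the sketch assumes the detail endpoint returns JSON.

```python
import json
from pathlib import Path
from typing import Callable, Iterable, List

# Hypothetical placeholder: the real detail-page URL is not given in this post.
DETAIL_URL_TEMPLATE = "https://example.invalid/detail/{}"


def read_ids(path: str) -> List[str]:
    """Read one ID per line; skip blank lines and duplicates, keep order."""
    seen = set()
    ids = []
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        item_id = line.strip()
        if item_id and item_id not in seen:
            seen.add(item_id)
            ids.append(item_id)
    return ids


def build_detail_urls(ids: Iterable[str],
                      template: str = DETAIL_URL_TEMPLATE) -> List[str]:
    """Construct one detail-page URL per ID."""
    return [template.format(item_id) for item_id in ids]


def fetch_details(urls: Iterable[str],
                  fetch: Callable[[str], str]) -> List[dict]:
    """Request each detail page and parse its JSON body."""
    return [json.loads(fetch(url)) for url in urls]
```

Passing the request function in as `fetch` keeps the URL-building and parsing logic checkable without touching the network.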

Fetching the job data:

    # -*- coding: utf-8 -*-
    # @Time : 2023/2/23/023 21:05
    # @Author : LeeSheel
    # @File : 01-1多线程获取所有id.py
    # @Project : 爬虫
    import json
    import threading
    import time
    from queue import Queue

    import requests


    class IDSpider:
        def __init__(self):
            self.url = '*****ic/es?pageSize=10&pageNumber={}&willNature=&function=&wageList=%5B%5D&workplace=&keyword='
            # Queue of page URLs that still need to be fetched
            self.q = Queue()
            # Lock that keeps the "check empty, then get" step atomic
            self.lock = threading.Lock()

        def put_url(self):
            """Put every target URL into the queue."""
            # range(1, 159) yields pages 1 through 158
            for page in range(1, 159):
                url = self.url.format(page)
                self.q.put(url)

        def parse_html(self):
            """Send the request, read the response, and parse the data."""
            while True:
                self.lock.acquire()
                if not self.q.empty():
                    url = self.q.get()
                    self.lock.release()
                    headers = {
                        "Cookie": "DEFAULT_ENTERPRISE_IMG=company.jpg; APP_HEADER_NAME=%E6%B3%B0%E8%BF%AA%E5%86%85%E6%8E%A8; APP_TITLE=%E6%B3%B0%E8%BF%AA%E5%86%85%E6%8E%A8; APP_RESOURCE_SCOPE_NAME=%E6%95%B0%E6%8D%AE%E4%B8%AD%E5%BF%83; APP_HELP_DOC_URL=http://45.116.35.168:8083/eb; REGISTER_URL=http://www.5iai.com:444/oauth/register",
                        "Referer": "https://www.5iai.com/",
                        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36"
                    }
                    # Note: if the request or the JSON parsing raises, this thread
                    # exits and the page it dequeued is not retried.
                    res = requests.get(url, headers=headers).text
                    # Parse the JSON response
                    json_data = json.loads(res)
                    contents = json_data['data']['content']
                    print(contents)
                    # Append the IDs from this page to the output file
                    with open("找工作页面id.txt", "a+", encoding='utf-8') as f:
                        for content in contents:
                            item_id = content['id']
                            f.write(str(item_id) + '\n')
                            print(item_id)
                    time.sleep(2)
                else:
                    # Queue is empty: release the lock and stop this worker
                    self.lock.release()
                    time.sleep(2)
                    break

        def run(self):
            self.put_url()
            # List of worker threads
            t_lst = []
            for i in range(10):
                t = threading.Thread(target=self.parse_html)
                t_lst.append(t)
                t.start()

    ###### The rest of the code is omitted by the author to pass platform review ######
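One possible variation on the worker pattern above, which is not the author's code: `concurrent.futures.ThreadPoolExecutor` runs the page requests in worker threads while the main thread collects the IDs and records which pages failed, so a failed request is visible instead of silently dropped. `collect_ids` and `fetch_page_ids` are hypothetical names; `fetch_page_ids` stands in for the request-and-parse step and is assumed to return the list of IDs on one page.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Iterable, List, Tuple


def collect_ids(
    pages: Iterable[int],
    fetch_page_ids: Callable[[int], List[str]],
    max_workers: int = 10,
) -> Tuple[List[str], List[int]]:
    """Fetch pages concurrently; return (IDs in page order, failed pages)."""
    results: Dict[int, List[str]] = {}
    failed: List[int] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Map each future back to the page number it is fetching.
        futures = {pool.submit(fetch_page_ids, page): page for page in pages}
        for future in as_completed(futures):
            page = futures[future]
            try:
                results[page] = future.result()
            except Exception:
                # Remember the page so it can be retried later.
                failed.append(page)
    ids = [item_id for page in sorted(results) for item_id in results[page]]
    return ids, sorted(failed)
```

Because only the main thread touches `results` and `failed`, no explicit lock is needed, and the caller can write the IDs to the txt file in one pass afterwards.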

Folder contents:

Getting the code and data:

Because the multithreaded approach caused data loss, the final version fetches the data in a single-threaded loop.
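A single-threaded loop of this kind might look like the sketch below. It is an illustration under stated assumptions, not the author's final script: `crawl_sequentially` and `fetch_page_ids` are hypothetical names, the retry count is an assumption, and `fetch_page_ids` stands in for the request-and-parse step for one page.

```python
import time
from typing import Callable, List, Tuple


def crawl_sequentially(
    first_page: int,
    last_page: int,
    fetch_page_ids: Callable[[int], List[str]],
    retries: int = 3,
    delay: float = 2.0,
) -> Tuple[List[str], List[int]]:
    """Fetch pages one at a time; return (IDs, pages that still failed)."""
    ids: List[str] = []
    failed: List[int] = []
    for page in range(first_page, last_page + 1):
        for attempt in range(1, retries + 1):
            try:
                ids.extend(fetch_page_ids(page))
                break
            except Exception:
                if attempt == retries:
                    # Give up on this page but keep a record of it.
                    failed.append(page)
                else:
                    time.sleep(delay)  # wait before retrying this page
        time.sleep(delay)  # pause between pages
    return ids, failed
```

Calling `crawl_sequentially(1, 158, fetch_page_ids)` would cover the same pages as `range(1, 159)` in the listing above; any page numbers returned in the second list can be re-run afterwards.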

This took real effort to put together, so thank you for your understanding.

https://mbd.pub/o/bread/ZJaWlp5r