Enterprise Project Practice, K8s Series (19): K8s High Availability + Load-Balanced Cluster

K8s High Availability + Load-Balanced Cluster

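- I. Overview of the K8s HA + Load-Balanced Cluster

- II. Deploying the K8s HA + Load-Balanced Cluster

  - 1. HA + load balancing with pacemaker + haproxy

  - 2. Deploying the HA Kubernetes cluster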

I. Overview of the K8s HA + Load-Balanced Cluster

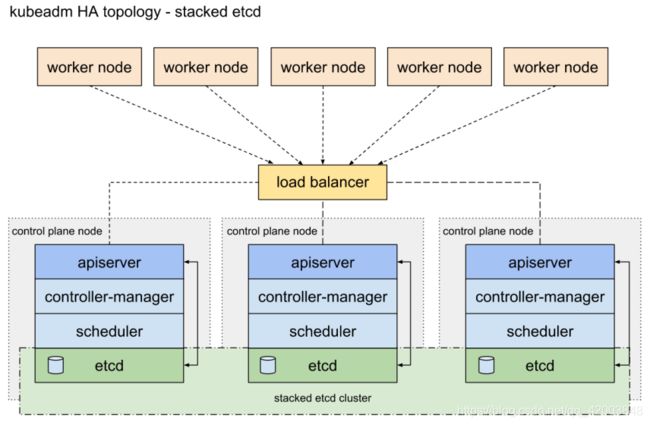

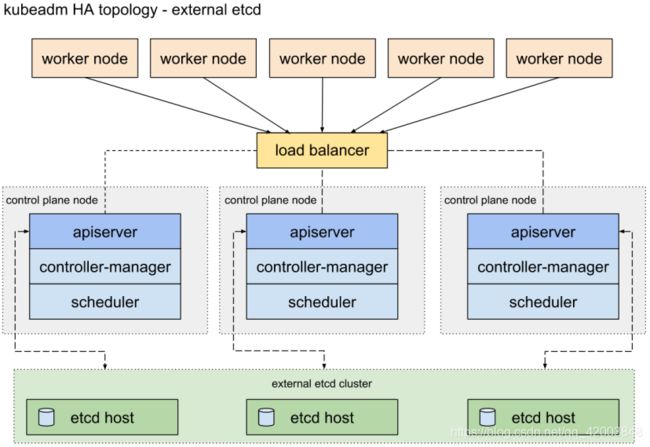

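There are two etcd topologies to choose from when configuring a highly available (HA) Kubernetes cluster:

- Stacked etcd: the etcd members run on the control-plane (master) nodes themselves

- External etcd: etcd runs on dedicated nodes, separate from the masters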

Stacked etcd topology

External etcd topology

II. Deploying the K8s HA + Load-Balanced Cluster

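Six virtual machines are used:

server3: Harbor image registry

server5/6: haproxy load balancers

server7/8/9: Kubernetes master (control-plane) nodes. At least three masters are required. This setup uses the stacked etcd topology, and etcd needs a majority of its members (2 of 3) to stay available when one node fails.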

1. HA + load balancing with pacemaker + haproxy

On server5 and server6, install pacemaker and its related components, then enable and start pcsd:

```
[root@server5 ~]# yum install -y pacemaker pcs psmisc policycoreutils-python
Installed:
pacemaker.x86_64 0:1.1.19-8.el7 pcs.x86_64 0:0.9.165-6.el7
policycoreutils-python.x86_64 0:2.5-29.el7 psmisc.x86_64 0:22.20-15.el7
[root@server5 ~]# systemctl enable --now pcsd.service
Created symlink from /etc/systemd/system/multi-user.target.wants/pcsd.service to /usr/lib/systemd/system/pcsd.service.
```

On server5 and server6, set the password of the hacluster user to westos. passwd warns that the password is short, but it still accepts it:

```
[root@server5 ~]# passwd hacluster
Changing password for user hacluster.
New password:
BAD PASSWORD: The password is shorter than 8 characters
Retype new password:
passwd: all authentication tokens updated successfully.
```

Authenticate pcs to server5 and server6 as the hacluster user:

```
[root@server5 ~]# pcs cluster auth server5 server6
Username: hacluster
Password:
server5: Authorized
server6: Authorized
```

Create a cluster named mycluster with server5 and server6 as members. More members can be added later:

```
[root@server5 ~]# pcs cluster setup --name mycluster server5 server6
Destroying cluster on nodes: server5, server6...
server5: Stopping Cluster (pacemaker)...
server6: Stopping Cluster (pacemaker)...
server5: Successfully destroyed cluster
server6: Successfully destroyed cluster
Sending 'pacemaker_remote authkey' to 'server5', 'server6'
server5: successful distribution of the file 'pacemaker_remote authkey'
server6: successful distribution of the file 'pacemaker_remote authkey'
Sending cluster config files to the nodes...
server5: Succeeded
server6: Succeeded
Synchronizing pcsd certificates on nodes server5, server6...
server5: Success
server6: Success
Restarting pcsd on the nodes in order to reload the certificates...
server5: Success
server6: Success
```

Enable the cluster services at boot on all nodes, then start them:

```
[root@server5 ~]# pcs cluster enable --all
server5: Cluster Enabled
server6: Cluster Enabled
[root@server5 ~]# pcs cluster start --all
server5: Starting Cluster (corosync)...
server6: Starting Cluster (corosync)...
server5: Starting Cluster (pacemaker)...
server6: Starting Cluster (pacemaker)...
```

Check the cluster configuration. crm_verify reports errors:

```
[root@server5 ~]# crm_verify -L -V
error: unpack_resources: Resource start-up disabled since no STONITH resources have been defined
error: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option
error: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity
Errors found during check: config not valid
```

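Set stonith-enabled=false. The check then passes without errors. Disabling STONITH (node fencing) is acceptable in this lab. As the message above notes, production clusters with shared data should configure a fencing device instead.

```
[root@server5 ~]# pcs property set stonith-enabled=false
[root@server5 ~]# crm_verify -L -V
```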

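Create the VIP 172.25.3.100 as a cluster resource, monitored every 30 s. Clients always use this address, so a failover is transparent to them:

```
[root@server5 ~]# pcs resource create vip ocf:heartbeat:IPaddr2 ip=172.25.3.100 op monitor interval=30s
```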

Check the cluster status. The vip is started on server5, and both nodes are online (Online: [ server5 server6 ]):

```
[root@server5 ~]# pcs status
Cluster name: mycluster
Stack: corosync
Current DC: server6 (version 1.1.19-8.el7-c3c624ea3d) - partition with quorum
Last updated: Fri Aug 6 23:09:16 2021
Last change: Fri Aug 6 23:09:12 2021 by root via cibadmin on server5
2 nodes configured
1 resource configured
Online: [ server5 server6 ]
Full list of resources:
vip (ocf::heartbeat:IPaddr2): Started server5
Daemon Status:
corosync: active/enabled
pacemaker: active/enabled
pcsd: active/enabled
```

The VIP 172.25.3.100/24 is now present on server5:

```
[root@server5 ~]# ip a
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
inet 172.25.3.100/24 brd 172.25.3.255 scope global secondary eth0
```

Install haproxy on server5 and server6:

```
[root@server5 ~]# yum install -y haproxy
Installed:
haproxy.x86_64 0:1.5.18-8.el7
```

Edit haproxy.cfg and start the service. Then confirm that haproxy is listening on port 6443:

```
[root@server5 ~]# cd /etc/haproxy/
[root@server5 haproxy]# vim haproxy.cfg
[root@server5 haproxy]# systemctl start haproxy.service
[root@server5 haproxy]# netstat -antlp|grep :6443
tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 5518/haproxy
```

The relevant sections of haproxy.cfg are shown below. They define a stats page at /status on port 80, and a TCP frontend on port 6443 that round-robins across the kube-apiservers of the three masters:

```
listen stats *:80
    stats uri /status

#---------------------------------------------------------------------
# main frontend which proxys to the backends
#---------------------------------------------------------------------
frontend main *:6443
    mode tcp
    default_backend app

#---------------------------------------------------------------------
# round robin balancing between the various backends
#---------------------------------------------------------------------
backend app
    balance roundrobin
    mode tcp
    server k8s1 172.25.3.7:6443 check
    server k8s2 172.25.3.8:6443 check
    server k8s3 172.25.3.9:6443 check
```
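The backend section follows a simple pattern. There is one server line per master, each pointing at that master's kube-apiserver on port 6443 with health checks enabled. The sketch below generates that section from a list of master IPs, which is convenient if masters are added later. The function name gen_apiserver_backend is hypothetical, and the indentation is only cosmetic:

```bash
# Hypothetical helper: print an haproxy backend that balances TCP connections
# round-robin across kube-apiservers listening on port 6443.
# Usage: gen_apiserver_backend <backend-name> <ip>...
gen_apiserver_backend() {
  local name="$1" i=1 ip
  shift
  printf 'backend %s\n' "$name"
  printf '    balance roundrobin\n'
  printf '    mode tcp\n'
  for ip in "$@"; do
    # Servers are named k8s1, k8s2, ... in argument order, as in haproxy.cfg above.
    printf '    server k8s%d %s:6443 check\n' "$i" "$ip"
    i=$((i + 1))
  done
}
```

For example, `gen_apiserver_backend app 172.25.3.7 172.25.3.8 172.25.3.9` prints the backend shown above. After editing haproxy.cfg, run `haproxy -c -f /etc/haproxy/haproxy.cfg` to check the file for syntax errors before restarting the service.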

Copy the configuration file to server6 and start the service there as well:

```
[root@server5 haproxy]# scp haproxy.cfg server6:/etc/haproxy/
Warning: Permanently added 'server6,172.25.3.6' (ECDSA) to the list of known hosts.
root@server6's password:
haproxy.cfg 100% 2685 5.6MB/s 00:00
```

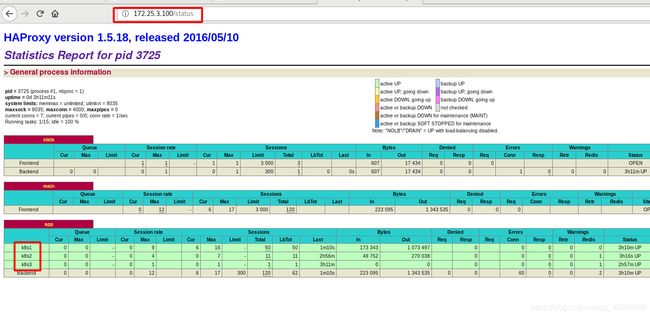

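Open 172.25.3.5/status, the stats page on server5. The backend servers are still shown in red (down) because the Kubernetes masters have not been deployed yet.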

Put the haproxy service under pcs cluster management:

```
[root@server5 haproxy]# pcs resource create haproxy systemd:haproxy op monitor interval=60s
```

Check the cluster status. The vip runs on server5, but haproxy runs on server6. Without constraints, Pacemaker may place the two resources on different nodes. Here they must run on the same host, because haproxy has to serve the VIP. If the active node fails, the service and the VIP should migrate to another cluster node together.

```
[root@server5 haproxy]# pcs status
Cluster name: mycluster
Stack: corosync
Current DC: server6 (version 1.1.19-8.el7-c3c624ea3d) - partition with quorum
Last updated: Fri Aug 6 23:19:30 2021
Last change: Fri Aug 6 23:19:27 2021 by root via cibadmin on server5
2 nodes configured
2 resources configured
Online: [ server5 server6 ]
Full list of resources:
vip (ocf::heartbeat:IPaddr2): Started server5
haproxy (systemd:haproxy): Started server6
Daemon Status:
corosync: active/enabled
pacemaker: active/enabled
pcsd: active/enabled
```

Create the group hagroup with vip and haproxy as members, then check the status again. The two resources now run together on one host. A group also starts its members in the listed order, so the VIP is up before haproxy starts.

```
[root@server5 haproxy]# pcs resource group add hagroup vip haproxy
[root@server5 haproxy]# pcs status
Cluster name: mycluster
Stack: corosync
Current DC: server6 (version 1.1.19-8.el7-c3c624ea3d) - partition with quorum
Last updated: Fri Aug 6 23:22:14 2021
Last change: Fri Aug 6 23:22:10 2021 by root via cibadmin on server5
2 nodes configured
2 resources configured
Online: [ server5 server6 ]
Full list of resources:
Resource Group: hagroup
vip (ocf::heartbeat:IPaddr2): Started server5
haproxy (systemd:haproxy): Started server5
Daemon Status:
corosync: active/enabled
pacemaker: active/enabled
pcsd: active/enabled
```
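You can also check from a script that the group really keeps its members together, by parsing the pcs status text. This is a sketch that assumes the pcs 0.9 output layout shown above: one "name (class:agent): Started node" line per member under "Resource Group:". Newer pcs releases format the status differently. The name group_on_one_node is a hypothetical helper:

```bash
# Hypothetical helper: read `pcs status` text on stdin and check that every
# member of the named resource group is Started, all on the same node.
# Assumes the pcs 0.9 layout: "Resource Group: <name>" followed by one
# "<resource> (<class>:<agent>): <State> [<node>]" line per member.
group_on_one_node() {
  local group="$1"
  awk -v grp="$group" '
    # Entering a group block: remember whether it is the group we want.
    /^[[:space:]]*Resource Group:/ { ingrp = ($3 == grp); next }
    # Member line of the wanted group: count it and record its node if Started.
    ingrp && /\(/ {
      members++
      if ($0 ~ /Started/) { nodes[$NF] = 1; started++ }
      next
    }
    # Any other non-blank line ends the group block.
    ingrp && NF > 0 { ingrp = 0 }
    END {
      n = 0
      for (k in nodes) { n++; where = k }
      if (members > 0 && started == members && n == 1) { print "OK " where; exit 0 }
      print "NOT-COLOCATED"
      exit 1
    }'
}
```

Here, `pcs status | group_on_one_node hagroup` prints `OK server5`. To exercise a failover, put the active node in standby with `pcs cluster standby server5`; both resources should then move to server6. `pcs cluster unstandby server5` brings the node back.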

The pacemaker + haproxy HA + load-balancing setup is complete.

2. Deploying the HA Kubernetes cluster

Add a docker section to dvd.repo and copy the file to the Docker hosts server7/8/9:

```
[docker]
name= docker
baseurl=http://172.25.3.250/docker-ce
gpgcheck=0
```

```
scp /etc/yum.repos.d/dvd.repo server7:/etc/yum.repos.d/dvd.repo
scp /etc/yum.repos.d/dvd.repo server8:/etc/yum.repos.d/dvd.repo
scp /etc/yum.repos.d/dvd.repo server9:/etc/yum.repos.d/dvd.repo
```

Install docker-ce on server7/8/9 and enable it at boot:

```
[root@server7 ~]# yum install -y docker-ce
[root@server7 ~]# systemctl enable --now docker
Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.
```

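Configure kernel parameters so that bridged container traffic passes through iptables. Docker and Kubernetes networking depend on this. Create /etc/sysctl.d/docker.conf with the following content:

```
[root@server8 ~]# cat /etc/sysctl.d/docker.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
```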

Apply the settings with sysctl --system. Do this on each of server7/8/9:

```
[root@server8 ~]# sysctl --system
* Applying /usr/lib/sysctl.d/00-system.conf ...
net.bridge.bridge-nf-call-ip6tables = 0
net.bridge.bridge-nf-call-iptables = 0
net.bridge.bridge-nf-call-arptables = 0
* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...
kernel.yama.ptrace_scope = 0
* Applying /usr/lib/sysctl.d/50-default.conf ...
kernel.sysrq = 16
kernel.core_uses_pid = 1
net.ipv4.conf.default.rp_filter = 1
net.ipv4.conf.all.rp_filter = 1
net.ipv4.conf.default.accept_source_route = 0
net.ipv4.conf.all.accept_source_route = 0
net.ipv4.conf.default.promote_secondaries = 1
net.ipv4.conf.all.promote_secondaries = 1
fs.protected_hardlinks = 1
fs.protected_symlinks = 1
* Applying /etc/sysctl.d/99-sysctl.conf ...
* Applying /etc/sysctl.d/docker.conf ...
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
* Applying /etc/sysctl.conf ...
```
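The output above also shows why the file is needed. 00-system.conf first sets both bridge keys to 0, and docker.conf, applied later, sets them back to 1. Settings are applied in order, so the last assignment takes effect. The sketch below extracts that final value from sysctl --system output. effective_sysctl is a hypothetical helper:

```bash
# Hypothetical helper: read `sysctl --system` output on stdin and print the
# value that was applied last for the given key (later files override
# earlier ones). Returns 1 if the key never appears.
effective_sysctl() {
  local key="$1"
  awk -F' = ' -v k="$key" '
    $1 == k { v = $2; seen = 1 }
    END { if (seen) print v; else exit 1 }'
}
```

For example, `sysctl --system | effective_sysctl net.bridge.bridge-nf-call-iptables` prints 1 here. On a live node, `sysctl -n net.bridge.bridge-nf-call-iptables` reads the current value directly.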

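Switch Docker to the systemd cgroup driver. The kubelet and the container runtime must use the same cgroup driver, and systemd is the one recommended for kubeadm clusters. Also set https://reg.westos.org as the registry mirror, so image pulls go through the local Harbor registry. The configuration file on server7/8/9:

```
[root@server8 ~]# cat /etc/docker/daemon.json
{
  "registry-mirrors": ["https://reg.westos.org"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
```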

Restart Docker (systemctl restart docker) and check docker info. The new settings have taken effect:

```
[root@server8 ~]# docker info
Logging Driver: json-file
Cgroup Driver: systemd
Registry Mirrors:
https://reg.westos.org/
Live Restore Enabled: false
```
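This check can be scripted, for example to verify all three nodes before running kubeadm. The sketch below reads docker info output on stdin and fails unless the cgroup driver is systemd. require_systemd_cgroup is a hypothetical name:

```bash
# Hypothetical helper: read `docker info` output on stdin and fail unless
# Docker reports the systemd cgroup driver.
require_systemd_cgroup() {
  local driver
  driver="$(awk -F': *' '/Cgroup Driver:/ { v = $2; sub(/[[:space:]]+$/, "", v); print v; exit }')"
  if [ "$driver" = "systemd" ]; then
    echo "cgroup driver: systemd"
  else
    echo "cgroup driver is '${driver:-unknown}', expected systemd" >&2
    return 1
  fi
}
```

Usage: `docker info 2>/dev/null | require_systemd_cgroup`. Alternatively, `docker info --format '{{.CgroupDriver}}'` prints only the driver name.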

Copy the registry certificate directory certs.d to /etc/docker/ on server7/8/9:

```
scp -r certs.d/ server7:/etc/docker/
scp -r certs.d/ server8:/etc/docker/
scp -r certs.d/ server9:/etc/docker/
```

On server7/8/9, install ipvsadm and load the ip_vs kernel module, because kube-proxy will run in IPVS mode:

```
[root@server7 ~]# yum install -y ipvsadm
[root@server7 ~]# modprobe ip_vs
[root@server7 ~]# lsmod | grep ip_vs
ip_vs 145497 0
nf_conntrack 133095 7 ip_vs,nf_nat,nf_nat_ipv4,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4
libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack
[root@server7 ~]# ipvsadm -l
```
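Only ip_vs itself is loaded at this point. kube-proxy in IPVS mode commonly also uses the round-robin, weighted round-robin and source-hashing scheduler modules, plus connection tracking. The module list in this sketch is an assumption based on that. missing_ipvs_modules is a hypothetical helper that reads lsmod output:

```bash
# Hypothetical helper: read `lsmod` output on stdin and print each module from
# an assumed IPVS module list that is not loaded yet.
missing_ipvs_modules() {
  local loaded m
  loaded="$(awk '{ print $1 }')"   # first lsmod column is the module name
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    printf '%s\n' "$loaded" | grep -qxF "$m" || printf '%s\n' "$m"
  done
}
```

For example, `lsmod | missing_ipvs_modules | xargs -r -n1 modprobe` loads whatever is missing. To have systemd load the modules at every boot, list them in a file under /etc/modules-load.d/.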

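On server7/8/9, turn off swap and comment out the swap entry in /etc/fstab. By default the kubelet refuses to start while swap is enabled.

```
[root@server7 ~]# swapoff -a
[root@server7 ~]# vim /etc/fstab
#/dev/mapper/rhel-swap swap swap defaults 0 0
```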
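The fstab edit can also be done non-interactively. The sketch below comments out every active swap entry and leaves other lines and existing comments untouched. disable_swap_entries is a hypothetical name. You still need swapoff -a to turn swap off immediately.

```bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Usage: disable_swap_entries [file]   (defaults to /etc/fstab)
disable_swap_entries() {
  local fstab="${1:-/etc/fstab}" tmp rc
  tmp="$(mktemp)" || return 1
  # Prefix "#" to every uncommented entry whose filesystem type (field 3) is swap.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" &&
    cat "$tmp" > "$fstab"   # rewrite in place so ownership and SELinux label stay unchanged
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

Run it as `swapoff -a && disable_swap_entries /etc/fstab`. Running it twice does not add a second "#".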

Prepare the Kubernetes component packages:

```
[root@server7 ~]# cd packages/
[root@server7 packages]# ls
14bfe6e75a9efc8eca3f638eb22c7e2ce759c67f95b43b16fae4ebabde1549f3-cri-tools-1.13.0-0.x86_64.rpm
23f7e018d7380fc0c11f0a12b7fda8ced07b1c04c4ba1c5f5cd24cd4bdfb304d-kubeadm-1.21.3-0.x86_64.rpm
7e38e980f058e3e43f121c2ba73d60156083d09be0acc2e5581372136ce11a1c-kubelet-1.21.3-0.x86_64.rpm
b04e5387f5522079ac30ee300657212246b14279e2ca4b58415c7bf1f8c8a8f5-kubectl-1.21.3-0.x86_64.rpm
db7cb5cb0b3f6875f54d10f02e625573988e3e91fd4fc5eef0b1876bb18604ad-kubernetes-cni-0.8.7-0.x86_64.rpm
```

Enter the package directory and install the packages (kubeadm, kubelet, kubectl, cri-tools, kubernetes-cni). Run this on each of server7/8/9:

```
[root@server7 ~]# cd packages/
[root@server7 packages]# yum install -y *
Installed:
cri-tools.x86_64 0:1.13.0-0 kubeadm.x86_64 0:1.21.3-0
kubectl.x86_64 0:1.21.3-0 kubelet.x86_64 0:1.21.3-0
kubernetes-cni.x86_64 0:0.8.7-0
Dependency Installed:
conntrack-tools.x86_64 0:1.4.4-4.el7
libnetfilter_cthelper.x86_64 0:1.0.0-9.el7
libnetfilter_cttimeout.x86_64 0:1.0.0-6.el7
libnetfilter_queue.x86_64 0:1.0.2-2.el7_2
socat.x86_64 0:1.7.3.2-2.el7
```

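Enable the kubelet at boot and start it. Until kubeadm init (or kubeadm join) has run, the kubelet restarts in a loop while it waits for its configuration. This is expected.

```
[root@server7 ~]# systemctl enable --now kubelet.service
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
```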

On server7, export the default kubeadm configuration to kubeadm-init.yaml, then edit it for the HA setup:

```
[root@server7 ~]# kubeadm config print init-defaults > kubeadm-init.yaml
```

Contents of the configuration file:

```yaml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.25.3.7
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: server7
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "172.25.3.100:6443"   # VIP:port on which haproxy listens
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: reg.westos.org/k8s   # images come from the local registry
kind: ClusterConfiguration
kubernetesVersion: 1.21.3
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16   # pod network CIDR
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1   # run kube-proxy in IPVS mode
kind: KubeProxyConfiguration
mode: ipvs
```

Pull the required images from the local registry:

```
[root@server7 ~]# kubeadm config images pull --config kubeadm-init.yaml
[config/images] Pulled reg.westos.org/k8s/kube-apiserver:v1.21.3
[config/images] Pulled reg.westos.org/k8s/kube-controller-manager:v1.21.3
[config/images] Pulled reg.westos.org/k8s/kube-scheduler:v1.21.3
[config/images] Pulled reg.westos.org/k8s/kube-proxy:v1.21.3
[config/images] Pulled reg.westos.org/k8s/pause:3.4.1
[config/images] Pulled reg.westos.org/k8s/etcd:3.4.13-0
[config/images] Pulled reg.westos.org/k8s/coredns:v1.8.0
```

Initialize the HA Kubernetes cluster. --upload-certs stores the control-plane certificates in the cluster (Secret kubeadm-certs), so the other masters can download them when they join:

```
[root@server7 ~]# kubeadm init --config kubeadm-init.yaml --upload-certs
[init] Using Kubernetes version: v1.21.3
[preflight] Running pre-flight checks
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local server7] and IPs [10.96.0.1 172.25.3.7 172.25.3.100]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [localhost server7] and IPs [172.25.3.7 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [localhost server7] and IPs [172.25.3.7 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 13.019126 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.21" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key:
cb848b21df0ca822301d9cfbe9c7395613b3c6fdd4c4e019bb5ce0f3ea9e0455
[mark-control-plane] Marking the node server7 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
[mark-control-plane] Marking the node server7 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: abcdef.0123456789abcdef
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 172.25.3.100:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:3d242753e63f0f6642e47281b37dfbec436ec43d917fef1cbd9ebadee0c765ad \
  --control-plane --certificate-key cb848b21df0ca822301d9cfbe9c7395613b3c6fdd4c4e019bb5ce0f3ea9e0455

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.25.3.100:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:3d242753e63f0f6642e47281b37dfbec436ec43d917fef1cbd9ebadee0c765ad
```

Command to join an additional master (control-plane) node:

```
kubeadm join 172.25.3.100:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:3d242753e63f0f6642e47281b37dfbec436ec43d917fef1cbd9ebadee0c765ad \
--control-plane --certificate-key cb848b21df0ca822301d9cfbe9c7395613b3c6fdd4c4e019bb5ce0f3ea9e0455
```

Command to join a worker node:

```
kubeadm join 172.25.3.100:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:3d242753e63f0f6642e47281b37dfbec436ec43d917fef1cbd9ebadee0c765ad
```
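Both the token and the uploaded certificates expire. The token has ttl: 24h0m0s in kubeadm-init.yaml, and the init output warns that the uploaded certificates are deleted after two hours. To add a master later, run two commands on an existing master. `kubeadm token create --print-join-command` prints a fresh worker join command. `kubeadm init phase upload-certs --upload-certs` re-uploads the certificates and prints a new certificate key. The sketch below combines the two into a control-plane join command. control_plane_join_cmd is a hypothetical helper:

```bash
# Hypothetical helper: turn a worker join command (as printed by
# `kubeadm token create --print-join-command`) plus a certificate key
# (as printed by `kubeadm init phase upload-certs --upload-certs`)
# into a control-plane join command.
control_plane_join_cmd() {
  local worker_cmd="$1" cert_key="$2"
  [ -n "$worker_cmd" ] && [ -n "$cert_key" ] || return 1
  # Drop trailing whitespace from the worker command before appending flags.
  worker_cmd="${worker_cmd%"${worker_cmd##*[![:space:]]}"}"
  printf '%s --control-plane --certificate-key %s\n' "$worker_cmd" "$cert_key"
}
```

A possible usage, assuming the key is the last line printed by upload-certs (as in the init output above, where it follows "Using certificate key:"): `key=$(kubeadm init phase upload-certs --upload-certs | tail -n1)`, then `control_plane_join_cmd "$(kubeadm token create --print-join-command)" "$key"`.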

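Set the KUBECONFIG environment variable as root. This applies to the current shell only; add the line to ~/.bashrc to make it permanent.

```
export KUBECONFIG=/etc/kubernetes/admin.conf
```

Enable kubectl command completion:

```
[root@server7 ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc
[root@server7 ~]# source .bashrc
```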

Check the node and the kube-system pods. The node is NotReady and coredns is Pending, because no pod network add-on (flannel) has been deployed yet:

```
[root@server7 ~]# kubectl get node
NAME STATUS ROLES AGE VERSION
server7 NotReady control-plane,master 2m13s v1.21.3
[root@server7 ~]# kubectl -n kube-system get pod
NAME READY STATUS RESTARTS AGE
coredns-7777df944c-7hp9s 0/1 Pending 0 2m14s
coredns-7777df944c-vl2wb 0/1 Pending 0 2m14s
etcd-server7 1/1 Running 0 2m21s
kube-apiserver-server7 1/1 Running 0 2m21s
kube-controller-manager-server7 1/1 Running 0 2m21s
kube-proxy-gh2sx 1/1 Running 0 2m14s
kube-scheduler-server7 1/1 Running 0 2m21s
```
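With many pods, it is easier to filter for the ones that are not running. The sketch below handles the single-namespace output format above, where STATUS is the third column. not_running_pods is a hypothetical name:

```bash
# Hypothetical helper: read single-namespace `kubectl get pod` output on stdin
# and print NAME and STATUS of every pod whose STATUS column is not Running.
not_running_pods() {
  awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}
```

Here, `kubectl -n kube-system get pod | not_running_pods` prints the two Pending coredns pods. kubectl can also filter server-side with `--field-selector=status.phase!=Running`, which matches on the pod phase rather than on the STATUS column.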

Deploy flannel. Push the flannel image to the Harbor registry beforehand:

```
[root@server7 ~]# kubectl apply -f kube-flannel.yml
Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+
podsecuritypolicy.policy/psp.flannel.unprivileged created
clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.apps/kube-flannel-ds created
```

Contents of kube-flannel.yml. The image is referenced as flannel:v0.14.0, the copy prepared in Harbor. The Network in net-conf.json is the same 10.244.0.0/16 as the podSubnet in kubeadm-init.yaml.

```yaml
---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: flannel:v0.14.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: flannel:v0.14.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
```
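The Network in net-conf.json must equal networking.podSubnet in kubeadm-init.yaml. Otherwise, flannel's overlay network and the pod CIDRs allocated by Kubernetes do not line up. Below is a quick consistency check as a sketch. check_pod_cidr is hypothetical, and it uses line-based sed matching that assumes the one-key-per-line layout of the two files above rather than a real YAML/JSON parser:

```bash
# Hypothetical helper: compare kubeadm's networking.podSubnet with flannel's
# "Network" value. Usage: check_pod_cidr kubeadm-init.yaml kube-flannel.yml
check_pod_cidr() {
  local kubeadm_cfg="$1" flannel_yml="$2" pod_cidr flannel_cidr
  # First "podSubnet: <cidr>" line in the kubeadm config.
  pod_cidr="$(sed -n 's|^[[:space:]]*podSubnet:[[:space:]]*\([0-9./]*\).*|\1|p' "$kubeadm_cfg" | head -n1)"
  # First "Network": "<cidr>" entry in the flannel manifest.
  flannel_cidr="$(sed -n 's|.*"Network":[[:space:]]*"\([0-9./]*\)".*|\1|p' "$flannel_yml" | head -n1)"
  if [ -n "$pod_cidr" ] && [ "$pod_cidr" = "$flannel_cidr" ]; then
    echo "match: $pod_cidr"
  else
    echo "mismatch: podSubnet=${pod_cidr:-?} flannel=${flannel_cidr:-?}" >&2
    return 1
  fi
}
```

Here, `check_pod_cidr kubeadm-init.yaml kube-flannel.yml` should print `match: 10.244.0.0/16`.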

Check kube-system again. All pods are now running:

```
[root@server7 ~]# kubectl get pod -n kube-system
NAME READY STATUS RESTARTS AGE
coredns-7777df944c-7hp9s 1/1 Running 0 8m4s
coredns-7777df944c-vl2wb 1/1 Running 0 8m4s
etcd-server7 1/1 Running 0 8m11s
kube-apiserver-server7 1/1 Running 0 8m11s
kube-controller-manager-server7 1/1 Running 0 8m11s
kube-flannel-ds-wv9fb 1/1 Running 0 22s
kube-proxy-gh2sx 1/1 Running 0 8m4s
kube-scheduler-server7 1/1 Running 0 8m11s
```

Add server8 and server9 as masters by running the following command on each:

```
kubeadm join 172.25.3.100:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:3d242753e63f0f6642e47281b37dfbec436ec43d917fef1cbd9ebadee0c765ad \
--control-plane --certificate-key cb848b21df0ca822301d9cfbe9c7395613b3c6fdd4c4e019bb5ce0f3ea9e0455
```

Output on server9, where the node joins successfully:

```
[root@server9 ~]# kubeadm join 172.25.3.100:6443 --token abcdef.0123456789abcdef \
> --discovery-token-ca-cert-hash sha256:3d242753e63f0f6642e47281b37dfbec436ec43d917fef1cbd9ebadee0c765ad \
> --control-plane --certificate-key cb848b21df0ca822301d9cfbe9c7395613b3c6fdd4c4e019bb5ce0f3ea9e0455
[preflight] Running pre-flight checks
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[preflight] Running pre-flight checks before initializing the new control plane instance
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [localhost server9] and IPs [172.25.3.9 127.0.0.1 ::1]
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [localhost server9] and IPs [172.25.3.9 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local server9] and IPs [10.96.0.1 172.25.3.9 172.25.3.100]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"
[certs] Using the existing "sa" key
[kubeconfig] Generating kubeconfig files
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[check-etcd] Checking that the etcd cluster is healthy
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...
[etcd] Announced new etcd member joining to the existing etcd cluster
[etcd] Creating static Pod manifest for "etcd"
[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[mark-control-plane] Marking the node server9 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
[mark-control-plane] Marking the node server9 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.
* The Kubelet was informed of the new secure connection details.
* Control plane (master) label and taint were applied to the new node.
* The Kubernetes control plane instances scaled up.
* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.
```

Then, on each new master, set the environment variable and enable kubectl completion in the same way:

```
export KUBECONFIG=/etc/kubernetes/admin.conf
echo "source <(kubectl completion bash)" >> ~/.bashrc
source ~/.bashrc
```

List the nodes. All three are Ready, so the deployment succeeded:

```
[root@server7 ~]# kubectl get node
NAME STATUS ROLES AGE VERSION
server7 Ready control-plane,master 3h8m v1.21.3
server8 Ready control-plane,master 179m v1.21.3
server9 Ready control-plane,master 176m v1.21.3
```
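This check can be automated, for example at the end of a provisioning script. The sketch below reads kubectl get node output, prints any node that is not Ready, and fails in that case. all_nodes_ready is a hypothetical helper:

```bash
# Hypothetical helper: read `kubectl get node` output on stdin, print every
# node whose STATUS is not exactly "Ready", and return non-zero if there is
# any such node or if no nodes were listed at all.
all_nodes_ready() {
  awk 'NR > 1 { total++; if ($2 != "Ready") { print $1, $2; bad++ } }
       END { exit (total == 0 || bad > 0) }'
}
```

Usage: `kubectl get node | all_nodes_ready`. A built-in alternative is `kubectl wait --for=condition=Ready node --all --timeout=300s`, which blocks until the nodes are Ready or the timeout expires.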

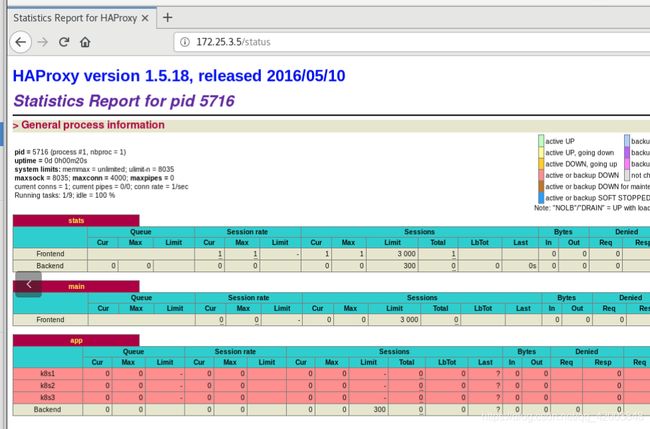

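Finally, check that haproxy now reaches the masters by opening 172.25.3.100/status. All three backend servers are shown as up, which confirms that the HA + load-balanced Kubernetes cluster has been deployed successfully.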
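The stats page can also be checked from the command line, because haproxy serves a CSV version of it when ";csv" is appended to the stats URI. In that export, field 1 is the proxy name, field 2 the server name and field 18 the status. The sketch below lists the servers of the app backend and fails if any of them is not UP. backend_status is a hypothetical helper:

```bash
# Hypothetical helper: read haproxy CSV stats on stdin and print "<server> <status>"
# for each server of the given backend (skipping the FRONTEND/BACKEND summary
# rows); returns non-zero if any server is not exactly "UP".
backend_status() {
  local backend="$1"
  awk -F, -v b="$backend" '
    $1 == b && $2 != "BACKEND" && $2 != "FRONTEND" {
      print $2, $18
      if ($18 != "UP") down++
    }
    END { exit (down > 0) }'
}
```

`curl -s 'http://172.25.3.100/status;csv' | backend_status app` should now list k8s1, k8s2 and k8s3 as UP. Before the masters existed, the same command would have reported them as DOWN, matching the red rows seen earlier.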