Redis: From Building a Cluster to Implementing Caching


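- 1. Implementing a Cache with Redis
- 1.1. Building a Redis Cluster with Docker
- 1.2. Docker Network Types
- 1.3. Testing with Spring-Data-Redis
- 1.4. Cache Hits
- 1.5. Writing Response Results to the Cache
- 1.6. Adding CORS Support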

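1. Implementing a Cache with Redis

If the API service queries the database on every request, it inevitably puts heavy concurrent load on the database, so we add a cache in front of the API. Redis is used as the cache, deployed as a Redis Cluster, and accessed through Spring-Data-Redis.

1.1. Building a Redis Cluster with Docker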

```shell
# Pull the image
docker pull redis:5.0.2

# Create the containers
docker create --name redis-node01 -v /opt/redis/data/node01:/data -p 6379:6379 redis:5.0.2 --cluster-enabled yes --cluster-config-file nodes-node-01.conf
docker create --name redis-node02 -v /opt/redis/data/node02:/data -p 6380:6379 redis:5.0.2 --cluster-enabled yes --cluster-config-file nodes-node-02.conf
docker create --name redis-node03 -v /opt/redis/data/node03:/data -p 6381:6379 redis:5.0.2 --cluster-enabled yes --cluster-config-file nodes-node-03.conf

# Start the containers
docker start redis-node01 redis-node02 redis-node03

# Build the cluster
# Open a shell in redis-node01
docker exec -it redis-node01 /bin/bash

# Inside the container, create the cluster (no replicas, i.e. no slave nodes:
# all three nodes are masters and the hash slots are sharded across them)
redis-cli --cluster create 172.17.0.1:6379 172.17.0.1:6380 172.17.0.1:6381 --cluster-replicas 0
```

This fails because redis-cli cannot connect to the Redis nodes, so next we try the containers' own IP addresses.

#查看容器的ip地址

#172.17.0.4

docker inspect redis-node01

#172.17.0.5

docker inspect redis-node02

#172.17.0.6

docker inspect redis-node03

#再次进入redis-node01进行操作

docker exec -it redis-node01 /bin/bash

#组建集群(注意端口的变化)

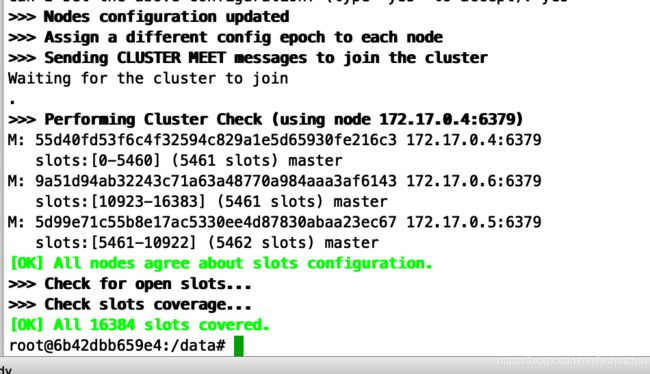

redis-cli --cluster create 172.17.0.4:6379 172.17.0.5:6379 172.17.0.6:6379 --cluster-replicas 0

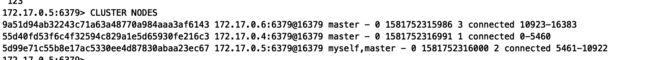

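We then run CLUSTER NODES inside a Redis container to view the cluster information.

The node addresses registered in the cluster are the Docker-assigned bridge addresses (172.17.0.x), which external clients cannot reach. To fix this, we need to change the Docker network type the containers use.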

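1.2. Docker Network Types

Docker provides the following network types:

- None: the container gets no network configuration at all, --net=none
- Container: shares the Network Namespace of another running container, --net=container:containerID
- Host: shares the host's Network Namespace, --net=host
- Bridge: Docker's NAT-based network model (the default)

A container created in host mode has no network namespace of its own: it shares the host's Network Namespace, including all of the host's ports and IP addresses. This exposes the container directly on the host's network, which is a security risk, but it is the approach used here because the cluster nodes then advertise the host's address, which external clients can reach.

Final version of the Redis cluster: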

```shell
# Create the containers on the host network; each node listens on its own port
docker create --name redis-node01 --net host -v /opt/redis/data/node01:/data redis:5.0.2 --cluster-enabled yes --cluster-config-file nodes-node-01.conf --port 6379
docker create --name redis-node02 --net host -v /opt/redis/data/node02:/data redis:5.0.2 --cluster-enabled yes --cluster-config-file nodes-node-02.conf --port 6380
docker create --name redis-node03 --net host -v /opt/redis/data/node03:/data redis:5.0.2 --cluster-enabled yes --cluster-config-file nodes-node-03.conf --port 6381

# Start the containers
docker start redis-node01 redis-node02 redis-node03

# Build the cluster
# Open a shell in redis-node01
docker exec -it redis-node01 /bin/bash

# Create the cluster (172.16.124.131 is the host machine's IP address)
redis-cli --cluster create 172.16.124.131:6379 172.16.124.131:6380 172.16.124.131:6381 --cluster-replicas 0
```

Connecting from an external client now succeeds.
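With --cluster-replicas 0 the three masters split Redis Cluster's 16384 hash slots between them, and a key's slot is CRC16(key) mod 16384, where CRC16 is the XMODEM variant and only the text inside a non-empty {hash tag} is hashed. The plain-Java sketch below computes that so you can predict where a key such as key_42 lands. The class name KeySlot is hypothetical, and nodeFor assumes the usual even split redis-cli makes for three masters given in the order above (0-5460, 5461-10922, 10923-16383); check the real ranges with CLUSTER NODES.

```java
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: computes the Redis Cluster hash slot of a key. */
final class KeySlot {
    static final int SLOTS = 16384;

    /** CRC16-CCITT (XMODEM): polynomial 0x1021, initial value 0, no reflection. */
    static int crc16(byte[] bytes) {
        int crc = 0;
        for (byte b : bytes) {
            crc ^= (b & 0xFF) << 8;
            for (int i = 0; i < 8; i++) {
                crc = ((crc & 0x8000) != 0) ? (crc << 1) ^ 0x1021 : crc << 1;
                crc &= 0xFFFF; // keep only 16 bits
            }
        }
        return crc;
    }

    /** Slot = CRC16(key) mod 16384; a non-empty {hash tag} is hashed instead of the whole key. */
    static int keySlot(String key) {
        int open = key.indexOf('{');
        if (open != -1) {
            int close = key.indexOf('}', open + 1);
            if (close > open + 1) {
                key = key.substring(open + 1, close);
            }
        }
        return crc16(key.getBytes(StandardCharsets.UTF_8)) % SLOTS;
    }

    /** Assumed even split of the slots over the three masters created above. */
    static int nodeFor(String key) {
        int slot = keySlot(key);
        if (slot <= 5460) {
            return 6379;
        }
        if (slot <= 10922) {
            return 6380;
        }
        return 6381;
    }
}
```

For example, KeySlot.keySlot("foo") gives 12182, the same value CLUSTER KEYSLOT foo reports, so under the assumed split that key is served by the node on port 6381.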

1.3. Testing with Spring-Data-Redis

1. Add the dependencies

```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-redis</artifactId>
</dependency>
<dependency>
    <groupId>redis.clients</groupId>
    <artifactId>jedis</artifactId>
    <version>2.9.0</version>
</dependency>
<dependency>
    <groupId>commons-io</groupId>
    <artifactId>commons-io</artifactId>
    <version>2.6</version>
</dependency>
```

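2. Write the configuration file

```properties
# Redis cluster settings
# Note: RedisClusterConfig below defines its own RedisConnectionFactory bean, so Spring Boot's
# Redis auto-configuration backs off and the pool/timeout settings are not applied to that factory.
spring.redis.jedis.pool.max-wait = 5000
spring.redis.jedis.pool.max-idle = 100
spring.redis.jedis.pool.min-idle = 10
spring.redis.timeout = 10
spring.redis.cluster.nodes = 172.16.124.131:6379,172.16.124.131:6380,172.16.124.131:6381
spring.redis.cluster.max-redirects=5
```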

3. Write the properties class

```java
package org.fechin.haoke.dubbo.api.config;

import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.stereotype.Component;

import java.util.List;

/**
 * Binds spring.redis.cluster.nodes and spring.redis.cluster.max-redirects.
 */
@Component
@ConfigurationProperties(prefix = "spring.redis.cluster")
@Data
public class ClusterConfigurationProperties {

    private List<String> nodes;

    private Integer maxRedirects;
}
```
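Spring binds the comma-separated spring.redis.cluster.nodes value to List&lt;String&gt;, and RedisClusterConfiguration expects each entry in host:port form. As a rough sketch, the plain-Java parser below mimics that split so a malformed entry is caught early; ClusterNodes and HostPort are hypothetical names, not Spring classes, and this is not Spring's own parsing code.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: parse "host:port,host:port" as written in spring.redis.cluster.nodes. */
final class ClusterNodes {
    static final class HostPort {
        final String host;
        final int port;

        HostPort(String host, int port) {
            this.host = host;
            this.port = port;
        }

        @Override
        public String toString() {
            return host + ":" + port;
        }
    }

    static List<HostPort> parse(String nodes) {
        List<HostPort> result = new ArrayList<>();
        for (String entry : nodes.split(",")) {
            String trimmed = entry.trim();
            int colon = trimmed.lastIndexOf(':');
            // Require a non-empty host and a non-empty port
            if (colon <= 0 || colon == trimmed.length() - 1) {
                throw new IllegalArgumentException("Expected host:port but got '" + trimmed + "'");
            }
            int port = Integer.parseInt(trimmed.substring(colon + 1));
            result.add(new HostPort(trimmed.substring(0, colon), port));
        }
        return result;
    }
}
```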

4. Write the configuration class

```java
package org.fechin.haoke.dubbo.api.config;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.connection.RedisClusterConfiguration;
import org.springframework.data.redis.connection.RedisConnectionFactory;
import org.springframework.data.redis.connection.jedis.JedisConnectionFactory;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.serializer.StringRedisSerializer;

@Configuration
public class RedisClusterConfig {

    @Autowired
    private ClusterConfigurationProperties clusterProperties;

    @Bean
    public RedisConnectionFactory connectionFactory() {
        // Seed nodes and the redirect limit come from spring.redis.cluster.*
        RedisClusterConfiguration configuration = new RedisClusterConfiguration(clusterProperties.getNodes());
        configuration.setMaxRedirects(clusterProperties.getMaxRedirects());
        return new JedisConnectionFactory(configuration);
    }

    @Bean
    public RedisTemplate<String, String> redisTemplate(RedisConnectionFactory redisConnectionFactory) {
        RedisTemplate<String, String> redisTemplate = new RedisTemplate<>();
        redisTemplate.setConnectionFactory(redisConnectionFactory);
        // Store both keys and values as plain strings
        redisTemplate.setKeySerializer(new StringRedisSerializer());
        redisTemplate.setValueSerializer(new StringRedisSerializer());
        redisTemplate.afterPropertiesSet();
        return redisTemplate;
    }
}
```

5. Write the test class

```java
package org.fechin.haoke.dubbo.api;

import org.junit.Test;
import org.junit.runner.RunWith;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.test.context.junit4.SpringRunner;

import java.util.Set;

/**
 * @Author: 朱国庆
 * @Date: 2020/2/15 16:41
 * @Description: haoke-manage
 * @Version: 1.0
 */
@RunWith(SpringRunner.class)
@SpringBootTest
public class RedisTest {

    @Autowired
    private RedisTemplate<String, String> redisTemplate;

    @Test
    public void testSave() {
        // Write 100 keys; the cluster distributes them over the three masters by hash slot
        for (int i = 0; i < 100; i++) {
            this.redisTemplate.opsForValue().set("key_" + i, "value_" + i);
        }
        // Read every key back, print its value, then delete it
        Set<String> keys = this.redisTemplate.keys("key_*");
        for (String key : keys) {
            String value = this.redisTemplate.opsForValue().get(key);
            System.out.println(value);
            this.redisTemplate.delete(key);
        }
    }
}
```

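1.4. Cache Hits

There are two ways to implement the caching logic: (1) each endpoint controls its own caching; (2) caching is controlled centrally. We use the second approach: a Spring MVC HandlerInterceptor checks Redis before the request reaches the controller.

1. Write the interceptor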

```java
package org.fechin.haoke.dubbo.api.interceptor;

import com.fasterxml.jackson.databind.ObjectMapper;
import org.apache.commons.codec.digest.DigestUtils;
import org.apache.commons.io.IOUtils;
import org.apache.commons.lang3.StringUtils;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.stereotype.Component;
import org.springframework.web.servlet.HandlerInterceptor;

import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;
import java.util.Map;

@Component
public class RedisCacheInterceptor implements HandlerInterceptor {

    private static final ObjectMapper mapper = new ObjectMapper();

    @Autowired
    private RedisTemplate<String, String> redisTemplate;

    /**
     * Runs before the request reaches the controller: returning true lets the
     * request continue, returning false stops it here.
     */
    @Override
    public boolean preHandle(HttpServletRequest request, HttpServletResponse response, Object handler) throws Exception {
        // Let CORS preflight (OPTIONS) requests through untouched
        if (StringUtils.equalsIgnoreCase(request.getMethod(), "OPTIONS")) {
            return true;
        }
        // Check the HTTP method: only GET requests and POST requests to /graphql are cached
        if (!StringUtils.equalsIgnoreCase(request.getMethod(), "GET")) {
            // A non-GET request that is not a GraphQL request passes straight through
            if (!StringUtils.equalsIgnoreCase(request.getRequestURI(), "/graphql")) {
                return true;
            }
        }
        // Look the response up in Redis; the key is md5(request URI + request parameters)
        String redisKey = createRedisKey(request);
        String data = this.redisTemplate.opsForValue().get(redisKey);
        if (StringUtils.isEmpty(data)) {
            // Cache miss: continue to the controller
            return true;
        }
        // Cache hit: write the cached data as the response
        response.setCharacterEncoding("UTF-8");
        response.setContentType("application/json; charset=utf-8");
        // CORS headers. Browsers reject a wildcard Allow-Origin on credentialed requests,
        // so "*" here only works for requests sent without credentials.
        response.setHeader("Access-Control-Allow-Origin", "*");
        response.setHeader("Access-Control-Allow-Methods", "GET,POST,PUT,DELETE,OPTIONS");
        response.setHeader("Access-Control-Allow-Credentials", "true");
        response.setHeader("Access-Control-Allow-Headers", "Content-Type,X-Token");
        response.getWriter().write(data);
        return false;
    }

    public static String createRedisKey(HttpServletRequest request) throws Exception {
        String paramStr = request.getRequestURI();
        Map<String, String[]> parameterMap = request.getParameterMap();
        if (parameterMap.isEmpty()) {
            // No query parameters: use the request body instead (e.g. a GraphQL POST)
            paramStr += IOUtils.toString(request.getInputStream(), "UTF-8");
        } else {
            paramStr += mapper.writeValueAsString(parameterMap);
        }
        return "WEB_DATA_" + DigestUtils.md5Hex(paramStr);
    }
}
```
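The key scheme in createRedisKey is "WEB_DATA_" followed by the MD5 hex digest of the URI plus either the JSON of the query parameters or the raw body. The sketch below reproduces only the hashing step with the JDK's MessageDigest instead of commons-codec's DigestUtils.md5Hex, assuming the parameter part has already been turned into a string; CacheKeys and its methods are hypothetical names used for illustration.

```java
import java.math.BigInteger;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/** Hypothetical helper mirroring the "WEB_DATA_" + md5(uri + params) key scheme. */
final class CacheKeys {
    static final String PREFIX = "WEB_DATA_";

    /** Lower-case, 32-character MD5 hex digest of the UTF-8 bytes of the input. */
    static String md5Hex(String input) {
        try {
            MessageDigest md5 = MessageDigest.getInstance("MD5");
            byte[] digest = md5.digest(input.getBytes(StandardCharsets.UTF_8));
            // Left-pad with zeros so leading zero bytes are not lost
            return String.format("%032x", new BigInteger(1, digest));
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 must be available on every Java platform", e);
        }
    }

    /** paramPart is either the JSON of the query parameters or the raw request body. */
    static String build(String uri, String paramPart) {
        return PREFIX + md5Hex(uri + paramPart);
    }
}
```

Because the URI and parameter part are simply concatenated before hashing, the same request always yields the same key, which is what lets the interceptor and the response-writing step agree on where the cached data lives.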

2. Register the interceptor in the Spring MVC configuration

```java
package org.fechin.haoke.dubbo.api.config;

import org.fechin.haoke.dubbo.api.interceptor.RedisCacheInterceptor;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Configuration;
import org.springframework.web.servlet.config.annotation.InterceptorRegistry;
import org.springframework.web.servlet.config.annotation.WebMvcConfigurer;

@Configuration
public class WebConfig implements WebMvcConfigurer {

    @Autowired
    private RedisCacheInterceptor redisCacheInterceptor;

    @Override
    public void addInterceptors(InterceptorRegistry registry) {
        // Apply the cache interceptor to every path
        registry.addInterceptor(this.redisCacheInterceptor).addPathPatterns("/**");
    }
}
```

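3. Test

Testing shows that POST requests now fail with an HTTP 400 error.

The cause: the interceptor reads the request's input stream, and that stream can only be read once. By the time the request reaches the controller the stream has already been drained, so the controller receives no body.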
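The effect can be reproduced without a servlet container: an InputStream is a one-pass cursor, so a second reader sees nothing. The sketch below uses a ByteArrayInputStream as a stand-in for the request body and shows the idea behind the wrapper in the next step: read the bytes once, keep them, and hand every reader a fresh stream. ReplayableBody is a hypothetical name, not part of the servlet API.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: caches a one-shot body so it can be read any number of times. */
final class ReplayableBody {
    private final byte[] body;

    /** Drains the original stream exactly once and keeps the bytes. */
    ReplayableBody(InputStream original) {
        this.body = readAll(original);
    }

    /** Each call returns an independent stream positioned at the first byte. */
    InputStream newStream() {
        return new ByteArrayInputStream(body);
    }

    static byte[] readAll(InputStream in) {
        try {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buffer = new byte[4096];
            int n;
            while ((n = in.read(buffer)) != -1) {
                out.write(buffer, 0, n);
            }
            return out.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    static String readString(InputStream in) {
        return new String(readAll(in), StandardCharsets.UTF_8);
    }
}
```

Reading the raw stream twice yields the body and then an empty string, which is exactly what the controller experiences after the interceptor has consumed the request.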

4. Fix it by wrapping the request

```java
package org.fechin.haoke.dubbo.api.interceptor;

import org.apache.commons.io.IOUtils;

import javax.servlet.ReadListener;
import javax.servlet.ServletInputStream;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletRequestWrapper;
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

/**
 * Wraps HttpServletRequest so that the request body can be read more than once.
 */
public class MyServletRequestWrapper extends HttpServletRequestWrapper {

    private final byte[] body;

    /**
     * Construct a wrapper for the specified request, reading and caching its body.
     *
     * @param request The request to be wrapped
     */
    public MyServletRequestWrapper(HttpServletRequest request) throws IOException {
        super(request);
        body = IOUtils.toByteArray(super.getInputStream());
    }

    @Override
    public BufferedReader getReader() throws IOException {
        // Decode with the request's declared encoding, falling back to UTF-8
        String encoding = getCharacterEncoding();
        Charset charset = encoding != null ? Charset.forName(encoding) : StandardCharsets.UTF_8;
        return new BufferedReader(new InputStreamReader(getInputStream(), charset));
    }

    @Override
    public ServletInputStream getInputStream() throws IOException {
        // Every call returns a fresh stream over the cached bytes
        return new RequestBodyCachingInputStream(body);
    }

    private static class RequestBodyCachingInputStream extends ServletInputStream {

        private final byte[] body;
        private int lastIndexRetrieved = -1;
        private ReadListener listener;

        RequestBodyCachingInputStream(byte[] body) {
            this.body = body;
        }

        @Override
        public int read() throws IOException {
            if (isFinished()) {
                return -1;
            }
            // Mask to 0..255: a raw byte >= 0x80 is negative, and 0xFF would look like end of stream
            int i = body[lastIndexRetrieved + 1] & 0xFF;
            lastIndexRetrieved++;
            if (isFinished() && listener != null) {
                try {
                    listener.onAllDataRead();
                } catch (IOException e) {
                    listener.onError(e);
                    throw e;
                }
            }
            return i;
        }

        @Override
        public boolean isFinished() {
            return lastIndexRetrieved == body.length - 1;
        }

        @Override
        public boolean isReady() {
            // The data is already in memory, so reading never blocks
            return true;
        }

        @Override
        public void setReadListener(ReadListener listener) {
            if (listener == null) {
                throw new NullPointerException("listener cannot be null");
            }
            if (this.listener != null) {
                throw new IllegalStateException("listener has already been set");
            }
            this.listener = listener;
            try {
                if (!isFinished()) {
                    listener.onDataAvailable();
                } else {
                    listener.onAllDataRead();
                }
            } catch (IOException e) {
                listener.onError(e);
            }
        }

        @Override
        public int available() throws IOException {
            return body.length - lastIndexRetrieved - 1;
        }

        @Override
        public void close() throws IOException {
            // Mark the stream as fully consumed; keep the array so isFinished() stays safe
            lastIndexRetrieved = body.length - 1;
        }
    }
}
```
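One detail of read() deserves care: InputStream.read() must return an unsigned value from 0 to 255, or -1 at end of stream, but a Java byte is signed. Returning a byte from the array directly turns every byte at or above 0x80 (for example, each byte of a multi-byte UTF-8 character such as 中) into a negative int, and 0xFF into -1, which callers treat as end of stream. The small sketch below contrasts the two conversions; SignedByteDemo is a hypothetical name.

```java
/** Hypothetical demo of why a byte-array-backed read() must mask with 0xFF. */
final class SignedByteDemo {
    /** Buggy conversion: sign-extends, so 0xE4 becomes -28 and 0xFF becomes -1 (looks like EOF). */
    static int readSigned(byte[] body, int index) {
        return body[index];
    }

    /** Correct conversion: masks to the 0..255 range required by InputStream.read(). */
    static int readUnsigned(byte[] body, int index) {
        return body[index] & 0xFF;
    }
}
```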

Then replace the Request with the wrapper in a servlet filter, which runs before the interceptor:

```java
package org.fechin.haoke.dubbo.api.interceptor;

import org.springframework.stereotype.Component;
import org.springframework.web.filter.OncePerRequestFilter;

import javax.servlet.FilterChain;
import javax.servlet.ServletException;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;
import java.io.IOException;

/**
 * Replaces the Request object with MyServletRequestWrapper so the body can be re-read.
 */
@Component
public class RequestReplaceFilter extends OncePerRequestFilter {

    @Override
    protected void doFilterInternal(HttpServletRequest request, HttpServletResponse response, FilterChain filterChain)
            throws ServletException, IOException {
        if (!(request instanceof MyServletRequestWrapper)) {
            request = new MyServletRequestWrapper(request);
        }
        filterChain.doFilter(request, response);
    }
}
```

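1.5. Writing Response Results to the Cache

This is implemented with ResponseBodyAdvice.

ResponseBodyAdvice is an extension point in Spring MVC: its beforeBodyWrite method is called after a @ResponseBody or ResponseEntity controller method returns and before an HttpMessageConverter writes the body. The interception logic is ours to implement, so at that point we can take the result data and write it to the cache.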

```java
package org.fechin.haoke.dubbo.api.interceptor;

import com.fasterxml.jackson.databind.ObjectMapper;
import org.apache.commons.lang3.StringUtils;
import org.fechin.haoke.dubbo.api.controller.GraphQLController;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.core.MethodParameter;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.http.MediaType;
import org.springframework.http.converter.HttpMessageConverter;
import org.springframework.http.server.ServerHttpRequest;
import org.springframework.http.server.ServerHttpResponse;
import org.springframework.http.server.ServletServerHttpRequest;
import org.springframework.web.bind.annotation.ControllerAdvice;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.servlet.mvc.method.annotation.ResponseBodyAdvice;

import java.time.Duration;

@ControllerAdvice
public class MyResponseBodyAdvice implements ResponseBodyAdvice<Object> {

    @Autowired
    private RedisTemplate<String, String> redisTemplate;

    private final ObjectMapper mapper = new ObjectMapper();

    /**
     * Only GET endpoints and the POST endpoint of GraphQLController are cached.
     */
    @Override
    public boolean supports(MethodParameter returnType, Class<? extends HttpMessageConverter<?>> converterType) {
        if (returnType.hasMethodAnnotation(GetMapping.class)) {
            return true;
        }
        if (returnType.hasMethodAnnotation(PostMapping.class) &&
                StringUtils.equals(GraphQLController.class.getName(), returnType.getExecutable().getDeclaringClass().getName())) {
            return true;
        }
        return false;
    }

    @Override
    public Object beforeBodyWrite(Object body, MethodParameter returnType, MediaType selectedContentType,
                                  Class<? extends HttpMessageConverter<?>> selectedConverterType,
                                  ServerHttpRequest request, ServerHttpResponse response) {
        try {
            // Same key as RedisCacheInterceptor, so the next identical request is a cache hit
            String redisKey = RedisCacheInterceptor.createRedisKey(((ServletServerHttpRequest) request).getServletRequest());
            String redisValue;
            if (body instanceof String) {
                redisValue = (String) body;
            } else {
                redisValue = mapper.writeValueAsString(body);
            }
            // Cache the serialized body for one hour
            this.redisTemplate.opsForValue().set(redisKey, redisValue, Duration.ofHours(1));
        } catch (Exception e) {
            e.printStackTrace();
        }
        return body;
    }
}
```

Testing confirms that the data has been written to the cache.
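Taken together, the interceptor and the ResponseBodyAdvice form a cache-aside flow: look the key up first, and only on a miss let the controller run and store its result with a one-hour TTL. The sketch below models that flow in plain Java, with an in-memory map standing in for Redis and an injectable time source so expiry can be checked. CacheAside is a hypothetical class; it models only a simple per-key expiry, not Redis itself.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

/** Hypothetical in-memory model of the interceptor + advice flow (not a Redis client). */
final class CacheAside {
    private static final class Entry {
        final String value;
        final Instant expiresAt;

        Entry(String value, Instant expiresAt) {
            this.value = value;
            this.expiresAt = expiresAt;
        }
    }

    private final Map<String, Entry> entries = new ConcurrentHashMap<>();
    private final Supplier<Instant> now;
    private final Duration ttl;

    CacheAside(Supplier<Instant> now, Duration ttl) {
        this.now = now;
        this.ttl = ttl;
    }

    /** Interceptor role: the cached value, or null on a miss or an expired entry. */
    String lookup(String key) {
        Entry entry = entries.get(key);
        if (entry == null) {
            return null;
        }
        if (!now.get().isBefore(entry.expiresAt)) {
            entries.remove(key, entry); // expired: drop it and report a miss
            return null;
        }
        return entry.value;
    }

    /** Advice role: store the freshly produced body with the TTL. */
    void put(String key, String value) {
        entries.put(key, new Entry(value, now.get().plus(ttl)));
    }

    /** One request: a hit short-circuits; a miss runs the "controller" and caches its result. */
    String handle(String key, Supplier<String> controller) {
        String cached = lookup(key);
        if (cached != null) {
            return cached;
        }
        String fresh = controller.get();
        put(key, fresh);
        return fresh;
    }
}
```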

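1.6. Adding CORS Support

When testing together with the front-end system, we find that the interceptor implemented above does not support cross-origin requests, so CORS support has to be added.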