KubeEdge, EdgeMesh, and Sedna Configuration

Setting Up KubeEdge, EdgeMesh, and Sedna

Prepare the installation environment (on both the master node and the worker node)

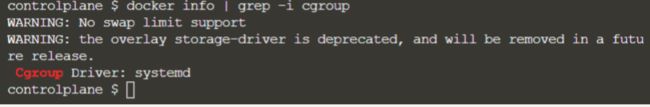

Both the edge node and the cloud node need cgroups configured

wget https://raw.githubusercontent.com/ansjin/katacoda-scenarios/main/getting-started-with-kubeedge/assets/daemon.json

mv daemon.json /etc/docker/daemon.json

systemctl daemon-reload

service docker restart

docker info | grep -i cgroup
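The last command only prints the cgroup lines and leaves the comparison to the reader. A minimal sketch of turning it into a pass/fail check, assuming the downloaded daemon.json selects the systemd cgroup driver (its contents are not shown here, so the expected value is an assumption); `cgroup_driver` is a hypothetical helper name:

```shell
# Hypothetical helper: extract the cgroup driver name from `docker info` output on stdin.
cgroup_driver() {
  sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p'
}

# Usage on a node (assumes "systemd" is the driver configured by daemon.json):
#   [ "$(docker info 2>/dev/null | cgroup_driver)" = "systemd" ] && echo "cgroup driver OK"
```

Run it on both nodes, since the kubelet and Docker must agree on the driver.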

kubeadm init --kubernetes-version=1.20.0 --pod-network-cidr=10.244.0.0/16

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

kubectl cluster-info

kubectl get nodes

# Remove the taint from the master node so that Sedna can be installed

kubectl taint nodes --all node-role.kubernetes.io/master-

If coredns is not ready at this point, apply flannel:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Download the required version of keadm, extract it into the current directory, and copy it to /usr/local/bin:

cp keadm-v1.7.2-linux-amd64/keadm/keadm /usr/local/bin/keadm
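The download and extraction steps are only described in words. A minimal sketch, assuming the archive is published on the KubeEdge GitHub releases page under the name `keadm-v<version>-linux-<arch>.tar.gz` (inferred from the directory name in the `cp` command; the URL pattern is an assumption, and `keadm_url` is a hypothetical helper):

```shell
# Build the (assumed) download URL for a keadm release archive.
keadm_url() {
  version="$1"            # e.g. 1.7.2
  arch="${2:-amd64}"      # defaults to amd64
  printf 'https://github.com/kubeedge/kubeedge/releases/download/v%s/keadm-v%s-linux-%s.tar.gz\n' \
    "$version" "$version" "$arch"
}

# Usage:
#   wget "$(keadm_url 1.7.2)"
#   tar -zxvf keadm-v1.7.2-linux-amd64.tar.gz
```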

keadm init --advertise-address=158.39.201.145

Check whether the cloud node is running

cat /var/log/kubeedge/cloudcore.log

ln -s /etc/kubeedge/cloudcore.service /etc/systemd/system/cloudcore.service

sudo service cloudcore restart
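Reading the whole cloudcore log with `cat` can be noisy. A minimal sketch of filtering it down to problems, assuming cloudcore writes klog-style lines whose first character is the severity (I, W, E, F) followed by the month and day; `klog_errors` is a hypothetical helper:

```shell
# Print only error (E) and fatal (F) lines from a klog-style log read on stdin.
# `|| true` keeps the exit status 0 when no such lines exist.
klog_errors() {
  grep -E '^[EF][0-9]{4} ' || true
}

# Usage:
#   klog_errors < /var/log/kubeedge/cloudcore.log
```

No output suggests cloudcore started without logging errors.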

Get the local token

keadm gettoken

Join the edge node to the cloud node

keadm join --cloudcore-ipport=158.39.201.145:10000 --token=8f469f894ff8cf79ac451aa32861954b6a3d03adaed980c7d03f8e187dfe8c52.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2NDA3MTAxNjV9.TRXiklCsGB-Ph4jtXt0O5YUkEsHMXpbvKPopAhpvaF0
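The token in the join command appears to be a hash followed by a JWT, and the JWT payload carries an `exp` claim, so a token copied from an earlier session may already have expired. A minimal sketch for reading that expiry, assuming the token has the form `<hash>.<header>.<payload>.<signature>` and that GNU `base64` is available; `token_expiry` is a hypothetical helper:

```shell
# Hypothetical helper: print the `exp` (Unix time) claim embedded in a keadm token.
# Assumes the token has the form <hash>.<jwt-header>.<jwt-payload>.<jwt-signature>.
token_expiry() {
  # Take the JWT payload and map base64url characters back to standard base64.
  payload=$(printf '%s' "$1" | cut -d. -f3 | tr '_-' '/+')
  # base64url omits padding; restore it so `base64 -d` accepts the input.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  printf '%s' "$payload" | base64 -d | sed -n 's/.*"exp":\([0-9]*\).*/\1/p'
}

# Usage on the cloud node:
#   date -d "@$(token_expiry "$(keadm gettoken)")"
```

If the printed time is in the past, run `keadm gettoken` again before joining.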

kubectl get node # print the node status

The join succeeded and all nodes are Ready.
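Readiness can also be checked without reading the table by eye. A minimal sketch, assuming the default `kubectl get nodes` column layout (NAME, STATUS, ...); `not_ready_nodes` is a hypothetical helper:

```shell
# Print the names of nodes whose STATUS column is not exactly "Ready".
# Expects `kubectl get nodes --no-headers` output on stdin.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage:
#   kubectl get nodes --no-headers | not_ready_nodes
```

Empty output means every node reports Ready. Cordoned nodes (`Ready,SchedulingDisabled`) are listed too, which is intentional in this sketch.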

Enable KubeEdge Logs in Cloud Side

——————————————————————————————————————————————————————————

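(PS: the following steps are optional and can be skipped on a first installation.)

By default, kubectl cannot access the logs on edge nodes, so access to the edge nodes has to be enabled here.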

wget https://raw.githubusercontent.com/kubeedge/kubeedge/master/build/tools/certgen.sh --no-check-certificate

mv certgen.sh /etc/kubeedge/certgen.sh

chmod +x /etc/kubeedge/certgen.sh

Set the cloudcore IP environment variable

export CLOUDCOREIPS=158.39.201.145

#Generate Certificates

bash /etc/kubeedge/certgen.sh stream

iptables -t nat -A OUTPUT -p tcp --dport 10350 -j DNAT --to 158.39.201.145:10003

PS: problems may occur when configuring iptables.

kubectl get cm tunnelport -nkubeedge -oyaml

apiVersion: v1
kind: ConfigMap
metadata:
  annotations:
    tunnelportrecord.kubeedge.io: '{"ipTunnelPort":{"192.168.1.16":10350, "192.168.1.17":10351},"port":{"10350":true, "10351":true}}'
  creationTimestamp: "2021-06-01T04:10:20Z"
...
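Each tunnel port recorded in the ConfigMap needs its own DNAT rule. A minimal sketch that prints one rule per `ip:port` pair without executing anything, assuming each `ipTunnelPort` entry maps a cloudcore IP to its tunnel port and that the stream port is 10003 as in the iptables command above; `dnat_rules` is a hypothetical helper:

```shell
# Print one DNAT rule per "ip:port" argument; nothing is executed.
# Each argument pairs a cloudcore IP with its tunnel port (assumed meaning of ipTunnelPort).
dnat_rules() {
  for pair in "$@"; do
    ip="${pair%%:*}"      # text before the first colon
    port="${pair##*:}"    # text after the last colon
    printf 'iptables -t nat -A OUTPUT -p tcp --dport %s -j DNAT --to %s:10003\n' \
      "$port" "$ip"
  done
}

# Usage:
#   dnat_rules 192.168.1.16:10350 192.168.1.17:10351
```

Printing the rules first makes it possible to review them before they are applied, which matters because a wrong rule can break `kubectl logs`.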

If you are not sure which iptables rules have been set and want to remove all of them, you can flush the tables.

(A wrong iptables setup will block the `kubectl logs` feature.)

The following command cleans up iptables:

```shell
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
```

——————————————————————————————————————————————————————————-

Modify the cloudcore.yaml configuration

vi /etc/kubeedge/config/cloudcore.yaml

Modify /etc/kubeedge/config/cloudcore.yaml to set:

{'cloudStream': {'enable': true}},

(PS: after setting this to true the cluster became unavailable, for reasons that are unclear; you can leave it unchanged for now.)

{'dynamicController': {'enable': true}}

pkill cloudcore

nohup cloudcore > cloudcore.log 2>&1 &

cat cloudcore.log

Modify edgecore.yaml

vi /etc/kubeedge/config/edgecore.yaml

Modify /etc/kubeedge/config/edgecore.yaml to set:

{'edgeStream': {'enable': true}},

{'edged': {'clusterDNS': '10.96.0.10'}},

{'edged': {'clusterDomain': 'cluster.local'}},

{'metaManager': {'metaServer': {'enable': true}}}

Avoid kube-proxy (skip the kube-proxy check)

vi /etc/kubeedge/edgecore.service

[Unit]
Description=edgecore.service

[Service]
Type=simple
ExecStart=/root/cmd/ke/edgecore --logtostderr=false --log-file=/root/cmd/ke/edgecore.log
Environment="CHECK_EDGECORE_ENVIRONMENT=false"

[Install]
WantedBy=multi-user.target

Restart Edgecore

systemctl daemon-reload

systemctl restart edgecore.service

Restart pods on cloud side which are pending

kubectl -n kube-system delete pods --field-selector=status.phase=Pending

Support Metrics-server in Cloud

EdgeMesh Deployment

Automatic installation:

Manual steps

(1) Enable the Local APIServer

On the cloud side, enable the dynamicController module and restart cloudcore

$ vim /etc/kubeedge/config/cloudcore.yaml

modules:
  ..
  dynamicController:
    enable: true
..

# If cloudcore is not managed by systemd, restart it with the following command (by default cloudcore is not managed by systemd)

$ pkill cloudcore ; nohup /usr/local/bin/cloudcore > /var/log/kubeedge/cloudcore.log 2>&1 &

# If cloudcore is managed by systemd, restart it with the following command

$ systemctl restart cloudcore

# OR

$ nohup /usr/local/bin/cloudcore > /var/log/kubeedge/cloudcore.log 2>&1 &

On the edge node, enable the metaServer module (if your KubeEdge version is earlier than 1.8.0, you also need to disable the edgeMesh module)

$ vim /etc/kubeedge/config/edgecore.yaml

modules:
  ..
  edgeMesh:
    enable: false
  ..
  metaManager:
    metaServer:
      enable: true
..
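After editing, the module switches can be read back from the file instead of re-opening it in an editor. A rough sketch, assuming that the first `enable:` line after a module key belongs to that module (true for the layout shown above, but not a general YAML parser); `module_enabled` is a hypothetical helper:

```shell
# Print the value of the first `enable:` line that follows the given module key.
# $1 = key name without the colon (e.g. metaServer); stdin = YAML text.
module_enabled() {
  awk -v key="$1:" '
    $1 == key               { found = 1; next }
    found && $1 == "enable:" { print $2; exit }
  '
}

# Usage on the edge node:
#   module_enabled metaServer < /etc/kubeedge/config/edgecore.yaml
#   module_enabled edgeMesh   < /etc/kubeedge/config/edgecore.yaml
```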

(2) Configure clusterDNS and clusterDomain

On the edge node, configure clusterDNS and clusterDomain, then restart edgecore

$ vim /etc/kubeedge/config/edgecore.yaml

modules:
  ..
  edged:
    # EdgeMesh's DNS module does not yet support resolving external domain names. If you want to
    # resolve external domain names inside a Pod, you can set clusterDNS to "169.254.96.16,8.8.8.8"
    clusterDNS: "169.254.96.16"
    clusterDomain: "cluster.local"
..

$ systemctl restart edgecore

Note

The clusterDNS value '169.254.96.16' comes from commonConfig.dummyDeviceIP in build/agent/kubernetes/edgemesh-agent/05-configmap.yaml. If you need to change it, keep the two values consistent.
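The consistency requirement between the two files can be checked mechanically. A minimal sketch, assuming both values appear on a plain `key: value` line in their respective files; `yaml_value` is a hypothetical helper and only matches on the key name, not on the full YAML path:

```shell
# Print the value of the first `key: value` line for the given key (stdin = YAML),
# with double quotes removed. Comment lines are skipped because their first field is "#".
yaml_value() {
  awk -v key="$1:" '$1 == key { v = $2; gsub(/"/, "", v); print v; exit }'
}

# Usage (paths as given in the text; run from the EdgeMesh source directory):
#   dns=$(yaml_value clusterDNS < /etc/kubeedge/config/edgecore.yaml | cut -d, -f1)
#   dev=$(yaml_value dummyDeviceIP < build/agent/kubernetes/edgemesh-agent/05-configmap.yaml)
#   [ "$dns" = "$dev" ] || echo "clusterDNS and dummyDeviceIP differ"
```

The `cut -d, -f1` keeps only the first address, so a value such as "169.254.96.16,8.8.8.8" still compares correctly.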

(3) Verify

On the edge node, test whether the Local APIServer is enabled

$ curl 127.0.0.1:10550/api/v1/services

{"apiVersion":"v1","items":[{"apiVersion":"v1","kind":"Service","metadata":{"creationTimestamp":"2021-04-14T06:30:05Z","labels":{"component":"apiserver","provider":"kubernetes"},"name":"kubernetes","namespace":"default","resourceVersion":"147","selfLink":"default/services/kubernetes","uid":"55eeebea-08cf-4d1a-8b04-e85f8ae112a9"},"spec":{"clusterIP":"10.96.0.1","ports":[{"name":"https","port":443,"protocol":"TCP","targetPort":6443}],"sessionAffinity":"None","type":"ClusterIP"},"status":{"loadBalancer":{}}},{"apiVersion":"v1","kind":"Service","metadata":{"annotations":{"prometheus.io/port":"9153","prometheus.io/scrape":"true"},"creationTimestamp":"2021-04-14T06:30:07Z","labels":{"k8s-app":"kube-dns","kubernetes.io/cluster-service":"true","kubernetes.io/name":"KubeDNS"},"name":"kube-dns","namespace":"kube-system","resourceVersion":"203","selfLink":"kube-system/services/kube-dns","uid":"c221ac20-cbfa-406b-812a-c44b9d82d6dc"},"spec":{"clusterIP":"10.96.0.10","ports":[{"name":"dns","port":53,"protocol":"UDP","targetPort":53},{"name":"dns-tcp","port":53,"protocol":"TCP","targetPort":53},{"name":"metrics","port":9153,"protocol":"TCP","targetPort":9153}],"selector":{"k8s-app":"kube-dns"},"sessionAffinity":"None","type":"ClusterIP"},"status":{"loadBalancer":{}}}],"kind":"ServiceList","metadata":{"resourceVersion":"377360","selfLink":"/api/v1/services"}}
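The response is long, so a scripted check is easier than reading it. A minimal sketch that only looks for the `ServiceList` kind in the reply, as seen in the output above; `is_service_list` is a hypothetical helper:

```shell
# Succeed if stdin contains a Kubernetes ServiceList JSON document.
is_service_list() {
  grep -Eq '"kind":[[:space:]]*"ServiceList"'
}

# Usage on the edge node:
#   curl -s 127.0.0.1:10550/api/v1/services | is_service_list && echo "Local APIServer OK"
```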

Install EdgeMesh automatically

helm install edgemesh \
  --set server.nodeName= \
  --set server.publicIP= \
  https://raw.githubusercontent.com/kubeedge/edgemesh/main/build/helm/edgemesh.tgz

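server.nodeName specifies the node on which edgemesh-server is deployed, and server.publicIP specifies that node's public IP. server.publicIP can be omitted, because edgemesh-server detects and configures the node's public IP automatically, but the detected value is not guaranteed to be correct.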
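Because server.publicIP is optional, the command differs depending on whether it is supplied. A minimal sketch that prints the helm command for both cases without running it; `edgemesh_helm_cmd` is a hypothetical helper, and the node name and IP in the usage lines are placeholders:

```shell
# Print the helm command for the given node name and optional public IP.
# $1 = node that should run edgemesh-server, $2 = its public IP (may be omitted).
edgemesh_helm_cmd() {
  node="$1"
  public_ip="${2:-}"
  cmd="helm install edgemesh --set server.nodeName=$node"
  if [ -n "$public_ip" ]; then
    cmd="$cmd --set server.publicIP=$public_ip"
  fi
  printf '%s %s\n' "$cmd" \
    "https://raw.githubusercontent.com/kubeedge/edgemesh/main/build/helm/edgemesh.tgz"
}

# Usage (placeholder values):
#   edgemesh_helm_cmd my-cloud-node
#   edgemesh_helm_cmd my-cloud-node 203.0.113.10
```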

Test cases:

https://edgemesh.netlify.app/zh/guide/test-case.html#http

Sedna installation:

Deploy Sedna

Currently GM is deployed as a deployment, and LC is deployed as a daemonset.

Run the one liner:

curl https://raw.githubusercontent.com/kubeedge/sedna/main/scripts/installation/install.sh | SEDNA_ACTION=create bash -

This requires network access to GitHub, since the script downloads the Sedna CRD YAML files. If your connection to GitHub is unstable, or you already have the Sedna source locally, you can try this way instead:

# SEDNA_ROOT is the sedna git source directory or cached directory

export SEDNA_ROOT=/opt/sedna

curl https://raw.githubusercontent.com/kubeedge/sedna/main/scripts/installation/install.sh | SEDNA_ACTION=create bash -

Debug

- Check the GM status:

kubectl get deploy -n sedna gm

- Check the LC status:

kubectl get ds lc -n sedna

- Check the pod status:

kubectl get pod -n sedna
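The pod listing can be reduced to the pods that need attention. A minimal sketch, assuming the default `kubectl get pod` column layout (NAME, READY, STATUS, ...); `pods_not_running` is a hypothetical helper:

```shell
# Print "name status" for pods whose STATUS column is not "Running".
# Expects `kubectl get pod -n sedna --no-headers` output on stdin.
pods_not_running() {
  awk '$3 != "Running" { print $1, $3 }'
}

# Usage:
#   kubectl get pod -n sedna --no-headers | pods_not_running
```

Empty output means the GM and LC pods are all Running.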

Uninstall Sedna

curl https://raw.githubusercontent.com/kubeedge/sedna/main/scripts/installation/install.sh | SEDNA_ACTION=delete bash -
