Python爬虫之Scrapy制作爬虫

前几天我有用过Scrapy架构编写了一篇爬虫的代码案例深受各位朋友们喜欢,今天趁着热乎在上一篇有关Scrapy制作的爬虫代码,相信有些基础的程序员应该能看的懂,很简单,废话不多说一起来看看。

前期准备:

通过爬虫语言框架制作一个爬虫程序

import scrapy

from tutorial.items import DmozItem

class DmozSpider(scrapy.Spider):

name = 'dmoz'

allowed_domains = ['dmoz.org']

start_urls = [

"http://www.dmoz.org/Computers/Programming/Languages/Python/Books/",

"http://www.dmoz.org/Computers/Programming/Languages/Python/Resources/"

]

def parse(self, response):

sel = Selector(response)

sites = sel.xpath('//ul[@class="directory-url"]/li')

for sel in sites:

item = DmozItem() # 实例化一个 DmozItem 类

item['title'] = sel.xpath('a/text()').extract()

item['link'] = sel.xpath('a/@href').extract()

item['desc'] = sel.xpath('text()').extract()

yield item

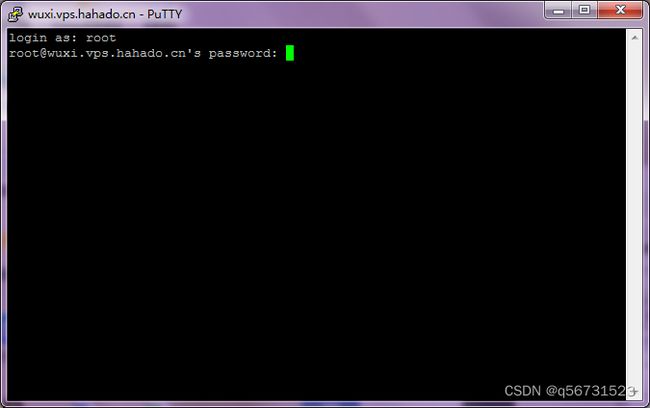

程序运行:

通过爬虫程序输入命令,执行爬虫采集目标网站

#! -*- encoding:utf-8 -*-

import base64

import sys

import random

PY3 = sys.version_info[0] >= 3

def base64ify(bytes_or_str):

if PY3 and isinstance(bytes_or_str, str):

input_bytes = bytes_or_str.encode('utf8')

else:

input_bytes = bytes_or_str

output_bytes = base64.urlsafe_b64encode(input_bytes)

if PY3:

return output_bytes.decode('ascii')

else:

return output_bytes

class ProxyMiddleware(object):

def process_request(self, request, spider):

# 爬虫ip服务器 (http://jshk.com.cn/mb/reg.asp?kefu=xjy )

proxyHost = "ip地址"

proxyPort = "端口"

# 爬虫ip验证信息

proxyUser = "username"

proxyPass = "password"

数据保存:

Scrapy爬虫方式一般分为4种,可以参考以下保存方式

json格式,默认为Unicode编码

scrapy crawl itcast -o teachers.json

json lines格式,默认为Unicode编码

scrapy crawl itcast -o teachers.jsonl

csv 逗号表达式,可用Excel打开

scrapy crawl itcast -o teachers.csv

xml格式

scrapy crawl itcast -o teachers.xml