How VirtIO Works: PCI Basics

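Contents

- PCI configuration space
- Common configuration space
- virtio configuration space
- virtio common configuration space
- virtio block device configuration space
- VirtIO-PCI initialization
- PCI initialization
- Enumeration
- Configuration
- Loading the virtio-pci driver
- Registering the PCI bus
- Registering the PCI driver
- Matching PCI devices
- Probing PCI devices
- Mapping the BAR space
- Enabling the BAR space
- Identifying capabilities
- Mapping
- Registering the virtio-pci methods
- Registering the device on the virtio bus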

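A virtio device can be implemented on top of different buses. This article covers virtio-pci devices, which are built on PCI, using virtio-blk as the running example. It first describes the PCI configuration space and the capability mechanism that serves as the hardware basis of virtio-pci, then walks through PCI device initialization and virtio-pci device initialization.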

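PCI configuration space

- As a PCI device, a virtio device must implement the configuration space defined by the PCI Local Bus spec (256 bytes at most). The first 64 bytes are laid out by the spec and are called the predefined header. Within it, the first 16 bytes are identical for every type of PCI device, while the layout of the rest depends on the device type. I refer to those first 16 bytes as the common configuration space.

Common configuration space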

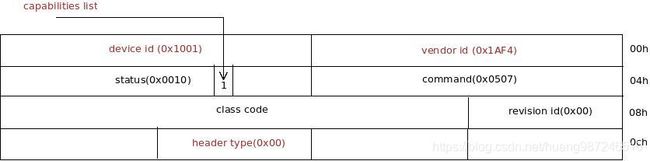

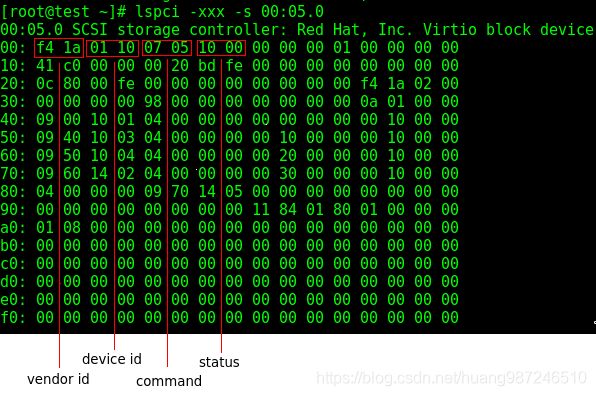

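- Four fields in the first 16 bytes are used to identify a virtio device:

- vendor id: identifies the manufacturer of the PCI device. Here it is 0x1af4, which belongs to Red Hat.

- device id: the product ID under that vendor. For a legacy virtio-blk device it is 0x1001.

- revision id: the device revision, used at the vendor's discretion. It is not used here.

- header type: the kind of PCI device: 0x00 (ordinary device), 0x01 (PCI bridge), 0x02 (CardBus bridge). A virtio device is an ordinary device, so the value is 0x00.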

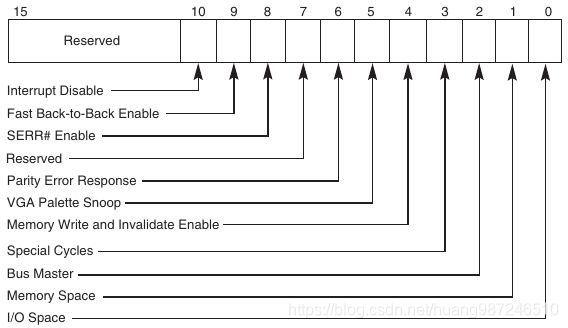

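- The command field controls the PCI device by switching individual functions on and off. For the virtio-blk device it reads 0x0507 = 0b0101 0000 0111. The meaning of each bit is shown in the figure below; the low three bits are:

- I/O Space: if the device implements I/O space, this bit controls whether it responds to I/O space accesses on the bus. If the device has no I/O space, the bit is not writable.

- Memory Space: if the device implements memory space, this bit controls whether it responds to memory space accesses on the bus. If the device has no memory space, the bit is not writable.

- Bus Master: controls whether the device is allowed to act as a master on the bus.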

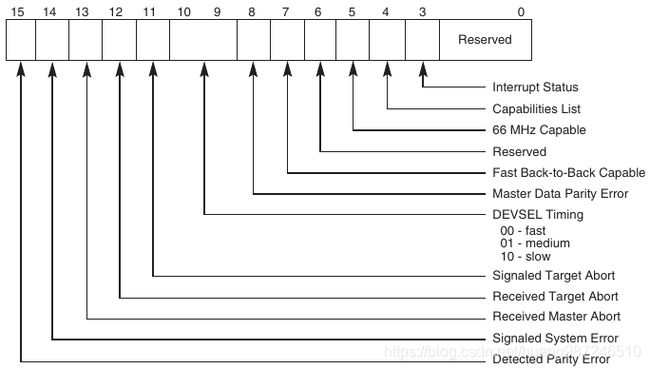
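To make the 0x0507 value concrete, here is a minimal sketch that checks individual command bits. The bit positions come from the PCI Local Bus spec; the helper name `cmd_has` and the macro names are my own and are not taken from the kernel.

```c
#include <stdbool.h>
#include <stdint.h>

/* Bit positions in the PCI command register (PCI Local Bus spec). */
#define CMD_IO_SPACE      (1u << 0)
#define CMD_MEMORY_SPACE  (1u << 1)
#define CMD_BUS_MASTER    (1u << 2)
#define CMD_SERR_ENABLE   (1u << 8)
#define CMD_INTX_DISABLE  (1u << 10)

/* Hypothetical helper: true if every bit in `mask` is set in `command`. */
static bool cmd_has(uint16_t command, uint16_t mask)
{
    return (command & mask) == mask;
}
```

Applied to 0x0507, the three low bits described above are all set, together with SERR# Enable (bit 8) and Interrupt Disable (bit 10).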

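- The status field records the state of the PCI device. For virtio-blk it reads 0x10 = 0b10000, i.e. only bit 4 is set. The meaning of each bit is shown in the figure below: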

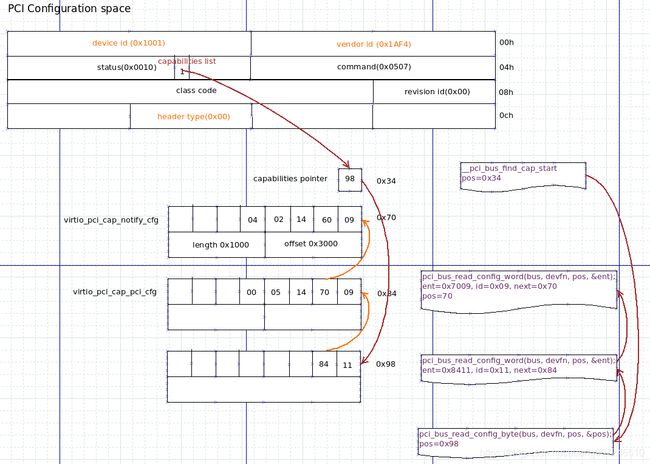

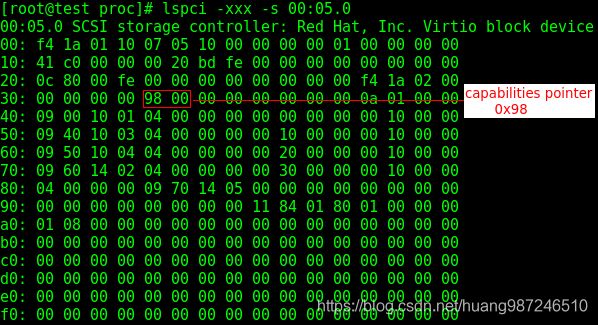

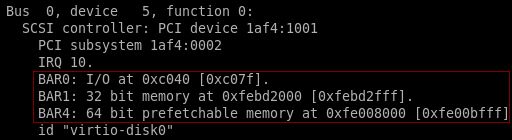

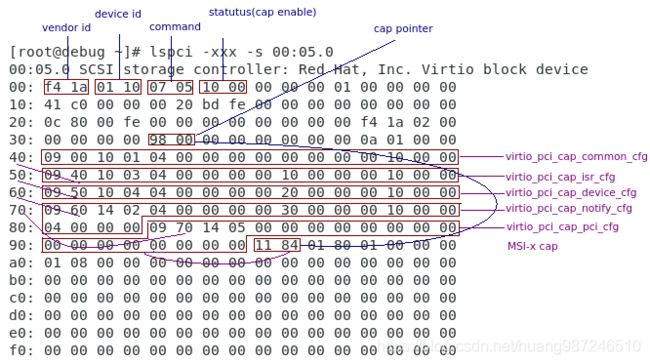

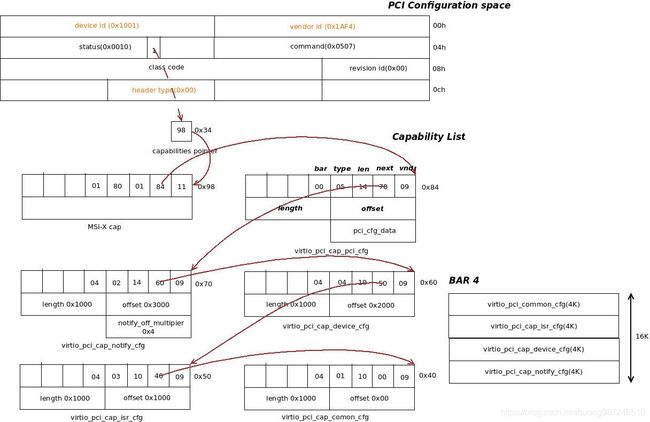

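One of the status bits is Capabilities List (bit 4). It is the flag the PCI spec defines for additional register sets: it allows a device to place extra registers after the predefined header. These registers are organized as a capability list and implement specific functions. The additional space starts after the 64-byte header and must stay within the 256-byte configuration space. virtio-blk sets this bit, so after its header there is a region that implements virtio-blk-specific functions. A value of 1 means the capabilities pointer field (offset 0x34) holds the start of the additional register sets, expressed as an offset within the device's configuration space.

- The contents of the virtio-blk configuration space can be inspected with the lspci command, as shown below.

virtio configuration space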

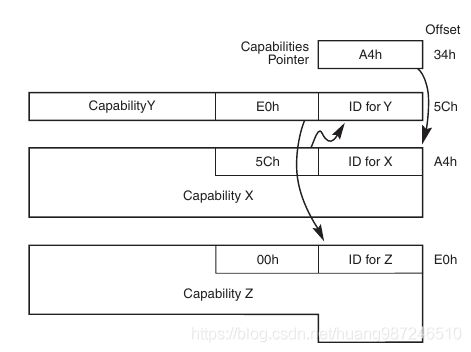

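- Beyond the common configuration space required by the PCI spec, a virtio-pci device defines its own configuration structures to implement virtio-pci functionality. A PCI device uses the Capabilities List bit of the status field to signal that it has additional register sets after the 64-byte predefined header. The capabilities pointer holds the head of the linked list of register sets; every pointer in the list is an offset within configuration space.

- The capabilities list described in the PCI spec has the following format: the first byte holds the capability ID, which identifies the kind of capability implemented by the registers that follow, and the second byte holds the offset of the next capability. Capability IDs are listed in Appendix H of the PCI 3.0 spec. virtio-blk implements two kinds of capability: MSI-X (Message Signaled Interrupts Extended), ID 0x11, and Vendor Specific, ID 0x09. The latter exists precisely so that vendors can implement their own functions, and virtio-blk builds on it.

- Based on its needs, virtio-pci lays out its capabilities as shown below. There are 6 capabilities: 5 describe virtio-pci structures and 1 describes MSI-X. Only the virtio-pci ones are covered here. In the figure, the right side shows the capability layout of virtio-blk and the left side shows the layout of the physical address space each capability points to. The core functions of a virtio-pci device (initialization, front-end/back-end notification, data transfer) are all implemented through these 5 capabilities.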

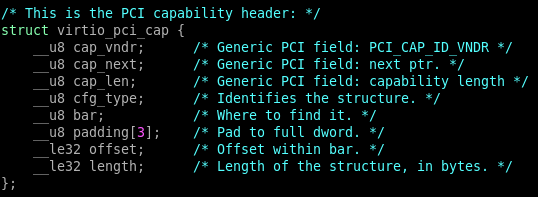

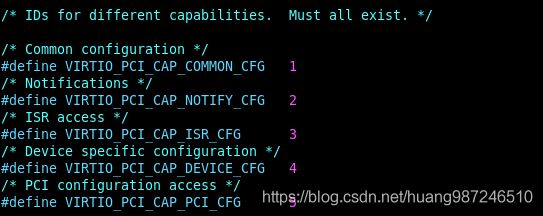
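The capability list walk described above can be sketched in user space against a 256-byte snapshot of configuration space. This is only an illustration under stated assumptions: `find_capability` is a hypothetical helper, not a kernel function, and the loop bound of 48 is an arbitrary guard against malformed lists.

```c
#include <stddef.h>
#include <stdint.h>

#define PCI_STATUS            0x06  /* 16-bit status register */
#define PCI_STATUS_CAP_LIST   0x10  /* bit 4: capabilities list present */
#define PCI_CAPABILITY_LIST   0x34  /* offset of the capabilities pointer */
#define PCI_CAP_ID_VNDR       0x09  /* Vendor Specific */
#define PCI_CAP_ID_MSIX       0x11  /* MSI-X */

/*
 * Hypothetical helper: search a 256-byte snapshot of configuration space
 * for the first capability with the given ID. Returns its offset, or 0.
 */
static uint8_t find_capability(const uint8_t cfg[256], uint8_t cap_id)
{
    uint16_t status = (uint16_t)(cfg[PCI_STATUS] | (cfg[PCI_STATUS + 1] << 8));
    uint8_t pos;
    int ttl = 48; /* bound the walk so a malformed list cannot loop forever */

    if (!(status & PCI_STATUS_CAP_LIST))
        return 0;

    pos = cfg[PCI_CAPABILITY_LIST] & 0xFC; /* entries are dword aligned */
    while (pos >= 0x40 && ttl-- > 0) {
        if (cfg[pos] == cap_id)      /* byte 0: capability ID */
            return pos;
        pos = cfg[pos + 1] & 0xFC;   /* byte 1: offset of next capability */
    }
    return 0;
}
```

Feeding it a dump of a virtio-blk configuration space (for example one captured from `lspci -xxx`) with `PCI_CAP_ID_VNDR` would return the offset of the first vendor-specific capability.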

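- According to the virtio spec, a virtio-pci capability must be laid out as follows. vndr is the capability type, next is the position of the next capability in PCI configuration space, and len is the length of this capability structure. type takes the values below and subdivides virtio-pci capabilities into several kinds.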

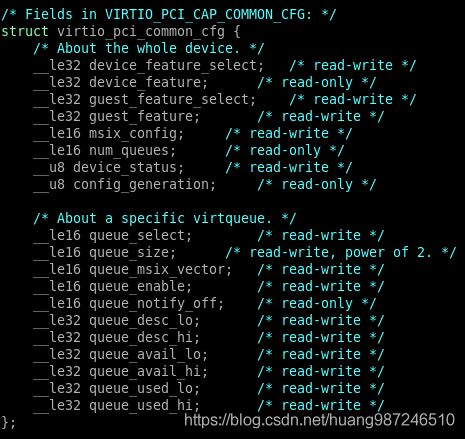

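virtio common configuration space

- The most important structure reached through the capabilities is virtio_pci_common_cfg, the main bridge between the virtio front end and back end. The common config has two parts: the first is for device-wide configuration, the second is for virtqueues. During initialization the virtio driver uses the first part to negotiate with the back end, for example the supported features (guest_feature), the device status during initialization (device_status), and the number of virtqueues (num_queues). The second part is used to set up data transfer between front end and back end. The role of both parts in virtio initialization and data transfer is covered in detail later.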
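For reference, here is a sketch of the virtio_pci_common_cfg layout as I recall it from the virtio 1.0 spec and the Linux UAPI header. It is an assumption-laden user-space mirror: fixed-width integer types stand in for the kernel's little-endian types, and the field names should be checked against your kernel's headers before relying on them.

```c
#include <stddef.h>
#include <stdint.h>

/* Sketch of the common configuration layout; all fields are little-endian. */
struct virtio_pci_common_cfg {
    /* Part 1: about the whole device */
    uint32_t device_feature_select; /* read-write */
    uint32_t device_feature;        /* read-only */
    uint32_t guest_feature_select;  /* read-write */
    uint32_t guest_feature;         /* read-write */
    uint16_t msix_config;           /* read-write */
    uint16_t num_queues;            /* read-only */
    uint8_t  device_status;         /* read-write */
    uint8_t  config_generation;     /* read-only */

    /* Part 2: about the virtqueue chosen by queue_select */
    uint16_t queue_select;          /* read-write */
    uint16_t queue_size;            /* read-write */
    uint16_t queue_msix_vector;     /* read-write */
    uint16_t queue_enable;          /* read-write */
    uint16_t queue_notify_off;      /* read-only */
    uint32_t queue_desc_lo;         /* read-write */
    uint32_t queue_desc_hi;         /* read-write */
    uint32_t queue_avail_lo;        /* read-write */
    uint32_t queue_avail_hi;        /* read-write */
    uint32_t queue_used_lo;         /* read-write */
    uint32_t queue_used_hi;         /* read-write */
};
```

Every field is naturally aligned, so the structure has no padding and occupies 56 bytes, with device_status at offset 20 and the per-virtqueue part starting at offset 22.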

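virtio block device configuration space

- TODO

VirtIO-PCI initialization

- virtio-blk is built on virtio-pci, and virtio-pci is built on PCI, so the story of virtio-blk initialization starts with PCI device initialization.

PCI initialization

- The PCI driver framework has two initialization entry points in the kernel:

- arch_initcall(pci_arch_init): architecture-specific initialization, including setting up I/O address 0xCF8.

- subsys_initcall(pci_subsys_init): PCI subsystem initialization. This stage enumerates the devices on the PCI bus tree; the host bridge allocates address space for each PCI device and the result is written into its BAR registers. Architecture initialization is not covered here. This chapter focuses on enumeration and configuration of the PCI bus tree.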

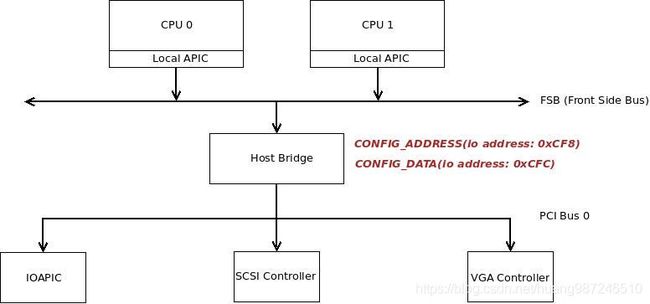

Enumeration

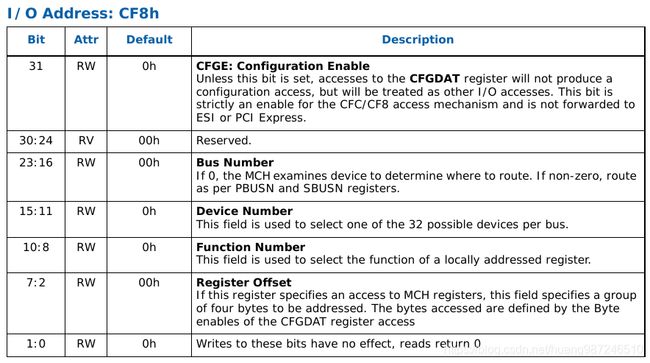

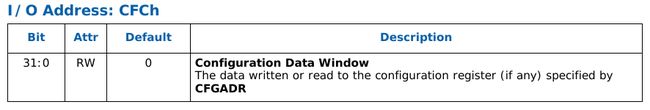

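- Enumeration requires that PCI devices be reachable. The PCI spec provides that, before a device has been assigned any address, the CPU can read and write it by writing an address and data to two I/O ports. As the figure below shows, these two I/O ports correspond to two registers on the host bridge and can be accessed directly with I/O instructions.

- Both registers live on the host bridge. The host bridge datasheet (Intel 5000X MCH, section 3.5) gives the meaning of each register field, shown in the figure below. To access a PCI device, the CPU first writes the 4-byte bus/slot/function address to 0xCF8 and then reads or writes data through I/O port 0xCFC. Initialization of the 0xCF8 address space happens in pci_arch_init.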

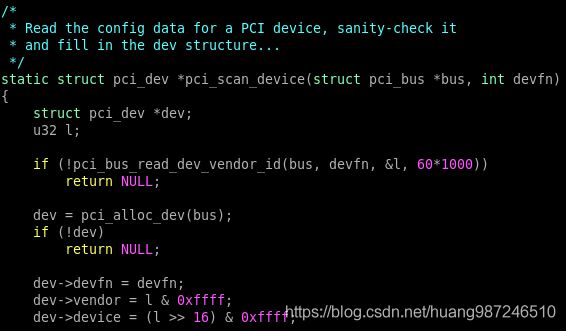
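As an illustration of the address written to 0xCF8, the sketch below composes the 32-bit value used by PCI configuration mechanism #1. The function name `pci_conf1_address` is hypothetical; the bit layout (enable bit 31, bus in bits 23:16, slot in 15:11, function in 10:8, dword-aligned register in 7:2) is the standard one.

```c
#include <stdint.h>

#define PCI_CONFIG_ADDRESS 0xCF8 /* I/O port: address register */
#define PCI_CONFIG_DATA    0xCFC /* I/O port: data register */

/*
 * Build the 32-bit value written to 0xCF8 for configuration mechanism #1:
 * bit 31 enable, bits 23:16 bus, 15:11 device (slot), 10:8 function,
 * bits 7:2 dword-aligned register offset.
 */
static uint32_t pci_conf1_address(uint8_t bus, uint8_t slot,
                                  uint8_t func, uint8_t reg)
{
    return 0x80000000u
         | ((uint32_t)bus << 16)
         | ((uint32_t)(slot & 0x1F) << 11)
         | ((uint32_t)(func & 0x07) << 8)
         | (reg & 0xFCu);
}
```

On real hardware the value would be written to port 0xCF8 with an `outl`-style instruction and the register contents then read from 0xCFC; that part needs I/O privileges and is left out here.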

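- With a way to read and write PCI device registers, the CPU can access any register in the configuration space of any device on any PCI bus. It reads the vendor id from the configuration space of each slot: all ones means no device is present, while a real value means a function exists in that slot, in which case the kernel goes on to check whether the device is multifunction. Searching bus by bus in this way, it enumerates the PCI devices in every slot until the whole bus tree has been traversed. Two steps involve configuration space accesses: identifying a device requires reading the vendor id and device id, and determining the size of a BAR requires reading and writing the BAR register. Enumeration happens in pci_subsys_init, as follows: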

pci_subsys_init
    x86_init.pci.init => x86_default_pci_init
        pci_legacy_init
            pcibios_scan_root
                x86_pci_root_bus_resources // allocate resources for the host bridge; typically the 64K I/O space and the memory space are carved out here
                pci_scan_root_bus // enumerate the devices on the bus tree
                    pci_create_root_bus // create the host bridge
                    pci_scan_child_bus // scan every device on the bus tree; recurse into any PCI bridge
                        pci_scan_slot
                            pci_scan_single_device // scan a device: read vendor id and device id
                                pci_scan_device
                                    pci_setup_device
                                        pci_read_bases
                                            __pci_read_base // read the BAR size

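- Once the size of a device's BAR is known, a range of physical addresses can be requested from the host bridge. On success, the PCI device obtains a range of PCI-domain physical addresses at least as large as the BAR. Note that the host bridge owns all the I/O and memory resources available to the devices on its PCI bus; "resource" here means physical address space. A key data structure is shown below: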

/*

* Resources are tree-like, allowing

* nesting etc..

*/

struct resource {

resource_size_t start;

resource_size_t end;

const char *name;

unsigned long flags;

unsigned long desc;

struct resource *parent, *sibling, *child;

};

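A resource represents one range, either an I/O address range or a memory address range. For every device enumerated on the bus tree, the host bridge assigns a suitable resource sized to the device's BAR; the resource is a range of I/O or memory physical addresses, and it is where the value in the device's BAR register comes from. The flow is as follows:

pci_read_bases
    /* walk each BAR register, read its contents, and obtain a physical address range for it */
    for (pos = 0; pos < howmany; pos++) {
        struct resource *res = &dev->resource[pos]; // the address range is stored here
        reg = PCI_BASE_ADDRESS_0 + (pos << 2);
        pos += __pci_read_base(dev, pci_bar_unknown, res, reg);
    }

__pci_read_base
    region.start = l64;
    region.end = l64 + sz64;
    /* obtain the resource and store it in res; region holds the BAR range of the PCI device */
    pcibios_bus_to_resource(dev->bus, res, &region);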

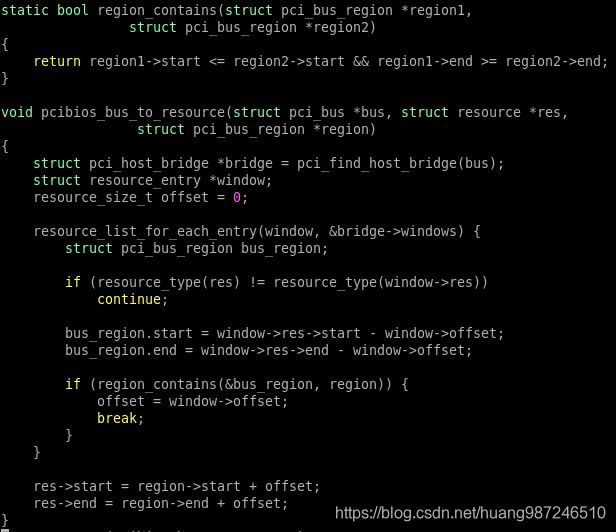

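Looking at this function: it first finds the host bridge that the PCI device sits under. The pci_host_bridge.windows list holds all the resources managed by the host bridge; the function walks this list, finds the window that covers the device's range, and uses it to fill in the resource handed to the PCI device.

At this point the PCI device has a physical address range in the PCI domain. After the scan finishes, the kernel configures each PCI device with this address in turn.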

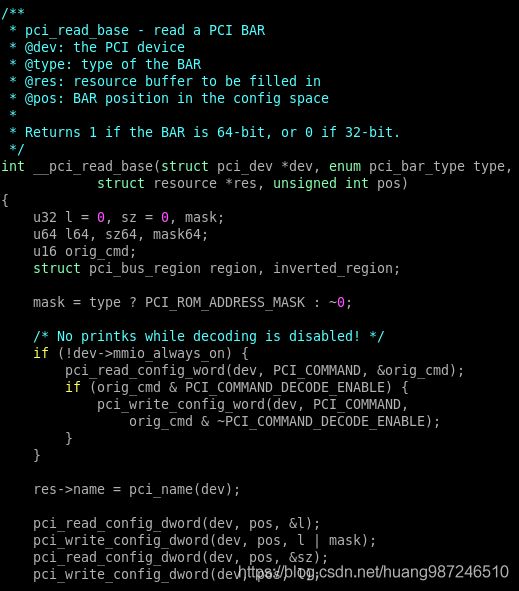

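- Read the original 32-bit contents of the BAR register and save them

- Write 1 to every bit of the BAR register

- Read the BAR register again. Ignoring the low attribute bits, the position of the lowest bit that reads back as 1 gives the BAR size. For example, if the lowest such bit is bit 12, the BAR size is 2^12 = 4KB

- Write the original contents back to the BAR register to restore its state for later reads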
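The size calculation in the third step can be sketched as a pure function over the value read back after writing all ones. This is a simplified illustration for 32-bit BARs only; `bar_size_from_readback` is a hypothetical name, and the kernel's own __pci_read_base handles more cases (64-bit BARs, ROM BARs, quirks).

```c
#include <stdint.h>

/*
 * Given the value read back from a 32-bit BAR after writing all ones,
 * return the size of the region in bytes (0 if the BAR is unimplemented).
 */
static uint32_t bar_size_from_readback(uint32_t readback)
{
    /* Bit 0 selects I/O (1) or memory (0); mask off the attribute bits. */
    uint32_t mask = (readback & 1u) ? ~0x3u : ~0xFu;
    uint32_t addr_bits = readback & mask;

    if (addr_bits == 0)
        return 0;
    /* Isolate the lowest writable address bit: its weight is the size. */
    return addr_bits & (~addr_bits + 1u);
}
```

For instance, a memory BAR that reads back 0xFFFFF000 has bit 12 as its lowest writable address bit, which corresponds to the 4KB example above.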

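- After enumeration, the kernel has recorded the basic hardware information of every PCI device on the bus tree, such as vendor id and device id, and has built an in-memory topology of all devices. Most of each pci_dev structure is filled in; the rest, such as the device's driver (pci_dev.driver), has not been found yet at this point.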

/*

* The pci_dev structure is used to describe PCI devices.

*/

struct pci_dev {

struct list_head bus_list; /* node in per-bus list */

struct pci_bus *bus; /* bus this device is on */

struct pci_bus *subordinate; /* bus this device bridges to */

void *sysdata; /* hook for sys-specific extension */

struct proc_dir_entry *procent; /* device entry in /proc/bus/pci */

struct pci_slot *slot; /* Physical slot this device is in */

unsigned int devfn; /* encoded device & function index */

unsigned short vendor;

unsigned short device;

unsigned short subsystem_vendor;

unsigned short subsystem_device;

unsigned int class; /* 3 bytes: (base,sub,prog-if) */

u8 revision; /* PCI revision, low byte of class word */

u8 hdr_type; /* PCI header type (`multi' flag masked out) */

#ifdef CONFIG_PCIEAER

u16 aer_cap; /* AER capability offset */

#endif

u8 pcie_cap; /* PCIe capability offset */

u8 msi_cap; /* MSI capability offset */

u8 msix_cap; /* MSI-X capability offset */

u8 pcie_mpss:3; /* PCIe Max Payload Size Supported */

u8 rom_base_reg; /* which config register controls the ROM */

u8 pin; /* which interrupt pin this device uses */

u16 pcie_flags_reg; /* cached PCIe Capabilities Register */

unsigned long *dma_alias_mask;/* mask of enabled devfn aliases */

struct pci_driver *driver; /* which driver has allocated this device */

u64 dma_mask; /* Mask of the bits of bus address this

device implements. Normally this is

0xffffffff. You only need to change

this if your device has broken DMA

or supports 64-bit transfers. */

......

};

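Configuration

- After enumeration the kernel knows the topology of the PCI bus tree and starts configuring every device on it. Based on the BAR sizes read during enumeration, the host bridge divides its address space into ranges of different sizes; put simply, each PCI device is given a range of PCI bus-domain addresses large enough for its needs. Once each device's range is settled, its start address is written into the BAR register. This is configuration. Note that the start address written to the BAR is a PCI bus-domain address, not a CPU-domain address. Configuration is done in pci_subsys_init. The kernel first walks all devices on the bus tree and checks the resources requested during enumeration for conflicts between devices, since it must guarantee that resources are assigned consistently and correctly to every PCI device. After the check it allocates the resources, and finally it writes the start of the allocated range into each device's BAR register. The flow is as follows: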

pci_subsys_init
    pcibios_resource_survey
        pcibios_allocate_bus_resources(&pci_root_buses); // first split the whole resource into per-bus ranges
        pcibios_allocate_resources(0); // check that resources are consistent and do not conflict
        pcibios_allocate_resources(1);
    pcibios_assign_resources(); // write addresses into the BAR registers
        pci_assign_resource
            _pci_assign_resource
                __pci_assign_resource
                    pci_bus_alloc_resource
            pci_update_resource
                pci_std_update_resource
                    pci_write_config_dword(dev, reg, new) // write the start address into the BAR register

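At first glance the address written into the BAR looks like an ordinary system physical address, but it is actually a PCI-domain physical address, which is distinct from a CPU-domain physical address. Addresses in the two domains are translated by the host bridge. On x86 the host bridge takes the easy route and maps them one-to-one, so the two address spaces look identical. On other architectures (for example PowerPC) the addresses differ.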
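The relationship between the two address domains can be sketched as a per-window offset. The structure and function below are hypothetical simplifications, loosely modeled on how a host bridge window carries a CPU-to-bus offset; the window values used to exercise it are made up for illustration.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* One host bridge window: a CPU address range plus the CPU-to-bus offset. */
struct bridge_window {
    uint64_t cpu_start;
    uint64_t cpu_end;   /* inclusive */
    uint64_t offset;    /* cpu address = bus address + offset */
};

/*
 * Translate a PCI bus address into a CPU address by finding the window
 * whose bus-side range contains it. Returns false if no window matches.
 */
static bool bus_to_cpu(const struct bridge_window *w, size_t n,
                       uint64_t bus_addr, uint64_t *cpu_addr)
{
    for (size_t i = 0; i < n; i++) {
        uint64_t bus_start = w[i].cpu_start - w[i].offset;
        uint64_t bus_end = w[i].cpu_end - w[i].offset;

        if (bus_addr >= bus_start && bus_addr <= bus_end) {
            *cpu_addr = bus_addr + w[i].offset;
            return true;
        }
    }
    return false;
}
```

With an offset of 0, as on x86, the bus address and the CPU address come out identical, which is exactly why the two are easy to confuse. With a non-zero offset the same bus address lands somewhere else in the CPU's address map.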

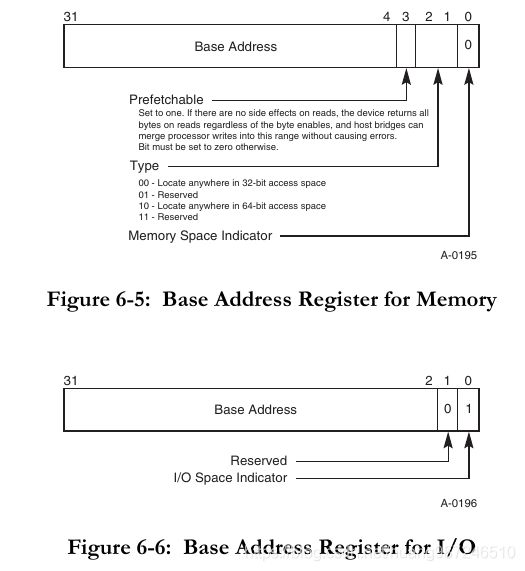

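- Below is the format of a BAR register in the virtio-blk device's configuration space, taken from section 6.2.5 of the PCI 3.0 spec. After configuration, a BAR holds the PCI bus-domain start address of the region. Its low bits describe attributes of the region and must be masked to zero when extracting the address: the low 4 bits for a memory BAR, the low 2 bits for an I/O BAR. Bit 0 tells which kind of CPU-domain space the BAR maps into: 1 for I/O space, 0 for memory space. For memory space, bits [2:1] encode the address width: 00 means the region is mapped into the 32-bit memory address space, 10 means it is mapped into the 64-bit memory address space. Bit 3 marks the region as prefetchable.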

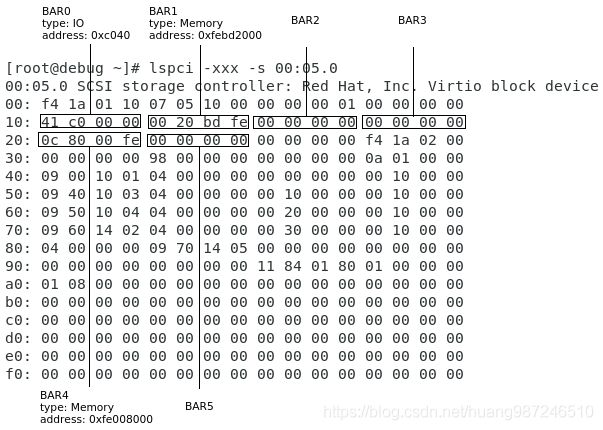

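- Below are typical BAR register contents for a virtio-blk device:

- BAR0: 0xC041. Masking the low attribute bits gives the BAR0 start address 0xC040; bit 0 shows that this is an I/O space region

- BAR1: 0xFEBD2000. The BAR1 start address is 0xFEBD2000; this is a memory space region

- BAR4: 0xFE00800C. Masking the low 4 bits gives the BAR4 start address 0xFE008000; this is a 64-bit, prefetchable memory space region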
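The three values above can be decoded mechanically. The following is a small sketch with hypothetical names (`struct bar_info`, `decode_bar`); it only looks at the low 32 bits of a BAR and does not combine the upper half of a 64-bit BAR.

```c
#include <stdbool.h>
#include <stdint.h>

struct bar_info {
    bool     is_io;        /* bit 0: 1 = I/O space, 0 = memory space */
    bool     is_64bit;     /* memory only: bits [2:1] == 10b */
    bool     prefetchable; /* memory only: bit 3 */
    uint32_t base;         /* low 32 bits of the start address */
};

static struct bar_info decode_bar(uint32_t bar)
{
    struct bar_info info = { false, false, false, 0 };

    if (bar & 0x1u) {
        info.is_io = true;
        info.base = bar & ~0x3u;   /* I/O BAR: low 2 bits are attributes */
    } else {
        info.is_64bit = ((bar >> 1) & 0x3u) == 0x2u;
        info.prefetchable = (bar & 0x8u) != 0;
        info.base = bar & ~0xFu;   /* memory BAR: low 4 bits are attributes */
    }
    return info;
}
```

Decoding 0xC041 yields an I/O region at 0xC040, 0xFEBD2000 a 32-bit memory region, and 0xFE00800C a 64-bit prefetchable memory region whose low address half is 0xFE008000.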

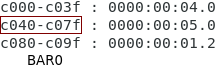

- 在系统的IO地址空间中,可以看到BAR0占用的IO地址,

cat /proc/ioports

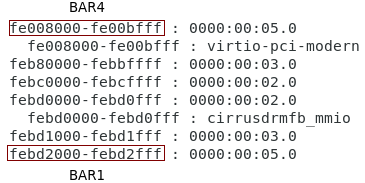

- 在系统的内存空间中,可以看到BAR1和BAR4占用的内存空间,

cat /proc/iomem

- 从主机上可以看到qemu统计的virtio-blk设备BAR空间的使用情况,

virsh qemu-monitor-command vm --hmp info pci

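- A few points about PCI device configuration are easy to confuse. Here is my understanding; please leave a comment if anything is wrong:

- During enumeration, software accesses configuration space registers by writing to specific registers, but the agent that actually issues the read or write is the host bridge, which puts a transaction with command type Configuration Read/Write on the PCI bus. After configuration, software can read and write the memory associated with a BAR directly with ordinary memory access instructions such as mov, and the host bridge then puts a transaction with command type I/O Read/Write or Memory Read/Write on the PCI bus.

- After a PCI device is configured, the BAR register holds the start address of the associated region. This is a PCI bus-domain physical address, not a CPU-domain physical address. The two values happen to be equal, but only because the x86 host bridge translates one-to-one, which creates the illusion that they are the same thing. On other architectures such as PowerPC, the CPU must go through host bridge address translation to reach a PCI bus-domain address, and the two addresses differ.

- After a PCI device is configured, software appears to access the BAR region just like memory, but when the CPU performs the access it hands the address to the host bridge, which carries out the access. In that sense it is the host bridge, not the CPU, that accesses the BAR region. From start to finish the CPU never manages the BAR region directly; it can only do so indirectly through the host bridge.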

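Loading the virtio-pci driver

- The lowest layer under virtio-blk is PCI, with virtio-pci on top of it. The initialization walkthrough therefore starts with registration of the PCI bus, then virtio-pci, then virtio-blk, going up layer by layer. Loading of the virtio-blk driver is left for the next chapter.

- Linux device drivers are built on a bus/device/driver framework. This design decouples devices from board-specific information, so devices and drivers can be loaded flexibly and changes in board information are hidden. When the kernel is ported from one platform to another, the bus/device/driver mechanism lets driver loading adapt automatically, and drivers do not have to be rewritten because the on-board devices changed.

- In the driver framework, the bus is the bridge between devices and drivers, so it is registered first during boot. The job of the bus is to let a registered device find its driver and a registered driver find its devices. This process is called binding a device to a driver.

- Two kinds of events on a bus trigger binding. First, when device_register registers a device on a bus, the kernel appends the device to the tail of the bus's device list and also tries to bind it against every driver on the bus. Second, when driver_register registers a driver on its bus, the kernel appends the driver to the tail of the bus's driver list and also tries to bind it against every device on the bus.

- A virtio-pci device is registered on the PCI bus, so its driver must be a driver on the PCI bus. The following sections cover registration of the PCI bus, how the bus binds devices and drivers, and finally device probing.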
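The two binding triggers can be illustrated with a toy model. Everything here is hypothetical and drastically simplified: the `toy_` names are invented, matching is done by comparing name strings, and none of the locking, probing or sysfs work of the real driver core is modeled.

```c
#include <stddef.h>
#include <string.h>

#define MAX_ITEMS 8

struct toy_driver { const char *name; };
struct toy_device { const char *name; struct toy_driver *driver; };

struct toy_bus {
    struct toy_device *devices[MAX_ITEMS];
    struct toy_driver *drivers[MAX_ITEMS];
    size_t ndev, ndrv;
};

/* Toy match rule: a driver handles devices that share its name. */
static int toy_match(const struct toy_device *dev, const struct toy_driver *drv)
{
    return strcmp(dev->name, drv->name) == 0;
}

/* Event 1: a device is added, so try every driver already on the bus. */
static void toy_device_register(struct toy_bus *bus, struct toy_device *dev)
{
    if (bus->ndev == MAX_ITEMS)
        return;
    bus->devices[bus->ndev++] = dev;
    for (size_t i = 0; i < bus->ndrv && !dev->driver; i++)
        if (toy_match(dev, bus->drivers[i]))
            dev->driver = bus->drivers[i];
}

/* Event 2: a driver is added, so try every unbound device on the bus. */
static void toy_driver_register(struct toy_bus *bus, struct toy_driver *drv)
{
    if (bus->ndrv == MAX_ITEMS)
        return;
    bus->drivers[bus->ndrv++] = drv;
    for (size_t i = 0; i < bus->ndev; i++)
        if (!bus->devices[i]->driver && toy_match(bus->devices[i], drv))
            bus->devices[i]->driver = drv;
}
```

Whichever side arrives second completes the binding: a device registered before its driver stays unbound until the driver shows up, and a device registered afterwards is bound immediately.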

Registering the PCI bus

- The PCI bus declares a bus_type structure named pci_bus_type, initialized with the following values:

struct bus_type pci_bus_type = {

.name = "pci",

.match = pci_bus_match,

.uevent = pci_uevent,

.probe = pci_device_probe,

.remove = pci_device_remove,

......

};

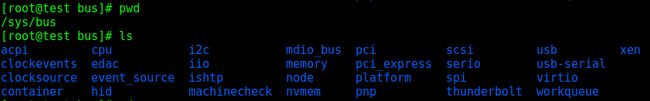

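- When the PCI module is loaded into the kernel, it calls bus_register to register pci_bus_type with the top-level bus object of the driver core. This creates a pci directory under /sys/bus, and then two directories under /sys/bus/pci: devices and drivers. The code is as follows:

pci_driver_init
    bus_register(&pci_bus_type) // the PCI bus data structure
        priv->subsys.kobj.kset = bus_kset; // point at the kset that represents the top-level bus
        priv->devices_kset = kset_create_and_add("devices", NULL, &priv->subsys.kobj);
        priv->drivers_kset = kset_create_and_add("drivers", NULL, &priv->subsys.kobj);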

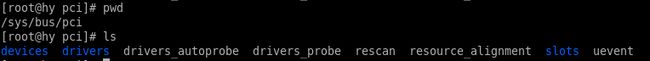

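The resulting pci directory is as follows:

The devices and drivers directories that were created are as follows:

- Once the PCI bus is registered, one of its important jobs is binding devices to drivers. When a PCI device is registered on the bus, the bus walks all of its drivers and calls the match function to decide whether a given driver and device pair up. The match flow is described in detail in a later section.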

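Registering the PCI driver

- Every driver on the PCI bus must define a pci_driver variable. This structure embeds a device_driver structure, which is initialized when the PCI driver is registered, as follows: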

static struct pci_driver virtio_pci_driver = {

.name = "virtio-pci",

.id_table = virtio_pci_id_table,

.probe = virtio_pci_probe,

.remove = virtio_pci_remove,

......

};

module_pci_driver(virtio_pci_driver)
    pci_register_driver
        __pci_register_driver

int __pci_register_driver(struct pci_driver *drv, struct module *owner,
                          const char *mod_name)
{
    /* initialize common driver fields */
    drv->driver.name = drv->name;
    drv->driver.bus = &pci_bus_type; // point the driver's bus at pci_bus_type
    drv->driver.owner = owner;
    drv->driver.mod_name = mod_name;
    drv->driver.groups = drv->groups;

    spin_lock_init(&drv->dynids.lock);
    INIT_LIST_HEAD(&drv->dynids.list);

    /* register with core */
    return driver_register(&drv->driver); // register with the driver core
}

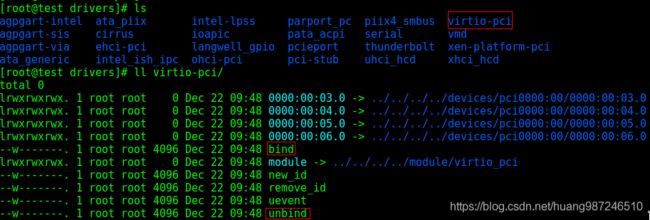

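- PCI driver registration calls the driver core interface driver_register, which creates the corresponding directory and attribute files in sysfs and gives user space an interface for binding and unbinding the driver. The flow is as follows:

driver_register
    driver_find // check whether the same driver already exists on the bus, to prevent double registration
    bus_add_driver
        driver_create_file(drv, &driver_attr_uevent) // create the uevent attribute file in the virtio-pci directory
        add_bind_files(drv) // create the bind/unbind attribute files in the virtio-pci directory

/* driver_attr_uevent is defined through the macro below; other driver attribute files are similar */
static DRIVER_ATTR_WO(uevent);

#define DRIVER_ATTR_WO(_name) \
    struct driver_attribute driver_attr_##_name = __ATTR_WO(_name)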

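The unbind attribute file deserves a closer look. It gives the user an interface for detaching the driver: when the user writes the address of a PCI device into the unbind file, the kernel unbinds that device from its driver, the reverse of binding. The corresponding function is unbind_store: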

/* Manually detach a device from its associated driver. */
static ssize_t unbind_store(struct device_driver *drv, const char *buf,
                            size_t count)
{
    struct bus_type *bus = bus_get(drv->bus); // find the bus the driver is on
    struct device *dev;
    int err = -ENODEV;

    dev = bus_find_device_by_name(bus, NULL, buf); // look up the kernel device named in buf
    if (dev && dev->driver == drv) { // make sure this driver is the one bound to the device
        if (dev->parent) /* Needed for USB */
            device_lock(dev->parent);
        device_release_driver(dev); // unbind
        if (dev->parent)
            device_unlock(dev->parent);
        err = count;
    }
    put_device(dev);
    bus_put(bus);
    return err;
}
static DRIVER_ATTR_IGNORE_LOCKDEP(unbind, S_IWUSR, NULL, unbind_store);

#define DRIVER_ATTR_IGNORE_LOCKDEP(_name, _mode, _show, _store) \
    struct driver_attribute driver_attr_##_name = \
        __ATTR_IGNORE_LOCKDEP(_name, _mode, _show, _store)

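Back to virtio-pci driver registration: once the whole flow has run, sysfs contains the virtio-pci driver directory and its attribute files, as follows:

Matching PCI devices

- After a PCI device is registered, the driver core walks every driver on the bus and runs the match function on each to see whether the pair fits. The function below is the kernel's helper for iterating over all drivers on a bus; as far as I can tell, the driver core's device attach path is built on it.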

int bus_for_each_drv(struct bus_type * bus, struct device_driver * start,

void * data, int (*fn)(struct device_driver *, void *));

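- The match flow is shown below. As for the criterion that decides whether a device and a driver can bind: for virtio-pci devices it is enough for the vendor id to be equal.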

pci_bus_match

pci_match_device

pci_match_id

pci_match_one_device

static inline const struct pci_device_id *

pci_match_one_device(const struct pci_device_id *id, const struct pci_dev *dev)

{

if ((id->vendor == PCI_ANY_ID || id->vendor == dev->vendor) &&

(id->device == PCI_ANY_ID || id->device == dev->device) &&

(id->subvendor == PCI_ANY_ID || id->subvendor == dev->subsystem_vendor) &&

(id->subdevice == PCI_ANY_ID || id->subdevice == dev->subsystem_device) &&

!((id->class ^ dev->class) & id->class_mask))

return id;

return NULL;

}

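In pci_match_one_device, the first argument is the ID structure hard-coded by the driver at registration and the second is the PCI device. When the driver specifies PCI_ANY_ID for a field, that field matches any value. Looking at the virtio_pci_id_table that virtio_pci_driver registers (below), the driver only sets the vendor id, so any device whose vendor id is 0x1af4 matches. The vendor id was already read from the device's configuration space during enumeration. Therefore any virtio device, whatever its kind, can bind to the virtio-pci driver.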

static const struct pci_device_id virtio_pci_id_table[] = {

{ PCI_DEVICE(PCI_VENDOR_ID_REDHAT_QUMRANET, PCI_ANY_ID) },

{ 0 }

};

#define PCI_VENDOR_ID_REDHAT_QUMRANET 0x1af4

#define PCI_DEVICE(vend,dev) \

.vendor = (vend), .device = (dev), \

.subvendor = PCI_ANY_ID, .subdevice = PCI_ANY_ID
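The wildcard behaviour is easy to see in a stripped-down user-space version of the comparison. The types and names below (`struct id_entry`, `struct dev_ids`, `id_matches`, `ANY_ID`) are hypothetical stand-ins; only the vendor and device fields are modeled, and `ANY_ID` plays the role of PCI_ANY_ID.

```c
#include <stdbool.h>
#include <stdint.h>

#define ANY_ID 0xFFFFFFFFu /* stands in for the kernel's PCI_ANY_ID */

struct id_entry { uint32_t vendor, device; }; /* what the driver declares */
struct dev_ids  { uint16_t vendor, device; }; /* what enumeration read */

/* Simplified match: only vendor and device, each with a wildcard. */
static bool id_matches(const struct id_entry *id, const struct dev_ids *dev)
{
    return (id->vendor == ANY_ID || id->vendor == dev->vendor) &&
           (id->device == ANY_ID || id->device == dev->device);
}
```

An entry of vendor 0x1af4 with a wildcard device therefore accepts both a legacy virtio-blk device (0x1001) and a modern one (0x1042), and rejects a device from any other vendor.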

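Probing PCI devices

After the PCI bus matches a device with a driver, the driver core points the driver pointer in the device structure at that driver, linking the two, and then calls the probe function to probe the PCI device. Here that is virtio_pci_probe, the function specified by virtio_pci_driver. The main steps of probe are:

- Enable the device's BAR mapping: set I/O Space and Memory Space in the command register to 1, switching on the device's response to I/O and memory accesses

- Read the additional register sets in the virtio-pci configuration space, identify the capabilities, read the contents of each BAR into memory, and map the BAR regions into high kernel addresses

- Complete the driver core data structures and register the virtio-pci device on the virtio bus, which triggers the match on the virtio bus

Mapping the BAR space

- The BAR registers already hold PCI-domain start addresses, but a driver cannot use the BAR region yet. It must first be mapped into kernel virtual address space (3G-4G on 32-bit x86).

Enabling the BAR space

- The command register in PCI configuration space switches whether the device responds to accesses from the bus. Enabling the BAR space means letting the device accept bus accesses to its I/O or memory space.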

virtio_pci_driver.probe
    virtio_pci_probe
        pci_enable_device
            pci_enable_device_flags(dev, IORESOURCE_MEM | IORESOURCE_IO) // turn on memory and I/O access
                do_pci_enable_device
                    pcibios_enable_device
                        pci_enable_resources
                            pci_write_config_word(dev, PCI_COMMAND, cmd) // set the enable bits in the command register

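Identifying capabilities

- With the BAR space enabled, the next step is to locate the virtio structures in the device's BAR space. Following the PCI spec, we first check whether the device implements capabilities; if it does, we read the start offset of the capability list from the location the spec prescribes. The virtio-pci capability layout is as follows:

Capability probing starts in virtio_pci_modern_probe; for legacy mode the entry point is virtio_pci_legacy_probe. The modern probe is used as the example here.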

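virtio_pci_probe
    virtio_pci_modern_probe
        virtio_pci_find_capability
            pci_find_capability(dev, PCI_CAP_ID_VNDR)
                pos = __pci_bus_find_cap_start // find the entry point; for an ordinary PCI device it returns 0x34, where the head offset of the capability list is stored
                pos = __pci_find_next_cap // walk the capabilities and return the configuration space offset of the first one of type PCI_CAP_ID_VNDR
                    __pci_find_next_cap_ttl

The function first uses pci_find_capability to look for a capability of type PCI_CAP_ID_VNDR (0x09), the vendor-specific capability ID defined by the PCI spec. Before searching, it determines where the capability list starts: it checks whether the device implements capabilities, and if so, the list pointer is at configuration space offset 0x34 for an ordinary device or a PCI bridge, and at offset 0x14 for a CardBus bridge. From that start it walks each capability on the list until it finds one whose ID byte is PCI_CAP_ID_VNDR, and returns that capability's offset in configuration space. The key code is shown below: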

/**
 * virtio_pci_find_capability - walk capabilities to find device info.
 * @dev: the pci device
 * @cfg_type: the VIRTIO_PCI_CAP_* value we seek
 * @ioresource_types: IORESOURCE_MEM and/or IORESOURCE_IO.
 *
 * Returns offset of the capability, or 0.
 */
static inline int virtio_pci_find_capability(struct pci_dev *dev, u8 cfg_type,
                                             u32 ioresource_types, int *bars)
{
    int pos;

    /* iterate over the configuration space offsets of the vendor-specific capabilities */
    for (pos = pci_find_capability(dev, PCI_CAP_ID_VNDR);
         pos > 0;
         pos = pci_find_next_capability(dev, pos, PCI_CAP_ID_VNDR)) {
        u8 type, bar;

        /* read the cfg_type member of struct virtio_pci_cap */
        pci_read_config_byte(dev, pos + offsetof(struct virtio_pci_cap, cfg_type), &type);
        /* read the bar member of struct virtio_pci_cap */
        pci_read_config_byte(dev, pos + offsetof(struct virtio_pci_cap, bar), &bar);

        /* Ignore structures with reserved BAR values */
        if (bar > 0x5)
            continue;

        /* if this is the type we want, return the capability's offset in configuration space */
        if (type == cfg_type) {
            if (pci_resource_len(dev, bar) &&
                pci_resource_flags(dev, bar) & ioresource_types) {
                *bars |= (1 << bar);
                return pos;
            }
        }
    }
    return 0;
}

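- Once the capability offset is known, the virtio-pci data stored in BAR space can be located. Four members of the capability describe that data: bar says which BAR the data lives in (0-5), cfg_type gives the kind of data, offset gives its offset within the BAR, and length gives its length.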

/* This is the PCI capability header: */

struct virtio_pci_cap {

__u8 cap_vndr; /* Generic PCI field: PCI_CAP_ID_VNDR */

__u8 cap_next; /* Generic PCI field: next ptr. */

__u8 cap_len; /* Generic PCI field: capability length */

__u8 cfg_type; /* Identifies the structure. */

__u8 bar; /* Where to find it. */

__u8 padding[3]; /* Pad to full dword. */

__le32 offset; /* Offset within bar. */

__le32 length; /* Length of the structure, in bytes. */

};
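To show how these fields are pulled out of raw configuration space bytes, here is a user-space sketch. `struct vp_cap`, `le32_at` and `read_vp_cap` are hypothetical names; the structure mirrors the 16-byte layout above with fixed-width types, and multi-byte fields are decoded as little-endian independent of host byte order.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Userspace mirror of the 16-byte capability shown above. */
struct vp_cap {
    uint8_t  cap_vndr;
    uint8_t  cap_next;
    uint8_t  cap_len;
    uint8_t  cfg_type;
    uint8_t  bar;
    uint8_t  padding[3];
    uint32_t offset;   /* little-endian in configuration space */
    uint32_t length;   /* little-endian in configuration space */
};

/* Read a little-endian 32-bit value regardless of host byte order. */
static uint32_t le32_at(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* Decode the capability at `pos` in a configuration space snapshot. */
static struct vp_cap read_vp_cap(const uint8_t cfg[256], uint8_t pos)
{
    struct vp_cap cap;
    const uint8_t *p;

    memset(&cap, 0, sizeof cap);
    if ((size_t)pos + sizeof cap > 256)
        return cap; /* capability would run past configuration space */

    p = &cfg[pos];
    cap.cap_vndr = p[offsetof(struct vp_cap, cap_vndr)];
    cap.cap_next = p[offsetof(struct vp_cap, cap_next)];
    cap.cap_len  = p[offsetof(struct vp_cap, cap_len)];
    cap.cfg_type = p[offsetof(struct vp_cap, cfg_type)];
    cap.bar      = p[offsetof(struct vp_cap, bar)];
    cap.offset   = le32_at(p + offsetof(struct vp_cap, offset));
    cap.length   = le32_at(p + offsetof(struct vp_cap, length));
    return cap;
}
```

The decoded bar, offset and length together say where in BAR space the structure identified by cfg_type can be found.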

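Mapping

- Back in virtio_pci_modern_probe, the driver finds the configuration space offset of each capability type defined by the virtio spec in turn. Note that the common, isr, notify and device variables hold configuration space offsets. The last one, the device structure, depends on the specific virtio device: each kind of virtio device implements its own configuration space, and for virtio-blk the device-specific structure is virtio_blk_config.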

common = virtio_pci_find_capability(pci_dev, VIRTIO_PCI_CAP_COMMON_CFG,

IORESOURCE_IO | IORESOURCE_MEM,

&vp_dev->modern_bars);

isr = virtio_pci_find_capability(pci_dev, VIRTIO_PCI_CAP_ISR_CFG,

IORESOURCE_IO | IORESOURCE_MEM,

&vp_dev->modern_bars);

notify = virtio_pci_find_capability(pci_dev, VIRTIO_PCI_CAP_NOTIFY_CFG,

IORESOURCE_IO | IORESOURCE_MEM,

&vp_dev->modern_bars);

/* Device capability is only mandatory for devices that have

* device-specific configuration.

*/

device = virtio_pci_find_capability(pci_dev, VIRTIO_PCI_CAP_DEVICE_CFG,

IORESOURCE_IO | IORESOURCE_MEM,

&vp_dev->modern_bars);

map_capability maps the BAR region into the kernel's virtual address space (3G-4G on 32-bit x86):

vp_dev->common = map_capability(pci_dev, common,

sizeof(struct virtio_pci_common_cfg), 4,

0, sizeof(struct virtio_pci_common_cfg),

NULL);

vp_dev->device = map_capability(pci_dev, device, 0, 4,

0, PAGE_SIZE,

&vp_dev->device_len);

map_capability
    pci_iomap_range(dev, bar, offset, length)
        if (flags & IORESOURCE_IO) // the BAR implements I/O space: map it into the CPU's I/O address space
            return __pci_ioport_map(dev, start, len);
        if (flags & IORESOURCE_MEM) // the BAR implements memory space: map it into the CPU's memory address space
            return ioremap(start, len);

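Registering the virtio-pci methods

- Once the physical probing of the PCI device is done, probing of the virtio-pci device is nearly complete. The last step is to register the basic methods every virtio-pci device needs, including those that operate on virtqueues and on the virtio-pci configuration space.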

/* Again, we don't know how much we should map, but PAGE_SIZE

* is more than enough for all existing devices.

*/

if (device) {

vp_dev->device = map_capability(pci_dev, device, 0, 4,

0, PAGE_SIZE,

&vp_dev->device_len);

if (!vp_dev->device)

goto err_map_device;

vp_dev->vdev.config = &virtio_pci_config_ops; // register the configuration space operations

} else {

vp_dev->vdev.config = &virtio_pci_config_nodev_ops;

}

vp_dev->config_vector = vp_config_vector;

vp_dev->setup_vq = setup_vq; // register the virtqueue setup function

vp_dev->del_vq = del_vq;

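Registering the device on the virtio bus

- The probe of the virtio-pci device has mapped the BAR space and registered the config operations and virtqueue operations. Acting as the parent class of virtio devices, virtio-pci performs these common steps on their behalf; what follows is the probe of each individual virtio device. Registering the device on the virtio bus with register_virtio_device triggers the match on the virtio bus and then the probe of the virtio device. Taking virtio-blk as the example, the flow is: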

virtio_pci_probe
    pci_enable_device
    virtio_pci_modern_probe
    register_virtio_device
        dev->dev.bus = &virtio_bus // set virtio_device.dev.bus to the virtio bus
        dev->config->reset(dev) // reset the virtio device
        virtio_add_status(dev, VIRTIO_CONFIG_S_ACKNOWLEDGE) // set the device status to ACKNOWLEDGE: we have noticed this virtio device
        device_register(&dev->dev) // register the device on the virtio bus, triggering the bus match
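The reset and ACKNOWLEDGE steps in this flow boil down to operations on the device_status byte. The sketch below uses the status bit values from the virtio spec; `struct toy_vdev`, `toy_reset` and `toy_add_status` are hypothetical stand-ins for the real device and for the kernel helpers, which go through the config ops instead of touching a plain field.

```c
#include <stdint.h>

/* Device status bits from the virtio spec. */
#define VIRTIO_CONFIG_S_ACKNOWLEDGE 0x01
#define VIRTIO_CONFIG_S_DRIVER      0x02
#define VIRTIO_CONFIG_S_DRIVER_OK   0x04
#define VIRTIO_CONFIG_S_FEATURES_OK 0x08
#define VIRTIO_CONFIG_S_FAILED      0x80

/* Hypothetical stand-in for the device_status register. */
struct toy_vdev { uint8_t status; };

/* Writing 0 to the status register resets the device. */
static void toy_reset(struct toy_vdev *d)
{
    d->status = 0;
}

/* Status bits accumulate: each step ORs in one more bit. */
static void toy_add_status(struct toy_vdev *d, uint8_t bit)
{
    d->status = (uint8_t)(d->status | bit);
}
```

After the reset followed by ACKNOWLEDGE, the status byte is 0x01; the later bits (DRIVER, FEATURES_OK, DRIVER_OK) are added as the specific virtio driver probes the device.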

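- With the device registered on the virtio bus, initialization of the virtio-pci device is complete. What remains is the loading and probing of the driver for the specific virtio device. The device types include the following; the next chapter continues the analysis with the block device.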

1af4:1041 network device (modern)

1af4:1042 block device (modern)

1af4:1043 console device (modern)

1af4:1044 entropy generator device (modern)

1af4:1045 balloon device (modern)

1af4:1048 SCSI host bus adapter device (modern)

1af4:1049 9p filesystem device (modern)

1af4:1050 virtio gpu device (modern)

1af4:1052 virtio input device (modern)

legacy:

#define PCI_DEVICE_ID_VIRTIO_NET 0x1000

#define PCI_DEVICE_ID_VIRTIO_BLOCK 0x1001

#define PCI_DEVICE_ID_VIRTIO_BALLOON 0x1002

#define PCI_DEVICE_ID_VIRTIO_CONSOLE 0x1003

#define PCI_DEVICE_ID_VIRTIO_SCSI 0x1004

#define PCI_DEVICE_ID_VIRTIO_RNG 0x1005

#define PCI_DEVICE_ID_VIRTIO_9P 0x1009

#define PCI_DEVICE_ID_VIRTIO_VSOCK 0x1012

#define PCI_DEVICE_ID_VIRTIO_PMEM 0x1013

#define PCI_DEVICE_ID_VIRTIO_IOMMU 0x1014

#define PCI_DEVICE_ID_VIRTIO_MEM 0x1015