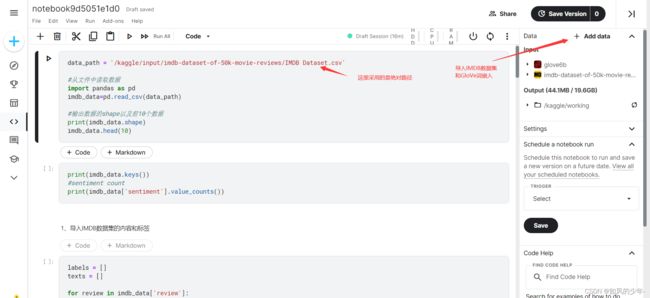

使用Glove词嵌入对IMDB数据集进行二分类

IMDB Dataset of 50K Movie Reviews

1、从文件中读取数据

data_path = '/kaggle/input/imdb-dataset-of-50k-movie-reviews/IMDB Dataset.csv'

#从文件中读取数据

import pandas as pd

imdb_data=pd.read_csv(data_path)

#输出数据的shape以及前10个数据

print(imdb_data.shape)

imdb_data.head(10)

需要注意的是imdb_data的数据类型是DataFrame的

print(imdb_data.keys())

#sentiment count

print(imdb_data['sentiment'].value_counts())

2、导入IMDB数据集的内容和标签

3、对IMDB原始数据的文本进行分词

import numpy as np

from keras.preprocessing.text import Tokenizer

from keras.preprocessing.sequence import pad_sequences

maxlen = 100 #只读取评论的前100个单词

max_words = 10000 #只考虑数据集中最常见的前10000个单词

tokenizer = Tokenizer(num_words=max_words)

tokenizer.fit_on_texts(texts)

sequences = tokenizer.texts_to_sequences(texts) #将texts文本转换成整数序列

word_index = tokenizer.word_index #单词和数字的字典

print('Found %s unique tokens' % len(word_index))

data = pad_sequences(sequences, maxlen=maxlen) #将data填充为一个(sequences, maxlen)的二维矩阵

data = np.asarray(data).astype('int64')

labels = np.asarray(labels).astype('float32')

print(data.shape, labels.shape)

train_reviews = data[: 40000]

train_sentiments = labels[:40000]

test_reviews = data[40000:]

test_sentiments = labels[40000:]

#show train datasets and test datasets shape

print(train_reviews.shape,train_sentiments.shape)

print(test_reviews.shape,test_sentiments.shape)

4、对嵌入进行预处理

glove.6B.100d.txt中有40k个单词的100维的嵌入向量,每一行的第一列是对应的单词,之后的100列是这个单词对应的嵌入向量

构建一个embedding_index的字典,键为单词,值为这个单词对应的嵌入向量

import os

glove_dir = '/kaggle/input/glove6b'

embedding_index = {}

f = open(os.path.join(glove_dir, 'glove.6B.100d.txt'))

for line in f:

values = line.split()

word = values[0]

coefs = np.asarray(values[1:], dtype = 'float32')

embedding_index[word] = coefs

f.close()

print('Found %s word vectors' % len(embedding_index))

![]()

接下来需要构建一个可以加载到Embedding层中的嵌入矩阵,他必须是一个形状为(max_words, embedding_dim)1的矩阵

对于单词索引为i的单词,这个矩阵中的第i行是word_index中这个单词对应的词向量

#准备GloVe词嵌入矩阵

import numpy as np

embedding_dim = 100

embedding_matrix = np.zeros((max_words, embedding_dim))

for word, i in word_index.items():

if i < max_words:

embedding_vector = embedding_index.get(word)

if embedding_vector is not None:

embedding_matrix[i] = embedding_vector

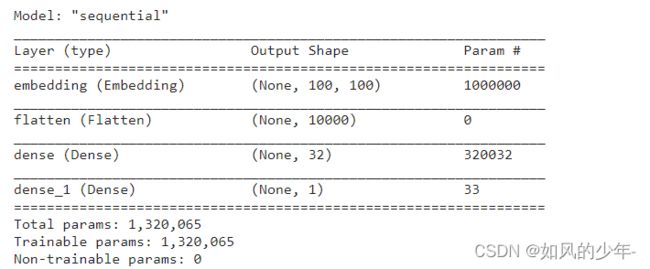

5、定义模型

from keras import Sequential

from keras.layers import Dense, Flatten, Embedding

network = Sequential()

network.add(Embedding(max_words, embedding_dim, input_length=100))

network.add(Flatten())

network.add(Dense(32, activation='relu'))

network.add(Dense(1, activation='sigmoid'))

network.summary()

from tensorflow.keras.utils import plot_model

plot_model(network, show_shapes = True)

6、在模型中加载GloVe词嵌入

#将预训练的的词嵌入加载到Embedding层中

network.layers[0].set_weights([embedding_matrix])

network.layers[0].trainable = False

network.summary()

7、训练与评估

network.compile('rmsprop', 'binary_crossentropy', 'accuracy')

history = network.fit(train_reviews, train_sentiments,

epochs=10,

batch_size=32,

validation_data=(test_reviews, test_sentiments))

#IMDB影评二分类

#1、从文件中读取数据

data_path = '/kaggle/input/imdb-dataset-of-50k-movie-reviews/IMDB Dataset.csv'

#为避免切换到不同目录下导致找不到文件,这里使用的是绝对路径

import pandas as pd

imdb_data = pd.read_csv(data_path) #从文件中读取数据

#输出IMDB的shap以及前10条数据的信息

print(imdb_data.shape)

imdb_data.head(10)

print(type(imdb_data)) #imdb_data是一个DataFrame类

print(imdb_data.keys()) #imdb_data有两列,review和sentiment

print(imdb_data['sentiment'].value_counts())

#2、导入IMDB数据集的内容和标签

labels = []

texts = []

for sentiment in imdb_data['sentiment']:

if sentiment == 'positive':

labels.append(float(1.0)) #需要注意的是labels.append(1.0) 可能导致存储的是1.0这个字符串,而不是我们想要的float型数据

else:

labels.append(float(0.0))

for review in imdb_data['review']:

texts.append(review)

#查看texts和labels的长度

print(len(texts), len(labels))

#3、对IMDB原始数据的文本进行分词

import numpy as np

from keras.preprocessing.text import Tokenizer

from keras.preprocessing.sequence import pad_sequences

max_words = 10000 #只考虑最常见的10000词

max_len = 100 #对于所有的评论,只截取前100个词

tokenizer = Tokenizer(num_words=max_words) #实例化一个只考虑最常用10000词的分词器

tokenizer.fit_on_texts(texts) #构建单词索引

sequences = tokenizer.texts_to_sequences(texts) #将texts中的文字转换成整数序列

word_index = tokenizer.word_index #分词器中的word_index字典

data = pad_sequences(sequences, maxlen=max_len) #填充sequences序列, data是一个(50000, 100)的2D张量

data = np.asarray(data).astype('int64') #data.shape = (50000, 100), labels.shape = (50000, )

labels = np.asarray(labels).astype('float32')

#划分训练集和验证集

train_reviews = data[: 40000]

train_sentiments = labels[:40000]

test_reviews = data[40000:]

test_sentiments = labels[40000:]

#show train datasets and test datasets shape

print(train_reviews.shape,train_sentiments.shape)

print(test_reviews.shape,test_sentiments.shape)

#4、对嵌入进行预处理

#glove.6B.100d.txt中有40k个单词的100维的嵌入向量,每一行的第一列是对应的单词,之后的100列是这个单词对应的嵌入向量

#构建一个embedding_index的字典,键为单词,值为这个单词对应的嵌入向量

import os

glove_dir = '/kaggle/input/glove6b'

#如果想在自己电脑上跑,需要下载上述两个数据集,并将路径替换为自己电脑上的本地路径

embedding_index = {}

f = open(os.path.join(glove_dir, 'glove.6B.100d.txt'), encoding='utf8') #注意编码格式为utf8

for line in f:

values = line.split()

word = values[0]

coefs = np.asarray(values[1:], dtype='float32')

embedding_index[word] = coefs

f.close()

print('Found %s word vectors' % len(embedding_index))

#接下来需要构建一个可以加载到Embedding层中的嵌入矩阵,他必须是一个形状为(max_words, embedding_dim)1的矩阵

#对于单词索引为i的单词,这个矩阵中的第i行是word_index中这个单词对应的词向量

import numpy as np

embedding_dim = 100

embedding_matrix = np.zeros((max_words, embedding_dim)) #构建一个(max_words, embedding)的二维矩阵

for word, i in word_index.items():

if i < max_words:

embedding_vector = embedding_index.get(word)

if embedding_vector is not None:

embedding_matrix[i] = embedding_vector

#5、定义模型

from keras.models import Sequential

from keras.layers import Dense, Flatten, Embedding

network = Sequential()

network.add(Embedding(max_words, embedding_dim, input_length=max_len))

network.add(Flatten())

network.add(Dense(32, activation='relu'))

network.add(Dense(1, activation='sigmoid'))

#查看模型中对应的参数

network.summary()

#绘制模型的网络结构

from tensorflow.keras.utils import plot_model

plot_model(network, show_shapes = True)

#6、在模型中加载GloVe词嵌入

#冻结Embedding层

network.layers[0].set_weights([embedding_matrix])

network.layers[0].trainable = False

network.summary()

#7、训练与评估

network.compile('rmsprop', 'binary_crossentropy', 'accuracy')

history = network.fit(train_reviews, train_sentiments,

epochs=10,

batch_size=32,

validation_data=(test_reviews, test_sentiments))

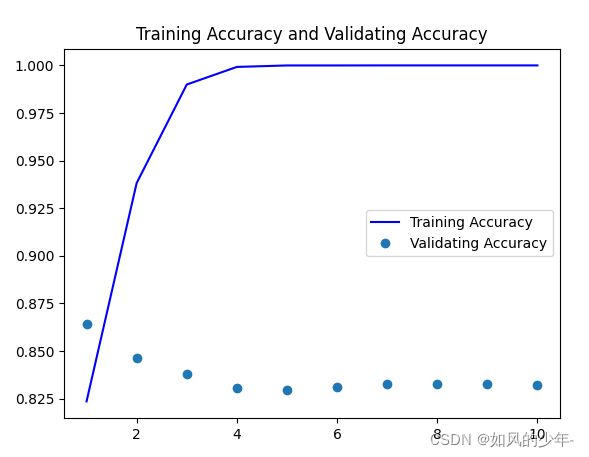

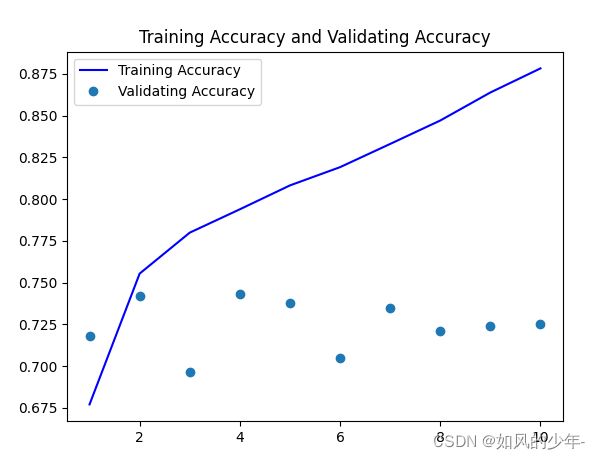

#8、绘制训练精度和验证精度

import matplotlib.pyplot as plt

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

epochs = range(1, len(acc) + 1)

plt.plot(epochs, acc, 'b', label = "Training Accuracy")

plt.plot(epochs, val_acc, 'o', label = "Validating Accuracy")

plt.title("Training Accuracy and Validating Accuracy")

plt.legend()

plt.show()

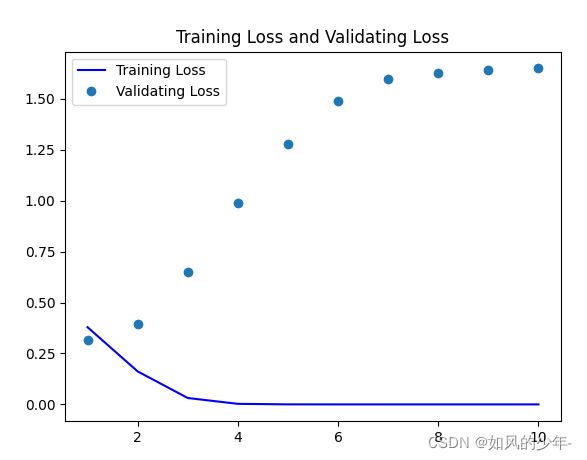

#9、绘制训练损失和验证损失

loss = history.history['loss']

val_loss = history.history['val_loss']

plt.plot(epochs, loss, 'b', label = "Training Loss")

plt.plot(epochs, val_loss, 'o', label = "Validating Loss")

plt.title("Training Loss and Validating Loss")

plt.legend()

plt.show()

prediction = network.predict(test_reviews)

prediction = [(int) ((prediction[i][0] + 0.5) / 1.0) for i in range(len(prediction))]

prediction = np.asarray(prediction).astype('float32')

#构建二分类的混淆矩阵

import pandas as pd

pd.crosstab(test_sentiments, prediction, rownames = ['labels'], colnames = ['predictions'])

#利用sklearn中的classification_report来查看对应的准确率、召回率、F1-score

from sklearn.metrics import classification_report

print(classification_report(test_sentiments, prediction))

# 不使用预训练的词嵌入,来训练相同的模型

network = Sequential()

network.add(Embedding(max_words, embedding_dim, input_length=100)) #之前使用预训练好的词嵌入是将Embedding层冻结

network.add(Flatten())

network.add(Dense(32, activation='relu'))

network.add(Dense(1, activation='sigmoid'))

network.summary()

network.compile('rmsprop', 'binary_crossentropy', 'accuracy')

history = network.fit(train_reviews, train_sentiments,

epochs=10,

batch_size=32,

validation_data=(test_reviews, test_sentiments))

#绘制训练精度和验证精度

import matplotlib.pyplot as plt

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(1, len(acc) + 1)

plt.plot(epochs, acc, 'b', label = "Training Accuracy")

plt.plot(epochs, val_acc, 'o', label = "Validating Accuracy")

plt.title("Training Accuracy and Validating Accuracy")

plt.legend()

plt.show()

#绘制训练损失和验证损失

plt.plot(epochs, loss, 'b', label = "Training Loss")

plt.plot(epochs, val_loss, 'o', label = "Validating Loss")

plt.title("Training Loss and Validating Loss")

plt.legend()

plt.show()

使用词嵌入得到的结果:

可以看到在训练3次之后,模型就发生了过拟合(训练集上的精度不断上升,但在验证集上的精度在下降)