flink-cdc environment setup (Flink 1.14.3)

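The Flink CDC connectors (flink-cdc-connectors) are maintained separately from Flink itself, so check version compatibility carefully when integrating them. Versions, versions, versions.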


1. Environment

- java: jdk8+

- scala: 2.11 or 2.12, whichever Scala version your Flink distribution and CDC dependencies are built against

- flink: 1.14.3

- mysql: 8.0

- flink-cdc: 2.2-SNAPSHOT

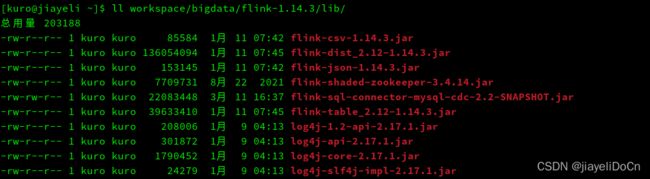

1.1 flink-sql environment:

The flink-sql-connector-mysql-cdc-2.2-SNAPSHOT.jar shown above is the connector that works with Flink 1.14. Add it to flink_home/lib/.

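You have to build this jar yourself: the official releases only go up to 2.1.1 (nothing newer had been published as of 2022-03-11 17:05). The flink-cdc README offers two ways to get the connector: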

- Download the prebuilt jar directly

- Build it yourself

```shell
git clone https://github.com/ververica/flink-cdc-connectors.git
cd flink-cdc-connectors
mvn clean install -DskipTests
```

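Once the build finishes, take whichever jars you need from the build output. mvn install also installs the 2.2-SNAPSHOT artifacts into your local Maven repository, which is what the pom in section 1.2 relies on. Be warned: the build downloads a huge pile of Maven plugins, so it is slow.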

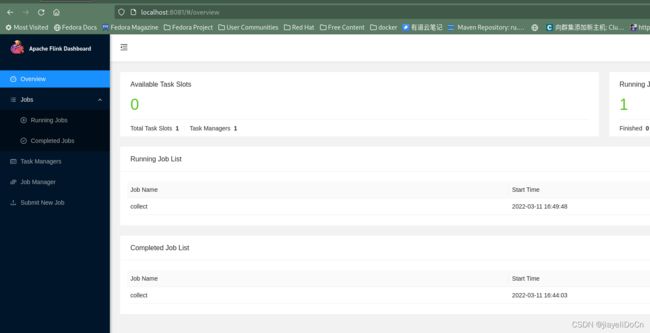

Next, go to Flink's bin/ directory and start the cluster:


```shell
./start-cluster.sh
```

Then open the Flink web UI to check that the cluster is up (http://localhost:8081 by default).
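To check the cluster from code instead of the browser, you can query Flink's REST API, which is what the web UI itself talks to. This is a minimal sketch under a few assumptions: Java 11+ (for java.net.http.HttpClient), the default REST port 8081, and the /overview endpoint with its taskmanagers and slots-available fields as described in the Flink REST API docs. ClusterCheck and intField are hypothetical names, and the regex lookup is only a quick check, not a JSON parser.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class ClusterCheck {

    /** Extracts an integer field such as "taskmanagers" from a JSON string; returns -1 if absent. */
    static int intField(String json, String field) {
        // Naive lookup: matches "field": 123 (quotes included so "total" does not match "slots-total")
        Matcher m = Pattern.compile("\"" + Pattern.quote(field) + "\"\\s*:\\s*(\\d+)").matcher(json);
        return m.find() ? Integer.parseInt(m.group(1)) : -1;
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        // Assumption: default rest.port 8081 and the /overview endpoint of the Flink REST API
        HttpRequest request = HttpRequest.newBuilder(URI.create("http://localhost:8081/overview"))
                .GET()
                .build();
        String body = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString())
                .body();
        System.out.println("TaskManagers: " + intField(body, "taskmanagers"));
        System.out.println("Free slots:   " + intField(body, "slots-available"));
    }
}
```

After start-cluster.sh a local standalone setup should report at least one TaskManager; -1 means the field was not found in the response.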

### Demo: capturing MySQL data with flink-sql cdc


MySQL must have the binlog enabled, and every table you capture must have a primary key.
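A quick way to verify the binlog prerequisite is to read the relevant server variables over JDBC. This is a sketch, not part of flink-cdc: it assumes the MySQL JDBC driver (for example mysql-connector-java) is on the classpath at run time, and besides log_bin=ON it checks binlog_format=ROW and binlog_row_image=FULL, which the Debezium MySQL connector (used under the hood by mysql-cdc) documents as required. On MySQL 8.0 all three are the defaults, so the check mainly catches servers where someone changed them. BinlogCheck and its methods are hypothetical names; the URL and credentials are placeholders.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class BinlogCheck {

    // Binlog settings the Debezium MySQL connector expects (per its documentation)
    private static final Map<String, String> REQUIRED = Map.of(
            "log_bin", "ON",
            "binlog_format", "ROW",
            "binlog_row_image", "FULL");

    /** Returns one message per wrong or missing variable; an empty list means the binlog looks CDC-ready. */
    static List<String> missingSettings(Map<String, String> vars) {
        List<String> problems = new ArrayList<>();
        for (Map.Entry<String, String> e : REQUIRED.entrySet()) {
            String actual = vars.get(e.getKey());
            if (actual == null || !actual.equalsIgnoreCase(e.getValue())) {
                problems.add(e.getKey() + " should be " + e.getValue() + " but is " + actual);
            }
        }
        return problems;
    }

    /** Reads the three variables from the server (needs the MySQL JDBC driver on the classpath). */
    static Map<String, String> readVariables(String url, String user, String password) throws SQLException {
        Map<String, String> vars = new HashMap<>();
        try (Connection conn = DriverManager.getConnection(url, user, password);
             Statement st = conn.createStatement();
             ResultSet rs = st.executeQuery("SHOW VARIABLES WHERE Variable_name IN "
                     + "('log_bin', 'binlog_format', 'binlog_row_image')")) {
            while (rs.next()) {
                vars.put(rs.getString(1), rs.getString(2)); // Variable_name, Value
            }
        }
        return vars;
    }

    public static void main(String[] args) throws SQLException {
        // Placeholder connection details: replace with your own
        Map<String, String> vars = readVariables("jdbc:mysql://localhost:3306/test", "user", "password");
        List<String> problems = missingSettings(vars);
        System.out.println(problems.isEmpty() ? "binlog is ready for CDC" : String.join("\n", problems));
    }
}
```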

- Create the MySQL table:

```sql
-- mysql
show databases;
use test;
create table if not exists test (
    id int primary key auto_increment,
    name varchar(32)
);
```

- Start the Flink SQL client and create a Flink streaming table:

```shell
./sql-client.sh
```

```sql
-- flink sql
CREATE TABLE test (
    id INT,
    name STRING,
    PRIMARY KEY (id) NOT ENFORCED
) WITH (
    'connector' = 'mysql-cdc',
    'hostname' = 'localhost',
    'port' = '3306',
    'username' = 'your_username',
    'password' = 'your_password',
    'database-name' = 'your_database',
    'table-name' = 'your_table'
);
```

- Insert some rows into MySQL:

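```sql
-- mysql
-- inserting 0 into an AUTO_INCREMENT column makes MySQL generate the next id
-- (unless the NO_AUTO_VALUE_ON_ZERO sql_mode is enabled)
insert into test values(0, 'pjs');
insert into test values(0, 'jyl');
```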

- View the result in the Flink SQL client:

```sql
-- flink sql
select * from test;
```
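In Flink SQL the mysql-cdc table is a changelog stream rather than an append-only one. As far as I understand the connector's Debezium-to-RowData conversion, snapshot reads and inserts become INSERT rows, an update becomes an UPDATE_BEFORE/UPDATE_AFTER pair, and a delete becomes DELETE. The sketch below only encodes that mapping, using RowKind's short strings (+I, -U, +U, -D) as plain Java strings so it runs without Flink on the classpath; ChangelogMapping is a hypothetical name, not a flink-cdc class.

```java
import java.util.List;

class ChangelogMapping {

    /** Maps a Debezium op code to the Flink row kinds (RowKind short strings) it is assumed to produce. */
    static List<String> rowKinds(String op) {
        switch (op) {
            case "r": // row read during the initial snapshot
            case "c": // insert captured from the binlog
                return List.of("+I");
            case "u": // update: old row retracted, new row emitted
                return List.of("-U", "+U");
            case "d": // delete
                return List.of("-D");
            default:
                throw new IllegalArgumentException("unknown op: " + op);
        }
    }
}
```

This is why select * from test behaves like a continuously maintained result: an UPDATE in MySQL replaces the old row instead of appending a second one.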

1.2 flink-stream

pom.xml:

```xml
<properties>
    <maven.compiler.source>11</maven.compiler.source>
    <maven.compiler.target>11</maven.compiler.target>
    <flink.version>1.14.3</flink.version>
    <scala.version>2.11.12</scala.version>
    <scala.binary.version>2.11</scala.binary.version>
    <!-- locally built snapshot, installed into the local Maven repo by mvn install (see 1.1) -->
    <flink.cdc.version>2.2-SNAPSHOT</flink.cdc.version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-streaming-java_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-clients_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-table-api-java-bridge_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-avro</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-connector-kafka_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-connector-jdbc_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>com.ververica</groupId>
        <artifactId>flink-connector-mysql-cdc</artifactId>
        <version>${flink.cdc.version}</version>
    </dependency>
    <dependency>
        <groupId>com.ververica</groupId>
        <artifactId>flink-sql-connector-mysql-cdc</artifactId>
        <version>${flink.cdc.version}</version>
    </dependency>
    <!-- needed for createLocalEnvironmentWithWebUI in the demo below -->
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-runtime-web_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>
</dependencies>
```

Demo (the full source is on gitee):

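```java
import com.ververica.cdc.connectors.mysql.source.MySqlSource;
import com.ververica.cdc.debezium.JsonDebeziumDeserializationSchema;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.util.Map;

public class MySqlCdcDemo {

    public static void main(String[] args) throws Exception {
        MySqlSource<String> mySqlSource = MySqlSource.<String>builder()
                .hostname("localhost")
                .port(3306)
                .databaseList("test") // set captured database
                .tableList("test.test") // set captured table, as database.table
                .username("kuro")
                .password("pwdsdfsa;_=sfds")
                .deserializer(new JsonDebeziumDeserializationSchema()) // converts SourceRecord to a JSON String
                .build();

        // Local environment with the web UI on port 10010 (Map.of needs Java 9+)
        Configuration configuration = Configuration.fromMap(Map.of("rest.port", "10010"));
        StreamExecutionEnvironment env = StreamExecutionEnvironment.createLocalEnvironmentWithWebUI(configuration);

        // enable checkpointing every 3 seconds
        env.enableCheckpointing(3000);

        env.fromSource(mySqlSource, WatermarkStrategy.noWatermarks(), "MySQL Source")
                // set 4 parallel source tasks
                .setParallelism(4)
                .print().setParallelism(1); // use parallelism 1 for sink to keep message ordering

        env.execute("Print MySQL Snapshot + Binlog");
    }
}
```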

Output:

```
{"before":null,"after":{"id":2,"name":"ljy"},"source":{"version":"1.5.4.Final","connector":"mysql","name":"mysql_binlog_source","ts_ms":0,"snapshot":"false","db":"test","sequence":null,"table":"test","server_id":0,"gtid":null,"file":"","pos":0,"row":0,"thread":null,"query":null},"op":"r","ts_ms":1646993221523,"transaction":null}
{"before":null,"after":{"id":1,"name":"kuro"},"source":{"version":"1.5.4.Final","connector":"mysql","name":"mysql_binlog_source","ts_ms":0,"snapshot":"false","db":"test","sequence":null,"table":"test","server_id":0,"gtid":null,"file":"","pos":0,"row":0,"thread":null,"query":null},"op":"r","ts_ms":1646993221522,"transaction":null}
{"before":null,"after":{"id":3,"name":"liyouqiang"},"source":{"version":"1.5.4.Final","connector":"mysql","name":"mysql_binlog_source","ts_ms":0,"snapshot":"false","db":"test","sequence":null,"table":"test","server_id":0,"gtid":null,"file":"","pos":0,"row":0,"thread":null,"query":null},"op":"r","ts_ms":1646993221524,"transaction":null}
3月 11, 2022 6:07:05 下午 com.github.shyiko.mysql.binlog.BinaryLogClient connect
信息: Connected to localhost:3306 at mysql-bin.000001/3592 (sid:5536, cid:33)
{"before":{"id":1,"name":"kuro"},"after":{"id":1,"name":"LJY"},"source":{"version":"1.5.4.Final","connector":"mysql","name":"mysql_binlog_source","ts_ms":1646993370000,"snapshot":"false","db":"test","sequence":null,"table":"test","server_id":1,"gtid":null,"file":"mysql-bin.000001","pos":3813,"row":0,"thread":null,"query":null},"op":"u","ts_ms":1646993370264,"transaction":null}
```

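On its first run flink-cdc takes a full snapshot of the existing table data (the "op":"r" events above), then switches to incremental synchronization from the binlog (the "op":"u" event after the BinaryLogClient connects).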
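To tell the two phases apart in the DataStream job, you can look at each event's op field: in the output above the pre-existing rows arrive with "op":"r" and the later change with "op":"u". Note that source.snapshot is "false" even on those "r" rows in this output, so op is the more useful signal here. The sketch below is a stdlib-only helper with a deliberately naive regex (it assumes no captured column is itself named op); CdcPhase is a hypothetical name, and in a real job you would parse the JSON with a proper library such as Jackson inside a map or filter on the stream.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class CdcPhase {

    // Naive match for the Debezium "op" field; assumes no table column is also called "op"
    private static final Pattern OP = Pattern.compile("\"op\"\\s*:\\s*\"([a-z])\"");

    /** Returns the single-letter op code (r, c, u, d) of a Debezium JSON event. */
    static String op(String eventJson) {
        Matcher m = OP.matcher(eventJson);
        if (!m.find()) {
            throw new IllegalArgumentException("no op field in: " + eventJson);
        }
        return m.group(1);
    }

    /** "snapshot" for rows read during the full sync (op=r), "binlog" for incremental changes (c/u/d). */
    static String phase(String eventJson) {
        switch (op(eventJson)) {
            case "r":
                return "snapshot";
            case "c":
            case "u":
            case "d":
                return "binlog";
            default:
                throw new IllegalArgumentException("unexpected op in: " + eventJson);
        }
    }

    public static void main(String[] args) {
        String update = "{\"before\":{\"id\":1,\"name\":\"kuro\"},\"after\":{\"id\":1,\"name\":\"LJY\"},\"op\":\"u\"}";
        System.out.println(op(update) + " -> " + phase(update)); // prints "u -> binlog"
    }
}
```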