Artificial Intelligence | CIFAR10 Convolutional Neural Network Practice

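Note: if you have questions about the code, feel free to message me or leave a comment. Corrections are welcome; let's improve together!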

Contents

I. Experiment Objective

II. Algorithm Steps

III. Experiment Results

I. Experiment Objective

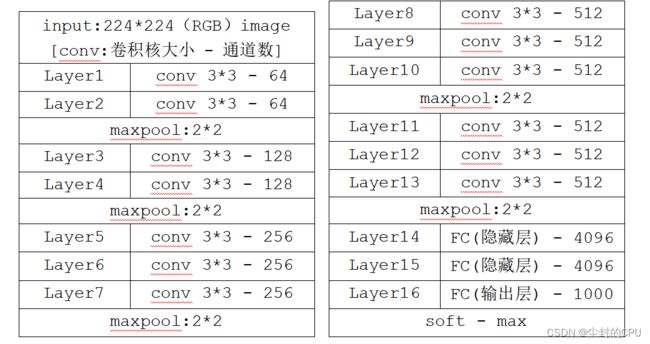

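Use the cat and dog images from the CIFAR10 image dataset, or the Kaggle cat-and-dog image dataset, to train and test the classic deep convolutional neural network VGG16 on cat/dog classification.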

II. Algorithm Steps

1. Load the dataset:

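Load the images from the local directory `path` (the dataset contains 25,000 JPEG images of cats and dogs). Indices 0 to 4999 form the training set and indices 5000 to 9999 the test set (5,000 images each), and every loaded image is resized to 224 × 224 pixels: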

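```python
import os

import cv2
import numpy as np

path = 'path/to/train'  # placeholder: local folder containing cat.<n>.jpg and dog.<n>.jpg
resize = 224            # target width and height in pixels

imgs = os.listdir(path)

# uint8 holds 0-255 pixel values and needs a quarter of the memory of int32
train_data = np.empty((5000, resize, resize, 3), dtype="uint8")
train_label = np.empty((5000,), dtype="int32")
test_data = np.empty((5000, resize, resize, 3), dtype="uint8")
test_label = np.empty((5000,), dtype="int32")

# Labels for both sets: even indices load cat.<i>.jpg (label 0),
# odd indices load dog.<i>.jpg (label 1).
# Note: cv2.imread returns pixels in BGR channel order.
for i in range(5000):
    if i % 2:
        train_data[i] = cv2.resize(cv2.imread(path + '/' + 'dog.' + str(i) + '.jpg'), (resize, resize))
        train_label[i] = 1
    else:
        train_data[i] = cv2.resize(cv2.imread(path + '/' + 'cat.' + str(i) + '.jpg'), (resize, resize))
        train_label[i] = 0

for i in range(5000, 10000):
    j = i - 5000  # position inside the test arrays
    if i % 2:
        test_data[j] = cv2.resize(cv2.imread(path + '/' + 'dog.' + str(i) + '.jpg'), (resize, resize))
        test_label[j] = 1
    else:
        test_data[j] = cv2.resize(cv2.imread(path + '/' + 'cat.' + str(i) + '.jpg'), (resize, resize))
        test_label[j] = 0
```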
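The split depends only on the Kaggle file naming scheme (`cat.N.jpg` / `dog.N.jpg`): index i selects `dog.i.jpg` when i is odd and `cat.i.jpg` when it is even. A minimal sketch without any image I/O, listing the file names and labels the loops above would read; `split_filenames` is a hypothetical helper name. It makes explicit that each 5,000-image split holds 2,500 cats and 2,500 dogs and that the two splits share no files.

```python
def split_filenames(start, stop):
    """Return (filename, label) pairs for indices start..stop-1.

    Mirrors the loading loops: odd index -> dog.<i>.jpg with label 1,
    even index -> cat.<i>.jpg with label 0.
    """
    pairs = []
    for i in range(start, stop):
        if i % 2:
            pairs.append(('dog.' + str(i) + '.jpg', 1))
        else:
            pairs.append(('cat.' + str(i) + '.jpg', 0))
    return pairs


train_files = split_filenames(0, 5000)
test_files = split_filenames(5000, 10000)

print(train_files[:3])                         # [('cat.0.jpg', 0), ('dog.1.jpg', 1), ('cat.2.jpg', 0)]
print(sum(label for _, label in train_files))  # 2500 dogs in the training split
print(test_files[0])                           # ('cat.5000.jpg', 0)
```

A side effect of this scheme is that odd-numbered cat files and even-numbered dog files are never loaded.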

2. Build and train the VGG model:

Model construction code:

```python
from tensorflow.keras import regularizers
from tensorflow.keras.layers import (Activation, BatchNormalization, Conv2D,
                                     Dense, Dropout, Flatten, MaxPooling2D)
from tensorflow.keras.models import Sequential

weight_decay = 0.0005  # assumed L2 strength; the value is not given in this post

# layer1
model = Sequential()
model.add(Conv2D(64, (3, 3), padding='same', input_shape=(224, 224, 3), kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(Dropout(0.3))

# layer2
model.add(Conv2D(64, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(MaxPooling2D(pool_size=(2, 2)))

# layer3
model.add(Conv2D(128, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(Dropout(0.4))

# layer4
model.add(Conv2D(128, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(MaxPooling2D(pool_size=(2, 2)))

# layer5
model.add(Conv2D(256, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(Dropout(0.4))

# layer6
model.add(Conv2D(256, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(Dropout(0.4))

# layer7
model.add(Conv2D(256, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(MaxPooling2D(pool_size=(2, 2)))

# layer8
model.add(Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(Dropout(0.4))

# layer9
model.add(Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(Dropout(0.4))

# layer10
model.add(Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(MaxPooling2D(pool_size=(2, 2)))

# layer11
model.add(Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(Dropout(0.4))

# layer12
model.add(Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(Dropout(0.4))

# layer13
model.add(Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(0.5))

# layer14
model.add(Flatten())
model.add(Dense(512, kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())

# layer15
model.add(Dense(512, kernel_regularizer=regularizers.l2(weight_decay)))
model.add(Activation('relu'))
model.add(BatchNormalization())

# layer16: two outputs, softmax probabilities for cat (0) and dog (1)
model.add(Dropout(0.5))
model.add(Dense(2))
model.add(Activation('softmax'))
```
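Because every convolution uses `padding='same'` with the default stride of 1, only the five 2×2 `MaxPooling2D` layers change the spatial size, each halving it. The sketch below is plain arithmetic on the layer list above (not a call into Keras): it traces the feature-map size through the 13 convolutional layers and computes the `Flatten` length and the parameter count of the first `Dense(512)` layer. `CONV_LAYERS` and `trace_shapes` are hypothetical names, and BatchNormalization parameters are deliberately left out.

```python
# Layer list transcribed from the model above: (filters, followed_by_maxpool)
CONV_LAYERS = [
    (64, False), (64, True),
    (128, False), (128, True),
    (256, False), (256, False), (256, True),
    (512, False), (512, False), (512, True),
    (512, False), (512, False), (512, True),
]


def trace_shapes(input_size=224, in_channels=3, kernel=3):
    """Return (final_size, final_channels, conv_param_count).

    'same' padding with stride 1 keeps height/width unchanged, so only the
    2x2 max-pooling layers (stride 2) halve the spatial size.
    conv_param_count counts kernel weights + biases only (BatchNorm excluded).
    """
    size, channels, params = input_size, in_channels, 0
    for filters, pooled in CONV_LAYERS:
        params += kernel * kernel * channels * filters + filters
        channels = filters
        if pooled:
            size //= 2
    return size, channels, params


size, channels, conv_params = trace_shapes()
flatten_len = size * size * channels
dense1_params = flatten_len * 512 + 512  # first Dense(512): weights + biases

print(size, channels)   # 7 512
print(flatten_len)      # 25088
print(conv_params)      # 14714688
print(dense1_params)    # 12845568
```

The 13 convolutional layers plus 3 dense layers give the 16 weight layers behind the name VGG16, and the first dense layer alone holds almost as many weights as all convolutions combined.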

3. Testing the VGG model

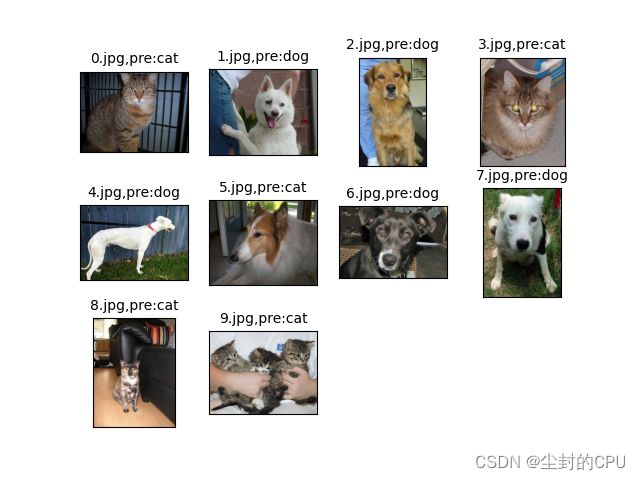

Ten images were taken from the test set, 4 cats and 6 dogs (a 2:3 ratio), and classified:

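```python
import cv2
import matplotlib.pyplot as plt
import numpy as np
from tensorflow.keras.models import load_model

model = load_model('vgg16dogcat.h5')  # trained model file named in the results section


def readimg(i):  # test function: i is the image number (file i.jpg)
    img = cv2.imread(str(i) + '.jpg')
    # Convert each image img into the input format the model expects ……
    predicted = model.predict(img)  # prediction result
    # predicted holds two values: the predicted probability that the image is a cat, and that it is a dog
    if np.argmax(predicted) == 1:  # index 1 = dog, matching the training labels
        res = 'pre:dog'  # res: predicted label shown in the title
    else:
        res = 'pre:cat'
    title = str(i) + '.jpg,' + res  # image title
    plt.subplot(3, 4, i + 1)  # rows, columns, index
    plt.imshow(img)  # add image i.jpg to the figure grid
    # ……


for i in range(10):  # 10 test images: 4 cats and 6 dogs
    readimg(i)
plt.show()  # display the final figure
```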
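The label decision in `readimg` reduces to comparing the two softmax outputs, using the label convention from the loading step (cat = 0, dog = 1). A minimal pure-Python sketch of that rule follows; `judge` and `label_name` are hypothetical helper names, and `predicted` is assumed to have the `(1, 2)` batch shape that Keras' `predict` returns for a single image.

```python
def judge(predicted):
    """Return 1 (dog) or 0 (cat) for a (1, 2) softmax output.

    predicted[0][0] is the cat probability and predicted[0][1] the dog
    probability, following the label convention cat = 0, dog = 1.
    A tie is resolved as cat.
    """
    cat_prob, dog_prob = predicted[0][0], predicted[0][1]
    return 1 if dog_prob > cat_prob else 0


def label_name(predicted):
    """Format the prediction the same way the plot titles do."""
    return 'pre:dog' if judge(predicted) == 1 else 'pre:cat'


# Probabilities reported for the misclassified image 5 in the results
print(judge([[0.732, 0.268]]))       # 0
print(label_name([[0.732, 0.268]]))  # pre:cat
```

Indexing with `predicted[0][0]` works equally on nested lists and on the NumPy array returned by `predict`.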

III. Experiment Results

1. The 10 output test images:

2. Screenshot of the test accuracy:

3. Result analysis:

The 10 test images are named 0.jpg, 1.jpg, …, 9.jpg. Images 0, 3, 8 and 9 are cats; the other 6 are dogs.

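Classifying these 10 images with the trained model saved as vgg16dogcat.h5 gave, in order: cat, dog, dog, cat, dog, cat, dog, dog, cat, cat. Apart from image 5, which was misclassified, all 9 other images were classified correctly.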

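Error analysis: image 5 was misclassified. A plausible explanation is that the dog in image 5 shares many features with cat images, such as the pixel distribution of its fur. If such features carry large weights, they can tip the overall prediction, and the VGG model labeled the image as a cat: the predicted probability was 0.732 for cat and 0.268 for dog.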

Overall recognition accuracy = 9/10 = 90%.
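The 90% figure can be rechecked directly from the labels listed above. A small sketch that compares the ground truth with the reported predictions and adds a per-class count; the variable names are illustrative only.

```python
# Ground truth from the result analysis: images 0, 3, 8 and 9 are cats, the rest dogs
truth = ['dog'] * 10
for idx in (0, 3, 8, 9):
    truth[idx] = 'cat'

# Predictions reported for images 0.jpg ... 9.jpg
predicted = ['cat', 'dog', 'dog', 'cat', 'dog', 'cat', 'dog', 'dog', 'cat', 'cat']

wrong = [i for i, (t, p) in enumerate(zip(truth, predicted)) if t != p]
accuracy = (len(truth) - len(wrong)) / len(truth)

cats_correct = sum(1 for t, p in zip(truth, predicted) if t == p == 'cat')
dogs_correct = sum(1 for t, p in zip(truth, predicted) if t == p == 'dog')

print(wrong)                               # [5]
print(accuracy)                            # 0.9
print(cats_correct, truth.count('cat'))    # 4 4
print(dogs_correct, truth.count('dog'))    # 5 6
```

The per-class view shows that all 4 cats were recognized and the single error is a dog predicted as a cat.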