linux内核源码分析之slab(二)

目录

结构体分析

结构体之间关系

静态初始化

创建缓存

结构体分析

kmem_cache 每个缓存由kmem_cache结构的一个实例表示。

struct kmem_cache {

//是每个CPU一个array_cache类型的变量

struct array_cache __percpu *cpu_cache;

/* 1) Cache tunables. Protected by slab_mutex */

unsigned int batchcount;//指定了在per-CPU列表为空的情况下,从缓存的slab中获取对象的数目。它还表示在缓存增长时分配的对象数目

unsigned int limit;//指定per-CPU列表中保存的对象的最大数目

unsigned int shared;

unsigned int size;//cache大小

struct reciprocal_value reciprocal_buffer_size;

/* 2) touched by every alloc & free from the backend */

slab_flags_t flags; /* slab 标志constant flags */

unsigned int num; /* 对象个数 # of objs per slab */

/* 3) cache_grow/shrink */

/* order of pgs per slab (2^n) */

unsigned int gfporder;//分配内存页面的order

/* force GFP flags, e.g. GFP_DMA */

gfp_t allocflags;

size_t colour; /*着色区大小 cache colouring range */

unsigned int colour_off; /* colour offset */

struct kmem_cache *freelist_cache;

unsigned int freelist_size;

/* constructor func */

void (*ctor)(void *obj);

/* 4) cache creation/removal */

const char *name;//slab名字

struct list_head list;//所有slab链接

int refcount;//引用计数

int object_size;//对象大小

int align;//对齐大小

int obj_offset;

//指向管理kmemcache的上层结构

struct kmem_cache_node *node[MAX_NUMNODES];

};array_cache 缓存

struct array_cache {

unsigned int avail;//当前可用对象的数目

unsigned int limit;

unsigned int batchcount;

unsigned int touched;//从缓存移除一个对象时,将touched设置为1,而缓存收缩时,则将touched设置0

void *entry[]; //对象地址

};avail保存了当前可用对象的数目。在从缓存移除一个对象时,将touched设置1,而缓存收缩时,将touched设置为0

kmem_cache_node节点

struct kmem_cache_node {

spinlock_t list_lock;//自旋锁

#ifdef CONFIG_SLAB

struct list_head slabs_partial; /*对象被使用一部分的slab描述符的链表 */

struct list_head slabs_full;//对象被占用的slab描述符链表

struct list_head slabs_free;//只包含空闲对象的slab描述符链表

unsigned long total_slabs; /* 一共多少kmem_cache结构*/

unsigned long free_slabs; /* 空闲kmem_cache结构 */

unsigned long free_objects;/*空闲的对象*/

unsigned int free_limit;

unsigned int colour_next; /* Per-node cache coloring */

struct array_cache *shared; /*CPU共享本地缓存 shared per node */

struct alien_cache **alien; /* on other nodes */

unsigned long next_reap; /* 由slab的页回收算法使用 updated without locking */

int free_touched; /* 由slab的页回收算法使用 updated without locking */

#endif

};三种缓存 空闲,满,部分空闲。

结构体之间关系

静态初始化

伙伴系统已经完全启用,初始化slab数据结构,内核需要若干小于一整页的内存块,适合用kmalloc分配,但在slab启用后才能使用kmalloc。

kmem_cache_init函数用于初始化slab,在伙伴系统启用之后调用

/* internal cache of cache description objs */

static struct kmem_cache kmem_cache_boot = {

.batchcount = 1,

.limit = BOOT_CPUCACHE_ENTRIES,

.shared = 1,

.size = sizeof(struct kmem_cache),

.name = "kmem_cache",

};

void __init kmem_cache_init(void)

{

int i;

//指向静态定义的kmem_cache_boot

kmem_cache = &kmem_cache_boot;

if (!IS_ENABLED(CONFIG_NUMA) || num_possible_nodes() == 1)

use_alien_caches = 0;

for (i = 0; i < NUM_INIT_LISTS; i++)

kmem_cache_node_init(&init_kmem_cache_node[i]);

if (!slab_max_order_set && totalram_pages() > (32 << 20) >> PAGE_SHIFT)

slab_max_order = SLAB_MAX_ORDER_HI;

//建立保存kmem_cache结构的kmem_cache

/* 1) create the kmem_cache struct kmem_cache size depends on nr_node_ids & nr_cpu_ids*/

create_boot_cache(kmem_cache, "kmem_cache",

offsetof(struct kmem_cache, node) +

nr_node_ids * sizeof(struct kmem_cache_node *),

SLAB_HWCACHE_ALIGN, 0, 0);

//加入全局slab_caches

list_add(&kmem_cache->list, &slab_caches);

memcg_link_cache(kmem_cache, NULL);

slab_state = PARTIAL;

kmalloc_caches[KMALLOC_NORMAL][INDEX_NODE] = create_kmalloc_cache(

kmalloc_info[INDEX_NODE].name[KMALLOC_NORMAL],

kmalloc_info[INDEX_NODE].size,

ARCH_KMALLOC_FLAGS, 0,

kmalloc_info[INDEX_NODE].size);

slab_state = PARTIAL_NODE;

setup_kmalloc_cache_index_table();

slab_early_init = 0;

{

int nid;

//加入全局slab_cache链表中

for_each_online_node(nid) {

init_list(kmem_cache, &init_kmem_cache_node[CACHE_CACHE + nid], nid);

init_list(kmalloc_caches[KMALLOC_NORMAL][INDEX_NODE],

&init_kmem_cache_node[SIZE_NODE + nid], nid);

}

}

//建立kmalloc函数使用的kmem_cache

create_kmalloc_caches(ARCH_KMALLOC_FLAGS);

}

kmem_cache_init创建系统中第一个slab缓存,以便为kmem_cache的实例提供内存,静态数据结构。初始化时建立了第一个 kmem_cache 结构之后,init_list 函数负责一个个分配内存空间。

/*

* swap the static kmem_cache_node with kmalloced memory

*/

static void __init init_list(struct kmem_cache *cachep, struct kmem_cache_node *list,

int nodeid)

{

struct kmem_cache_node *ptr;

//分配新的kmem_cache_node结构的空间

ptr = kmalloc_node(sizeof(struct kmem_cache_node), GFP_NOWAIT, nodeid);

BUG_ON(!ptr);

//复制初始时的静态kmem_cache_node结构

memcpy(ptr, list, sizeof(struct kmem_cache_node));

spin_lock_init(&ptr->list_lock);

MAKE_ALL_LISTS(cachep, ptr, nodeid);

//设置kmem_cache_node的地址

cachep->node[nodeid] = ptr;

}kmalloc_caches是kmalloc_cache的集合

初始化过程,遍历枚举

#define KMALLOC_SHIFT_HIGH ((MAX_ORDER + PAGE_SHIFT - 1) <= 25 ? \

(MAX_ORDER + PAGE_SHIFT - 1) : 25)

enum kmalloc_cache_type {

KMALLOC_NORMAL = 0,

KMALLOC_RECLAIM,

#ifdef CONFIG_ZONE_DMA

KMALLOC_DMA,

#endif

NR_KMALLOC_TYPES

};

extern struct kmem_cache *

kmalloc_caches[NR_KMALLOC_TYPES][KMALLOC_SHIFT_HIGH + 1];

void __init create_kmalloc_caches(slab_flags_t flags)

{

int i;

enum kmalloc_cache_type type;

for (type = KMALLOC_NORMAL; type <= KMALLOC_RECLAIM; type++) {

for (i = KMALLOC_SHIFT_LOW; i <= KMALLOC_SHIFT_HIGH; i++) {

if (!kmalloc_caches[type][i])

new_kmalloc_cache(i, type, flags);

if (KMALLOC_MIN_SIZE <= 32 && i == 6 &&

!kmalloc_caches[type][1])

new_kmalloc_cache(1, type, flags);

if (KMALLOC_MIN_SIZE <= 64 && i == 7 &&

!kmalloc_caches[type][2])

new_kmalloc_cache(2, type, flags);

}

}

/* Kmalloc array is now usable */

slab_state = UP;

#ifdef CONFIG_ZONE_DMA

for (i = 0; i <= KMALLOC_SHIFT_HIGH; i++) {

struct kmem_cache *s = kmalloc_caches[KMALLOC_NORMAL][i];

if (s) {

kmalloc_caches[KMALLOC_DMA][i] = create_kmalloc_cache(

kmalloc_info[i].name[KMALLOC_DMA],

kmalloc_info[i].size,

SLAB_CACHE_DMA | flags, 0, 0);

}

}

#endif

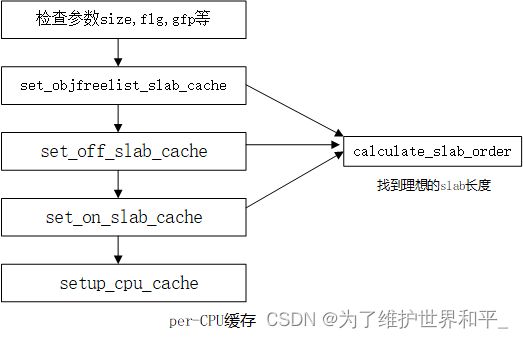

}创建缓存

static size_t calculate_slab_order(struct kmem_cache *cachep,

size_t size, slab_flags_t flags)

{

size_t left_over = 0;

int gfporder;

//每次order+1. 从第一页帧开始,每次倍增slab长度

//1) 8*left_over 小于slab长度,即浪费空间小于1/8

//2)gfp_order大于或等于slab_max_order

for (gfporder = 0; gfporder <= KMALLOC_MAX_ORDER; gfporder++) {

unsigned int num;

size_t remainder;

//找到一个slab布局,size对象长度,gfp_order页阶,num slab上对象数目

num = cache_estimate(gfporder, size, flags, &remainder);

if (!num)

continue;

if (num > SLAB_OBJ_MAX_NUM)

break;

//slab头在管理数据存储在slab之外

if (flags & CFLGS_OFF_SLAB) {

struct kmem_cache *freelist_cache;

size_t freelist_size;

freelist_size = num * sizeof(freelist_idx_t);

freelist_cache = kmalloc_slab(freelist_size, 0u);

if (!freelist_cache)

continue;

if (OFF_SLAB(freelist_cache))

continue;

if (freelist_cache->size > cachep->size / 2)

continue;

}

cachep->num = num;

cachep->gfporder = gfporder;

left_over = remainder;

if (flags & SLAB_RECLAIM_ACCOUNT)

break;

if (gfporder >= slab_max_order)

break;

if (left_over * 8 <= (PAGE_SIZE << gfporder))

break;

}

return left_over;

}如果下述条件之一成立,即结束循环

- 每次order+1. 从第一页帧开始,每次倍增slab长度

- 8*left_over 小于slab长度,即浪费空间小于1/8

- gfp_order大于或等于slab_max_order

内核试图通过calculate_slab_order实现的迭代过程,找到理想的slab长度。基于给定对象长度, cache_estimate针对特定的页数,来计算对象数目、浪费的空间、着色所需的空间。该函数会循环调用,直至内核对结果满意为止。

//产生per-CPU缓存

static int __ref setup_cpu_cache(struct kmem_cache *cachep, gfp_t gfp)

{

if (slab_state >= FULL)

return enable_cpucache(cachep, gfp);

cachep->cpu_cache = alloc_kmem_cache_cpus(cachep, 1, 1);

if (!cachep->cpu_cache)

return 1;

...

return 0;

}static int enable_cpucache(struct kmem_cache *cachep, gfp_t gfp)

{

...

if (cachep->size > 131072)

limit = 1;

else if (cachep->size > PAGE_SIZE)

limit = 8;

else if (cachep->size > 1024)

limit = 24;

else if (cachep->size > 256)

limit = 54;

else

limit = 120;

shared = 0;

if (cachep->size <= PAGE_SIZE && num_possible_cpus() > 1)

shared = 8;

batchcount = (limit + 1) / 2;//缓存中对象数的一半

skip_setup://初始化数据结构

err = do_tune_cpucache(cachep, limit, batchcount, shared, gfp);

end:

if (err)

pr_err("enable_cpucache failed for %s, error %d\n",

cachep->name, -err);

return err;

}

为各个处理器分配所需的内存:一个array_cache的实例和一个指针数组,数组项数目

在上述的计算中给出;并初始化数据结构,这些任务委托给do_tune_cpucache。

参考

《深入Linux内核架构》