k8s Quick-Start Tutorial

1 Command Reference

1.1 View cluster information

kubectl cluster-info

The output looks like this:

Kubernetes master is running at https://192.168.3.71:6443

KubeDNS is running at https://192.168.3.71:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

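Check when the cluster certificates expire:

kubeadm alpha certs check-expiration

(On kubeadm v1.20 and later the command is kubeadm certs check-expiration. The alpha form was deprecated and later removed.)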

1.2 pod

Create a pod from the command line:

kubectl run --image=nginx --port=80 nginx0920

Here --port is the port that the service inside the container listens on.

Export the pod's YAML to a file:

kubectl get pod nginx0920 -o yaml > nginx0920.yaml

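Start a test pod. It is deleted when you exit the shell:

kubectl run -it --rm dns-test --image=busybox:1.28.4 -- sh

Note: busybox:1.28.4 is pinned because its nslookup resolves cluster DNS names correctly.

Delete a pod. Add --force to delete it forcibly; this is usually combined with --grace-period=0: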

kubectl delete pod ceph-csi-rbd-nodeplugin-8vfsb -n ceph-rbd --force

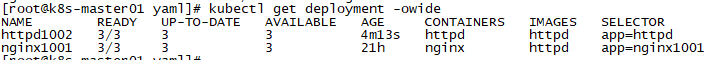

1.3 deployment

Create a deployment from the command line:

kubectl create deployment --image=nginx nginx0920

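Create a service for it. The default type is ClusterIP. --port is the service port, and --target-port is the container port that nginx listens on:

kubectl expose deployment nginx0920 --port=80 --target-port=80

Create a service of type NodePort. The previous command already created a service named nginx0920, so give this one its own name with --name:

kubectl expose deployment nginx0920 --name=nginx0920-nodeport --port=8080 --target-port=80 --type=NodePort

Generate a deployment YAML file without creating anything in the cluster:

kubectl create deployment web --image=nginx --dry-run=client -o yaml > web.yaml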

1.4 Update the image

kubectl set image deployment/web container-eh09qq=wordpress:6.8 --record

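Note: container-eh09qq is the container name. --record saves the command in the revision's change-cause annotation. The flag is deprecated in recent kubectl releases.

Watch the rollout status in real time:

kubectl rollout status deployment/web

Pause a rollout. While it is paused, changes to the pod template (such as the image) do not start a new rollout until you resume it. Changing the replica count is not blocked by a pause:

kubectl rollout pause deployment nginx1001

Resume the rollout: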

kubectl rollout resume deployment nginx1001

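1.5 Roll back

List the revision history:

kubectl rollout history deployment/web

Show the details of one revision, including its image:

kubectl rollout history deployment/web --revision=3

Roll back to the previous revision:

kubectl rollout undo deployment/web

Roll back to a specific revision: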

kubectl rollout undo deployment/web --to-revision=1

1.6 Scale replicas

Change the number of pods in a deployment:

kubectl scale deployment web --replicas=5

1.7 Start a temporary pod

kubectl run -it --rm --image=busybox busybox-demo

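1.8 Taints and tolerations

Check a node's taints (see the Taints field in the output):

kubectl describe nodes hz-kubesphere-worker01

Restore the default master taint, which keeps ordinary pods off the master node. Newer Kubernetes versions use the key node-role.kubernetes.io/control-plane instead:

kubectl taint node hz-kubesphere-master02 node-role.kubernetes.io/master="":NoSchedule

Remove that taint so pods can be scheduled on the master:

kubectl taint node hz-kubesphere-master02 node-role.kubernetes.io/master-

Add a custom taint to a node (master or worker):

kubectl taint node hz-kubesphere-master02 node-type=master:NoSchedule

Add the following toleration to the workload's pod spec: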

tolerations:
- key: node-type
  operator: Equal
  value: master
  effect: NoSchedule

For example, in a Deployment:

kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-provisioner-01
  namespace: kube-system
spec:
  ..........
  template:
    metadata:
      labels:
        app: nfs-provisioner-01
    spec:
      tolerations:
      - key: node-type
        operator: Equal
        value: master
        effect: NoSchedule
      serviceAccountName: nfs-client-provisioner
      containers:

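Each taint carries an effect, which is one of the following three:

• NoSchedule: a pod that does not tolerate the taint cannot be scheduled onto the node.

• PreferNoSchedule: a soft version of NoSchedule. The scheduler tries to keep non-tolerating pods off the node, but still places a pod there if no other node fits.

• NoExecute: NoSchedule and PreferNoSchedule only apply at scheduling time, but NoExecute also affects pods that are already running on the node. When a NoExecute taint is added to a node, running pods that do not tolerate it are evicted.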
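The matching rules above can be sketched in Python. This is a simplified model written for this tutorial, not the scheduler's code. It only checks two things: whether a toleration matches a taint, and whether a pod passes the hard NoSchedule/NoExecute filter. It does not model PreferNoSchedule scoring or NoExecute eviction (tolerationSeconds). The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule" or "NoExecute"


@dataclass(frozen=True)
class Toleration:
    key: str = ""                 # empty key together with Exists tolerates every taint
    operator: str = "Equal"       # "Equal" (the default) or "Exists"
    value: str = ""
    effect: Optional[str] = None  # None matches any effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Return True if one toleration matches one taint."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True
    return tol.value == taint.value  # operator "Equal": values must be identical


def can_schedule(tolerations: List[Toleration], taints: List[Taint]) -> bool:
    """Hard filter: every NoSchedule/NoExecute taint must be tolerated.

    PreferNoSchedule only makes the node less attractive, so it never blocks here.
    """
    for taint in taints:
        if taint.effect == "PreferNoSchedule":
            continue
        if not any(tolerates(tol, taint) for tol in tolerations):
            return False
    return True


# The taint and toleration used in this section:
master_taint = Taint("node-type", "master", "NoSchedule")
master_tol = Toleration("node-type", "Equal", "master", "NoSchedule")
print(can_schedule([master_tol], [master_taint]))  # True
print(can_schedule([], [master_taint]))            # False
```

A toleration with operator Exists and no key, such as Toleration(operator="Exists"), matches every taint. Use it sparingly.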

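1.9 Pod anti-affinity

Use pod anti-affinity to spread the pods of one deployment across nodes. The label app: nfs-provisioner-01 in the selector must match the pod template's label app: nfs-provisioner-01. With topologyKey kubernetes.io/hostname, no two of these pods may run on the same node:

affinity:
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
    - topologyKey: kubernetes.io/hostname
      labelSelector:
        matchLabels:
          app: nfs-provisioner-01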

Full configuration (excerpt):

spec:
  progressDeadlineSeconds: 600
  replicas: 2
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: nfs-provisioner-01
  strategy:
    type: Recreate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nfs-provisioner-01
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: kubernetes.io/hostname
            labelSelector:
              matchLabels:
                app: nfs-provisioner-01
      containers:
      - env:
        - name: PROVISIONER_NAME
          value: nfs-provisioner-01
        - name: NFS_SERVER
          value: 192.168.3.32

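Anti-affinity can be combined with tolerations. Both go under the pod template's spec:

tolerations:
- effect: NoSchedule
  key: node-type
  operator: Equal
  value: master
affinity:
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
    - topologyKey: kubernetes.io/hostname
      labelSelector:
        matchLabels:
          app: nfs-provisioner-01

Because the rule is "required", a replica that finds no suitable node stays Pending. For example, with 2 replicas but only one node that the pods can tolerate: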

[root@k8s-master01 ~]# kubectl get pod -n kube-system | grep nfs

nfs-provisioner-01-68cc74b9d8-clmfc 0/1 Pending 0 9m8s

nfs-provisioner-01-68cc74b9d8-wt6z8 1/1 Running 0 9m8s

kubectl describe pod nfs-provisioner-01-68cc74b9d8-clmfc -n kube-system

...........

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 65s (x9 over 9m41s) default-scheduler 0/7 nodes are available: 1 node(s) didn't match pod affinity/anti-affinity, 1 node(s) didn't satisfy existing pods anti-affinity rules, 2 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 node(s) had taint {node-type: dev}, that the pod didn't tolerate, 2 node(s) had taint {node-type: production}, that the pod didn't tolerate.
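A toy Python model of the hard anti-affinity rule shows why the second replica above stays Pending: with topologyKey kubernetes.io/hostname, each eligible node takes at most one replica. The model assumes taint filtering has already produced the list of eligible nodes, and it ignores every other scheduling constraint. The function name is hypothetical.

```python
from typing import List


def place_replicas(replicas: int, eligible_nodes: List[str]) -> List[str]:
    """Toy model of requiredDuringSchedulingIgnoredDuringExecution anti-affinity
    with topologyKey kubernetes.io/hostname: at most one replica per node.

    Replicas that find no free node are reported as "Pending".
    """
    occupied = set()
    placement = []
    for _ in range(replicas):
        free = [node for node in eligible_nodes if node not in occupied]
        if free:
            occupied.add(free[0])
            placement.append(free[0])
        else:
            placement.append("Pending")
    return placement


# Two replicas, only one node the pods tolerate:
print(place_replicas(2, ["k8s-worker01"]))  # ['k8s-worker01', 'Pending']
```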

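1.10 Mark nodes unschedulable

Mark a node as unschedulable. Pods already running on it are not evicted:

kubectl cordon k8s21-worker01

Make the node schedulable again:

kubectl uncordon k8s21-worker01

1.11 Pod log path

On each node, pod logs are stored under:

/var/log/pods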

2 Using controllers

2.1 deployment

2.1.1 Standard deployment configuration

# Standard deployment configuration:
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx1001
  name: nginx1001
  namespace: default
spec:
  replicas: 3
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: nginx1001
  template:
    metadata:
      labels:
        app: nginx1001
    spec:
      containers:
      - image: httpd
        imagePullPolicy: Always
        name: nginx
      restartPolicy: Always
      nodeSelector:
        locationnodes: worker01

2.1.2 imagePullPolicy

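The imagePullPolicy field, which appears in many Kubernetes manifests, sets the image pull policy. It takes one of three values:

1. Always: always pull the image

2. IfNotPresent: use the local image if it exists, and pull only if it is missing

3. Never: use only the local image and never pull, even if it is missing

If imagePullPolicy is omitted, it defaults to Always when the tag is :latest or no tag is given. Otherwise it defaults to IfNotPresent.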
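The defaulting rule above can be expressed as a small Python function. It is a sketch of the documented behavior, not Kubernetes code. The digest case reflects my reading of the API server's defaulting. The function name is hypothetical, and the function does not validate image references.

```python
def default_image_pull_policy(image: str) -> str:
    """Policy Kubernetes fills in when imagePullPolicy is omitted.

    - no tag, or tag ":latest"   -> Always
    - any other tag, or a digest -> IfNotPresent
    """
    if "@" in image:                      # pinned by digest, e.g. nginx@sha256:...
        return "IfNotPresent"
    last_part = image.rsplit("/", 1)[-1]  # drop registry/path; a registry port may contain ':'
    tag = last_part.split(":", 1)[1] if ":" in last_part else "latest"  # no tag means latest
    return "Always" if tag == "latest" else "IfNotPresent"


print(default_image_pull_policy("nginx"))           # Always
print(default_image_pull_policy("busybox:1.28.4"))  # IfNotPresent
```

Pinning a real tag, such as busybox:1.28.4, is what makes IfNotPresent the default. This avoids a registry round trip on every pod start.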

2.1.3 label

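A Deployment's spec.selector.matchLabels must match the labels in its pod template (spec.template.metadata.labels). The selector is how the Deployment finds the pods it manages, and the API server rejects a Deployment whose selector does not match the template labels.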
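matchLabels is a subset check. Every key/value pair in the selector must be present on the pod, and the pod may carry extra labels, such as the pod-template-hash label that the Deployment controller adds. A minimal Python sketch with a hypothetical function name:

```python
from typing import Dict


def selector_matches(match_labels: Dict[str, str], labels: Dict[str, str]) -> bool:
    """matchLabels semantics: every selector key/value must appear in labels."""
    return all(labels.get(key) == value for key, value in match_labels.items())


selector = {"app": "nginx1001"}
# Extra labels such as pod-template-hash do not affect the match.
print(selector_matches(selector, {"app": "nginx1001", "pod-template-hash": "5d9f7c8b6"}))  # True
print(selector_matches(selector, {"app": "httpd1101"}))  # False
```

nodeSelector uses the same subset comparison, but against a node's labels instead of a pod's.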

2.1.4 nodeSelector

Pin pods to specific nodes. First label the node:

kubectl label node k8s-worker01 locationnodes=worker01

Then reference the label in the pod spec, for example:

kind: Deployment
apiVersion: apps/v1
metadata:
  name: httpd1101
  namespace: ehr
  labels:
    app: httpd1101
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpd1101
  template:
    metadata:
      labels:
        app: httpd1101
    spec:
      volumes:
      - name: host-time
        hostPath:
          path: /etc/localtime
          type: ''
      - name: volume-fnf6pi
        persistentVolumeClaim:
          claimName: ehr-demo-pvc1
      containers:
      - name: container-j1g6b1
        image: httpd
        ports:
        - name: tcp-80
          containerPort: 80
          protocol: TCP
        resources:
          limits:
            cpu: 100m
            memory: 128Mi
          requests:
            cpu: 100m
            memory: 128Mi
        volumeMounts:
        - name: host-time
          readOnly: true
          mountPath: /etc/localtime
        - name: volume-fnf6pi
          mountPath: /var/www/html
        imagePullPolicy: IfNotPresent
      restartPolicy: Always
      nodeSelector:
        locationnodes: worker01   # YAML needs a space after the colon

You can also use a built-in node label:

nodeSelector:
  kubernetes.io/hostname: gz2-k8s-worker01

2.1.5 revisionHistoryLimit

The number of old ReplicaSets (revisions) kept for rollback. The default is 10.
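A rough Python model of what revisionHistoryLimit keeps. It is a simplification. The real Deployment controller deletes surplus old ReplicaSets that have been scaled to zero, oldest first. Here revisions are plain integers, and the function name is hypothetical.

```python
from typing import List


def surviving_revisions(revisions: List[int], current: int, limit: int = 10) -> List[int]:
    """Revisions still available for rollback: the current one plus the newest
    `limit` old ones (revisionHistoryLimit defaults to 10)."""
    old = sorted(r for r in revisions if r != current)
    kept_old = old[-limit:] if limit > 0 else []  # old[-0:] would keep everything
    return sorted(kept_old + [current])


# 14 revisions with revision 14 live: revisions 1-3 are pruned.
print(surviving_revisions(list(range(1, 15)), current=14))
```

kubectl rollout undo --to-revision can only target revisions that survive pruning.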

2.1.6 hostAliases

For example, if a container must resolve the internal name wjx.devops.com to 192.168.3.79, add hostAliases to the pod spec:

cat test2.yaml

# Standard deployment configuration:
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: busybox1211
  name: busybox1211
  namespace: demo
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: busybox1211
  template:
    metadata:
      labels:
        app: busybox1211
    spec:
      hostAliases:                # add hostAliases here
      - ip: "192.168.3.79"
        hostnames:
        - "wjx.devops.com"
      tolerations:
      - key: node-type
        operator: Equal
        value: production
        effect: NoSchedule
      containers:
      - image: busybox
        imagePullPolicy: Always
        name: busybox
        args:
        - /bin/sh
        - -c
        - sleep 10; touch /tmp/healthy; sleep 30000
        readinessProbe:           # readiness probe
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 10 # first probe 10s after the container starts
          periodSeconds: 5

Inside the container, the entry appears in /etc/hosts:

[root@k8s-master01 ~]# kubectl exec -it busybox1211-786f455b5c-442kz -n demo -- sh

/ # cat /etc/hosts

# Kubernetes-managed hosts file.

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

fe00::0 ip6-mcastprefix

fe00::1 ip6-allnodes

fe00::2 ip6-allrouters

10.255.69.216 busybox1211-786f455b5c-442kz

# Entries added by HostAliases.

192.168.3.79 wjx.devops.com

/ # ping wjx.devops.com

PING wjx.devops.com (192.168.3.79): 56 data bytes

64 bytes from 192.168.3.79: seq=0 ttl=63 time=0.525 ms

64 bytes from 192.168.3.79: seq=1 ttl=63 time=0.311 ms

^C
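The kubelet appends hostAliases to the pod's /etc/hosts, as in the output above: one line per IP, with all of that IP's hostnames on the same line. A Python sketch of that rendering. It assumes tab separators; the kubelet's exact whitespace is an assumption and may differ between versions. The function name is hypothetical.

```python
from typing import Dict, List


def render_host_aliases(host_aliases: List[Dict]) -> str:
    """Render spec.hostAliases as the block appended to /etc/hosts."""
    lines = ["# Entries added by HostAliases."]
    for alias in host_aliases:
        # One line per IP; all hostnames for that IP share the line.
        lines.append("\t".join([alias["ip"], *alias["hostnames"]]))
    return "\n".join(lines)


print(render_host_aliases([{"ip": "192.168.3.79", "hostnames": ["wjx.devops.com"]}]))
```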

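2.2 service

Service overview: to be added.

2.2.1 Standard service configuration

This section covers two Service types, ClusterIP and NodePort. Kubernetes also supports LoadBalancer and ExternalName.

2.2.1.1 ClusterIP

kind: Service
apiVersion: v1
metadata:
  name: httpd-demo1
  namespace: usopa-demo1
  labels:
    app: httpd-demo1
spec:
  ports:
  - name: http-80
    protocol: TCP
    port: 80
    targetPort: 80
  selector:
    app: httpd-demo1
  type: ClusterIP

Note: selector chooses, by label, the pods that receive traffic. These are the pods created by a Deployment, StatefulSet or DaemonSet. type defaults to ClusterIP when it is omitted.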

2.2.1.2 NodePort

kind: Service
apiVersion: v1
metadata:
  name: kn1024-kibana
  namespace: usopa-demo1
  labels:
    app: kibana
spec:
  ports:
  - name: http
    protocol: TCP
    port: 5601
    targetPort: 5601
    nodePort: 30296
  selector:
    app: kibana
  type: NodePort

Note: type is NodePort. You can set nodePort explicitly, but it must fall within the cluster's node-port range (30000-32767 by default). If you leave it out, a free port is assigned automatically.
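A toy Python model of how a nodePort is accepted or allocated. It assumes the default node-port range 30000-32767, which the API server flag --service-node-port-range can change. It is not the API server's allocator, and the function name is hypothetical.

```python
import random
from typing import Optional, Set

# Default node-port range (API server flag --service-node-port-range=30000-32767).
NODE_PORT_RANGE = range(30000, 32768)


def assign_node_port(requested: Optional[int], in_use: Set[int], rng: random.Random) -> int:
    """Accept an explicit nodePort or pick a free one at random."""
    if requested is not None:
        if requested not in NODE_PORT_RANGE:
            raise ValueError(f"nodePort {requested} is outside "
                             f"{NODE_PORT_RANGE.start}-{NODE_PORT_RANGE[-1]}")
        if requested in in_use:
            raise ValueError(f"nodePort {requested} is already allocated")
        return requested
    free = [port for port in NODE_PORT_RANGE if port not in in_use]
    if not free:
        raise RuntimeError("node-port range exhausted")
    return rng.choice(free)


rng = random.Random(42)
print(assign_node_port(30296, set(), rng))   # 30296, as in kn1024-kibana
print(assign_node_port(None, {30296}, rng))  # some free port in 30000-32767
```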

2.3 daemonset

A DaemonSet runs one pod on every node, or only on the nodes chosen by nodeSelector. The example below targets nodes labeled node=worker.

2.3.1 Standard daemonset configuration

# Standard daemonset configuration
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: nginx1011
  name: nginx1011
  namespace: data-project1
spec:
  selector:
    matchLabels:
      app: nginx1011
  template:
    metadata:
      labels:
        app: nginx1011
    spec:
      containers:
      - image: httpd
        imagePullPolicy: Always
        name: nginx
      restartPolicy: Always
      nodeSelector:
        node: worker

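2.4 statefulset

Official docs: https://kubernetes.io/zh/docs/tutorials/stateful-application/basic-stateful-set/

The Pods' ordinals, hostnames, SRV records and A record names have not changed, but the IP addresses associated with the Pods may have. In the cluster used for that tutorial, they did change. This is why you should never configure other applications to connect to Pods in a StatefulSet by IP address.

If you need to find and connect to the active members of a StatefulSet, query the CNAME of the headless Service. The SRV records associated with the CNAME contain only the StatefulSet Pods that are Running and Ready.

If your application already implements connection logic that tests for liveness and readiness, you can use the Pods' SRV records (web-0.nginx.default.svc.cluster.local, web-1.nginx.default.svc.cluster.local). They are stable, and your application can discover the Pods' addresses as soon as they become Running and Ready.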

2.4.1 Standard statefulset configuration

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx-svc"
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: myapp:v1
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      storageClassName: csi-rbd-sc
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi

StatefulSet pods get stable DNS names of the form <pod-name>.<headless-service>.<namespace>.svc.cluster.local, for example: nacos-0.nacos-headless.default.svc.cluster.local:8848
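The stable names follow a fixed pattern, so you can build them from the StatefulSet name, the ordinal, the headless Service name and the namespace. A Python sketch. It assumes the default cluster domain cluster.local; clusters can be configured with a different one. The function name is hypothetical.

```python
from typing import List


def statefulset_pod_dns(name: str, replicas: int, headless_service: str,
                        namespace: str = "default",
                        cluster_domain: str = "cluster.local") -> List[str]:
    """Stable DNS names: <name>-<ordinal>.<service>.<namespace>.svc.<domain>."""
    return [
        f"{name}-{ordinal}.{headless_service}.{namespace}.svc.{cluster_domain}"
        for ordinal in range(replicas)  # ordinals start at 0
    ]


for host in statefulset_pod_dns("web", 2, "nginx-svc"):
    print(host)
```

For the manifest above, this prints web-0.nginx-svc.default.svc.cluster.local and web-1.nginx-svc.default.svc.cluster.local.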

2.5 job

A Job runs pods that do a piece of work and then exit. The Job manifest myjob.yaml:

apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app: myjob
  name: myjob
  namespace: default
spec:
  template:
    metadata:
      name: myjob
    spec:
      containers:
      - name: hello
        image: busybox
        imagePullPolicy: Always
        command: ["echo", "hello k8s job!"]
      restartPolicy: Never

kubectl get pod shows:

myjob-sst4m 0/1 Completed 0 42s

The pod exits once the work is done. View its log:

# kubectl logs myjob-sst4m

hello k8s job!

2.6 Health Check

2.6.1 Init containers

Apply myapp-pod.yaml first. Its init containers cannot resolve myservice and mydb, so myapp-pod never reaches Running. After you apply myservice.yaml, the names resolve and myapp-pod starts normally. Before myservice.yaml is applied, the pod shows:

myapp-pod 0/1 Init:0/2 0 49s

myapp-pod.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
  - name: myapp-container
    image: busybox
    command: ['sh','-c','echo The app is running! && sleep 3600']
  initContainers:
  - name: init-myservice
    image: busybox
    command: ['sh','-c','until nslookup myservice; do echo waiting for myservice; sleep 2;done;']
  - name: init-mydb
    image: busybox
    command: ['sh','-c','until nslookup mydb; do echo waiting for mydb; sleep 2; done;']

myservice.yaml:

kind: Service
apiVersion: v1
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376
---
kind: Service
apiVersion: v1
metadata:
  name: mydb
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9377

Related fields: spec.restartPolicy (Always, OnFailure or Never) and spec.containers[].imagePullPolicy (Always, IfNotPresent or Never).
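Each init container above runs a shell loop that blocks until nslookup succeeds. Init containers run one after another, and the main container starts only after all of them exit successfully. A Python sketch of the same loop. It adds a retry limit (the shell version waits forever) and a pluggable resolver, so you can try it without a cluster. The function name and parameters are hypothetical.

```python
import socket
import time
from typing import Callable


def wait_for_dns(name: str, attempts: int = 30, delay: float = 2.0,
                 resolve: Callable[[str], str] = socket.gethostbyname) -> bool:
    """Like `until nslookup NAME; do echo waiting for NAME; sleep 2; done`,
    but gives up after `attempts` tries instead of looping forever."""
    for _ in range(attempts):
        try:
            resolve(name)
            return True
        except OSError:  # socket.gaierror (name not found) is a subclass of OSError
            print(f"waiting for {name}")
            time.sleep(delay)
    return False
```

Inside a pod in the same namespace, wait_for_dns("myservice") returns True once the myservice Service exists.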

2.6.2 readinessProbe

Probe: readiness check

readinessProbe-httpget.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: readiness-httpget-pod
  namespace: default
spec:
  containers:
  - name: readiness-httpget-container
    image: nginx
    imagePullPolicy: IfNotPresent
    readinessProbe:
      httpGet:
        port: 80
        path: /index1.html
      initialDelaySeconds: 1
      periodSeconds: 3

Apply readinessProbe-httpget.yaml:

kubectl apply -f readinessProbe-httpget.yaml

The pod is Running but not ready, because /index1.html does not exist and the probe gets a 404:

[root@k8s-master01 yaml]# kubectl get pod | grep readiness

readiness-httpget-pod 0/1 Running 0 15s

[root@k8s-master01 yaml]# kubectl describe pod readiness-httpget-pod

Name: readiness-httpget-pod

Namespace: default

Priority: 0

Node: k8s-worker01/192.168.3.74

Start Time: Thu, 07 Oct 2021 18:18:39 +0800

Labels: <none>

Annotations: cni.projectcalico.org/containerID: 4ea9583f3cc95390ab77dd32e36f05fd46afa3ac650bb34e7c830db4529e06a3

cni.projectcalico.org/podIP: 172.16.79.89/32

cni.projectcalico.org/podIPs: 172.16.79.89/32

Status: Running

IP: 172.16.79.89

IPs:

IP: 172.16.79.89

Containers:

readiness-httpget-container:

Container ID: docker://507b37c114a077bd78fc2ad07895131e5488c16e9086ffa453140ec02f65a88f

Image: nginx

Image ID: docker-pullable://nginx@sha256:765e51caa9e739220d59c7f7a75508e77361b441dccf128483b7f5cce8306652

Port: <none>

Host Port: <none>

State: Running

Started: Thu, 07 Oct 2021 18:18:40 +0800

Ready: False

Restart Count: 0

Readiness: http-get http://:80/index1.html delay=1s timeout=1s period=3s #success=1 #failure=3

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-r9vvb (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

default-token-r9vvb:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-r9vvb

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 29s default-scheduler Successfully assigned default/readiness-httpget-pod to k8s-worker01

Normal Pulled 29s kubelet Container image "nginx" already present on machine

Normal Created 29s kubelet Created container readiness-httpget-container

Normal Started 29s kubelet Started container readiness-httpget-container

Warning Unhealthy 2s (x9 over 26s) kubelet Readiness probe failed: HTTP probe failed with statuscode: 404

Exec into the pod and create index1.html:

# kubectl exec -it readiness-httpget-pod -- /bin/bash

cd /usr/share/nginx/html

echo "i am allen" > index1.html

exit

Check again. The pod is now ready:

# kubectl get pod | grep readiness

readiness-httpget-pod 1/1 Running 0 7m8s

2.6.3 livenessProbe

Probe: liveness check. There are three common probe mechanisms: exec (run a command in the container), httpGet and tcpSocket.

livenessProbe-exec.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: liveness-exec-pod
spec:
  containers:
  - name: liveness-exec-container
    image: busybox
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh","-c","touch /tmp/live; sleep 30; rm -rf /tmp/live; sleep 600"]
    livenessProbe:
      exec:
        command: ["test","-e","/tmp/live"]
      initialDelaySeconds: 1
      periodSeconds: 3

Note: /tmp/live is deleted after 30 seconds, so the probe starts failing and the kubelet restarts the container over and over. The RESTARTS count keeps increasing, but the pod itself is not recreated.

livenessProbe-httpget.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: liveness-httpget-pod
  namespace: default
spec:
  containers:
  - name: liveness-httpget-container
    image: nginx
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    livenessProbe:
      httpGet:
        port: http
        path: /index.html
      initialDelaySeconds: 1
      periodSeconds: 3
      timeoutSeconds: 10

livenessProbe-tcpsocket.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: liveness-tcpsocket-pod
  namespace: data-project1
spec:
  containers:
  - name: liveness-tcpsocket-container
    image: nginx
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 1
      periodSeconds: 3
      timeoutSeconds: 10

Summary:

Liveness and readiness probes are two independent health-check mechanisms. If neither is configured, Kubernetes only watches the container's main process. The container counts as healthy while that process runs, and when the process exits, the kubelet acts according to restartPolicy (OnFailure restarts it only after a non-zero exit code). Both probes are configured the same way and accept essentially the same parameters.

They differ in what happens when a probe fails. A failed liveness probe restarts the container. A failed readiness probe marks the container as unready, so Services stop forwarding requests to it. Because the two run independently, you can use either one alone or both together: liveness to restart unhealthy containers (self-healing), and readiness to decide whether a container is ready to serve traffic.
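The failure and success thresholds decide when a probe result actually changes the container's state. A simplified Python model. It assumes the container starts in the OK state, which is true for liveness; a readiness probe actually starts as not ready until its first success. It ignores initialDelaySeconds, timeouts and the effect of restarts. The function name is hypothetical.

```python
from typing import Iterable, List


def probe_states(results: Iterable[bool], failure_threshold: int = 3,
                 success_threshold: int = 1) -> List[str]:
    """State after each probe: 'Failed' after failure_threshold consecutive
    failures (liveness: restart; readiness: removed from Service endpoints),
    back to 'OK' after success_threshold consecutive successes."""
    state, fails, oks = "OK", 0, 0
    states = []
    for ok in results:
        if ok:
            oks, fails = oks + 1, 0
            if state == "Failed" and oks >= success_threshold:
                state = "OK"
        else:
            fails, oks = fails + 1, 0
            if fails >= failure_threshold:
                state = "Failed"
        states.append(state)
    return states


print(probe_states([True, False, False, False, True]))
# ['OK', 'OK', 'OK', 'Failed', 'OK']
```

With periodSeconds 3 and the default failureThreshold of 3 (as in the readiness example), the container is marked failed roughly 9 seconds after the probe starts failing.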

2.7 ingress-nginx

2.7.1 Deploy with YAML (Deployment mode, QingCloud)

# Download

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.47.0/deploy/static/provider/baremetal/deploy.yaml

# Rename

mv deploy.yaml ingress-nginx.yaml

# Change the image

vi ingress-nginx.yaml

# Set the image value to:

wangjinxiong/ingress-nginx-controller:v0.46.0

# Deploy

kubectl apply -f ingress-nginx.yaml

# Check the result

kubectl get pod,svc -n ingress-nginx

# Finally, remember to open the ports exposed by the svc in your firewall or security group

If the download fails, copy the following content into ingress-nginx.yaml:

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
---
# Source: ingress-nginx/templates/controller-serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
automountServiceAccountToken: true
---
# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
---
# Source: ingress-nginx/templates/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---
# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader-nginx
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
---
# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-service-webhook.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  type: ClusterIP
  ports:
    - name: https-webhook
      port: 443
      targetPort: webhook
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      dnsPolicy: ClusterFirst
      containers:
        - name: controller
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --ingress-class=nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission
---
# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
# before changing this value, check the required kubernetes version
# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    matchPolicy: Equivalent
    rules:
      - apiGroups:
          - networking.k8s.io
        apiVersions:
          - v1beta1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions:
      - v1
      - v1beta1
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /networking/v1beta1/ingresses
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - secrets
    verbs:
      - get
      - create
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-create
  annotations:
    helm.sh/hook: pre-install,pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
spec:
  template:
    metadata:
      name: ingress-nginx-admission-create
      labels:
        helm.sh/chart: ingress-nginx-3.33.0
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.47.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: create
          image: docker.io/jettech/kube-webhook-certgen:v1.5.1
          imagePullPolicy: IfNotPresent
          args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
            - --namespace=$(POD_NAMESPACE)
            - --secret-name=ingress-nginx-admission
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-patch
  annotations:
    helm.sh/hook: post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
spec:
  template:
    metadata:
      name: ingress-nginx-admission-patch
      labels:
        helm.sh/chart: ingress-nginx-3.33.0
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.47.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: patch
          image: docker.io/jettech/kube-webhook-certgen:v1.5.1
          imagePullPolicy: IfNotPresent
          args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=$(POD_NAMESPACE)
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000

2.7.2 Usage

Official site: https://kubernetes.github.io/ingress-nginx/

Apply the following YAML to prepare the test environment.

2.7.2.1 Test environment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-server
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-server
  template:
    metadata:
      labels:
        app: hello-server
    spec:
      containers:
        - name: hello-server
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/hello-server
          ports:
            - containerPort: 9000
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-demo
  template:
    metadata:
      labels:
        app: nginx-demo
    spec:
      containers:
        - image: nginx
          name: nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  selector:
    app: nginx-demo
  ports:
    - port: 8000
      protocol: TCP
      targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: hello-server
  name: hello-server
spec:
  selector:
    app: hello-server
  ports:
    - port: 8000
      protocol: TCP
      targetPort: 9000

2.7.2.2 Host-based access

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-host-bar
spec:
  ingressClassName: nginx
  rules:
    - host: "hello.atguigu.com"
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: hello-server
                port:
                  number: 8000
    - host: "demo.atguigu.com"
      http:
        paths:
          - pathType: Prefix
            path: "/nginx"  # the request is forwarded with this path; the backend must be able to serve it, otherwise it returns 404
            backend:
              service:
                name: nginx-demo  ## e.g. for a Java backend, use path rewriting to strip the /nginx prefix
                port:
                  number: 8000

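For demo.atguigu.com, exec into the nginx-demo container, create an nginx directory under /usr/share/nginx/html, and put an index.html file in it (content: wangjinxiong). nginx-demo runs 2 replicas without shared storage, so do this in both Pods. Then test from a client (30486 is the HTTP NodePort of the ingress-nginx controller in this environment):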

# elinks --dump http://hello.atguigu.com:30486

Hello World!

# elinks --dump http://demo.atguigu.com:30486/nginx/

wangjinxiong
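The Python model below shows how an Ingress picks a backend for these two hosts. It is a simplified sketch, not ingress-nginx's code. It assumes Kubernetes `Prefix` semantics: paths match element by element on `/`-separated segments, and the longest rule path wins. It ignores regex paths, wildcard hosts and the default backend. `RULES` just restates the ingress-host-bar object. The model also shows why nginx-demo needed an `nginx/` directory: the matched path is forwarded unchanged.

```python
from typing import Optional, Tuple

# Restatement of ingress-host-bar: host -> list of (pathType, path, service, port)
RULES = {
    "hello.atguigu.com": [("Prefix", "/", "hello-server", 8000)],
    "demo.atguigu.com": [("Prefix", "/nginx", "nginx-demo", 8000)],
}


def _segments(path: str) -> list:
    """Split a URL path into its non-empty '/'-separated elements."""
    return [p for p in path.split("/") if p]


def path_matches(path_type: str, rule_path: str, request_path: str) -> bool:
    if path_type == "Exact":
        return request_path == rule_path
    # Prefix: the rule's segments must be the leading segments of the request,
    # so "/nginx" matches "/nginx" and "/nginx/a" but not "/nginxabc".
    rule = _segments(rule_path)
    return _segments(request_path)[: len(rule)] == rule


def route(host: str, request_path: str) -> Optional[Tuple[str, int]]:
    """Return (service, port) for the longest matching rule path, or None (-> 404)."""
    best = None
    for path_type, rule_path, service, port in RULES.get(host, []):
        if path_matches(path_type, rule_path, request_path):
            if best is None or len(rule_path) > len(best[0]):
                best = (rule_path, service, port)
    return None if best is None else (best[1], best[2])


if __name__ == "__main__":
    print(route("hello.atguigu.com", "/"))         # ('hello-server', 8000)
    print(route("demo.atguigu.com", "/nginx/"))    # ('nginx-demo', 8000)
    print(route("demo.atguigu.com", "/nginxabc"))  # None
```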

2.7.2.3 Path rewriting (not verified)

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
  name: ingress-host-bar
spec:
  ingressClassName: nginx
  rules:
    - host: "hello.atguigu.com"
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: hello-server
                port:
                  number: 8000
    - host: "demo.atguigu.com"
      http:
        paths:
          - pathType: Prefix
            path: "/nginx(/|$)(.*)"  # the request is forwarded to the backend below, which must be able to serve the resulting path, otherwise it returns 404
            backend:
              service:
                name: nginx-demo  ## e.g. for a Java backend: rewrite the path to strip the /nginx prefix
                port:
                  number: 8000
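A Python sketch of what the rewrite above does to the request path. It assumes the annotation substitutes the capture groups of the `path` regex into `/$2`, and it ignores how ingress-nginx actually renders the nginx location (for example its case-insensitive matching). With the rewrite, nginx-demo receives `/` instead of `/nginx/`, so it can serve from its document root without an extra `nginx/` directory.

```python
import re
from typing import Optional

# Values copied from the demo.atguigu.com rule and the rewrite-target annotation above
PATH_REGEX = re.compile(r"^/nginx(/|$)(.*)")
REWRITE_TARGET = "/$2"


def rewrite(request_path: str) -> Optional[str]:
    """Return the path forwarded to nginx-demo, or None if the rule does not match."""
    m = PATH_REGEX.match(request_path)
    if m is None:
        return None
    # Replace each $N in the target with capture group N (empty if it did not participate)
    return re.sub(r"\$(\d)", lambda ref: m.group(int(ref.group(1))) or "", REWRITE_TARGET)


if __name__ == "__main__":
    for p in ["/nginx", "/nginx/", "/nginx/index.html", "/nginxabc"]:
        print(p, "->", rewrite(p))
```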

2.7.2.4 Rate limiting (not verified)

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-limit-rate
  annotations:
    nginx.ingress.kubernetes.io/limit-rps: "1"
spec:
  ingressClassName: nginx
  rules:
    - host: "haha.atguigu.com"
      http:
        paths:
          - pathType: Exact
            path: "/"
            backend:
              service:
                name: nginx-demo
                port:
                  number: 8000
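ingress-nginx implements `limit-rps` with nginx's `limit_req` module. The Python model below approximates that leaky-bucket check for a single client IP. It assumes a burst of 5 × the rate, which I understand to be ingress-nginx's default `limit-burst-multiplier`. It also assumes excess requests are rejected immediately rather than delayed. Rejected requests get an error status (503 by default, configurable). The model is for intuition only and does not reproduce nginx's exact arithmetic.

```python
from typing import List, Optional


class LeakyBucket:
    """Simplified model of nginx limit_req with burst and nodelay.

    `excess` is the number of requests currently above the steady rate;
    it drains at `rate` requests per second.
    """

    def __init__(self, rate: float, burst: int) -> None:
        self.rate = rate
        self.burst = burst
        self.excess = 0.0
        self.last: Optional[float] = None

    def allow(self, now: float) -> bool:
        if self.last is None:  # first request from this client is always accepted
            self.last = now
            return True
        excess = max(0.0, self.excess - (now - self.last) * self.rate + 1)
        if excess > self.burst:
            return False  # rejected; the stored state is not updated
        self.excess, self.last = excess, now
        return True


def simulate(timestamps: List[float], rate: float = 1, multiplier: int = 5) -> List[bool]:
    """Feed request times (in seconds) through one bucket; burst = rate * multiplier."""
    bucket = LeakyBucket(rate, int(rate * multiplier))
    return [bucket.allow(t) for t in timestamps]


if __name__ == "__main__":
    # 7 requests arriving at once, then one more 2 seconds later
    print(simulate([0, 0, 0, 0, 0, 0, 0, 2]))
    # [True, True, True, True, True, True, False, True]
```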

Kubernetes: file uploads failing with 413 Request Entity Too Large: https://cloud.tencent.com/developer/article/1586810

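2.7.2.5 General configuration (HTTPS)

(1) Create the TLS Secret (skip this if you only need HTTP). If the certificate is self-signed, see 2.7.2.6 for how to generate it.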

kubectl create secret tls nginx-test --cert=tls.crt --key=tls.key

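(2) Create the Ingress. This manifest uses the legacy extensions/v1beta1 API (serviceName/servicePort backends), which was removed in Kubernetes 1.22; on newer clusters write it with networking.k8s.io/v1 as in 2.7.2.2.

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: test-ingress
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
    - host: feiutest.cn
      http:
        paths:
          - path:
            backend:
              serviceName: test-ingress
              servicePort: 80
  tls:
    - hosts:
        - feiutest.cn
      secretName: nginx-test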

(3) Apply

kubectl apply -f nginx-ingress.yaml

Reference blog: https://blog.csdn.net/bbwangj/article/details/82940419

2.7.2.6 Self-signed certificate

# openssl genrsa -out tls.key 2048

# openssl req -new -x509 -key tls.key -out tls.crt -subj /C=CN/ST=Guangdong/L=Guangzhou/O=devops/CN=feiutest.cn

This produces two files:

# ls

tls.crt tls.key

Check the certificate's validity period:

openssl x509 -in tls.crt -noout -text |grep ' Not '
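The openssl req -x509 command in this section does not pass -days, so OpenSSL uses its default validity of 30 days and the test certificate expires quickly. Adding, for example, -days 3650 gives a longer-lived test certificate. The Python sketch below parses the Not Before / Not After lines that the grep above prints and computes how many days remain. The sample text in the usage is illustrative, not output from this environment.

```python
import re
from datetime import datetime, timezone
from typing import Dict

# openssl prints dates like "Jan  1 00:00:00 2022 GMT" (single-digit days padded with a space)
_DATE_FMT = "%b %d %H:%M:%S %Y %Z"


def parse_validity(text: str) -> Dict[str, datetime]:
    """Extract 'Not Before' and 'Not After' from `openssl x509 -noout -text` output."""
    result = {}
    for label in ("Not Before", "Not After"):
        m = re.search(label + r"\s*:\s*(.+?\d{4} GMT)", text)
        if m:
            parsed = datetime.strptime(m.group(1), _DATE_FMT)  # naive datetime
            result[label] = parsed.replace(tzinfo=timezone.utc)
    return result


def days_left(text: str, now: datetime) -> int:
    """Whole days until 'Not After', relative to a timezone-aware `now`."""
    return (parse_validity(text)["Not After"] - now).days


if __name__ == "__main__":
    sample = ("            Not Before: Jan  1 00:00:00 2022 GMT\n"
              "            Not After : Jan 31 00:00:00 2022 GMT\n")
    validity = parse_validity(sample)
    print((validity["Not After"] - validity["Not Before"]).days)  # 30
    print(days_left(sample, datetime.now(timezone.utc)))
```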

3 Secret and configmap

3.1 Secret

3.1.1 As environment variables

Encode the password (base64 is an encoding, not encryption):

echo -n 21vianet | base64

MjF2aWFuZXQ=

Decode:

echo -n MjF2aWFuZXQ= | base64 --decode

21vianet
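The same encoding in Python illustrates two points. First, `echo -n` matters: plain `echo` appends a newline, which then becomes part of the stored password. Second, base64 is reversible, so anyone who can read the Secret can recover the value.

```python
import base64


def encode_secret_value(plain: str) -> str:
    """What `echo -n VALUE | base64` produces: the value for a Secret's data field."""
    return base64.b64encode(plain.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Inverse: what `echo -n ENCODED | base64 --decode` prints."""
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    print(encode_secret_value("21vianet"))      # MjF2aWFuZXQ=
    print(encode_secret_value("21vianet\n"))    # MjF2aWFuZXQK (what plain echo would give)
    print(decode_secret_value("MjF2aWFuZXQ="))  # 21vianet
```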

yaml:

apiVersion: v1
kind: Secret
metadata:
  name: secret1017
data:
  password: MjF2aWFuZXQ=
---
kind: Pod
apiVersion: v1
metadata:
  name: mysql1017
  labels:
    app: mysql1017
spec:
  containers:
    - name: mysql1017
      image: mysql:5.6
      ports:
        - name: tcp-3306
          containerPort: 3306
          protocol: TCP
      env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: secret1017
              key: password
      imagePullPolicy: IfNotPresent
  restartPolicy: Always

3.1.2 As a volume

(To be added)

3.2 ConfigMap

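A Secret provides a Pod with sensitive data such as passwords, tokens and private keys; non-sensitive data, such as application configuration, belongs in a ConfigMap. ConfigMaps are created and consumed in much the same way as Secrets; the main difference is that ConfigMap data is stored as plain text.

Like a Secret, a ConfigMap is usually consumed in one of two ways: as environment variables (here, the database settings) or as configuration files that the application reads.

3.2.1 Passing environment variables

For example: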

cat configmap.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: configmap1008
  namespace: data-project1
data:
  MYSQL_DATABASE: "wangjinxiong"
  MYSQL_PASSWORD: "21vianet"
  MYSQL_ROOT_PASSWORD: "123456"
  MYSQL_USER: "wangjinxiong"


cat mysql.yaml

kind: Pod
apiVersion: v1
metadata:
  name: mysql1008
  namespace: data-project1
  labels:
    app: mysql1008
spec:
  volumes:
    - name: host-time
      hostPath:
        path: /etc/localtime
        type: ''
    - name: mysql1008-pvc
      persistentVolumeClaim:
        claimName: mysql1008-pvc
    - name: configmap1008
      configMap:
        name: configmap1008
  containers:
    - name: mysql1008
      image: mysql:5.7
      ports:
        - name: tcp-3306
          containerPort: 3306
          protocol: TCP
      env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            configMapKeyRef:
              name: configmap1008
              key: MYSQL_ROOT_PASSWORD
        - name: MYSQL_DATABASE
          valueFrom:
            configMapKeyRef:
              name: configmap1008
              key: MYSQL_DATABASE
        - name: MYSQL_USER
          valueFrom:
            configMapKeyRef:
              name: configmap1008
              key: MYSQL_USER
        - name: MYSQL_PASSWORD
          valueFrom:
            configMapKeyRef:
              name: configmap1008
              key: MYSQL_PASSWORD
      resources:
        limits:
          cpu: 50m
          memory: 4Gi
        requests:
          cpu: 50m
          memory: 4Gi
      volumeMounts:
        - name: host-time
          readOnly: true
          mountPath: /etc/localtime
        - name: mysql1008-pvc
          mountPath: /var/lib/mysql
      imagePullPolicy: IfNotPresent
  restartPolicy: Always

Without a ConfigMap, the same Pod sets the values inline:

kind: Pod
apiVersion: v1
metadata:
  name: mysql1008
  namespace: data-project1
  labels:
    app: mysql1008
spec:
  volumes:
    - name: host-time
      hostPath:
        path: /etc/localtime
        type: ''
    - name: mysql1008-pvc
      persistentVolumeClaim:
        claimName: mysql1008-pvc
  containers:
    - name: mysql1008
      image: mysql:5.7
      ports:
        - name: tcp-3306
          containerPort: 3306
          protocol: TCP
      env:
        - name: MYSQL_ROOT_PASSWORD
          value: '123456'
        - name: MYSQL_DATABASE
          value: wangjinxiong
        - name: MYSQL_USER
          value: wangjinxiong
        - name: MYSQL_PASSWORD
          value: '21vianet'
      resources:
        limits:
          cpu: 50m
          memory: 4Gi
        requests:
          cpu: 50m
          memory: 4Gi
      volumeMounts:
        - name: host-time
          readOnly: true
          mountPath: /etc/localtime
        - name: mysql1008-pvc
          mountPath: /var/lib/mysql
      imagePullPolicy: IfNotPresent
  restartPolicy: Always
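To compare the two variants, here is a simplified Python model of how env entries become the container's environment. An entry is either a literal `value`, a `configMapKeyRef` (plain text), or a `secretKeyRef` (base64-decoded from the Secret's `data`). This is a sketch, not the kubelet's code. The `MissingKey` exception stands in for the error a real Pod reports when a referenced key is absent and not marked `optional`.

```python
import base64
from typing import Dict, List


class MissingKey(Exception):
    """Stands in for the error a Pod reports when a referenced key is missing."""


def _lookup(store: Dict[str, Dict[str, str]], ref: dict, kind: str) -> str:
    data = store.get(ref["name"], {})
    if ref["key"] not in data:
        raise MissingKey(f"{kind} {ref['name']} has no key {ref['key']}")
    return data[ref["key"]]


def resolve_env(env: List[dict], configmaps: Dict[str, Dict[str, str]],
                secrets: Dict[str, Dict[str, str]]) -> Dict[str, str]:
    """Resolve a container's env list into NAME -> value."""
    result = {}
    for entry in env:
        if "value" in entry:  # literal value, as in the Pod without a ConfigMap
            result[entry["name"]] = entry["value"]
            continue
        source = entry["valueFrom"]
        if "configMapKeyRef" in source:  # ConfigMap data is plain text
            result[entry["name"]] = _lookup(configmaps, source["configMapKeyRef"], "configmap")
        elif "secretKeyRef" in source:  # Secret data is base64; the container sees it decoded
            raw = _lookup(secrets, source["secretKeyRef"], "secret")
            result[entry["name"]] = base64.b64decode(raw).decode("utf-8")
    return result


if __name__ == "__main__":
    configmaps = {"configmap1008": {"MYSQL_DATABASE": "wangjinxiong", "MYSQL_PASSWORD": "21vianet",
                                    "MYSQL_ROOT_PASSWORD": "123456", "MYSQL_USER": "wangjinxiong"}}
    keys = ["MYSQL_ROOT_PASSWORD", "MYSQL_DATABASE", "MYSQL_USER", "MYSQL_PASSWORD"]
    from_cm = [{"name": k, "valueFrom": {"configMapKeyRef": {"name": "configmap1008", "key": k}}}
               for k in keys]
    inline = [{"name": "MYSQL_ROOT_PASSWORD", "value": "123456"},
              {"name": "MYSQL_DATABASE", "value": "wangjinxiong"},
              {"name": "MYSQL_USER", "value": "wangjinxiong"},
              {"name": "MYSQL_PASSWORD", "value": "21vianet"}]
    # Both variants give the container the same environment
    print(resolve_env(from_cm, configmaps, {}) == resolve_env(inline, configmaps, {}))
```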

3.2.2 Passing configuration files

For example:

cat configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: configmap1009
  namespace: data-project1
data:
  wangjinxiong.txt: |
    wangjinxiong=OK

cat mysql.yaml

kind: Pod
apiVersion: v1
metadata:
  name: mysql1009
  namespace: data-project1
  labels:
    app: mysql1009
spec:
  volumes:
    - name: host-time
      hostPath:
        path: /etc/localtime
        type: ''
    - name: mysql1009-pvc
      persistentVolumeClaim:
        claimName: mysql1009-pvc
    - name: configmap1008
      configMap:
        name: configmap1008
    - name: configmap1009
      configMap:
        name: configmap1009
  containers:
    - name: mysql1009
      image: mysql:5.6
      ports:
        - name: tcp-3306
          containerPort: 3306
          protocol: TCP
      env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            configMapKeyRef:
              name: configmap1008
              key: MYSQL_ROOT_PASSWORD
        - name: MYSQL_DATABASE
          valueFrom:
            configMapKeyRef:
              name: configmap1008
              key: MYSQL_DATABASE
        - name: MYSQL_USER
          valueFrom:
            configMapKeyRef:
              name: configmap1008
              key: MYSQL_USER
        - name: MYSQL_PASSWORD
          valueFrom:
            configMapKeyRef:
              name: configmap1008
              key: MYSQL_PASSWORD
      resources:
        limits:
          cpu: 50m
          memory: 1Gi
        requests:
          cpu: 50m
          memory: 1Gi
      volumeMounts:
        - name: host-time
          readOnly: true
          mountPath: /etc/localtime
        - name: mysql1009-pvc
          mountPath: /var/lib/mysql
        - name: configmap1009
          mountPath: /etc/mysql/conf.d
          readOnly: true
      imagePullPolicy: IfNotPresent
  restartPolicy: Always
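Below is a Python sketch of how a configMap volume is laid out, under simplifying assumptions: the real kubelet writes the files atomically through a `..data` symlink. Each key becomes a file named after the key under `mountPath`. With `items`, only the listed keys are written, each to its given `path`. This has two consequences for the Pod above. First, mounting on /etc/mysql/conf.d hides any files the image ships in that directory. Second, MySQL's `!includedir` reads only `*.cnf` files on Unix, so `wangjinxiong.txt` is visible in the container but is not loaded by mysqld.

```python
import os
import tempfile
from typing import Dict, List, Optional


def project_configmap(data: Dict[str, str], mount_path: str,
                      items: Optional[List[Dict[str, str]]] = None) -> List[str]:
    """Write ConfigMap entries as files under mount_path, like a configMap volume.

    Without `items` every key becomes a file named after the key; with `items`
    only the listed keys are written, each to its relative `path`.
    """
    mapping = {i["key"]: i["path"] for i in items} if items else {k: k for k in data}
    written = []
    for key, rel_path in mapping.items():
        target = os.path.join(mount_path, rel_path)
        os.makedirs(os.path.dirname(target), exist_ok=True)
        with open(target, "w", encoding="utf-8") as fh:
            fh.write(data[key])
        written.append(target)
    return written


if __name__ == "__main__":
    configmap1009 = {"wangjinxiong.txt": "wangjinxiong=OK\n"}
    with tempfile.TemporaryDirectory() as root:
        conf_d = os.path.join(root, "etc", "mysql", "conf.d")
        for path in project_configmap(configmap1009, conf_d):
            with open(path, encoding="utf-8") as fh:
                print(os.path.relpath(path, root), "->", fh.read().strip())
```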

3.2.3 Configuration file example

Put the whole configuration in a single file and create the ConfigMap from it, for example: kubectl create configmap mysql-config --from-file=mysqld.cnf

ConfigMap:

# cat my.cnf

[mysqld]

pid-file = /var/run/mysqld/mysqld.pid

socket = /var/run/mysqld/mysqld.sock

datadir = /var/lib/mysql

secure-file-priv= NULL

# Disabling symbolic-links is recommended to prevent assorted security risks

symbolic-links=0

# Custom config should go here

!includedir /etc/mysql/conf.d/

default_authentication_plugin= mysql_native_password

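# kubectl create configmap mysql-config0117 --from-file=my.cnf -n data-project1

(The ConfigMap must be created in data-project1, the namespace of the StatefulSet below; otherwise the volume cannot find it.)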

pvc:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: mysql-pvc0117
  namespace: data-project1
  annotations:
    volume.beta.kubernetes.io/storage-provisioner: nfs-provisioner-01
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
  storageClassName: nfs-synology

A StatefulSet that uses the PVC and the ConfigMap:

kind: StatefulSet
apiVersion: apps/v1
metadata:
  name: mysql0117
  namespace: data-project1
  labels:
    app: mysql0117
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql0117
  template:
    metadata:
      labels:
        app: mysql0117
    spec:
      volumes:
        - name: host-time
          hostPath:
            path: /etc/localtime
            type: ''
        - name: volume0117
          persistentVolumeClaim:
            claimName: mysql-pvc0117
        - name: volumeconfigmap
          configMap:
            name: mysql-config0117
            items:
              - key: my.cnf
                path: my.cnf
      containers:
        - name: container0117
          image: 'mysql:8.0'
          ports:
            - name: tcp-3306
              containerPort: 3306
              protocol: TCP
            - name: tcp-33060
              containerPort: 33060
              protocol: TCP
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: gtland2021
          resources:
            limits:
              cpu: '1'
              memory: 4Gi
            requests:
              cpu: 500m
              memory: 1Gi
          volumeMounts:
            - name: host-time
              readOnly: true
              mountPath: /etc/localtime
            - name: volume0117
              mountPath: /var/lib/mysql
            - name: volumeconfigmap
              subPath: my.cnf
              mountPath: /etc/mysql/my.cnf
          imagePullPolicy: IfNotPresent
      nodeSelector:
        node: worker
  serviceName: mysql-svc0117

6 Volume

6.5 StorageClass

6.5.1 csi-driver-nfs

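Setting up the NFS server itself is covered elsewhere. The manifests below create the NFS provisioner (RBAC and Deployment) and the StorageClass: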

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-provisioner-01
  namespace: kube-system
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-provisioner-01
  template:
    metadata:
      labels:
        app: nfs-provisioner-01
    spec:
      serviceAccountName: nfs-client-provisioner
      nodeSelector:
        nfs-server: synology
      containers:
        - name: nfs-client-provisioner
          image: wangjinxiong/nfs-client-provisioner:latest
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: nfs-provisioner-01  # provisioner name, referenced by the StorageClass
            - name: NFS_SERVER
              value: 10.186.100.13  # NFS server address
            - name: NFS_PATH
              value: /volume2/EHR  # exported NFS directory
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.186.100.13  # NFS server address
            path: /volume2/EHR  # exported NFS directory
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-synology
provisioner: nfs-provisioner-01
# Supported policies: Delete, Retain; default is Delete
reclaimPolicy: Delete
#allowVolumeExpansion: true

Create a PVC:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc101902
  namespace: data-project1
  annotations:
    volume.beta.kubernetes.io/storage-provisioner: nfs-provisioner-01
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
  storageClassName: nfs-synology

Check:

[root@gz-kubesphere-master01 ~]# kubectl get pvc -n data-project1 | grep nfs-synology

pvc1019 Bound pvc-f25f7e0c-7276-493c-996f-1d19315d58a4 1Gi RWX nfs-synology 31m

pvc101902 Bound pvc-a58d9019-84bf-4026-84ff-d268f78df0f4 2Gi RWX nfs-synology 25m
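As far as I know, the upstream nfs-client-provisioner creates one directory per PV on the export, named `<namespace>-<pvcName>-<pvName>`. The wangjinxiong/nfs-client-provisioner image is assumed here to be built from that upstream. This small Python helper predicts where pvc101902 from the listing above should appear on the NFS server, which is handy when looking for a volume's data on the NAS.

```python
import posixpath


def nfs_subdir(nfs_path: str, namespace: str, pvc_name: str, pv_name: str) -> str:
    """Directory on the NFS export that backs a provisioned PV (assumed naming scheme)."""
    return posixpath.join(nfs_path, "-".join([namespace, pvc_name, pv_name]))


if __name__ == "__main__":
    # Values from the kubectl get pvc listing above and NFS_PATH in the Deployment
    print(nfs_subdir("/volume2/EHR", "data-project1", "pvc101902",
                     "pvc-a58d9019-84bf-4026-84ff-d268f78df0f4"))
```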

Note: for redundancy, the NFS provisioner can be deployed as a DaemonSet instead:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: nfs-provisioner-01
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: nfs-provisioner-01
  template:
    metadata:
      labels:
        app: nfs-provisioner-01
    spec:
      serviceAccountName: nfs-client-provisioner
      tolerations:
        - key: node-type
          operator: Equal
          value: master
          effect: NoSchedule
      containers:
        - name: nfs-client-provisioner
          image: wangjinxiong/nfs-client-provisioner:latest
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nfs-client-root
              mountPath: /k8s
          env:
            - name: PROVISIONER_NAME
              value: nfs-provisioner-01  # provisioner name, referenced by the StorageClass
            - name: NFS_SERVER
              value: 192.168.3.81  # NFS server address
            - name: NFS_PATH
              value: /nas/k8s  # exported NFS directory
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.3.81  # NFS server address
            path: /nas/k8s  # exported NFS directory
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-server
  # annotations:
  #   storageclass.beta.kubernetes.io/is-default-class: 'true'
provisioner: nfs-provisioner-01
# Supported policies: Delete, Retain; default is Delete
reclaimPolicy: Delete
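The DaemonSet above tolerates a custom taint `node-type=master:NoSchedule`. The simplified Python model of the scheduler's taint check below shows that this toleration does not cover the kubeadm default master taint `node-role.kubernetes.io/master:NoSchedule` from section 1.8. A master that carries the default taint therefore still rejects these Pods. The model ignores `tolerationSeconds` and the soft `PreferNoSchedule` effect.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Taint:
    key: str
    value: str
    effect: str  # NoSchedule, PreferNoSchedule or NoExecute


@dataclass
class Toleration:
    key: str = ""
    operator: str = "Equal"  # Equal (the default) or Exists
    value: str = ""
    effect: str = ""  # empty matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        return tol.key in ("", taint.key)  # empty key with Exists matches every taint
    return tol.key == taint.key and tol.value == taint.value


def can_schedule(tolerations: List[Toleration], taints: List[Taint]) -> bool:
    """True if every hard (NoSchedule/NoExecute) taint on the node is tolerated."""
    hard = [t for t in taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard)


if __name__ == "__main__":
    ds = [Toleration(key="node-type", operator="Equal", value="master", effect="NoSchedule")]
    print(can_schedule(ds, [Taint("node-type", "master", "NoSchedule")]))                 # True
    print(can_schedule(ds, [Taint("node-role.kubernetes.io/master", "", "NoSchedule")]))  # False
```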

6.5.2 csi-driver-smb

6.5.2.1 Install the SMB CSI driver

Install with Helm:

helm repo add csi-driver-smb https://raw.githubusercontent.com/kubernetes-csi/csi-driver-smb/master/charts

helm install csi-driver-smb csi-driver-smb/csi-driver-smb --namespace kube-system

Check that the pods are running:

kubectl -n kube-system get pod |grep csi-smb

csi-smb-controller-5fc49df54f-prrmw 3/3 Running 9 39h

csi-smb-controller-5fc49df54f-w68r4 3/3 Running 11 39h

csi-smb-node-d5w96 3/3 Running 9 39h

csi-smb-node-thxcf 3/3 Running 9 39h

csi-smb-node-tqd9x 3/3 Running 9 39h

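First create a Secret that stores the SMB username and password. (In this example the Samba server already exists; it can be a Windows shared folder or a Linux Samba service.)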

kubectl create secret generic smbcreds --from-literal username=rancher --from-literal password="rancher"

6.5.2.2 Create the StorageClass

Create the file storage-class.yaml:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: smb
provisioner: smb.csi.k8s.io
parameters:
  source: "//192.168.3.1/sda4"
  csi.storage.k8s.io/provisioner-secret-name: "smbcreds"
  csi.storage.k8s.io/provisioner-secret-namespace: "default"
  csi.storage.k8s.io/node-stage-secret-name: "smbcreds"
  csi.storage.k8s.io/node-stage-secret-namespace: "default"
  # createSubDir: "false"  # optional: create a sub dir for new volume
reclaimPolicy: Retain  # only retain is supported
volumeBindingMode: Immediate
mountOptions:
  - dir_mode=0777
  - file_mode=0777
  - uid=1001
  - gid=1001

csi.storage.k8s.io/provisioner-secret-name and csi.storage.k8s.io/node-stage-secret-name refer to the Secret created in the previous step.

Create the StorageClass:

kubectl create -f storage-class.yaml

6.5.2.3 Deploy an application

Create a Pod that mounts a Samba volume through a PVC:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: smb-pvc1019
  # annotations:
  #   volume.beta.kubernetes.io/storage-class: "smb.csi.k8s.io"
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
  storageClassName: "smb"
---
kind: Pod
apiVersion: v1
metadata:
  name: nginx1019
  labels:
    app: nginx1019
spec:
  volumes:
    - name: smb-pvc1019
      persistentVolumeClaim:
        claimName: smb-pvc1019
  containers:
    - name: nginx1019
      image: httpd
      ports:
        - name: tcp-80
          containerPort: 80
          protocol: TCP
      volumeMounts:
        - name: smb-pvc1019
          mountPath: /var/www/html
      imagePullPolicy: IfNotPresent
  restartPolicy: Always

Create the application (it is named nginx but runs the httpd image; the name does not matter):

kubectl apply -f smb-pod.yaml

Check the Pod status:

# kubectl get pod | grep nginx1019

nginx1019 1/1 Running 1 21h

Inside the container, /var/www/html is mounted from a per-volume subdirectory of the //192.168.3.1/sda4 Samba share:

# kubectl exec -it nginx1019 -- /bin/bash

root@nginx1019:/usr/local/apache2# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 195G 3.5G 192G 2% /

tmpfs 64M 0 64M 0% /dev

tmpfs 911M 0 911M 0% /sys/fs/cgroup

/dev/mapper/centos-root 195G 3.5G 192G 2% /etc/hosts

shm 64M 0 64M 0% /dev/shm

//192.168.3.1/sda4/pvc-dd932c8a-21bc-4c6f-b339-ea64a0918a6d 7.7G 5.0G 2.7G 66% /var/www/html

tmpfs 911M 12K 911M 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 911M 0 911M 0% /proc/acpi

tmpfs 911M 0 911M 0% /proc/scsi

tmpfs 911M 0 911M 0% /sys/firmware

References: https://github.com/kubernetes-csi/csi-driver-smb

Another personal blog: https://www.cnblogs.com/zerchin/p/14549849.html (its configuration contains some errors)

6.5.3 ceph-csi-rbd (Helm deployment)

(1) Add the repository

helm repo add ceph-csi https://ceph.github.io/csi-charts

(2) Search for the Ceph charts

# helm search repo ceph

NAME CHART VERSION APP VERSION DESCRIPTION

ceph-csi/ceph-csi-cephfs 3.4.0 v3.4.0 Container Storage Interface (CSI) driver, provi...

ceph-csi/ceph-csi-rbd 3.4.0 v3.4.0 Container Storage Interface (CSI) driver, provi...

(3) Download the RBD chart

helm pull ceph-csi/ceph-csi-rbd

(4) Extract it

tar zxvf ceph-csi-rbd-3.4.0.tgz

(5) Change the image addresses in the chart's values.yaml

sed -i "s/k8s.gcr.io\/sig-storage/wangjinxiong/g" ceph-csi-rbd/values.yaml

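(6) Set the Ceph cluster variables (clusterID is the Ceph cluster fsid, as printed by ceph fsid; monitors are the Ceph MON addresses)

cat <<EOF>>ceph-csi-rbd_values.yml
csiConfig:
  - clusterID: "319d2cce-b087-4de9-bd4a-13edc7644abc"
    monitors:
      - "192.168.3.61:6789"
      - "192.168.3.62:6789"
      - "192.168.3.63:6789"
EOF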

(7) Install the CSI driver

cd ceph-csi-rbd # enter the extracted chart directory

kubectl create namespace ceph-rbd

helm -n ceph-rbd install ceph-csi-rbd -f ../ceph-csi-rbd_values.yml .

Check the pods:

# kubectl get pod -n ceph-rbd

NAME READY STATUS RESTARTS AGE

ceph-csi-rbd-nodeplugin-9mhmc 3/3 Running 0 10m

ceph-csi-rbd-nodeplugin-rrncz 3/3 Running 0 10m

ceph-csi-rbd-provisioner-7ccf65559f-44mqp 7/7 Running 0 10m

ceph-csi-rbd-provisioner-7ccf65559f-f5qsw 7/7 Running 0 10m

ceph-csi-rbd-provisioner-7ccf65559f-mnx5k 0/7 Pending 0 10m

(8) Create the Ceph pool

ceph osd pool create kubernetes

rbd pool init kubernetes

Create a Ceph user:

ceph auth get-or-create \
    client.kube mon 'allow r' \
    osd 'allow class-read object_prefix rbd_children, allow rwx pool=kubernetes' \
    -o /etc/ceph/ceph.client.kube.keyring

Note: this example uses the admin user, so creating this user is optional.

(9) Create the Kubernetes Secret

cat <<EOF>>secret.yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: ceph-rbd
stringData:
  userID: admin
  userKey: AQABcKZfMUF9FBAAf9gcYUkS0KW/ptcOpHPWyA==
EOF

Create the StorageClass:

cat <<EOF>>storageClass.yaml
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: 319d2cce-b087-4de9-bd4a-13edc7644abc
  pool: kubernetes
  fsType: ext4
  imageFormat: "2"
  imageFeatures: "layering"
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: ceph-rbd
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: ceph-rbd
reclaimPolicy: Delete
mountOptions:
  - discard
EOF

Apply:

kubectl apply -f secret.yaml

kubectl apply -f storageClass.yaml
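The chart renders `csiConfig` into a JSON list, which I believe is stored in the `ceph-csi-config` ConfigMap by default. The driver looks up that list by `clusterID`, so the `clusterID` in the StorageClass must match an entry there. The Python sketch below performs that consistency check with this section's values, assuming that JSON format.

```python
import json
from typing import Dict, List


def render_csi_config(entries: List[Dict]) -> str:
    """Render csiConfig entries as the JSON list the driver reads (assumed format)."""
    return json.dumps(entries, indent=2)


def monitors_for(config_json: str, sc_parameters: Dict[str, str]) -> List[str]:
    """Return the monitors for the StorageClass's clusterID, or raise if it is unknown."""
    for entry in json.loads(config_json):
        if entry["clusterID"] == sc_parameters["clusterID"]:
            return entry["monitors"]
    raise ValueError(f"clusterID {sc_parameters['clusterID']} is not in csiConfig")


if __name__ == "__main__":
    config = render_csi_config([{
        "clusterID": "319d2cce-b087-4de9-bd4a-13edc7644abc",
        "monitors": ["192.168.3.61:6789", "192.168.3.62:6789", "192.168.3.63:6789"],
    }])
    storage_class_params = {"clusterID": "319d2cce-b087-4de9-bd4a-13edc7644abc",
                            "pool": "kubernetes"}
    print(monitors_for(config, storage_class_params))
```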

Create a test PVC:

cat <<EOF>>rbd-pvc1114.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: rbd-pvc1114
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF

kubectl apply -f rbd-pvc1114.yaml

Check:

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

rbd-pvc1114 Bound pvc-e91f7d67-b19e-48f0-822a-f9e01c32a25c 1Gi RWO csi-rbd-sc 26s

References: https://github.com/ceph/ceph-csi/tree/devel/charts/ceph-csi-rbd

https://www.qedev.com/cloud/316999.html

ceph-rbd: https://blog.csdn.net/s7799653/article/details/88303605

6.5.4 ceph-csi-cephfs (Helm deployment)

(1) Add the repository

helm repo add ceph-csi https://ceph.github.io/csi-charts

(2) Search for the Ceph charts

# helm search repo ceph

NAME CHART VERSION APP VERSION DESCRIPTION

ceph-csi/ceph-csi-cephfs 3.4.0 v3.4.0 Container Storage Interface (CSI) driver, provi...

ceph-csi/ceph-csi-rbd 3.4.0 v3.4.0 Container Storage Interface (CSI) driver, provi...

(3) Download the CephFS chart

helm pull ceph-csi/ceph-csi-cephfs

(4) Extract it

tar zxvf ceph-csi-cephfs-3.4.0.tgz

(5) Change the image addresses in values.yaml (keeping a backup copy)

cd ceph-csi-cephfs

cp -p values.yaml values.yaml.bak

sed -i "s/k8s.gcr.io\/sig-storage/registry.aliyuncs.com\/google_containers/g" values.yaml

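(6) Set the Ceph cluster variables

cat <<EOF>>ceph-csi-cephfs_values.yml
csiConfig:
  - clusterID: "319d2cce-b087-4de9-bd4a-13edc7644abc"
    monitors:
      - "192.168.3.61:6789"
      - "192.168.3.62:6789"
      - "192.168.3.63:6789"
EOF

(7) Install the CSI driver. The shell is still in the ceph-csi-cephfs directory from step (5), where the values file from step (6) was written, so no further cd is needed.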

kubectl create namespace ceph-cephfs

helm -n ceph-cephfs install ceph-csi-cephfs -f ceph-csi-cephfs_values.yml .

Check the pods:

# kubectl get pod -n ceph-cephfs

NAME READY STATUS RESTARTS AGE

ceph-csi-cephfs-nodeplugin-sbl6v 3/3 Running 0 3h51m

ceph-csi-cephfs-nodeplugin-vvf87 3/3 Running 0 3h51m

ceph-csi-cephfs-provisioner-7bdfdbfdc6-bdk4l 0/6 Pending 0 3h51m

ceph-csi-cephfs-provisioner-7bdfdbfdc6-vlvst 6/6 Running 0 3h51m

ceph-csi-cephfs-provisioner-7bdfdbfdc6-z9rc8 6/6 Running 0 3h51m

(8) The CephFS setup on the Ceph side is omitted here.

Check the CephFS status on the Ceph cluster:

# ceph mds stat

cephfs:1 {0=ceph3=up:active} 2 up:standby

Note: this example uses the admin user, so no dedicated Ceph user is created.

(9) Create the Kubernetes Secret

cat secret.yaml

---
apiVersion: v1
kind: Secret
metadata:
  name: csi-cephfs-secret
  namespace: ceph-cephfs
stringData:
  userID: admin
  userKey: AQABcKZfMUF9FBAAf9gcYUkS0KW/ptcOpHPWyA==
  adminID: admin
  adminKey: AQABcKZfMUF9FBAAf9gcYUkS0KW/ptcOpHPWyA==

Create the StorageClass:

# cat storageClass.yml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-cephfs-sc
provisioner: cephfs.csi.ceph.com
parameters:
  clusterID: 319d2cce-b087-4de9-bd4a-13edc7644abc
  fsName: cephfs
  csi.storage.k8s.io/provisioner-secret-name: csi-cephfs-secret
  csi.storage.k8s.io/provisioner-secret-namespace: ceph-cephfs
  csi.storage.k8s.io/controller-expand-secret-name: csi-cephfs-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: ceph-cephfs
  csi.storage.k8s.io/node-stage-secret-name: csi-cephfs-secret
  csi.storage.k8s.io/node-stage-secret-namespace: ceph-cephfs
reclaimPolicy: Delete
allowVolumeExpansion: true
mountOptions:
  - debug

Apply:

# kubectl apply -f secret.yaml

# kubectl apply -f storageClass.yml

Create a test PVC:

# cat cephfs-pvc0119.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: cephfs-pvc0119
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-cephfs-sc

# kubectl apply -f cephfs-pvc0119.yaml

Check:

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

cephfs-pvc0119 Bound pvc-970f7625-9783-4bee-ab4b-8bc1d3efb2cd 1Gi RWO csi-cephfs-sc 3h22m

Deployment references: https://github.com/ceph/ceph-csi/tree/devel/charts/ceph-csi-cephfs https://github.com/ceph/ceph-csi/tree/devel/examples/cephfs

This setup currently still has unresolved problems; see:

https://www.cnblogs.com/wsjhk/p/13710577.html

https://github.com/ceph/ceph-csi/blob/release-v3.1

6.5.5 cephfs-provisioner

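Install cephfs-provisioner

Kubernetes has no built-in CephFS provisioner, so a third-party one has to be installed. First, a quick look at its architecture.

It has two main parts:

- cephfs-provisioner.go: the core of cephfs-provisioner (the CephFS StorageClass provisioner). It watches CRUD events for PVC resources in Kubernetes and then calls the cephfs_provisioner.py script on the command line to create the PV.
- cephfs_provisioner.py: a Python command-line tool that talks to CephFS. cephfs-provisioner creates every CephFS-side volume through this script, which wraps volume create, delete, update and query operations.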

6.5.5.1 Install the plugin

git clone https://github.com/kubernetes-retired/external-storage.git

The cephfs deployment manifests in the cloned repository are:

clusterrole.yaml role.yaml clusterrolebinding.yaml deployment.yaml rolebinding.yaml serviceaccount.yaml

The manifests use the cephfs namespace by default:

# cat ./* | grep namespace

namespace: cephfs

namespace: cephfs

namespace: cephfs

namespace: cephfs

namespace: cephfs

namespace: cephfs

namespace: cephfs

Create the namespace and apply the manifests from that directory:

kubectl create namespace cephfs

kubectl apply -f .

Check that the installation succeeded:

# kubectl get pod -n cephfs

NAME READY STATUS RESTARTS AGE

cephfs-provisioner-56f846d54b-6cd77 1/1 Running 0 71m

6.5.5.2 Create the StorageClass

Get the Ceph admin key base64-encoded and put it into ceph-admin-secret.yaml:

ceph auth get-key client.admin | base64

QVFBQmNLWmZNVUY5RkJBQWY5Z2NZVWtTMEtXL3B0Y09wSFBXeUE9PQ==

Create ceph-admin-secret.yaml:

apiVersion: v1
kind: Secret
metadata:
  name: ceph-admin-secret
  namespace: cephfs
data:
  key: "QVFBQmNLWmZNVUY5RkJBQWY5Z2NZVWtTMEtXL3B0Y09wSFBXeUE9PQ=="
type: kubernetes.io/rbd
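This Secret uses `data`, so the key has to be base64-encoded first. The ceph-csi Secrets in 6.5.3 and 6.5.4 instead use `stringData` with the raw key. On write, the API server merges `stringData` into `data`, encoding each value and overriding any key present in both, so the two forms end up stored identically. Below is a small Python model of that merge. The assumption is that this Secret could equally be written with `stringData` and the raw key.

```python
import base64
from typing import Dict, Optional


def stored_data(data: Optional[Dict[str, str]] = None,
                string_data: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Model of how a Secret is stored: stringData values are base64-encoded
    and merged into data, overriding keys that appear in both."""
    merged = dict(data or {})
    for key, raw in (string_data or {}).items():
        merged[key] = base64.b64encode(raw.encode("utf-8")).decode("ascii")
    return merged


if __name__ == "__main__":
    raw_key = "AQABcKZfMUF9FBAAf9gcYUkS0KW/ptcOpHPWyA=="  # ceph auth get-key client.admin
    encoded = base64.b64encode(raw_key.encode("utf-8")).decode("ascii")  # what | base64 produces
    print(stored_data(data={"key": encoded}) == stored_data(string_data={"key": raw_key}))  # True
```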

Create StorageClass.yaml:

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: cephfs
provisioner: ceph.com/cephfs
parameters:
  monitors: 192.168.3.61:6789,192.168.3.62:6789,192.168.3.63:6789
  adminId: admin
  adminSecretNamespace: "cephfs"
  adminSecretName: ceph-admin-secret
reclaimPolicy: Delete

Apply:

kubectl apply -f ceph-admin-secret.yaml -f StorageClass.yaml

Check the StorageClass:

# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

cephfs ceph.com/cephfs Delete Immediate false 72m

6.5.5.3 Use cephfs-provisioner from a PVC

Create pvc.yaml:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: cephfs-pvc1120
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
  storageClassName: "cephfs"

Check the PVC:

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

cephfs-pvc1120 Bound pvc-de119af9-2f2b-4a26-b085-86c0aab3494e 2Gi RWO cephfs 71m

6.5.6 local-volume-provisioner

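Kubernetes has supported local volumes since version 1.10. Workloads (not only StatefulSets) can make full use of fast local SSDs and get better performance than with remote volumes such as CephFS or RBD.

Before local volumes existed, StatefulSets could also use local SSDs by configuring hostPath and pinning Pods to a specific node with nodeSelector or nodeAffinity. The problem with hostPath is that administrators have to manage the directories on every node by hand, which is inconvenient.

Two kinds of applications are well suited to local volumes:

- Data caches, where the application accesses data close by and processes it quickly.
- Distributed storage systems, such as the distributed database Cassandra or the distributed file systems Ceph and Gluster.

Local volumes can be used by creating PVs, PVCs and Pods by hand, or semi-automatically with the provisioner from external storage; this section uses the semi-automatic approach.

This walkthrough installs with Helm; for other methods see the official documentation. Official Helm instructions: https://github.com/kubernetes-sigs/sig-storage-local-static-provisioner/tree/master/helm, project: https://github.com/kubernetes-sigs/sig-storage-local-static-provisioner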

6.5.6.1 Download the source

(The cloned directory has also been archived as a tar.gz and uploaded to the NAS.)

git clone --depth=1 https://github.com/kubernetes-sigs/sig-storage-local-static-provisioner.git

Enter sig-storage-local-static-provisioner and change the image address in helm/provisioner/values.yaml to:

googleimages/local-volume-provisioner:v2.4.0

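Note: k8s.gcr.io is replaced with googleimages; alternatively use the copy already pushed to Docker Hub, wangjinxiong/local-volume-provisioner:v2.4.0.

In helm/examples/gke.yaml the default class is named local-scsi, with discovery directory (hostDir) /mnt/disks: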

[root@k8s-master01 sig-storage-local-static-provisioner]# cat helm/examples/gke.yaml

common:
  useNodeNameOnly: true
classes:
  - name: local-scsi
    hostDir: "/mnt/disks"
    storageClass: true

Generic Helm install command:

helm install -f <path-to-your-values-file> <release-name> --namespace <namespace> ./helm/provisioner

6.5.6.2 Install with Helm

cd sig-storage-local-static-provisioner

helm install -f helm/examples/gke.yaml local-volume-provisioner --namespace kube-system ./helm/provisioner

Check the pods, the DaemonSet and the StorageClasses:

[root@k8s-master01 sig-storage-local-static-provisioner]# kubectl get pod -n kube-system | grep local

local-volume-provisioner-5tcx5 1/1 Running 1 10h

local-volume-provisioner-lsd7d 1/1 Running 1 10h

local-volume-provisioner-ltwxr 1/1 Running 1 10h

[root@k8s-master01 sig-storage-local-static-provisioner]# kubectl get ds -n kube-system | grep local

local-volume-provisioner 3 3 3 3 3 kubernetes.io/os=linux 10h

local-volume-provisioner-win 0 0 0 0 0 kubernetes.io/os=windows 10h

[root@k8s-master01 sig-storage-local-static-provisioner]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

cephfs ceph.com/cephfs Delete Immediate false 42d

csi-rbd-sc rbd.csi.ceph.com Delete Immediate false 48d

local-scsi kubernetes.io/no-provisioner Delete WaitForFirstConsumer false 10h

nfs-server (default) nfs-provisioner-01 Delete Immediate false 14d

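6.5.6.3 Mount disks

The provisioner does not supply local volumes itself. Instead, the provisioner on each node dynamically discovers mount points in the discovery directory. When the provisioner on a node finds a mount point under /mnt/disks (the hostDir configured in gke.yaml), it creates a PV whose local.path is that mount point and whose nodeAffinity is set to that node.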

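If attaching real disks is inconvenient or there are none, bind mounts work as well. Run the following script on every node (it uses /mnt/disks, the hostDir from gke.yaml, matching the directory listing below):

#!/bin/bash
for i in $(seq 1 5); do
  mkdir -p /mnt/disks-bind/vol${i}
  mkdir -p /mnt/disks/vol${i}
  mount --bind /mnt/disks-bind/vol${i} /mnt/disks/vol${i}
done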

Check the directories:

[root@k8s-master01 mnt]# ls -ls

total 0

0 drwxr-xr-x 7 root root 66 Jan  1 22:24 disks

0 drwxr-xr-x 7 root root 66 Jan  1 22:24 disks-bind

[root@k8s-master01 mnt]# ls disks

vol1 vol2 vol3 vol4 vol5

[root@k8s-master01 mnt]# ls disks-bind/

vol1 vol2 vol3 vol4 vol5

[root@k8s-master01 mnt]#

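After running the script, wait a moment and list the PVs: the local PVs have been created automatically (5 per node on 3 nodes, 15 in total). Each reports 194Gi, apparently the capacity of the node filesystem that the bind-mounted directories live on. One of them is already Bound below only because these notes were written up later; normally they are all Available.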

[root@k8s-master01 mnt]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

local-pv-146f6f49 194Gi RWO Delete Available local-scsi 10h

local-pv-204215d7 194Gi RWO Delete Available local-scsi 10h

local-pv-304039a2 194Gi RWO Delete Available local-scsi 10h

local-pv-51a85a66 194Gi RWO Delete Available local-scsi 10h

local-pv-5e9db7ce 194Gi RWO Delete Available local-scsi 10h

local-pv-5f118901 194Gi RWO Delete Available local-scsi 10h

local-pv-6faed31c 194Gi RWO Delete Bound default/local-vol-web-0 local-scsi 10h

local-pv-98f553c1 194Gi RWO Delete Available local-scsi 10h

local-pv-b01c5d8f 194Gi RWO Delete Available local-scsi 10h

local-pv-c2117cdf 194Gi RWO Delete Available local-scsi 10h

local-pv-c934dbdd 194Gi RWO Delete Available local-scsi 10h

local-pv-d20a3b6 194Gi RWO Delete Available local-scsi 10h

local-pv-d2e2cbdc 194Gi RWO Delete Available local-scsi 10h

local-pv-d3e79a2c 194Gi RWO Delete Available local-scsi 10h

local-pv-e9a54db 194Gi RWO Delete Available local-scsi 9h

pvc-054f3494-c65d-499f-ab33-5a47cc6be764 10Gi RWX Delete Bound demo/demo-rabbitmq-pvc nfs-server 13d

pvc-67642ba8-5716-47d0-8c9a-2ac744cc43c6 1Gi RWX Delete Bound demo/demo1218 cephfs 14d

pvc-7fc0a4aa-12aa-44d7-9dc9-eedec3ff5677 2Gi RWO Delete Bound default/cephfs-pvc1120 cephfs 42d

pvc-840ac596-f5e2-4e15-8246-0d33857b3a77 10Gi RWX Delete Bound demo/demo-redis-pvc nfs-server 13d

pvc-8e1f7a47-3207-46cc-8328-5c11b7aabac3 100Gi RWX Delete Bound demo/demo-mysql-pvc nfs-server 13d

pvc-d3372ebb-6d64-42da-89a8-65b6ca14f759 1Gi RWX Delete Bound demo/demo1218-2 nfs-server 14d

pvc-dee11f5f-3024-4b0f-b7a8-b529e4e723be 2Gi RWO Delete Bound default/cephfs-pvc1121 cephfs 42d

pvc-e91f7d67-b19e-48f0-822a-f9e01c32a25c 1Gi RWO Delete Bound default/rbd-pvc1114 csi-rbd-sc 48d

Write a StatefulSet that uses the local volume:

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx-svc"
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: local-vol
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: local-vol
      spec:
        storageClassName: local-scsi
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 1Gi

The PVC is now bound:

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

cephfs-pvc1120 Bound pvc-7fc0a4aa-12aa-44d7-9dc9-eedec3ff5677 2Gi RWO cephfs 42d

cephfs-pvc1121 Bound pvc-dee11f5f-3024-4b0f-b7a8-b529e4e723be 2Gi RWO cephfs 42d

local-vol-web-0 Bound local-pv-6faed31c 194Gi RWO local-scsi 10h

rbd-pvc1114 Bound pvc-e91f7d67-b19e-48f0-822a-f9e01c32a25c 1Gi RWO csi-rbd-sc 48d
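The 1Gi claim above bound to a 194Gi local PV. A claim binds to a PV whose capacity is at least the request, preferring the smallest one that fits, and the whole local volume is then dedicated to that claim. With WaitForFirstConsumer, the choice is made only after the Pod is scheduled, among PVs on that node. The Python sketch below models that selection in simplified form; the real binder also checks selectors, volume mode and more. The claim name follows the StatefulSet pattern `<template>-<statefulset>-<ordinal>`, and the PV list in the usage is hypothetical.

```python
import re
from typing import Dict, List, Optional

# Binary (Ki, Mi, Gi, Ti) and decimal (k, M, G, T) suffixes used in storage quantities
_SUFFIX = {"": 1, "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
           "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Parse an integer storage quantity such as '1Gi' or '194Gi'."""
    m = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti|k|M|G|T)?", quantity)
    if m is None:
        raise ValueError(f"unsupported quantity: {quantity}")
    return int(m.group(1)) * _SUFFIX[m.group(2) or ""]


def claim_name(template: str, statefulset: str, ordinal: int) -> str:
    """Name of the PVC a StatefulSet creates from a volumeClaimTemplate."""
    return f"{template}-{statefulset}-{ordinal}"


def pick_pv(pvs: List[Dict], request: str, storage_class: str,
            access_mode: str, node: str) -> Optional[str]:
    """Smallest Available PV on `node` with matching class/mode and enough capacity."""
    need = to_bytes(request)
    fits = [pv for pv in pvs
            if pv["status"] == "Available"
            and pv["storageClass"] == storage_class
            and access_mode in pv["accessModes"]
            and pv["node"] == node
            and to_bytes(pv["capacity"]) >= need]
    if not fits:
        return None
    return min(fits, key=lambda pv: to_bytes(pv["capacity"]))["name"]


if __name__ == "__main__":
    # Hypothetical PVs on one node
    pvs = [
        {"name": "local-pv-a", "capacity": "194Gi", "storageClass": "local-scsi",
         "accessModes": ["ReadWriteOnce"], "status": "Available", "node": "k8s-node01"},
        {"name": "local-pv-b", "capacity": "194Gi", "storageClass": "local-scsi",
         "accessModes": ["ReadWriteOnce"], "status": "Bound", "node": "k8s-node01"},
    ]
    print(claim_name("local-vol", "web", 0), "->",
          pick_pv(pvs, "1Gi", "local-scsi", "ReadWriteOnce", "k8s-node01"))
```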

Other blog references: https://www.jianshu.com/p/436945a25e9f

https://blog.csdn.net/weixin_42758299/article/details/119102461

https://blog.csdn.net/cpongo1/article/details/89549139

https://www.freesion.com/article/5811953555/

6.5.7 Dynamic Local PV provisioning with OpenEBS