PyTorch in Practice 4: Reproducing GoogLeNet-v1 with PyTorch

Reproducing GoogLeNet-v1 with PyTorch

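Preface

I have recently been studying the classic convolutional network architectures and decided to try reproducing them myself. This series draws on many articles; I no longer remember where some of them came from, so I have not linked them. I hope you understand.

Code for training on real data will be added later.

The complete code is at the end.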

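Prerequisites for this series

Python basics, the principles of CNNs, and PyTorch basics

Goals of this series

First, to help me consolidate what I have learned;

Second, implementing things myself exposes many gaps in my earlier understanding;

Third, I hope it can serve as a reference for others.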

Table of contents

- Reproducing GoogLeNet-v1 with PyTorch

- 1. Introduction to the Inception-v1 module:

- 2. Introduction to GoogLeNet:

- 3. Building the model:

- 4. Summary:

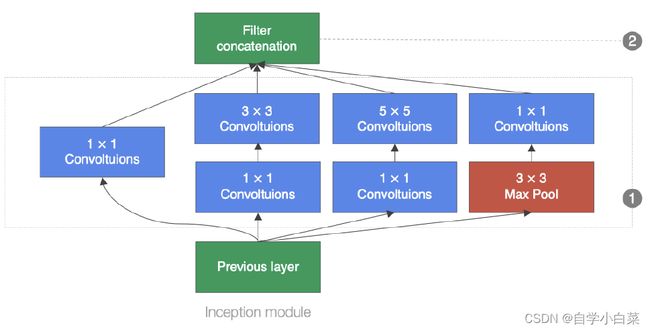

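1. Introduction to the Inception-v1 module:

GoogLeNet is built from Inception modules, so we start with the Inception-v1 module. It runs four parallel branches on the same input (a 1x1 convolution; a 1x1 convolution followed by a 3x3 convolution; a 1x1 convolution followed by a 5x5 convolution; and 3x3 max pooling followed by a 1x1 convolution) and concatenates their outputs along the channel dimension: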

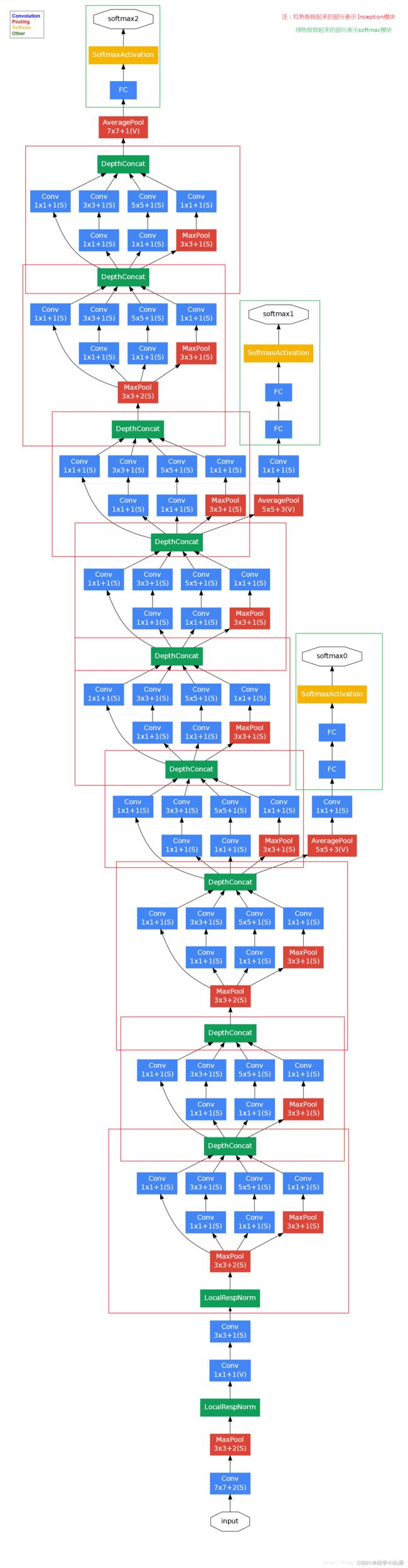
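
To see why the 3x3 and 5x5 branches first pass through a 1x1 convolution, it helps to count weights. The sketch below is only an illustration: `conv_weights` is a hypothetical helper (not part of this article's code), biases and BN parameters are ignored, and the channel numbers (192 in, 16 after reduction, 32 out) are those of the first Inception module in the model built later in this article.

```python
def conv_weights(in_channels, out_channels, kernel_size):
    """Number of weights in a square 2-D convolution (bias ignored)."""
    return in_channels * out_channels * kernel_size * kernel_size


in_planes, ch5x5red, ch5x5 = 192, 16, 32

# 5x5 convolution applied directly to the 192-channel input
direct = conv_weights(in_planes, ch5x5, 5)

# 1x1 reduction to 16 channels, followed by the 5x5 convolution
reduced = conv_weights(in_planes, ch5x5red, 1) + conv_weights(ch5x5red, ch5x5, 5)

print(direct)   # 153600
print(reduced)  # 15872
```

With these numbers the reduced branch needs roughly a tenth of the weights of the direct 5x5 convolution.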

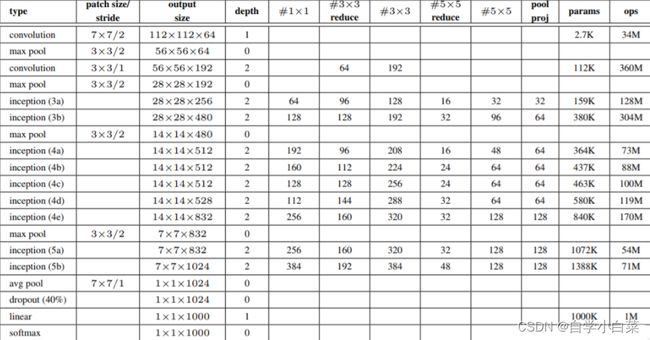

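2. Introduction to GoogLeNet:

GoogLeNet architecture:

The side branches in the figure above are auxiliary classifiers, which add extra loss terms at intermediate layers during training; they are not implemented here.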

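3. Building the model:

Reference: the official implementation.

First, as the figure shows, every convolutional layer is followed by a BN layer (and a ReLU activation), so we wrap them in a single class that can be called directly later: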

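    import torch
    from torch import nn
    from torch.nn import functional as F

    # Conv2d + BatchNorm + ReLU block
    class Basic_conv2d(nn.Module):
        def __init__(self, in_channels, out_channels, **kwargs):
            super(Basic_conv2d, self).__init__()
            # kwargs passes kernel_size, stride and padding through to nn.Conv2d
            self.conv = nn.Conv2d(in_channels, out_channels, **kwargs)
            self.bn = nn.BatchNorm2d(out_channels, eps=0.001)

        def forward(self, x):
            x = self.conv(x)
            x = self.bn(x)
            return F.relu(x)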

Next, we implement the Inception module (see the comments for details; leave a comment if you have questions):

    # Inception module
    class My_Inception_V1(nn.Module):
        def __init__(self, in_planes, ch1x1, ch3x3red, ch3x3, ch5x5red, ch5x5, pool_planes):
            '''
            :param in_planes: number of input channels, the same for every branch
            :param ch1x1: number of 1x1 kernels in the 1x1 branch
            :param ch3x3red: number of 1x1 kernels in front of the 3x3 convolution
            :param ch3x3: number of 3x3 kernels
            :param ch5x5red: number of 1x1 kernels in front of the 5x5 convolution
            :param ch5x5: number of 5x5 kernels
            :param pool_planes: number of 1x1 kernels after the pooling layer
            '''
            super(My_Inception_V1, self).__init__()
            # 1x1 branch
            self.b1_1x1 = Basic_conv2d(in_planes, ch1x1, kernel_size=1)
            # 1x1 -> 3x3 branch
            self.b2_1x1 = Basic_conv2d(in_planes, ch3x3red, kernel_size=1)
            self.b2_3x3 = Basic_conv2d(ch3x3red, ch3x3, kernel_size=3, padding=1)
            # 1x1 -> 5x5 branch (padding=2 keeps the spatial size; padding=1 would shrink it by 2)
            self.b3_1x1 = Basic_conv2d(in_planes, ch5x5red, kernel_size=1)
            self.b3_5x5 = Basic_conv2d(ch5x5red, ch5x5, kernel_size=5, padding=2)
            # pool -> 1x1 branch
            self.b4_pool = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)
            self.b4_1x1 = Basic_conv2d(in_planes, pool_planes, kernel_size=1)

        def forward(self, x):
            # outputs of the four branches
            y1 = self.b1_1x1(x)
            y2 = self.b2_3x3(self.b2_1x1(x))
            y3 = self.b3_5x5(self.b3_1x1(x))
            y4 = self.b4_1x1(self.b4_pool(x))
            return torch.cat([y1, y2, y3, y4], 1)  # concatenate along the channel dimension (dim=1)
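
`torch.cat` along dimension 1 only works if the four branch outputs have the same height and width, so every branch has to preserve the spatial size of its input. Below is a minimal sketch of the standard output-size formula for a convolution or pooling layer, assuming dilation 1 and floor rounding (PyTorch's default). `out_size` is a hypothetical helper, not part of this article's code, and 28 is just an example input size.

```python
def out_size(n, kernel_size, stride=1, padding=0):
    """Output height/width of a conv or pooling layer (dilation 1, floor mode)."""
    return (n + 2 * padding - kernel_size) // stride + 1


n = 28  # example feature-map height/width

print(out_size(n, kernel_size=1))             # 28  (1x1 conv)
print(out_size(n, kernel_size=3, padding=1))  # 28  (3x3 conv, or 3x3 max pool with stride 1)
print(out_size(n, kernel_size=5, padding=2))  # 28  (5x5 conv)
print(out_size(n, kernel_size=5, padding=1))  # 26  (too little padding, cannot be concatenated)
```

For stride 1 and an odd kernel size k, a padding of (k - 1) / 2 leaves the size unchanged, which is why the 3x3 layers use padding 1 and the 5x5 layer needs padding 2.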

Finally, we define the GoogLeNet model:

    # GoogLeNet model
    class My_GoogLeNet(nn.Module):
        def __init__(self):
            super(My_GoogLeNet, self).__init__()
            self.model = nn.Sequential(
                # stem layers, defined according to the architecture figure above
                Basic_conv2d(in_channels=3, out_channels=64, kernel_size=7, stride=2, padding=3),
                nn.MaxPool2d(kernel_size=3, stride=2),
                Basic_conv2d(64, 64, kernel_size=1),
                Basic_conv2d(64, 192, kernel_size=3, padding=1),
                # max pooling before the first Inception module, as in the GoogLeNet architecture table
                nn.MaxPool2d(kernel_size=3, stride=2),
                # stacked Inception modules
                My_Inception_V1(192, 64, 96, 128, 16, 32, 32),
                My_Inception_V1(256, 128, 128, 192, 32, 96, 64),  # 256 = 64+128+32+32
                nn.MaxPool2d(kernel_size=3, stride=2),
                My_Inception_V1(480, 192, 96, 208, 16, 48, 64),  # 480 = 128+192+96+64
                My_Inception_V1(512, 160, 112, 224, 24, 64, 64),  # 512 = 192+208+48+64
                My_Inception_V1(512, 128, 128, 256, 24, 64, 64),  # 512 = 160+224+64+64
                My_Inception_V1(512, 112, 144, 288, 32, 64, 64),  # 512 = 128+256+64+64
                My_Inception_V1(528, 256, 160, 320, 32, 128, 128),  # 528 = 112+288+64+64
                nn.MaxPool2d(2, stride=2),
                My_Inception_V1(832, 256, 160, 320, 32, 128, 128),  # 832 = 256+320+128+128
                My_Inception_V1(832, 384, 192, 384, 48, 128, 128),
                # classification head
                nn.AdaptiveAvgPool2d((1, 1)),
                nn.Flatten(),  # (N, 1024, 1, 1) -> (N, 1024); nn.Linear acts on the last dimension
                nn.Dropout(0.5),
                nn.Linear(1024, 1000),  # 1024 = 384+384+128+128
            )

        # forward pass
        def forward(self, x):
            result = self.model(x)
            return result
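
The `in_planes` of each Inception module must equal the number of channels produced by the previous one, that is, the sum `ch1x1 + ch3x3 + ch5x5 + pool_planes` (max pooling does not change the channel count). One way to double-check the argument table is a few lines of plain Python using the same tuples as in the model above. The `configs` list and the `inception_out` helper are hypothetical names introduced only for this check.

```python
# (in_planes, ch1x1, ch3x3red, ch3x3, ch5x5red, ch5x5, pool_planes)
configs = [
    (192, 64, 96, 128, 16, 32, 32),
    (256, 128, 128, 192, 32, 96, 64),
    (480, 192, 96, 208, 16, 48, 64),
    (512, 160, 112, 224, 24, 64, 64),
    (512, 128, 128, 256, 24, 64, 64),
    (512, 112, 144, 288, 32, 64, 64),
    (528, 256, 160, 320, 32, 128, 128),
    (832, 256, 160, 320, 32, 128, 128),
    (832, 384, 192, 384, 48, 128, 128),
]


def inception_out(cfg):
    """Channels after concatenating the four branches."""
    _, ch1x1, _, ch3x3, _, ch5x5, pool_planes = cfg
    return ch1x1 + ch3x3 + ch5x5 + pool_planes


outs = [inception_out(cfg) for cfg in configs]

# every module's input width must match the previous module's output width
for prev_out, cfg in zip(outs, configs[1:]):
    assert prev_out == cfg[0], (prev_out, cfg)

print(outs)  # [256, 480, 512, 512, 512, 528, 832, 832, 1024]
```

The last value, 1024, is the input size of the final linear layer.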

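4. Summary:

Implementing GoogLeNet is neither trivial nor especially hard. The key is to implement the Inception module first and then assemble GoogLeNet from it, keeping a close eye on the architecture figure throughout. That way, whether you write your own version or read someone else's, the code is quick to follow.

Complete code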

    # author: baiCai
    # imports
    import torch
    from torch import nn
    from torch.nn import functional as F

    # Conv2d + BatchNorm + ReLU block
    class Basic_conv2d(nn.Module):
        def __init__(self, in_channels, out_channels, **kwargs):
            super(Basic_conv2d, self).__init__()
            # kwargs passes kernel_size, stride and padding through to nn.Conv2d
            self.conv = nn.Conv2d(in_channels, out_channels, **kwargs)
            self.bn = nn.BatchNorm2d(out_channels, eps=0.001)

        def forward(self, x):
            x = self.conv(x)
            x = self.bn(x)
            return F.relu(x)

    # Inception module
    class My_Inception_V1(nn.Module):
        def __init__(self, in_planes, ch1x1, ch3x3red, ch3x3, ch5x5red, ch5x5, pool_planes):
            '''
            :param in_planes: number of input channels, the same for every branch
            :param ch1x1: number of 1x1 kernels in the 1x1 branch
            :param ch3x3red: number of 1x1 kernels in front of the 3x3 convolution
            :param ch3x3: number of 3x3 kernels
            :param ch5x5red: number of 1x1 kernels in front of the 5x5 convolution
            :param ch5x5: number of 5x5 kernels
            :param pool_planes: number of 1x1 kernels after the pooling layer
            '''
            super(My_Inception_V1, self).__init__()
            # 1x1 branch
            self.b1_1x1 = Basic_conv2d(in_planes, ch1x1, kernel_size=1)
            # 1x1 -> 3x3 branch
            self.b2_1x1 = Basic_conv2d(in_planes, ch3x3red, kernel_size=1)
            self.b2_3x3 = Basic_conv2d(ch3x3red, ch3x3, kernel_size=3, padding=1)
            # 1x1 -> 5x5 branch (padding=2 keeps the spatial size; padding=1 would shrink it by 2)
            self.b3_1x1 = Basic_conv2d(in_planes, ch5x5red, kernel_size=1)
            self.b3_5x5 = Basic_conv2d(ch5x5red, ch5x5, kernel_size=5, padding=2)
            # pool -> 1x1 branch
            self.b4_pool = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)
            self.b4_1x1 = Basic_conv2d(in_planes, pool_planes, kernel_size=1)

        def forward(self, x):
            # outputs of the four branches
            y1 = self.b1_1x1(x)
            y2 = self.b2_3x3(self.b2_1x1(x))
            y3 = self.b3_5x5(self.b3_1x1(x))
            y4 = self.b4_1x1(self.b4_pool(x))
            return torch.cat([y1, y2, y3, y4], 1)  # concatenate along the channel dimension (dim=1)

    # GoogLeNet model
    class My_GoogLeNet(nn.Module):
        def __init__(self):
            super(My_GoogLeNet, self).__init__()
            self.model = nn.Sequential(
                # stem layers, defined according to the architecture figure above
                Basic_conv2d(in_channels=3, out_channels=64, kernel_size=7, stride=2, padding=3),
                nn.MaxPool2d(kernel_size=3, stride=2),
                Basic_conv2d(64, 64, kernel_size=1),
                Basic_conv2d(64, 192, kernel_size=3, padding=1),
                # max pooling before the first Inception module, as in the GoogLeNet architecture table
                nn.MaxPool2d(kernel_size=3, stride=2),
                # stacked Inception modules
                My_Inception_V1(192, 64, 96, 128, 16, 32, 32),
                My_Inception_V1(256, 128, 128, 192, 32, 96, 64),  # 256 = 64+128+32+32
                nn.MaxPool2d(kernel_size=3, stride=2),
                My_Inception_V1(480, 192, 96, 208, 16, 48, 64),  # 480 = 128+192+96+64
                My_Inception_V1(512, 160, 112, 224, 24, 64, 64),  # 512 = 192+208+48+64
                My_Inception_V1(512, 128, 128, 256, 24, 64, 64),  # 512 = 160+224+64+64
                My_Inception_V1(512, 112, 144, 288, 32, 64, 64),  # 512 = 128+256+64+64
                My_Inception_V1(528, 256, 160, 320, 32, 128, 128),  # 528 = 112+288+64+64
                nn.MaxPool2d(2, stride=2),
                My_Inception_V1(832, 256, 160, 320, 32, 128, 128),  # 832 = 256+320+128+128
                My_Inception_V1(832, 384, 192, 384, 48, 128, 128),
                # classification head
                nn.AdaptiveAvgPool2d((1, 1)),
                nn.Flatten(),  # (N, 1024, 1, 1) -> (N, 1024); nn.Linear acts on the last dimension
                nn.Dropout(0.5),
                nn.Linear(1024, 1000),  # 1024 = 384+384+128+128
            )

        # forward pass
        def forward(self, x):
            result = self.model(x)
            return result