Kubernetes Basic Commands

Contents

I. Namespaces

II. Pod

III. Deployment

IV. Service

V. Ingress

VI. Storage Abstraction

PV & PVC

ConfigMap

Secret

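I. Namespaces

Namespaces isolate resources, but they do not isolate the network: by default, Pods in different namespaces can still reach each other.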

```shell
# Create a namespace
kubectl create ns test

# Delete it
kubectl delete ns test

# Create it from a YAML file
[root@k8s-master ~]# vi test.yaml
[root@k8s-master ~]# cat test.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: test
[root@k8s-master ~]# kubectl apply -f test.yaml
Warning: resource namespaces/test is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
namespace/test configured
[root@k8s-master ~]# kubectl get ns -A
NAME                   STATUS   AGE
default                Active   156m
kube-node-lease        Active   156m
kube-public            Active   156m
kube-system            Active   156m
kubernetes-dashboard   Active   132m
test                   Active   2m23s
```

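`kubectl create` can only create a resource and fails if it already exists, whereas `kubectl apply` creates the resource or updates it if it already exists. The warning above appears because the `test` namespace had been created with `kubectl create` and therefore lacked the annotation that `kubectl apply` relies on; kubectl adds it automatically.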
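To make the difference concrete, here is a toy in-memory model of the two semantics. It is only a sketch, not how the API server or kubectl work internally: the `ResourceStore` class and its methods are hypothetical, and the real `apply` computes a three-way merge instead of simply replacing the object.

```python
class AlreadyExistsError(Exception):
    """Raised when creating an object whose name is already taken."""


class ResourceStore:
    """Hypothetical in-memory stand-in for the API server, keyed by (kind, name)."""

    def __init__(self):
        self.objects = {}

    def create(self, kind, name, spec):
        # Like `kubectl create`: refuse to overwrite an existing object.
        key = (kind, name)
        if key in self.objects:
            raise AlreadyExistsError(f'{kind} "{name}" already exists')
        self.objects[key] = dict(spec)
        return "created"

    def apply(self, kind, name, spec):
        # Like `kubectl apply`: create the object, or update it if it exists.
        # (Real apply computes a three-way merge; this sketch simply replaces it.)
        key = (kind, name)
        result = "configured" if key in self.objects else "created"
        self.objects[key] = dict(spec)
        return result


store = ResourceStore()
print(store.create("Namespace", "test", {}))                     # created
print(store.apply("Namespace", "test", {"labels": {"a": "b"}}))  # configured
try:
    store.create("Namespace", "test", {})
except AlreadyExistsError as err:
    print("error:", err)  # error: Namespace "test" already exists
```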

II. Pod

A Pod is the smallest deployable unit in Kubernetes: a group of one or more containers that run together.

A simple example

```shell
# Start a Pod
[root@k8s-master ~]# kubectl run mynginx --image=nginx
pod/mynginx created

# Check the Pod's status
[root@k8s-master ~]# kubectl get pod mynginx -owide
NAME      READY   STATUS    RESTARTS   AGE   IP                NODE        NOMINATED NODE   READINESS GATES
mynginx   1/1     Running   0          28s   192.168.169.130   k8s-node2   <none>           <none>

# Open a shell inside the Pod
[root@k8s-master ~]# kubectl exec -it mynginx -- /bin/bash
root@mynginx:/# cd /usr/share/nginx/html/
root@mynginx:/usr/share/nginx/html# echo "hello......" > index.html
root@mynginx:/usr/share/nginx/html# exit
exit
[root@k8s-master ~]# curl 192.168.169.130
hello......
```

```shell
# Show detailed information about the Pod
[root@k8s-master ~]# kubectl describe pod mynginx
Name:         mynginx
Namespace:    default
Priority:     0
Node:         k8s-node2/172.31.0.3
Start Time:   Thu, 21 Apr 2022 18:48:59 +0800
Labels:       run=mynginx
Annotations:  cni.projectcalico.org/podIP: 192.168.169.130/32
Status:       Running
IP:           192.168.169.130
IPs:
  IP:  192.168.169.130
Containers:
  mynginx:
    Container ID:   docker://cf72dfb1b9f26864eda93e3eb0757977309310efa2e76f5233d078cd466b8447
    Image:          nginx
    Image ID:       docker-pullable://nginx@sha256:6d701d83f2a1bb99efb7e6a60a1e4ba6c495bc5106c91709b0560d13a9bf8fb6
    Port:           <none>
    Host Port:      <none>
    State:          Running
      Started:      Thu, 21 Apr 2022 18:49:15 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-zbvv9 (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-zbvv9:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-zbvv9
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type    Reason     Age    From               Message
  ----    ------     ----   ----               -------
  Normal  Scheduled  3m27s  default-scheduler  Successfully assigned default/mynginx to k8s-node2
  Normal  Pulling    3m27s  kubelet            Pulling image "nginx"
  Normal  Pulled     3m12s  kubelet            Successfully pulled image "nginx" in 14.441079608s
  Normal  Created    3m12s  kubelet            Created container mynginx
  Normal  Started    3m12s  kubelet            Started container mynginx
```

```shell
# View the Pod's logs
[root@k8s-master ~]# kubectl logs mynginx
/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration
/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/
/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh
10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf
10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf
/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh
/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh
/docker-entrypoint.sh: Configuration complete; ready for start up
2022/04/21 10:49:15 [notice] 1#1: using the "epoll" event method
2022/04/21 10:49:15 [notice] 1#1: nginx/1.21.6
2022/04/21 10:49:15 [notice] 1#1: built by gcc 10.2.1 20210110 (Debian 10.2.1-6)
2022/04/21 10:49:15 [notice] 1#1: OS: Linux 3.10.0-1127.19.1.el7.x86_64
2022/04/21 10:49:15 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576
2022/04/21 10:49:15 [notice] 1#1: start worker processes
2022/04/21 10:49:15 [notice] 1#1: start worker process 31
2022/04/21 10:49:15 [notice] 1#1: start worker process 32
192.168.235.192 - - [21/Apr/2022:10:50:38 +0000] "GET / HTTP/1.1" 200 12 "-" "curl/7.29.0" "-"
```

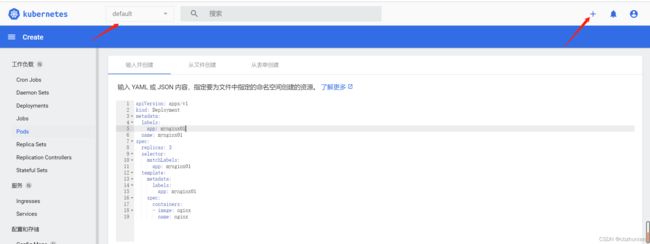

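Dashboard (GUI) operations

In the Dashboard, create a new Pod, click to create it, and then open the Pod's details page.

The Tomcat and nginx containers in this Pod can communicate with each other, because containers in the same Pod share a network namespace and can reach each other via `localhost`.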
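The Dashboard steps can also be expressed as a manifest. The sketch below builds a two-container Pod spec in Python and prints it as JSON, which `kubectl apply -f -` accepts just like YAML. The Pod name `myapp` and the container name `nginx` match the `kubectl exec` command that follows; the container name `tomcat` and the untagged images are assumptions. Since both containers share the Pod's network namespace, nginx could reach Tomcat at `localhost:8080`, Tomcat's default HTTP port.

```python
import json


def pod_manifest(name, containers):
    """Build a minimal Pod manifest; `containers` maps container name -> image."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {"name": cname, "image": image} for cname, image in containers.items()
            ]
        },
    }


# Assumed names/images: Pod "myapp" with an nginx container and a tomcat container.
pod = pod_manifest("myapp", {"nginx": "nginx", "tomcat": "tomcat"})
# Pipe this output into `kubectl apply -f -` to create the Pod.
print(json.dumps(pod, indent=2))
```

For example, saving it as a (hypothetical) `myapp_pod.py` and running `python3 myapp_pod.py | kubectl apply -f -` would create the Pod.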

Entering a specific container from the command line

```shell
# The key part is the --container (-c) flag, which selects one container of a multi-container Pod
[root@k8s-master ~]# kubectl exec -it myapp --container=nginx -- /bin/bash
root@myapp:/# ls
bin   dev                  docker-entrypoint.sh  home  lib64  mnt  proc  run   srv  tmp  var
boot  docker-entrypoint.d  etc                   lib   media  opt  root  sbin  sys  usr
root@myapp:/# cd /usr/share/nginx/html/
root@myapp:/usr/share/nginx/html#
```

III. Deployment

A Deployment manages Pods and adds multiple replicas, self-healing, scaling, rolling updates, version control (rollback), and more.

1. Multiple replicas

```shell
[root@k8s-master ~]# kubectl create deployment mynginx --image=nginx --replicas=3
deployment.apps/mynginx created
[root@k8s-master ~]# kubectl get pod -A
NAMESPACE   NAME                       READY   STATUS    RESTARTS   AGE
default     mynginx-5b686ccd46-6v6ks   1/1     Running   0          9s
default     mynginx-5b686ccd46-ghrbt   1/1     Running   0          9s
default     mynginx-5b686ccd46-hn8bp   1/1     Running   0          9s
```

2. Self-healing

```shell
# View Pod information
[root@k8s-master ~]# kubectl get pod -owide
NAME                       READY   STATUS    RESTARTS   AGE   IP                NODE        NOMINATED NODE   READINESS GATES
mynginx-5b686ccd46-6v6ks   1/1     Running   0          71s   192.168.36.69     k8s-node1   <none>           <none>
mynginx-5b686ccd46-ghrbt   1/1     Running   0          71s   192.168.169.134   k8s-node2   <none>           <none>
mynginx-5b686ccd46-hn8bp   1/1     Running   0          71s   192.168.36.68     k8s-node1   <none>           <none>

# Delete one Pod
[root@k8s-master ~]# kubectl delete pod mynginx-5b686ccd46-6v6ks
pod "mynginx-5b686ccd46-6v6ks" deleted

# A replacement Pod is created automatically
[root@k8s-master ~]# kubectl get pod -owide
NAME                       READY   STATUS              RESTARTS   AGE    IP                NODE        NOMINATED NODE   READINESS GATES
mynginx-5b686ccd46-2sht7   0/1     ContainerCreating   0          5s     <none>            k8s-node2   <none>           <none>
mynginx-5b686ccd46-ghrbt   1/1     Running             0          104s   192.168.169.134   k8s-node2   <none>           <none>
mynginx-5b686ccd46-hn8bp   1/1     Running             0          104s   192.168.36.68     k8s-node1   <none>           <none>
```
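Self-healing (and scaling) comes from a controller that keeps reconciling the desired replica count with the Pods that actually exist. The following is a simplified sketch of that idea; `reconcile` and `new_pod_name` are hypothetical helpers, and the real ReplicaSet controller also considers Pod readiness, chooses which Pods to delete by ranking them, and rate-limits its actions.

```python
import itertools

_counter = itertools.count(1)


def new_pod_name(prefix):
    # Hypothetical stand-in for the random suffix Kubernetes appends to Pod names.
    return f"{prefix}-{next(_counter):05d}"


def reconcile(prefix, desired, running):
    """Return (pods_to_create, pods_to_delete) that bring `running` to `desired`."""
    running = list(running)
    diff = desired - len(running)
    if diff > 0:
        # Too few Pods (for example one was deleted): create replacements.
        return [new_pod_name(prefix) for _ in range(diff)], []
    if diff < 0:
        # Too many Pods (for example after scaling down): delete the surplus.
        return [], running[diff:]
    return [], []


# One of three replicas was deleted, so one replacement is requested.
print(reconcile("mynginx", 3, ["mynginx-ghrbt", "mynginx-hn8bp"]))
# (['mynginx-00001'], [])
```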

3. Scaling

Method 1

```shell
[root@k8s-master ~]# kubectl scale --replicas=5 deployment/mynginx
deployment.apps/mynginx scaled
[root@k8s-master ~]# kubectl get pod -owide
NAME                       READY   STATUS              RESTARTS   AGE     IP                NODE        NOMINATED NODE   READINESS GATES
mynginx-5b686ccd46-2sht7   1/1     Running             0          98s     192.168.169.135   k8s-node2   <none>           <none>
mynginx-5b686ccd46-7657x   0/1     ContainerCreating   0          15s     <none>            k8s-node1   <none>           <none>
mynginx-5b686ccd46-ghrbt   1/1     Running             0          3m17s   192.168.169.134   k8s-node2   <none>           <none>
mynginx-5b686ccd46-hn8bp   1/1     Running             0          3m17s   192.168.36.68     k8s-node1   <none>           <none>
mynginx-5b686ccd46-n8bmt   1/1     Running             0          15s     192.168.169.136   k8s-node2   <none>           <none>

# Watch the Deployment's status
[root@k8s-master ~]# kubectl get deploy -w
NAME      READY   UP-TO-DATE   AVAILABLE   AGE
mynginx   5/5     5            5           4m15s
```

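Method 2

```shell
# Change the replicas field; in the editor, type /replicas to search for it
kubectl edit deployment mynginx
```

Dashboard (GUI) operation: create a Deployment from the following YAML.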

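```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: mynginx01
  name: mynginx01
spec:
  replicas: 3
  selector:
    matchLabels:
      app: mynginx01
  template:
    metadata:
      labels:
        app: mynginx01
    spec:
      containers:
      - image: nginx
        name: nginx
```

Why were no Pods created on the master node?

In Kubernetes, the master node carries a taint that forbids scheduling ordinary Pods onto it. This taint can be removed if you want the master to run workloads too.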
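A taint with effect `NoSchedule` keeps Pods off a node unless they carry a matching toleration. On clusters of this era the master taint key is typically `node-role.kubernetes.io/master`, and it can be removed with `kubectl taint nodes k8s-master node-role.kubernetes.io/master:NoSchedule-` (the trailing `-` removes it; check the actual key with `kubectl describe node k8s-master` first). The Python sketch below models only the scheduler's filtering step; the classes are hypothetical simplifications of the real matching rules (only the `Equal` and `Exists` operators, no `tolerationSeconds`).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Taint:
    key: str
    effect: str  # "NoSchedule", "PreferNoSchedule" or "NoExecute"
    value: str = ""


@dataclass(frozen=True)
class Toleration:
    key: Optional[str] = None     # None + operator "Exists" tolerates any key
    operator: str = "Equal"       # "Equal" or "Exists"
    value: str = ""
    effect: Optional[str] = None  # None matches any effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    if tol.effect is not None and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        return tol.key is None or tol.key == taint.key
    return tol.key == taint.key and tol.value == taint.value


def schedulable_nodes(nodes, tolerations: List[Toleration]) -> List[str]:
    """Keep the nodes whose scheduling-blocking taints are all tolerated."""
    result = []
    for name, taints in nodes.items():
        # PreferNoSchedule is only a soft preference, so it never filters a node out.
        blocking = [t for t in taints
                    if t.effect in ("NoSchedule", "NoExecute")
                    and not any(tolerates(tol, t) for tol in tolerations)]
        if not blocking:
            result.append(name)
    return result


nodes = {
    "k8s-master": [Taint("node-role.kubernetes.io/master", "NoSchedule")],
    "k8s-node1": [],
    "k8s-node2": [],
}
print(schedulable_nodes(nodes, []))  # ['k8s-node1', 'k8s-node2']

master_ok = [Toleration(key="node-role.kubernetes.io/master",
                        operator="Exists", effect="NoSchedule")]
print(schedulable_nodes(nodes, master_ok))  # ['k8s-master', 'k8s-node1', 'k8s-node2']
```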

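4. Rolling updates and rollback

When you change the image version of a deployed application, the Deployment performs a rolling update: it starts new Pods and terminates old ones step by step, so the application stays online throughout the update.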
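How many Pods are replaced at a time is governed by the Deployment's `maxSurge` and `maxUnavailable` settings, both 25% by default. When they are percentages, Kubernetes rounds `maxSurge` up and `maxUnavailable` down. The sketch below computes the resulting bounds (the function name is hypothetical). For the 5-replica `mynginx` Deployment it gives at most 7 Pods in total and at least 4 available, which fits the watch output that follows: three new Pods start while four old ones are still running.

```python
import math


def rolling_update_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Return (max_total_pods, min_available_pods) during a rolling update."""

    def resolve(value, round_up):
        # Percentages are relative to the desired replica count;
        # plain integers are used as-is.
        if isinstance(value, str) and value.endswith("%"):
            raw = replicas * int(value[:-1]) / 100
            return math.ceil(raw) if round_up else math.floor(raw)
        return int(value)

    surge = resolve(max_surge, round_up=True)               # maxSurge rounds up
    unavailable = resolve(max_unavailable, round_up=False)  # maxUnavailable rounds down
    return replicas + surge, replicas - unavailable


print(rolling_update_bounds(5))  # (7, 4)
```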

```shell
[root@k8s-master ~]# kubectl get deploy
NAME        READY   UP-TO-DATE   AVAILABLE   AGE
mynginx     5/5     5            5           18m
mynginx01   3/3     3            3           10m

# --record saves this command as the change cause in the rollout history
# (the flag is deprecated in newer kubectl versions)
[root@k8s-master ~]# kubectl set image deploy mynginx nginx=nginx:1.16.1 --record
deployment.apps/mynginx image updated

# Watch the update
[root@k8s-master ~]# kubectl get pod -w
NAME                         READY   STATUS              RESTARTS   AGE
mynginx-5b686ccd46-2sht7     1/1     Running             0          17m
mynginx-5b686ccd46-7657x     1/1     Running             0          15m
mynginx-5b686ccd46-ghrbt     1/1     Running             0          18m
mynginx-5b686ccd46-hn8bp     1/1     Running             0          18m
mynginx-f9cbbdc9f-hr5fv      0/1     ContainerCreating   0          12s
mynginx-f9cbbdc9f-xg4n9      0/1     ContainerCreating   0          12s
mynginx-f9cbbdc9f-xh54c      0/1     ContainerCreating   0          12s
mynginx01-5dd469889f-6dvg8   1/1     Running             0          11m
mynginx01-5dd469889f-9jvld   1/1     Running             0          11m
mynginx01-5dd469889f-hrfmz   1/1     Running             0          11m
mynginx-f9cbbdc9f-hr5fv      1/1     Running             0          24s
mynginx-5b686ccd46-2sht7     1/1     Terminating         0          17m
mynginx-f9cbbdc9f-nqr72      0/1     Pending             0          0s
mynginx-f9cbbdc9f-nqr72      0/1     Pending             0          0s
mynginx-f9cbbdc9f-nqr72      0/1     ContainerCreating   0          0s
mynginx-f9cbbdc9f-nqr72      0/1     ContainerCreating   0          0s
mynginx-5b686ccd46-2sht7     0/1     Terminating         0          17m
mynginx-5b686ccd46-2sht7     0/1     Terminating         0          17m
mynginx-5b686ccd46-2sht7     0/1     Terminating         0          17m
```

```shell
# View the rollout history
[root@k8s-master ~]# kubectl rollout history deploy mynginx
deployment.apps/mynginx
REVISION  CHANGE-CAUSE
1         <none>
2         kubectl set image deploy mynginx nginx=nginx:1.16.1 --record=true

# --to-revision=1 selects the revision to roll back to
[root@k8s-master ~]# kubectl rollout undo deploy mynginx --to-revision=1
deployment.apps/mynginx rolled back

# Check whether the rollback succeeded (the image is plain nginx again)
[root@k8s-master ~]# kubectl get deploy mynginx -oyaml | grep image
            f:imagePullPolicy: {}
            f:image: {}
      - image: nginx
        imagePullPolicy: Always
```
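Note that a rollback does not bring back the old revision number: the Deployment reuses the template of the chosen revision and records it as a new, highest revision, so after the undo above `kubectl rollout history` would list revisions 2 and 3 rather than 1 and 2. The sketch below models only this bookkeeping with a plain dictionary; `rollout_undo` is a hypothetical illustration, not the controller's code.

```python
def rollout_undo(history, to_revision):
    """Roll back to `to_revision` and return the revision number now in use.

    `history` maps revision number -> Pod template (here just the image).
    The chosen template is re-recorded under a new, highest revision number
    and its old entry disappears, as seen in `kubectl rollout history`.
    """
    if to_revision not in history:
        raise KeyError(f"revision {to_revision} not found")
    current = max(history)
    if to_revision == current:
        return current  # already running that template: nothing to do
    history[current + 1] = history.pop(to_revision)
    return current + 1


history = {1: "nginx", 2: "nginx:1.16.1"}
print(rollout_undo(history, to_revision=1))  # 3
print(sorted(history.items()))               # [(2, 'nginx:1.16.1'), (3, 'nginx')]
```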

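There are other kinds of workloads as well (for example StatefulSet, DaemonSet, and Job); for details, see the "Workload Resources" page in the Kubernetes documentation.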

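IV. Service

A Service exposes a set of Pods as a single network service.

First, change index.html in the three Pods of the mynginx Deployment to 1111, 2222, and 3333 respectively, so that you can tell which Pod answered.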

```shell
# Expose the mynginx Deployment: Service port 8000 forwards to container port 80
[root@k8s-master ~]# kubectl expose deploy mynginx --port=8000 --target-port=80
service/mynginx exposed
[root@k8s-master ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP    3h31m
mynginx      ClusterIP   10.96.182.10   <none>        8000/TCP   13s
[root@k8s-master ~]# kubectl scale deploy mynginx --replicas=3
deployment.apps/mynginx scaled
[root@k8s-master ~]# curl 10.96.182.10:8000
1111
[root@k8s-master ~]# curl 10.96.182.10:8000
1111
[root@k8s-master ~]# curl 10.96.182.10:8000
1111
[root@k8s-master ~]# curl 10.96.182.10:8000
2222
[root@k8s-master ~]# curl 10.96.182.10:8000
2222
[root@k8s-master ~]# curl 10.96.182.10:8000
3333
```

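ClusterIP is the default Service type: a single virtual IP, reachable only from inside the cluster, spreads requests across the three Pods. It cannot be accessed from outside the cluster.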
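The uneven sequence of responses above (1111 three times, then 2222 twice, then 3333) is expected. Assuming kube-proxy runs in its default iptables mode, each new connection goes to a randomly chosen endpoint rather than strictly round-robin: kube-proxy builds a chain of rules where rule i (0-based) of n matches with probability 1/(n - i), which gives every endpoint the same 1/n share overall. The sketch below computes those per-rule probabilities and checks the overall shares; the function names are hypothetical, and other proxy modes (IPVS, for example, defaults to round-robin) behave differently.

```python
from fractions import Fraction


def rule_probabilities(n):
    """Match probability of each rule in an iptables-style chain of n endpoints.

    Rule i (0-based) is only reached if rules 0..i-1 did not match, and it
    matches with probability 1/(n - i); the last rule always matches.
    """
    return [Fraction(1, n - i) for i in range(n)]


def overall_shares(probabilities):
    """Probability that each endpoint is chosen, walking the chain in order."""
    shares, not_matched_yet = [], Fraction(1)
    for p in probabilities:
        shares.append(not_matched_yet * p)
        not_matched_yet *= 1 - p
    return shares


probs = rule_probabilities(3)
print([str(p) for p in probs])                  # ['1/3', '1/2', '1']
print([str(s) for s in overall_shares(probs)])  # ['1/3', '1/3', '1/3']
```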

With type NodePort, the Service is additionally exposed on a port of every node for external access; by default a random port in the range 30000-32767 is allocated.

```shell
[root@k8s-master ~]# kubectl delete svc mynginx
service "mynginx" deleted
[root@k8s-master ~]# kubectl expose deploy mynginx --port=8000 --target-port=80 --type=NodePort
service/mynginx exposed
[root@k8s-master ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP          3h45m
mynginx      NodePort    10.96.227.25   <none>        8000:31313/TCP   7s
```

From outside the cluster, access the Service at `<IP of any node>:31313`.

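V. Ingress

An Ingress is the unified entry point for Services: it routes external HTTP(S) traffic to Services based on host names and paths.

```shell
# Download the ingress-nginx controller manifest (bare-metal provider)
curl https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.47.0/deploy/static/provider/baremetal/deploy.yaml -O
vi deploy.yaml
# Change the controller image to registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0
# Deploy it
kubectl apply -f deploy.yaml
```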

Prepare the test environment

ingress-deploy.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-server
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-server
  template:
    metadata:
      labels:
        app: hello-server
    spec:
      containers:
      - name: hello-server
        image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/hello-server
        ports:
        - containerPort: 9000
---  # next YAML document
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-demo
  template:
    metadata:
      labels:
        app: nginx-demo
    spec:
      containers:
      - image: nginx
        name: nginx
---  # next YAML document
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  selector:
    app: nginx-demo
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 80
---  # next YAML document
apiVersion: v1
kind: Service
metadata:
  labels:
    app: hello-server
  name: hello-server
spec:
  selector:
    app: hello-server
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 9000
```

ingress-test.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-host-bar
spec:
  ingressClassName: nginx
  rules:
  - host: "hello.k8s.com"  # requests for hello.k8s.com are forwarded to the hello-server:8000 Service
    http:
      paths:
      - pathType: Prefix
        path: "/"
        backend:
          service:
            name: hello-server
            port:
              number: 8000
  - host: "demo.k8s.com"
    http:
      paths:
      - pathType: Prefix
        path: "/nginx"  # the request, path included, goes to the Service below; if it cannot serve this path, it returns 404
        backend:
          service:
            name: nginx-demo
            port:
              number: 8000
```

```shell
[root@k8s-master ~]# kubectl apply -f ingress-deploy.yaml
deployment.apps/hello-server created
deployment.apps/nginx-demo created
service/nginx-demo created
service/hello-server created
[root@k8s-master ~]# kubectl apply -f ingress-test.yaml
ingress.networking.k8s.io/ingress-host-bar created
[root@k8s-master ~]# kubectl get ingress
NAME               CLASS   HOSTS                        ADDRESS      PORTS   AGE
ingress-host-bar   nginx   hello.k8s.com,demo.k8s.com   172.31.0.3   80      8m26s
[root@k8s-master ~]# kubectl get svc -n ingress-nginx
NAME                                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.96.135.70    <none>        80:30535/TCP,443:32057/TCP   22m
ingress-nginx-controller-admission   ClusterIP   10.96.174.219   <none>        443/TCP                      22m
```
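The routing rules of `ingress-test.yaml` can be modeled in a few lines. In this simplified sketch (the `route` function and the `RULES` table are hypothetical), a request is matched first by host and then by path. A `Prefix` path matches element-wise on `/`-separated segments, so `/nginx` matches `/nginx` and `/nginx/111` but not `/nginxabc`, and the longest matching prefix wins. The matched request is forwarded with its path unchanged; unmatched requests get the controller's default 404.

```python
def prefix_matches(prefix, path):
    """Element-wise match, as defined for Ingress pathType: Prefix."""
    prefix_parts = [p for p in prefix.split("/") if p]
    path_parts = [p for p in path.split("/") if p]
    return path_parts[: len(prefix_parts)] == prefix_parts


# host -> list of (path prefix, (service name, service port)), as in ingress-test.yaml
RULES = {
    "hello.k8s.com": [("/", ("hello-server", 8000))],
    "demo.k8s.com": [("/nginx", ("nginx-demo", 8000))],
}


def route(host, path):
    """Return the (service, port) backend for a request, or None (HTTP 404)."""
    candidates = [(prefix, backend) for prefix, backend in RULES.get(host, [])
                  if prefix_matches(prefix, path)]
    if not candidates:
        return None
    # The longest matching prefix wins.
    return max(candidates, key=lambda c: len(c[0]))[1]


print(route("hello.k8s.com", "/anything"))  # ('hello-server', 8000)
print(route("demo.k8s.com", "/nginx/111"))  # ('nginx-demo', 8000)
print(route("demo.k8s.com", "/"))           # None
```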

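Roughly, a request flows from the client to the ingress-nginx controller (exposed via NodePort 30535 for HTTP), which forwards it to the matching Service and then to that Service's Pods.

On the client machine, edit the hosts file `C:\Windows\System32\drivers\etc\hosts` and add two mappings, for hello.k8s.com and demo.k8s.com, each pointing to the IP of any cluster node. Then open http://hello.k8s.com:30535 and http://demo.k8s.com:30535/nginx in a browser.

demo.k8s.com/nginx returns 404 because the request is forwarded with its path unchanged, and the nginx-demo Pods have nothing under /nginx. Configure path rewriting to fix this: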

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-host-bar
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2  # configure path rewriting
spec:
  ingressClassName: nginx
  rules:
  - host: "hello.k8s.com"
    http:
      paths:
      - pathType: Prefix
        path: "/"
        backend:
          service:
            name: hello-server
            port:
              number: 8000
  - host: "demo.k8s.com"
    http:
      paths:
      - pathType: Prefix
        path: /nginx(/|$)(.*)  # demo.k8s.com/nginx is forwarded as demo.k8s.com/; demo.k8s.com/nginx/111 as demo.k8s.com/111
        backend:
          service:
            name: nginx-demo
            port:
              number: 8000
```
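The effect of `rewrite-target: /$2` can be checked locally with Python's `re` module, where `$2` corresponds to the second capture group. This sketch assumes the path regex is matched from the start of the request path and case-insensitively, as in the regex `location` blocks ingress-nginx generates; it approximates the controller's behaviour and is not its actual nginx configuration.

```python
import re

# Path regex and rewrite target taken from the Ingress above;
# (?i) mirrors nginx's case-insensitive regex location.
PATH_REGEX = re.compile(r"(?i)/nginx(/|$)(.*)")


def rewrite(path):
    """Return the path forwarded to nginx-demo, or None if the rule does not match."""
    match = PATH_REGEX.match(path)  # re.match anchors at the start of the path
    if match is None:
        return None
    return "/" + match.group(2)     # rewrite-target: /$2


for p in ["/nginx", "/nginx/", "/nginx/111", "/nginx/a/b.html"]:
    print(p, "->", rewrite(p))
# /nginx -> /
# /nginx/ -> /
# /nginx/111 -> /111
# /nginx/a/b.html -> /a/b.html
```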

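Rate limiting: the following Ingress limits requests to haha.k8s.com (add a hosts-file mapping for haha.k8s.com as well).

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-limit-rate
  annotations:
    nginx.ingress.kubernetes.io/limit-rps: "1"  # at most 1 request per second per client IP
spec:
  ingressClassName: nginx
  rules:
  - host: "haha.k8s.com"
    http:
      paths:
      - pathType: Exact
        path: "/"
        backend:
          service:
            name: nginx-demo
            port:
              number: 8000
```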

Refreshing haha.k8s.com faster than the limit allows returns HTTP 503 errors.
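`limit-rps` makes ingress-nginx enforce a per-client-IP request rate using nginx's `limit_req`, a leaky-bucket algorithm: requests beyond the allowed rate plus a burst allowance are rejected, with 503 as the default status code. The sketch below is a simplified model of that check; the `LeakyBucket` class is hypothetical, and the burst of 0 in the example is an arbitrary choice, not the burst value ingress-nginx actually configures.

```python
class LeakyBucket:
    """Simplified model of nginx's limit_req check for a single client IP."""

    def __init__(self, rate_per_sec, burst):
        self.rate = rate_per_sec
        self.burst = burst
        self.excess = 0.0  # how far the client is ahead of the allowed rate
        self.last = None   # time (seconds) of the last accepted request

    def allow(self, now):
        """Return 200 if a request at time `now` is accepted, otherwise 503."""
        if self.last is None:
            self.last = now  # first request from this client
            return 200
        # The bucket drains at `rate` requests per second; each request adds 1.
        excess = max(self.excess - self.rate * (now - self.last) + 1, 0.0)
        if excess > self.burst:
            return 503  # rejected; state is left unchanged
        self.excess, self.last = excess, now
        return 200


bucket = LeakyBucket(rate_per_sec=1, burst=0)
print([bucket.allow(t) for t in (0.0, 0.2, 0.5, 1.0, 1.1)])
# [200, 503, 503, 200, 503]
```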

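VI. Storage Abstraction

Why use an NFS file server?

If a Pod mounts a directory on its node (say node1), and the Pod is destroyed and recreated on node2, the data it saved stays behind on node1, so the new Pod no longer sees it. A shared NFS server lets Pods on any node read and write the same data.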

```shell
# Install on all machines
yum install -y nfs-utils

# On the master node (the master acts as the NFS server)
[root@k8s-master ~]# echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports
[root@k8s-master ~]# mkdir -p /nfs/data
[root@k8s-master ~]# systemctl enable rpcbind --now
[root@k8s-master ~]# systemctl enable nfs-server --now
Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.
# Apply the export configuration
[root@k8s-master ~]# exportfs -r

# On the worker nodes
# showmount -e lists the directories exported (shared) by the given NFS server
[root@k8s-node1 ~]# showmount -e 172.31.0.1
Export list for 172.31.0.1:
/nfs/data *
[root@k8s-node1 ~]# mkdir -p /nfs/data
[root@k8s-node1 ~]# mount -t nfs 172.31.0.1:/nfs/data /nfs/data
[root@k8s-node1 ~]# echo "hello nfs server" > /nfs/data/test.txt

# On the master node, check that the file arrived
[root@k8s-master ~]# cd /nfs/data/
[root@k8s-master data]# ls
test.txt
[root@k8s-master data]# cat test.txt
hello nfs server
```

A simple example (nfs-test.yaml)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-pv-demo
  name: nginx-pv-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-pv-demo
  template:
    metadata:
      labels:
        app: nginx-pv-demo
    spec:
      containers:
      - image: nginx
        name: nginx
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html
      volumes:
      - name: html  # volume definition; the name matches the volumeMounts entry above
        nfs:
          server: 172.31.0.1        # NFS server address
          path: /nfs/data/nginx-pv  # directory on the NFS server to mount (must already exist)
```

```shell
[root@k8s-master ~]# kubectl apply -f nfs-test.yaml
deployment.apps/nginx-pv-demo created
# Write data into the shared directory
[root@k8s-master ~]# echo "hello nginx" > /nfs/data/nginx-pv/index.html
[root@k8s-master ~]# kubectl get pods -owide | grep nginx-pv-demo
nginx-pv-demo-65f9644d7f-5g86v   1/1   Running   0   66s   192.168.36.76     k8s-node1   <none>   <none>
nginx-pv-demo-65f9644d7f-65r5m   1/1   Running   0   66s   192.168.169.148   k8s-node2   <none>   <none>
# Test
[root@k8s-master ~]# curl 192.168.36.76
hello nginx
```

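PV & PVC

Motivation: with the mounting approach above, deleting a Pod does not delete its mount directory; /nfs/data/nginx-pv/ stays on the NFS server and wastes space.

PV: a PersistentVolume, a piece of storage where an application's persistent data is kept.

PVC: a PersistentVolumeClaim, which declares the specification (size, access mode) of the persistent volume an application needs.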

```shell
# On the NFS server (the master node)
[root@k8s-master ~]# mkdir -p /nfs/data/01
[root@k8s-master ~]# mkdir -p /nfs/data/02
[root@k8s-master ~]# mkdir -p /nfs/data/03
```

Create the PVs (persistent volumes)

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv01-10m
spec:
  capacity:
    storage: 10M
  accessModes:
  - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/01
    server: 172.31.0.1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv02-1gi
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/02
    server: 172.31.0.1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv03-3gi
spec:
  capacity:
    storage: 3Gi
  accessModes:
  - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/03
    server: 172.31.0.1
```

```shell
[root@k8s-master ~]# kubectl get pv
NAME       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
pv01-10m   10M        RWX            Retain           Available           nfs                     35s
pv02-1gi   1Gi        RWX            Retain           Available           nfs                     35s
pv03-3gi   3Gi        RWX            Retain           Available           nfs                     35s
```

Create a PVC, which asks to be bound to a suitable PV:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
  storageClassName: nfs
```

```shell
# The 1Gi PV is the one that gets bound
[root@k8s-master ~]# kubectl get pv
NAME       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM               STORAGECLASS   REASON   AGE
pv01-10m   10M        RWX            Retain           Available                       nfs                     2m34s
pv02-1gi   1Gi        RWX            Retain           Bound       default/nginx-pvc   nfs                     2m34s
pv03-3gi   3Gi        RWX            Retain           Available                       nfs                     2m34s
```
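The claim was bound to `pv02-1gi` because the binder looks for an Available PV with the same storage class and the requested access modes whose capacity is at least the request, preferring the smallest such volume. `10M` (10 × 1000² bytes) is too small for `200Mi` (200 × 1024² bytes), and `1Gi` is smaller than `3Gi`. The sketch below reproduces that choice; it is a simplification of the real PV controller (no label selectors, volume modes, or pre-bound claims), and `parse_quantity` and `pick_pv` are hypothetical helpers that only handle plain integer quantities.

```python
SUFFIXES = {
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4,
    "k": 1000, "M": 1000**2, "G": 1000**3, "T": 1000**4,
}


def parse_quantity(q):
    """Convert a simple Kubernetes quantity such as '10M' or '200Mi' to bytes."""
    for suffix in sorted(SUFFIXES, key=len, reverse=True):  # try 'Mi' before 'M'
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * SUFFIXES[suffix]
    return int(q)


def pick_pv(pvs, request, access_modes, storage_class):
    """Return the name of the smallest Available PV that satisfies the claim."""
    needed = parse_quantity(request)
    candidates = [
        pv for pv in pvs
        if pv["status"] == "Available"
        and pv["storageClassName"] == storage_class
        and set(access_modes) <= set(pv["accessModes"])
        and parse_quantity(pv["capacity"]) >= needed
    ]
    if not candidates:
        return None  # the PVC would stay Pending
    return min(candidates, key=lambda pv: parse_quantity(pv["capacity"]))["name"]


pvs = [
    {"name": "pv01-10m", "capacity": "10M", "accessModes": ["ReadWriteMany"],
     "storageClassName": "nfs", "status": "Available"},
    {"name": "pv02-1gi", "capacity": "1Gi", "accessModes": ["ReadWriteMany"],
     "storageClassName": "nfs", "status": "Available"},
    {"name": "pv03-3gi", "capacity": "3Gi", "accessModes": ["ReadWriteMany"],
     "storageClassName": "nfs", "status": "Available"},
]
print(pick_pv(pvs, "200Mi", ["ReadWriteMany"], "nfs"))  # pv02-1gi
```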

Once the PVC exists, a Pod can use it:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deploy-pvc
  name: nginx-deploy-pvc
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-deploy-pvc
  template:
    metadata:
      labels:
        app: nginx-deploy-pvc
    spec:
      containers:
      - image: nginx
        name: nginx
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html
      volumes:
      - name: html
        persistentVolumeClaim:
          claimName: nginx-pvc  # use the PVC here
```

```shell
# Write to directory 02 (it backs pv02-1gi, which is bound to nginx-pvc)
[root@k8s-master ~]# echo 'pvc' > /nfs/data/02/index.html
# View Pod information
[root@k8s-master ~]# kubectl get pod -owide | grep pvc
nginx-deploy-pvc-79fc8558c7-78knm   1/1   Running   0   82s   192.168.36.77     k8s-node1   <none>   <none>
nginx-deploy-pvc-79fc8558c7-qqkm9   1/1   Running   0   82s   192.168.169.149   k8s-node2   <none>   <none>
[root@k8s-master ~]# curl 192.168.36.77
pvc
```

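ConfigMap

A ConfigMap turns configuration files into a configuration set that Pods can consume. When it is mounted as a volume, changes to the ConfigMap are propagated to the mounted files automatically, after a short delay.

Create a ConfigMap

```shell
[root@k8s-master ~]# echo "appendonly yes" > redis.conf
[root@k8s-master ~]# kubectl create cm redis-conf --from-file=redis.conf
[root@k8s-master ~]# kubectl get cm | grep redis
redis-conf         1      17s
```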

```shell
# View the ConfigMap's details
[root@k8s-master ~]# kubectl describe cm redis-conf
Name:         redis-conf
Namespace:    default
Labels:       <none>
Annotations:  <none>

Data
====
redis.conf:
----
appendonly yes

Events:  <none>
[root@k8s-master ~]# kubectl get cm redis-conf -oyaml
```

```yaml
# Output with the unneeded fields removed
apiVersion: v1
data:  # all entries: by default each key is a file name and its value is the file's content
  redis.conf: |  # key: redis.conf; value: the indented block below
    appendonly yes
kind: ConfigMap
metadata:
  name: redis-conf
  namespace: default
```

Create a Pod that uses the ConfigMap

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis
spec:
  containers:
  - name: redis
    image: redis
    command:
    - redis-server
    - "/redis-master/redis.conf"
    ports:
    - containerPort: 6379
    volumeMounts:
    - mountPath: /data
      name: data
    - mountPath: /redis-master
      name: config
  volumes:
  - name: data
    emptyDir: {}
  - name: config
    configMap:
      name: redis-conf
      items:
      - key: redis.conf
        path: redis.conf
```

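The manifest above mounts the `redis.conf` key of the ConfigMap `redis-conf` as the file `redis.conf` in the container's `/redis-master` directory.

[Diagram: the Pod on the left; on the right, how the ConfigMap maps onto the Pod's mounts]

1. The container mounts a volume named `config` at `/redis-master`.

2. The `config` volume itself is defined under `volumes` and is backed by the ConfigMap `redis-conf`.

`items` selects individual entries from the ConfigMap's data by `key`: here it takes the value of the key `redis.conf` and writes it to a file named `redis.conf` (the `path`).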
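The projection described above can be sketched as a function that turns a ConfigMap's `data` and a volume's `items` list into the files the container sees. This is a simplified model (`project_configmap` is hypothetical); it ignores file modes and the symlink mechanism the kubelet actually uses. Without `items`, every key becomes a file named after the key.

```python
import posixpath


def project_configmap(data, mount_path, items=None):
    """Map each projected file path inside the container to its content."""
    if items is None:
        # No items: every key becomes a file with the same name.
        items = [{"key": key, "path": key} for key in data]
    files = {}
    for item in items:
        # Each item copies the value of `key` into a file at `path`
        # (raises KeyError here if the key does not exist).
        files[posixpath.join(mount_path, item["path"])] = data[item["key"]]
    return files


redis_conf = {"redis.conf": "appendonly yes\n"}
print(project_configmap(redis_conf, "/redis-master",
                        items=[{"key": "redis.conf", "path": "redis.conf"}]))
# {'/redis-master/redis.conf': 'appendonly yes\n'}
```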

Test it

```shell
[root@k8s-master ~]# kubectl exec -it redis -- /bin/bash
root@redis:/data# cat /redis-master/redis.conf
appendonly yes

# Edit the ConfigMap (from the master) and add "requirepass 123"
[root@k8s-master ~]# kubectl edit cm redis-conf
apiVersion: v1
data:
  redis.conf: |
    appendonly yes
    requirepass 123
kind: ConfigMap

# After a short delay, the mounted file inside the Pod reflects the change
root@redis:/data# cat /redis-master/redis.conf
appendonly yes
requirepass 123
```

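```shell
# Open the Redis console
root@redis:/data# redis-cli
127.0.0.1:6379> config get appendonly
1) "appendonly"
2) "yes"
127.0.0.1:6379> config get requirepass
1) "requirepass"
2) ""
# The password is not in effect yet: Redis reads its configuration file only at startup,
# so the Redis container has to be restarted
```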

```shell
# Find out which node the redis Pod runs on
[root@k8s-master ~]# kubectl get pod redis -owide
NAME    READY   STATUS    RESTARTS   AGE    IP                NODE        NOMINATED NODE   READINESS GATES
redis   1/1     Running   0          8m5s   192.168.169.150   k8s-node2   <none>           <none>

# On k8s-node2, stop the redis container (not the pause container)
[root@k8s-node2 ~]# docker ps | grep redis
a9b639ae2e3a   redis                                                        "redis-server /redis…"   8 minutes ago   Up 8 minutes   k8s_redis_redis_default_511e3ccb-6904-4839-9b1a-bda4b1879a18_0
da0419de4c5a   registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/pause:3.2   "/pause"                 8 minutes ago   Up 8 minutes   k8s_POD_redis_default_511e3ccb-6904-4839-9b1a-bda4b1879a18_0
[root@k8s-node2 ~]# docker stop a9b639ae2e3a
a9b639ae2e3a

# After a moment the kubelet restarts the container automatically (Kubernetes self-healing),
# and Redis loads the updated configuration file on startup
[root@k8s-master ~]# kubectl exec -it redis -- /bin/bash
root@redis:/data# redis-cli
127.0.0.1:6379> set aa bb
(error) NOAUTH Authentication required.
127.0.0.1:6379> exit

# Connect with the password
root@redis:/data# redis-cli -a 123
Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.
127.0.0.1:6379> set aa bb
OK
```

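Secret

A Secret stores sensitive information such as passwords, OAuth tokens, and SSH keys. Putting this information in a Secret is safer and more flexible than putting it directly in a Pod definition or a container image. Note that, by default, Secret data is only base64-encoded, not encrypted.

```shell
# Command format: create a Secret holding the credentials of a private image registry
kubectl create secret docker-registry xxxx \
  --docker-server=<your-registry-server> \
  --docker-username=<your-username> \
  --docker-password=<your-password> \
  --docker-email=<your-email>
```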
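For reference, `kubectl create secret docker-registry` produces a Secret of type `kubernetes.io/dockerconfigjson`, whose `.dockerconfigjson` key holds a Docker `config.json`-style document, base64-encoded like all Secret `data` values. The sketch below builds the same structure in Python to show that the credentials are only encoded, not encrypted; `docker_registry_secret` is a hypothetical helper, and the registry, user, password, and email values are placeholders.

```python
import base64
import json


def b64(text):
    return base64.b64encode(text.encode()).decode()


def docker_registry_secret(name, server, username, password, email):
    """Build a Secret manifest shaped like the one `kubectl create secret docker-registry` makes."""
    config = {
        "auths": {
            server: {
                "username": username,
                "password": password,
                "email": email,
                "auth": b64(f"{username}:{password}"),
            }
        }
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": b64(json.dumps(config))},
    }


# Placeholder values only.
secret = docker_registry_secret("xxxx", "registry.example.com", "user", "pass",
                                "user@example.com")
decoded = json.loads(base64.b64decode(secret["data"][".dockerconfigjson"]))
print(decoded["auths"]["registry.example.com"]["auth"])  # dXNlcjpwYXNz
```

Decoding `dXNlcjpwYXNz` gives back `user:pass`, which is why access to Secrets should be restricted.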

Create pod.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: private-nginx
spec:
  containers:
  - name: private-nginx
    image: <your-private-image>
  imagePullSecrets:
  - name: XXX  # pull the image using the credentials in the Secret created above
```