[Java in Practice] Day 4: Online Education Course Platform

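Table of Contents

- Requirement: Uploading Video

- 1. Requirements Analysis

- 2. Resumable Upload

- 2.1 A Simple Implementation of Chunking and Merging

- 2.2 Video Upload Flow

- 3. Interface Definition

- 4. DAO Layer Development: Uploading Chunks

- 5. Service Layer Development: Uploading Chunks

- 6. Completing the Controller Layer

- 7. Service Layer Development: Merging Chunks

- 8. Completing the Merge-Chunks Controller Layer

- 9. Cases Where Spring Transactions Fail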

Requirement: Uploading Video

1. Requirements Analysis

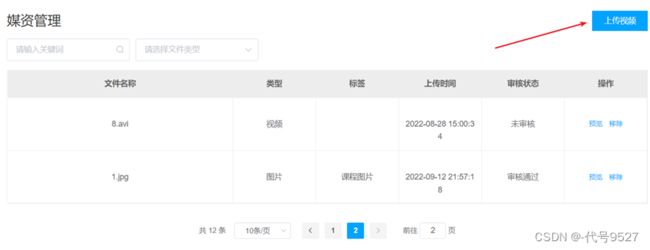

UI design mockup:

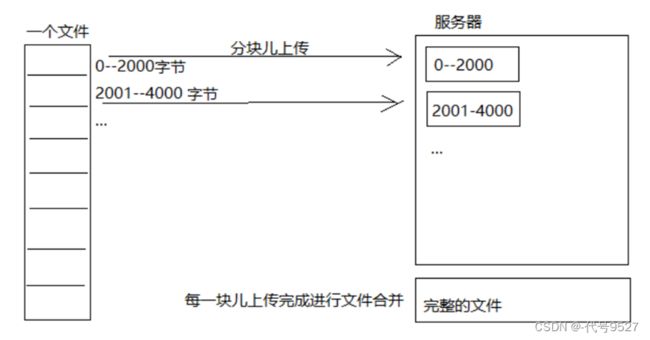

2. Resumable Upload

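The HTTP protocol itself places no limit on the size of an uploaded file, but users' network quality and hardware vary widely. If the connection drops when a large file is almost fully uploaded, the user has to start over, which makes for a very poor experience. Resumable upload is therefore the most basic requirement for large-file upload.

Resumable transfer means that a download or upload task (a single file or an archive) is deliberately split into several parts, each of which can be transferred by its own thread. If a network failure occurs, the transfer continues from the parts that have already been completed instead of starting from the beginning, which saves time and improves the user experience.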

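- Before uploading, the front end splits the file into chunks

- The chunks are uploaded one by one; after an interruption the upload restarts, and chunks that were already uploaded are skipped

- Once every chunk has been uploaded, the server merges them into the final file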
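The "skip what is already uploaded" step can be illustrated with a small sketch. It assumes the client (or server) knows the set of chunk indices that arrived before the interruption; `ResumePlanner` and `pendingChunks` are hypothetical names used only for illustration and are not part of the project.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical helper: decides which chunks still need uploading after an interruption.
class ResumePlanner {

    // Returns the indices in [0, totalChunks) that are not in the uploaded set, in ascending order.
    public static List<Integer> pendingChunks(int totalChunks, Set<Integer> uploaded) {
        List<Integer> pending = new ArrayList<>();
        for (int i = 0; i < totalChunks; i++) {
            if (!uploaded.contains(i)) {
                pending.add(i);
            }
        }
        return pending;
    }

    public static void main(String[] args) {
        // Chunks 0, 1 and 3 arrived before the connection dropped.
        System.out.println(pendingChunks(5, Set.of(0, 1, 3))); // prints [2, 4]
    }
}
```

In the real flow described later, the same information comes from asking the media service, chunk by chunk, whether a chunk already exists.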

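2.1 A Simple Implementation of Chunking and Merging

The idea behind splitting a file into chunks:

- Get the length of the source file with the length() method of the File class

- Compute the number of chunks from the chosen chunk size

- Read from the source file and write the data into each chunk file in turn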
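The chunk arithmetic in these steps can be checked in isolation. The sketch below is only an illustration: `ChunkMath` and its methods are hypothetical names, and it uses integer ceiling division rather than `Math.ceil` on a double, which gives the same result for realistic file sizes while avoiding floating point.

```java
// Hypothetical helper illustrating the chunk arithmetic; not part of the original project.
class ChunkMath {

    // Number of chunks needed to cover fileLength bytes (ceiling division, integers only).
    public static long chunkCount(long fileLength, long chunkSize) {
        return (fileLength + chunkSize - 1) / chunkSize;
    }

    // Size in bytes of the chunk at the given index; only the last chunk may be shorter.
    public static long chunkLength(long fileLength, long chunkSize, long index) {
        long start = index * chunkSize;
        return Math.min(chunkSize, fileLength - start);
    }

    public static void main(String[] args) {
        long fileLength = 2_621_440L;   // 2.5 MiB
        long chunkSize = 1024 * 1024;   // 1 MiB
        System.out.println(chunkCount(fileLength, chunkSize));     // prints 3
        System.out.println(chunkLength(fileLength, chunkSize, 2)); // prints 524288
    }
}
```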

import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;
import org.junit.jupiter.api.Test; // assumes JUnit 5; use org.junit.Test with JUnit 4

/**
 * Large-file handling tests
 */
public class BigFileTest {

    // Test splitting a file into chunks
    @Test
    public void testChunk() throws IOException {
        // Source file
        File sourceFile = new File("d:/develop/bigfile_test/nacos.mp4");
        // Directory that holds the chunks
        String chunkPath = "d:/develop/bigfile_test/chunk/";
        File chunkFolder = new File(chunkPath);
        if (!chunkFolder.exists()) {
            chunkFolder.mkdirs();
        }
        // Chunk size, chosen by us (1 MB here)
        long chunkSize = 1024 * 1024 * 1;
        // Number of chunks
        long chunkNum = (long) Math.ceil(sourceFile.length() * 1.0 / chunkSize);
        System.out.println("Total chunks: " + chunkNum);
        // Read/write buffer
        byte[] b = new byte[1024];
        // Open the source with RandomAccessFile; "r" means read-only
        try (RandomAccessFile raf_read = new RandomAccessFile(sourceFile, "r")) {
            for (int i = 0; i < chunkNum; i++) {
                // Chunk files are named by index: 0, 1, 2, ...
                File file = new File(chunkPath + i);
                if (file.exists()) {
                    file.delete();
                }
                // Create the chunk file and write to it only if creation succeeded
                boolean newFile = file.createNewFile();
                if (newFile) {
                    // Open the chunk file for writing
                    try (RandomAccessFile raf_write = new RandomAccessFile(file, "rw")) {
                        int len;
                        // Keep copying from the source until this chunk is full or the source ends
                        while ((len = raf_read.read(b)) != -1) {
                            raf_write.write(b, 0, len);
                            if (raf_write.length() >= chunkSize) {
                                break;
                            }
                        }
                    }
                    System.out.println("Finished chunk " + i);
                }
            }
        }
    }

}

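The idea behind merging the chunks:

- Find the chunk files to merge and sort them

- Create the merged file

- Read from each chunk file in order and write into the merged file created in the previous step

- Compare the MD5 of the source file with the MD5 of the merged file to decide whether the merge succeeded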
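The MD5 comparison in the last step can also be done with the JDK alone. The sketch below is an illustration under that assumption, using `java.security.MessageDigest` rather than a third-party utility; `Md5Check`, `md5Hex` and `sameContent` are hypothetical names.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper showing a JDK-only MD5 comparison.
class Md5Check {

    // Streams the input through MD5 in 8 KB blocks so a large file never sits fully in memory.
    public static String md5Hex(InputStream in) {
        try {
            MessageDigest md = MessageDigest.getInstance("MD5");
            byte[] buffer = new byte[8192];
            int len;
            while ((len = in.read(buffer)) != -1) {
                md.update(buffer, 0, len);
            }
            StringBuilder hex = new StringBuilder();
            for (byte b : md.digest()) {
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 is not available", e);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // True when both streams produce the same MD5 digest.
    public static boolean sameContent(InputStream original, InputStream merged) {
        return md5Hex(original).equals(md5Hex(merged));
    }

    public static void main(String[] args) {
        byte[] data = "hello".getBytes(StandardCharsets.UTF_8);
        System.out.println(md5Hex(new ByteArrayInputStream(data)));
        // prints 5d41402abc4b2a76b9719d911017c592
    }
}
```

For files on disk, the same method can be called with a `FileInputStream` opened in a try-with-resources block.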

// Test merging the chunks back into one file
// Needs java.io.FileInputStream, java.util.Arrays, java.util.Comparator, java.util.List;
// DigestUtils.md5Hex(InputStream) matches org.apache.commons.codec.digest.DigestUtils
@Test
public void testMerge() throws IOException {
    // Directory that holds the chunks
    File chunkFolder = new File("d:/develop/bigfile_test/chunk/");
    // Original file
    File originalFile = new File("d:/develop/bigfile_test/nacos.mp4");
    // Final merged file
    File mergeFile = new File("d:/develop/bigfile_test/nacos01.mp4");
    if (mergeFile.exists()) {
        mergeFile.delete();
    }
    // Create the new, empty merged file
    mergeFile.createNewFile();
    // Read/write buffer
    byte[] b = new byte[1024];
    // listFiles returns every file directly under the chunk directory as a File[]
    File[] fileArray = chunkFolder.listFiles();
    // Convert to a List so it can be sorted
    List<File> fileList = Arrays.asList(fileArray);
    // Sort ascending by file name; the chunk files are named 0, 1, 2, ...
    fileList.sort(Comparator.comparingInt(f -> Integer.parseInt(f.getName())));
    // Merge
    try (RandomAccessFile raf_write = new RandomAccessFile(mergeFile, "rw")) {
        // Start writing at the beginning of the file
        raf_write.seek(0);
        for (File chunkFile : fileList) {
            // Chunks are only read here, so "r" is enough
            try (RandomAccessFile raf_read = new RandomAccessFile(chunkFile, "r")) {
                int len;
                // Read from the chunk and append to the merged file
                while ((len = raf_read.read(b)) != -1) {
                    raf_write.write(b, 0, len);
                }
            }
        }
    }
    // Verify the result
    try (
        FileInputStream fileInputStream = new FileInputStream(originalFile);
        FileInputStream mergeFileStream = new FileInputStream(mergeFile)
    ) {
        // MD5 of the original file
        String originalMd5 = DigestUtils.md5Hex(fileInputStream);
        // MD5 of the merged file, for comparison
        String mergeFileMd5 = DigestUtils.md5Hex(mergeFileStream);
        if (originalMd5.equals(mergeFileMd5)) {
            System.out.println("Merge succeeded");
        } else {
            System.out.println("Merge failed");
        }
    }
}

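2.2 Video Upload Flow

While reading the flow, work out which steps need a back-end interface and how each interface should be implemented.

- The front end splits the file into chunks

- Before uploading chunks, the front end asks the media service whether the file already exists; if it does, nothing is uploaded

- If it does not exist, the upload starts

- The media service uploads each chunk to MinIO

- When all chunks are uploaded, the front end asks the media service to merge them

- The media service confirms that every chunk has been uploaded and then asks MinIO to merge the file

- After merging, the merged file is checked for completeness; if it is incomplete, it is deleted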

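// Upload the chunk files to MinIO
@Test
public void uploadChunk() {
    String chunkFolderPath = "D:\\develop\\upload\\chunk\\";
    File chunkFolder = new File(chunkFolderPath);
    // Array of chunk files
    File[] files = chunkFolder.listFiles();
    // Upload every chunk file to MinIO
    for (int i = 0; i < files.length; i++) {
        try {
            // listFiles() does not guarantee any order, so the object name is taken
            // from the chunk's own file name (0, 1, 2, ...) rather than from the loop index
            String chunkName = files[i].getName();
            UploadObjectArgs uploadObjectArgs = UploadObjectArgs.builder()
                    .bucket("testbucket") // bucket
                    .object("chunk/" + chunkName) // object path inside the bucket
                    .filename(files[i].getAbsolutePath()) // path of the local file
                    .build();
            minioClient.uploadObject(uploadObjectArgs);
            System.out.println("Uploaded chunk " + chunkName);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}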

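Merging the files with the method MinIO provides:

// Merge the chunks. MinIO requires every source chunk except the last to be at least 5 MB,
// so the chunks must be generated with a chunk size of 5 MB or more before running this
@Test
public void test_merge() throws Exception {
    // Build the list of sources that is passed to ComposeObjectArgs below
    // (a plain for loop works just as well); 6 is the number of chunks in this test
    List<ComposeSource> sources = Stream.iterate(0, i -> ++i)
            .limit(6)
            // the map step here feels a bit like turning flour into noodles
            .map(i -> ComposeSource.builder()
                    .bucket("testbucket")
                    .object("chunk/".concat(Integer.toString(i)))
                    .build())
            .collect(Collectors.toList());
    ComposeObjectArgs composeObjectArgs = ComposeObjectArgs.builder()
            .bucket("testbucket")
            .object("merge01.mp4")
            .sources(sources)
            .build();
    minioClient.composeObject(composeObjectArgs);
}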

// Remove the chunk files
@Test
public void test_removeObjects() {
    // Once the merge is done, delete the chunk objects
    List<DeleteObject> deleteObjects = Stream.iterate(0, i -> ++i)
            .limit(6)
            .map(i -> new DeleteObject("chunk/".concat(Integer.toString(i))))
            .collect(Collectors.toList());
    RemoveObjectsArgs removeObjectsArgs = RemoveObjectsArgs.builder()
            .bucket("testbucket")
            .objects(deleteObjects)
            .build();
    Iterable<Result<DeleteError>> results = minioClient.removeObjects(removeObjectsArgs);
    // The returned Iterable is lazy: the deletions are only sent while it is iterated
    results.forEach(r -> {
        DeleteError deleteError = null;
        try {
            deleteError = r.get();
        } catch (Exception e) {
            e.printStackTrace();
        }
    });
}

The code above builds the list of ComposeSource objects with a stream. The equivalent for loop is:

List<ComposeSource> sources = new ArrayList<>();
// The loop bound plays the role of Stream.iterate + limit
for (int i = 0; i < 6; i++) {
    // In the stream version this is the map step
    ComposeSource composeSource = ComposeSource.builder()
            .bucket("testbucket")
            .object("chunk/".concat(Integer.toString(i)))
            .build();
    sources.add(composeSource); // the equivalent of the final collect
}

3. Interface Definition

The result class agreed with the front end is RestResponse.java: a successful operation returns {code:0}, otherwise {code:-1}.
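The project's RestResponse class is not shown in this article. As a rough idea of what such a wrapper could look like, here is a minimal sketch that follows the {code:0} / {code:-1} convention. The class name `RestResponseSketch`, the field names and the default message are assumptions; only the `success(...)` and `validfail(..., msg)` call shapes are taken from the service code later in the article.

```java
// Hypothetical minimal result wrapper; the real RestResponse may differ in fields and methods.
class RestResponseSketch<T> {

    private final int code;    // 0 = success, -1 = failure
    private final String msg;  // human-readable message
    private final T result;    // payload, e.g. Boolean for the check endpoints

    private RestResponseSketch(int code, String msg, T result) {
        this.code = code;
        this.msg = msg;
        this.result = result;
    }

    // Successful response carrying a result.
    public static <T> RestResponseSketch<T> success(T result) {
        return new RestResponseSketch<>(0, "success", result);
    }

    // Failed response carrying a result and an error message.
    public static <T> RestResponseSketch<T> validfail(T result, String msg) {
        return new RestResponseSketch<>(-1, msg, result);
    }

    public int getCode() { return code; }

    public String getMsg() { return msg; }

    public T getResult() { return result; }

    public static void main(String[] args) {
        System.out.println(success(true).getCode());                    // prints 0
        System.out.println(validfail(false, "upload failed").getCode()); // prints -1
    }
}
```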

@Api(value = "Large file upload API", tags = "Large file upload API")
@RestController
public class BigFilesController {

    @ApiOperation(value = "Check the file before uploading")
    @PostMapping("/upload/checkfile")
    public RestResponse<Boolean> checkfile(
            @RequestParam("fileMd5") String fileMd5
    ) throws Exception {
        return null;
    }

    @ApiOperation(value = "Check a chunk before uploading it")
    @PostMapping("/upload/checkchunk")
    public RestResponse<Boolean> checkchunk(@RequestParam("fileMd5") String fileMd5,
                                            @RequestParam("chunk") int chunk) throws Exception {
        return null;
    }

    @ApiOperation(value = "Upload a chunk")
    @PostMapping("/upload/uploadchunk")
    public RestResponse uploadchunk(@RequestParam("file") MultipartFile file,
                                    @RequestParam("fileMd5") String fileMd5,
                                    @RequestParam("chunk") int chunk) throws Exception {
        return null;
    }

    @ApiOperation(value = "Merge the chunks")
    @PostMapping("/upload/mergechunks")
    public RestResponse mergechunks(@RequestParam("fileMd5") String fileMd5,
                                    @RequestParam("fileName") String fileName,
                                    @RequestParam("chunkTotal") int chunkTotal) throws Exception {
        return null;
    }

}

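4. DAO Layer Development: Uploading Chunks

The inherited BaseMapper is sufficient here; no custom mapper methods are needed.

5. Service Layer Development: Uploading Chunks

Start with the interfaces that check whether the file or a chunk already exists. The Service layer interface is defined as: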

/**
 * @description Check whether the file exists
 * @param fileMd5 MD5 of the file
 * @return RestResponse false if it does not exist, true if it does
 */
public RestResponse<Boolean> checkFile(String fileMd5);

/**
 * @description Check whether a chunk exists
 * @param fileMd5 MD5 of the file
 * @param chunkIndex index of the chunk
 * @return RestResponse false if it does not exist, true if it does
 */
public RestResponse<Boolean> checkChunk(String fileMd5, int chunkIndex);

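Implementing the Service layer methods:

@Override
public RestResponse<Boolean> checkFile(String fileMd5) {
    // Look up the file in the media files table
    MediaFiles mediaFiles = mediaFilesMapper.selectById(fileMd5);
    // A database row exists; also check MinIO in case the row is just stale data
    if (mediaFiles != null) {
        // Bucket
        String bucket = mediaFiles.getBucket();
        // Storage path
        String filePath = mediaFiles.getFilePath();
        // getObject throws if the object is missing; try-with-resources closes the stream
        try (InputStream stream = minioClient.getObject(
                GetObjectArgs.builder()
                        .bucket(bucket)
                        .object(filePath)
                        .build())) {
            if (stream != null) {
                // The file already exists
                return RestResponse.success(true);
            }
        } catch (Exception e) {
            // Treated as "not found"; fall through to the result below
            log.debug("File {} not found in MinIO: {}", filePath, e.getMessage());
        }
    }
    // The file does not exist
    return RestResponse.success(false);
}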

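@Override
public RestResponse<Boolean> checkChunk(String fileMd5, int chunkIndex) {
    // Folder that holds the chunks of this file
    String chunkFileFolderPath = getChunkFileFolderPath(fileMd5);
    // Path of the chunk itself
    String chunkFilePath = chunkFileFolderPath + chunkIndex;
    // getObject throws if the object is missing; try-with-resources closes the stream
    try (InputStream fileInputStream = minioClient.getObject(
            GetObjectArgs.builder()
                    .bucket(bucket_videoFiles)
                    .object(chunkFilePath)
                    .build())) {
        if (fileInputStream != null) {
            // The chunk already exists
            return RestResponse.success(true);
        }
    } catch (Exception e) {
        // Treated as "not found"; fall through to the result below
        log.debug("Chunk {} not found in MinIO: {}", chunkFilePath, e.getMessage());
    }
    // The chunk does not exist yet
    return RestResponse.success(false);
}

// Returns the folder that holds the chunks of a file.
// Layout used here: the first two characters of the MD5 form two directory levels,
// followed by the full MD5 and a "chunk" folder
private String getChunkFileFolderPath(String fileMd5) {
    return fileMd5.substring(0, 1) + "/" + fileMd5.substring(1, 2) + "/" + fileMd5 + "/" + "chunk" + "/";
}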
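To make the resulting layout concrete, the sketch below applies the same string logic to a sample MD5 value. `ChunkPathDemo` and `chunkFolder` are hypothetical names used for illustration; the sample MD5 is simply the digest of the string "hello".

```java
// Hypothetical stand-alone copy of the path logic, for illustration only.
class ChunkPathDemo {

    // Same layout as getChunkFileFolderPath: <1st char>/<2nd char>/<md5>/chunk/
    public static String chunkFolder(String fileMd5) {
        return fileMd5.substring(0, 1) + "/" + fileMd5.substring(1, 2) + "/" + fileMd5 + "/chunk/";
    }

    public static void main(String[] args) {
        String md5 = "5d41402abc4b2a76b9719d911017c592";
        System.out.println(chunkFolder(md5));     // prints 5/d/5d41402abc4b2a76b9719d911017c592/chunk/
        System.out.println(chunkFolder(md5) + 3); // prints 5/d/5d41402abc4b2a76b9719d911017c592/chunk/3
    }
}
```

Spreading objects over two single-character directory levels keeps any one prefix from accumulating every file's chunks.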

Next comes the interface for uploading a chunk. The Service layer interface is defined as:

/**
 * @description Upload a chunk
 * @param fileMd5 MD5 of the file
 * @param chunk index of the chunk
 * @param bytes content of the chunk
 */
public RestResponse uploadChunk(String fileMd5, int chunk, byte[] bytes);

The implementation:

@Override
public RestResponse uploadChunk(String fileMd5, int chunk, byte[] bytes) {
    // Folder that holds the chunks of this file
    String chunkFileFolderPath = getChunkFileFolderPath(fileMd5);
    // Path of the chunk itself
    String chunkFilePath = chunkFileFolderPath + chunk;
    try {
        // Reuse the addMediaFilesToMinIO method defined earlier to store the chunk in MinIO
        addMediaFilesToMinIO(bytes, bucket_videoFiles, chunkFilePath);
        return RestResponse.success(true);
    } catch (Exception ex) {
        ex.printStackTrace();
        log.debug("Uploading chunk {} failed: {}", chunkFilePath, ex.getMessage());
    }
    return RestResponse.validfail(false, "Failed to upload chunk");
}

6. Completing the Controller Layer

@ApiOperation(value = "Check the file before uploading")
@PostMapping("/upload/checkfile")
public RestResponse<Boolean> checkfile(
        @RequestParam("fileMd5") String fileMd5
) throws Exception {
    return mediaFileService.checkFile(fileMd5);
}

@ApiOperation(value = "Check a chunk before uploading it")
@PostMapping("/upload/checkchunk")
public RestResponse<Boolean> checkchunk(@RequestParam("fileMd5") String fileMd5,
                                        @RequestParam("chunk") int chunk) throws Exception {
    return mediaFileService.checkChunk(fileMd5, chunk);
}

@ApiOperation(value = "Upload a chunk")
@PostMapping("/upload/uploadchunk")
public RestResponse uploadchunk(@RequestParam("file") MultipartFile file,
                                @RequestParam("fileMd5") String fileMd5,
                                @RequestParam("chunk") int chunk) throws Exception {
    // The service method defined above takes the chunk content as byte[]
    // If the service took a local file path instead, the usual approach would be:
    // create a temp file with File.createTempFile("minio", "temp"),
    // copy the upload with file.transferTo(tempFile), then pass tempFile.getAbsolutePath()
    return mediaFileService.uploadChunk(fileMd5, chunk, file.getBytes());
}

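7. Service Layer Development: Merging Chunks

Define the Service layer interface:

/**
 * @description Merge the chunks
 * @param companyId organization id
 * @param fileMd5 MD5 of the file
 * @param chunkTotal total number of chunks
 * @param uploadFileParamsDto file information
 */
public RestResponse mergechunks(Long companyId, String fileMd5, int chunkTotal, UploadFileParamsDto uploadFileParamsDto);

Write the implementation. The rough approach is:

- Locate the chunk files and call the MinIO SDK to merge them

- Verify that the merged file matches the source file; if it does, the merge succeeded

- Save the file information to the database

- Delete the chunk files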
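The order of these steps can be sketched without MinIO or a database. The example below is only a simulation under stated assumptions: an in-memory `Map` stands in for the chunk objects, verification compares the merged content's MD5 with `fileMd5` (which the front end computed from the source file), and the database step is left as a comment. `MergeFlowSketch` and its methods are hypothetical names, not the project's implementation.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory simulation of the merge steps; the Map stands in for MinIO.
class MergeFlowSketch {

    // Step 1: concatenate chunks 0..chunkTotal-1 in index order.
    public static byte[] mergeChunks(Map<Integer, byte[]> chunks, int chunkTotal) {
        ByteArrayOutputStream merged = new ByteArrayOutputStream();
        for (int i = 0; i < chunkTotal; i++) {
            byte[] chunk = chunks.get(i);
            if (chunk == null) {
                throw new IllegalStateException("Missing chunk " + i);
            }
            merged.write(chunk, 0, chunk.length);
        }
        return merged.toByteArray();
    }

    public static String md5Hex(byte[] data) {
        try {
            StringBuilder hex = new StringBuilder();
            for (byte b : MessageDigest.getInstance("MD5").digest(data)) {
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 is not available", e);
        }
    }

    // Runs the steps in order; returns false and keeps the chunks if verification fails.
    public static boolean mergeAndVerify(Map<Integer, byte[]> chunks, int chunkTotal, String fileMd5) {
        byte[] merged = mergeChunks(chunks, chunkTotal);   // step 1: merge
        if (!md5Hex(merged).equals(fileMd5)) {             // step 2: verify against the source MD5
            return false;
        }
        // step 3: the real service would save the file information to the database here
        chunks.clear();                                    // step 4: remove the chunks
        return true;
    }

    public static void main(String[] args) {
        Map<Integer, byte[]> store = new HashMap<>();
        store.put(0, "hel".getBytes(StandardCharsets.UTF_8));
        store.put(1, "lo".getBytes(StandardCharsets.UTF_8));
        // MD5 of "hello"
        System.out.println(mergeAndVerify(store, 2, "5d41402abc4b2a76b9719d911017c592")); // prints true
    }
}
```

Cleaning up the chunks only after verification succeeds means a failed merge can be retried without uploading everything again.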

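@Override
public RestResponse mergechunks(Long companyId, String fileMd5, int chunkTotal, UploadFileParamsDto uploadFileParamsDto) {
    //===== Get the chunk file folder path =====
    String chunkFileFolderPath = getChunkFileFolderPath(fileMd5);
    // Assemble the chunk file paths into a List ...
    // (the remainder of this method is cut off in the source)

8. Completing the Merge-Chunks Controller Layer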

@ApiOperation(value = "Merge the chunks")
@PostMapping("/upload/mergechunks")
public RestResponse mergechunks(@RequestParam("fileMd5") String fileMd5,
                                @RequestParam("fileName") String fileName,
                                @RequestParam("chunkTotal") int chunkTotal) throws Exception {
    // Hard-coded organization id for now
    Long companyId = 1232141425L;
    UploadFileParamsDto uploadFileParamsDto = new UploadFileParamsDto();
    uploadFileParamsDto.setFileType("001002");
    uploadFileParamsDto.setTags("course video");
    uploadFileParamsDto.setRemark("");
    uploadFileParamsDto.setFilename(fileName);
    return mediaFileService.mergechunks(companyId, fileMd5, chunkTotal, uploadFileParamsDto);
}