Spring Boot + Sharding-JDBC + Seata Integration (Part 5): Distributed Transactions

I. pom.xml

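<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.4.2</version>
    </parent>
    <groupId>com.yy</groupId>
    <artifactId>springboot-sharding-jdbc-seata</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>springboot-sharding-jdbc-seata</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>1.8</java.version>
        <sharding-sphere.version>4.1.1</sharding-sphere.version>
        <spring-cloud.version>2020.0.1</spring-cloud.version>
        <spring-cloud-alibab.version>2021.1</spring-cloud-alibab.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-jdbc</artifactId>
            <version>2.5.6</version>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <scope>runtime</scope>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-actuator</artifactId>
        </dependency>
        <dependency>
            <groupId>com.alibaba.cloud</groupId>
            <artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-starter-bootstrap</artifactId>
        </dependency>
        <dependency>
            <groupId>com.alibaba.cloud</groupId>
            <artifactId>spring-cloud-starter-alibaba-nacos-config</artifactId>
        </dependency>
        <dependency>
            <groupId>org.apache.shardingsphere</groupId>
            <artifactId>sharding-jdbc-spring-boot-starter</artifactId>
            <version>${sharding-sphere.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.shardingsphere</groupId>
            <artifactId>sharding-transaction-xa-core</artifactId>
            <version>${sharding-sphere.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.shardingsphere</groupId>
            <artifactId>sharding-transaction-base-seata-at</artifactId>
            <version>${sharding-sphere.version}</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba.cloud</groupId>
            <artifactId>spring-cloud-alibaba-seata</artifactId>
            <version>2.2.0.RELEASE</version>
            <exclusions>
                <exclusion>
                    <groupId>io.seata</groupId>
                    <artifactId>seata-spring-boot-starter</artifactId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>io.seata</groupId>
            <artifactId>seata-spring-boot-starter</artifactId>
            <version>1.4.2</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>druid-spring-boot-starter</artifactId>
            <version>1.1.10</version>
        </dependency>
        <dependency>
            <groupId>com.baomidou</groupId>
            <artifactId>mybatis-plus-boot-starter</artifactId>
            <version>3.2.0</version>
        </dependency>
        <dependency>
            <groupId>com.baomidou</groupId>
            <artifactId>mybatis-plus-generator</artifactId>
            <version>3.2.0</version>
        </dependency>
        <dependency>
            <groupId>org.freemarker</groupId>
            <artifactId>freemarker</artifactId>
            <version>2.3.28</version>
        </dependency>
        <dependency>
            <groupId>io.springfox</groupId>
            <artifactId>springfox-swagger2</artifactId>
            <version>2.7.0</version>
        </dependency>
        <dependency>
            <groupId>io.springfox</groupId>
            <artifactId>springfox-swagger-ui</artifactId>
            <version>2.7.0</version>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
        <dependency>
            <groupId>cn.hutool</groupId>
            <artifactId>hutool-all</artifactId>
            <version>5.5.8</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba.nacos</groupId>
            <artifactId>nacos-client</artifactId>
            <version>1.4.1</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-dependencies</artifactId>
                <version>${spring-cloud.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
            <dependency>
                <groupId>com.alibaba.cloud</groupId>
                <artifactId>spring-cloud-alibaba-dependencies</artifactId>
                <version>${spring-cloud-alibab.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
    <repositories>
        <repository>
            <id>public</id>
            <name>aliyun nexus</name>
            <url>https://maven.aliyun.com/nexus/content/groups/public/</url>
            <releases>
                <enabled>true</enabled>
            </releases>
        </repository>
    </repositories>
    <pluginRepositories>
        <pluginRepository>
            <id>public</id>
            <name>aliyun nexus</name>
            <url>https://maven.aliyun.com/nexus/content/groups/public//</url>
            <releases>
                <enabled>true</enabled>
            </releases>
            <snapshots>
                <enabled>false</enabled>
            </snapshots>
        </pluginRepository>
    </pluginRepositories>
</project>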

II. Configuration files

1. bootstrap.yml

spring:
  # Nacos registration and configuration
  cloud:
    nacos:
      config:
        server-addr: ip:13348
        file-extension: yaml
        group: SEATA_GROUP
        namespace: 85a8089c-4d4f-485d-b873-107e679528a6
        prefix: ${spring.application.name}-${spring.profiles.active}.yaml
      discovery:
        server-addr: ip:13348
        group: SEATA_GROUP
        namespace: 85a8089c-4d4f-485d-b873-107e679528a6
  application:
    name: springboot-sharding-jdbc-seata
  profiles:
    active: seata

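2. application.yml

server:
  port: 10013
  servlet:
    context-path: /
spring:
  application:
    name: springboot-sharding-jdbc-seata
  main:
    allow-bean-definition-overriding: true
  profiles:
    active: seata
  dynamic:
    # Data source configuration
    druid:
      # Druid connection pool monitoring
      stat-view-servlet:
        enabled: true
        url-pattern: /druid/*
        login-username: admin
        login-password: admin
      # Number of physical connections created at initialization
      initial-size: 5
      # Maximum number of connections in the pool
      max-active: 30
      # Minimum number of connections in the pool
      min-idle: 5
      # Maximum wait time when acquiring a connection, in milliseconds
      max-wait: 60000
      # Interval between checks for idle connections that should be closed, in milliseconds
      time-between-eviction-runs-millis: 60000
      # Minimum time a connection may stay idle before it can be evicted, in milliseconds
      min-evictable-idle-time-millis: 300000
      # SQL used to check whether a connection is valid; must be a query
      validation-query: select count(*) from dual
      # Recommended: true. It does not hurt performance and keeps connections safe. When a connection is
      # borrowed and has been idle longer than timeBetweenEvictionRunsMillis, validationQuery is run to check it.
      test-while-idle: true
      # Run validationQuery when a connection is borrowed; enabling this reduces performance.
      test-on-borrow: false
      # Run validationQuery when a connection is returned; enabling this reduces performance.
      test-on-return: false
      # Whether to cache PreparedStatements (PSCache). PSCache greatly improves performance on databases
      # that support cursors, such as Oracle. Disabling it is recommended for MySQL.
      pool-prepared-statements: false
      # Must be greater than 0 to enable PSCache; when greater than 0, poolPreparedStatements is set to true automatically.
      max-pool-prepared-statement-per-connection-size: 50
      # Filters used for monitoring statistics; without them the monitoring page cannot collect SQL statistics
      filters: stat,wall
      # Use connectProperties to enable mergeSql and slow SQL logging
      connection-properties:
        druid.stat.mergeSql: true
        druid.stat.slowSqlMillis: 500
      # Merge the monitoring data of multiple DruidDataSource instances
      use-global-data-source-stat: true
      filter:
        stat:
          log-slow-sql: true
          slow-sql-millis: 1000
          merge-sql: true
        wall:
          config:
            multi-statement-allow: true

# mybatis-plus configuration
mybatis-plus:
  configuration:
    map-underscore-to-camel-case: true
    auto-mapping-behavior: full
    log-impl: org.apache.ibatis.logging.stdout.StdOutImpl
  mapper-locations: classpath*:mapper/**/*Mapper.xml
  global-config:
    # Logical delete configuration
    db-config:
      # Value before deletion
      logic-not-delete-value: 0
      # Value after deletion
      logic-delete-value: 1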

3. application-seata.yml

# Sharding-JDBC 4.x distributed transactions (XA)
# For details see the official documentation: https://shardingsphere.apache.org/document/legacy/4.x/document/cn/overview/
# Configuration for historical versions: https://github.com/apache/shardingsphere/blob/master/docs/document/content/others/api-change-history/shardingsphere-jdbc/spring-boot-starter.en.md
spring:
  shardingsphere:
    # Print SQL
    props:
      sql:
        show: true
    # Data sources
    datasource:
      names: ds0,ds1
      ds0:
        type: com.alibaba.druid.pool.DruidDataSource
        driver-class-name: com.mysql.cj.jdbc.Driver
        url: jdbc:mysql://ip:13301/lth?useUnicode=true&useSSL=false&serverTimezone=Asia/Shanghai
        username: root
        password:
        minPoolSize: 5
      ds1:
        type: com.alibaba.druid.pool.DruidDataSource
        driver-class-name: com.mysql.cj.jdbc.Driver
        url: jdbc:mysql://ip:13301/mumu?useUnicode=true&useSSL=false&serverTimezone=Asia/Shanghai
        username: root
        password:
        minPoolSize: 5
      ds:
        maxPoolSize: 100
    sharding:
      # Tables without a sharding rule are routed to the default data source:
      # any table that has no actual-data-nodes entry below runs against ds0
      default-data-source-name: ds0
      # Table configuration. Note: there is no database or table sharding here; these are simply two unrelated databases.
      # d_sharding_xa_test1 lives in the lth database (ds0)
      # d_sharding_xa_test2 lives in the mumu database (ds1)
      tables:
        d_sharding_xa_test1:
          actual-data-nodes: ds0.d_sharding_xa_test1
          # Primary key generation. Snowflake is the default and produces a 64-bit long; UUID is also supported.
          key-generator:
            # Primary key column name
            column: id
            # Key generation strategy: UUID or SNOWFLAKE. Snowflake layout: sign bit (1 bit), timestamp (41 bits)
            type: SNOWFLAKE
        d_sharding_xa_test2:
          actual-data-nodes: ds1.d_sharding_xa_test2
          # Primary key generation. Snowflake is the default and produces a 64-bit long; UUID is also supported.
          key-generator:
            # Primary key column name
            column: id
            # Key generation strategy: UUID or SNOWFLAKE. Snowflake layout: sign bit (1 bit), timestamp (41 bits)
            type: SNOWFLAKE

# Seata distributed transaction configuration
seata:
  enabled: true
  application-id: ${spring.application.name} # service name
  tx-service-group: sharding_jdbc_tx_group # custom transaction group name
  enable-auto-data-source-proxy: true # enable automatic data source proxying
  use-jdk-proxy: false
  service:
    vgroupMapping:
      sharding_jdbc_tx_group: seata-server # name under which the TC is registered in the registry
    enable-degrade: false # whether to enable degradation
    disable-global-transaction: false # whether to disable global transactions
    use-jdk-proxy: false
    grouplist:
      seata-server: ip:8091
  client:
    load-balance:
      type: RandomLoadBalance
    rm:
      report-success-enable: false
  config:
    type: nacos
    nacos:
      namespace: 85a8089c-4d4f-485d-b873-107e679528a6 # Nacos namespace ID
      serverAddr: ip:13348 # Nacos server address
      group: SEATA_GROUP # group name
      userName: "nacos"
      password: "nacos"
  registry:
    type: nacos
    nacos:
      application: seata-server # Seata's application name in the registry (must match the server side)
      serverAddr: ip:13348
      group: SEATA_GROUP
      namespace: 85a8089c-4d4f-485d-b873-107e679528a6
      userName: "nacos"
      password: "nacos"
      cluster: seata-server
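
Both tables above use the SNOWFLAKE key generator, and the comments mention a 1-bit sign and a 41-bit timestamp. The following is a minimal sketch of how such a 64-bit key can be packed and unpacked. It is not ShardingSphere's implementation: the split of the remaining 22 bits into 10 worker bits and 12 sequence bits is the commonly used layout and is an assumption here, and the class name SnowflakeSketch and the epoch are hypothetical.

```java
import java.time.Instant;

/** Conceptual sketch of a Snowflake-style 64-bit key; not ShardingSphere's implementation. */
final class SnowflakeSketch {
    // Assumed layout: 1 sign bit | 41 timestamp bits | 10 worker bits | 12 sequence bits.
    static final int SEQUENCE_BITS = 12;
    static final int WORKER_BITS = 10;
    static final long SEQUENCE_MASK = (1L << SEQUENCE_BITS) - 1;
    static final long WORKER_MASK = (1L << WORKER_BITS) - 1;
    static final int TIMESTAMP_SHIFT = SEQUENCE_BITS + WORKER_BITS;
    // Hypothetical custom epoch, chosen only for illustration.
    static final long EPOCH_MILLIS = Instant.parse("2020-01-01T00:00:00Z").toEpochMilli();

    private final long workerId;
    private long lastMillis = -1L;
    private long sequence = 0L;

    SnowflakeSketch(long workerId) {
        if (workerId < 0 || workerId > WORKER_MASK) {
            throw new IllegalArgumentException("workerId out of range: " + workerId);
        }
        this.workerId = workerId;
    }

    /** Packs timestamp, worker id and sequence into one long. */
    static long compose(long millisSinceEpoch, long workerId, long sequence) {
        return (millisSinceEpoch << TIMESTAMP_SHIFT) | (workerId << SEQUENCE_BITS) | sequence;
    }

    static long timestampOf(long id) { return id >>> TIMESTAMP_SHIFT; }
    static long workerOf(long id) { return (id >>> SEQUENCE_BITS) & WORKER_MASK; }
    static long sequenceOf(long id) { return id & SEQUENCE_MASK; }

    /** Returns the next key; keys from one instance are strictly increasing. */
    synchronized long nextId() {
        long now = System.currentTimeMillis();
        if (now < lastMillis) {
            throw new IllegalStateException("clock moved backwards");
        }
        if (now == lastMillis) {
            sequence = (sequence + 1) & SEQUENCE_MASK;
            if (sequence == 0) {
                // Sequence exhausted within this millisecond: wait for the next one.
                while (now <= lastMillis) {
                    now = System.currentTimeMillis();
                }
            }
        } else {
            sequence = 0;
        }
        lastMillis = now;
        return compose(now - EPOCH_MILLIS, workerId, sequence);
    }

    public static void main(String[] args) {
        SnowflakeSketch generator = new SnowflakeSketch(3);
        long id = generator.nextId();
        System.out.println(id + " worker=" + workerOf(id) + " sequence=" + sequenceOf(id));
    }
}
```

Because the timestamp occupies the high bits, keys sort roughly by creation time, which is why such keys work well as a BIGINT primary key.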

4. seata.conf

client {
    application.id = springboot-sharding-jdbc-seata
    # get cluster name
    transaction.service.group = sharding_jdbc_tx_group
}

## groupMapping.sharding_jdbc_tx_group = "default"
## Two ways to run:
## 1. YAML configuration + seata.conf
## 2. registry.conf + seata.conf

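5. SQL for creating the undo_log table

The undo log is the core of AT mode. It is handled on the RM (resource manager) side: every time a database unit is processed, one undo_log record is generated.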

CREATE TABLE `undo_log` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`branch_id` bigint(20) NOT NULL,

`xid` varchar(100) NOT NULL,

`context` varchar(128) NOT NULL,

`rollback_info` longblob NOT NULL,

`log_status` int(11) NOT NULL,

`log_created` datetime NOT NULL,

`log_modified` datetime NOT NULL,

PRIMARY KEY (`id`) USING BTREE,

UNIQUE KEY `ux_undo_log` (`xid`,`branch_id`) USING BTREE

) ENGINE=InnoDB DEFAULT CHARSET=utf8 ROW_FORMAT=DYNAMIC;
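
To make the role of undo_log more concrete, here is a conceptual in-memory sketch of the idea: before each write, the previous value (the "before image") is recorded under the transaction's xid, and a rollback replays those records in reverse order. This is a simplified model under stated assumptions, not Seata's implementation; the class UndoLogSketch and its methods are hypothetical, and real AT mode also stores an after image and persists the records in the rollback_info column.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;

/** In-memory model of before-image undo logging; a teaching sketch, not Seata's code. */
final class UndoLogSketch {

    /** One undo record: which transaction changed which row, and the value before the change. */
    static final class UndoRecord {
        final String xid;
        final long rowId;
        final String beforeImage; // null means the row did not exist before the write

        UndoRecord(String xid, long rowId, String beforeImage) {
            this.xid = xid;
            this.rowId = rowId;
            this.beforeImage = beforeImage;
        }
    }

    private final Map<Long, String> table = new HashMap<>();
    private final Deque<UndoRecord> undoLog = new ArrayDeque<>();

    /** Records the before image, then applies the write. */
    void write(String xid, long rowId, String newValue) {
        undoLog.push(new UndoRecord(xid, rowId, table.get(rowId)));
        table.put(rowId, newValue);
    }

    /** On commit the undo records are no longer needed and are simply deleted. */
    void commit(String xid) {
        undoLog.removeIf(record -> record.xid.equals(xid));
    }

    /** On rollback the before images are restored, newest record first. */
    void rollback(String xid) {
        Iterator<UndoRecord> it = undoLog.iterator(); // push() adds at the head, so this is newest-first
        while (it.hasNext()) {
            UndoRecord record = it.next();
            if (!record.xid.equals(xid)) {
                continue;
            }
            if (record.beforeImage == null) {
                table.remove(record.rowId);
            } else {
                table.put(record.rowId, record.beforeImage);
            }
            it.remove();
        }
    }

    String read(long rowId) { return table.get(rowId); }

    int pendingUndoRecords() { return undoLog.size(); }

    public static void main(String[] args) {
        UndoLogSketch db = new UndoLogSketch();
        db.write("xid-1", 1L, "committed value");
        db.commit("xid-1");
        db.write("xid-2", 1L, "dirty value");
        db.rollback("xid-2");
        System.out.println(db.read(1L));
    }
}
```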

6. registry.conf

# Seata service: change type from file to nacos so that the Seata service is configured through Nacos

registry {

type = "nacos"

nacos {

application = "seata-server"

serverAddr = "ip:13348"

group = "SEATA_GROUP"

namespace = "85a8089c-4d4f-485d-b873-107e679528a6"

cluster = "default"

username = "nacos"

password = "nacos"

}

}

config {

# file, nacos, apollo, zk, consul, etcd3

type = "nacos"

nacos {

serverAddr = "ip:13348"

namespace = "85a8089c-4d4f-485d-b873-107e679528a6"

group = "SEATA_GROUP"

username = "nacos"

password = "nacos"

}

}

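III. Functional verification

1. SeataAopConfig

import io.seata.spring.annotation.GlobalTransactionalInterceptor;
import org.aspectj.lang.annotation.Aspect;
import org.springframework.aop.Advisor;
import org.springframework.aop.aspectj.AspectJExpressionPointcut;
import org.springframework.aop.support.DefaultPointcutAdvisor;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Aspect
@Configuration
public class SeataAopConfig {

    // Matches every method annotated with @GlobalTransactional
    private static final String AOP_POINTCUT_EXPRESSION = "@annotation(io.seata.spring.annotation.GlobalTransactional)";

    @Bean
    public GlobalTransactionalInterceptor globalTransactionalInterceptor() {
        // null: no custom failure handler is supplied
        return new GlobalTransactionalInterceptor(null);
    }

    @Bean
    public Advisor seataAdviceAdvisor() {
        AspectJExpressionPointcut pointcut = new AspectJExpressionPointcut();
        pointcut.setExpression(AOP_POINTCUT_EXPRESSION);
        return new DefaultPointcutAdvisor(pointcut, globalTransactionalInterceptor());
    }
}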

2. SeataFilter (servlet filter)

@Slf4j
@Component
public class SeataFilter implements Filter {

    @Override
    public void init(FilterConfig filterConfig) throws ServletException {
        log.info("init called");
    }

    @Override
    public void doFilter(ServletRequest servletRequest, ServletResponse servletResponse, FilterChain filterChain) throws IOException, ServletException {
        HttpServletRequest req = (HttpServletRequest) servletRequest;
        String xid = req.getHeader(RootContext.KEY_XID.toLowerCase());
        log.info("xid->{}", xid);
        boolean isBind = false;
        if (StringUtils.isNotBlank(xid)) {
            // If an xid is present, bind it to RootContext so that Seata treats this request
            // as part of the same distributed transaction
            RootContext.bind(xid);
            isBind = true;
        }
        try {
            filterChain.doFilter(servletRequest, servletResponse);
        } finally {
            // Always unbind, so the xid does not leak to the next request served by this thread
            if (isBind) {
                RootContext.unbind();
            }
        }
    }

    @Override
    public void destroy() {
        log.info("destroy called");
    }
}
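
The bind / try / finally-unbind pattern in the filter matters because servlet containers reuse worker threads. The sketch below models that pattern with a plain ThreadLocal so it can run without Seata on the classpath. XidContextSketch and its methods are hypothetical stand-ins for illustration and are not Seata's RootContext.

```java
import java.util.function.Supplier;

/** Thread-bound transaction id holder; a stand-in used only to illustrate the filter's pattern. */
final class XidContextSketch {
    private static final ThreadLocal<String> XID = new ThreadLocal<>();

    static void bind(String xid) { XID.set(xid); }

    static String unbind() {
        String previous = XID.get();
        XID.remove();
        return previous;
    }

    static String current() { return XID.get(); }

    /**
     * Mirrors the filter: bind only when the header carries an xid,
     * run the downstream work, and always unbind afterwards.
     */
    static <T> T handle(String xidHeader, Supplier<T> downstream) {
        boolean bound = false;
        if (xidHeader != null && !xidHeader.trim().isEmpty()) {
            bind(xidHeader);
            bound = true;
        }
        try {
            return downstream.get();
        } finally {
            if (bound) {
                unbind();
            }
        }
    }

    public static void main(String[] args) {
        // Inside the request the xid is visible; afterwards the thread is clean again.
        String seenInside = handle("example-xid", XidContextSketch::current);
        System.out.println("inside=" + seenInside + ", after=" + current());
    }
}
```

Without the finally block, an exception thrown by downstream code would leave the xid bound to a pooled thread, and an unrelated later request could be enlisted in a transaction that has already finished.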

3. ShardingSeataTestController (Seata test)

@Slf4j
// Seata distributed transaction annotation
@GlobalTransactional(rollbackFor = Exception.class)
@RestController
@RequestMapping("/api/tx")
public class ShardingSeataTestController {

    @Autowired
    private ShardingXaTest1Mapper xaTest1Mapper;

    @Autowired
    private ShardingXaTest2Mapper xaTest2Mapper;

    @Transactional
    @GetMapping("/saveSeata")
    public String insertSeataTest(){
        // d_sharding_xa_test1 lives in the lth database (ds0)
        ShardingXaTest1 xaTest1 = new ShardingXaTest1();
        xaTest1.setXaName(UUID.randomUUID().toString()+"-base");
        xaTest1.setXaRemark(UUID.randomUUID().toString()+"-basemark");
        xaTest1Mapper.insert(xaTest1);

        // d_sharding_xa_test2 lives in the mumu database (ds1)
        ShardingXaTest2 xaTest2 = new ShardingXaTest2();
        xaTest2.setXaName(UUID.randomUUID().toString()+"-base");
        xaTest2.setXaRemark(UUID.randomUUID().toString()+"-basemark");
        xaTest2Mapper.insert(xaTest2);

        return "success";
    }
}

4. ShardingXaTestController (Sharding-JDBC transactions)

@Slf4j
@RestController
@RequestMapping("/api/tx")
public class ShardingXaTestController {

    @Autowired
    private ShardingXaTest1Mapper xaTest1Mapper;

    @Autowired
    private ShardingXaTest2Mapper xaTest2Mapper;

    /**
     * https://shardingsphere.apache.org/document/legacy/4.x/document/cn/features/transaction/
     * https://shardingsphere.apache.org/document/legacy/4.x/document/cn/manual/sharding-jdbc/usage/transaction/#%E9%85%8D%E7%BD%AEspring-boot%E7%9A%84%E4%BA%8B%E5%8A%A1%E7%AE%A1%E7%90%86%E5%99%A8
     *
     * XA transaction manager configuration (optional)
     * ShardingSphere's default XA transaction manager is Atomikos. It writes xa_tx.log to the project's logs directory.
     * This log is required for XA crash recovery; do not delete it.
     * Atomikos can also be customized by adding a jta.properties file to the project's classpath.
     *
     * BASE (flexible) transaction manager (SEATA-AT configuration)
     * 1. Follow the steps in seata-work-shop to download and start the Seata server; Step6 and Step7 are sufficient.
     * 2. Create the undo_log table in every sharded database instance (MySQL is used here).
     * 3. Add seata.conf to the classpath.
     * 4. Adjust Seata's file.conf and registry.conf to match your environment.
     */

    /**
     * Supports TransactionType.LOCAL, TransactionType.XA and TransactionType.BASE
     * http://localhost:10013/api/tx/saveXa
     * @return "success" once both inserts have run
     */
    @Transactional
    @ShardingTransactionType(TransactionType.XA)
    @GetMapping("/saveXa")
    public String insertXaTest(){
        // d_sharding_xa_test1 lives in the lth database (ds0)
        ShardingXaTest1 xaTest1 = new ShardingXaTest1();
        xaTest1.setXaName(UUID.randomUUID().toString()+"-xa");
        xaTest1.setXaRemark(UUID.randomUUID().toString()+"-xamark");
        xaTest1Mapper.insert(xaTest1);

        // d_sharding_xa_test2 lives in the mumu database (ds1)
        ShardingXaTest2 xaTest2 = new ShardingXaTest2();
        xaTest2.setXaName(UUID.randomUUID().toString()+"-xa");
        xaTest2.setXaRemark(UUID.randomUUID().toString()+"-xamark");
        xaTest2Mapper.insert(xaTest2);

        return "success";
    }

    @Transactional
    @ShardingTransactionType(TransactionType.BASE)
    @GetMapping("/saveBase")
    public String insertBaseTest(){
        // d_sharding_xa_test1 lives in the lth database (ds0)
        ShardingXaTest1 xaTest1 = new ShardingXaTest1();
        xaTest1.setXaName(UUID.randomUUID().toString()+"-base");
        xaTest1.setXaRemark(UUID.randomUUID().toString()+"-basemark");
        xaTest1Mapper.insert(xaTest1);

        // d_sharding_xa_test2 lives in the mumu database (ds1)
        ShardingXaTest2 xaTest2 = new ShardingXaTest2();
        xaTest2.setXaName(UUID.randomUUID().toString()+"-base");
        xaTest2.setXaRemark(UUID.randomUUID().toString()+"-basemark");
        xaTest2Mapper.insert(xaTest2);

        return "success";
    }
}

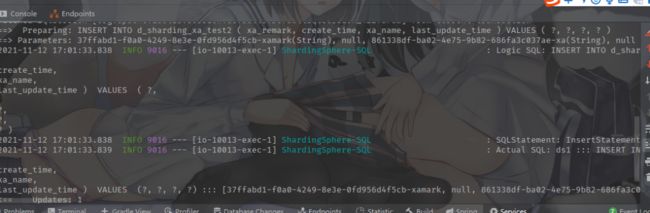

5. Start the project and test

Sharding-JDBC transaction test: http://localhost:10013/api/tx/saveXa

Seata transaction test: http://localhost:10013/api/tx/saveBase

Errors: common problems when setting up Seata

1. can not get cluster name in registry config 'service.vgroupMapping.account-service-fescar-service-group', please make sure registry config correct

1.1. Change vgroupMapping.my_test_tx_group = "default"

to vgroupMapping.account-service-fescar-service-group = "default"

1.2. The dataId must be service.vgroupMapping. followed by the transaction group name.

2. no available service found in cluster 'default', please make sure registry config correct and keep

2.1. registry.nacos.application must be the application name under which Seata is registered in the registry.

3. Note

vgroupMapping.delivery-note-group = "seata-server"

seata-server.grouplist = "192.168.145.185:8091"

Take particular care with this setting. The default format is:

vgroupMapping.<transaction group name> = "<transaction group value>"

<transaction group value>.grouplist = "127.0.0.1:8091"

Note in particular: if the Seata server runs on a different IP address, the transaction group value must not be default. If it is default, Seata ignores the <transaction group value>.grouplist = "127.0.0.1:8091" line

and sets the grouplist directly to 127.0.0.1:8091, so the address of your own Seata server never takes effect.
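
The two-step lookup behind items 1 and 3 can be modelled as follows: the transaction group is first mapped to a cluster name through service.vgroupMapping.<group>, and the cluster name is then mapped to an address through <cluster>.grouplist. This is a minimal sketch of that lookup over a plain map, assuming file-style keys. TxGroupResolverSketch is a hypothetical class, not part of Seata, and its error messages only paraphrase the ones quoted above.

```java
import java.util.HashMap;
import java.util.Map;

/** Models the transaction group -> cluster -> address lookup; not Seata's implementation. */
final class TxGroupResolverSketch {
    static final String VGROUP_PREFIX = "service.vgroupMapping.";

    /** Step 1: transaction group name -> cluster name. */
    static String clusterOf(Map<String, String> config, String txGroup) {
        String cluster = config.get(VGROUP_PREFIX + txGroup);
        if (cluster == null) {
            throw new IllegalStateException(
                "can not get cluster name for '" + VGROUP_PREFIX + txGroup + "'");
        }
        return cluster;
    }

    /** Step 2: cluster name -> server address list. */
    static String addressOf(Map<String, String> config, String txGroup) {
        String cluster = clusterOf(config, txGroup);
        String address = config.get("service." + cluster + ".grouplist");
        if (address == null) {
            throw new IllegalStateException("no grouplist configured for cluster '" + cluster + "'");
        }
        return address;
    }

    public static void main(String[] args) {
        Map<String, String> config = new HashMap<>();
        config.put("service.vgroupMapping.delivery-note-group", "seata-server");
        config.put("service.seata-server.grouplist", "192.168.145.185:8091");
        System.out.println(addressOf(config, "delivery-note-group"));
    }
}
```

A mismatch at step 1 (the client's tx-service-group has no vgroupMapping entry) corresponds to error 1, while a cluster name with no reachable server corresponds to error 2.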