【机器学习】感知机模型实现

文章目录

- 数据准备

- 原始形式

- 对偶形式

- 总结

- 参考

数据准备

这里采用minst手写数字数据集作为对象数据,源数据取自 Dight Recognizer | Kaggle;该数据集包含了42k张从0~9的手写数字,每一行数据代表了28 x 28的图片和它相应的标注类别。

由于感知机是一个二分类的线性判别分类器,因此需要对10个类别的原数据进行处理成两个类别;为了保持数据的平衡,这里简单将0~4取作负类 -1,将5~9取作正类 +1,代码如下:

# @Author: phd

# @Date: 19-3-28

# @Site: github.com/phdsky

# @Description:

# Prepare binary classification mnist data

# label(number) : -1(0 ~ 4) 1(5 ~ 9)

import os

import pandas as pd

mnist = pd.DataFrame(pd.read_csv("mnist.csv"))

print(mnist.shape)

# First convert 0 ~ 4 to -1

mnist.loc[mnist['label'] < 5, 'label'] = -1

# Then convert 5 to 9 to 1

mnist.loc[mnist['label'] >= 5, 'label'] = 1

file_name = "mnist_binary.csv"

if not os.path.exists(file_name):

mnist.to_csv(file_name, index=False)

else:

print("%s already exists" % file_name)

如果下载存在问题,处理前后的数据可以直接从github上下载:

https://github.com/phdsky/xCode/tree/main/机器学习/统计学习方法/data

原始形式

感知机利用每一个误分类点对参数进行随机梯度下降,直到所有输入样本均被正确分类;原始形式算法步骤如下:

根据上述算法步骤,将数据简单分为训练集和测试集两个部分,利用感知机模型对训练集数据直接进行训练(不进行图像特征提取),每遍历一次训练集输出本epoch的训练精度,最终用测试集对训练好的感知机模型进行测试;实现代码如下:

# @Author: phd

# @Date: 19-3-28

# @Site: github.com/phdsky

# @Description: NULL

import time

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

## Parameters setting

w0 = 0

b0 = 0

epoch_times = 300

# learning params

rate = 1 # (0 < rate <= 1)

ratio = 3

period = 30

def sign(x):

if x >= 0:

return 1

elif x < 0:

return -1

else:

print("Sign function input wrong!\n")

class Perceptron(object):

def __init__(self, w, b, epoch, learning_rate, learning_ratio, learning_period):

self.weight = w

self.bias = b

self.epoch = epoch

self.lr_rate = learning_rate

self.lr_ratio = learning_ratio

self.lr_period = learning_period

def train(self, X, y):

print("Training on train dataset...")

# Feature arrange - Simple init - Column vector

self.weight = np.full((len(X[0]), 1), self.weight, dtype=float)

for epoch in range(self.epoch):

miss_count = 0

data_count = len(X)

for i in range(data_count): # Single batch training

feature = X[i]

label = y[i]

result = label * (np.dot(feature, self.weight) + self.bias)

if result <= 0:

miss_count += 1

self.weight += np.reshape(self.lr_rate * label * feature, (len(feature), 1))

self.bias += self.lr_rate * label

# Print training log

print("\rEpoch %d\tLearning rate %f\tTraining accuracy %f" %

((epoch + 1), self.lr_rate, (int(data_count - miss_count) / data_count)), end='')

# Decay learning rate

if epoch % self.lr_period == 0:

self.lr_rate /= self.lr_ratio

# Stop training

if self.lr_rate <= 1e-6:

print("Learning rate is too low, Early stopping...\n")

break

# print("Parameters after learning")

# print(self.weight)

# print(self.bias)

print("\nEnd of training progress\n")

def predict(self, X, y):

print("Predicting on test dataset...")

hit_count = 0

data_count = len(X)

for i in range(data_count):

feature = X[i]

label = y[i]

result = np.dot(feature, self.weight) + self.bias

predict_label = sign(result)

if predict_label == label:

hit_count += 1

print("Predicting accuracy %f\n" % (int(hit_count) / data_count))

if __name__ == "__main__":

mnist_data = pd.read_csv("../data/mnist_binary.csv")

mnist_values = mnist_data.values

images = mnist_values[::, 1::]

labels = mnist_values[::, 0]

X_train, X_test, y_train, y_test = train_test_split(

images, labels, test_size=0.33, random_state=42

)

# bpc - Binary Perceptron Classification

bpc = Perceptron(w=w0, b=b0, epoch=epoch_times, learning_rate=rate,

learning_ratio=ratio, learning_period=period)

# Start training

bpc.train(X=X_train, y=y_train)

# Start predicting

bpc.predict(X=X_test, y=y_test)

代码输出

/Users/phd/Softwares/anaconda3/bin/python /Users/phd/Desktop/ML/perceptron/perceptron.py

Training on train dataset...

Epoch 300 Learning rate 0.000017 Training accuracy 0.838984

End of training progress

Predicting on test dataset...

Predicting accuracy 0.826118

Process finished with exit code 0

其中训练精度和测试精度均在80%+,相比于胡乱猜测50%的概率还是学到了一点东西,但是准确度还是不高,可见感知机的学习能力比较一般。

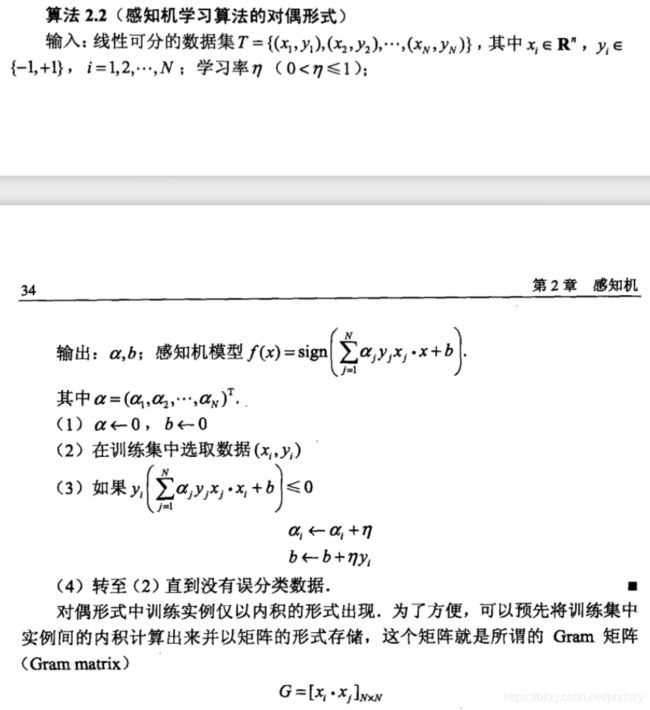

对偶形式

相比于原始形式每次采用一个误分类点对模型进行学习修正,对偶形式提前将误分类点对模型参数修正的贡献次数进行计算,通过提前计算样本间的内积矩阵Gram,减少算法在运行过程中的循环计算次数,通过查表就可以直接获得对应参数,加快了算法的迭代速度;对偶形式算法步骤如下:

根据上述算法步骤,同样地使用上述数据进行训练和预测,但在计算Gram矩阵的过程中耗费时间过长,因此使用书中的样例数据进行测试以验证代码的正确性;实现代码如下:

# @Author: phd

# @Date: 2019-03-30

# @Site: github.com/phdsky

# @Description: NULL

import time

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

## Parameters setting

w0 = 0

b0 = 0

epoch_times = 1

# learning params

rate = 1 # (0 < rate <= 1)

ratio = 3

period = 10

def sign(x):

if x >= 0:

return 1

elif x < 0:

return -1

else:

print("Sign function input wrong!\n")

class PerceptronDual(object):

def __init__(self, w, b, epoch, learning_rate, learning_ratio, learning_period):

self.weight = w

self.bias = b

self.epoch = epoch

self.lr_rate = learning_rate

self.lr_ratio = learning_ratio

self.lr_period = learning_period

def train(self, X, y):

print("Training on train dataset...")

data_count = len(X)

gram = np.zeros((data_count, data_count))

# Compute gram matrix - A symmetric matrix

# Too slow to compute

for i in range(data_count):

for j in range(i, data_count):

gram[i][j] = np.dot(X[i], X[j])

if i != j:

gram[j][i] = gram[i][j]

alpha = np.zeros((data_count, 1), dtype=float)

for epoch in range(self.epoch):

print("--------Epoch %d--------" % (epoch + 1))

print("Learning rate %f" % self.lr_rate)

miss_count = 0

data_arrange = [0, 2, 2, 2, 0, 2, 2]

for i in data_arrange:

# for i in range(data_count):

label_i = y[i]

result = 0

for j in range(data_count):

label_j = y[j]

result += alpha[j] * label_j * gram[i][j]

result = label_i * (result + self.bias)

if result <= 0:

miss_count += 1

alpha[i] += self.lr_rate

self.bias += self.lr_rate * label_i

print("a:", alpha.transpose())

print("b:", self.bias)

print("----------------------")

# print("Training accuracy %f\n" % (int(data_count - miss_count) / data_count))

# Decay learning rate

if epoch % self.lr_period == 0:

self.lr_rate /= self.lr_ratio

# Stop training

if self.lr_rate <= 1e-6:

print("Learning rate is too low, Early stopping...\n")

break

alpha_y = alpha * np.reshape(y, alpha.shape)

self.weight = np.dot(np.transpose(X), alpha_y)

print("Parameters after learning")

print("w:", self.weight.transpose())

print("b:", self.bias)

print("End of training progress\n")

def predict(self, X, y):

print("Predicting on test dataset...")

hit_count = 0

data_count = len(X)

for i in range(data_count):

feature = X[i]

label = y[i]

result = np.dot(feature, self.weight) + self.bias

predict_label = sign(result)

if predict_label == label:

hit_count += 1

print("Predicting accuracy %f\n" % (int(hit_count) / data_count))

if __name__ == "__main__":

#mnist_data = pd.read_csv("../data/mnist_binary.csv")

#mnist_values = mnist_data.values

#images = mnist_values[::, 1::]

#labels = mnist_values[::, 0]

# X_train, X_test, y_train, y_test = train_test_split(

# images, labels, test_size=0.33, random_state=42

# )

X_train = np.asarray([[3, 3], [4, 3], [1, 1]])

y_train = np.asarray([1, 1, -1])

# bdpc - Binary Dual Perceptron Classification

bdpc = PerceptronDual(w=w0, b=b0, epoch=epoch_times, learning_rate=rate,

learning_ratio=ratio, learning_period=period)

# Start training

bdpc.train(X=X_train, y=y_train)

# Start predicting

#bdpc.predict(X=X_test, y=y_test)

代码输出

/Users/phd/Softwares/anaconda3/bin/python /Users/phd/Desktop/ML/perceptron/perceptron_dual.py

Training on train dataset...

--------Epoch 1--------

Learning rate 1.000000

a: [[1. 0. 0.]]

b: 1

----------------------

a: [[1. 0. 1.]]

b: 0

----------------------

a: [[1. 0. 2.]]

b: -1

----------------------

a: [[1. 0. 3.]]

b: -2

----------------------

a: [[2. 0. 3.]]

b: -1

----------------------

a: [[2. 0. 4.]]

b: -2

----------------------

a: [[2. 0. 5.]]

b: -3

----------------------

Parameters after learning

w: [[1. 1.]]

b: -3

End of training progress

Process finished with exit code 0

总结

- 感知机是二分类线性判别分类器

- 感知机要求输入数据必须是线性可分的,否则感知机不收敛且迭代会发生震荡

- 数据可分意味着感知机一定存在满足条件的超平面将输入数据完全分开,并且在训练数据上的误分类次数有上界

- 感知机采用随机梯度下降进行参数更新,解具有随机性且具有无穷多个解

- 对偶形式可以加速算法迭代过程,Gram矩阵的计算需要进行特定的优化

参考

- 《统计学习方法》

- 感知机原理小结