High-Speed Rail Requirements

[Baidu Cloud data source](https://pan.baidu.com/s/1Jtb2OrDoLcsLbux93pedsA) (extraction code: zgit)

import java.util.Properties

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

import org.apache.spark.sql.{DataFrame, SQLContext, SaveMode}

object Need2 {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setMaster("local").setAppName(this.getClass.getSimpleName)

val sc = new SparkContext(conf)

val sQLContext = new SQLContext(sc)

import sQLContext.implicits._

val sorceFile: RDD[String] = sc.textFile("E:\\五道口大数据\\吉祥spark开始\\20200205\\sparkSQL第二天需求\\需求2\\列车出厂时间数据.txt")

val df1: DataFrame = sorceFile.map(line => {

val splits = line.split("[|]")

(splits(0), splits(1))

}).toDF("train_id", "out_time")

val sorceFile1: RDD[String] = sc.textFile("E:\\20200205\\sparkSQL第二天需求\\需求2\\高铁数据.txt")

val df2: DataFrame = sorceFile1.map(line => {

val splits = line.split("[|]")

(splits(1), splits(26))

}).toDF("train_id2", "atp_error")

df1.join(df2,$"train_id"===$"train_id2","right_outer")

.select("out_time","atp_error")

.createTempView("t_atp")

val result: DataFrame = sQLContext.sql(

"""

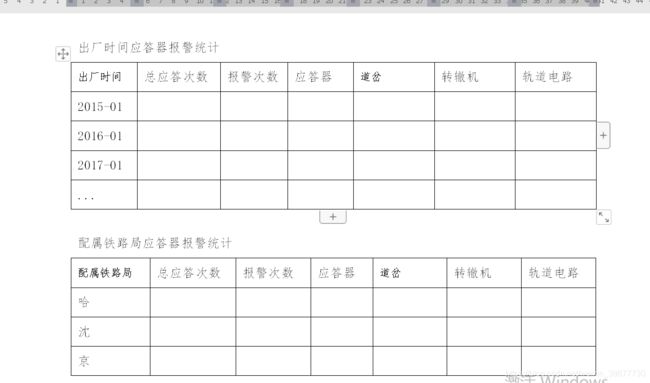

select out_time,count(1) total,

sum(case when atp_error='' then 0 else 1 end) as t_atp_sum,

sum(case when atp_error='应答器' then 1 else 0 end) as t_atp_yingda_sum,

sum(case when atp_error='道岔' then 1 else 0 end) as t_atp_daocha_sum,

sum(case when atp_error='转辙机' then 1 else 0 end) as t_atp_zhuanzheji_sum,

sum(case when atp_error='轨道电路' then 1 else 0 end) as t_atp_tiegui_sum

from t_atp

group by out_time

order by out_time

""".stripMargin)

val url:String="jdbc:mysql://localhost:3306/stu?characterEncoding=utf-8&serverTimezone=Asia/Shanghai"

val table:String="need03"

val conn = new Properties()

conn.setProperty("user","root")

conn.setProperty("password","123")

conn.setProperty("driver","com.mysql.jdbc.Driver")

result.write.mode(SaveMode.Overwrite).jdbc(url,table,conn)

sc.stop()

}

}
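The sketch below reproduces the Need2 join and query in plain Scala, so the semantics can be checked without a Spark cluster. It performs a right outer join and then counts faults per `out_time`. The object name `RightOuterJoinSketch` and the sample rows are hypothetical, and only two of the fault columns are reproduced. It illustrates the semantics and is not the author's Spark job.

```scala
object RightOuterJoinSketch {
  // Hypothetical sample rows: (train_id, out_time) from the factory-time file
  val factory: Seq[(String, String)] = Seq(("G1", "2015"), ("G2", "2016"))
  // Hypothetical sample rows: (train_id2, atp_error) from the rail data file; "" means no fault
  val faults: Seq[(String, String)] = Seq(("G1", "应答器"), ("G1", ""), ("G2", "道岔"), ("G9", "轨道电路"))

  // Right outer join: every right-hand row survives; out_time is None when no left row matches
  def rightOuterJoin(left: Seq[(String, String)], right: Seq[(String, String)]): Seq[(Option[String], String)] =
    right.flatMap { case (id, err) =>
      val matches: Seq[Option[String]] = left.collect { case (lid, outTime) if lid == id => Some(outTime) }
      if (matches.isEmpty) Seq((Option.empty[String], err)) else matches.map(m => (m, err))
    }

  // Per out_time: (count(1), rows with any ATP fault, rows whose fault is "应答器")
  def summarize(rows: Seq[(Option[String], String)]): Map[Option[String], (Int, Int, Int)] =
    rows.groupBy(_._1).map { case (k, grp) =>
      k -> ((grp.size, grp.count(_._2 != ""), grp.count(_._2 == "应答器")))
    }

  def main(args: Array[String]): Unit =
    summarize(rightOuterJoin(factory, faults)).foreach(println)
}
```

Train `G9` has no factory-time row, so its record ends up under the `None` key. That corresponds to the null `out_time` group in the Spark result.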

```scala
import java.util.Properties

import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.{DataFrame, SQLContext, SaveMode}

// Requirement: count signal faults per humidity band
object Need2pro {

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setMaster("local").setAppName(this.getClass.getSimpleName)
    val sc = new SparkContext(conf)
    val sqlContext = new SQLContext(sc)
    import sqlContext.implicits._

    val sourceFile: RDD[String] = sc.textFile("E:\\20200205\\sparkSQL第二天需求\\需求2\\高铁数据.txt")
    val df1: DataFrame = sourceFile.map(line => {
      // limit -1 keeps trailing empty fields instead of silently dropping them
      val splits = line.split("[|]", -1)
      // Field 54: humidity (throws NumberFormatException if the field is not an integer)
      val hum = splits(54).toInt
      // Each branch needs only an upper bound, because earlier branches already handled lower values
      val strhum =
        if (hum < 0) "hum<0"
        else if (hum < 20) "0-20"
        else if (hum < 30) "20-30"
        else if (hum < 40) "30-40"
        else if (hum < 50) "40-50"
        else if (hum < 60) "50-60"
        else if (hum < 70) "60-70"
        else if (hum < 80) "70-80"
        else "80以上" // 80 and above
      // Field 35: signal fault type ('' means no fault)
      (strhum, splits(35))
    }).toDF("hum", "signal_error")

    df1.createTempView("t_hum")

    // Signal fault types: 电源 (power supply), 灯泡 (lamp), 开灯继电器 (lighting relay), 信号机接口电路 (signal interface circuit).
    // hum is a string label, so "order by hum" sorts lexically ("hum<0" comes last).
    val result: DataFrame = sqlContext.sql(
      """
        |select hum, count(1) total,
        |  sum(case when signal_error='' then 0 else 1 end) as t_atp_sum,
        |  sum(case when signal_error='电源' then 1 else 0 end) as t_atp_dy_sum,
        |  sum(case when signal_error='灯泡' then 1 else 0 end) as t_atp_dp_sum,
        |  sum(case when signal_error='开灯继电器' then 1 else 0 end) as t_atp_kd_sum,
        |  sum(case when signal_error='信号机接口电路' then 1 else 0 end) as t_atp_xh_sum
        |from t_hum
        |group by hum
        |order by hum
        |""".stripMargin)

    // Write the summary to MySQL, overwriting any existing table
    val url: String = "jdbc:mysql://localhost:3306/stu?characterEncoding=utf-8&serverTimezone=Asia/Shanghai"
    val table: String = "need04"
    val conn = new Properties()
    conn.setProperty("user", "root")
    conn.setProperty("password", "123")
    conn.setProperty("driver", "com.mysql.jdbc.Driver")
    result.write.mode(SaveMode.Overwrite).jdbc(url, table, conn)

    sc.stop()
  }
}
```
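The humidity banding and line parsing in Need2pro can be tested on their own. The sketch below is a hypothetical pure-Scala version; `HumidityBucketSketch` does not appear in the original code. `bucket` reproduces the same bands. `parse` assumes you would rather skip a line whose humidity field is missing or non-numeric than fail the whole job, which is what `.toInt` does. It splits with limit `-1` because Java's `String.split(regex)` drops trailing empty strings, so a record ending in empty fields would otherwise come back shorter than expected.

```scala
import scala.util.Try

object HumidityBucketSketch {
  // Same bands as Need2pro: below 0, [0,20), 10-point bands up to 80, then "80以上" (80 and above)
  def bucket(hum: Int): String =
    if (hum < 0) "hum<0"
    else if (hum < 20) "0-20"
    else if (hum < 80) { val lo = hum / 10 * 10; s"$lo-${lo + 10}" }
    else "80以上"

  // Parses one pipe-delimited line into (humidity band, fault).
  // Returns None if the line is too short or the humidity field is not an integer.
  def parse(line: String, humIdx: Int, errIdx: Int): Option[(String, String)] = {
    val f = line.split("[|]", -1) // -1 keeps trailing empty fields
    if (f.length <= math.max(humIdx, errIdx)) None
    else Try(f(humIdx).trim.toInt).toOption.map(h => (bucket(h), f(errIdx)))
  }
}
```

In Spark, a function like `parse` would be used with `flatMap` rather than `map`, so that rejected lines simply disappear from the RDD.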

```scala
import java.util.Properties

import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.sql.{DataFrame, SQLContext, SaveMode}

// Requirement: count signal faults per location (train_addr)
object ZiTestNeed3 {

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setMaster("local").setAppName(this.getClass.getSimpleName)
    val sc = new SparkContext(conf)
    val sqlContext = new SQLContext(sc)
    import sqlContext.implicits._

    // Field 4: location; field 35: signal fault type ('' means no fault)
    val sourceFile: RDD[String] = sc.textFile("E:\\20200205\\sparkSQL第二天需求\\需求2\\高铁数据.txt")
    val df1: DataFrame = sourceFile.map(line => {
      val splits = line.split("[|]", -1)
      (splits(4), splits(35))
    }).toDF("train_addr", "error")

    df1.createTempView("t_addr")

    // Signal fault types: 电源 (power supply), 灯泡 (lamp), 开灯继电器 (lighting relay), 信号机接口电路 (signal interface circuit)
    val result: DataFrame = sqlContext.sql(
      """
        |select train_addr, count(1) total,
        |  sum(case when error='' then 0 else 1 end) as t_atp_sum,
        |  sum(case when error='电源' then 1 else 0 end) as t_atp_dy_sum,
        |  sum(case when error='灯泡' then 1 else 0 end) as t_atp_dp_sum,
        |  sum(case when error='开灯继电器' then 1 else 0 end) as t_atp_kd_sum,
        |  sum(case when error='信号机接口电路' then 1 else 0 end) as t_atp_xh_sum
        |from t_addr
        |group by train_addr
        |order by train_addr
        |""".stripMargin)

    // Write the summary to MySQL, overwriting any existing table
    val url: String = "jdbc:mysql://localhost:3306/stu?characterEncoding=utf-8&serverTimezone=Asia/Shanghai"
    val table: String = "need05"
    val conn = new Properties()
    conn.setProperty("user", "root")
    conn.setProperty("password", "123")
    conn.setProperty("driver", "com.mysql.jdbc.Driver")
    result.write.mode(SaveMode.Overwrite).jdbc(url, table, conn)

    sc.stop()
  }
}
```
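Every query in these jobs uses the same conditional-aggregation pattern. `count(1)` counts all rows in a group. `sum(case when error='' then 0 else 1 end)` counts rows with any fault. Each `sum(case when error='X' then 1 else 0 end)` counts one fault type. Below is a minimal plain-Scala sketch of that pattern. It assumes the rows have already been reduced to `(key, error)` pairs, as in ZiTestNeed3. `ConditionalAggSketch` and `Summary` are hypothetical names.

```scala
object ConditionalAggSketch {
  // One output row per key: count(1), rows with a non-empty error, and one count per category
  final case class Summary(key: String, total: Int, anyError: Int, perCategory: Seq[Int])

  def summarize(rows: Seq[(String, String)], categories: Seq[String]): Seq[Summary] =
    rows.groupBy(_._1).toSeq.sortBy(_._1).map { case (key, grp) =>
      val errors = grp.map(_._2)
      Summary(key, errors.size, errors.count(_ != ""), categories.map(c => errors.count(_ == c)))
    }
}
```

A fault value outside the category list is still counted in `anyError`, just as the SQL counts it in `t_atp_sum`. The per-category sums therefore need not add up to the total.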

```scala
import java.util.Properties

import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.sql.{DataFrame, SQLContext, SaveMode}

// Requirement: count ATP faults per weather condition
object ZItestNeed4 {

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setMaster("local").setAppName(this.getClass.getSimpleName)
    val sc = new SparkContext(conf)
    val sqlContext = new SQLContext(sc)
    import sqlContext.implicits._

    // Field 53: weather; field 26: ATP fault type ('' means no fault)
    val sourceFile = sc.textFile("E:\\20200205\\sparkSQL第二天需求\\需求2\\高铁数据.txt")
    val df1: DataFrame = sourceFile.map(line => {
      val splits = line.split("[|]", -1)
      (splits(53), splits(26))
    }).toDF("weather", "t_error")

    df1.createTempView("t_weather")

    // ATP fault types: 应答器 (balise), 道岔 (turnout), 转辙机 (point machine), 轨道电路 (track circuit).
    // Column aliases use the same ATP names as Need2.
    val result: DataFrame = sqlContext.sql(
      """
        |select weather, count(1) total,
        |  sum(case when t_error='' then 0 else 1 end) as t_atp_sum,
        |  sum(case when t_error='应答器' then 1 else 0 end) as t_atp_yingda_sum,
        |  sum(case when t_error='道岔' then 1 else 0 end) as t_atp_daocha_sum,
        |  sum(case when t_error='转辙机' then 1 else 0 end) as t_atp_zhuanzheji_sum,
        |  sum(case when t_error='轨道电路' then 1 else 0 end) as t_atp_tiegui_sum
        |from t_weather
        |group by weather
        |order by weather
        |""".stripMargin)

    // Write the summary to MySQL, overwriting any existing table
    val url: String = "jdbc:mysql://localhost:3306/stu?characterEncoding=utf-8&serverTimezone=Asia/Shanghai"
    val table: String = "need06"
    val conn = new Properties()
    conn.setProperty("user", "root")
    conn.setProperty("password", "123")
    conn.setProperty("driver", "com.mysql.jdbc.Driver")
    result.write.mode(SaveMode.Overwrite).jdbc(url, table, conn)

    sc.stop()
  }
}
```
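The four jobs differ only in the grouping column, error column, view name, and category list. That means the query text could be generated rather than copied, which also prevents mismatched column aliases. The hypothetical helper `faultSummarySql` below shows one way to do this. It inlines the category values directly into the SQL, so it is only suitable for trusted constants such as the fault names in this document, never for user input.

```scala
object FaultSqlBuilder {
  // Builds "select key, count(1) total, sum(case when ...) ... group by key order by key".
  // categories: (fault value as it appears in the data, output column alias)
  def faultSummarySql(view: String, keyCol: String, errCol: String, totalAlias: String,
                      categories: Seq[(String, String)]): String = {
    val perCategory = categories.map { case (value, alias) =>
      s"sum(case when $errCol='$value' then 1 else 0 end) as $alias"
    }
    val cols = Seq(keyCol, "count(1) total",
      s"sum(case when $errCol='' then 0 else 1 end) as $totalAlias") ++ perCategory
    s"select ${cols.mkString(", ")} from $view group by $keyCol order by $keyCol"
  }
}
```

In a job, the generated string would be passed to the SQL context's `sql` method in place of the hand-written query, for example with `Seq("应答器" -> "t_atp_yingda_sum", "道岔" -> "t_atp_daocha_sum")`.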