ElasticSearch系列——文档操作

文章目录

- Elasticsearch的增删查改(CURD)

-

- 一 CURD之Create

- 二 CURD之Update

- 三 CURD之Delete

- 四 CURD之Retrieve

- Elasticsearch之查询的两种方式

-

- 一 前言

- 二 准备数据

- 三 查询字符串

- 四 结构化查询

- term与match查询

-

- 一 match查询

-

- 1.1 准备数据

- 1.2 match系列之match(按条件查询)

- 1.3 match系列之match_all(查询全部)

- 1.4 match系列之match_phrase(短语查询)

- 1.4 match系列之match_phrase_prefix(最左前缀查询)

- 1.5 match系列之multi_match(多字段查询)

- 二 term查询

- 4 Elasticsearch之排序查询

-

- 一 准备数据

- 二 排序查询:sort

-

- 2.1 降序:desc

- 2.2 升序:asc

- 三 不是什么数据类型都能排序

- 4 Elasticsearch之排序查询

-

- 一 准备数据

- 二 排序查询:sort

-

- 2.1 降序:desc

- 2.2 升序:asc

- 三 不是什么数据类型都能排序

- 5-Elasticsearch之分页查询

-

- 一 准备数据

- 二 分页查询:from/size

- 6-Elasticsearch之布尔查询

-

- 一 前言

- 二 准备数据

- 三 must

- 四 should

- 五 must_not

- 6 filter

- 7-Elasticsearch之查询结果过滤

-

- 一 前言

- 二 准备数据

- 三 结果过滤:_source

- 7-Elasticsearch之高亮查询

-

- 一 前言

- 二 准备数据

- 三 默认高亮显示

- 四 自定义高亮显示

- 8-Elasticsearch之聚合函数

-

- 一 前言

- 二 准备数据

- 三 avg

- 四 max

- 五 min

- 六 sum

- 七 分组查询

- 9-Elasticsearch之mappings

-

- 一 前言

- 二 映射是什么?

- 三 映射类型

- 四 字段的数据类型

- 五 映射约束

- 六 一个简单的映射示例

- 10-Elasticsearch mappings之dynamic的三种状态

-

- 一 前言

- 二 动态映射(dynamic:true)

- 三 静态映射(dynamic:false)

- 四 严格模式(dynamic:strict)

- 一 前言

- 二 index

- 三 copy_to

- 四 对象属性

- 五 settings设置

-

- 5.1 设置主、复制分片

- 12-Elasticsearch之mappings parameters

-

- 一 ignore_above

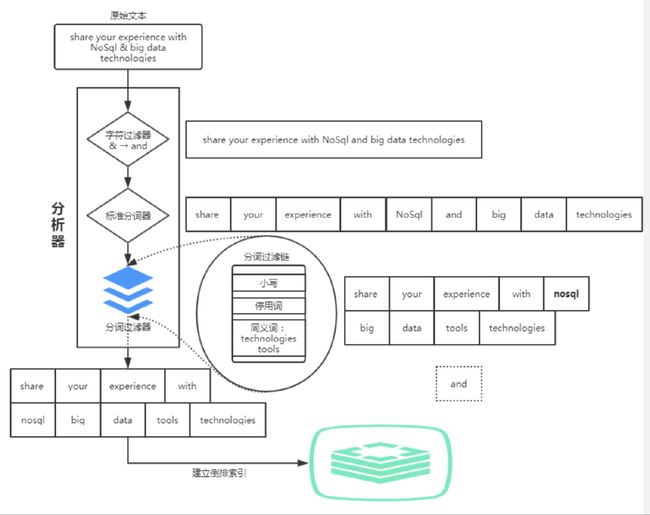

- 13-Elasticsearch - 分析过程

-

- 一 前言

- 二分析过程

- 三分析器

-

- 3.1 标准分析器:standard analyzer

- 3.2 简单分析器:simple analyzer

- 3.3 空白分析器:whitespace analyzer

- 3.4 停用词分析器:stop analyzer

- 3.5 关键词分析器:keyword analyzer

- 3.6 模式分析器:pattern analyzer

- 3.7 语言和多语言分析器:chinese

- 3.8 雪球分析器:snowball analyzer

- 四 字符过滤器

-

- 4.1 HTML字符过滤器

- 4.2 映射字符过滤器

- 4.3 模式替换过滤器

- 五 分词器

-

- 5.1 标准分词器:standard tokenizer

- 5.2 关键词分词器:keyword tokenizer

- 5.3 字母分词器:letter tokenizer

- 5.4 小写分词器:lowercase tokenizer

- 5.5 空白分词器:whitespace tokenizer

- 5.6 模式分词器:pattern tokenizer

- 5.7 UAX URL电子邮件分词器:UAX RUL email tokenizer

- 5.8 路径层次分词器:path hierarchy tokenizer

- 六 分词过滤器

-

- 6.1 自定义分词过滤器

- 6.2 自定义小写分词过滤器

- 6.3 多个分词过滤器

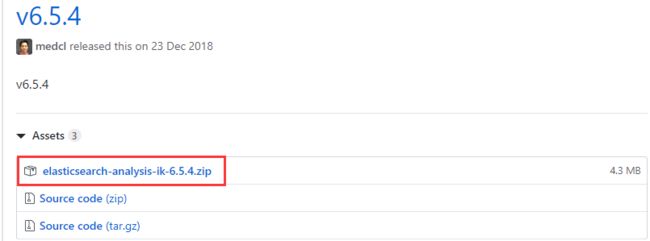

- 14-Elasticsearch - ik分词器

-

- 一 前言

- 二 ik分词器的由来

- 三 IK分词器插件的安装

-

- 3.1 安装

- 3.2 测试

- 3.3 ik目录简介

- 四 ik分词器的使用

-

- 4.1 第一个ik示例

- 4.2 ik_max_word

- 4.3 ik_smart

- 4.4 ik之短语查询

- 4.5 ik之短语前缀查询

- 15-Elasticsearch for Python之连接

-

- 一 前言

- 二 依赖下载

- 三 Python连接elasticsearch

- 四 配置忽略响应状态码

- 五 一个简单的示例

- 16-Elasticsearch for Python之操作

-

- 一 前言

- 二 结果过滤

- 三 Elasticsearch(es对象)

- 四 Indices(es.indices)

- 五 Cluster(集群相关)

- 六 Node(节点相关)

- 七 Cat(一种查询方式)

- 八 Snapshot(快照相关)

- 九 Task(任务相关)

Elasticsearch的增删查改(CURD)

一 CURD之Create

PUT lqz/doc/1

{

"name":"顾老二",

"age":30,

"from": "gu",

"desc": "皮肤黑、武器长、性格直",

"tags": ["黑", "长", "直"]

}

他明处貌似还有俩老婆:

PUT lqz/doc/2

{

"name":"大娘子",

"age":18,

"from":"sheng",

"desc":"肤白貌美,娇憨可爱",

"tags":["白", "富","美"]

}

PUT lqz/doc/3

{

"name":"龙套偏房",

"age":22,

"from":"gu",

"desc":"mmp,没怎么看,不知道怎么形容",

"tags":["造数据", "真","难"]

}

家里红旗不倒,家外彩旗飘摇:

PUT lqz/doc/4

{

"name":"石头",

"age":29,

"from":"gu",

"desc":"粗中有细,狐假虎威",

"tags":["粗", "大","猛"]

}

PUT lqz/doc/5

{

"name":"魏行首",

"age":25,

"from":"广云台",

"desc":"仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags":["闭月","羞花"]

}

注意:当执行PUT命令时,如果数据不存在,则新增该条数据,如果数据存在则修改该条数据。

咱们通过GET命令查询一下:

GET lqz/doc/1

结果如下:

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_version" : 1,

"found" : true,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

}

}

查询也没啥问题,但是你可能说了,人家老二是黄种人,怎么是黑的呢?好吧咱改改desc和tags:

PUT lqz/doc/1

{

"desc":"皮肤很黄,武器很长,性格很直",

"tags":["很黄","很长", "很直"]

}

上例,我们仅修改了desc和tags两处,而name、age和from三个属性没有变化,我们可以忽略不写吗?查查看:

GET lqz/doc/1

结果如下:

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_version" : 3,

"found" : true,

"_source" : {

"desc" : "皮肤很黄,武器很长,性格很直",

"tags" : [

"很黄",

"很长",

"很直"

]

}

}

哎呀,出事故了!修改是修改了,但结果不太理想啊,因为name、age和from属性都没啦!

注意:**PUT命令,在做修改操作时,如果未指定其他的属性,则按照指定的属性进行修改操作。**也就是如上例所示的那样,我们修改时只修改了desc和tags两个属性,其他的属性并没有一起添加进去。

很明显,这是病!dai治!怎么治?上车,咱们继续往下走!

二 CURD之Update

让我们首先恢复一下事故现场:

PUT lqz/doc/1

{

"name":"顾老二",

"age":30,

"from": "gu",

"desc": "皮肤黑、武器长、性格直",

"tags": ["黑", "长", "直"]

}

我们要将黑修改成黄:

POST lqz/doc/1/_update

{

"doc": {

"desc": "皮肤很黄,武器很长,性格很直",

"tags": ["很黄","很长", "很直"]

}

}

上例中,我们使用POST命令,在id后面跟_update,要修改的内容放到doc文档(属性)中即可。

我们再来查询一次:

GET lqz/doc/1

结果如下:

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_version" : 5,

"found" : true,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤很黄,武器很长,性格很直",

"tags" : [

"很黄",

"很长",

"很直"

]

}

}

结果如上例所示,现在其他的属性没有变化,只有desc和tags属性被修改。

注意:POST命令,这里可用来执行修改操作(还有其他的功能),POST命令配合_update完成修改操作,指定修改的内容放到doc中。

写了这么多,我也发现我上面有讲的不对的地方——石头不是跟顾老二不清不楚,石头是跟小桃不清不楚!好吧,刚才那个数据是一个错误示范!我们这就把它干掉!

三 CURD之Delete

DELETE lqz/doc/4

很简单,通过DELETE命令,就可以删除掉那个错误示范了!

删除效果如下:

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_version" : 4,

"result" : "deleted",

"_shards" : {

"total" : 2,

"successful" : 1,

"failed" : 0

},

"_seq_no" : 4,

"_primary_term" : 1

}

我们再来查询一遍:

GET lqz/doc/4

结果如下:

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"found" : false

}

上例中,found:false表示查询数据不存在。

四 CURD之Retrieve

我们上面已经不知不觉的使用熟悉这种简单查询方式,通过 GET命令查询指定文档:

GET lqz/doc/1

结果如下:

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_version" : 5,

"found" : true,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤很黄,武器很长,性格很直",

"tags" : [

"很黄",

"很长",

"很直"

]

}

}

Elasticsearch之查询的两种方式

一 前言

简单的没挑战,来点复杂的,elasticsearch提供两种查询方式:

- 查询字符串(query string),简单查询,就像是像传递URL参数一样去传递查询语句,被称为简单搜索或查询字符串(query string)搜索。

- 另外一种是通过DSL语句来进行查询,被称为DSL查询(Query DSL),DSL是Elasticsearch提供的一种丰富且灵活的查询语言,该语言以json请求体的形式出现,通过restful请求与Elasticsearch进行交互。

二 准备数据

PUT lqz/doc/1

{

"name":"顾老二",

"age":30,

"from": "gu",

"desc": "皮肤黑、武器长、性格直",

"tags": ["黑", "长", "直"]

}

PUT lqz/doc/2

{

"name":"大娘子",

"age":18,

"from":"sheng",

"desc":"肤白貌美,娇憨可爱",

"tags":["白", "富","美"]

}

PUT lqz/doc/3

{

"name":"龙套偏房",

"age":22,

"from":"gu",

"desc":"mmp,没怎么看,不知道怎么形容",

"tags":["造数据", "真","难"]

}

PUT lqz/doc/4

{

"name":"石头",

"age":29,

"from":"gu",

"desc":"粗中有细,狐假虎威",

"tags":["粗", "大","猛"]

}

PUT lqz/doc/5

{

"name":"魏行首",

"age":25,

"from":"广云台",

"desc":"仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags":["闭月","羞花"]

}

三 查询字符串

GET lqz/doc/_search?q=from:gu

还是使用GET命令,通过_serarch查询,查询条件是什么呢?条件是from属性是gu家的人都有哪些。最后,别忘了_search和from属性中间的英文分隔符?

结果如下:

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : 0.6931472,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : 0.6931472,

"_source" : {

"name" : "石头",

"age" : 29,

"from" : "gu",

"desc" : "粗中有细,狐假虎威",

"tags" : [

"粗",

"大",

"猛"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : 0.2876821,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : 0.2876821,

"_source" : {

"name" : "龙套偏房",

"age" : 22,

"from" : "gu",

"desc" : "mmp,没怎么看,不知道怎么形容",

"tags" : [

"造数据",

"真",

"难"

]

}

}

]

}

}

我们来重点说下hits,hits是返回的结果集——所有from属性为gu的结果集。重点中的重点是_score得分,得分是什么呢?根据算法算出跟查询条件的匹配度,匹配度高得分就高。后面再说这个算法是怎么回事。

四 结构化查询

我们现在使用DSL方式,来完成刚才的查询,查看来自顾家的都有哪些人。

GET lqz/doc/_search

{

"query": {

"match": {

"from": "gu"

}

}

}

上例,查询条件是一步步构建出来的,将查询条件添加到match中即可,而match则是查询所有from字段的值中含有gu的结果就会返回。

当然结果没啥变化:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : 0.6931472,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : 0.6931472,

"_source" : {

"name" : "石头",

"age" : 29,

"from" : "gu",

"desc" : "粗中有细,狐假虎威",

"tags" : [

"粗",

"大",

"猛"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : 0.2876821,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : 0.2876821,

"_source" : {

"name" : "龙套偏房",

"age" : 22,

"from" : "gu",

"desc" : "mmp,没怎么看,不知道怎么形容",

"tags" : [

"造数据",

"真",

"难"

]

}

}

]

}

}

term与match查询

一 match查询

1.1 准备数据

PUT lqz/doc/1

{

"name":"顾老二",

"age":30,

"from": "gu",

"desc": "皮肤黑、武器长、性格直",

"tags": ["黑", "长", "直"]

}

PUT lqz/doc/2

{

"name":"大娘子",

"age":18,

"from":"sheng",

"desc":"肤白貌美,娇憨可爱",

"tags":["白", "富","美"]

}

PUT lqz/doc/3

{

"name":"龙套偏房",

"age":22,

"from":"gu",

"desc":"mmp,没怎么看,不知道怎么形容",

"tags":["造数据", "真","难"]

}

PUT lqz/doc/4

{

"name":"石头",

"age":29,

"from":"gu",

"desc":"粗中有细,狐假虎威",

"tags":["粗", "大","猛"]

}

PUT lqz/doc/5

{

"name":"魏行首",

"age":25,

"from":"广云台",

"desc":"仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags":["闭月","羞花"]

}

1.2 match系列之match(按条件查询)

我们查看来自顾家的都有哪些人。

GET lqz/doc/_search

{

"query": {

"match": {

"from": "gu"

}

}

}

上例,查询条件是一步步构建出来的,将查询条件添加到match中即可,而match则是查询所有from字段的值中含有gu的结果就会返回。

结果如下:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : 0.6931472,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : 0.6931472,

"_source" : {

"name" : "石头",

"age" : 29,

"from" : "gu",

"desc" : "粗中有细,狐假虎威",

"tags" : [

"粗",

"大",

"猛"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : 0.2876821,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : 0.2876821,

"_source" : {

"name" : "龙套偏房",

"age" : 22,

"from" : "gu",

"desc" : "mmp,没怎么看,不知道怎么形容",

"tags" : [

"造数据",

"真",

"难"

]

}

}

]

}

}

1.3 match系列之match_all(查询全部)

除了按条件查询之外,我们还可以查询lqz索引下的doc类型中的所有文档,那就是查询全部:

GET lqz/doc/_search

{

"query": {

"match_all": {}

}

}

match_all的值为空,表示没有查询条件,那就是查询全部。就像select * from table_name一样。

查询结果如下:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 5,

"max_score" : 1.0,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "5",

"_score" : 1.0,

"_source" : {

"name" : "魏行首",

"age" : 25,

"from" : "广云台",

"desc" : "仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags" : [

"闭月",

"羞花"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "2",

"_score" : 1.0,

"_source" : {

"name" : "大娘子",

"age" : 18,

"from" : "sheng",

"desc" : "肤白貌美,娇憨可爱",

"tags" : [

"白",

"富",

"美"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : 1.0,

"_source" : {

"name" : "石头",

"age" : 29,

"from" : "gu",

"desc" : "粗中有细,狐假虎威",

"tags" : [

"粗",

"大",

"猛"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : 1.0,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : 1.0,

"_source" : {

"name" : "龙套偏房",

"age" : 22,

"from" : "gu",

"desc" : "mmp,没怎么看,不知道怎么形容",

"tags" : [

"造数据",

"真",

"难"

]

}

}

]

}

}

返回的是lqz索引下doc类型的所有文档!

1.4 match系列之match_phrase(短语查询)

我们现在已经对match有了基本的了解,match查询的是散列映射,包含了我们希望搜索的字段和字符串。也就说,只要文档中只要有我们希望的那个关键字,但也因此带来了一些问题。

首先来创建一些示例:

PUT t1/doc/1

{

"title": "中国是世界上人口最多的国家"

}

PUT t1/doc/2

{

"title": "美国是世界上军事实力最强大的国家"

}

PUT t1/doc/3

{

"title": "北京是中国的首都"

}

现在,当我们以中国作为搜索条件,我们希望只返回和中国相关的文档。我们首先来使用match查询:

GET t1/doc/_search

{

"query": {

"match": {

"title": "中国"

}

}

}

结果如下:

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : 0.68324494,

"hits" : [

{

"_index" : "t1",

"_type" : "doc",

"_id" : "1",

"_score" : 0.68324494,

"_source" : {

"title" : "中国是世界上人口最多的国家"

}

},

{

"_index" : "t1",

"_type" : "doc",

"_id" : "3",

"_score" : 0.5753642,

"_source" : {

"title" : "北京是中国的首都"

}

},

{

"_index" : "t1",

"_type" : "doc",

"_id" : "2",

"_score" : 0.39556286,

"_source" : {

"title" : "美国是世界上军事实力最强大的国家"

}

}

]

}

}

虽然如期的返回了中国的文档。但是却把和美国的文档也返回了,这并不是我们想要的。是怎么回事呢?因为这是elasticsearch在内部对文档做分词的时候,对于中文来说,就是一个字一个字分的,所以,我们搜中国,中和国都符合条件,返回,而美国的国也符合。

而我们认为中国是个短语,是一个有具体含义的词。所以elasticsearch在处理中文分词方面比较弱势。后面会讲针对中文的插件。

但目前我们还有办法解决,那就是使用短语查询:

GET t1/doc/_search

{

"query": {

"match_phrase": {

"title": {

"query": "中国"

}

}

}

}

这里match_phrase是在文档中搜索指定的词组,而中国则正是一个词组,所以愉快的返回了。

那么,现在我们要想搜索中国和世界相关的文档,但又忘记其余部分了,怎么做呢?用match也不行,那就继续用match_phrase试试:

GET t1/doc/_search

{

"query": {

"match_phrase": {

"title": "中国世界"

}

}

}

返回结果也是空的,因为没有中国世界这个短语。

我们搜索中国和世界这两个指定词组时,但又不清楚两个词组之间有多少别的词间隔。那么在搜的时候就要留有一些余地。这时就要用到了slop了。相当于正则中的中国.*?世界。这个间隔默认为0,导致我们刚才没有搜到,现在我们指定一个间隔。

GET t1/doc/_search

{

"query": {

"match_phrase": {

"title": {

"query": "中国世界",

"slop": 2

}

}

}

}

现在,两个词组之间有了2个词的间隔,这个时候,就可以查询到结果了:

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 0.7445889,

"hits" : [

{

"_index" : "t1",

"_type" : "doc",

"_id" : "1",

"_score" : 0.7445889,

"_source" : {

"title" : "中国是世界上人口最多的国家"

}

}

]

}

}

slop间隔你可以根据需要适当改动。

短语查询, 比如要查询:python系统

会把查询条件python和系统分词,放到列表中,再去搜索的时候,必须满足python和系统同时存在的才能搜出来

“slop”:6 :python和系统这两个词之间最小的距离

1.4 match系列之match_phrase_prefix(最左前缀查询)

现在凌晨2点半,单身狗小黑为了缓解寂寞,就准备搜索几个beautiful girl来陪伴自己。但是由于英语没过2级,但单词beautiful拼到bea就不知道往下怎么拼了。这个时候,我们的智能搜索要帮他啊,elasticsearch就看自己的词库有啥事bea开头的词,结果还真发现了两个:

PUT t3/doc/1

{

"title": "maggie",

"desc": "beautiful girl you are beautiful so"

}

PUT t3/doc/2

{

"title": "sun and beach",

"desc": "I like basking on the beach"

}

但这里用match和match_phrase都不太合适,因为小黑输入的不是完整的词。那怎么办呢?我们用match_phrase_prefix来搞:

GET t3/doc/_search

{

"query": {

"match_phrase_prefix": {

"desc": "bea"

}

}

}

结果如下:

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 2,

"max_score" : 0.39556286,

"hits" : [

{

"_index" : "t3",

"_type" : "doc",

"_id" : "1",

"_score" : 0.39556286,

"_source" : {

"title" : "maggie",

"desc" : "beautiful girl,you are beautiful so"

}

},

{

"_index" : "t3",

"_type" : "doc",

"_id" : "2",

"_score" : 0.2876821,

"_source" : {

"title" : "sun and beach",

"desc" : "I like basking on the beach"

}

}

]

}

}

前缀查询是短语查询类似,但前缀查询可以更进一步的搜索词组,只不过它是和词组中最后一个词条进行前缀匹配(如搜这样的you are bea)。应用也非常的广泛,比如搜索框的提示信息,当使用这种行为进行搜索时,最好通过max_expansions来设置最大的前缀扩展数量,因为产生的结果会是一个很大的集合,不加限制的话,影响查询性能。

GET t3/doc/_search

{

"query": {

"match_phrase_prefix": {

"desc": {

"query": "bea",

"max_expansions": 1

}

}

}

}

但是,如果此时你去尝试加上max_expansions测试后,你会发现并没有如你想想的一样,仅返回一条数据,而是返回了多条数据。

max_expansions执行的是搜索的编辑(Levenshtein)距离。那什么是编辑距离呢?编辑距离是一种计算两个字符串间的差异程度的字符串度量(string metric)。我们可以认为编辑距离就是从一个字符串修改到另一个字符串时,其中编辑单个字符(比如修改、插入、删除)所需要的最少次数。俄罗斯科学家Vladimir Levenshtein于1965年提出了这一概念。

我们再引用elasticsearch官网的一段话:该max_expansions设置定义了在停止搜索之前模糊查询将匹配的最大术语数,也可以对模糊查询的性能产生显着影响。但是,减少查询字词会产生负面影响,因为查询提前终止可能无法找到某些有效结果。重要的是要理解max_expansions查询限制在分片级别工作,这意味着即使设置为1,多个术语可能匹配,所有术语都来自不同的分片。此行为可能使其看起来好像max_expansions没有生效,因此请注意,计算返回的唯一术语不是确定是否有效的有效方法max_expansions。。

我想你也没看懂这句话是啥意思,但我们只需知道该参数工作于分片层,也就是Lucene部分,超出我们的研究范围了。

我们快刀斩乱麻的记住,使用前缀查询会非常的影响性能,要对结果集进行限制,就加上这个参数。

1.5 match系列之multi_match(多字段查询)

现在,我们有一个50个字段的索引,我们要在多个字段中查询同一个关键字,该怎么做呢?

PUT t3/doc/1

{

"title": "maggie is beautiful girl",

"desc": "beautiful girl you are beautiful so"

}

PUT t3/doc/2

{

"title": "beautiful beach",

"desc": "I like basking on the beach,and you? beautiful girl"

}

我们先用原来的方法查询:

GET t3/doc/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"title": "beautiful"

}

},

{

"match": {

"desc": "beautiful"

}

}

]

}

}

}

使用must来限制两个字段(值)中必须同时含有关键字。这样虽然能达到目的,但是当有很多的字段呢,我们可以用multi_match来做:

GET t3/doc/_search

{

"query": {

"multi_match": {

"query": "beautiful",

"fields": ["title", "desc"]

}

}

}

我们将多个字段放到fields列表中即可。以达到匹配多个字段的目的。

除此之外,multi_match甚至可以当做match_phrase和match_phrase_prefix使用,只需要指定type类型即可:

GET t3/doc/_search

{

"query": {

"multi_match": {

"query": "gi",

"fields": ["title"],

"type": "phrase_prefix"

}

}

}

GET t3/doc/_search

{

"query": {

"multi_match": {

"query": "girl",

"fields": ["title"],

"type": "phrase"

}

}

}

小结:

- match:返回所有匹配的分词。

- match_all:查询全部。

- match_phrase:短语查询,在match的基础上进一步查询词组,可以指定

slop分词间隔。 - match_phrase_prefix:前缀查询,根据短语中最后一个词组做前缀匹配,可以应用于搜索提示,但注意和

max_expanions搭配。其实默认是50… - multi_match:多字段查询,使用相当的灵活,可以完成

match_phrase和match_phrase_prefix的工作。

二 term查询

默认情况下,elasticsearch在对文档分析期间(将文档分词后保存到倒排索引中),会对文档进行分词,比如默认的标准分析器会对文档进行:

- 删除大多数的标点符号。

- 将文档分解为单个词条,我们称为token。

- 将token转为小写。

完事再保存到倒排索引上,当然,原文件还是要保存一分的,而倒排索引使用来查询的。

例如Beautiful girl!,在经过分析后是这样的了:

POST _analyze

{

"analyzer": "standard",

"text": "Beautiful girl!"

}

# 结果

["beautiful", "girl"]

而当在使用match查询时,elasticsearch同样会对查询关键字进行分析:

PUT w10

{

"mappings": {

"doc":{

"properties":{

"t1":{

"type": "text"

}

}

}

}

}

PUT w10/doc/1

{

"t1": "Beautiful girl!"

}

PUT w10/doc/2

{

"t1": "sexy girl!"

}

GET w10/doc/_search

{

"query": {

"match": {

"t1": "Beautiful girl!"

}

}

}

也就是对查询关键字Beautiful girl!进行分析,得到["beautiful", "girl"],然后分别将这两个单独的token去索引w10中进行查询,结果就是将两篇文档都返回。

这在有些情况下是非常好用的,但是,如果我们想查询确切的词怎么办?也就是精确查询,将Beautiful girl!当成一个token而不是分词后的两个token。

这就要用到了term查询了,term查询的是没有经过分析的查询关键字。

但是,这同样需要限制,如果你要查询的字段类型(如上例中的字段t1类型是text)是text(因为elasticsearch会对文档进行分析,上面说过),那么你得到的可能是不尽如人意的结果或者压根没有结果:

GET w10/doc/_search

{

"query": {

"term": {

"t1": "Beautiful girl!"

}

}

}

如上面的查询,将不会有结果返回,因为索引w10中的两篇文档在经过elasticsearch分析后没有一个分词是Beautiful girl!,那此次查询结果为空也就好理解了。

所以,我们这里得到一个论证结果:不要使用term对类型是text的字段进行查询,要查询text类型的字段,请改用match查询。

学会了吗?那再来一个示例,你说一下结果是什么:

GET w10/doc/_search

{

"query": {

"term": {

"t1": "Beautiful"

}

}

}

答案是,没有结果返回!因为elasticsearch在对文档进行分析时,会经过小写!人家倒排索引上存的是小写的beautiful,而我们查询的是大写的Beautiful。

所以,要想有结果你这样:

GET w10/doc/_search

{

"query": {

"term": {

"t1": "beautiful"

}

}

}

那,term查询可以查询哪些类型的字段呢,例如elasticsearch会将keyword类型的字段当成一个token保存到倒排索引上,你可以将term和keyword结合使用。

最后,要想使用term查询多个精确的值怎么办?我只能说:亲,这里推荐卸载es呢!低调又不失尴尬的玩笑!

这里推荐使用terms查询:

GET w10/doc/_search

{

"query": {

"terms": {

"t1": ["beautiful", "sexy"]

}

}

}

4 Elasticsearch之排序查询

一 准备数据

PUT lqz/doc/1

{

"name":"顾老二",

"age":30,

"from": "gu",

"desc": "皮肤黑、武器长、性格直",

"tags": ["黑", "长", "直"]

}

PUT lqz/doc/2

{

"name":"大娘子",

"age":18,

"from":"sheng",

"desc":"肤白貌美,娇憨可爱",

"tags":["白", "富","美"]

}

PUT lqz/doc/3

{

"name":"龙套偏房",

"age":22,

"from":"gu",

"desc":"mmp,没怎么看,不知道怎么形容",

"tags":["造数据", "真","难"]

}

PUT lqz/doc/4

{

"name":"石头",

"age":29,

"from":"gu",

"desc":"粗中有细,狐假虎威",

"tags":["粗", "大","猛"]

}

PUT lqz/doc/5

{

"name":"魏行首",

"age":25,

"from":"广云台",

"desc":"仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags":["闭月","羞花"]

}

二 排序查询:sort

2.1 降序:desc

想到排序,出现在脑海中的无非就是升(正)序和降(倒)序。比如我们查询顾府都有哪些人,并根据age字段按照降序,并且,我只想看nmae和age字段:

GET lqz/doc/_search

{

"query": {

"match": {

"from": "gu"

}

},

"sort": [

{

"age": {

"order": "desc"

}

}

]

}

上例,在条件查询的基础上,我们又通过sort来做排序,根据age字段排序,是降序呢还是升序,由order字段控制,desc是降序。

结果如下:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : null,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : null,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

},

"sort" : [

30

]

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : null,

"_source" : {

"name" : "石头",

"age" : 29,

"from" : "gu",

"desc" : "粗中有细,狐假虎威",

"tags" : [

"粗",

"大",

"猛"

]

},

"sort" : [

29

]

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : null,

"_source" : {

"name" : "龙套偏房",

"age" : 22,

"from" : "gu",

"desc" : "mmp,没怎么看,不知道怎么形容",

"tags" : [

"造数据",

"真",

"难"

]

},

"sort" : [

22

]

}

]

}

}

上例中,结果是以降序排列方式返回的。

2.2 升序:asc

那么想要升序怎么搞呢?

GET lqz/doc/_search

{

"query": {

"match_all": {}

},

"sort": [

{

"age": {

"order": "asc"

}

}

]

}

上例,想要以升序的方式排列,只需要将order值换为asc就可以了。

结果如下:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 5,

"max_score" : null,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "2",

"_score" : null,

"_source" : {

"name" : "大娘子",

"age" : 18,

"from" : "sheng",

"desc" : "肤白貌美,娇憨可爱",

"tags" : [

"白",

"富",

"美"

]

},

"sort" : [

18

]

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : null,

"_source" : {

"name" : "龙套偏房",

"age" : 22,

"from" : "gu",

"desc" : "mmp,没怎么看,不知道怎么形容",

"tags" : [

"造数据",

"真",

"难"

]

},

"sort" : [

22

]

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "5",

"_score" : null,

"_source" : {

"name" : "魏行首",

"age" : 25,

"from" : "广云台",

"desc" : "仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags" : [

"闭月",

"羞花"

]

},

"sort" : [

25

]

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : null,

"_source" : {

"name" : "石头",

"age" : 29,

"from" : "gu",

"desc" : "粗中有细,狐假虎威",

"tags" : [

"粗",

"大",

"猛"

]

},

"sort" : [

29

]

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : null,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

},

"sort" : [

30

]

}

]

}

}

上例,可以看到结果是以age从小到大的顺序返回结果。

三 不是什么数据类型都能排序

那么,你可能会问,除了age,能不能以别的属性作为排序条件啊?来试试:

GET lqz/chengyuan/_search

{

"query": {

"match_all": {}

},

"sort": [

{

"name": {

"order": "asc"

}

}

]

}

上例,我们以name属性来排序,来看结果:

{

"error": {

"root_cause": [

{

"type": "illegal_argument_exception",

"reason": "Fielddata is disabled on text fields by default. Set fielddata=true on [name] in order to load fielddata in memory by uninverting the inverted index. Note that this can however use significant memory. Alternatively use a keyword field instead."

}

],

"type": "search_phase_execution_exception",

"reason": "all shards failed",

"phase": "query",

"grouped": true,

"failed_shards": [

{

"shard": 0,

"index": "lqz",

"node": "wrtr435jSgi7_naKq2Y_zQ",

"reason": {

"type": "illegal_argument_exception",

"reason": "Fielddata is disabled on text fields by default. Set fielddata=true on [name] in order to load fielddata in memory by uninverting the inverted index. Note that this can however use significant memory. Alternatively use a keyword field instead."

}

}

],

"caused_by": {

"type": "illegal_argument_exception",

"reason": "Fielddata is disabled on text fields by default. Set fielddata=true on [name] in order to load fielddata in memory by uninverting the inverted index. Note that this can however use significant memory. Alternatively use a keyword field instead.",

"caused_by": {

"type": "illegal_argument_exception",

"reason": "Fielddata is disabled on text fields by default. Set fielddata=true on [name] in order to load fielddata in memory by uninverting the inverted index. Note that this can however use significant memory. Alternatively use a keyword field instead."

}

}

},

"status": 400

}

结果跟我们想象的不一样,报错了!

注意:在排序的过程中,只能使用可排序的属性进行排序。那么可以排序的属性有哪些呢?

- 数字

- 日期

其他的都不行!

4 Elasticsearch之排序查询

一 准备数据

PUT lqz/doc/1

{

"name":"顾老二",

"age":30,

"from": "gu",

"desc": "皮肤黑、武器长、性格直",

"tags": ["黑", "长", "直"]

}

PUT lqz/doc/2

{

"name":"大娘子",

"age":18,

"from":"sheng",

"desc":"肤白貌美,娇憨可爱",

"tags":["白", "富","美"]

}

PUT lqz/doc/3

{

"name":"龙套偏房",

"age":22,

"from":"gu",

"desc":"mmp,没怎么看,不知道怎么形容",

"tags":["造数据", "真","难"]

}

PUT lqz/doc/4

{

"name":"石头",

"age":29,

"from":"gu",

"desc":"粗中有细,狐假虎威",

"tags":["粗", "大","猛"]

}

PUT lqz/doc/5

{

"name":"魏行首",

"age":25,

"from":"广云台",

"desc":"仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags":["闭月","羞花"]

}

二 排序查询:sort

2.1 降序:desc

想到排序,出现在脑海中的无非就是升(正)序和降(倒)序。比如我们查询顾府都有哪些人,并根据age字段按照降序,并且,我只想看nmae和age字段:

GET lqz/doc/_search

{

"query": {

"match": {

"from": "gu"

}

},

"sort": [

{

"age": {

"order": "desc"

}

}

]

}

上例,在条件查询的基础上,我们又通过sort来做排序,根据age字段排序,是降序呢还是升序,由order字段控制,desc是降序。

结果如下:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : null,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : null,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

},

"sort" : [

30

]

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : null,

"_source" : {

"name" : "石头",

"age" : 29,

"from" : "gu",

"desc" : "粗中有细,狐假虎威",

"tags" : [

"粗",

"大",

"猛"

]

},

"sort" : [

29

]

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : null,

"_source" : {

"name" : "龙套偏房",

"age" : 22,

"from" : "gu",

"desc" : "mmp,没怎么看,不知道怎么形容",

"tags" : [

"造数据",

"真",

"难"

]

},

"sort" : [

22

]

}

]

}

}

上例中,结果是以降序排列方式返回的。

2.2 升序:asc

那么想要升序怎么搞呢?

GET lqz/doc/_search

{

"query": {

"match_all": {}

},

"sort": [

{

"age": {

"order": "asc"

}

}

]

}

上例,想要以升序的方式排列,只需要将order值换为asc就可以了。

结果如下:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 5,

"max_score" : null,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "2",

"_score" : null,

"_source" : {

"name" : "大娘子",

"age" : 18,

"from" : "sheng",

"desc" : "肤白貌美,娇憨可爱",

"tags" : [

"白",

"富",

"美"

]

},

"sort" : [

18

]

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : null,

"_source" : {

"name" : "龙套偏房",

"age" : 22,

"from" : "gu",

"desc" : "mmp,没怎么看,不知道怎么形容",

"tags" : [

"造数据",

"真",

"难"

]

},

"sort" : [

22

]

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "5",

"_score" : null,

"_source" : {

"name" : "魏行首",

"age" : 25,

"from" : "广云台",

"desc" : "仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags" : [

"闭月",

"羞花"

]

},

"sort" : [

25

]

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : null,

"_source" : {

"name" : "石头",

"age" : 29,

"from" : "gu",

"desc" : "粗中有细,狐假虎威",

"tags" : [

"粗",

"大",

"猛"

]

},

"sort" : [

29

]

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : null,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

},

"sort" : [

30

]

}

]

}

}

上例,可以看到结果是以age从小到大的顺序返回结果。

三 不是什么数据类型都能排序

那么,你可能会问,除了age,能不能以别的属性作为排序条件啊?来试试:

GET lqz/chengyuan/_search

{

"query": {

"match_all": {}

},

"sort": [

{

"name": {

"order": "asc"

}

}

]

}

上例,我们以name属性来排序,来看结果:

{

"error": {

"root_cause": [

{

"type": "illegal_argument_exception",

"reason": "Fielddata is disabled on text fields by default. Set fielddata=true on [name] in order to load fielddata in memory by uninverting the inverted index. Note that this can however use significant memory. Alternatively use a keyword field instead."

}

],

"type": "search_phase_execution_exception",

"reason": "all shards failed",

"phase": "query",

"grouped": true,

"failed_shards": [

{

"shard": 0,

"index": "lqz",

"node": "wrtr435jSgi7_naKq2Y_zQ",

"reason": {

"type": "illegal_argument_exception",

"reason": "Fielddata is disabled on text fields by default. Set fielddata=true on [name] in order to load fielddata in memory by uninverting the inverted index. Note that this can however use significant memory. Alternatively use a keyword field instead."

}

}

],

"caused_by": {

"type": "illegal_argument_exception",

"reason": "Fielddata is disabled on text fields by default. Set fielddata=true on [name] in order to load fielddata in memory by uninverting the inverted index. Note that this can however use significant memory. Alternatively use a keyword field instead.",

"caused_by": {

"type": "illegal_argument_exception",

"reason": "Fielddata is disabled on text fields by default. Set fielddata=true on [name] in order to load fielddata in memory by uninverting the inverted index. Note that this can however use significant memory. Alternatively use a keyword field instead."

}

}

},

"status": 400

}

结果跟我们想象的不一样,报错了!

注意:在排序的过程中,只能使用可排序的属性进行排序。那么可以排序的属性有哪些呢?

- 数字

- 日期

其他的都不行!

5-Elasticsearch之分页查询

一 准备数据

PUT lqz/doc/1

{

"name":"顾老二",

"age":30,

"from": "gu",

"desc": "皮肤黑、武器长、性格直",

"tags": ["黑", "长", "直"]

}

PUT lqz/doc/2

{

"name":"大娘子",

"age":18,

"from":"sheng",

"desc":"肤白貌美,娇憨可爱",

"tags":["白", "富","美"]

}

PUT lqz/doc/3

{

"name":"龙套偏房",

"age":22,

"from":"gu",

"desc":"mmp,没怎么看,不知道怎么形容",

"tags":["造数据", "真","难"]

}

PUT lqz/doc/4

{

"name":"石头",

"age":29,

"from":"gu",

"desc":"粗中有细,狐假虎威",

"tags":["粗", "大","猛"]

}

PUT lqz/doc/5

{

"name":"魏行首",

"age":25,

"from":"广云台",

"desc":"仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags":["闭月","羞花"]

}

二 分页查询:from/size

我们来看看elasticsearch是怎么将结果分页的:

GET lqz/doc/_search

{

"query": {

"match_all": {}

},

"sort": [

{

"age": {

"order": "desc"

}

}

],

"from": 2,

"size": 1

}

上例,首先以age降序排序,查询所有。并且在查询的时候,添加两个属性from和size来控制查询结果集的数据条数。

- from:从哪开始查

- size:返回几条结果

如上例的结果:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 5,

"max_score" : null,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "5",

"_score" : null,

"_source" : {

"name" : "魏行首",

"age" : 25,

"from" : "广云台",

"desc" : "仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags" : [

"闭月",

"羞花"

]

},

"sort" : [

25

]

}

]

}

}

上例中,在返回的结果集中,从第2条开始,返回1条数据。

那如果想要从第2条开始,返回2条结果怎么做呢?

GET lqz/doc/_search

{

"query": {

"match_all": {}

},

"sort": [

{

"age": {

"order": "desc"

}

}

],

"from": 2,

"size": 2

}

上例中,我们指定from为2,意为从第2条开始返回,返回多少呢?size意为2条。

还可以这样:

GET lqz/doc/_search

{

"query": {

"match_all": {}

},

"sort": [

{

"age": {

"order": "desc"

}

}

],

"from": 4,

"size": 2

}

上例中,从第4条开始返回2条数据。

结果如下:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 5,

"max_score" : null,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "2",

"_score" : null,

"_source" : {

"name" : "大娘子",

"age" : 18,

"from" : "sheng",

"desc" : "肤白貌美,娇憨可爱",

"tags" : [

"白",

"富",

"美"

]

},

"sort" : [

18

]

}

]

}

}

上例中仅有一条数据,那是为啥呢?因为我们现在只有5条数据,从第4条开始查询,就只有1条符合条件,所以,就返回了1条数据。

学到这里,我们也可以看到,我们的查询条件越来越多,开始仅是简单查询,慢慢增加条件查询,增加排序,对返回结果进行限制。所以,我们可以说:对于elasticsearch来说,所有的条件都是可插拔的,彼此之间用,分割。比如说,我们在查询中,仅对返回结果进行限制:

GET lqz/doc/_search

{

"query": {

"match_all": {}

},

"from": 4,

"size": 2

}

上例中,在所有的返回结果中,结果从4开始返回2条数据。

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 5,

"max_score" : 1.0,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : 1.0,

"_source" : {

"name" : "龙套偏房",

"age" : 22,

"from" : "gu",

"desc" : "mmp,没怎么看,不知道怎么形容",

"tags" : [

"造数据",

"真",

"难"

]

}

}

]

}

}

但我们只有1条符合条件的数据。

6-Elasticsearch之布尔查询

一 前言

布尔查询是最常用的组合查询,根据子查询的规则,只有当文档满足所有子查询条件时,elasticsearch引擎才将结果返回。布尔查询支持的子查询条件共4中:

- must(and)

- should(or)

- must_not(not)

- filter

下面我们来看看每个子查询条件都是怎么玩的。

二 准备数据

PUT lqz/doc/1

{

"name":"顾老二",

"age":30,

"from": "gu",

"desc": "皮肤黑、武器长、性格直",

"tags": ["黑", "长", "直"]

}

PUT lqz/doc/2

{

"name":"大娘子",

"age":18,

"from":"sheng",

"desc":"肤白貌美,娇憨可爱",

"tags":["白", "富","美"]

}

PUT lqz/doc/3

{

"name":"龙套偏房",

"age":22,

"from":"gu",

"desc":"mmp,没怎么看,不知道怎么形容",

"tags":["造数据", "真","难"]

}

PUT lqz/doc/4

{

"name":"石头",

"age":29,

"from":"gu",

"desc":"粗中有细,狐假虎威",

"tags":["粗", "大","猛"]

}

PUT lqz/doc/5

{

"name":"魏行首",

"age":25,

"from":"广云台",

"desc":"仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags":["闭月","羞花"]

}

三 must

现在,我们用布尔查询所有from属性为gu的数据:

GET lqz/doc/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"from": "gu"

}

}

]

}

}

}

上例中,我们通过在bool属性(字段)内使用must来作为查询条件,那么条件是什么呢?条件同样被match包围,就是from为gu的所有数据。

这里需要注意的是must字段对应的是个列表,也就是说可以有多个并列的查询条件,一个文档满足各个子条件后才最终返回。

结果如下:

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : 0.6931472,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : 0.6931472,

"_source" : {

"name" : "石头",

"age" : 29,

"from" : "gu",

"desc" : "粗中有细,狐假虎威",

"tags" : [

"粗",

"大",

"猛"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : 0.2876821,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : 0.2876821,

"_source" : {

"name" : "龙套偏房",

"age" : 22,

"from" : "gu",

"desc" : "mmp,没怎么看,不知道怎么形容",

"tags" : [

"造数据",

"真",

"难"

]

}

}

]

}

}

上例中,可以看到,所有from属性为gu的数据查询出来了。

那么,我们想要查询from为gu,并且age为30的数据怎么搞呢?

GET lqz/doc/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"from": "gu"

}

},

{

"match": {

"age": 30

}

}

]

}

}

}

上例中,在must列表中,在增加一个age为30的条件。

结果如下:

{

"took" : 8,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 1.287682,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : 1.287682,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

}

}

]

}

}

上例,符合条件的数据被成功查询出来了。

注意:现在你可能慢慢发现一个现象,所有属性值为列表的,都可以实现多个条件并列存在

四 should

那么,如果要查询只要是from为gu或者tags为闭月的数据怎么搞?

GET lqz/doc/_search

{

"query": {

"bool": {

"should": [

{

"match": {

"from": "gu"

}

},

{

"match": {

"tags": "闭月"

}

}

]

}

}

}

上例中,或关系的不能用must的了,而是要用should,只要符合其中一个条件就返回。

结果如下:

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 4,

"max_score" : 0.6931472,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : 0.6931472,

"_source" : {

"name" : "石头",

"age" : 29,

"from" : "gu",

"desc" : "粗中有细,狐假虎威",

"tags" : [

"粗",

"大",

"猛"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "5",

"_score" : 0.5753642,

"_source" : {

"name" : "魏行首",

"age" : 25,

"from" : "广云台",

"desc" : "仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags" : [

"闭月",

"羞花"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : 0.2876821,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : 0.2876821,

"_source" : {

"name" : "龙套偏房",

"age" : 22,

"from" : "gu",

"desc" : "mmp,没怎么看,不知道怎么形容",

"tags" : [

"造数据",

"真",

"难"

]

}

}

]

}

}

返回了所有符合条件的结果。

五 must_not

那么,如果我想要查询from既不是gu并且tags也不是可爱,还有age不是18的数据怎么办?

GET lqz/doc/_search

{

"query": {

"bool": {

"must_not": [

{

"match": {

"from": "gu"

}

},

{

"match": {

"tags": "可爱"

}

},

{

"match": {

"age": 18

}

}

]

}

}

}

上例中,must和should都不能使用,而是使用must_not,又在内增加了一个age为18的条件。

结果如下:

{

"took" : 9,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 1.0,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "5",

"_score" : 1.0,

"_source" : {

"name" : "魏行首",

"age" : 25,

"from" : "广云台",

"desc" : "仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags" : [

"闭月",

"羞花"

]

}

}

]

}

}

上例中,只有魏行首这一条数据,因为只有魏行首既不是顾家的人,标签没有可爱那一项,年龄也不等于18!

这里有点需要补充,条件中age对应的18你写成整形还是字符串都没啥……

6 filter

那么,如果要查询from为gu,age大于25的数据怎么查?

GET lqz/doc/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"from": "gu"

}

}

],

"filter": {

"range": {

"age": {

"gt": 25

}

}

}

}

}

}

这里就用到了filter条件过滤查询,过滤条件的范围用range表示,gt表示大于,大于多少呢?是25。

结果如下:

{

"took" : 2,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 2,

"max_score" : 0.6931472,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : 0.6931472,

"_source" : {

"name" : "石头",

"age" : 29,

"from" : "gu",

"desc" : "粗中有细,狐假虎威",

"tags" : [

"粗",

"大",

"猛"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : 0.2876821,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

}

}

]

}

}

上例中,age大于25的条件都已经筛选出来了。

那么要查询from是gu,age大于等于30的数据呢?

GET lqz/doc/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"from": "gu"

}

}

],

"filter": {

"range": {

"age": {

"gte": 30

}

}

}

}

}

}

上例中,大于等于用gte表示。

结果如下:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 0.2876821,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : 0.2876821,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

}

}

]

}

}

那么,要查询age小于25的呢?

GET lqz/doc/_search

{

"query": {

"bool": {

"filter": {

"range": {

"age": {

"lt": 25

}

}

}

}

}

}

上例中,小于用lt表示,结果如下:

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 2,

"max_score" : 0.0,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "2",

"_score" : 0.0,

"_source" : {

"name" : "大娘子",

"age" : 18,

"from" : "sheng",

"desc" : "肤白貌美,娇憨可爱",

"tags" : [

"白",

"富",

"美"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : 0.0,

"_source" : {

"name" : "龙套偏房",

"age" : 22,

"from" : "gu",

"desc" : "mmp,没怎么看,不知道怎么形容",

"tags" : [

"造数据",

"真",

"难"

]

}

}

]

}

}

在查询一个age小于等于18的怎么办呢?

GET lqz/doc/_search

{

"query": {

"bool": {

"filter": {

"range": {

"age": {

"lte": 18

}

}

}

}

}

}

上例中,小于等于用lte表示。结果如下:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 0.0,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "2",

"_score" : 0.0,

"_source" : {

"name" : "大娘子",

"age" : 18,

"from" : "sheng",

"desc" : "肤白貌美,娇憨可爱",

"tags" : [

"白",

"富",

"美"

]

}

}

]

}

}

要查询from是gu,age在25~30之间的怎么查?

GET lqz/doc/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"from": "gu"

}

}

],

"filter": {

"range": {

"age": {

"gte": 25,

"lte": 30

}

}

}

}

}

}

上例中,使用lte和gte来限定范围。结果如下:

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 2,

"max_score" : 0.6931472,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : 0.6931472,

"_source" : {

"name" : "石头",

"age" : 29,

"from" : "gu",

"desc" : "粗中有细,狐假虎威",

"tags" : [

"粗",

"大",

"猛"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : 0.2876821,

"_source" : {

"name" : "顾老二",

"age" : 30,

"from" : "gu",

"desc" : "皮肤黑、武器长、性格直",

"tags" : [

"黑",

"长",

"直"

]

}

}

]

}

}

那么,要查询from是sheng,age小于等于25的怎么查呢?其实结果,我们可能已经想到了,只有一条,因为只有盛家小六符合结果。

GET lqz/doc/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"from": "sheng"

}

}

],

"filter": {

"range": {

"age": {

"lte": 25

}

}

}

}

}

}

结果果然不出洒家所料!

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 0.6931472,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "2",

"_score" : 0.6931472,

"_source" : {

"name" : "大娘子",

"age" : 18,

"from" : "sheng",

"desc" : "肤白貌美,娇憨可爱",

"tags" : [

"白",

"富",

"美"

]

}

}

]

}

}

但是,洒家手一抖,将must换为should看看会发生什么?

GET lqz/doc/_search

{

"query": {

"bool": {

"should": [

{

"match": {

"from": "sheng"

}

}

],

"filter": {

"range": {

"age": {

"lte": 25

}

}

}

}

}

}

结果如下:

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : 0.6931472,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "2",

"_score" : 0.6931472,

"_source" : {

"name" : "大娘子",

"age" : 18,

"from" : "sheng",

"desc" : "肤白貌美,娇憨可爱",

"tags" : [

"白",

"富",

"美"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "5",

"_score" : 0.0,

"_source" : {

"name" : "魏行首",

"age" : 25,

"from" : "广云台",

"desc" : "仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags" : [

"闭月",

"羞花"

]

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : 0.0,

"_source" : {

"name" : "龙套偏房",

"age" : 22,

"from" : "gu",

"desc" : "mmp,没怎么看,不知道怎么形容",

"tags" : [

"造数据",

"真",

"难"

]

}

}

]

}

}

结果有点出乎意料,因为龙套偏房和魏行首不属于盛家,但也被查询出来了。那你要问了,怎么肥四?小老弟!这是因为在查询过程中,优先经过filter过滤,因为should是或关系,龙套偏房和魏行首的年龄符合了filter过滤条件,也就被放行了!所以,如果在filter过滤条件中使用should的话,结果可能不会尽如人意!建议使用must代替。

注意:filter工作于bool查询内。比如我们将刚才的查询条件改一下,把filter从bool中挪出来。

GET lqz/doc/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"from": "sheng"

}

}

]

},

"filter": {

"range": {

"age": {

"lte": 25

}

}

}

}

}

如上例所示,我们将filter与bool平级,看查询结果:

{

"error": {

"root_cause": [

{

"type": "parsing_exception",

"reason": "[bool] malformed query, expected [END_OBJECT] but found [FIELD_NAME]",

"line": 12,

"col": 5

}

],

"type": "parsing_exception",

"reason": "[bool] malformed query, expected [END_OBJECT] but found [FIELD_NAME]",

"line": 12,

"col": 5

},

"status": 400

}

结果报错了!所以,filter工作位置很重要。

小结:

must:与关系,相当于关系型数据库中的and。should:或关系,相当于关系型数据库中的or。must_not:非关系,相当于关系型数据库中的not。filter:过滤条件。range:条件筛选范围。gt:大于,相当于关系型数据库中的>。gte:大于等于,相当于关系型数据库中的>=。lt:小于,相当于关系型数据库中的<。lte:小于等于,相当于关系型数据库中的<=。

7-Elasticsearch之查询结果过滤

一 前言

在未来,一篇文档可能有很多的字段,每次查询都默认给我们返回全部,在数据量很大的时候,是的,比如我只想查姑娘的手机号,你一并给我个喜好啊、三围什么的算什么?

所以,我们对结果做一些过滤,清清白白的告诉elasticsearch

二 准备数据

PUT lqz/doc/1

{

"name":"顾老二",

"age":30,

"from": "gu",

"desc": "皮肤黑、武器长、性格直",

"tags": ["黑", "长", "直"]

}

三 结果过滤:_source

现在,在所有的结果中,我只需要查看name和age两个属性,其他的不要怎么办?

GET lqz/doc/_search

{

"query": {

"match": {

"name": "顾老二"

}

},

"_source": ["name", "age"]

}

如上例所示,在查询中,通过_source来控制仅返回name和age属性。

{

"took" : 8,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 0.8630463,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : 0.8630463,

"_source" : {

"name" : "顾老二",

"age" : 30

}

}

]

}

}

在数据量很大的时候,我们需要什么字段,就返回什么字段就好了,提高查询效率

7-Elasticsearch之高亮查询

一 前言

如果返回的结果集中很多符合条件的结果,那怎么能一眼就能看到我们想要的那个结果呢?比如下面网站所示的那样,我们搜索elasticsearch,在结果集中,将所有elasticsearch高亮显示?

我们该怎么做呢?

二 准备数据

PUT lqz/doc/4

{

"name":"石头",

"age":29,

"from":"gu",

"desc":"粗中有细,狐假虎威",

"tags":["粗", "大","猛"]

}

三 默认高亮显示

我们来查询:

GET lqz/doc/_search

{

"query": {

"match": {

"name": "石头"

}

},

"highlight": {

"fields": {

"name": {}

}

}

}

上例中,我们使用highlight属性来实现结果高亮显示,需要的字段名称添加到fields内即可,elasticsearch会自动帮我们实现高亮。

结果如下:

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 1.5098256,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : 1.5098256,

"_source" : {

"name" : "石头",

"age" : 29,

"from" : "gu",

"desc" : "粗中有细,狐假虎威",

"tags" : [

"粗",

"大",

"猛"

]

},

"highlight" : {

"name" : [

"石头"

]

}

}

]

}

}

上例中,elasticsearch会自动将检索结果用标签包裹起来,用于在页面中渲染。

四 自定义高亮显示

但是,你可能会问,我不想用em标签, 我这么牛逼,应该用个b标签啊!好的,elasticsearch同样考虑到你很牛逼,所以,我们可以自定义标签。

GET lqz/chengyuan/_search

{

"query": {

"match": {

"from": "gu"

}

},

"highlight": {

"pre_tags": "",

"post_tags": "",

"fields": {

"from": {}

}

}

}

上例中,在highlight中,pre_tags用来实现我们的自定义标签的前半部分,在这里,我们也可以为自定义的标签添加属性和样式。post_tags实现标签的后半部分,组成一个完整的标签。至于标签中的内容,则还是交给fields来完成。

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 0.5753642,

"hits" : [

{

"_index" : "lqz",

"_type" : "chengyuan",

"_id" : "1",

"_score" : 0.5753642,

"_source" : {

"name" : "老二",

"age" : 30,

"sex" : "male",

"birth" : "1070-10-11",

"from" : "gu",

"desc" : "皮肤黑,武器长,性格直",

"tags" : [

"黑",

"长",

"直"

]

},

"highlight" : {

"name" : [

"老二"

]

}

}

]

}

}

需要注意的是:自定义标签中属性或样式中的逗号一律用英文状态的单引号表示,应该与外部elasticsearch语法的双引号区分开。

8-Elasticsearch之聚合函数

一 前言

聚合函数大家都不陌生,elasticsearch中也没玩出新花样,所以,这一章相对简单,只需要记得:

- avg

- max

- min

- sum

以及各自的用法即可。先来看求平均。

二 准备数据

PUT lqz/doc/1

{

"name":"顾老二",

"age":30,

"from": "gu",

"desc": "皮肤黑、武器长、性格直",

"tags": ["黑", "长", "直"]

}

PUT lqz/doc/2

{

"name":"大娘子",

"age":18,

"from":"sheng",

"desc":"肤白貌美,娇憨可爱",

"tags":["白", "富","美"]

}

PUT lqz/doc/3

{

"name":"龙套偏房",

"age":22,

"from":"gu",

"desc":"mmp,没怎么看,不知道怎么形容",

"tags":["造数据", "真","难"]

}

PUT lqz/doc/4

{

"name":"石头",

"age":29,

"from":"gu",

"desc":"粗中有细,狐假虎威",

"tags":["粗", "大","猛"]

}

PUT lqz/doc/5

{

"name":"魏行首",

"age":25,

"from":"广云台",

"desc":"仿佛兮若轻云之蔽月,飘飘兮若流风之回雪,mmp,最后竟然没有嫁给顾老二!",

"tags":["闭月","羞花"]

}

三 avg

现在的需求是查询from是gu的人的平均年龄。

select max(age) as my_avg

GET lqz/doc/_search

{

"query": {

"match": {

"from": "gu"

}

},

"aggs": {

"my_avg": {

"avg": {

"field": "age"

}

}

},

"_source": ["name", "age"]

}

上例中,首先匹配查询from是gu的数据。在此基础上做查询平均值的操作,这里就用到了聚合函数,其语法被封装在aggs中,而my_avg则是为查询结果起个别名,封装了计算出的平均值。那么,要以什么属性作为条件呢?是age年龄,查年龄的什么呢?是avg,查平均年龄。

返回结果如下:

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : 0.6931472,

"hits" : [

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "4",

"_score" : 0.6931472,

"_source" : {

"name" : "石头",

"age" : 29

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "1",

"_score" : 0.2876821,

"_source" : {

"name" : "顾老二",

"age" : 30

}

},

{

"_index" : "lqz",

"_type" : "doc",

"_id" : "3",

"_score" : 0.2876821,

"_source" : {

"name" : "龙套偏房",

"age" : 22

}

}

]

},

"aggregations" : {

"my_avg" : {

"value" : 27.0

}

}

}

上例中,在查询结果的最后是平均值信息,可以看到是27岁。

虽然我们已经使用_source对字段做了过滤,但是还不够。我不想看都有哪些数据,只想看平均值怎么办?别忘了size!

GET lqz/doc/_search

{

"query": {

"match": {

"from": "gu"

}

},

"aggs": {

"my_avg": {

"avg": {

"field": "age"

}

}

},

"size": 0,

"_source": ["name", "age"]

}

上例中,只需要在原来的查询基础上,增加一个size就可以了,输出几条结果,我们写上0,就是输出0条查询结果。

查询结果如下:

{

"took" : 8,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : 0.0,

"hits" : [ ]

},

"aggregations" : {

"my_avg" : {

"value" : 27.0

}

}

}

查询结果中,我们看hits下的total值是3,说明有三条符合结果的数据。最后面返回平均值是27。

四 max

那怎么查最大值呢?

GET lqz/doc/_search

{

"query": {

"match": {

"from": "gu"

}

},

"aggs": {

"my_max": {

"max": {

"field": "age"

}

}

},

"size": 0

}

上例中,只需要在查询条件中将avg替换成max即可。

返回结果如下:

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : 0.0,

"hits" : [ ]

},

"aggregations" : {

"my_max" : {

"value" : 30.0

}

}

}

在返回的结果中,可以看到年龄最大的是30岁。

五 min

那怎么查最小值呢?

GET lqz/doc/_search

{

"query": {

"match": {

"from": "gu"

}

},

"aggs": {

"my_min": {

"min": {

"field": "age"

}

}

},

"size": 0

}

最小值则用min表示。

返回结果如下:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : 0.0,

"hits" : [ ]

},

"aggregations" : {

"my_min" : {

"value" : 22.0

}

}

}

返回结果中,年龄最小的是22岁。

六 sum

那么,要是想知道它们的年龄总和是多少怎么办呢?

GET lqz/doc/_search

{

"query": {

"match": {

"from": "gu"

}

},

"aggs": {

"my_sum": {

"sum": {

"field": "age"

}

}

},

"size": 0

}

上例中,求和用sum表示。

{

"took" : 2,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : 0.0,

"hits" : [ ]

},

"aggregations" : {

"my_sum" : {

"value" : 81.0

}

}

}

从返回的结果可以发现,年龄总和是81岁。

七 分组查询

现在我想要查询所有人的年龄段,并且按照15~20,20~25,25~30分组,并且算出每组的平均年龄。

分析需求,首先我们应该先把分组做出来。

GET lqz/doc/_search

{

"size": 0,

"query": {

"match_all": {}

},

"aggs": {

"age_group": {

"range": {

"field": "age",

"ranges": [

{

"from": 15,

"to": 20

},

{

"from": 20,

"to": 25

},

{

"from": 25,

"to": 30

}

]

}

}

}

}

上例中,在aggs的自定义别名age_group中,使用range来做分组,field是以age为分组,分组使用ranges来做,from和to是范围,我们根据需求做出三组。

{

"took" : 3,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 5,

"max_score" : 0.0,

"hits" : [ ]

},

"aggregations" : {

"age_group" : {

"buckets" : [

{

"key" : "15.0-20.0",

"from" : 15.0,

"to" : 20.0,

"doc_count" : 1

},

{

"key" : "20.0-25.0",

"from" : 20.0,

"to" : 25.0,

"doc_count" : 1

},

{

"key" : "25.0-30.0",

"from" : 25.0,

"to" : 30.0,

"doc_count" : 2

}

]

}

}

}

返回的结果中可以看到,已经拿到了三个分组。doc_count为该组内有几条数据,此次共分为三组,查询出4条内容。还有一条数据的age属性值是30,不在分组的范围内!

那么接下来,我们就要对每个小组内的数据做平均年龄处理。

GET lqz/doc/_search

{

"size": 0,

"query": {

"match_all": {}

},

"aggs": {

"age_group": {

"range": {

"field": "age",

"ranges": [

{

"from": 15,

"to": 20

},

{

"from": 20,

"to": 25

},

{

"from": 25,

"to": 30

}

]

},

"aggs": {

"my_avg": {

"avg": {

"field": "age"

}

}

}

}

}

}

上例中,在分组下面,我们使用aggs对age做平均数处理,这样就可以了。

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 5,

"max_score" : 0.0,

"hits" : [ ]

},

"aggregations" : {

"age_group" : {

"buckets" : [

{

"key" : "15.0-20.0",

"from" : 15.0,

"to" : 20.0,

"doc_count" : 1,

"my_avg" : {

"value" : 18.0

}

},

{

"key" : "20.0-25.0",

"from" : 20.0,

"to" : 25.0,

"doc_count" : 1,

"my_avg" : {

"value" : 22.0

}

},

{

"key" : "25.0-30.0",

"from" : 25.0,

"to" : 30.0,

"doc_count" : 2,

"my_avg" : {

"value" : 27.0

}

}

]

}

}

}

在结果中,我们可以清晰的看到每组的平均年龄(my_avg的value中)。

注意:聚合函数的使用,一定是先查出结果,然后对结果使用聚合函数做处理

小结:

- avg:求平均

- max:最大值

- min:最小值

- sum:求和

欢迎斧正,that’s all

9-Elasticsearch之mappings

一 前言

我们应该知道,在关系型数据库中,必须先定义表结构,才能插入数据,并且,表结构不会轻易改变。而我们呢,我们怎么玩elasticsearch的呢:

PUT t1/doc/1

{

"name": "小黑"

}

PUT t1/doc/2

{

"name": "小白",

"age": 18

}

文档的字段可以是任意的,原本都是name字段,突然来个age。还要elasticsearch自动去猜,哦,可能是个long类型,然后加个映射!之后发什么什么?肯定是:猜猜猜,猜你妹!

难道你不想知道elasticsearch内部是怎么玩的吗?

当我们执行上述第一条PUT命令后,elasticsearch到底是怎么做的:

GET t1

结果:

{

"t1" : {

"aliases" : { },

"mappings" : {

"doc" : {

"properties" : {

"name" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

}

}

}

},

"settings" : {

"index" : {

"creation_date" : "1553334893136",

"number_of_shards" : "5",

"number_of_replicas" : "1",

"uuid" : "lHfujZBbRA2K7QDdsX4_wA",

"version" : {

"created" : "6050499"

},

"provided_name" : "t1"

}

}

}

}

由返回结果可以看到,分为两大部分,第一部分关于t1索引类型相关的,包括该索引是否有别名aliases,然后就是mappings信息,包括索引类型doc,各字段的详细映射关系都收集在properties中。

另一部分是关于索引t1的settings设置。包括该索引的创建时间,主副分片的信息,UUID等等。

我们再执行第二条PUT命令,再查看该索引是否有什么变化,返回结果如下:

{

"t1" : {

"aliases" : { },

"mappings" : {

"doc" : {

"properties" : {

"age" : {

"type" : "long"

},

"name" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

}

}

}

},

"settings" : {

"index" : {

"creation_date" : "1553334893136",

"number_of_shards" : "5",

"number_of_replicas" : "1",

"uuid" : "lHfujZBbRA2K7QDdsX4_wA",

"version" : {

"created" : "6050499"

},

"provided_name" : "t1"

}

}

}

}

由返回结果可以看到,settings没有变化,只是mappings中多了一条关于age的映射关系,这一切都是elasticsearch自动的,但特定的场景下,需要我们更多的设置。

所以,接下来,我们研究一下mappings到底是怎么回事!

二 映射是什么?

其实,映射mappings没那么神秘!说白了,就相当于原来由elasticsearch自动帮我们定义表结构。现在,我们要自己来了,旨在创建索引的时候,有更多定制的内容,更加的贴合业务场景。OK,坐好了,开车!

elasticsearch中的映射用来定义一个文档及其包含的字段如何存储和索引的过程。例如,我们可以使用映射来定义:

- 哪些字符串应该被视为全文字段。

- 哪些字段包含数字、日期或者地理位置。

- 定义日期的格式。

- 自定义的规则,用来控制动态添加字段的的映射。

三 映射类型

每个索引都有一个映射类型(这话必须放在elasticsearch6.x版本后才能说,之前版本一个索引下有多个类型),它决定了文档将如何被索引。

映射类型有:

- 元字段(meta-fields):元字段用于自定义如何处理文档关联的元数据,例如包括文档的

_index、_type、_id和_source字段。 - 字段或属性(field or properties):映射类型包含与文档相关的字段或者属性的列表。

继续往下走!

四 字段的数据类型

- 简单类型,如文本(

text)、关键字(keyword)、日期(date)、整形(long)、双精度(double)、布尔(boolean)或ip。 - 可以是支持

JSON的层次结构性质的类型,如对象或嵌套。 - 或者一种特殊类型,如

geo_point、geo_shape或completion。

为了不同的目的,以不同的方式索引相同的字段通常是有用的。例如,字符串字段可以作为全文搜索的文本字段进行索引,也可以作为排序或聚合的关键字字段进行索引。或者,可以使用标准分析器、英语分析器和法语分析器索引字符串字段。

这就是多字段的目的。大多数数据类型通过fields参数支持多字段。

五 映射约束

在索引中定义太多的字段有可能导致映射爆炸!因为这可能会导致内存不足以及难以恢复的情况,为此。我们可以手动或动态的创建字段映射的数量:

- index.mapping.total_fields.limit:索引中的最大字段数。字段和对象映射以及字段别名都计入此限制。默认值为1000。

- index.mapping.depth.limit:字段的最大深度,以内部对象的数量来衡量。例如,如果所有字段都在根对象级别定义,则深度为1.如果有一个子对象映射,则深度为2,等等。默认值为20。

- index.mapping.nested_fields.limit:索引中嵌套字段的最大数量,默认为50.索引1个包含100个嵌套字段的文档实际上索引101个文档,因为每个嵌套文档都被索引为单独的隐藏文档。

六 一个简单的映射示例

PUT mapping_test1

{

"mappings": {

"test1":{

"properties":{

"name":{"type": "text"},

"age":{"type":"long"}

}

}

}

}

上例中,我们在创建索引PUT mapping_test1的过程中,为该索引定制化类型(设计表结构),添加一个映射类型test1;指定字段或者属性都在properties内完成。

GET mapping_test1

通过GET来查看。

{

"mapping_test1" : {

"aliases" : { },

"mappings" : {

"test1" : {

"properties" : {

"age" : {

"type" : "long"

},

"name" : {

"type" : "text"

}

}

}

},

"settings" : {

"index" : {

"creation_date" : "1550469220778",

"number_of_shards" : "5",

"number_of_replicas" : "1",

"uuid" : "7I_m_ULRRXGzWcvhIZoxnQ",

"version" : {

"created" : "6050499"

},

"provided_name" : "mapping_test1"

}

}

}

}

返回的结果中你肯定很熟悉!映射类型是test1,具体的属性都被封装在properties中。而关于settings的配置,我们暂时不管它。

我们为这个索引添加一些数据:

put mapping_test1/test1/1

{

"name":"张开嘴",

"age":16

}

上例中,mapping_test1是之前创建的索引,test1为之前自定义的mappings类型。字段是之前创建好的name和age。

GET mapping_test1/test1/_search

{

"query": {

"match": {

"age": 16

}

}

}

上例中,我们通过age条件查询。

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 1.0,

"hits" : [

{

"_index" : "mapping_test1",

"_type" : "test1",

"_id" : "1",

"_score" : 1.0,

"_source" : {

"name" : "张开嘴",

"age" : 16

}

}

]

}

}

返回了预期的结果信息。

10-Elasticsearch mappings之dynamic的三种状态

一 前言

一般的,mapping则又可以分为动态映射(dynamic mapping)和静态(显式)映射(explicit mapping)和精确(严格)映射(strict mappings),具体由dynamic属性控制。

二 动态映射(dynamic:true)

现在有这样的一个索引:

PUT m1

{

"mappings": {

"doc":{

"properties": {

"name": {

"type": "text"

},

"age": {

"type": "long"

}

}

}

}

}

通过GET m1/_mapping看一下mappings信息:

{

"m1" : {

"mappings" : {

"doc" : {

"dynamic" : "true",

"properties" : {

"age" : {

"type" : "long"

},

"name" : {

"type" : "text"

}

}

}

}

}

}

添加一些数据,并且新增一个sex字段:

PUT m1/doc/1

{

"name": "小黑",

"age": 18,

"sex": "不详"

}

当然,新的字段查询也没问题:

GET m1/doc/_search

{

"query": {

"match": {

"sex": "不详"

}

}

}

返回结果:

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 0.5753642,

"hits" : [

{

"_index" : "m1",

"_type" : "doc",

"_id" : "1",

"_score" : 0.5753642,

"_source" : {

"name" : "小黑",

"age" : 18,

"sex" : "不详"

}

}

]

}

}

现在,一切都很正常,跟elasticsearch自动创建时一样。那是因为,当 Elasticsearch 遇到文档中以前未遇到的字段,它用动态映射来确定字段的数据类型并自动把新的字段添加到类型映射。我们再来看mappings你就明白了:

{

"m1" : {

"mappings" : {

"doc" : {

"dynamic" : "true",

"properties" : {

"age" : {

"type" : "long"

},

"name" : {

"type" : "text"

},

"sex" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

}

}

}

}

}

}

通过上例可以发下,elasticsearch帮我们新增了一个sex的映射。所以。这一切看起来如此自然。这一切的功劳都要归功于dynamic属性。我们知道在关系型数据库中,字段创建后除非手动修改,则永远不会更改。但是,elasticsearch默认是允许添加新的字段的,也就是dynamic:true。

其实创建索引的时候,是这样的:

PUT m1

{

"mappings": {

"doc":{

"dynamic":true,

"properties": {

"name": {

"type": "text"

},

"age": {

"type": "long"

}

}

}

}

}

上例中,当dynamic设置为true的时候,elasticsearch就会帮我们动态的添加映射属性。也就是等于啥都没做!

这里有一点需要注意的是:mappings一旦创建,则无法修改。因为Lucene生成倒排索引后就不能改了。

三 静态映射(dynamic:false)

现在,我们将dynamic值设置为false:

PUT m2

{

"mappings": {

"doc":{

"dynamic":false,

"properties": {

"name": {

"type": "text"

},

"age": {

"type": "long"

}

}

}

}

}

现在再来测试一下false和true有什么区别:

PUT m2/doc/1

{

"name": "小黑",

"age":18

}

PUT m2/doc/2

{

"name": "小白",

"age": 16,

"sex": "不详"

}

第二条数据相对于第一条数据来说,多了一个sex属性,我们以sex为条件来查询一下:

GET m2/doc/_search

{

"query": {

"match": {

"sex": "不详"

}

}

}

结果如下:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 0,

"max_score" : null,

"hits" : [ ]

}

}

结果是空的,也就是什么都没查询到,那是为什呢?来GET m2/_mapping一下此时m2的mappings信息:

``{ “m2” : { “mappings” : { “doc” : { “dynamic” : “false”, “properties” : { “age” : { “type” : “long” }, “name” : { “type” : “text” } } } } } } 可以看到elasticsearch并没有为新增的sex建立映射关系。所以查询不到。 当elasticsearch察觉到有新增字段时,因为dynamic:false的关系,会忽略该字段,但是仍会存储该字段。 在有些情况下,dynamic:false`依然不够,所以还需要更严谨的策略来进一步做限制。

四 严格模式(dynamic:strict)

让我们再创建一个mappings,并且将dynamic的状态改为strict:

PUT m3

{

"mappings": {