[OpenCV] Stereo Camera Calibration, Epipolar Rectification, SIFT Matching, and Depth Estimation

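- Stereo calibration

- Processing images straight from the stereo camera (this code is untested and not guaranteed to be correct)

- Epipolar rectification

- SIFT matching

- Depth estimation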

Stereo calibration

There are plenty of stereo calibration examples around, so I won't go into detail; here is the code.

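import glob

import cv2
import numpy as np

# Termination criteria for sub-pixel refinement and stereo calibration
criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 100, 0.0001)
objp = np.zeros((8*6, 3), np.float32)  # 8*6 is the number of inner corners on the board; adjust to your board
objp[:, :2] = np.mgrid[0:6, 0:8].T.reshape(-1, 2)
objp *= 25  # side length of one chessboard square (mm)
size = (704, 576)  # camera resolution (width, height)
objpoints_left = []  # 3D points in world coordinates
objpoints_right = []
imgpoints_left = []  # 2D points in image coordinates
imgpoints_right = []
# Sorted so that the left and right lists are visited in the same pair order
images_left = sorted(glob.glob('input/calib/left/*.jpg'))  # left-camera calibration images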

for fname in images_left:
    img_left = cv2.imread(fname)
    gray_left = cv2.cvtColor(img_left, cv2.COLOR_BGR2GRAY)
    # Find the chessboard corners
    ret_left, corners_left = cv2.findChessboardCorners(gray_left, (6, 8), None)
    # If found, store the corner information
    if ret_left:
        objpoints_left.append(objp)
        # Refine the corners to sub-pixel accuracy (updates corners_left in place)
        cv2.cornerSubPix(gray_left, corners_left, (5, 5), (-1, -1), criteria)
        imgpoints_left.append(corners_left)
        # Show the corners found
        cv2.drawChessboardCorners(img_left, (6, 8), corners_left, ret_left)
        img_left = cv2.resize(img_left, (0, 0), fx=0.5, fy=0.5)
        cv2.imshow('img', img_left)
        cv2.waitKey(200)  # 200 ms delay so the result can be seen
cv2.destroyAllWindows()
ret_left, mtx_left, dist_left, rvecs_left, tvecs_left = cv2.calibrateCamera(
    objpoints_left, imgpoints_left, size, None, None, flags=cv2.CALIB_FIX_K3)
print('mtx_left')
print(mtx_left)
print('dist_left')
print(dist_left)

images_right = sorted(glob.glob('input/calib/right/*.jpg'))  # right-camera calibration images
# Same procedure as for the left camera
for fname in images_right:
    img_right = cv2.imread(fname)
    gray_right = cv2.cvtColor(img_right, cv2.COLOR_BGR2GRAY)
    ret_right, corners_right = cv2.findChessboardCorners(gray_right, (6, 8), None)
    if ret_right:
        objpoints_right.append(objp)
        cv2.cornerSubPix(gray_right, corners_right, (5, 5), (-1, -1), criteria)
        imgpoints_right.append(corners_right)
        cv2.drawChessboardCorners(img_right, (6, 8), corners_right, ret_right)
        img_right = cv2.resize(img_right, (0, 0), fx=0.5, fy=0.5)
        cv2.imshow('img', img_right)
        cv2.waitKey(200)
cv2.destroyAllWindows()
ret_right, mtx_right, dist_right, rvecs_right, tvecs_right = cv2.calibrateCamera(
    objpoints_right, imgpoints_right, size, None, None, flags=cv2.CALIB_FIX_K3)
print('mtx_right')
print(mtx_right)
print('dist_right')
print(dist_right)

print("----------------------------------------------")
# Stereo calibration. This assumes the chessboard was detected in every
# left/right pair, so that imgpoints_left and imgpoints_right line up.
# criteria is passed by keyword: the positional slots after the image size
# are the outputs R, T, E, F. The default flag, CALIB_FIX_INTRINSIC, keeps
# the intrinsics computed above.
ret, cameraMatrix1, distCoeffs1, cameraMatrix2, distCoeffs2, R, T, E, F = cv2.stereoCalibrate(
    objpoints_left, imgpoints_left, imgpoints_right,
    mtx_left, dist_left, mtx_right, dist_right,
    gray_left.shape[::-1], criteria=criteria)
# print('cameraMatrix1')
# print(cameraMatrix1)
print('R')
print(R)
print('T')
print(T)
R1, R2, P1, P2, Q, validPixROI1, validPixROI2 = cv2.stereoRectify(
    mtx_left, dist_left, mtx_right, dist_right, size, R, T)
print('Q')
print(Q)
print('R1')
print(R1)
print('P1')
print(P1)
print('R2')
print(R2)
print('P2')
print(P2)
print("----------------------------------------------")

# ----------------------------------------------------------------------------
# The part above computes the camera parameters and only needs to run once.
# You can save the parameters to a file and load them on later runs.
# Saving is not implemented here yet.
# ----------------------------------------------------------------------------
# Each camera uses its own rectification rotation and projection matrix:
# R1/P1 for the left camera, R2/P2 for the right camera.
left_map1, left_map2 = cv2.initUndistortRectifyMap(mtx_left, dist_left, R1, P1, size, cv2.CV_16SC2)
right_map1, right_map2 = cv2.initUndistortRectifyMap(mtx_right, dist_right, R2, P2, size, cv2.CV_16SC2)

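A handy trick: run the image pairs through MATLAB's calibration toolbox first and discard the left/right pairs whose error is too large. This keeps the calibration accurate.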
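Since the calibration only has to run once, the results can be written to disk and reloaded on later runs. The following is a minimal sketch rather than the author's implementation: `save_calibration`, `load_calibration`, and the file name `stereo_params.json` are hypothetical, and JSON is only one convenient format.

```python
import json


def save_calibration(path, **params):
    """Write named calibration parameters to a JSON file.

    Objects with a .tolist() method (such as NumPy arrays) are converted
    to nested lists; plain lists and numbers are stored unchanged.
    """
    serialisable = {
        name: value.tolist() if hasattr(value, "tolist") else value
        for name, value in params.items()
    }
    with open(path, "w", encoding="utf-8") as f:
        json.dump(serialisable, f, indent=2)


def load_calibration(path):
    """Read the parameters back as a dict of nested Python lists."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)
```

For example, one could call `save_calibration("stereo_params.json", mtx_left=mtx_left, dist_left=dist_left, mtx_right=mtx_right, dist_right=dist_right, R=R, T=T, Q=Q)` after calibration, and on later runs rebuild each array with `np.array(load_calibration("stereo_params.json")["Q"])`.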

Processing images straight from the stereo camera (this code is untested and not guaranteed to be correct)

# Open the cameras to grab the left and right views (untested)
cap1 = cv2.VideoCapture(0)  # left
cap2 = cv2.VideoCapture(1)  # right
cap1.set(cv2.CAP_PROP_FRAME_WIDTH, 704)   # resolution
cap1.set(cv2.CAP_PROP_FRAME_HEIGHT, 576)  # resolution
cap2.set(cv2.CAP_PROP_FRAME_WIDTH, 704)   # resolution
cap2.set(cv2.CAP_PROP_FRAME_HEIGHT, 576)  # resolution
current_cap = False  # set to True to grab frames straight from the cameras
while current_cap:  # this loop only saves one image pair; for real-time processing, move the later code into it
    ret1, frame1 = cap1.read()
    ret2, frame2 = cap2.read()  # read the right view from cap2, not cap1
    if not (ret1 and ret2):
        break  # a frame could not be read
    frame = np.hstack([frame1, frame2])
    frame = cv2.resize(frame, (0, 0), fx=1, fy=1)  # display scale only; the saved images keep their size
    cv2.imshow("capture", frame)
    k = cv2.waitKey(40)
    if k == ord('q'):
        break
    if k == ord('s'):
        cv2.imwrite("cap/left/cap.jpg", frame1)
        cv2.imwrite("cap/right/cap.jpg", frame2)
        break
cap1.release()
cap2.release()
#--------------------------------------------

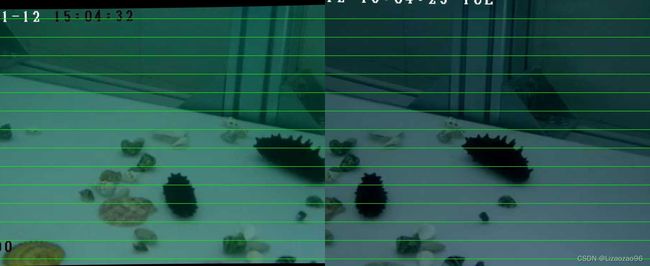

Epipolar rectification

frame1 = cv2.imread("input/underwear_trepang/trepang_left/trepang left_1.jpg")
frame2 = cv2.imread("input/underwear_trepang/trepang_right/trepang right_1.jpg")  # frame1 is left, frame2 is right
#--------------------------------------------
img1_rectified = cv2.remap(frame1, left_map1, left_map2, cv2.INTER_LINEAR)
img2_rectified = cv2.remap(frame2, right_map1, right_map2, cv2.INTER_LINEAR)
img1_copy = img1_rectified.copy()
img2_copy = img2_rectified.copy()
cv2.imwrite("output\\left_rectified.jpg", img1_copy)
cv2.imwrite("output\\right_rectified.jpg", img2_copy)
# Draw a horizontal line every 40 pixels to check the rectification visually
for i in range(40, size[1] + 1, 40):
    cv2.line(img1_rectified, (0, i), (size[0], i), (0, 255, 0), 1)
    cv2.line(img2_rectified, (0, i), (size[0], i), (0, 255, 0), 1)
imgsall = np.hstack([img1_rectified, img2_rectified])
cv2.imwrite("output\\rectified.jpg", imgsall)
imgL = cv2.cvtColor(img1_copy, cv2.COLOR_BGR2GRAY)
imgR = cv2.cvtColor(img2_copy, cv2.COLOR_BGR2GRAY)

SIFT matching

Matching:

# OpenCV >= 4.4 exposes SIFT in the main module; older contrib builds
# use cv2.xfeatures2d.SIFT_create() instead
sift = cv2.SIFT_create()
kp1, des1 = sift.detectAndCompute(img1_copy, None)
kp2, des2 = sift.detectAndCompute(img2_copy, None)
# BFMatcher with default params
bf = cv2.BFMatcher()
matches = bf.knnMatch(des1, des2, k=2)
# good = [[m] for m, n in matches if m.distance < 0.7 * n.distance]
good = []   # good matching point pairs
point = []  # pixel coordinates of each good pair

Filtering:

# label_top and label_bottom are the (x, y) top-left and bottom-right corners
# of the region of interest; they are not defined in this excerpt.
for i, (m1, m2) in enumerate(matches):  # filter out unqualified point pairs
    # Filter condition 1: Euclidean distance between descriptors. The smaller
    # the distance, the better the match. Note that m1 is the nearest
    # neighbour, so m1.distance <= m2.distance always holds and a ratio of
    # 1.5 rejects nothing; a ratio below 1 (e.g. 0.7, as in the
    # commented-out line above) makes this test effective.
    if m1.distance < 1.5 * m2.distance:
        if (label_top[0] < kp1[m1.queryIdx].pt[0] < label_bottom[0] and
                label_top[1] < kp1[m1.queryIdx].pt[1] < label_bottom[1]):
            # Filter condition 2: pixel row difference. After epipolar
            # rectification a matching pair should lie on the same
            # horizontal line, so a small threshold (5 pixels here) is enough.
            if abs(kp1[m1.queryIdx].pt[1] - kp2[m1.trainIdx].pt[1]) < 5:
                good.append([m1])
                pt1 = kp1[m1.queryIdx].pt  # queryIdx indexes the keypoints of the first (left) image
                pt2 = kp2[m1.trainIdx].pt  # trainIdx indexes the keypoints of the second (right) image
                # print(i, pt1, pt2)
                point.append([pt1, pt2])
img4 = cv2.drawMatchesKnn(img1_copy, kp1, img2_copy, kp2, matches, None, flags=2)
cv2.imwrite("output\\SIFT_MATCH_no_filter.jpg", img4)
img4 = cv2.resize(img4, (0, 0), fx=0.6, fy=0.6)
cv2.imshow("sift-no_filter", img4)
img3 = cv2.drawMatchesKnn(img1_copy, kp1, img2_copy, kp2, good, None, flags=2)
cv2.rectangle(img3, label_top, label_bottom, (0, 0, 255), 2)
cv2.imwrite("output\\SIFT_MATCH_filtered.jpg", img3)
img3 = cv2.resize(img3, (0, 0), fx=0.6, fy=0.6)  # scale to 0.6x for display
cv2.imshow("sift_filtered", img3)

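Filter condition 2 deserves a note. Because the images have been epipolar-rectified, the same scene point should in theory lie on the same horizontal line in the left and right views, i.e. its pixel row should be identical. Match pairs whose rows differ by 5 pixels or more are therefore discarded.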
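The row constraint can also be looked at in isolation. The sketch below works on plain `((x_left, y_left), (x_right, y_right))` tuples like the entries of `point`; the helper names `filter_by_row` and `mean_row_error` and the sample coordinates are hypothetical. The mean row difference of the surviving pairs gives a rough idea of how well the rectification holds.

```python
def filter_by_row(pairs, max_row_diff=5.0):
    """Keep pairs whose left and right pixel rows differ by less than max_row_diff."""
    return [(p1, p2) for p1, p2 in pairs if abs(p1[1] - p2[1]) < max_row_diff]


def mean_row_error(pairs):
    """Mean absolute row difference |y_left - y_right| over the given pairs."""
    if not pairs:
        raise ValueError("no matched pairs")
    return sum(abs(p1[1] - p2[1]) for p1, p2 in pairs) / len(pairs)


# Hypothetical matches: the third pair is 50 rows apart and gets rejected
pairs = [
    ((100.0, 50.0), (80.0, 52.0)),
    ((200.0, 120.0), (170.0, 119.0)),
    ((300.0, 90.0), (310.0, 140.0)),
]
kept = filter_by_row(pairs)
print(len(kept), mean_row_error(kept))
```

If the mean row difference of the kept pairs is close to the threshold itself, that may be a sign that the calibration or rectification needs another look.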

Depth estimation

def img2_3D(target_point, label_top, label_bottom, point_cloud, Q):
    # target_point is the (x, y) pixel whose distance we want to measure.
    # It is not necessarily a matched keypoint, so we search for the nearest
    # matched pair and use that pair's distance as an approximation.
    final_point = []
    min_distance = float('inf')
    for i in range(len(point_cloud)):
        distance = (target_point[0] - point_cloud[i][0][0]) ** 2 \
                   + (target_point[1] - point_cloud[i][0][1]) ** 2
        if distance < min_distance:
            final_point = point_cloud[i]
            min_distance = distance
    # print(final_point)
    # Image arrays are indexed [row, column] = [y, x], so the shape is (height, width)
    new_img = np.zeros((size[1], size[0]), dtype=np.float32)
    x = int(final_point[0][0])
    y = int(final_point[0][1])
    print("input coordinates of the point:({}, {})".format(target_point[0], target_point[1]))
    print("The point coordinates closest to the input point:({}, {})".format(x, y))
    cv2.circle(img1_copy, (x, y), 3, (0, 0, 255), -1)
    cv2.circle(img1_copy, (target_point[0], target_point[1]), 3, (0, 255, 0), -1)
    disp = final_point[0][0] - final_point[1][0]  # disparity: x_left - x_right
    print("Parallax at this point: ", disp)
    new_img[y, x] = disp
    threeD = cv2.reprojectImageTo3D(new_img, Q)
    print("The three-dimensional coordinates of the point are: ", threeD[y, x])
    # The chessboard squares were given in mm, so divide by 1000 to get metres
    print("The depth of the point is: {:.2f} m".format(threeD[y, x][2] / 1000))

# Call the function; the first argument is the (x, y) pixel whose distance we want
img2_3D(target_point, label_top, label_bottom, point, Q)
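For a single pixel, `cv2.reprojectImageTo3D` amounts to multiplying the vector `[x, y, disparity, 1]` by `Q` and dividing by the fourth component. The sketch below reproduces that arithmetic in plain Python for illustration. The helper name `reproject_point` and every number in `Q_example` (focal length 800 px, principal point (352, 288), baseline 60 mm) are made-up assumptions, not values from the calibration above.

```python
def reproject_point(x, y, disparity, Q):
    """Map pixel (x, y) with the given disparity to 3D using a 4x4 matrix Q."""
    vec = (x, y, disparity, 1.0)
    # Homogeneous result [X, Y, Z, W] = Q @ [x, y, d, 1]
    X, Y, Z, W = (sum(q * v for q, v in zip(row, vec)) for row in Q)
    if W == 0:
        raise ValueError("zero disparity: the point is at infinity")
    return X / W, Y / W, Z / W


# Hypothetical Q with the layout stereoRectify produces when both rectified
# cameras share the same principal point:
#   [1 0 0 -cx], [0 1 0 -cy], [0 0 0 f], [0 0 -1/Tx 0]  with Tx = -60 mm
Q_example = [
    [1.0, 0.0, 0.0, -352.0],
    [0.0, 1.0, 0.0, -288.0],
    [0.0, 0.0, 0.0, 800.0],
    [0.0, 0.0, 1.0 / 60.0, 0.0],
]
X, Y, Z = reproject_point(402.0, 288.0, 50.0, Q_example)
print(X, Y, Z)  # Z equals f * baseline / disparity = 800 * 60 / 50
```

With this layout the depth reduces to focal length times baseline divided by disparity, which is why only the disparity of the matched pair matters for the final distance.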

The full code is on my GitHub and runs as soon as you download it: https://github.com/Hozhangwang/Stereo_Calibration_and_estimated_distance_use_SIFT_in_OpenCV

Writing this took some effort, so if you can, please give it a star on GitHub.

Matching results:

Before filtering:

After filtering:

Finding the nearest matched point:

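Here the green dot is the point whose depth we want to estimate, and the red dot is the nearest matched keypoint that was found; its estimated depth is taken as the final result. This approach is used because an arbitrarily chosen point cannot be guaranteed to have a match. It introduces some error, but the error stays small as long as the nearest match is close by and lies on the same surface.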
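The nearest-match lookup can be sketched on its own. The version below also returns the pixel distance to the chosen match, so a caller could refuse to report a depth when the nearest match is too far away to be representative. The helper name `nearest_match`, the `max_pixel_dist` parameter, and the sample coordinates are all hypothetical additions, not part of the original code.

```python
import math


def nearest_match(target, pairs, max_pixel_dist=None):
    """Return (pair, distance) for the pair whose left-image point is closest to target.

    pairs holds ((x_left, y_left), (x_right, y_right)) tuples. If
    max_pixel_dist is given and the closest pair is farther away than
    that, the pair is returned as None.
    """
    if not pairs:
        raise ValueError("no matched pairs")

    def pixel_dist(pair):
        return math.hypot(target[0] - pair[0][0], target[1] - pair[0][1])

    best = min(pairs, key=pixel_dist)
    dist = pixel_dist(best)
    if max_pixel_dist is not None and dist > max_pixel_dist:
        return None, dist
    return best, dist


# Hypothetical matches in pixel coordinates
pairs = [((13.0, 14.0), (3.0, 14.0)), ((100.0, 100.0), (90.0, 100.0))]
best, dist = nearest_match((10.0, 10.0), pairs)
print(best, dist)
```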

Final result:

input coordinates of the point:(624, 341)

The point coordinates closest to the input point:(629, 297)

Parallax at this point: 254.74746704101562

The three-dimensional coordinates of the point are: [-20.767252 177.53214 875.65 ]

The depth of the point is: 0.88 m

My contact details are in the README on GitHub; feel free to get in touch if anything here is unclear.