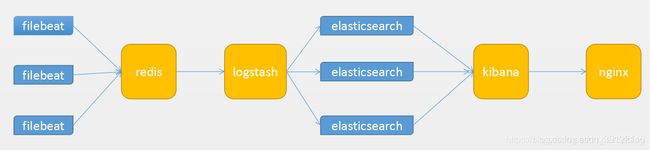

ELK setup: Docker + Filebeat + Redis + Logstash + Elasticsearch + Kibana (7.2.0)

I. System architecture

II. Setting up the Elasticsearch cluster

1. Preparing the configuration

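```shell
# Throwaway container, used only to copy the default config out of the image
docker run -d --name es --rm -e "discovery.type=single-node" docker.elastic.co/elasticsearch/elasticsearch:7.2.0
mkdir -p /docker/elk/es1
docker cp es:/usr/share/elasticsearch/config /docker/elk/es1/config
# Because of --rm, stopping the container also removes it
docker stop es

# Data directory; the Elasticsearch process in the container runs as uid 1000, hence the open permissions
sudo mkdir -p /docker/elk/es1/data
sudo chmod -R 777 /docker/elk/es1/data

# node2 and node3 start from copies of node1's directory
cp -a /docker/elk/es1 /docker/elk/es2
cp -a /docker/elk/es1 /docker/elk/es3
```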

2. Editing the configuration files

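Master node (node1): elasticsearch.yml

All three nodes run on the same Docker host (192.168.10.46 in this setup), so `network.publish_host` and every `discovery.seed_hosts` entry use that host's IP; replace it with your own.

```yaml
cluster.name: "elk"
node.name: node1
node.master: true
node.data: false
network.bind_host: 0.0.0.0
# Address the other nodes use to reach this node: the Docker host's IP
network.publish_host: 192.168.10.46
http.port: 9201
transport.tcp.port: 9301
http.cors.enabled: true
http.cors.allow-origin: "*"
discovery.seed_hosts: ["192.168.10.46:9301","192.168.10.46:9302","192.168.10.46:9303"]
cluster.initial_master_nodes: ["node1"]
xpack.monitoring.collection.enabled: true
```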

Data node (node2): elasticsearch.yml

```yaml
cluster.name: "elk"
node.name: node2
node.master: false
node.data: true
network.bind_host: 0.0.0.0
network.publish_host: 192.168.10.46
http.port: 9202
transport.tcp.port: 9302
http.cors.enabled: true
http.cors.allow-origin: "*"
discovery.seed_hosts: ["192.168.10.46:9301","192.168.10.46:9302","192.168.10.46:9303"]
cluster.initial_master_nodes: ["node1"]
xpack.monitoring.collection.enabled: true
```

Data node (node3): elasticsearch.yml

```yaml
cluster.name: "elk"
node.name: node3
node.master: false
node.data: true
network.bind_host: 0.0.0.0
network.publish_host: 192.168.10.46
http.port: 9203
transport.tcp.port: 9303
http.cors.enabled: true
http.cors.allow-origin: "*"
discovery.seed_hosts: ["192.168.10.46:9301","192.168.10.46:9302","192.168.10.46:9303"]
cluster.initial_master_nodes: ["node1"]
xpack.monitoring.collection.enabled: true
```
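The three files differ only in node name, role and ports, so they can also be generated from one template, which keeps the host IP identical in every file. A minimal sketch, assuming all nodes share one Docker host and follow the 920N/930N port scheme above; `es_node_yml` is a hypothetical helper, not part of Elasticsearch:

```shell
#!/bin/sh
# Hypothetical helper: print elasticsearch.yml for node N (1..3) on HOST_IP.
# node1 is the only master-eligible node; node2 and node3 are data nodes.
es_node_yml() {
  n=$1
  host_ip=$2
  if [ "$n" = 1 ]; then master=true; data=false; else master=false; data=true; fi
  # Unquoted EOF: $n, $master, $data and $host_ip are expanded; "*" is kept literally
  cat <<EOF
cluster.name: "elk"
node.name: node$n
node.master: $master
node.data: $data
network.bind_host: 0.0.0.0
network.publish_host: $host_ip
http.port: 920$n
transport.tcp.port: 930$n
http.cors.enabled: true
http.cors.allow-origin: "*"
discovery.seed_hosts: ["$host_ip:9301","$host_ip:9302","$host_ip:9303"]
cluster.initial_master_nodes: ["node1"]
xpack.monitoring.collection.enabled: true
EOF
}
```

Usage, writing all three files at once: `for n in 1 2 3; do es_node_yml "$n" 192.168.10.46 > /docker/elk/es$n/config/elasticsearch.yml; done`.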

3. Starting the Elasticsearch cluster

```shell
docker run -d --name es-node1 -p 9201:9201 -p 9301:9301 \
  -v /docker/elk/es1/config/:/usr/share/elasticsearch/config \
  -v /docker/elk/es1/data/:/usr/share/elasticsearch/data \
  docker.elastic.co/elasticsearch/elasticsearch:7.2.0

docker run -d --name es-node2 -p 9202:9202 -p 9302:9302 \
  -v /docker/elk/es2/config/:/usr/share/elasticsearch/config \
  -v /docker/elk/es2/data/:/usr/share/elasticsearch/data \
  docker.elastic.co/elasticsearch/elasticsearch:7.2.0

docker run -d --name es-node3 -p 9203:9203 -p 9303:9303 \
  -v /docker/elk/es3/config/:/usr/share/elasticsearch/config \
  -v /docker/elk/es3/data/:/usr/share/elasticsearch/data \
  docker.elastic.co/elasticsearch/elasticsearch:7.2.0
```

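Note:

If the machine is short on memory, cap each Elasticsearch heap at 256 MB, either by passing `-e ES_JAVA_OPTS="-Xms256m -Xmx256m"` to `docker run`, or by editing `jvm.options` in the `config` directory: change `-Xms1g` to `-Xms256m` and `-Xmx1g` to `-Xmx256m`.

For example, a standalone single-node container with a 256 MB heap:

```shell
docker run -d -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" -e ES_JAVA_OPTS="-Xms256m -Xmx256m" --name myes docker.elastic.co/elasticsearch/elasticsearch:7.2.0
```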
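Editing `jvm.options` by hand has to be repeated for all three nodes. The sketch below rewrites both heap lines in one go; `set_es_heap` is a hypothetical helper and assumes the heap flags sit at the start of their own lines, as they do in the stock 7.2.0 `jvm.options`:

```shell
#!/bin/sh
# Hypothetical helper: set both -Xms and -Xmx in a jvm.options file to SIZE
# (e.g. 256m). Keeping the two values equal avoids heap resizing at runtime.
set_es_heap() {
  file=$1
  size=$2
  # Rewrite lines that start with -Xms / -Xmx; comments and other flags are untouched.
  sed -e "s/^-Xms.*/-Xms$size/" -e "s/^-Xmx.*/-Xmx$size/" "$file" > "$file.tmp" &&
    cat "$file.tmp" > "$file" &&
    rm -f "$file.tmp"
}
```

Usage: `for n in 1 2 3; do set_es_heap /docker/elk/es$n/config/jvm.options 256m; done`. Writing back through `cat` rather than `mv` keeps the original file's owner and mode.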

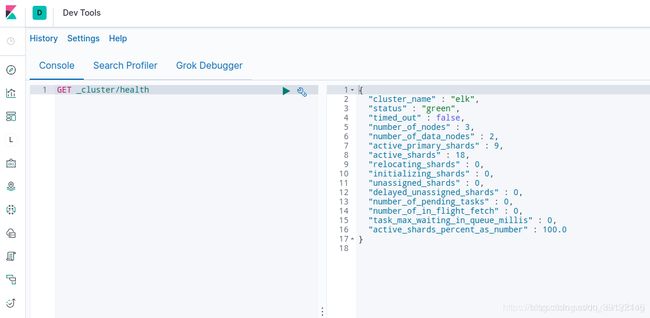

4. Checking the cluster status

A `status` of `green` means the cluster has been set up successfully. With this layout the response should also report `number_of_nodes` 3 and `number_of_data_nodes` 2 (one dedicated master plus two data nodes).

```shell
curl 192.168.10.46:9201/_cluster/health
```

```json
{"cluster_name":"elk","status":"green","timed_out":false,"number_of_nodes":3,"number_of_data_nodes":2,"active_primary_shards":9,"active_shards":18,"relocating_shards":0,"initializing_shards":0,"unassigned_shards":0,"delayed_unassigned_shards":0,"number_of_pending_tasks":0,"number_of_in_flight_fetch":0,"task_max_waiting_in_queue_millis":0,"active_shards_percent_as_number":100.0}
```
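To script this check instead of reading the JSON by eye, the status can be pulled out of the compact response shown above and polled until it turns green. A sketch using only `sed` and `curl`; both function names are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: extract the "status" value from a compact _cluster/health response.
es_health_status() {
  printf '%s\n' "$1" | sed -n 's/.*"status":"\([a-z]*\)".*/\1/p'
}

# Hypothetical helper: poll ES_URL until the cluster reports green,
# giving up after MAX_TRIES attempts (default 30) spaced 5 seconds apart.
wait_for_green() {
  url=$1
  max_tries=${2:-30}
  i=0
  while [ "$i" -lt "$max_tries" ]; do
    status=$(es_health_status "$(curl -s "$url/_cluster/health")")
    [ "$status" = green ] && return 0
    i=$((i + 1))
    sleep 5
  done
  return 1
}
```

Usage: `wait_for_green http://192.168.10.46:9201 && echo ready`. Elasticsearch can also do the waiting server-side with `_cluster/health?wait_for_status=green&timeout=60s`.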

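Problem encountered: Elasticsearch fails to start with

```
ERROR: [1] bootstrap checks failed
[1]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]
```

Fix: raise the limit on the Docker host (not inside the container):

```shell
sudo sysctl -w vm.max_map_count=262144
```

This takes effect immediately but does not survive a reboot; to make it permanent, add `vm.max_map_count=262144` to `/etc/sysctl.conf`.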
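Editing `/etc/sysctl.conf` by hand works; for a provisioning script that may run more than once, here is a sketch that sets the key idempotently. The helper `ensure_sysctl` is hypothetical, and the key is used as a regular expression, so its dots match any character:

```shell
#!/bin/sh
# Hypothetical helper: make KEY=VALUE appear exactly once in FILE
# (normally /etc/sysctl.conf), replacing any existing KEY line.
ensure_sysctl() {
  file=$1
  key=$2
  value=$3
  tmp=$(mktemp) || return 1
  # Drop existing KEY lines (with or without spaces around '='), then append ours.
  if [ -f "$file" ]; then
    grep -v "^[[:space:]]*$key[[:space:]]*=" "$file" > "$tmp"
  fi
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  cat "$tmp" > "$file" && rm -f "$tmp"
}
```

Usage, as root: `ensure_sysctl /etc/sysctl.conf vm.max_map_count 262144 && sysctl -p`.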

III. Setting up Kibana

1. Preparing the configuration file

```shell
# Throwaway container, used only to copy the default kibana.yml
docker run -d --name kibana --rm docker.elastic.co/kibana/kibana:7.2.0
mkdir -p /docker/elk/kibana/config
cd /docker/elk/kibana/config
docker cp kibana:/usr/share/kibana/config/kibana.yml .
docker stop kibana
```

kibana.yml:

```yaml
server.port: 5601
server.host: "0.0.0.0"
# HTTP ports of the three Elasticsearch nodes on the Docker host
elasticsearch.hosts: ["http://192.168.10.46:9201","http://192.168.10.46:9202","http://192.168.10.46:9203"]
```

2. Start command

```shell
docker run -d --name kibana -p 5601:5601 -v /docker/elk/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml:ro docker.elastic.co/kibana/kibana:7.2.0
```

3. Accessing Kibana in a browser

http://192.168.10.46:5601/

Open Dev Tools and run `GET _cluster/health` to check the cluster health.

IV. Setting up Redis

1. Preparing the configuration file

```shell
mkdir -p /docker/elk/redis/data
vim /docker/elk/redis/data/redis.conf
```

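redis.conf:

```
bind 0.0.0.0
# Must stay "no" in Docker: a daemonized redis-server would leave the container without its main process
daemonize no
pidfile "/var/run/redis.pid"
# Non-default port; Filebeat and Logstash connect to 6380
port 6380
timeout 300
loglevel warning
logfile "redis.log"
databases 16
rdbcompression yes
dbfilename "redis.rdb"
dir "/data"
# Password used by Filebeat (output.redis) and Logstash (redis input)
requirepass "123456"
masterauth "123456"
maxclients 10000
maxmemory 1000mb
maxmemory-policy allkeys-lru
appendonly yes
appendfsync always
```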
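The port and password here are repeated in the Logstash `docker.conf` and in `filebeat.yml`, and a mismatch only shows up later as connection errors. A sketch of a reader for a single directive, usable in a consistency check; `redis_conf_get` is hypothetical and assumes values without spaces:

```shell
#!/bin/sh
# Hypothetical helper: print the value of DIRECTIVE from a redis.conf-style file,
# with surrounding double quotes removed. Prints nothing if the directive is absent.
redis_conf_get() {
  file=$1
  directive=$2
  # First matching line wins; commented-out lines start with "#" and never match.
  awk -v d="$directive" '$1 == d { v = $2; gsub(/^"|"$/, "", v); print v; exit }' "$file"
}
```

For example, `[ "$(redis_conf_get /docker/elk/redis/data/redis.conf port)" = 6380 ] || echo "port mismatch"`. Once the Redis container is running, `docker exec redis redis-cli -p 6380 -a 123456 ping` should answer `PONG`.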

2. Start command

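```shell
# The redis image's working directory is /data, so "redis.conf" resolves to the mounted file
docker run -d --name redis -p 6380:6380 -v /docker/elk/redis/data/:/data redis:5.0 redis-server redis.conf
```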

V. Setting up Logstash

1. Preparing the configuration

```shell
mkdir /docker/elk/logstash
cd /docker/elk/logstash
# Throwaway container, used only to copy the default config and pipeline directories
docker run --rm -d --name logstash docker.elastic.co/logstash/logstash:7.2.0
docker cp logstash:/usr/share/logstash/config .
docker cp logstash:/usr/share/logstash/pipeline .
docker stop logstash
```

2. Editing the configuration files

```shell
vim /docker/elk/logstash/config/logstash.yml
vim /docker/elk/logstash/config/pipelines.yml
# Rename the default pipeline file to the name referenced in pipelines.yml
mv /docker/elk/logstash/pipeline/logstash.conf /docker/elk/logstash/pipeline/docker.conf
vim /docker/elk/logstash/pipeline/docker.conf
```

logstash.yml:

```yaml
http.host: "0.0.0.0"
xpack.monitoring.enabled: true
xpack.monitoring.elasticsearch.hosts: ["http://192.168.10.46:9201","http://192.168.10.46:9202","http://192.168.10.46:9203"]
```

pipelines.yml:

```yaml
- pipeline.id: docker
  path.config: "/usr/share/logstash/pipeline/docker.conf"
```

docker.conf:

```
input {
  redis {
    host => "192.168.10.46"
    port => 6380
    db => 0
    # Redis list written by Filebeat (output.redis key "localhost")
    key => "localhost"
    password => "123456"
    data_type => "list"
    threads => 4
    tags => "localhost"
  }
}

output {
  if "localhost" in [tags] {
    # fields.function is set on the Filebeat docker input
    if [fields][function] == "docker" {
      elasticsearch {
        hosts => ["192.168.10.46:9201","192.168.10.46:9202","192.168.10.46:9203"]
        index => "docker-localhost-%{+YYYY.MM.dd}"
      }
    }
  }
}
```
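The `%{+YYYY.MM.dd}` part of `index` is filled in from each event's `@timestamp`, which Logstash keeps in UTC, so a new daily index starts at midnight UTC rather than at local midnight. A sketch that derives the same name for use in scripts; the helper names are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: index name Logstash writes to for a given UTC date
# (YYYY-MM-DD), mirroring index => "docker-localhost-%{+YYYY.MM.dd}".
docker_index_for() {
  printf 'docker-localhost-%s\n' "$(printf '%s' "$1" | sed 's/-/./g')"
}

# Today's index, using the UTC date just as Logstash does.
docker_index_today() {
  docker_index_for "$(date -u +%Y-%m-%d)"
}
```

For example, `curl "http://192.168.10.46:9201/_cat/indices/$(docker_index_today)?v"` shows whether today's index exists yet.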

3. Start command

```shell
docker run -d -p 5044:5044 -p 9600:9600 --name logstash \
  -v /docker/elk/logstash/config/:/usr/share/logstash/config \
  -v /docker/elk/logstash/pipeline/:/usr/share/logstash/pipeline \
  docker.elastic.co/logstash/logstash:7.2.0
```

VI. Setting up Filebeat (collecting Docker logs)

1. Preparing the configuration file

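```shell
mkdir /docker/elk/filebeat
vim /docker/elk/filebeat/filebeat.yml
# Filebeat refuses to load a config file that is not owned by root or by the user running it
sudo chown root:root /docker/elk/filebeat/filebeat.yml
```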
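Filebeat checks its config file on startup: it must be owned by root or by the user running Filebeat, and must not be writable by group or others, otherwise Filebeat exits (the check can be disabled with `--strict.perms=false`). A sketch of the same checks in shell, parsing `ls -ln` for portability between GNU and BSD systems; both helpers are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: succeed only if FILE is not writable by group or others.
beat_config_perms_ok() {
  perms=$(ls -ln "$1" | awk '{ print $1 }')
  # perms looks like -rw-r--r--: char 6 is group write, char 9 is others write.
  case "$perms" in
    ?????w* | ????????w*) return 1 ;;
    *) return 0 ;;
  esac
}

# Hypothetical helper: succeed only if FILE is owned by root (uid 0),
# matching --user=root in the filebeat container's start command.
beat_config_owner_ok() {
  [ "$(ls -ln "$1" | awk '{ print $3 }')" = 0 ]
}
```

Usage: `beat_config_perms_ok /docker/elk/filebeat/filebeat.yml || sudo chmod go-w /docker/elk/filebeat/filebeat.yml`.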

filebeat.yml:

```yaml
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

setup.template.settings:
  index.number_of_shards: 1

filebeat.inputs:
- type: docker
  enabled: true
  combine_partial: true
  containers:
    path: "/var/lib/docker/containers"
    ids:
      - '*'
  processors:
    - add_docker_metadata: ~
  encoding: utf-8
  max_bytes: 104857600
  tail_files: true
  # Custom field checked by the Logstash output condition
  fields:
    function: docker

processors:
  - add_host_metadata: ~
  - add_cloud_metadata: ~

output.redis:
  hosts: ["192.168.10.46:6380"]
  password: "123456"
  db: 0
  # Redis list that Logstash reads from (key => "localhost" in docker.conf)
  key: "localhost"
  keys:
    - key: "%{[fields.list]}"
      mappings:
        function: "docker"
  worker: 4
  timeout: 20
  max_retries: 3
  codec.json:
    pretty: false

monitoring.enabled: true
monitoring.elasticsearch:
  hosts: ["http://192.168.10.46:9201","http://192.168.10.46:9202","http://192.168.10.46:9203"]
```

2. Start command

```shell
# /var/lib/docker holds the container log files; docker.sock lets add_docker_metadata look up container details
docker run -d --name filebeat --hostname localhost --user=root \
  -v /docker/elk/filebeat/filebeat.yml:/usr/share/filebeat/filebeat.yml:ro \
  -v /var/lib/docker:/var/lib/docker:ro \
  -v /var/run/docker.sock:/var/run/docker.sock:ro \
  docker.elastic.co/beats/filebeat:7.2.0
```

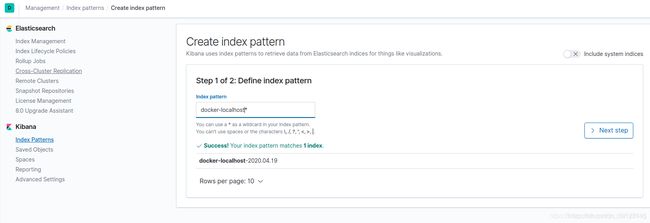

VII. Viewing the logs

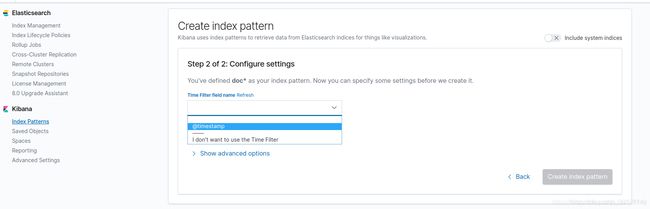

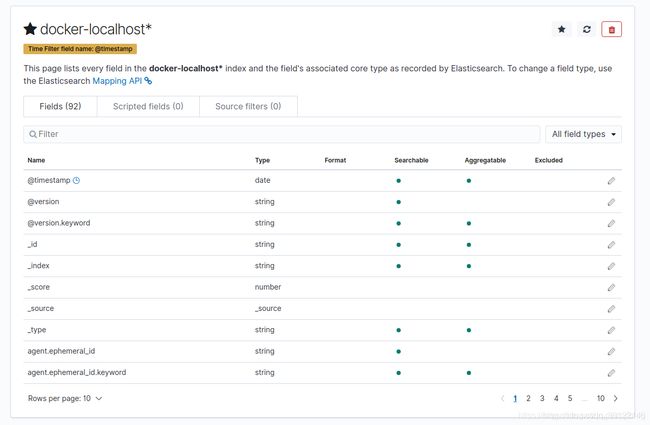

1. Configuring the index pattern

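Click Management, then Index Patterns under Kibana, then Create index pattern. The pattern `docker-localhost-*` matches the daily indices written by Logstash, and `@timestamp` can be used as the time field.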
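The same index pattern can also be created without the UI through Kibana's saved objects API. A sketch, assuming the Kibana address used above; the helper names are hypothetical, and `@timestamp` is the time field Logstash adds to every event:

```shell
#!/bin/sh
# Hypothetical helper: JSON body for Kibana's saved objects API that defines
# an index pattern TITLE with @timestamp as its time field.
index_pattern_body() {
  printf '{"attributes":{"title":"%s","timeFieldName":"@timestamp"}}\n' "$1"
}

# Hypothetical helper: create the index pattern on the Kibana at KIBANA_URL.
# Kibana rejects API writes that lack the kbn-xsrf header.
create_index_pattern() {
  curl -s -X POST "$1/api/saved_objects/index-pattern" \
    -H 'kbn-xsrf: true' -H 'Content-Type: application/json' \
    -d "$(index_pattern_body "$2")"
}
```

Usage: `create_index_pattern http://192.168.10.46:5601 'docker-localhost-*'`.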

Source: https://blog.csdn.net/cyfblog/article/details/102839590