【卷积神经网络】构建对CIFAR-100数据集中的图像进行分类的CNN

参考:

- Cifar100 TensorFlow高精度调参记录

- cifar算法动物园(各类网络解决cifar分类)

- pytorch实现cifar100的 各类网络结构

前言:

CIFAR-100数据集的网络 网上的版本很多,有些跑起来也还可以,但是大多数都只能到40-50%左右的正确率。

有大佬做了各种网络,调好了参数,能达到80%左右的正确率。但是这里只是个小作业,希望用不要太复杂,不要太深的网络,最好在一小时内就能看到结果的,所以参照大佬的作品写了简化版本,能在50个epoch内达到60%左右正确率。

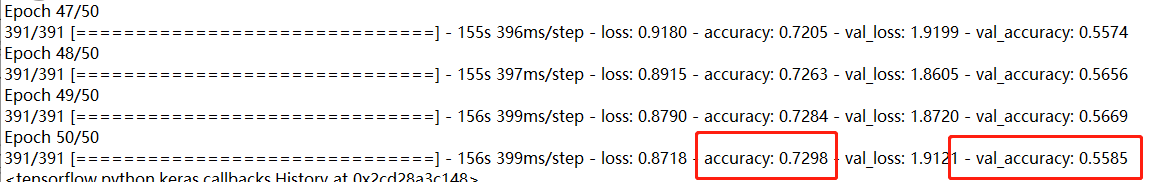

结果:

cifar100数据集:

这个数据集有100个分类,每个分类图片数量只有600张对应图片,其中100张还要拿来做检验,所以要保证分类正确,cifar100的复杂度 指数级 高于cifar10(cifar10有10个分类,每个分类6000张照片,达到90%+的正确率不在话下,就算瞎猜,10个里猜对一个的概率也大于100个猜对1个的概率)

主要网络结构:

代码:

Jupyter-notebook:构建对CIFAR-100数据集中的图像进行分类的CNN

点击下载后在对应环境下运行,这里给出的notebook里已经有运行结果,可以直接看。

注意:

cifar100的下载可能很慢,可以自己下好了,改下代码,直接按路径读取。

from __future__ import print_function

from tensorflow import keras

from tensorflow.keras.datasets import cifar100

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Dropout, Activation, Flatten

from tensorflow.keras.layers import Conv2D, MaxPooling2D

import matplotlib.pyplot as plt

%matplotlib inline

# 下面的代码可以加载CIFAR-100数据集

# The data, shuffled and split between train and test sets:

(x_train, y_train), (x_test, y_test) = cifar100.load_data()

print('x_train shape:', x_train.shape)

print(x_train.shape[0], 'train samples')

print(x_test.shape[0], 'test samples')

## Each image is a 32 x 32 x 3 的字符数组,由于在计算机中,每个图片的像素点颜色都是用0-255范围的数字表示的

## 32 x 32 x 3 数组表示 长度为32,高度为32,深度为3,也就是有三个通道,

## RGB就是记录了黄色,绿色,蓝色的三个通道的记录方法中的一种

x_train[444].shape

x_train[444]

## 展示一下训练前的图片

print(y_train[442])

plt.imshow(x_train[442]);

## 数据集分类为100个

num_classes = 100

## 将整型的类别标签转为onehot编码,onehot编码是一种方便计算机处理的二元编码。

y_train = keras.utils.to_categorical(y_train, num_classes)

y_test = keras.utils.to_categorical(y_test, num_classes)

## 展示下100长度的onehot编码,格式为(000000……10000)0占99个,1占一个

y_train[444]

## 归一化,转化为浮点数

x_train = x_train.astype('float32')

x_test = x_test.astype('float32')

x_train /= 255

x_test /= 255

## 自己构建一个模型

model_1 = Sequential()

## 模型的构建:可以理解为卷积层是提取特征,然后maxPooling是平滑他们的共性

## 5x5 convolution with 2x2 stride and 32 filters

model_1.add(Conv2D(32, (5, 5), strides = (2,2), padding='same',

input_shape=x_train.shape[1:]))

model_1.add(Activation('relu'))

## Another 5x5 convolution with 2x2 stride and 32 filters

model_1.add(Conv2D(32, (5, 5), strides = (2,2)))

model_1.add(Activation('relu'))

## 2x2 max pooling reduces to 3 x 3 x 32

model_1.add(MaxPooling2D(pool_size=(2, 2)))

model_1.add(Dropout(0.25))

## Flatten turns 3x3x32 into 288x1

model_1.add(Flatten())

model_1.add(Dense(512))

model_1.add(Activation('relu'))

model_1.add(Dropout(0.5))

model_1.add(Dense(num_classes))

model_1.add(Activation('softmax'))

model_1.summary()

# 构建并训练你的初始的卷积神经网络,以识别CIFAR-100数据集

# Let's build a CNN using Keras' Sequential capabilities

#创建CNN模型

model_2 = Sequential()

model_2.add(Conv2D(32, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model_2.add(keras.layers.BatchNormalization())

model_2.add(Dropout(0.25))

model_2.add(Conv2D(32, (3, 3), padding='same'))

model_2.add(Activation('relu'))

model_2.add(keras.layers.BatchNormalization())

model_2.add(MaxPooling2D(pool_size=(2, 2)))

## model_2.add(Dropout(0.25))

model_2.add(Conv2D(64, (3, 3), padding='same'))

model_2.add(Activation('relu'))

model_2.add(keras.layers.BatchNormalization())

model_2.add(Dropout(0.3))

model_2.add(Conv2D(64, (3, 3), padding='same'))

model_2.add(Activation('relu'))

model_2.add(keras.layers.BatchNormalization())

model_2.add(MaxPooling2D(pool_size=(2, 2)))

model_2.add(Conv2D(128, (3, 3), padding='same'))

model_2.add(Activation('relu'))

model_2.add(keras.layers.BatchNormalization())

model_2.add(Dropout(0.4))

model_2.add(Conv2D(128, (3, 3), padding='same'))

model_2.add(Activation('relu'))

model_2.add(keras.layers.BatchNormalization())

model_2.add(Dropout(0.4))

model_2.add(Conv2D(128, (3, 3), padding='same'))

model_2.add(Activation('relu'))

model_2.add(keras.layers.BatchNormalization())

model_2.add(MaxPooling2D(pool_size=(2, 2)))

model_2.add(Flatten())

model_2.add(Dense(512))

model_2.add(Activation('relu'))

model_2.add(keras.layers.BatchNormalization())

model_2.add(Dropout(0.5))

model_2.add(Dense(num_classes))

model_2.add(Activation('softmax'))

## Check number of parameters

model_2.summary()

## 设置训练一次的批次块大小,块越大,一般训练会快,但是块太大,内存装不下也不好

batch_size = 128

# initiate RMSprop optimizer

# opt_2 = keras.optimizers.SGD(lr=0.1,decay = 1e-6,momentum=0.9, nesterov=True)

opt = keras.optimizers.RMSprop(lr=0.001, decay=1e-6)

# Let's train the model using RMSprop

model_2.compile(loss='categorical_crossentropy',

optimizer=opt_2,

metrics=['accuracy'])

history = model_2.fit(x_train, y_train,

batch_size=batch_size,

epochs=50,

validation_data=(x_test, y_test),

shuffle=True)

# 绘制训练 & 验证的准确率值

plt.plot(history.history['acc'])

plt.plot(history.history['val_acc'])

plt.title('Model accuracy')

plt.ylabel('Accuracy')

plt.xlabel('Epoch')

plt.legend(['Train', 'Test'], loc='upper left')

plt.show()

# 绘制训练 & 验证的损失值

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('Model loss')

plt.ylabel('Loss')

plt.xlabel('Epoch')

plt.legend(['Train', 'Test'], loc='upper left')

plt.show()

改进方法总结:

准确性提升:30%->60%

- 增加了卷积神经网络的深度和增加了卷积核大小,缩小了卷积视野,使得整个模型大小变大,拟合能力增强

- 学习率变化为适度的学习率,使用变化学习率,递归且衰减

- 增加了训练批次10->50

- 修改了训练优化器,改为了sgd

- 调整batch的归一化,权重衰减和适度的dropout来调整防止过拟合

- 使用了别人设计的网络结构,在此基础上进行简化

还可以从下面的一些方面进行改进:

- 学习率变化为适度的学习率,进行多次实验,找出合适的学习率,

也可以使用变化学习率,通过在训练过程中递减学习率,使得模型能够更好的收敛。 - 从数据集想办法,利用一些增强图像的方法,翻转图像、切割图像、白化图像等方法增加数据量,

由于CIFAR-100的图片分类多,有100类,而图像的数量又少,每张图像只有600张,

数据量如果更大肯定会提高准确性,增加模型的拟合能力和泛化能力 - 适度增加迭代次数,例如跑个50 epoch,如果验证集准确性依然在收敛,则可以继续适当增加迭代次数

- 可以改进模型训练方法,例如调整batch的归一化,权重衰减和适度的dropout来调整防止过拟合。

- 可以改进模型层数,加深网络层数通过加深模型层数增加模型的拟合能力,

- 残差网络(resnet)技术通过解决梯度衰减问题加强了模型的拟合能力

- 修改使用的优化器为合适的优化器

- 使用别人的预训练权重基础上继续训练

- 使用别人设计的网络结构,在此基础上进行优化