Sample input file `wordcount.txt` (read by every program below):

```text
hadoop spark flink
hadoop spark flink
hadoop spark flink
hadoop spark flink
hadoop spark flink
hadoop spark flink
```

1. Code Implementation

```java
package flink.demo;

import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

public class WordCount0 {

    public static void main(String[] args) throws Exception {
        // Create the streaming environment (a local one when started from the IDE)
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        // One parallel instance per operator keeps the printed output in input order
        env.setParallelism(1);

        // Read the input file line by line
        DataStreamSource<String> inputDataStream = env.readTextFile("H:\\flink_demo\\flink_test\\src\\main\\resources\\wordcount.txt");

        // Split each line on spaces and emit (word, 1) for every word
        SingleOutputStreamOperator<Tuple2<String, Integer>> resultDataStream = inputDataStream.flatMap(new FlatMapFunction<String, Tuple2<String, Integer>>() {
            @Override
            public void flatMap(String input, Collector<Tuple2<String, Integer>> collector) throws Exception {
                String[] words = input.split(" ");
                for (String word : words) {
                    collector.collect(new Tuple2<>(word, 1));
                }
            }
        }).keyBy(0)   // group by tuple position 0 (the word); position-based keys are deprecated in later Flink versions
          .sum(1);    // running sum of tuple position 1 (the count)

        resultDataStream.print();

        // Nothing runs until execute() is called
        env.execute();
    }
}
```
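Because this is a DataStream job, `keyBy(0).sum(1)` is a rolling aggregation: every incoming `(word, 1)` updates that word's total and the new total is emitted immediately, so the console shows a growing count per word rather than one final line per word. The plain-Java sketch below has no Flink dependency; the class and method names are illustrative only, and a `HashMap` stands in for Flink's per-key state.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class RollingWordCountSketch {

    // Mimics flatMap -> keyBy(word) -> sum(count): one updated total per incoming word.
    // The HashMap plays the role of Flink's keyed state; this is not Flink code.
    static List<String> rollingCounts(List<String> lines) {
        Map<String, Integer> totals = new HashMap<>();
        List<String> emitted = new ArrayList<>();
        for (String line : lines) {
            for (String word : line.split(" ")) {
                int updated = totals.merge(word, 1, Integer::sum);
                // Formatted like a Tuple2: (word,count)
                emitted.add("(" + word + "," + updated + ")");
            }
        }
        return emitted;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("hadoop spark flink", "hadoop spark flink");
        for (String record : rollingCounts(lines)) {
            System.out.println(record);
        }
    }
}
```

Fed the six sample lines instead of two, the sketch's last three records are `(hadoop,6)`, `(spark,6)` and `(flink,6)`; every earlier record is an intermediate total.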

2. Optimization 1: Model Records as Objects

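- Improvement: treat each record as an object. When records have many fields, referring to them by name (`keyBy("word")`, `sum("count")`) is much easier than tracking tuple positions.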

2.1 Define a custom data type

```java
// A Flink POJO: public class, public no-arg constructor, private fields with getters/setters
public class WordAndCount {

    private String word;
    private int count;

    // Flink needs a public no-argument constructor to instantiate the POJO
    public WordAndCount() {
    }

    public WordAndCount(String word, int count) {
        this.word = word;
        this.count = count;
    }

    public String getWord() {
        return word;
    }

    public void setWord(String word) {
        this.word = word;
    }

    public int getCount() {
        return count;
    }

    public void setCount(int count) {
        this.count = count;
    }

    // print() writes each record using this toString()
    @Override
    public String toString() {
        return "WordAndCount{" +
                "word='" + word + '\'' +
                ", count=" + count +
                '}';
    }
}
```

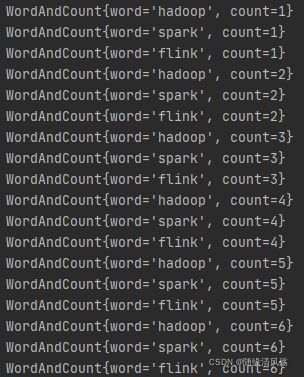

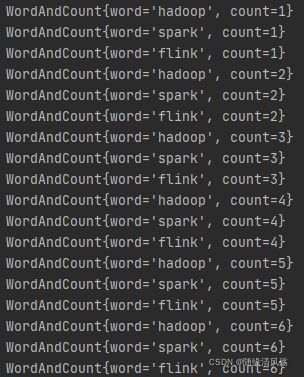
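The no-argument constructor and the getter/setter pairs are not just boilerplate. Flink's documentation requires a POJO type to be a public class (standalone, or a static nested class) with a public no-argument constructor, and every field must be either public or reachable through `getXxx()`/`setXxx()` methods; only then can `keyBy("word")` and `sum("count")` address fields by name. The reflection check below is a simplified, hypothetical helper (`looksLikeFlinkPojo` is not a Flink API) that approximates those documented rules; Flink's own type analysis also checks the field types.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;

public class PojoRuleCheck {

    // Hypothetical helper approximating Flink's documented POJO rules (not Flink's analyzer)
    static boolean looksLikeFlinkPojo(Class<?> clazz) {
        int mods = clazz.getModifiers();
        // The class must be public, and a nested class must be static
        if (!Modifier.isPublic(mods)) return false;
        if (clazz.getEnclosingClass() != null && !Modifier.isStatic(mods)) return false;
        // A public no-argument constructor must exist (getConstructor only finds public ones)
        try {
            clazz.getConstructor();
        } catch (NoSuchMethodException e) {
            return false;
        }
        // Every non-static, non-transient field must be public or have a getter and a setter
        for (Field field : clazz.getDeclaredFields()) {
            int fm = field.getModifiers();
            if (Modifier.isStatic(fm) || Modifier.isTransient(fm) || Modifier.isPublic(fm)) continue;
            String name = field.getName();
            String suffix = Character.toUpperCase(name.charAt(0)) + name.substring(1);
            try {
                clazz.getMethod("get" + suffix);
                clazz.getMethod("set" + suffix, field.getType());
            } catch (NoSuchMethodException e) {
                return false;
            }
        }
        return true;
    }

    // Same shape as the article's WordAndCount (nested only to keep the sketch in one file)
    public static class WordAndCount {
        private String word;
        private int count;
        public WordAndCount() { }
        public WordAndCount(String word, int count) { this.word = word; this.count = count; }
        public String getWord() { return word; }
        public void setWord(String word) { this.word = word; }
        public int getCount() { return count; }
        public void setCount(int count) { this.count = count; }
    }

    // No no-argument constructor, so it would not qualify as a POJO
    public static class NoDefaultConstructor {
        public String word;
        public NoDefaultConstructor(String word) { this.word = word; }
    }

    public static void main(String[] args) {
        System.out.println(looksLikeFlinkPojo(WordAndCount.class));         // true
        System.out.println(looksLikeFlinkPojo(NoDefaultConstructor.class)); // false
    }
}
```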

2.2 Implement the business logic in main

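```java
import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

public class WordCount {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        DataStreamSource<String> inputDataStream = env.readTextFile("H:\\flink_demo\\flink_test\\src\\main\\resources\\wordcount.txt");

        // Emit one WordAndCount object per word instead of a Tuple2
        SingleOutputStreamOperator<WordAndCount> resultData = inputDataStream.flatMap(new FlatMapFunction<String, WordAndCount>() {
            @Override
            public void flatMap(String line, Collector<WordAndCount> out) throws Exception {
                String[] fields = line.split(" ");
                for (String word : fields) {
                    out.collect(new WordAndCount(word, 1));
                }
            }
        }).keyBy("word").sum("count"); // group and aggregate by POJO field name

        resultData.print();

        env.execute();
    }
}
```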
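`keyBy("word").sum("count")` locates the fields by name through the POJO's getters and setters. Flink also accepts an explicit `KeySelector` (for example `keyBy(value -> value.getWord())`), which lets the compiler check the key and is the style later Flink releases favor over field names. The plain-Java sketch below shows the same idea without Flink: a key-extractor function and a value-extractor function drive a per-key running sum. The generic `rollingSum` method is a hypothetical illustration, not a Flink API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.function.ToIntFunction;

public class KeySelectorSketch {

    // Minimal stand-in for the article's WordAndCount POJO (getters only, for brevity)
    public static class WordAndCount {
        private final String word;
        private final int count;

        public WordAndCount(String word, int count) {
            this.word = word;
            this.count = count;
        }

        public String getWord() { return word; }
        public int getCount() { return count; }
    }

    // Hypothetical helper: groups by the extracted key and emits "key=runningTotal" per element,
    // the way keyBy(selector).sum(...) emits an updated total for every record
    static <T, K> List<String> rollingSum(List<T> input, Function<T, K> keyOf, ToIntFunction<T> valueOf) {
        Map<K, Integer> totals = new HashMap<>();
        List<String> emitted = new ArrayList<>();
        for (T element : input) {
            K key = keyOf.apply(element);
            int total = totals.merge(key, valueOf.applyAsInt(element), Integer::sum);
            emitted.add(key + "=" + total);
        }
        return emitted;
    }

    public static void main(String[] args) {
        List<WordAndCount> records = Arrays.asList(
                new WordAndCount("hadoop", 1), new WordAndCount("flink", 1), new WordAndCount("hadoop", 1));
        // Method references stand in for the key selector and for the "count" field name
        System.out.println(rollingSum(records, WordAndCount::getWord, WordAndCount::getCount));
        // prints [hadoop=1, flink=1, hadoop=2]
    }
}
```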

3. Optimization 2: Extract the Business Logic

3.1 Define the custom data type

```java
public class WordAndCount {

    private String word;
    private int count;

    public WordAndCount() {
    }

    public WordAndCount(String word, int count) {
        this.word = word;
        this.count = count;
    }

    public String getWord() {
        return word;
    }

    public void setWord(String word) {
        this.word = word;
    }

    public int getCount() {
        return count;
    }

    public void setCount(int count) {
        this.count = count;
    }

    @Override
    public String toString() {
        return "WordAndCount{" +
                "word='" + word + '\'' +
                ", count=" + count +
                '}';
    }
}
```

3.2 Extract the business logic into a named class

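```java
// Static nested class inside WordCount (the complete file is shown in 5.3)
public static class SplitLine implements FlatMapFunction<String, WordAndCount> {

    // Split a line on spaces and emit WordAndCount(word, 1) for every word
    @Override
    public void flatMap(String line, Collector<WordAndCount> out) throws Exception {
        String[] fields = line.split(" ");
        for (String word : fields) {
            out.collect(new WordAndCount(word, 1));
        }
    }
}
```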

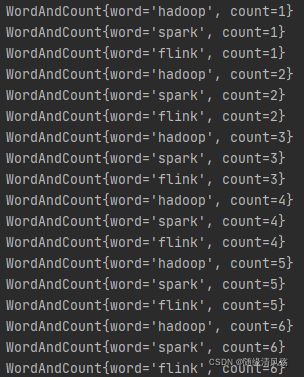
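A practical payoff of moving the logic into the named class `SplitLine` is that it can be exercised in a plain unit test without starting a Flink job. So that the sketch compiles without Flink on the classpath, it declares a hypothetical `Collector` interface mirroring the `collect`/`close` methods of `org.apache.flink.util.Collector`, plus a trimmed-down `WordAndCount`; with Flink available you could instead hand the real `SplitLine` a `ListCollector` from `org.apache.flink.api.common.functions.util`. The checks also expose an edge case of `split(" ")`: a blank line yields one empty word, and two consecutive spaces yield an empty word between them.

```java
import java.util.ArrayList;
import java.util.List;

public class SplitLineTestSketch {

    // Hypothetical stand-in with the same shape as org.apache.flink.util.Collector
    interface Collector<T> {
        void collect(T record);
        void close();
    }

    // Trimmed-down WordAndCount (public fields only) for the sketch
    public static class WordAndCount {
        public final String word;
        public final int count;
        public WordAndCount(String word, int count) { this.word = word; this.count = count; }
    }

    // Same body as the article's SplitLine.flatMap, minus the Flink interface
    public static class SplitLine {
        public void flatMap(String line, Collector<WordAndCount> out) {
            String[] fields = line.split(" ");
            for (String word : fields) {
                out.collect(new WordAndCount(word, 1));
            }
        }
    }

    // Runs SplitLine on one line and returns the words it emitted, in order
    static List<String> wordsEmitted(String line) {
        List<String> words = new ArrayList<>();
        new SplitLine().flatMap(line, new Collector<WordAndCount>() {
            @Override public void collect(WordAndCount record) { words.add(record.word); }
            @Override public void close() { }
        });
        return words;
    }

    public static void main(String[] args) {
        System.out.println(wordsEmitted("hadoop spark flink")); // [hadoop, spark, flink]
        System.out.println(wordsEmitted("").size());            // 1: a blank line yields one empty word
        System.out.println(wordsEmitted("hadoop  flink"));      // [hadoop, , flink]
    }
}
```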

3.3 Implement main

```java
// Inside class WordCount, next to SplitLine
public static void main(String[] args) throws Exception {
    StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
    env.setParallelism(1);

    DataStreamSource<String> inputDataStream = env.readTextFile("H:\\flink_demo\\flink_test\\src\\main\\resources\\wordcount.txt");

    // The anonymous FlatMapFunction is replaced by the reusable SplitLine class
    SingleOutputStreamOperator<WordAndCount> resultData = inputDataStream.flatMap(new SplitLine()).keyBy("word").sum("count");

    resultData.print();

    env.execute();
}
```

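4. Optimization 3: Pass the Data Source as a Parameter

- Improvement: when a program needs input parameters, Flink recommends reading them with its built-in `ParameterTool` utility instead of hard-coding them.

4.1 Define the custom data type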

```java
public class WordAndCount {

    private String word;
    private int count;

    public WordAndCount() {
    }

    public WordAndCount(String word, int count) {
        this.word = word;
        this.count = count;
    }

    public String getWord() {
        return word;
    }

    public void setWord(String word) {
        this.word = word;
    }

    public int getCount() {
        return count;
    }

    public void setCount(int count) {
        this.count = count;
    }

    @Override
    public String toString() {
        return "WordAndCount{" +
                "word='" + word + '\'' +
                ", count=" + count +
                '}';
    }
}
```

4.2 Extract the business logic into a named class

```java
public static class SplitLine implements FlatMapFunction<String, WordAndCount> {

    @Override
    public void flatMap(String line, Collector<WordAndCount> out) throws Exception {
        String[] fields = line.split(" ");
        for (String word : fields) {
            out.collect(new WordAndCount(word, 1));
        }
    }
}
```

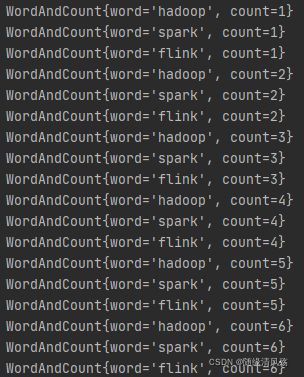

4.3 Pass a custom parameter to main

```java
// Inside class WordCount; requires import org.apache.flink.api.java.utils.ParameterTool;
public static void main(String[] args) throws Exception {
    StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
    env.setParallelism(1);

    // Parse program arguments of the form --path <file>
    ParameterTool parameterTool = ParameterTool.fromArgs(args);
    String path = parameterTool.get("path");

    DataStreamSource<String> dataStream = env.readTextFile(path);

    SingleOutputStreamOperator<WordAndCount> resultData = dataStream.flatMap(new SplitLine()).keyBy("word").sum("count");

    resultData.print();

    env.execute();
}
```
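`ParameterTool.fromArgs(args)` reads arguments written as `--key value` (or `-key value`), so the job above is started with something like `--path H:\flink_demo\flink_test\src\main\resources\wordcount.txt`, and the same jar can then process any input path. `get("path")` returns `null` when the argument is missing, whereas `getRequired("path")` fails fast with an error naming the missing key. The sketch below is a simplified, hypothetical re-implementation in plain Java (`parse` and `required` are not Flink methods), meant only to show the convention; it does not reproduce all of `ParameterTool`'s behavior, and for brevity it treats any token starting with `-` as a key.

```java
import java.util.HashMap;
import java.util.Map;

public class ArgsSketch {

    // Hypothetical, simplified parser for "--key value" / "-key value" pairs
    static Map<String, String> parse(String[] args) {
        Map<String, String> params = new HashMap<>();
        for (int i = 0; i < args.length; i++) {
            String arg = args[i];
            if (!arg.startsWith("-")) {
                throw new IllegalArgumentException("Expected a key starting with '-' but got: " + arg);
            }
            String key = arg.startsWith("--") ? arg.substring(2) : arg.substring(1);
            // Take the next token as the value unless it is another key
            if (i + 1 < args.length && !args[i + 1].startsWith("-")) {
                params.put(key, args[++i]);
            } else {
                params.put(key, null); // key given without a value
            }
        }
        return params;
    }

    // Same intent as ParameterTool.getRequired: fail fast and name the missing key
    static String required(Map<String, String> params, String key) {
        String value = params.get(key);
        if (value == null) {
            throw new IllegalStateException("No value for required key '" + key + "'");
        }
        return value;
    }

    public static void main(String[] args) {
        // Illustrative path only
        String[] demo = {"--path", "/tmp/wordcount.txt"};
        System.out.println(required(parse(demo), "path")); // /tmp/wordcount.txt
    }
}
```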

5. Best-Practice Code for Production

5.1 pom.xml configuration

```xml
<project xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xmlns="http://maven.apache.org/POM/4.0.0"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>org.example</groupId>
    <artifactId>flinkdemo</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <java.version>1.8</java.version>
        <scala.version>2.11</scala.version>
        <flink.version>1.9.3</flink.version>
        <parquet.version>1.10.0</parquet.version>
        <hadoop.version>2.7.3</hadoop.version>
        <fastjson.version>1.2.72</fastjson.version>
        <redis.version>2.9.0</redis.version>
        <mysql.version>5.1.35</mysql.version>
        <log4j.version>1.2.17</log4j.version>
        <slf4j.version>1.7.7</slf4j.version>
        <maven.compiler.source>1.8</maven.compiler.source>
        <maven.compiler.target>1.8</maven.compiler.target>
        <maven.compiler.compilerVersion>1.8</maven.compiler.compilerVersion>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <!-- Scope used by every dependency below; "provided" is common for jars the cluster already supplies (Flink core, Hadoop) -->
        <project.build.scope>compile</project.build.scope>
        <!-- Main class written into the jar manifest; point it at your entry class, e.g. flink.demo.WordCount -->
        <mainClass>com.hainiu.Driver</mainClass>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
            <version>${slf4j.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>log4j</groupId>
            <artifactId>log4j</artifactId>
            <version>${log4j.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>${hadoop.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-hadoop-compatibility_${scala.version}</artifactId>
            <version>${flink.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-java</artifactId>
            <version>${flink.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-streaming-java_${scala.version}</artifactId>
            <version>${flink.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-scala_${scala.version}</artifactId>
            <version>${flink.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-streaming-scala_${scala.version}</artifactId>
            <version>${flink.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-runtime-web_${scala.version}</artifactId>
            <version>${flink.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-statebackend-rocksdb_${scala.version}</artifactId>
            <version>${flink.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-hbase_${scala.version}</artifactId>
            <version>${flink.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-elasticsearch5_${scala.version}</artifactId>
            <version>${flink.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-kafka-0.10_${scala.version}</artifactId>
            <version>${flink.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-filesystem_${scala.version}</artifactId>
            <version>${flink.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>${mysql.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>redis.clients</groupId>
            <artifactId>jedis</artifactId>
            <version>${redis.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.parquet</groupId>
            <artifactId>parquet-avro</artifactId>
            <version>${parquet.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.parquet</groupId>
            <artifactId>parquet-hadoop</artifactId>
            <version>${parquet.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-parquet_${scala.version}</artifactId>
            <version>${flink.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>${fastjson.version}</version>
            <scope>${project.build.scope}</scope>
        </dependency>
    </dependencies>

    <build>
        <resources>
            <resource>
                <directory>src/main/resources</directory>
            </resource>
        </resources>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-assembly-plugin</artifactId>
                <configuration>
                    <descriptors>
                        <descriptor>src/assembly/assembly.xml</descriptor>
                    </descriptors>
                    <archive>
                        <manifest>
                            <mainClass>${mainClass}</mainClass>
                        </manifest>
                    </archive>
                </configuration>
                <executions>
                    <execution>
                        <id>make-assembly</id>
                        <phase>package</phase>
                        <goals>
                            <goal>single</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-surefire-plugin</artifactId>
                <version>2.12</version>
                <configuration>
                    <skip>true</skip>
                    <forkMode>once</forkMode>
                    <excludes>
                        <exclude>**/**</exclude>
                    </excludes>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.1</version>
                <configuration>
                    <source>${java.version}</source>
                    <target>${java.version}</target>
                    <encoding>${project.build.sourceEncoding}</encoding>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>
```

5.2 Define the custom data type

```java
package flink.demo;

public class WordAndCount {

    private String word;
    private int count;

    public WordAndCount() {
    }

    public WordAndCount(String word, int count) {
        this.word = word;
        this.count = count;
    }

    public String getWord() {
        return word;
    }

    public void setWord(String word) {
        this.word = word;
    }

    public int getCount() {
        return count;
    }

    public void setCount(int count) {
        this.count = count;
    }

    @Override
    public String toString() {
        return "WordAndCount{" +
                "word='" + word + '\'' +
                ", count=" + count +
                '}';
    }
}
```

5.3 Entry class

```java
package flink.demo;

import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.api.java.utils.ParameterTool;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

public class WordCount {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // Read the input path from the command line (--path <file>);
        // getRequired() fails fast with a clear message if --path is missing
        ParameterTool parameterTool = ParameterTool.fromArgs(args);
        String path = parameterTool.getRequired("path");

        DataStreamSource<String> dataStream = env.readTextFile(path);

        SingleOutputStreamOperator<WordAndCount> resultData = dataStream
                .flatMap(new SplitLine())
                .keyBy("word")
                .sum("count");

        resultData.print();

        // A job name makes the job easy to identify in the Flink web UI
        env.execute("WordCount");
    }

    // Splits each line on spaces and emits WordAndCount(word, 1) for every word
    public static class SplitLine implements FlatMapFunction<String, WordAndCount> {

        @Override
        public void flatMap(String line, Collector<WordAndCount> out) throws Exception {
            String[] fields = line.split(" ");
            for (String word : fields) {
                out.collect(new WordAndCount(word, 1));
            }
        }
    }
}
```

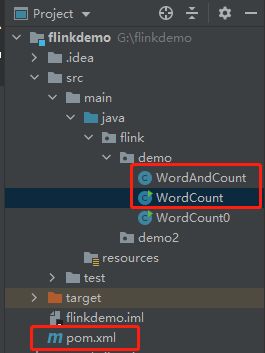

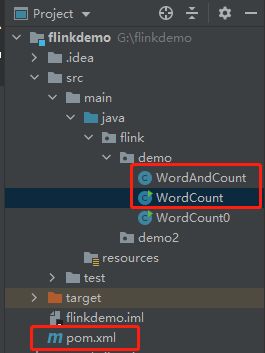

5.4 Project directory structure