Day 38: OpenCV and traditional machine learning

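1. K-means

K-means is one of the simplest clustering methods and is a form of unsupervised learning. It clusters data into a number of classes that is specified in advance, for example dividing the stones on a Go board into two classes by color.

The K-means clustering algorithm consists of the following four steps.

Step 1: Choose the number of clusters K and randomly generate K center points.

Step 2: Go through all the data and assign each sample to a center according to its position relative to the centers (the nearest one).

Step 3: Compute the mean of each cluster and use it as the new center point.

Step 4: Repeat steps 2 and 3 until the coordinates of every cluster center converge, then output the clustering result.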
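The four steps can be sketched in plain C++ without OpenCV. This is only a minimal illustration, not what `kmeans()` does internally: the names `Pt` and `kmeansSketch` are made up for this example, step 1 is left to the caller (who passes in the starting centers), and the `maxIter` and `eps` defaults simply mirror the `TermCriteria` values used in the code that follows.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

struct Pt { float x, y; };  // hypothetical 2D point type for this sketch

// Squared Euclidean distance between two points.
static float dist2(const Pt& a, const Pt& b) {
    const float dx = a.x - b.x, dy = a.y - b.y;
    return dx * dx + dy * dy;
}

// `centers` holds the K starting centers on entry (step 1) and the final
// centers on return. The result is one cluster label per input point.
std::vector<int> kmeansSketch(const std::vector<Pt>& pts, std::vector<Pt>& centers,
                              int maxIter = 10, float eps = 0.1f) {
    assert(!centers.empty());
    std::vector<int> labels(pts.size(), 0);
    for (int it = 0; it < maxIter; ++it) {
        // Step 2: assign each point to its nearest center.
        for (std::size_t i = 0; i < pts.size(); ++i) {
            float best = std::numeric_limits<float>::max();
            for (std::size_t c = 0; c < centers.size(); ++c) {
                const float d = dist2(pts[i], centers[c]);
                if (d < best) { best = d; labels[i] = static_cast<int>(c); }
            }
        }
        // Step 3: move each center to the mean of its members.
        std::vector<Pt> sum(centers.size(), Pt{0.f, 0.f});
        std::vector<int> cnt(centers.size(), 0);
        for (std::size_t i = 0; i < pts.size(); ++i) {
            sum[labels[i]].x += pts[i].x;
            sum[labels[i]].y += pts[i].y;
            ++cnt[labels[i]];
        }
        float maxShift2 = 0.f;
        for (std::size_t c = 0; c < centers.size(); ++c) {
            if (cnt[c] == 0) continue;  // empty cluster: keep the old center
            const Pt next{sum[c].x / cnt[c], sum[c].y / cnt[c]};
            const float s = dist2(next, centers[c]);
            if (s > maxShift2) maxShift2 = s;
            centers[c] = next;
        }
        // Step 4: stop once no center moved by more than eps.
        if (maxShift2 <= eps * eps) break;
    }
    return labels;
}
```

Calling `kmeansSketch(pts, centers)` with two well-separated groups of points and one starting center near each group should return the same label for all points of a group and leave `centers` at the two group means.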

void visionagin::MyKmeanpoints()
{
    // Color lookup table, one color per cluster
    vector<Scalar> color_lut;
    color_lut.push_back(Scalar(0, 0, 255));
    color_lut.push_back(Scalar(0, 255, 0));
    color_lut.push_back(Scalar(255, 0, 0));
    RNG rng(10086);
    Mat img(Size(500, 500), CV_8UC3, Scalar::all(255)); // 500x500 white canvas
    int k_num = 3;
    int pt1_num = rng.uniform(20, 60);
    int pt2_num = rng.uniform(20, 60);
    int pt3_num = rng.uniform(20, 60);
    int pointsnum = pt1_num + pt2_num + pt3_num;
    Mat points(pointsnum, 1, CV_32FC2);
    // Generate pointsnum random points in three separate regions
    int i = 0;
    for (; i < pt1_num; ++i)
    {
        Point2f p;
        p.x = rng.uniform(0, 100);
        p.y = rng.uniform(100, 200);
        points.at<Point2f>(i, 0) = p;
    }
    for (; i < pt1_num + pt2_num; ++i)
    {
        Point2f p;
        p.x = rng.uniform(0, 100);
        p.y = rng.uniform(400, 500);
        points.at<Point2f>(i, 0) = p;
    }
    for (; i < pt1_num + pt2_num + pt3_num; ++i)
    {
        Point2f p;
        p.x = rng.uniform(300, 400);
        p.y = rng.uniform(300, 400);
        points.at<Point2f>(i, 0) = p;
    }
    Mat labels;
    TermCriteria T = TermCriteria(TermCriteria::COUNT | TermCriteria::EPS, 10, 0.1);
    Mat centers;
    // Run k-means clustering
    kmeans(points, k_num, labels, T, 3, KMEANS_PP_CENTERS, centers);
    // Draw every point in the color of its cluster
    for (int j = 0; j < pointsnum; ++j)
    {
        int index = labels.at<int>(j, 0);
        Point2f temp = points.at<Point2f>(j, 0);
        circle(img, temp, 2, color_lut[index], -1);
    }
    // Draw a circle around each cluster center
    for (int m = 0; m < centers.rows; ++m)
    {
        Point2f ce;
        ce.x = centers.at<float>(m, 0);
        ce.y = centers.at<float>(m, 1);
        circle(img, ce, 50, Scalar(0, 200, 0), 1);
    }
    imshow("result", img);
}

K-means clustering can also be used to segment an image based on pixel values. As with clustering coordinate points, the data being clustered during image segmentation is the pixel value of each pixel.

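In the program below, the pixel values are first arranged into the one-sample-per-row layout that the kmeans() function expects, and the number of clusters is then chosen as needed. After clustering, the pixels of each class are drawn in a different color and the result is shown in an image window.

The original image was segmented into 3 classes and into 5 classes with this program. The results show that a suitable number of clusters is an important parameter when segmenting an image with K-means.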
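The index bookkeeping behind this (pixel at row i, column j becomes sample i * cols + j, and each label is mapped back to a color) can be sketched without OpenCV. The type `Pixel` and the functions `toSamples` and `colorize` are hypothetical names for this illustration only, and the image is assumed to be stored row by row.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Pixel = std::array<std::uint8_t, 3>;  // one B, G, R triple

// Turns an image stored row by row into one float sample per pixel, so
// sample i * cols + j corresponds to the pixel at row i, column j.
std::vector<std::array<float, 3>> toSamples(const std::vector<Pixel>& img) {
    std::vector<std::array<float, 3>> samples;
    samples.reserve(img.size());
    for (const Pixel& p : img)
        samples.push_back(std::array<float, 3>{static_cast<float>(p[0]),
                                               static_cast<float>(p[1]),
                                               static_cast<float>(p[2])});
    return samples;
}

// Paints every pixel with the palette color of its cluster label.
std::vector<Pixel> colorize(const std::vector<int>& labels, int rows, int cols,
                            const std::vector<Pixel>& palette) {
    assert(labels.size() == static_cast<std::size_t>(rows) * cols);
    std::vector<Pixel> out(labels.size());
    for (int i = 0; i < rows; ++i)
        for (int j = 0; j < cols; ++j) {
            const std::size_t idx = static_cast<std::size_t>(i) * cols + j;
            // at() throws if a label has no palette entry
            out[idx] = palette.at(labels[idx]);
        }
    return out;
}
```

One practical consequence: the palette needs at least as many colors as there are clusters, otherwise the lookup goes out of range.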

void visionagin::MyKmeanimg()
{
    Mat img = imread("C:\\Users\\86176\\Downloads\\visionimage\\women.jfif");
    if (!img.data)
    {
        cout << "read failed!" << endl;
        return; // nothing to segment
    }
    // Convert the image into the layout kmeans() expects: one pixel per row
    int pointscount = img.rows * img.cols;
    Mat imgchanged = img.reshape(3, pointscount);
    Mat data;
    imgchanged.convertTo(data, CV_32F);
    // Run k-means clustering
    Mat labels;
    int k_num = 3;
    TermCriteria T = TermCriteria(TermCriteria::COUNT | TermCriteria::EPS, 10, 0.1);
    kmeans(data, k_num, labels, T, 3, KMEANS_PP_CENTERS);
    // Color the clustering result; the table needs at least k_num entries
    vector<Vec3b> color_lut;
    color_lut.push_back(Vec3b(0, 0, 255));
    color_lut.push_back(Vec3b(0, 255, 0));
    color_lut.push_back(Vec3b(255, 0, 0));
    color_lut.push_back(Vec3b(255, 255, 0));
    color_lut.push_back(Vec3b(255, 0, 255)); // fifth color so that k_num = 5 also works
    Mat result = Mat::zeros(img.size(), img.type());
    for (int i = 0; i < img.rows; ++i)
    {
        for (int j = 0; j < img.cols; ++j)
        {
            // Pixel (i, j) is sample i * cols + j
            int index = labels.at<int>(i * img.cols + j, 0);
            result.at<Vec3b>(i, j) = color_lut[index];
        }
    }
    imshow("result", result);
}

2. K-nearest neighbors
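Before the OpenCV code, here is a rough sketch of what a k-nearest-neighbors classifier does at prediction time: it finds the k training samples closest to the query and lets them vote. This is a plain C++ illustration under simple assumptions (squared Euclidean distance, ties resolved in favor of the smallest label); `knnPredict` is a hypothetical name and not part of OpenCV.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <utility>
#include <vector>

// Classifies `query` by majority vote among its k nearest training rows.
int knnPredict(const std::vector<std::vector<float>>& train,
               const std::vector<int>& labels,
               const std::vector<float>& query, std::size_t k) {
    assert(train.size() == labels.size() && k >= 1 && k <= train.size());
    // Squared Euclidean distance from the query to every training row.
    std::vector<std::pair<float, int>> dist;  // (distance, label)
    for (std::size_t i = 0; i < train.size(); ++i) {
        assert(train[i].size() == query.size());
        float d = 0.f;
        for (std::size_t c = 0; c < query.size(); ++c) {
            const float diff = train[i][c] - query[c];
            d += diff * diff;
        }
        dist.emplace_back(d, labels[i]);
    }
    // Move the k smallest distances to the front.
    std::partial_sort(dist.begin(), dist.begin() + static_cast<std::ptrdiff_t>(k),
                      dist.end());
    // Count the votes per label among those k neighbors.
    std::map<int, int> votes;
    for (std::size_t i = 0; i < k; ++i) ++votes[dist[i].second];
    int best = dist[0].second, bestVotes = 0;
    for (const auto& v : votes)
        if (v.second > bestVotes) { best = v.first; bestVotes = v.second; }
    return best;
}
```

In this picture, the 5 passed to `setDefaultK` and `findNearest` in the code that follows is the number of neighbors that vote, not a number of samples per class.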

Train on the data and save the model:

void visionagin::Myknearesttrain()
{
    // digits.png holds 50 rows of digits; every 5 rows show the same digit
    Mat img = imread("C:\\Users\\86176\\Downloads\\visionimage\\digits.png");
    Mat gray;
    cvtColor(img, gray, COLOR_BGR2GRAY);
    // Split the image into 5000 samples of 20x20 pixels, one sample per row (400 values)
    Mat image = Mat::zeros(5000, 400, CV_8UC1);
    Mat labels = Mat::zeros(5000, 1, CV_8UC1);
    // Rect marks the row of image that receives each sample
    Rect singleimg;
    singleimg.x = 0;
    singleimg.height = 1;
    singleimg.width = 400;
    int index = 0; // index of the current sample
    for (int i = 0; i < 50; i++)
    {
        int label = i / 5;
        int datay = i * 20;
        for (int j = 0; j < 100; j++)
        {
            int datax = j * 20;
            Mat singlenum = Mat::zeros(20, 20, CV_8UC1);
            // Copy one 20x20 digit
            for (int m = 0; m < 20; m++)
            {
                for (int n = 0; n < 20; n++)
                {
                    singlenum.at<uchar>(m, n) = gray.at<uchar>(datay + m, datax + n);
                }
            }
            // Flatten the digit into a single row
            Mat rowdata = singlenum.reshape(1, 1); // 1x400
            singleimg.y = index;
            cout << "extracting sample " << index + 1 << endl;
            // Store the row in image
            rowdata.copyTo(image(singleimg));
            // Record the label of this sample
            labels.at<uchar>(index, 0) = label;
            index++;
        }
    }
    imwrite("C:\\Users\\86176\\Downloads\\visionimage\\5000幅数字按行排列结果.png", image);
    imwrite("C:\\Users\\86176\\Downloads\\visionimage\\5000幅数字图像标签.png", labels);
    // Convert the data types for training
    image.convertTo(image, CV_32F);
    labels.convertTo(labels, CV_32F);
    // Build the training data set
    Ptr<ml::TrainData> tdata = cv::ml::TrainData::create(image, ml::ROW_SAMPLE, labels);
    // Create the k-nearest-neighbors model
    Ptr<ml::KNearest> knn = ml::KNearest::create();
    knn->setDefaultK(5); // let the 5 nearest neighbors vote
    knn->setIsClassifier(true); // classification rather than regression
    // Train
    knn->train(tdata);
    // Save the trained model
    knn->save("C:\\Users\\86176\\Downloads\\visionimage\\digits_train_model.yml");
    cout << "training finished!" << endl;
}

Load the data and the saved model, then predict:

void visionagin:: Myknearesttest()

{

Mat data = imread("C:\\Users\\86176\\Downloads\\visionimage\\5000幅数字按行排列结果.png",IMREAD_ANYDEPTH);

Mat labels = imread("C:\\Users\\86176\\Downloads\\visionimage\\5000幅数字图像标签.png",IMREAD_ANYDEPTH);

data.convertTo(data, CV_32F);

labels.convertTo(labels, CV_32S);

//加载模型

Ptr knn = Algorithm::load("C:\\Users\\86176\\Downloads\\visionimage\\digits_train_model.yml");

//预测

Mat result;

knn->findNearest(data, 5, result);

//统计预测结果与实际情况相同的数目

int count = 0;

for (int i = 0; i < result.rows; ++i)

{

int predict = result.at(i, 0);

if (predict == labels.at(i, 0))

{

count++;

}

}

float d = static_cast(count )/ result.rows;

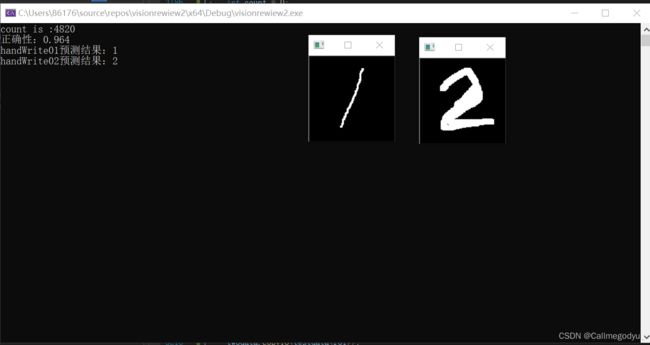

cout << "count is :" << count << endl;

cout << "正确性:" << d << endl;

//实例进行预测

Mat testimg1 = imread("C:\\Users\\86176\\Downloads\\visionimage\\handWrite01.png", IMREAD_ANYDEPTH);

Mat testimg2 = imread("C:\\Users\\86176\\Downloads\\visionimage\\handWrite02.png",IMREAD_ANYDEPTH);

imshow("testimg1", testimg1);

imshow("testimg2", testimg2);

//建立一个存放test数据的矩阵

Mat testdata = Mat::zeros(2, 400, CV_8UC1);

resize(testimg1,testimg1,Size(20,20));

resize(testimg2,testimg2,Size(20, 20));

Rect roi;

roi.x = 0;

roi.height = 1;

roi.width = 400;

roi.y = 0;

Mat onedata=testimg1.reshape(1, 1);

Mat twodata=testimg2.reshape(1, 1);

onedata.copyTo(testdata(roi));

roi.y = 1;

twodata.copyTo(testdata(roi));

testdata.convertTo(testdata, CV_32F);

Mat result2;

knn->findNearest(testdata, 5, result2);

cout << "handWrite01预测结果:" << result2.at(0,0) << endl;

cout << "handWrite02预测结果:" << result2.at(1, 0) << endl;

} 3.决策树:
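As background for the code in this section, here is a rough sketch of how a single node of a classification tree can pick its split: try candidate thresholds on a feature and keep the one that leaves the purest children. It is a plain C++ illustration using Gini impurity, a common choice for CART-style trees, and is not a description of how `ml::DTrees` is implemented. The names `gini` and `bestThreshold` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

// Gini impurity of a set of class labels: 1 minus the sum of squared class shares.
double gini(const std::vector<int>& labels) {
    if (labels.empty()) return 0.0;
    std::map<int, int> counts;
    for (int l : labels) ++counts[l];
    double sumSq = 0.0;
    for (const auto& c : counts) {
        const double p = static_cast<double>(c.second) / labels.size();
        sumSq += p * p;
    }
    return 1.0 - sumSq;
}

// Tries every observed value of one feature as a threshold and returns the one
// whose split (value <= threshold goes left) has the lowest weighted impurity.
float bestThreshold(const std::vector<float>& feature, const std::vector<int>& labels) {
    assert(!feature.empty() && feature.size() == labels.size());
    float best = feature[0];
    double bestScore = 2.0;  // larger than any possible weighted impurity
    const double n = static_cast<double>(labels.size());
    for (float t : feature) {
        std::vector<int> left, right;
        for (std::size_t i = 0; i < feature.size(); ++i)
            (feature[i] <= t ? left : right).push_back(labels[i]);
        const double score = left.size() / n * gini(left) + right.size() / n * gini(right);
        if (score < bestScore) { bestScore = score; best = t; }
    }
    return best;
}
```

A full tree repeats this search over all features at every node and stops growing at some limit, which is the role a setting such as `setMaxDepth(8)` plays.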

void visionagin:: MyDtrees()

{

Mat data = imread("C:\\Users\\86176\\Downloads\\visionimage\\5000幅数字按行排列结果.png", IMREAD_ANYDEPTH);

Mat labels = imread("C:\\Users\\86176\\Downloads\\visionimage\\5000幅数字图像标签.png", IMREAD_ANYDEPTH);

data.convertTo(data, CV_32F);

labels.convertTo(labels, CV_32S);

//构建决策树

Ptr DTmodel = ml::DTrees::create();

DTmodel->setMaxDepth(8);

DTmodel->setCVFolds(0);

//构建训练数据集

Ptr dtraindata = ml::TrainData::create(data, ml::ROW_SAMPLE, labels);

//开始训练

DTmodel->train(dtraindata);

//保存训练模型

DTmodel->save("C:\\Users\\86176\\Downloads\\visionimage\\决策树训练模型.yml");

//测试模型精度

Mat result;

DTmodel->predict(data, result);//进行预测

int count = 0;

for (int i = 0; i < result.rows; ++i)

{

int predict = result.at(i, 0);

if (predict == labels.at(i, 0))

{

count++;

}

}

float rate = 0;

rate = static_cast(count) / result.rows;

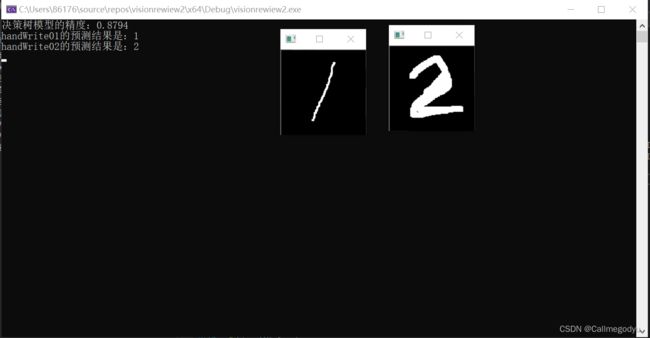

cout << "决策树模型的精度:" << rate << endl;

//实例测试模型

Mat testimg1 = imread("C:\\Users\\86176\\Downloads\\visionimage\\handWrite01.png", IMREAD_ANYDEPTH);

Mat testimg2 = imread("C:\\Users\\86176\\Downloads\\visionimage\\handWrite02.png", IMREAD_ANYDEPTH);

imshow("handWrite01", testimg1);

imshow("handWrite02", testimg2);

//缩小尺寸

resize(testimg1, testimg1, Size(20, 20));

resize(testimg2, testimg2, Size(20, 20));

//构建输入矩阵

Mat testdata = Mat::zeros(2, 400, CV_8UC1);

Rect roi;

roi.x = 0;

roi.y = 0;

roi.height = 1;

roi.width = 400;

Mat onedata = testimg1.reshape(1, 1);

Mat twodata = testimg2.reshape(1, 1);

onedata.copyTo(testdata(roi));

roi.y = 1;

twodata.copyTo(testdata(roi));

testdata.convertTo(testdata,CV_32FC1);

//预测

Mat result2;

DTmodel->predict(testdata, result2);

cout << "handWrite01的预测结果是:" << result2.at(0, 0) << endl;

cout << "handWrite02的预测结果是:" << result2.at(1, 0) << endl;

} 4.随机森林

void visionagin:: MyRtrees()

{

Mat data = imread("C:\\Users\\86176\\Downloads\\visionimage\\5000幅数字按行排列结果.png", IMREAD_ANYDEPTH);

Mat labels = imread("C:\\Users\\86176\\Downloads\\visionimage\\5000幅数字图像标签.png", IMREAD_ANYDEPTH);

data.convertTo(data, CV_32FC1);

labels.convertTo(labels, CV_32SC1);

Ptr rtreemodel = ml::RTrees::create();

rtreemodel->setTermCriteria(TermCriteria(TermCriteria::MAX_ITER + TermCriteria::EPS, 100, 0.1));

//构建训练集

Ptr traindata = ml::TrainData::create(data, ml::ROW_SAMPLE, labels);

rtreemodel->train(traindata);

//训练模型保存

rtreemodel->save("C:\\Users\\86176\\Downloads\\visionimage\\随机数树训练模型.yml");

Mat result;

rtreemodel->predict(data, result);

//计算精度

int count=0;

for (int i = 0; i < result.rows; i++)

{

int predict = result.at(i, 0);

if (predict == labels.at(i, 0))

{

count++;

}

}

double rate = 0;

rate = static_cast(count) / result.rows;

cout << "rtrees的精度:" << rate << endl;

//实例测试随机数模型

Mat testimg1 = imread("C:\\Users\\86176\\Downloads\\visionimage\\handWrite01.png", IMREAD_ANYDEPTH);

Mat testimg2 = imread("C:\\Users\\86176\\Downloads\\visionimage\\handWrite02.png", IMREAD_ANYDEPTH);

imshow("handWrite01", testimg1);

imshow("handWrite02", testimg2);

//变换尺寸

resize(testimg1, testimg1, Size(20, 20));

resize(testimg2, testimg2, Size(20, 20));

//建立输入矩阵

Mat testdata = Mat::zeros(2, 400, CV_8UC1);

Mat onedata = testimg1.reshape(1, 1);

Mat twodata = testimg2.reshape(1, 1);

Rect roi;

roi.x = 0;

roi.y = 0;

roi.height = 1;

roi.width = 400;

onedata.copyTo(testdata(roi));

roi.y = 1;

twodata.copyTo(testdata(roi));

testdata.convertTo(testdata, CV_32F);

//预测

Mat result2;

rtreemodel->predict(testdata, result2);

cout << "handWrite01的预测结果:" << result2.at(0, 0) << endl;

cout << "handWrite02的预测结果:" << result2.at(1, 0) << endl;

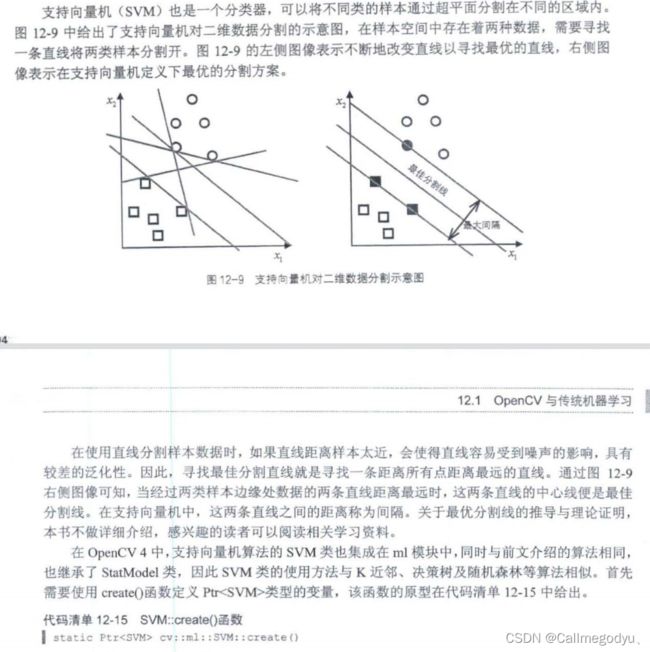

} 5.支持向量机
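The code in this section selects the `ml::SVM::INTER` kernel, which is the histogram intersection kernel: the sum of element-wise minima of two vectors. The sketch below shows that kernel and the general shape of a kernel SVM decision value in plain C++. The names `interKernel` and `decisionValue` are hypothetical, the support vectors, coefficients and bias would come from training, and sign conventions differ between libraries, so treat it as an illustration rather than OpenCV's implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Histogram intersection kernel: sum of element-wise minima.
float interKernel(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size());
    float k = 0.f;
    for (std::size_t i = 0; i < a.size(); ++i) k += std::min(a[i], b[i]);
    return k;
}

// Decision value of a kernel SVM: bias + sum_i coef[i] * K(sv[i], x).
// coef[i] stands for the learned weight of support vector i with the sign of
// its class already folded in; the sign of the result selects the class.
float decisionValue(const std::vector<std::vector<float>>& sv,
                    const std::vector<float>& coef, float bias,
                    const std::vector<float>& x) {
    assert(sv.size() == coef.size());
    float sum = bias;
    for (std::size_t i = 0; i < sv.size(); ++i)
        sum += coef[i] * interKernel(sv[i], x);
    return sum;
}
```

Evaluating such a decision value at every grid position is conceptually what the nested prediction loop does when it colors the canvas by predicted class.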

void visionagin::MySVM()
{
    FileStorage fread("C:\\Users\\86176\\Downloads\\visionimage\\point.yml", FileStorage::READ);
    Mat data, labels;
    fread["data"] >> data;
    fread["labls"] >> labels;
    fread.release();
    vector<Vec3b> color;
    color.push_back(Vec3b(0, 0, 255));
    color.push_back(Vec3b(0, 255, 0));
    Mat img = Mat(Size(600, 600), CV_8UC3, Scalar::all(255));
    Mat img2;
    img.copyTo(img2);
    // Draw the samples; this assumes data is stored as CV_32F and labels as CV_32S
    for (int i = 0; i < labels.rows; ++i)
    {
        Point2f p;
        p.x = data.at<float>(i, 0);
        p.y = data.at<float>(i, 1);
        circle(img, p, 3, color[labels.at<int>(i, 0)], -1);
        circle(img2, p, 3, color[labels.at<int>(i, 0)], -1);
    }
    imshow("original data", img);
    //data.convertTo(data, CV_32FC1);
    //labels.convertTo(labels, CV_32SC1);
    // Build the SVM model
    Ptr<ml::SVM> svmmodel = ml::SVM::create();
    svmmodel->setKernel(ml::SVM::INTER);
    svmmodel->setType(ml::SVM::C_SVC);
    svmmodel->setTermCriteria(TermCriteria(TermCriteria::MAX_ITER + TermCriteria::EPS, 100, 0.01));
    // Build the training data set
    Ptr<ml::TrainData> traindata = ml::TrainData::create(data, ml::ROW_SAMPLE, labels);
    // Train
    svmmodel->train(traindata);
    // Predict the class of every second pixel position on the canvas
    Mat temp = Mat::zeros(1, 2, CV_32FC1);
    for (int i = 0; i < img.rows; i += 2)
    {
        for (int j = 0; j < img.cols; j += 2)
        {
            temp.at<float>(0) = (float)j; // x
            temp.at<float>(1) = (float)i; // y
            // Result for this single sample
            int result = static_cast<int>(svmmodel->predict(temp));
            img2.at<Vec3b>(i, j) = color[result];
        }
    }
    imshow("result", img2);
}