Software Engineering Course Notes -- Reading


- 1.25-Process, Risk and Scheduling

- 1.27-Measurement

- 2.1-Quality Assurance and Testing

- Software Engineering at Google

- 2.3-Test Suite Quality Metrics

- 2.8-Test Inputs, Oracles and Generation

- 2.10-Code Inspection and Review

- 2.15-Dynamic Analysis Tools

- 2.17-Pair Programming and Skill Interviews

- 3.1 Static & Dataflow Analysis (1/2)

- 3.3 Static & Dataflow Analysis (2/2)

- 3.8 Defect Reporting and Triage

What I got, and what should be remembered, from the numerous reading materials in EECS 481: Software Engineering.

1.25-Process, Risk and Scheduling

1.27-Measurement

2.1-Quality Assurance and Testing

Software Engineering at Google

Software Engineering at Google: Section 2.0 - 2.5

2.1. The Source Repository

Most of Google’s code is stored in a single unified source-code repository and is accessible to all software engineers at Google. This leads to higher-quality infrastructure that better meets the needs of those using it.

Development happens at the head of the repository: this helps identify integration problems early and reduces the amount of merging work.

Automated systems run tests after every change, and a “build cop” is responsible for making sure tests keep passing at head (by getting offending changes fixed or rolled back quickly), keeping the build green so development can go on.

Each subtree of the repository has listed owners (at least two). Changes to a subtree must be approved by one of its owners, so every change is reviewed by at least one owner who understands the code.

2.2. The Build System

Blaze is a distributed build system responsible for compiling and linking software and for running tests. It provides standard commands for building and testing software that work across the whole repository.

Build: a “BUILD” file describes how to build the code; it can sometimes be generated automatically.

BUILD files use a high-level declarative build specification: for each entity (such as a library, program, or test) it gives the entity's name, its source files, and the libraries it depends on.

- Individual build steps must be “hermetic”: they depend only on their declared inputs

- Individual build steps are deterministic: build results can therefore be cached in the cloud and reused, and syncing to an old change and rebuilding reproduces the same output. The cache is shared between users.

- The build system is reliable: The build system tracks dependencies on changes to the build rules themselves, and knows to rebuild targets if the action to produce them changed, even if the inputs to that action didn’t

- Incremental rebuilds are fast

- Presubmit checks: automatically running a suite of tests when initiating a code review and/or preparing to commit a change to the repository

2.3. Code Review

- Web-based code review: authors request a review, and reviewers receive an email notification.

- Every change must be reviewed by at least one engineer and approved by at least one owner; the tool automatically suggests reviewers.

- Code review discussions are automatically mailed to the project maintainers.

- Experimental sections of the repository do not require the normal code review.

- Keep each individual change small, so that a reviewer can review it in one go and the author can respond quickly. The code review tool describes the size of each change (e.g. 30-99 added/changed/removed lines is “medium-sized”).

2.4. Testing

- Unit Testing

All code used in production is expected to have unit tests, and the code review tool will highlight if source files are added without corresponding tests. Mocking frameworks are popular: they allow construction of lightweight unit tests even for code with dependencies on heavyweight libraries.

- Integration testing and regression testing

- Load testing prior to deployment

Teams are expected to produce a table or graph showing how key metrics, particularly latency and error rate, vary with the rate of incoming requests.
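The mocking idea from the unit-testing bullet can be sketched in C, where one common way to make a heavyweight dependency replaceable is to pass it in as a function pointer. This is a minimal illustration, not Google's mocking framework; `total_price`, `price_lookup_fn`, and the two fake lookups are hypothetical names.

```c
#include <assert.h>

/* Hypothetical heavyweight dependency: in production this might call a
   remote pricing service. Modeled as a function pointer so tests can swap it. */
typedef int (*price_lookup_fn)(const char *item);

/* Code under test: price of `qty` units of `item`, or -1 if the lookup fails. */
int total_price(price_lookup_fn lookup, const char *item, int qty) {
    int unit = lookup(item);
    if (unit < 0) {
        return -1; /* propagate the dependency's failure */
    }
    return unit * qty;
}

/* Lightweight mock: returns a canned price without touching any service. */
int mock_lookup(const char *item) {
    (void)item;
    return 25;
}

/* Second mock that simulates the dependency failing. */
int failing_lookup(const char *item) {
    (void)item;
    return -1;
}
```

A unit test then calls `total_price(mock_lookup, "widget", 4)` and expects 100, and `total_price(failing_lookup, "widget", 4)` to expect -1, exercising both paths of the logic without the real dependency.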

2.5. Bug tracking

Google uses Buganizer for bug tracking.

Bugs are categorized into hierarchical components and each component can have a default assignee and default email list to CC.

Quality assurance

Definition

a way of preventing mistakes and defects in manufactured products and avoiding problems when delivering products or services to customers

part of quality management focused on providing confidence that quality requirements will be fulfilled

Quality assurance vs. Quality control

Quality control: checks whether the product meets the quality requirements, i.e. it focuses on the output. (The term “control” is also used for the fifth phase of the Define, Measure, Analyze, Improve, Control (DMAIC) model.)

Quality assurance: the systematic measurement, comparison with a standard, and monitoring of processes, with an associated feedback loop that confers error prevention. It focuses on the process in order to ensure product quality, in contrast to quality control, which focuses on process output.

Two Principles

- Fit for purpose

- right first time (mistakes should be eliminated)

Approaches

- Failure testing: the operation of a product until it fails, often under stresses

- Statistical control: based on analyses of objective and subjective data to track quality data

- Total quality management: The quality of products is dependent upon that of the participating constituents,[26] some of which are sustainable and effectively controlled while others are not.

- Models and standards

- Company quality: the company-wide quality approach places an emphasis on four aspects: Elements (controls, job management, adequate processes, performance), Competence (knowledge, skills, experiences, qualifications), Soft elements (personnel integrity, confidence), and Infrastructure. It emphasizes an all-round quality culture.

Software testing

Definition

An investigation conducted to provide stakeholders with information about the quality of the software product or service under test.

Provide:

- an objective & independent view of the software

- the risks of software implementation

software testing typically (but not exclusively) attempts to execute a program or application with the intent of finding failures due to software faults.

In general, the information testing provides indicates the extent to which the component or system under test:

- meets the requirements that guided its design and development,

- responds correctly to all kinds of inputs

- performs its functions within an acceptable time

- is sufficiently usable

- can be installed and run in its intended environments

- achieves the general result its stakeholders desire.

Testing approach

- Static, dynamic, and passive testing:

Reviews, walkthroughs, or inspections are referred to as static testing (verification).

Executing programmed code with a given set of test cases is referred to as dynamic testing (validation).

Passive testing means verifying the system behavior without any interaction with the software product: no test data is provided; instead, testers look at system logs and traces.

- Exploratory approach (black-box testing):

Seeks to find out how the software actually works, and to ask questions about how it will handle difficult and easy cases.

- White-box testing:

Verifies the internal structures or workings of a program, as opposed to the functionality exposed to the end user. An internal perspective of the system (the source code), as well as programming skills, are used to design test cases.

- Black-box testing:

Examines functionality without any knowledge of the internal implementation, without seeing the source code. The testers are only aware of what the software is supposed to do, not how it does it.

Test Levels

- Unit testing

- Integration testing

Verifies the interfaces between components against a software design.

- System testing

Tests a completely integrated system.

- Acceptance testing

1. User acceptance testing

2. Operational acceptance testing

3. Contractual and regulatory acceptance testing

4. Alpha and beta testing

Testing types

- Installation testing

- Compatibility testing

- Smoke and sanity testing

Sanity testing determines whether it is reasonable to proceed with further testing.

Smoke testing consists of minimal attempts to operate the software, designed to determine whether there are any basic problems that will prevent it from working at all.

- Regression testing

Focuses on finding defects after a major code change has occurred, including old bugs that have come back.

- Acceptance testing

1. A smoke test is used as a build acceptance test prior to further testing.

2. Acceptance testing performed by the customer, often in their lab environment on their own hardware.

- Alpha testing

Simulated or actual operational testing by potential users/customers or an independent test team at the developers’ site (internal acceptance testing).

- Beta testing

Versions of the software, known as beta versions, are released to a limited audience outside of the programming team, known as beta testers (external user acceptance testing).
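To illustrate the regression-testing entry: a regression test usually pins down the exact input that triggered a previously fixed bug, so that the bug cannot silently come back after a later change. The function `max_element` and its "old bug" are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Returns the largest element of a[0..n-1]; n must be at least 1.
   Hypothetical old bug: an earlier version started from `best = 0`,
   which returned 0 for arrays whose elements are all negative. */
int max_element(const int *a, size_t n) {
    int best = a[0]; /* the fix: start from the first element, not 0 */
    for (size_t i = 1; i < n; i++) {
        if (a[i] > best) {
            best = a[i];
        }
    }
    return best;
}

/* Regression test for the old bug: an all-negative input.
   Returns 1 while the bug stays fixed. */
int regression_all_negative(void) {
    const int a[] = {-7, -3, -9};
    return max_element(a, 3) == -3;
}
```

Keeping `regression_all_negative` in the suite means any future rewrite that reintroduces the `best = 0` mistake fails immediately.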

2.3-Test Suite Quality Metrics

Code coverage

Test Coverage

A measure used to describe the degree to which the source code of a program is executed when a particular test suite runs.

Basic coverage criteria

- Function coverage – has each function (or subroutine) in the program been called?

- Statement coverage – has each statement in the program been executed?

- Edge coverage – has every edge in the Control flow graph been executed?

- Branch coverage – has each branch of each control structure (such as in if and case statements) been executed?

- Condition coverage (or predicate coverage) – has each Boolean sub-expression evaluated both to true and false?
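The difference between these criteria shows up even in one small function. Below, hand-written probe counters stand in for what a coverage tool such as gcov inserts automatically; `discount`, `hits_true`, `hits_false`, and `branch_covered` are hypothetical names.

```c
#include <assert.h>

/* Hand-inserted probes, roughly what a coverage tool adds automatically. */
int hits_true = 0;  /* times the decision evaluated to true  */
int hits_false = 0; /* times the decision evaluated to false */

/* Hypothetical function: one decision built from two conditions. */
int discount(int is_member, int total) {
    int pct = 0;
    if (is_member && total > 100) {
        hits_true++;
        pct = 10;
    } else {
        hits_false++; /* probe on the otherwise-invisible false edge */
    }
    return pct;
}

/* Branch coverage of this decision is complete once both probes fired. */
int branch_covered(void) {
    return hits_true > 0 && hits_false > 0;
}
```

Without the probes, the single call `discount(1, 200)` would execute every statement (full statement coverage) yet never take the false edge of the `if`; adding `discount(0, 50)` gives branch (and edge) coverage. Condition coverage additionally needs `total > 100` to evaluate to false, and because `&&` short-circuits in C, `discount(0, 50)` never evaluates it, so a call such as `discount(1, 50)` is also needed.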

Modified condition/decision coverage

Extends decision coverage (a combination of function coverage and branch coverage) by requiring that:

- Each entry and exit point is invoked

- Each decision takes every possible outcome

- Each condition in a decision takes every possible outcome

- Each condition in a decision is shown to independently affect the outcome of the decision.
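The last requirement, independent effect, is the distinctive part of MC/DC. The sketch below checks it in the "unique-cause" form: for every condition there must be a pair of tests that differ only in that condition and produce different decision outcomes (other accepted forms, such as masking MC/DC, are more permissive). The decision `(a && b) || c` and all function names are hypothetical.

```c
#include <assert.h>

#define NCOND 3

/* Hypothetical decision under test: (a && b) || c */
int decision(const int c[NCOND]) {
    return (c[0] && c[1]) || c[2];
}

/* Unique-cause check for condition k: is there a pair of tests that differ
   ONLY in condition k and give different decision outcomes? */
int shows_independence(const int tests[][NCOND], int ntests, int k) {
    for (int i = 0; i < ntests; i++) {
        for (int j = i + 1; j < ntests; j++) {
            int only_k_differs = 1;
            for (int m = 0; m < NCOND; m++) {
                int same = (tests[i][m] == tests[j][m]);
                if ((m == k && same) || (m != k && !same)) {
                    only_k_differs = 0;
                    break;
                }
            }
            if (only_k_differs && decision(tests[i]) != decision(tests[j])) {
                return 1;
            }
        }
    }
    return 0;
}

/* The test set meets the requirement if every condition shows independence. */
int satisfies_mcdc(const int tests[][NCOND], int ntests) {
    for (int k = 0; k < NCOND; k++) {
        if (!shows_independence(tests, ntests, k)) {
            return 0;
        }
    }
    return 1;
}
```

For this decision, the four tests (1,1,0), (0,1,0), (1,0,0), (0,1,1) suffice (n + 1 tests for n conditions), whereas (1,1,0), (0,0,0), (0,0,1) fail because no pair isolates `a`.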

Mutation testing

Definition

Mutation testing is used to design new software tests and to evaluate the quality of existing software tests.

Mutation testing involves modifying a program in small ways. Each mutated version is called a mutant. Tests detect and reject mutants by causing the behavior of the original version to differ from the mutant (white-box testing).

Test suites are measured by the percentage of mutants that they kill.

Goals:

- identify weakly tested pieces of code (those for which mutants are not killed)

- identify weak tests (those that never kill mutants)

- compute the mutation score (number of mutants killed / total number of mutants)

- learn about error propagation and state infection in the program
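A tiny example of a mutant being killed (or not). The original function, the mutant (relational operator `<=` replaced with `<`, a classic mutation operator), and the two tests are hypothetical.

```c
#include <assert.h>

/* Original function: is x inside the closed range [lo, hi]? */
int in_range(int x, int lo, int hi) {
    return x >= lo && x <= hi;
}

/* Mutant: `<=` replaced with `<`. */
int in_range_mutant(int x, int lo, int hi) {
    return x >= lo && x < hi;
}

typedef int (*range_fn)(int, int, int);

/* Weak test: passes on both the original and the mutant. */
int weak_test(range_fn f) {
    return f(5, 0, 10) == 1;
}

/* Stronger test: checks the boundary, where the two versions disagree. */
int boundary_test(range_fn f) {
    return f(10, 0, 10) == 1;
}

/* A test kills a mutant if it passes on the original but fails on the mutant. */
int kills(int (*test)(range_fn), range_fn original, range_fn mutant) {
    return test(original) && !test(mutant);
}
```

With only `weak_test`, the mutation score for this single mutant would be 0/1, which flags the missing boundary check; adding `boundary_test` raises it to 1/1.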

2.8-Test Inputs, Oracles and Generation

Test generation

Definition

The process of creating a set of test data or test cases for testing the adequacy of new or revised software applications. The goal is to generate quality test data quickly, efficiently and accurately.

Test data generators

1. Random test data generators

Pro: can generate input for any type of program.

Con: does not generate quality test data, as it does not perform well in terms of coverage. Since the data is generated based solely on probability, it is unlikely to reveal semantically small faults, i.e. faults that are revealed by only a small percentage of the program inputs.

Random Test Data Generation is usually used as a benchmark as it has the lowest acceptable rate of generating test data.
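The weakness against semantically small faults can be sketched with two hypothetical functions that should both return `x / 2`: one is wrong for a single input, the other for every odd input. A tiny xorshift32 generator (an assumption, chosen so runs are reproducible) supplies the random inputs.

```c
#include <assert.h>
#include <stdint.h>

/* Tiny deterministic PRNG (xorshift32); the state must be nonzero. */
uint32_t next_rand(uint32_t *state) {
    uint32_t x = *state;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    *state = x;
    return x;
}

/* Semantically small fault: wrong for exactly one input. */
uint32_t halve_small_fault(uint32_t x) {
    if (x == 123456789u) {
        return 0; /* injected bug */
    }
    return x / 2;
}

/* Semantically large fault: wrong for every odd input. */
uint32_t halve_large_fault(uint32_t x) {
    return (x % 2 == 1) ? x : x / 2; /* injected bug on odd inputs */
}

/* Random testing: feed `trials` random inputs, compare with the oracle
   x / 2, and count how many inputs revealed a failure. */
int random_test(uint32_t (*f)(uint32_t), int trials, uint32_t seed) {
    uint32_t state = seed;
    int failures = 0;
    for (int i = 0; i < trials; i++) {
        uint32_t x = next_rand(&state);
        if (f(x) != x / 2) {
            failures++;
        }
    }
    return failures;
}
```

A run like `random_test(halve_large_fault, 1000, 42u)` reports failures right away, while the chance that 1000 random 32-bit inputs hit the single faulty value of `halve_small_fault` is only about 1000 in 2^32.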

2. Goal-oriented test data generators

The Goal-Oriented approach provides a guidance towards a certain set of paths.

The Test Data Generators in this approach generate an input for any path u. Thus, the generator can find any input for any path p which is a subset of the path u.

- Chaining approach

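The main limitation of the methods above is that only the control flow graph is used to generate the test data. The path-oriented approach, for example, usually has to generate a large number of paths before it finds the “right” path, because path selection is blind. The chaining approach instead decides, during the execution of each branch, whether to continue through this branch or to take an alternative branch because the current branch does not lead to the goal node. In short: while the flow is being built, it decides at each branch whether to keep going forward or switch to a new branch.

- Assertion-oriented approach

In this approach, assertions (that is, constraint conditions) are inserted into the program. The program must satisfy each assertion, otherwise an error is reported. Goal: examine the paths that reach the assertion and check whether it holds on all of them, i.e. find an input that violates it if one exists.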

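```c
#include <assert.h>

void test(int a) {
    int b, c;
    b = a - 1;
    assert(b != 0); /* inserted assertion: the division below needs b != 0 */
    c = (1 / b);
    (void)c;        /* c is unused in this example */
}
```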
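For `test()`, an assertion-oriented generator looks for an input that reaches the assertion and makes it false (here `a == 1`, which makes `b` zero). The brute-force loop below is only a stand-in for that search; real generators use techniques such as the chaining approach or constraint solving. `assertion_holds` and `find_violation` are hypothetical names, and the search range is an assumption.

```c
#include <assert.h>

/* The condition asserted inside test(): b != 0, where b = a - 1. */
int assertion_holds(int a) {
    int b = a - 1;
    return b != 0;
}

/* Naive search over [lo, hi] (hi must be below INT_MAX) for an input that
   violates the assertion. Stores it in *found and returns 1 on success,
   or returns 0 if every input in the range satisfies the assertion. */
int find_violation(int lo, int hi, int *found) {
    for (int a = lo; a <= hi; a++) {
        if (!assertion_holds(a)) {
            *found = a;
            return 1;
        }
    }
    return 0;
}
```

Searching `[-100, 100]` reports `a = 1`; searching `[2, 100]` finds nothing, meaning the assertion holds on every path exercised by that range.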

3. Pathwise test data generators

Instead of offering many paths to choose from, it uses one specific path, chosen from its two inputs: 1. the program under test and 2. a testing criterion (e.g. path coverage, statement coverage, etc.).

Problems of test generation

- Arrays and pointers:

During symbolic execution their values are not known; complicating factors include the index of the array and the structure of the input that needs to be given to the pointer.

- Objects:

It is hard to determine which code will be called at runtime.

- Loops:

Different inputs make loops run a different number of times, which makes predicting the path very difficult.

Fuzzing

The key idea of random text generation, also known as fuzzing, is to feed a string of random characters into a program in the hope of uncovering failures.
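A minimal fuzzing loop, assuming a hypothetical parser `parse_small_number` as the target: random byte strings go in, and a property that must hold for every input is checked on the way out. Production fuzzers such as AFL or libFuzzer add coverage guidance and crash detection; this sketch only shows the basic idea, with an xorshift32 generator assumed for reproducibility.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical function under test: parses `len` ASCII digits into *out.
   Returns 0 on success, -1 on empty input, a non-digit, or a value > 9999. */
int parse_small_number(const unsigned char *s, size_t len, int *out) {
    int value = 0;
    if (len == 0) return -1;
    for (size_t i = 0; i < len; i++) {
        if (s[i] < '0' || s[i] > '9') return -1;
        value = value * 10 + (s[i] - '0');
        if (value > 9999) return -1;
    }
    *out = value;
    return 0;
}

/* Deterministic xorshift32 generator; the state must be nonzero. */
uint32_t fuzz_rand(uint32_t *state) {
    uint32_t x = *state;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    *state = x;
    return x;
}

/* Fuzzing loop: feed random byte strings of length 0..7 and check that every
   successful parse stays within 0..9999. Returns the number of violations. */
int fuzz(int iterations, uint32_t seed) {
    uint32_t state = seed;
    unsigned char buf[8];
    int violations = 0;
    for (int it = 0; it < iterations; it++) {
        size_t len = fuzz_rand(&state) % sizeof buf;
        for (size_t i = 0; i < len; i++) {
            buf[i] = (unsigned char)(fuzz_rand(&state) & 0xFF);
        }
        int value = -1;
        if (parse_small_number(buf, len, &value) == 0 && (value < 0 || value > 9999)) {
            violations++;
        }
    }
    return violations;
}
```

Because this parser rejects values above 9999 before they can grow, `fuzz(10000, 1u)` finds no violations; removing the `value > 9999` check would let longer digit strings through and the property check would start reporting them.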

Pex–White Box Test Generation for .NET

Pex is an automatic white-box test generation tool for .NET

2.10-Code Inspection and Review

2.15-Dynamic Analysis Tools

Dynamic program analysis

Definition

Dynamic program analysis is the analysis of computer software that is performed by executing programs on a real or virtual processor.

Dynamic analysis is in contrast to static program analysis. Unit tests, integration tests, system tests and acceptance tests use dynamic testing.

Types of dynamic analysis

- Code coverage

- Memory error detection

- Fault localization

- Invariant inference

- Security analysis

- Concurrency errors

- Program slicing (reducing the program to the minimum form that still produces the selected behavior)

- Performance analysis

Finding and Reproducing Heisenbugs in Concurrent Programs

Concurrency is the ability of different parts or units of a program, algorithm, or problem to be executed out-of-order or in partial order, without affecting the final outcome.

2.17-Pair Programming and Skill Interviews

Pair programming

Definition

Pair programming is an agile software development technique in which two programmers work together at one workstation. One, the driver, writes code while the other, the observer or navigator, reviews each line of code as it is typed in. The two programmers switch roles frequently.

Observer: “strategic” (the overall direction), acting as a safety net and guide.

Driver: “tactical”: how to do it.

Economics

Increases the required person-hours (higher cost in people and time).

Decreases defects, and brings the other benefits of working in pairs.

Design Quality

more diverse solutions to problems

- different prior experiences

- assess information relevant to the task in different ways

- stand in different relationships to the problem by virtue of their functional roles

It reduces the chances of selecting a poor method.

Learning

- higher confidence

- learn more

- knowledge of the system to spread throughout the whole team

- develop monitoring mechanisms for their own learning activities

Studies

- more design alternatives: more maintainable designs, and catch design defects earlier

- total number of person-hours increases

- Greatest benefit on tasks that the programmers do not fully understand before they begin, and on complex programming tasks.

Pairing variations

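- Expert–expert: highest productivity, but may give little insight into new ways to solve problems, since both are unlikely to question established practices.

- Expert–novice: many opportunities for the expert to mentor the novice; the novice may introduce new ideas, while the expert has to explain established practices. Risk of “watch the master” (the novice passively watches the expert), so the expert needs patience.

- Novice–novice: significantly better than two novices working independently, but generally discouraged, because it is harder for novices to develop good habits without a role model.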

The Costs and Benefits of Pair Programming

Is pair programming really more effective than solo programming? What are the economics? What about the people factor - enjoyment on the job?

The significant benefits of pair programming:

- many mistakes get caught as they are being typed in rather than in QA test or in the field (continuous code reviews), so bugs are fixed earlier;

- the end defect content is statistically lower (continuous code reviews);

- the designs are better and code length shorter (ongoing brainstorming and pair relaying);

- the team solves problems faster (pair relaying);

- the people learn significantly more, about the system and about software development (line-of-sight learning);

- the project ends up with multiple people understanding each piece of the system

- the people learn to work together and talk more often together, giving better information flow and team dynamics;

- people enjoy their work more

The Google Technical Interview

The interview process at Google has been designed to avoid false positives: we want to avoid making offers to candidates who would not be successful at Google.

45-minute interview format:

- A programming problem (with one or more solutions); explain the tradeoffs and benefits of your solution:

1. Clarify the assumptions: “How big could the input be?”, “What happens with bad inputs?” and “How often will we run this?”

2. Talk to the interviewer and explain what you are thinking.

3. Know Big O notation.

4. Know at least one language well.

5. Test your code after writing it, with normal inputs and edge cases.

- Data structures and algorithms

- Overall:

Are you someone they want to work with? Are you someone who would make their team better? Are you someone they want writing code they will use and depend on? Can you think on your feet? Can you explain your ideas to coworkers? Can you write and test code? And are you friendly enough to chat with every day?

- Ask questions:

what it is like to work here, what we love, what we hate, how often we travel

35 minutes of programming problems

5 minutes of questions

5 minutes everything else (an introduction, discussing your resume, and asking questions about your prior work experience)

Once you are in a technical interview, our interviewers will mostly focus on programming problems, not the resume.

Wear something that makes you feel comfortable

Talk out loud!!!

Software Engineering at Google - 4.1 Roles

Roles in Google

- Engineering Manager

Engineering Managers always manage people and are often former Software Engineers. They do not necessarily lead projects; projects are led by a Tech Lead (often a SWE).

- Software Engineer (SWE)

Google has separate career progression sequences for engineering and management.

- Research Scientist

Requires demonstrated exceptional research ability, evidenced by a great publication record, and the ability to write code.

- Site Reliability Engineer

The maintenance of operational systems is done by software engineering teams (SREs), whose hiring also values expertise in other skills such as networking or Unix system internals.

- Product Manager

Product Managers usually do NOT write code themselves, but work with software engineers to ensure that the right code gets written.

- Program Manager / Technical Program Manager

Similar to a Product Manager, but rather than managing a product, they manage projects, processes, or operations (e.g. data collection).

3.1 Static & Dataflow Analysis (1/2)

Static program analysis

Definition

The analysis of computer software that is performed without actually executing programs, in contrast with dynamic analysis, which is analysis performed on programs while they are executing.

It is used, for example, in the verification of properties of software used in safety-critical computer systems and in locating potentially vulnerable code.

Tool Types

- Unit Level: analysis within a specific program or subroutine, without connecting to the context of that program.

- Technology Level: interactions between unit programs, view of the overall program in order to find issues and avoid obvious false positives.

- System Level: interactions between unit programs, but without being limited to one specific technology or programming language.

Formal Methods

Definition: analysis of software whose results are obtained purely through the use of rigorous mathematical methods

Implementation techniques:

- Abstract interpretation: models the effect that every statement has on the state of an abstract machine

- Data-flow analysis: gathers information about the possible set of values at various points in the program

- Hoare logic: a set of logical rules for reasoning rigorously about the correctness of computer programs

- Model checking: considers systems that have finite state or can be reduced to finite state by abstraction

- Symbolic execution: derives mathematical expressions representing the values of mutated variables at particular points in the code
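To make abstract interpretation concrete: instead of running the program on integers, the analyzer runs it on abstract values. A classic toy domain is the sign of an integer. The enum and function names below are hypothetical, and the sketch ignores integer overflow.

```c
#include <assert.h>

/* Abstract domain of signs. BOTTOM = no value yet, TOP = sign unknown. */
typedef enum { SIGN_BOTTOM, SIGN_NEG, SIGN_ZERO, SIGN_POS, SIGN_TOP } Sign;

/* Abstraction function: maps a concrete integer to its sign. */
Sign abstract_of(int x) {
    if (x < 0) return SIGN_NEG;
    if (x == 0) return SIGN_ZERO;
    return SIGN_POS;
}

/* Abstract multiplication (overflow ignored). */
Sign sign_mul(Sign a, Sign b) {
    if (a == SIGN_BOTTOM || b == SIGN_BOTTOM) return SIGN_BOTTOM;
    if (a == SIGN_ZERO || b == SIGN_ZERO) return SIGN_ZERO;
    if (a == SIGN_TOP || b == SIGN_TOP) return SIGN_TOP;
    return (a == b) ? SIGN_POS : SIGN_NEG;
}

/* Abstract addition: mixing a positive and a negative loses precision. */
Sign sign_add(Sign a, Sign b) {
    if (a == SIGN_BOTTOM || b == SIGN_BOTTOM) return SIGN_BOTTOM;
    if (a == SIGN_ZERO) return b;
    if (b == SIGN_ZERO) return a;
    if (a == b) return a; /* NEG+NEG = NEG, POS+POS = POS, TOP+TOP = TOP */
    return SIGN_TOP;      /* mixed signs, or TOP involved: unknown */
}

/* Join (least upper bound), applied where two control-flow paths merge. */
Sign sign_join(Sign a, Sign b) {
    if (a == SIGN_BOTTOM) return b;
    if (b == SIGN_BOTTOM) return a;
    return (a == b) ? a : SIGN_TOP;
}
```

The domain is sound but coarse: for `y = x * x` with `x` unknown, `sign_mul(SIGN_TOP, SIGN_TOP)` gives `SIGN_TOP`, because this lattice has no element for "non-negative". Richer domains (intervals, for example) trade analysis cost for that precision.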

Data-driven static analysis uses large amounts of code to infer coding rules

Experiences Using Static Analysis to Find Bugs

FindBugs, an open source static analysis tool for Java

look for violations of reasonable or recommended programming practice:

- code might dereference a null pointer or overflow an array

- a comparison that can’t possibly be true

- programming style issues

Goal: cheaply identify defects with reasonable confidence.

Conclusion: static analysis CAN find real defects.
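FindBugs analyzes Java bytecode; the snippet below only shows C analogues of the kinds of patterns listed above, the sort of thing a static analyzer reports without running the code. The function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Pattern: a comparison that can never be true. With `unsigned int n`,
   a check such as `if (n < 0)` is always false; GCC's -Wtype-limits
   warning reports this. (Kept as a comment so the snippet compiles cleanly.) */

/* Pattern: possible null-pointer dereference. An analyzer can see that
   strchr may return NULL and that the result is dereferenced unchecked. */
char after_colon_buggy(const char *s) {
    const char *p = strchr(s, ':');
    return p[1]; /* undefined behavior when s contains no ':' */
}

/* Fixed version: handle the NULL case before dereferencing. */
char after_colon(const char *s) {
    const char *p = strchr(s, ':');
    return (p != NULL) ? p[1] : '\0';
}
```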

3.3 Static & Dataflow Analysis (2/2)

Basic block

Definition

Only ONE entry point: no instruction within it, other than the first, is the destination of a jump instruction.

Only ONE exit point: only the last instruction can cause the program to begin executing code in a different basic block

Whenever the first instruction in a basic block is executed, the rest of the instructions are necessarily executed exactly once, in order.

- The instruction in each position dominates, or always executes before, all those in later positions.

- No other instruction executes between two instructions in the sequence.

Successors of a block: the blocks to which control may transfer after the end of the block.

Predecessors of a block: the blocks from which control may come when entering the block.

Creating Algorithm

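Scan all the code and mark block boundaries: instructions that may begin or end a block because they accept or transfer control.

Three-address code: every three-address instruction can be decomposed into a 4-tuple (operator, operand 1, operand 2, result). Because each statement involves three variables (addresses), it is called three-address code. For example, `t1 = b * c` is the tuple (*, b, c, t1).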

Input: A sequence of instructions (mostly three-address code)

Output: A list of basic blocks with each three-address statement in exactly one block.

- Identify the leaders in the code. Three leader categories:

- the first instruction

- the target of goto/jump instruction

- the instruction immediately following a goto/jump instruction

- Starting from a leader, the leader and all following instructions up to, but not including, the next leader form the basic block.
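The leader rules can be sketched over a hypothetical, simplified instruction array in which only control flow is modeled (the operands of the three-address statements are omitted). As an assumption, a `return` also ends a block, matching the end-instruction list that follows.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified instruction kinds: only control flow matters here. */
typedef enum { OP_ASSIGN, OP_GOTO, OP_IF_GOTO, OP_RETURN } OpKind;

typedef struct {
    OpKind kind;
    int target; /* jump target index for OP_GOTO / OP_IF_GOTO, else -1 */
} Instr;

/* Marks leaders: is_leader[i] = 1 if instruction i starts a basic block. */
void find_leaders(const Instr *code, size_t n, int *is_leader) {
    for (size_t i = 0; i < n; i++) is_leader[i] = 0;
    if (n > 0) is_leader[0] = 1;            /* rule 1: first instruction */
    for (size_t i = 0; i < n; i++) {
        OpKind k = code[i].kind;
        if (k == OP_GOTO || k == OP_IF_GOTO) {
            is_leader[code[i].target] = 1;  /* rule 2: target of a jump */
        }
        if (k != OP_ASSIGN && i + 1 < n) {
            is_leader[i + 1] = 1;           /* rule 3: follows a jump (or return) */
        }
    }
}

/* Records the leader of each block in block_start and returns the number of
   blocks; each block runs up to, but not including, the next leader. */
size_t basic_blocks(const Instr *code, size_t n, int *is_leader, size_t *block_start) {
    size_t count = 0;
    find_leaders(code, n, is_leader);
    for (size_t i = 0; i < n; i++) {
        if (is_leader[i]) block_start[count++] = i;
    }
    return count;
}
```

For the program `0: i = 1; 1: t1 = i * 4; 2: i = i + 1; 3: if i <= 10 goto 1; 4: return`, the leaders are 0 (first instruction), 1 (jump target) and 4 (follows a jump), giving the blocks [0], [1..3] and [4].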

End instruction categories:

- branches

- return to a calling procedure

- throw an exception

- function calls that cannot return (exception, EXIT…)

- return instruction itself

Begin instruction categories:

- entry point

- target of jump or branch

- “fall-through” instructions following some conditional branches

- instructions following ones that throw exceptions

- exception handlers

Data-flow analysis

Definition

Data-flow analysis is a technique for gathering information about the possible set of values calculated at various points in a computer program.

Basic principles

Forward flow analysis

$$in_b = \mathrm{join}_{p \in pred_b}(out_p)$$

$$out_b = \mathrm{trans}_b(in_b)$$

join: combines the exit states of predecessors p --> entry state of b

trans: transfer function of b --> exit state of b
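Reaching definitions is a standard forward analysis that fits this join/trans pattern: join is set union over predecessors, and trans is out = gen ∪ (in − kill). The sketch below is hypothetical: it stores up to 32 definitions as bits and uses simple round-robin iteration to a fixed point rather than a worklist.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_PREDS 4

/* CFG node for reaching definitions; each definition is one bit. */
typedef struct {
    int npreds;
    int preds[MAX_PREDS]; /* indices of predecessor blocks */
    uint32_t gen;         /* definitions created in this block */
    uint32_t kill;        /* definitions overwritten by this block */
    uint32_t in, out;     /* entry and exit states (computed) */
} Block;

/* in[b]  = union of out[p] over predecessors p   (join)
   out[b] = gen[b] | (in[b] & ~kill[b])           (trans)
   Iterates over all blocks until no state changes. */
void reaching_definitions(Block *blocks, int n) {
    for (int b = 0; b < n; b++) {
        blocks[b].in = 0;
        blocks[b].out = blocks[b].gen;
    }
    int changed = 1;
    while (changed) {
        changed = 0;
        for (int b = 0; b < n; b++) {
            uint32_t in = 0;
            for (int k = 0; k < blocks[b].npreds; k++) {
                in |= blocks[blocks[b].preds[k]].out; /* join */
            }
            uint32_t out = blocks[b].gen | (in & ~blocks[b].kill); /* trans */
            if (in != blocks[b].in || out != blocks[b].out) {
                blocks[b].in = in;
                blocks[b].out = out;
                changed = 1;
            }
        }
    }
}
```

In a small loop where B0 defines `x` (d0), the loop header B1 redefines it (d1) and has predecessors B0 and B2, B2 jumps back to B1, and B3 exits from B1, the analysis finds that both d0 and d1 reach the entry of B1, but only d1 reaches B3.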

3.8 Defect Reporting and Triage

Wikipedia’s Bug tracking system

Definition

A bug tracking system or defect tracking system is a software application that keeps track of reported software bugs in software development projects. It may be regarded as a type of issue tracking system.

Components of a bug tracking system:

- Database: record facts about known bugs

- Life cycle for a bug
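The "life cycle for a bug" component is essentially a state machine. The states and events below are hypothetical, loosely modeled on common trackers; real systems such as Bugzilla or Jira each define their own workflow.

```c
#include <assert.h>

typedef enum { BUG_NEW, BUG_ASSIGNED, BUG_RESOLVED, BUG_VERIFIED, BUG_CLOSED, BUG_REOPENED } BugState;

typedef enum { EV_ASSIGN, EV_FIX, EV_VERIFY, EV_CLOSE, EV_REOPEN } BugEvent;

/* Returns the next state, or -1 if the event is not allowed in state s. */
int next_state(BugState s, BugEvent e) {
    switch (s) {
    case BUG_NEW:
    case BUG_REOPENED:
        return e == EV_ASSIGN ? BUG_ASSIGNED : -1;
    case BUG_ASSIGNED:
        return e == EV_FIX ? BUG_RESOLVED : -1;
    case BUG_RESOLVED:
        if (e == EV_VERIFY) return BUG_VERIFIED;
        if (e == EV_REOPEN) return BUG_REOPENED; /* fix did not work */
        return -1;
    case BUG_VERIFIED:
        if (e == EV_CLOSE) return BUG_CLOSED;
        if (e == EV_REOPEN) return BUG_REOPENED;
        return -1;
    case BUG_CLOSED:
        return e == EV_REOPEN ? BUG_REOPENED : -1; /* old bug came back */
    }
    return -1;
}
```

Rejecting invalid transitions (for example closing a bug nobody has fixed) is what lets the database give a trustworthy overview of each bug's state.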

Using a bug tracking system:

Provide a clear centralized overview of development requests and their state.

Local bug tracker (LBT):

- used by a team of application support professionals (often a help desk) to keep track of issues communicated to software developers;

- lets the support team track specific information about users' complaints.

Bug and issue tracking systems are often part of integrated project management systems.

Some bug tracking systems integrate with distributed version control.

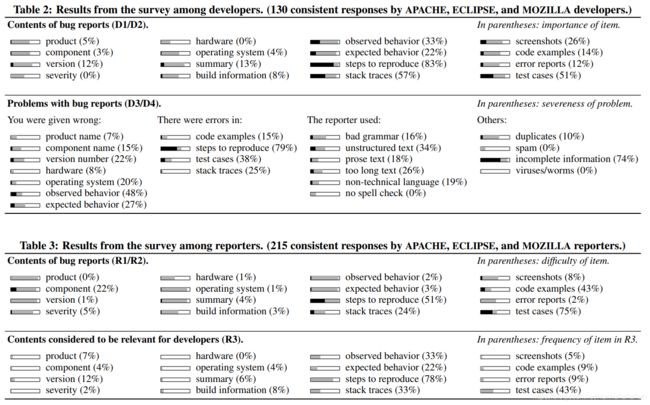

What Makes a Good Bug Report?

- Quality of bug reports -->

a tool to measure the quality of bug reports

- Mismatch between what developers consider most helpful and what users provide -->

a tool to help reporters furnish the information that developers want: it gauges the quality of bug reports and suggests to reporters what should be added to make a bug report better

The most severe problems encountered by developers:

- Errors in steps to reproduce

- Incomplete information

- Wrong observed behavior.

Duplicate bug reports are not considered harmful by developers.