Learning Flink: Window Operations in the Flink Table / SQL API

Flink Window

- I. Table API

- (1) GroupBy Window

- (2) Over Window

- II. SQL

- (1) GroupBy Window

- (2) Over Window

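I. Table API

(1) GroupBy Window

- A GroupBy window works like the windows in the DataStream/DataSet API: it slices the stream into bounded sets according to the window type and then runs the aggregation on each bounded set.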

import org.apache.flink.streaming.api.TimeCharacteristic

import org.apache.flink.api.common.typeinfo.TypeInformation

import org.apache.flink.api.scala.createTypeInformation

import org.apache.flink.api.java.tuple._

import org.apache.flink.api.scala.ExecutionEnvironment

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.table.api.scala._

import org.apache.flink.table.api.{TableEnvironment, Types}

import org.apache.flink.table.sinks.CsvTableSink

import org.apache.flink.table.sources.CsvTableSource

import org.apache.flink.types.Row

case class User(id:Int,name:String,timestamp:Long)

object SqlTest {

def main(args: Array[String]): Unit = {

val streamEnv = StreamExecutionEnvironment.getExecutionEnvironment

val tEnv = TableEnvironment.getTableEnvironment(streamEnv)

// Use event time as the time characteristic

streamEnv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

val stream = streamEnv.fromElements(

User(1,"nie",1511658000),

User(2,"hu",1511658000)

).assignAscendingTimestamps(_.timestamp * 1000L) // seconds to milliseconds; assigns timestamps and ascending watermarks

tEnv.registerDataStream("testTable",stream,'id, 'name, 'event_time.rowtime)

val result = tEnv.scan("testTable")

.window(Tumble over 1.hour on 'event_time as 'test) // window type and time attribute; the window is aliased as test

.groupBy('test) // group by the window

.select('id.sum) // sum the id field

result.toRetractStream[Row].print()

streamEnv.execute("windowTest")

}

}

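- In the Table API, a window is defined with the window() method and must be given an alias with as() so that later operators can refer to it.

- window() must be followed directly by groupBy(), which names the window alias and, optionally, the keys to aggregate by.

- select() then lists the fields to return and the aggregate functions to apply to the window's data.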

1. Tumbling Window

- The tumbling window keyword is Tumble. It can be based on event time (rowtime), processing time (proctime), or row count; each is shown below.

--event_time

// First set the time characteristic to EventTime

// Then mark the event-time field with rowtime when naming the fields

tEnv.registerDataStream("testTable",stream,'id, 'name, 'event_time.rowtime)

// The window's on clause takes the event-time attribute

window(Tumble over 1.hour on 'event_time as 'test)

--process_time

// The time characteristic is processing time (the default)

// Mark the processing-time field with proctime when naming the fields

tEnv.registerDataStream("testTable",stream,'id, 'name, 'process_time.proctime)

// The window's on clause takes the processing-time attribute

window(Tumble over 1.hour on 'process_time as 'test)

--rowcount

// over takes a row count; note that process_time does not determine the window size here

window(Tumble over 100.rows on 'process_time as 'test)
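
To make the time-based case concrete, the following plain-Scala sketch (no Flink dependency) maps a timestamp to the tumbling window that contains it. The names TumblingSketch and windowFor are hypothetical, and aligning windows to multiples of the window size counted from the epoch is an assumption of this model, not a description of Flink's internals.

```scala
// Illustrative model only; TumblingSketch and windowFor are hypothetical names.
object TumblingSketch {

  /** Returns [start, end) of the tumbling window containing tsMillis (assumed non-negative). */
  def windowFor(tsMillis: Long, sizeMillis: Long): (Long, Long) = {
    val start = tsMillis - (tsMillis % sizeMillis) // round down to a multiple of the window size
    (start, start + sizeMillis)
  }

  def main(args: Array[String]): Unit = {
    val oneHour = 60L * 60L * 1000L
    // Sample timestamp from the example above, converted from seconds to milliseconds.
    println(windowFor(1511658000L * 1000L, oneHour))
  }
}
```

Every row whose timestamp falls into the same [start, end) interval is aggregated together, which is why a tumbling window produces one result per window and key.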

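2. Sliding Windows

- The sliding window keyword is Slide. It can also be based on event time (rowtime), processing time (proctime), or row count. The time attribute is specified exactly as for the tumbling window; only the window definition differs. The example below uses event time.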

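--event_time

// First set the time characteristic to EventTime

// Then mark the event-time field with rowtime when naming the fields

tEnv.registerDataStream("testTable",stream,'id, 'name, 'event_time.rowtime)

// The window's on clause takes the event-time attribute

window(Slide over 10.minutes every 5.seconds on 'event_time as 'test) // window length is 10 minutes, evaluated every 5 seconds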
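
Because sliding windows overlap, one row usually belongs to several windows. The plain-Scala sketch below (no Flink dependency) lists the windows that contain a given timestamp. SlidingSketch and windowStarts are hypothetical names, the 10-minute size and 5-minute slide are illustrative values, and the epoch alignment is an assumption of the model.

```scala
// Illustrative model only; SlidingSketch and windowStarts are hypothetical names.
object SlidingSketch {

  /** Start times of all windows [start, start + size) that contain tsMillis (assumed non-negative). */
  def windowStarts(tsMillis: Long, sizeMillis: Long, slideMillis: Long): List[Long] = {
    val lastStart = tsMillis - (tsMillis % slideMillis) // latest window starting at or before ts
    Iterator
      .iterate(lastStart)(_ - slideMillis) // walk back one slide at a time
      .takeWhile(_ > tsMillis - sizeMillis) // keep only windows that still cover ts
      .toList
  }

  def main(args: Array[String]): Unit = {
    val minute = 60L * 1000L
    // With a 10-minute size and a 5-minute slide, every timestamp falls into two windows.
    println(windowStarts(1511658000L * 1000L, 10 * minute, 5 * minute))
  }
}
```

The number of windows per row is roughly size divided by slide, so a very small slide relative to the size multiplies the amount of state and output.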

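3. Session Windows

- The session window keyword is Session. Unlike tumbling and sliding windows, a session window has no fixed length. Windows are split by inactivity: for example, if no data arrives for 10 minutes, the window is closed and the computation is triggered.

- Session windows can only be based on event time or processing time; row count is not supported. The example below uses event time.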

--event_time

// First set the time characteristic to EventTime

// Then mark the event-time field with rowtime when naming the fields

tEnv.registerDataStream("testTable",stream,'id, 'name, 'event_time.rowtime)

// The window's on clause takes the event-time attribute

window(Session withGap 10.minutes on 'event_time as 'test) // session window on event time with a session gap of 10 minutes
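
The gap-based splitting can be modelled in a few lines of plain Scala (no Flink dependency). SessionSketch and sessions are hypothetical names. The sketch treats each timestamp as a window [ts, ts + gap) and merges windows that overlap or touch; the exact behaviour at the boundary is an assumption of this model.

```scala
// Illustrative model only; SessionSketch and sessions are hypothetical names.
object SessionSketch {

  /** Groups timestamps into sessions, returned as (start, end) pairs in ascending order. */
  def sessions(timestamps: Seq[Long], gap: Long): List[(Long, Long)] =
    timestamps.sorted
      .foldLeft(List.empty[(Long, Long)]) {
        // The timestamp arrives before the current session has timed out: extend the session.
        case ((start, end) :: rest, ts) if ts <= end => (start, ts + gap) :: rest
        // Otherwise the gap has elapsed: open a new session.
        case (acc, ts) => (ts, ts + gap) :: acc
      }
      .reverse

  def main(args: Array[String]): Unit = {
    // Timestamps 0 and 5 are within the gap of 10; 30 arrives after the gap and starts a new session.
    println(sessions(Seq(0L, 5L, 30L), gap = 10L))
  }
}
```

A session ends one gap after its last element, so its length depends entirely on how the data arrives.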

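(2) Over Window

An over window is similar in syntax and function to the window functions of standard SQL. It is also a way of aggregating data, but unlike a group window it does not bucket the input rows by window size.

- In the Table API, an over window is also declared in the window() method, but it is not tied to a groupBy operator. select follows directly and lists the fields to return and the aggregates to compute.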

import org.apache.flink.streaming.api.TimeCharacteristic

import org.apache.flink.api.common.typeinfo.TypeInformation

import org.apache.flink.api.scala.createTypeInformation

import org.apache.flink.api.java.tuple._

import org.apache.flink.api.scala.ExecutionEnvironment

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.table.api.scala._

import org.apache.flink.table.api.{TableEnvironment, Types}

import org.apache.flink.table.sinks.CsvTableSink

import org.apache.flink.table.sources.CsvTableSource

import org.apache.flink.types.Row

case class User(id:Int,name:String,age:Int,timestamp:Long)

object SqlTest {

def main(args: Array[String]): Unit = {

val streamEnv = StreamExecutionEnvironment.getExecutionEnvironment

val tEnv = TableEnvironment.getTableEnvironment(streamEnv)

// Use event time as the time characteristic

streamEnv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

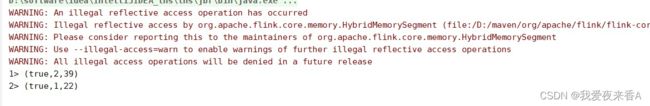

val stream = streamEnv.fromElements(

User(1,"nie",22,1511658000),

User(2,"hu",20,1511658000),

User(2,"xiao",19,1511658000)

).assignAscendingTimestamps(_.timestamp * 1000L) // seconds to milliseconds; assigns timestamps and ascending watermarks

tEnv.registerDataStream("testTable",stream,'id, 'name, 'event_time.rowtime)

val result = tEnv.scan("testTable")

.window(Over partitionBy 'id orderBy 'event_time preceding UNBOUNDED_RANGE as 'test)

.select('name,'id.sum over 'test)

result.toRetractStream[Row].print()

streamEnv.execute("windowTest")

}

}

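- As with a window function in SQL, an over window returns one result for every input row, as if a window were opened for each row, whereas a group aggregate returns one row per group.

- Over window aggregation only supports windows defined with preceding, either unbounded or bounded; windows defined with following are not supported yet.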
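
The "one result per input row" behaviour can be modelled in plain Scala (no Flink dependency). OverSketch, Rec, and runningSum are hypothetical names, and the rows are assumed to arrive already ordered by event time. The sketch accumulates row by row; with a RANGE-based window such as UNBOUNDED_RANGE, rows that share the same timestamp may be treated as peers and receive the same result, so the actual Flink output for such rows can differ from this model.

```scala
// Illustrative model only; OverSketch, Rec and runningSum are hypothetical names.
object OverSketch {

  final case class Rec(id: Int, name: String)

  /** For every input row, emits (name, running sum of id within the row's partition). */
  def runningSum(rows: List[Rec]): List[(String, Int)] = {
    // One running total per partition key (here: id).
    val totals = scala.collection.mutable.Map.empty[Int, Int]
    rows.map { r =>
      val updated = totals.getOrElse(r.id, 0) + r.id
      totals(r.id) = updated
      (r.name, updated) // one output row per input row
    }
  }

  def main(args: Array[String]): Unit =
    println(runningSum(List(Rec(1, "nie"), Rec(2, "hu"), Rec(2, "xiao"))))
}
```

Three input rows yield three output rows, in contrast to a group window, which would emit one row per window and key.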

II. SQL

(1) GroupBy Window

- Like the Table API, Flink SQL supports three window types: Tumble windows, HOP windows, and Session windows. The HOP window corresponds to the sliding window of the Table API.

1. Tumble Window

import org.apache.flink.streaming.api.TimeCharacteristic

import org.apache.flink.api.common.typeinfo.TypeInformation

import org.apache.flink.api.scala.createTypeInformation

import org.apache.flink.api.java.tuple._

import org.apache.flink.api.scala.ExecutionEnvironment

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.table.api.scala._

import org.apache.flink.table.api.{TableEnvironment, Types}

import org.apache.flink.table.sinks.CsvTableSink

import org.apache.flink.table.sources.CsvTableSource

import org.apache.flink.types.Row

case class User(id:Int,name:String,age:Int,timestamp:Long)

object SqlTest {

def main(args: Array[String]): Unit = {

val streamEnv = StreamExecutionEnvironment.getExecutionEnvironment

val tEnv = TableEnvironment.getTableEnvironment(streamEnv)

// Use event time as the time characteristic

streamEnv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

val stream = streamEnv.fromElements(

User(1,"nie",22,1511658000),

User(2,"hu",20,1511658000),

User(2,"xiao",19,1511658000)

).assignAscendingTimestamps(_.timestamp * 1000L) // seconds to milliseconds; assigns timestamps and ascending watermarks

tEnv.registerDataStream("testTable",stream,'id, 'name,'age,'event_time.rowtime)

// A 10-minute tumbling window; the syntax differs slightly from the Table API

val result = tEnv.sqlQuery(

"select id,sum(age) from testTable group by tumble(event_time, INTERVAL '10' MINUTE),id"

)

result.toRetractStream[Row].print()

streamEnv.execute("windowTest")

}

}

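- Watermarks and the time characteristic are set up in much the same way as in the Table API; only the Table API calls such as scan, where, and window are replaced by a SQL statement.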
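
What the query groups by can be modelled in plain Scala (no Flink dependency): each row is keyed by the start of its 10-minute window together with its id, and age is summed per key. GroupWindowSketch and sumAgePerWindow are hypothetical names, and the epoch-aligned window start is an assumption of this model.

```scala
// Illustrative model only; GroupWindowSketch and sumAgePerWindow are hypothetical names.
object GroupWindowSketch {

  final case class User(id: Int, name: String, age: Int, timestamp: Long) // timestamp in seconds

  /** Sums age per (window start in ms, id), mirroring GROUP BY TUMBLE(...), id. */
  def sumAgePerWindow(users: Seq[User], sizeMillis: Long): Map[(Long, Int), Int] =
    users
      .groupBy { u =>
        val ts = u.timestamp * 1000L
        (ts - ts % sizeMillis, u.id) // grouping key: window start plus id
      }
      .map { case (key, group) => key -> group.map(_.age).sum }

  def main(args: Array[String]): Unit = {
    val users = Seq(
      User(1, "nie", 22, 1511658000L),
      User(2, "hu", 20, 1511658000L),
      User(2, "xiao", 19, 1511658000L)
    )
    sumAgePerWindow(users, 10L * 60L * 1000L).foreach(println)
  }
}
```

For the sample data all three rows share one window, so the result has one row per id.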

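2. HOP Window

// Window length is 10 minutes and the window slides every 5 minutes (HOP takes the slide first, then the size); the sample data yields 4 result rows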

val result = tEnv.sqlQuery(

"select id,sum(age) from testTable group by HOP(event_time, INTERVAL '5' MINUTE,INTERVAL '10' MINUTE),id"

)

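3. Session Window

// The session gap is 5 hours: if no data arrives for 5 hours, the window is considered finished and the computation is triggered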

val result = tEnv.sqlQuery(

"select id,sum(age) from testTable group by SESSION(event_time, INTERVAL '5' HOUR),id"

)

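(2) Over Window

- The over window here is the window function of standard SQL with the same syntax, so you write the SQL statement directly; it needs no further explanation.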

import org.apache.flink.streaming.api.TimeCharacteristic

import org.apache.flink.api.common.typeinfo.TypeInformation

import org.apache.flink.api.scala.createTypeInformation

import org.apache.flink.api.java.tuple._

import org.apache.flink.api.scala.ExecutionEnvironment

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.table.api.scala._

import org.apache.flink.table.api.{TableEnvironment, Types}

import org.apache.flink.table.sinks.CsvTableSink

import org.apache.flink.table.sources.CsvTableSource

import org.apache.flink.types.Row

case class User(id:Int,name:String,age:Int,timestamp:Long)

object SqlTest {

def main(args: Array[String]): Unit = {

val streamEnv = StreamExecutionEnvironment.getExecutionEnvironment

val tEnv = TableEnvironment.getTableEnvironment(streamEnv)

// Use event time as the time characteristic

streamEnv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

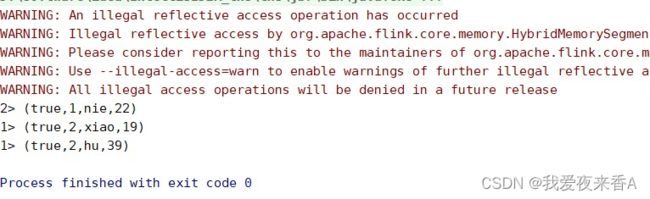

val stream = streamEnv.fromElements(

User(1,"nie",22,1511658000),

User(2,"hu",20,1511658000),

User(2,"xiao",19,1511658000)

).assignAscendingTimestamps(_.timestamp * 1000L) // seconds to milliseconds; assigns timestamps and ascending watermarks

tEnv.registerDataStream("testTable",stream,'id, 'name,'age,'event_time.rowtime)

val result = tEnv.sqlQuery(

"select id,name,sum(age)over(partition by id order by event_time " +

"ROWS BETWEEN 10 PRECEDING AND CURRENT ROW) from testTable"

)

result.toRetractStream[Row].print()

streamEnv.execute("windowTest")

}

}
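
The frame ROWS BETWEEN 10 PRECEDING AND CURRENT ROW used in the query above can be modelled in plain Scala (no Flink dependency). BoundedOverSketch and boundedSum are hypothetical names, and the rows are assumed to arrive already ordered by event time within each partition.

```scala
// Illustrative model only; BoundedOverSketch and boundedSum are hypothetical names.
object BoundedOverSketch {

  final case class User(id: Int, name: String, age: Int)

  /** For each row: sum of age over the current row and up to `preceding` earlier rows with the same id. */
  def boundedSum(rows: List[User], preceding: Int): List[(Int, String, Int)] = {
    // Per partition key, the ages of the rows currently inside the frame.
    val recent = scala.collection.mutable.Map.empty[Int, Vector[Int]]
    rows.map { u =>
      val frame = (recent.getOrElse(u.id, Vector.empty[Int]) :+ u.age).takeRight(preceding + 1)
      recent(u.id) = frame
      (u.id, u.name, frame.sum)
    }
  }

  def main(args: Array[String]): Unit = {
    val rows = List(User(1, "nie", 22), User(2, "hu", 20), User(2, "xiao", 19))
    boundedSum(rows, preceding = 10).foreach(println)
  }
}
```

With only three sample rows the frame of 11 rows is never full, so the result equals a running sum per id; a smaller value of preceding makes the sliding behaviour visible.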