Flink实战4-Flink广播流动态更新MySQL_Source配置信息实现配置化流式处理程序

背景

- 适用于配置化操作流,无需终止流式程序实现配置,并且以广播流的形式在流式程序中使用;

- 实现MySQL_Source配置信息动态定时更新;

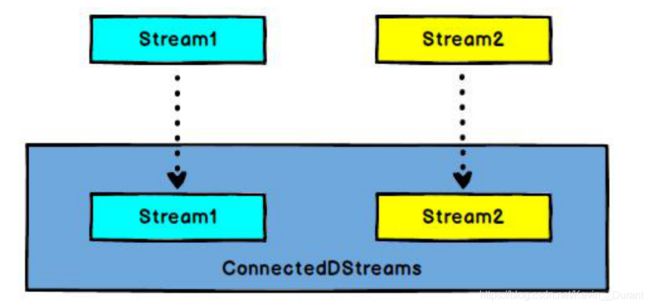

- 实现MySQL_Source广播流,此处使用最常用的keyby广播流KeyedBroadcastProcessFunction;

摘要

关键字

- MySQL_Source、Flink广播流;

设计

-

MyJdbcSource

- 日常创建一个继承源富函数的类;

- 初始化单连接;

- 配置更新时间设置自己可以编写方法应用;

-

配置化Flink广播流;

理解

实现

说明

此处的处理没有写成项目中使用的比较复杂的可配置化的形式,也就是只针对单表测试表的操作;

依赖

<scala.main.version>2.11scala.main.version>

<flink.version>1.10.1flink.version>

<dependency>

<groupId>org.apache.flinkgroupId>

<artifactId>flink-scala_${scala.main.version}artifactId>

<version>${flink.version}version>

dependency>

<dependency>

<groupId>org.apache.flinkgroupId>

<artifactId>flink-streaming-scala_${scala.main.version}artifactId>

<version>${flink.version}version>

dependency>

<dependency>

<groupId>org.apache.flinkgroupId>

<artifactId>flink-connector-kafka_${scala.main.version}artifactId>

<version>1.10.1version>

dependency>

<dependency>

<groupId>org.apache.flinkgroupId>

<artifactId>flink-table-api-scala-bridge_${scala.main.version}artifactId>

<version>${flink.version}version>

dependency>

<dependency>

<groupId>org.apache.flinkgroupId>

<artifactId>flink-table-planner_${scala.main.version}artifactId>

<version>${flink.version}version>

dependency>

<dependency>

<groupId>org.apache.flinkgroupId>

<artifactId>flink-connector-redis_${scala.main.version}artifactId>

<version>${flink.redis.version}version>

dependency>

<dependency>

<groupId>org.apache.flinkgroupId>

<artifactId>flink-connector-elasticsearch6_${scala.main.version}artifactId>

<version>${flink.version}version>

dependency>

<dependency>

<groupId>org.apache.flinkgroupId>

<artifactId>flink-statebackend-rocksdb_2.11artifactId>

<version>1.9.1version>

dependency>

<dependency>

<groupId>org.apache.flinkgroupId>

<artifactId>flink-cep-scala_2.11artifactId>

<version>${flink.version}version>

dependency>

main

import java.util

import com.xx.beans.FlinkMergeDataResultBean

import com.xx.utils.flink.soruce.{MyJdbcSource, MyKafkaSource}

import com.xx.utils.flink.states.ValueStateDemo.MyMapperTableData

import org.apache.flink.api.common.state.{BroadcastState, MapStateDescriptor, ReadOnlyBroadcastState}

import org.apache.flink.api.common.typeinfo.BasicTypeInfo

import org.apache.flink.api.java.typeutils.MapTypeInfo

import org.apache.flink.streaming.api.datastream.BroadcastStream

import org.apache.flink.streaming.api.{CheckpointingMode, TimeCharacteristic}

import org.apache.flink.streaming.api.functions.co.{KeyedBroadcastProcessFunction, KeyedCoProcessFunction}

import org.apache.flink.streaming.api.scala.{BroadcastConnectedStream, DataStream, StreamExecutionEnvironment}

import org.apache.flink.util.Collector

import scala.collection.mutable

/**

* @Author KevinLu

* @Description Flink实现动态MySQL数据配置广播流

* @Copyright 代码类版权的最终解释权归属KevinLu本人所有;

**/

object BroadcastStateStreamDemo {

def main(args: Array[String]): Unit = {

val env = StreamExecutionEnvironment.getExecutionEnvironment

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

/**

* 重启策略配置

*/

/**

* import

*/

import org.apache.flink.api.scala._

/**

* 获取配置MySQL流

*/

val propertiesStream: DataStream[mutable.HashMap[String, String]] = env.addSource(MyJdbcSource)

/**

* * @param name {@code MapStateDescriptor}的名称。

* * @param keyTypeInfo状态下键的类型信息。

* * @param valueTypeInfo状态值的类型信息。

*/

val mapStateDescriptor: MapStateDescriptor[String, util.Map[String, String]] = new MapStateDescriptor("broadCastConfig", BasicTypeInfo.STRING_TYPE_INFO, new MapTypeInfo(classOf[String], classOf[String]))

//拿到配置广播流

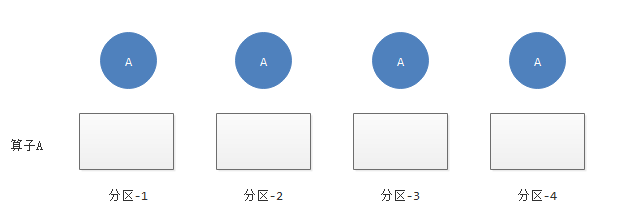

val broadcastStream: BroadcastStream[mutable.HashMap[String, String]] = propertiesStream.setParallelism(1).broadcast(mapStateDescriptor)

//数据流

val dStream1: DataStream[String] = MyKafkaSource.myKafkaSource(

env,

"xxx:9092,xxx:9092,xxx:9092",

List("xxx")

)

val keydStream = dStream1

.map(new MyMapperTableData)

.keyBy(_.key)

val broadcastConnectedStream: BroadcastConnectedStream[FlinkMergeDataResultBean, mutable.HashMap[String, String]] = keydStream.connect(broadcastStream)

/**

* * @param 输入的关键字流的关键类型。KeyedStream 中 key 的类型

* * @param 键控(非广播)端的输入类型。

* * @param 广播端输入类型。

* * @param 操作符的输出类型。

*/

broadcastConnectedStream.process(

new KeyedBroadcastProcessFunction[String, FlinkMergeDataResultBean, mutable.HashMap[String, String], String] {

val mapStateDescriptor : MapStateDescriptor[String, util.Map[String, String]] = new MapStateDescriptor("broadCastConfig", BasicTypeInfo.STRING_TYPE_INFO, new MapTypeInfo(classOf[String], classOf[String]))

/**

* 初始化

*/

private var hashMap: mutable.HashMap[String, String] = mutable.HashMap[String, String]()

/**

* 这个函数处理数据流的数据,这里之只能获取到 ReadOnlyBroadcastState,因为 Flink 不允许在这里修改 BroadcastState 的状态。

* value 是数据流中的一个元素;ctx 是上下文,可以提供计时器服务、当前 key和只读的 BroadcastState;out 是输出流收集器。

*

* @param value

* @param ctx

* @param out

*/

override def processElement(value: FlinkMergeDataResultBean, ctx: KeyedBroadcastProcessFunction[String, FlinkMergeDataResultBean, mutable.HashMap[String, String], String]#ReadOnlyContext, out: Collector[String]): Unit = {

// val broadcastValue: HeapBroadcastState[String, util.Map[String, String]] = ctx.getBroadcastState(mapStateDescriptor).asInstanceOf[HeapBroadcastState[String, util.Map[String, String]]]

println("hashMap",hashMap)

println("value",value)

println("hashMap.get(value.key)",hashMap.get(value.key))

out.collect(hashMap.get(value.key).getOrElse("NU"))

}

/**

* 这里处理广播流的数据,将广播流数据保存到 BroadcastState 中。

* @param value value 是广播流中的一个元素;

* @param ctx ctx 是上下文,提供 BroadcastState 和修改方法;

* @param out out 是输出流收集器。

*/

override def processBroadcastElement(value: mutable.HashMap[String, String], ctx: KeyedBroadcastProcessFunction[String, FlinkMergeDataResultBean, mutable.HashMap[String, String], String]#Context, out: Collector[String]): Unit = {

// val broadcastState: BroadcastState[String, util.Map[String, String]] = ctx.getBroadcastState(mapStateDescriptor)

hashMap = value

}

}

).print("输出结果:")

env.execute()

}

}

Bean

case class FlinkMergeDataResultBean(

key : String ,

data : JSONObject

)

MySQL_Source

import java.sql.Connection

import com.xx.contant.ContantCommon

import com.xx.utils.jdbc.Jdbc

import org.apache.flink.configuration.Configuration

import org.apache.flink.streaming.api.functions.source.{RichSourceFunction, SourceFunction}

import scala.collection.mutable

/**

* Description:xxxx

* Copyright (c) ,20xx , KevinLu

* This program is protected by copyright laws.

*

* @author KevinLu

* @version : 1.0

*/

object MyJdbcSource extends RichSourceFunction[mutable.HashMap[String, String]] {

private var jdbcConn: Connection = null

val url = "jdbc:mysql://xx:3306/xx?user=xx&password=xx&characterEncoding=UTF-8&useSSL=false"

var isRunning = true

/**

* 初始化source

* @param parameters

*/

override def open(parameters: Configuration): Unit = {

println(s"查询MySQL已经建立连接!")

//所有Jdbc单连接方法都可,根据获取情况判定是否使用连接池

jdbcConn = Jdbc.getConnect(url)

}

override def run(ctx: SourceFunction.SourceContext[mutable.HashMap[String, String]]): Unit = {

val sql = s"select name , properties from properties"

/**

* 收到同一个source_table下的多个task_name任务

*/

var mapResult = mutable.HashMap[String, String]()

while(isRunning){

/**

* 收到同一个source_table下的多个task_name任务

*/

val map = mutable.HashMap[String, String]()

try {

val stmt = jdbcConn.createStatement()

val pstmt = stmt.executeQuery(sql)

while (pstmt.next) {

/**

* 双MAP做唯一判断

*/

map.put(pstmt.getString(1), pstmt.getString(2))

}

} catch {

case e: Exception => println(s"${this.getClass.getSimpleName}-查询源数据配置信息异常 → ${e}", e.printStackTrace())

}

mapResult = map

ctx.collect(mapResult)

Thread.sleep(10000)

}

}

/**

* 取消一个job时

*/

// override def cancel(): Unit = {

// if (jdbcConn != null) {

// jdbcConn.close()

// } else {

// println("JDBC连接为空,请注意检查!")

// }

// }

override def close(): Unit = {

jdbcConn.close()

println("mysql连接已经关闭;")

}

override def cancel(): Unit = {

isRunning = false

}

}

Kafka_Source

import java.util.Properties

import com.xxxx.contant.Contant

import org.apache.flink.api.common.serialization.{DeserializationSchema, SimpleStringSchema}

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.connectors.kafka.{FlinkKafkaConsumer}

/**

* @Author KevinLu(鹿)

* @Description ******

* @Date xxx

* @Copyright 代码类版权的最终解释权归属KevinLu本人所有;

**/

object MyKafkaSource {

def myKafkaSource(

env : StreamExecutionEnvironment ,

bootstrapServers : String ,

topics : List[String]

): DataStream[String] ={

/**

* 获取基础参数

*/

import org.apache.flink.api.scala._

import scala.collection.JavaConversions._

/**

* 定义kafka-source得到DataStream

*/

//将kafka中数据反序列化,

val valueDeserializer: DeserializationSchema[String] = new SimpleStringSchema()

val properties = new Properties()

properties.put("bootstrap.servers", bootstrapServers)

val kafkaSinkDStream: DataStream[String] = env.addSource(new FlinkKafkaConsumer[String](topics, valueDeserializer, properties))

kafkaSinkDStream

}

}

Map_Function

/**

* 自定义mapper处理相关数据

* 判断如果是相关宽表中的数据那么分为同一个by中

*/

class MyMapperTableData() extends RichMapFunction[String , FlinkMergeDataResultBean] {

override def map(in: String): FlinkMergeDataResultBean = {

println(s"source data:$in")

val json = JSON.parseObject(in)

FlinkMergeDataResultBean(json.getString("table") , json.getJSONObject("data"))

}

}

部署

#!/bin/bash

flink run -m yarn-cluster \

-c xxx.xxx \

-p 8 \

/Linux根目录

注意事项

- 开发者根据自己需要拆解使用,包含知识点不单一,MySQL_Source、广播流、底层connect过程等;

- 单使用广播流可以使用外部文件、Kafka、Redis等进行测试,两个Kafka流进行测试相对容易上手;

- 需要花费时间深入的点就是广播流的运转模式,不同场景如何切换开发思路;