Python Web Scraping in Practice: SVG-Mapped Text Scraping (Dianping)

1. Introduction to SVG-based scraping

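SVG is a format for describing two-dimensional vector graphics. Shapes are described in XML, so they can be scaled up or down without any loss of quality, which is why SVG is widely used on websites. Dianping uses it for anti-scraping: some characters in the review text are replaced by tags carrying a CSS class, the class sets a background offset into an SVG image full of text, and the scraper therefore has to map each class name to its (x, y) offset and then to the character drawn at that position in the SVG.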

2. What SVG mapping looks like

3. A worked example

Given:

Class name: vhkjj4

Coordinates: (-316px -141px); taking the absolute values gives (316, 141)
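To make the arithmetic concrete, the sketch below turns the coordinates above into a character the same way step ⑦ does: the x offset divided by the glyph width gives the column, and the first SVG text row whose y is greater than or equal to the y offset gives the row. The 14px glyph width comes from the font size assumed in step ⑦; the `rows` dictionary is made-up placeholder data standing in for the rows parsed from a real SVG file.

```python
# Hypothetical SVG rows: y baseline -> characters drawn on that row.
# Real rows come from the downloaded SVG; these ASCII strings are placeholders.
rows = {
    46: "abcdefghijklmnopqrstuvwxyz",
    95: "ABCDEFGHIJKLMNOPQRSTUVWXYZ",
    151: "0123456789abcdefghijklmnop",
}

FONT_SIZE = 14  # assumption: each glyph is 14px wide (font-size:14px)

x, y = 316, 141  # absolute values of the background offset (-316px -141px)

column = x // FONT_SIZE  # 316 // 14 = 22, i.e. the 23rd character of the row
row_y = min(ry for ry in rows if ry >= y)  # first row whose y is >= 141
char = rows[row_y][column]

print(column, row_y, char)
```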

4. Scraping Dianping review data

① Download the page source

Page URL: http://www.dianping.com/shop/130096343/review_all

```python
import re

import requests


def down_data(url, cookie):
    headers = {
        "Cookie": cookie,
        "Referer": "http://www.dianping.com/",
        "User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.121 Safari/537.36"
    }
    ret = requests.get(url, headers=headers).text
    # Save the raw HTML so the later steps can work from the local copy
    with open('dazhong.html', 'w', encoding='utf-8') as f:
        f.write(ret)


url = 'http://www.dianping.com/shop/130096343/review_all'
cookie = ''  # fill in the Cookie value copied from your own browser session
down_data(url, cookie)
```

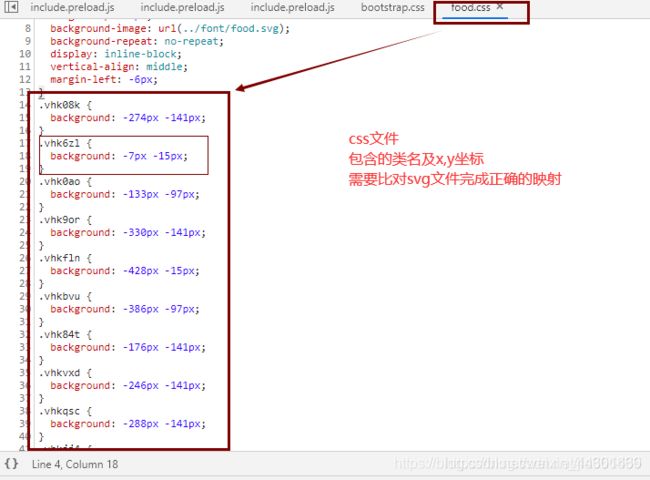

② Download the site's CSS file

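```python
# Continues inside down_data(): ret and headers come from step ①.
# Assumed pattern: the mapping stylesheet's href contains 'svgtextcss';
# adjust it to match the actual <link> tag in the downloaded page.
css_url = re.findall(r'href="(//[^"]*?svgtextcss[^"]*?\.css)"', ret)
css_url = 'https:' + css_url[0]  # the href is protocol-relative (//host/path)
css_response = requests.get(css_url, headers=headers).text
with open('dazhong.css', 'w', encoding='utf-8') as f:
    f.write(css_response)
```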
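The regex lookup above depends on the exact layout of the `<link>` tag. As an alternative (a swapped-in technique, not the author's), the standard library's `html.parser.HTMLParser` can collect stylesheet links regardless of attribute order, and `urllib.parse.urljoin` turns a protocol-relative `//host/path` href into an absolute URL. The `svgtextcss` keyword used to pick out the mapping stylesheet, the helper names, and the sample HTML are assumptions for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical page head; real hosts and paths will differ.
SAMPLE_HTML = """<html><head>
<link rel="stylesheet" href="//static.example.com/site.css">
<link rel="stylesheet" type="text/css" href="//s3plus.example.net/v1/svgtextcss/abc123.css">
</head><body></body></html>"""


class StylesheetLinkParser(HTMLParser):
    """Collects the href of every <link rel="stylesheet"> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr_map = dict(attrs)
        if attr_map.get("rel") == "stylesheet" and attr_map.get("href"):
            self.hrefs.append(attr_map["href"])


def find_css_urls(html, keyword="svgtextcss", base="https://www.dianping.com/"):
    """Return absolute URLs of stylesheets whose href contains `keyword` (assumed marker)."""
    parser = StylesheetLinkParser()
    parser.feed(html)
    # urljoin resolves "//host/path" against the base scheme -> "https://host/path"
    return [urljoin(base, href) for href in parser.hrefs if keyword in href]


print(find_css_urls(SAMPLE_HTML))
```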

③ Find and download every SVG mapping file referenced by the CSS

```python
with open('dazhong.css', encoding='utf-8') as css:
    svg_urls = re.findall(r'.*?\[class\^="(.*?)"\]\{.*?background-image: url\((.*?)\);', css.read())

# Each match is (class prefix, protocol-relative SVG URL); save one SVG file per prefix
for name, svg in svg_urls:
    svg_url = 'https:' + svg
    print(svg_url)
    svg_response = requests.get(svg_url).text
    with open(f'{name}_dazhong.svg', 'w', encoding='utf-8') as f:
        f.write(svg_response)
```
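A slightly stricter version of the regex above, sketched under the assumption that each sprite rule looks like `[class^="be"]{...background-image: url(//...svg);...}`: `[^}]*?` keeps each match inside a single CSS rule, and `urljoin` resolves the protocol-relative URL. The function name `find_svg_urls` and the sample CSS are hypothetical.

```python
import re
from urllib.parse import urljoin

# Matches rules such as [class^="be"]{...background-image: url(//host/x.svg);...}
SVG_RULE = re.compile(r'\[class\^="([\w-]+)"\]\{[^}]*?background-image:\s*url\(([^)]+)\)')

# Hypothetical minified CSS: one offset rule followed by one sprite rule.
SAMPLE_CSS = (
    '.vhkjj4{background:-316.0px -141.0px;}'
    '[class^="be"]{width:14px;height:24px;margin-top:-9px;'
    'background-image: url(//s3plus.example.net/svgtextcss/be.svg);'
    'background-repeat:no-repeat;display:inline-block;vertical-align:middle;}'
)


def find_svg_urls(css_text, base="https://www.dianping.com/"):
    """Return {class prefix: absolute SVG URL} for every sprite rule in the CSS."""
    return {
        prefix: urljoin(base, url.strip('"\''))  # drop optional quotes around the URL
        for prefix, url in SVG_RULE.findall(css_text)
    }


print(find_svg_urls(SAMPLE_CSS))
```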

④ Read the SVG file used by the reviews and parse it into a dictionary

```python
import parsel

with open('be_dazhong.svg', 'r', encoding='utf-8') as f:
    svg_html = f.read()

sel = parsel.Selector(svg_html)
texts = sel.css('textPath')
paths = sel.css('path')

# path id -> y coordinate; d is assumed to look like "M0 38 H600", so token [1] is y
path_dict = {}
for path in paths:
    path_dict[path.css('path::attr(id)').get()] = path.css('path::attr(d)').get().split(' ')[1]
```

⑤ Map each y coordinate to its string

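```python
zpd_svg_dict = {}  # y coordinate -> string drawn on that row
# Assumes the n-th <textPath> is drawn along the <path> whose id is n
for count, text in enumerate(texts, start=1):
    zpd_svg_dict[path_dict[str(count)]] = text.css('textPath::text').get()
```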
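Step ⑤ assumes the n-th `<textPath>` is drawn along the `<path>` with id n. A sketch that instead follows each `<textPath>`'s `xlink:href` to its `<path>`, using the standard library's `xml.etree.ElementTree` in place of parsel, is below. It assumes the SVG layout the code above implies (`<path id="1" d="M0 38 H600"/>` plus `<textPath xlink:href="#1">`); the sample SVG and `build_row_map` are hypothetical. It also sorts the rows by y, because step ⑦ scans the rows in order and takes the first one that fits.

```python
import xml.etree.ElementTree as ET

SVG_NS = "{http://www.w3.org/2000/svg}"
XLINK_HREF = "{http://www.w3.org/1999/xlink}href"

# Hypothetical SVG; the textPaths are deliberately out of order to show that
# matching by href does not depend on document order.
SAMPLE_SVG = """<svg xmlns="http://www.w3.org/2000/svg" xmlns:xlink="http://www.w3.org/1999/xlink">
  <defs>
    <path id="1" d="M0 38 H600"/>
    <path id="2" d="M0 85 H600"/>
  </defs>
  <text lengthAdjust="spacing">
    <textPath xlink:href="#2" textLength="98">hijklmn</textPath>
    <textPath xlink:href="#1" textLength="98">ABCDEFG</textPath>
  </text>
</svg>"""


def build_row_map(svg_text):
    """Return {y: row_text}, sorted by y, by following each textPath's href to its path."""
    root = ET.fromstring(svg_text)
    # path id -> y, assuming d has the form "M0 <y> H<width>"
    path_y = {p.get("id"): int(p.get("d").split()[1]) for p in root.iter(SVG_NS + "path")}
    rows = {}
    for tp in root.iter(SVG_NS + "textPath"):
        href = tp.get(XLINK_HREF) or tp.get("href", "")  # SVG 2 also allows plain href
        rows[path_y[href.lstrip("#")]] = tp.text or ""
    return dict(sorted(rows.items()))


print(build_row_map(SAMPLE_SVG))
```

The keys here are integers, whereas `zpd_svg_dict` in step ⑤ keeps them as strings.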

⑥ Read the class names and x/y coordinates from the CSS

```python
with open('dazhong.css', 'r', encoding='utf-8') as f:
    css_html = f.read()

# Captures (class name, x, y); adjust the pattern to the class rules in your CSS file
css_paths = re.findall(r'\.(.*?)\{background:-(\d+)\.0px -(\d+)\.0px;\}', css_html)
```
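A quick check of the step ⑥ regex on a sample CSS string. This version narrows the class-name group from `(.*?)` to `([\w-]+)`; the sample CSS is made up, but it shows why the narrower group is safer.

```python
import re

# [\w-]+ stops at the first non-name character, so a match cannot span several rules
CLASS_OFFSET = re.compile(r'\.([\w-]+)\{background:-(\d+)\.0px -(\d+)\.0px;\}')
ORIGINAL = r'\.(.*?)\{background:-(\d+)\.0px -(\d+)\.0px;\}'

# Hypothetical minified CSS: an unrelated rule followed by two offset rules
SAMPLE_CSS = (
    '.review-words{font-size:14px;}'
    '.vhkjj4{background:-316.0px -141.0px;}'
    '.ab12cd{background:-14.0px -38.0px;}'
)

css_paths = CLASS_OFFSET.findall(SAMPLE_CSS)
print(css_paths)
print(re.findall(ORIGINAL, SAMPLE_CSS)[0][0])  # what the lazy group captures instead
```

With the original `(.*?)` group, the first match on this sample swallows `review-words{font-size:14px;}.vhkjj4` as the class name, because the lazy group may run past `{` and `}` until a `background` rule finally matches.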

⑦ Convert the CSS classes in the source into the corresponding characters

```python
last_map = {}
for css_name, x, y in css_paths:
    index = int(x) // 14  # font-size:14px, so each glyph is 14px wide
    for row_y in zpd_svg_dict:
        # Take the first row whose y is >= the CSS y offset
        if int(y) <= int(row_y):
            try:
                last_map[css_name] = zpd_svg_dict[row_y][index]
                break
            except IndexError as e:
                print(e)
```
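The nested loop in step ⑦ can also be written with `bisect`, which finds the first row whose y is at least the CSS y offset in O(log n) and skips offsets that fall outside every row instead of printing an `IndexError`. This is an alternative sketch, not the author's code; `build_class_map` and the sample data are hypothetical, and the 14px glyph width is the font size assumed in step ⑦.

```python
import bisect


def build_class_map(css_paths, rows, font_size=14):
    """Map CSS class names to characters.

    css_paths: (class_name, x, y) string tuples, as produced in step ⑥
    rows: {y: row_text} with integer keys, as built from the SVG
    font_size: assumed glyph width in px
    """
    ys = sorted(rows)
    class_map = {}
    for name, x, y in css_paths:
        pos = bisect.bisect_left(ys, int(y))  # index of the first row y >= offset
        if pos == len(ys):
            continue  # offset lies below the last row: no character
        row_text = rows[ys[pos]]
        col = int(x) // font_size
        if col < len(row_text):
            class_map[name] = row_text[col]
    return class_map


# Hypothetical data in the shapes produced by the earlier steps
rows = {
    46: "abcdefghijklmnopqrstuvwxyz",
    95: "ABCDEFGHIJKLMNOPQRSTUVWXYZ",
    151: "0123456789abcdefghijklmnop",
}
css_paths = [("vhkjj4", "316", "141"), ("ab12cd", "14", "38"), ("zz99", "0", "999")]
print(build_class_map(css_paths, rows))
```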

⑧ Replace the classes in the HTML source with the decoded characters

```python
with open('dazhong.html', 'r', encoding='utf-8') as f:
    ret = f.read()

svg_list = re.findall('
```

⑨ Loop over the reviews to get their text

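```python
from lxml import etree

html_tree = etree.HTML(ret)
li_list = html_tree.xpath('//div[@class="reviews-items"]/ul/li')
for li in li_list:
    # text() returns a list of text nodes; join them into one review string
    words = li.xpath('div[@class="main-review"]/div[@class="review-words Hide"]/text()')
    print(''.join(words).strip())
```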
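The replacement in step ⑧ can be sketched with `re.sub` and a callback that looks up each class in the map built in step ⑦. This assumes every obfuscated character appears as an empty `<svgmtsi class="...">` tag; that tag name is an assumption, so check the page source you downloaded. `replace_svg_tags` is a hypothetical helper, and classes missing from the map are left untouched.

```python
import re

# Assumption: obfuscated characters look like <svgmtsi class="vhkjj4"></svgmtsi>
SVG_TAG = re.compile(r'<svgmtsi class="([\w-]+)"></svgmtsi>')


def replace_svg_tags(html, class_map):
    """Replace each obfuscated tag with its decoded character; unknown classes are kept."""
    return SVG_TAG.sub(lambda m: class_map.get(m.group(1), m.group(0)), html)


sample = ('<div class="review-words">Good <svgmtsi class="vhkjj4"></svgmtsi>ood and '
          '<svgmtsi class="unknown1"></svgmtsi></div>')
print(replace_svg_tags(sample, {"vhkjj4": "f"}))
```

The returned HTML can then be parsed with `etree.HTML` as in step ⑨.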

5. Complete Python source code

```python
import re

import parsel
import requests
from lxml import etree


def down_data(url, cookie):
    """Download the review page, its mapping CSS, and every SVG the CSS references."""
    headers = {
        "Cookie": cookie,
        "Referer": "http://www.dianping.com/",
        "User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.121 Safari/537.36"
    }
    ret = requests.get(url, headers=headers).text
    with open('dazhong.html', 'w', encoding='utf-8') as f:
        f.write(ret)

    # Assumed pattern: the mapping stylesheet's href contains 'svgtextcss';
    # adjust it to match the actual <link> tag in the downloaded page.
    css_url = re.findall(r'href="(//[^"]*?svgtextcss[^"]*?\.css)"', ret)
    css_url = 'https:' + css_url[0]
    css_response = requests.get(css_url, headers=headers).text
    with open('dazhong.css', 'w', encoding='utf-8') as f:
        f.write(css_response)

    with open('dazhong.css', encoding='utf-8') as css:
        svg_urls = re.findall(r'.*?\[class\^="(.*?)"\]\{.*?background-image: url\((.*?)\);', css.read())
    print(svg_urls)

    for name, svg in svg_urls:
        svg_url = 'https:' + svg
        print(svg_url)
        svg_response = requests.get(svg_url).text
        with open(f'{name}_dazhong.svg', 'w', encoding='utf-8') as f:
            f.write(svg_response)


def crack_data():
    """Build a {css_class: character} map from the saved SVG and CSS files."""
    with open('be_dazhong.svg', 'r', encoding='utf-8') as f:
        svg_html = f.read()
    # with open('gs_dazhong.svg', 'r', encoding='utf-8') as f:
    #     svg_html += f.read()
    # with open('rq_dazhong.svg', 'r', encoding='utf-8') as f:
    #     svg_html += f.read()

    sel = parsel.Selector(svg_html)
    texts = sel.css('textPath')
    paths = sel.css('path')

    # path id -> y coordinate; d is assumed to look like "M0 38 H600"
    path_dict = {}
    for path in paths:
        path_dict[path.css('path::attr(id)').get()] = path.css('path::attr(d)').get().split(' ')[1]
    print(path_dict)

    # y coordinate -> string on that row (assumes textPath n uses path id n)
    zpd_svg_dict = {}
    for count, text in enumerate(texts, start=1):
        zpd_svg_dict[path_dict[str(count)]] = text.css('textPath::text').get()
    print('zpd_svg_dict', zpd_svg_dict)

    with open('dazhong.css', 'r', encoding='utf-8') as f:
        css_html = f.read()
    # Adjust this pattern to match the class rules in your CSS file
    css_paths = re.findall(r'\.(.*?)\{background:-(\d+)\.0px -(\d+)\.0px;\}', css_html)
    print(css_paths)

    last_map = {}
    for css_name, x, y in css_paths:
        index = int(x) // 14  # font-size:14px, so each glyph is 14px wide
        for row_y in zpd_svg_dict:
            # Take the first row whose y is >= the CSS y offset
            if int(y) <= int(row_y):
                try:
                    last_map[css_name] = zpd_svg_dict[row_y][index]
                    break
                except IndexError as e:
                    print(e)
    return last_map


def decode_html(last_map):
    with open('dazhong.html', 'r', encoding='utf-8') as f:
        ret = f.read()
    svg_list = re.findall('
```