Python Final Project: Building a Multithreaded Web Crawler System

The implementation must cover the following techniques:

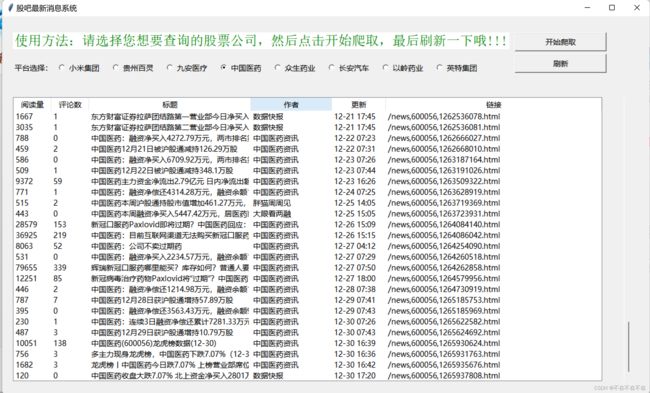

① GUI -> tkinter

② Multithreading -> threading.Thread

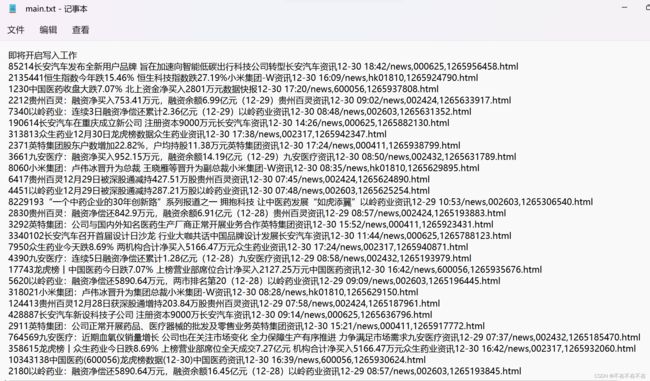

③ File reading and writing -> write / read

④ Database programming -> pymysql

⑤ Web crawling -> fetching HTML

⑥ Exception handling -> try / except

⑦ Decorators -> @function

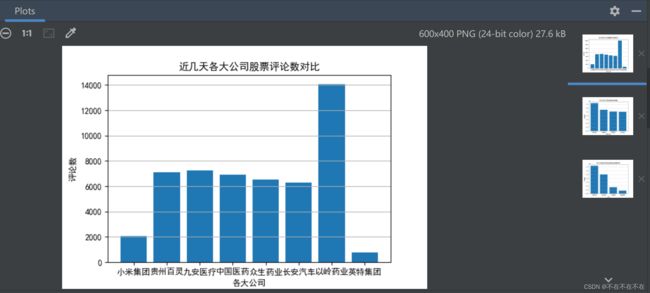

⑧ Plotting with matplotlib -> plt
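Several of the items above (decorators, threads, a shared lock, try/except) fit together in a few lines. The following is only a minimal sketch and not the project code: `fetch` and `run_all` are hypothetical names, and the "crawl" is a short sleep so the example runs without a network or a database.

```python
import threading
import time
from functools import wraps


def show_time(func):
    """Decorator: print the wall-clock time func took and pass its result through."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            print("spend_time:", time.perf_counter() - start)
    return wrapper


results = []
lock = threading.Lock()  # protects `results`, which every thread appends to


def fetch(name):
    """Hypothetical stand-in for one crawl job (no real request is made)."""
    try:
        time.sleep(0.01)
        row = f"{name}: ok"
    except Exception as exc:  # a real crawler would catch request errors here
        row = f"{name}: failed ({exc})"
    with lock:
        results.append(row)


@show_time
def run_all(names):
    workers = [threading.Thread(target=fetch, args=(n,)) for n in names]
    for w in workers:
        w.start()
    for w in workers:
        w.join()  # wait for every thread before reporting
    return len(results)


count = run_all(["a", "b", "c"])
```

The timer here is `time.perf_counter()` because threads spend most of their time waiting, and a CPU-time clock would not count that.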

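This final project was written by a complete Python beginner, so the code has plenty of redundancy; experts, please go easy on me. The post is only meant as a reference for readers who don't know Python yet, and I believe you can finish a final project like this in two days. It took me four afternoons (I slept through the mornings), so a little over two days in total. I was busy having fun, so both the interface and the code are ugly. Feel free to borrow from it and make it better.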
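As one possible cleanup along those lines, the company names and stock codes that spider.py keeps in two parallel lists (`code` and `codes`) could live in a single mapping, so they cannot drift out of sync. This is just a sketch: `COMPANIES` and `build_urls` are hypothetical names that do not appear in the project, and the URL pattern is copied from `p()` in spider.py.

```python
# Company name -> Eastmoney stock code, paired by position as in spider.py.
COMPANIES = {
    "小米集团": "hk01810",
    "贵州百灵": "002424",
    "九安医疗": "002432",
    "中国医药": "600056",
    "众生药业": "002317",
    "长安汽车": "000625",
    "以岭药业": "002603",
    "英特集团": "000411",
}


def build_urls(stock_code, pages=2):
    """Return the guba list-page URLs for one stock code, pages 1..pages."""
    return [
        f"http://guba.eastmoney.com/list,{stock_code},1,f_{page}.html"
        for page in range(1, pages + 1)
    ]


# One URL list per company, keyed by company name.
url_lists = {name: build_urls(stock_code) for name, stock_code in COMPANIES.items()}
```

With a mapping like this, a crawler thread can be handed the company name directly instead of an index into a second list.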

Whatever the assignment is, don't be afraid of it. Just dive in and get it done, and believe in yourself.

GUI screenshot

Database screenshot

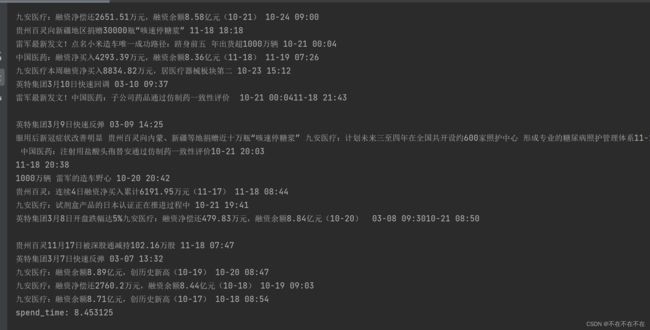

Multithreaded crawling screenshot

matplotlib plots

File read/write screenshot

Code:

spider.py

#spider.py
import threading
import random
import time
from time import sleep

import requests
import pymysql
import tkinter as tk
from tkinter import ttk
from bs4 import BeautifulSoup

import draw_analyse

number = 999  # index into `code` of the selected company; 999 means nothing is selected yet
strs = []  # rows cached for the selected company
threads = []
lock = threading.Lock()  # one lock shared by all crawler threads
code = ['小米集团', '贵州百灵', '九安医疗', '中国医药', '众生药业', '长安汽车', '以岭药业', '英特集团']

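def show_time(func):  # decorator: print how long func takes
    def wrapper(*args, **kwargs):
        # perf_counter is a wall-clock timer, so time spent waiting on threads is counted
        start = time.perf_counter()
        result = func(*args, **kwargs)
        end = time.perf_counter()
        print('spend_time:', end - start)
        return result
    return wrapper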

def Connect():
    conn = pymysql.connect(
        host="localhost",
        port=3306,
        user='root',  # put your user name here
        password='77777',  # put your password here
        charset='utf8mb4',
        database='jwgl'  # the database to work in
    )
    return conn

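def CreateTable(tableName=None):
    conn = None  # so the except/finally blocks work even if Connect() itself fails
    try:
        conn = Connect()
        cursor = conn.cursor()
        # Table names cannot be bound as query parameters, so they are formatted in;
        # tableName only ever comes from the hard-coded `code` list.
        cursor.execute('drop table if exists `%s`' % tableName)
        sql = '''create table `%s` (
            阅读量 int(10),
            评论 int(10),
            标题 varchar(100),
            作者 varchar(100),
            更新 varchar(100),
            链接 varchar(100),
            primary key(链接))''' % tableName
        cursor.execute(sql)  # run the SQL statement to create the table
        cursor.execute('show tables')
        print('Tables:', cursor.fetchall())  # list the tables that exist
        cursor.execute('desc `%s`' % tableName)
        print('Table structure:', cursor.fetchall())  # show the table structure
    except Exception as e:
        print('Failed to create table:', e)
        if conn is not None:
            conn.rollback()  # roll back the transaction
    finally:
        if conn is not None:
            conn.close()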

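headers = {'User-Agent': 'Mozilla/5.0'}

def getHTMLText(url):  # fetch an HTML page
    try:
        res = requests.get(url, headers=headers, timeout=10)
        res.raise_for_status()
        res.encoding = res.apparent_encoding
        return res.text
    except requests.RequestException as e:
        print("Request failed:", e)
        return ""  # empty page, so the caller's parser simply finds no rows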

def get_news(url_list, q):
    '''
    Crawl the Eastmoney guba post lists and append every row to main.txt.
    url_list is the list of page URLs for one company; q is that company's index in `code`.
    '''
    conn = None
    try:
        conn = Connect()  # one connection per thread instead of one per row
    except Exception as e:
        print("Failed to connect to the database")
        print(e)
    for i in range(len(url_list)):
        url = url_list[i]
        html = getHTMLText(url)
        soup = BeautifulSoup(html or "", "html.parser")  # tolerate a failed request
        read_list = soup.select(".l1.a1")[1:]
        comment_list = soup.select(".l2.a2")[1:]
        title_list = soup.select(".l3.a3")[1:]
        author_list = soup.select(".l4.a4")[1:]
        renew_list = soup.select(".l5.a5")[1:]
        selected = (q == number)  # is this the company currently selected in the GUI?
        if selected:
            strs.append([])
        for k in range(len(title_list)):
            str1 = str(read_list[k].text.strip())
            str2 = str(comment_list[k].text.strip())
            str3 = str(title_list[k].select('a')[0]["title"])
            str4 = str(author_list[k].text.strip())
            str5 = str(renew_list[k].text.strip())
            str6 = str(title_list[k].select('a')[0]["href"])
            row = [str1, str2, str3, str4, str5, str6]
            if selected:
                strs[-1].append(row)
            with lock:  # several threads append to the same file
                with open('main.txt', 'a', encoding='utf-8') as hello:  # write to file
                    hello.write('\t'.join(row) + '\n')  # one tab-separated row per line
            try:
                sql = (f'insert into `{code[q]}`(阅读量, 评论, 标题, 作者, 更新, 链接) '
                       'values(%s, %s, %s, %s, %s, %s)')
                with conn.cursor() as cursor:
                    # values are bound as parameters, so quotes in a title cannot break the statement
                    cursor.execute(sql, tuple(row))
                conn.commit()
            except Exception as e:
                print("Failed to insert row")
                print(e)
            print(str3, str5)
        t = random.uniform(4, 5)  # pause 4 to 5 seconds between pages
        sleep(t)
    if conn is not None:
        conn.close()

def draw():
    def delButton(tree):  # remove every row from the table
        for item in tree.get_children():
            tree.delete(item)

    def make_setter(index):
        # Build the callback for one radio button: clear the table, then remember the selection.
        def setter():
            global number
            delButton(tree)
            number = index
        return setter

    window = tk.Tk()
    window.title('Guba Latest Posts')
    window.geometry('1200x700')
    var = tk.IntVar()
    tk.Label(window, fg='green', bg='white', font=('Lucida Grande', 19),
             text='How to use: pick a company, click "Start crawling", then "Refresh".').place(x=20, y=30)
    tk.Label(window, text='Company:').place(x=20, y=82)
    for index, name in enumerate(code):  # one radio button per company, 100 px apart
        tk.Radiobutton(window, text=name, variable=var, value=index + 1,
                       command=make_setter(index)).place(x=100 + 100 * index, y=80)

    # "Start crawling" button
    tk.Button(window, text='Start crawling', width=20, height=1, command=p).place(x=950, y=30)

    tree = ttk.Treeview(window, height=25, columns=['1', '2', '3', '4', '5', '6'], show='headings')
    columns = [('1', 70, 'Views'), ('2', 70, 'Comments'), ('3', 300, 'Title'),
               ('4', 150, 'Author'), ('5', 100, 'Updated'), ('6', 400, 'Link')]
    for col, width, heading in columns:
        tree.column(col, width=width)
        tree.heading(col, text=heading)

    def flush():
        if number == 999:  # nothing selected yet
            return
        delButton(tree)  # clear old rows so repeated refreshes do not duplicate them
        conn = Connect()
        try:
            cursor = conn.cursor(cursor=pymysql.cursors.DictCursor)
            cursor.execute("SELECT 阅读量, 评论, 标题, 作者, 更新, 链接 FROM `%s`" % code[number])
            for i in cursor.fetchall():
                tree.insert('', 'end', values=(i['阅读量'], i['评论'], i['标题'], i['作者'], i['更新'], i['链接']))
        finally:
            conn.close()

    # "Refresh" button
    tk.Button(window, text='Refresh', width=20, height=1, command=flush).place(x=950, y=70)

    # scrollbar
    vbar = ttk.Scrollbar(window, orient=tk.VERTICAL, command=tree.yview)
    tree.configure(yscrollcommand=vbar.set)
    tree.grid(row=0, column=0, padx=20, pady=150, sticky=tk.NSEW)
    vbar.grid(row=0, column=1, padx=20, pady=150, sticky=tk.NS)
    window.mainloop()

@show_time
def p():
    with open('main.txt', 'w', encoding='utf-8') as hello:  # write to file (this clears old results)
        hello.write("Starting to write results\n")
    with open('main.txt', 'r', encoding='utf-8') as hello:  # read the file back
        con = hello.read()
    print(con)
    codes = ["hk01810", '002424', '002432', '600056', '002317', '000625', '002603', '000411']
    #codes = ["hk01810"]
    #codes = ["hk01810", '002424', '002432', '600056']
    pages = 2  # number of pages to crawl per company
    url_list = []  # Eastmoney guba URLs: one inner list per stock code, one URL per page
    for i in range(len(codes)):
        url_list.append([])
        for page in range(1, pages + 1):
            url = f"http://guba.eastmoney.com/list,{codes[i]},1,f_{page}.html"
            url_list[i].append(url)
    for i in code:  # create one database table per company
        CreateTable(i)
    q = 0
    for url in url_list:
        thread = threading.Thread(target=get_news, args=(url, q))  # one thread per company
        thread.start()
        threads.append(thread)
        q = q + 1
    for t in threads:
        t.join()  # wait for every thread to finish
    draw_analyse.draw_a()

if __name__ == '__main__':
    draw()

draw_analyse.py

#draw_analyse.py
import pandas as pd  # loads the data: pd.read_sql_query() runs a SQL statement and returns a DataFrame
import matplotlib.pyplot as plt  # plotting: plt.plot() for line charts, plt.bar() for bar charts, ...
from sqlalchemy import create_engine

def draw_a():
    plt.rcParams['font.sans-serif'] = 'SimHei'  # a font with Chinese glyphs, needed for the company names
    plt.rcParams['axes.unicode_minus'] = False  # render the minus sign correctly with this font
    plt.rcParams['figure.figsize'] = (6, 4)  # 6x4 inches
    plt.rcParams['figure.dpi'] = 100  # 100 dots per inch
    engine = create_engine('mysql+pymysql://%s:%s@%s:%s/%s?charset=utf8'
                           % ('root', '77777', 'localhost', 3306, 'jwgl'))

    def column_sums(column, tables):
        # SUM(column) for each table, in the same order as `tables`
        sums = []
        for table in tables:
            sql = "SELECT SUM(%s) as t FROM %s" % (column, table)
            df = pd.read_sql_query(sql, engine)
            sums.extend(df['t'].tolist())
        return sums

    def bar_chart(x, y, title, xlabel, ylabel):
        plt.bar(x, y)
        plt.grid(axis='y')
        plt.title(title)
        plt.ylabel(ylabel)  # y-axis label
        plt.xlabel(xlabel)  # x-axis label
        plt.show()

    # Chart 1: total views for the four pharma companies
    x = ['以岭药业', '九安医疗', '中国医药', '众生药业']
    bar_chart(x, column_sums('阅读量', x),
              "Views of recent posts about the pharma companies", "Pharma company", "Views")

    # Chart 2: total views, pharma vs. non-pharma companies
    x = ['以岭药业', '众生药业', '小米集团', '英特集团']
    bar_chart(x, column_sums('阅读量', x),
              "Views of recent posts: pharma vs. non-pharma", "Company", "Views")

    # Chart 3: total comments for all eight companies
    x = ['小米集团', '贵州百灵', '九安医疗', '中国医药', '众生药业', '长安汽车', '以岭药业', '英特集团']
    bar_chart(x, column_sums('评论', x),
              "Comment counts on recent posts by company", "Company", "Comments")

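if __name__ == '__main__':  # run only when executed directly; spider.py imports this module
    draw_a()

A few days ago I signed up for a fun event. If I end up taking part and have the time, I'll share it with you all. If I don't take part, never mind. See you next time!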