Linux memory initialization: memory initialization during the boot stage

The code in this article is based on the ARM64 platform, Linux kernel 5.15.

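Before the kernel is loaded, it places certain requirements on the system. In particular, it explicitly requires that the MMU be turned off during the boot stage: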

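arch/arm64/kernel/head.S

```
/*
 * Kernel startup entry point.
 * ---------------------------
 *
 * The requirements are:
 *   MMU = off, D-cache = off, I-cache = on or off,
 *   x0 = physical address to the FDT blob.
```

So once the kernel has been entered, it must go through a process of enabling the MMU and building the mappings. This article describes the memory-initialization work the kernel performs during the boot stage.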

Overview

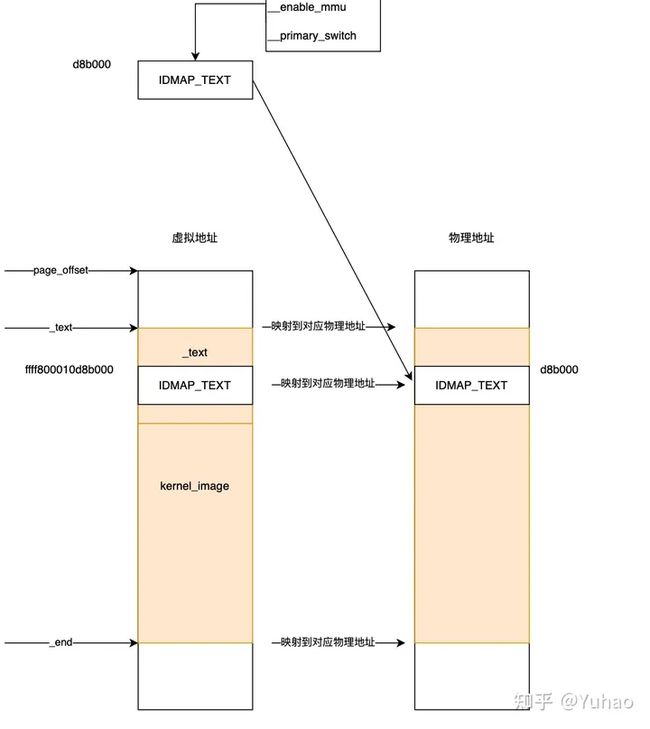

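During initialization, two address ranges are mapped. The first is the identity mapping, which maps physical addresses onto virtual addresses of the same value (VA == PA); this mapping is needed at the moment the MMU is turned on (the ARM architecture strongly recommends it). The second is the kernel image mapping: for kernel code to run, the addresses it needs (kernel text, rodata, data, bss and so on) must be mapped. After these mappings are set up, system memory looks roughly as shown in the figure above.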

(The addresses in the figure come from the test machine and are for reference only.)

Boot entry point

The kernel's entry point is _text, which is defined in .head.text:

arch/arm64/kernel/vmlinux.lds.S

```
.....
ENTRY(_text)
.....
SECTIONS
{
    . = KIMAGE_VADDR;

    .head.text : {
        _text = .;
        HEAD_TEXT
    }
    .....
}
```

arch/arm64/kernel/head.S

```
    __HEAD
    /*
     * DO NOT MODIFY. Image header expected by Linux boot-loaders.
     */
    efi_signature_nop               // special NOP to identity as PE/COFF executable
    b       primary_entry           // branch to kernel start, magic
    .quad   0                       // Image load offset from start of RAM, little-endian
    le64sym _kernel_size_le         // Effective size of kernel image, little-endian
    .....

SYM_CODE_START(primary_entry)
    bl      preserve_boot_args
    bl      init_kernel_el                  // w0=cpu_boot_mode
    adrp    x23, __PHYS_OFFSET
    and     x23, x23, MIN_KIMG_ALIGN - 1    // KASLR offset, defaults to 0
    bl      set_cpu_boot_mode_flag
    bl      __create_page_tables
```

After the kernel starts, it eventually calls __create_page_tables, the key function that creates the boot-time page tables.

The call chain is: _text -> primary_entry -> __create_page_tables
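The directives right after __HEAD form the start of the 64-byte arm64 Image header that boot-loaders read before jumping to primary_entry. The C sketch below parses such a header from a byte buffer, assuming the layout described in the kernel's arm64 booting documentation: code0, code1, text_offset, image_size, flags, three reserved 64-bit words, the magic "ARM\x64" at byte 56, and a final reserved 32-bit word. The struct, macro and function names are hypothetical and only the fields the sketch reads are kept.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical loader-side view of the fields read from the 64-byte header. */
struct arm64_image_header {
    uint32_t code0;       /* efi_signature_nop */
    uint32_t code1;       /* b primary_entry */
    uint64_t text_offset; /* ".quad 0": image load offset */
    uint64_t image_size;  /* _kernel_size_le: effective image size */
    uint64_t flags;
    uint32_t magic;       /* "ARM\x64" read as a little-endian u32 */
};

#define ARM64_IMAGE_MAGIC 0x644d5241u /* bytes 'A' 'R' 'M' 0x64 */

/* Little-endian loads, independent of the host byte order. */
static uint32_t le32(const uint8_t *p)
{
    return (uint32_t)p[0] | (uint32_t)p[1] << 8 |
           (uint32_t)p[2] << 16 | (uint32_t)p[3] << 24;
}

static uint64_t le64(const uint8_t *p)
{
    return (uint64_t)le32(p) | (uint64_t)le32(p + 4) << 32;
}

/* Parse the first 64 bytes of an Image; returns false if the magic is wrong. */
static bool parse_image_header(const uint8_t buf[64], struct arm64_image_header *h)
{
    h->code0       = le32(buf + 0);
    h->code1       = le32(buf + 4);
    h->text_offset = le64(buf + 8);
    h->image_size  = le64(buf + 16);
    h->flags       = le64(buf + 24);
    /* bytes 32..55: three reserved 64-bit words, skipped here */
    h->magic       = le32(buf + 56);
    /* bytes 60..63: reserved word (PE/COFF header offset), skipped here */
    return h->magic == ARM64_IMAGE_MAGIC;
}
```

In this sketch a loader would call parse_image_header() on the first 64 bytes of the Image file and, on success, use image_size to decide how much memory the kernel needs.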

Preparation

arch/arm64/kernel/head.S

```
SYM_FUNC_START_LOCAL(__create_page_tables)
    mov     x28, lr

    /*
     * Invalidate the init page tables to avoid potential dirty cache lines
     * being evicted. Other page tables are allocated in rodata as part of
     * the kernel image, and thus are clean to the PoC per the boot
     * protocol.
     */
    adrp    x0, init_pg_dir
    adrp    x1, init_pg_end
    sub     x1, x1, x0
    bl      __inval_dcache_area

    /*
     * Clear the init page tables.
     */
    adrp    x0, init_pg_dir
    adrp    x1, init_pg_end
    sub     x1, x1, x0
1:  stp     xzr, xzr, [x0], #16
    stp     xzr, xzr, [x0], #16
    stp     xzr, xzr, [x0], #16
    stp     xzr, xzr, [x0], #16
    subs    x1, x1, #64
    b.ne    1b
```

At the start of __create_page_tables, the data cache for the init_pg_dir region is invalidated, and then that memory is zeroed.
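The clearing loop can be modeled in C to make its bookkeeping explicit: each pass issues four 16-byte stp stores (64 bytes in total) and subtracts 64 from the remaining size until it reaches zero. Because both bounds come from adrp, they are 4 KB aligned, so the size is a multiple of 64 and the loop stops exactly at init_pg_end. This is an illustrative sketch; the function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/*
 * C model of the "Clear the init page tables" loop: four
 * "stp xzr, xzr, [x0], #16" per pass, then "subs x1, x1, #64; b.ne 1b".
 */
static void clear_init_pg_dir(uint64_t *start, size_t size_bytes)
{
    /* The assembly only terminates cleanly for a non-zero multiple of 64. */
    assert(size_bytes != 0 && size_bytes % 64 == 0);

    uint64_t *p = start;
    size_t remaining = size_bytes;
    do {
        for (int i = 0; i < 4; i++) { /* four stp instructions */
            p[0] = 0;                 /* each stp stores two 64-bit zeros */
            p[1] = 0;
            p += 2;                   /* post-increment of 16 bytes */
        }
        remaining -= 64;              /* subs x1, x1, #64 */
    } while (remaining != 0);         /* b.ne 1b */
}
```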

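init_pg_dir is the page table used during boot to map the kernel text. It is itself part of the kernel image: the linker script places it right after the kernel's bss section: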

arch/arm64/kernel/vmlinux.lds.S

```
    BSS_SECTION(0, 0, 0)

    . = ALIGN(PAGE_SIZE);
    init_pg_dir = .;
    . += INIT_DIR_SIZE;
    init_pg_end = .;
```

Mapping IDMAP_TEXT

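The identity mapping creates a one-to-one mapping for the kernel code involved in enabling the MMU: the virtual address range numerically equal to that code's physical addresses is mapped onto those same physical addresses. Why do this? The ARM ARM contains the following passage: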

If the PA of the software that enables or disables a particular stage of address translation differs from its VA, speculative instruction fetching can cause complications. ARM strongly recommends that the PA and VA of any software that enables or disables a stage of address translation are identical if that stage of translation controls translations that apply to the software currently being executed.

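When the MMU is turned on, kernel code is in the middle of executing, and address translation switches from off to on. The identity mapping guarantees that the code around the point where the MMU is enabled keeps running smoothly across the switch, because the instructions fetched just before and just after the switch resolve to the same physical addresses.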
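To make the off-to-on transition concrete, here is a toy C model of a single instruction fetch (not how the hardware actually walks page tables). With the MMU off, the PC is used directly as a physical address; with it on, the same PC value is looked up as a virtual address. The struct, function and all addresses used with it are hypothetical, chosen as if the image sat at physical 0x40000000. The point it illustrates: the instruction right after the SCTLR_EL1 write still resolves to the same physical address only if an identity mapping covers it.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Toy single-range "page table": maps [va_start, va_end) to pa_start. */
struct toy_mapping {
    uint64_t va_start, va_end, pa_start;
};

/* Returns true and writes *pa if the fetch address can be resolved. */
static bool toy_fetch(uint64_t pc, bool mmu_on,
                      const struct toy_mapping *maps, size_t n, uint64_t *pa)
{
    if (!mmu_on) {           /* MMU off: the PC is used as a physical address */
        *pa = pc;
        return true;
    }
    for (size_t i = 0; i < n; i++) {
        if (pc >= maps[i].va_start && pc < maps[i].va_end) {
            *pa = maps[i].pa_start + (pc - maps[i].va_start);
            return true;
        }
    }
    return false;            /* no mapping covers the PC: translation fault */
}
```

With only a kernel-image mapping at high virtual addresses, a low PC value such as 0x40d8b270 faults as soon as translation is on; adding an identity range that covers it makes toy_fetch() return the same physical address before and after the switch.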

Below is the part of __create_page_tables that handles the IDMAP:

arch/arm64/kernel/head.S

```
SYM_FUNC_START_LOCAL(__create_page_tables)
    .......
    /*
     * Create the identity mapping.
     */
    adrp    x0, idmap_pg_dir
    adrp    x3, __idmap_text_start          // __pa(__idmap_text_start)
    ldr_l   x4, idmap_ptrs_per_pgd
    mov     x5, x3                          // __pa(__idmap_text_start)
    adr_l   x6, __idmap_text_end            // __pa(__idmap_text_end)

    // Per the map_memory comment below, x3 and x6 are the start and end
    // virtual addresses to map (3rd and 4th arguments). Here they hold the
    // physical addresses of __idmap_text_start and __idmap_text_end, and the
    // target physical address (6th argument) is also x3, so VA == PA.
    map_memory x0, x1, x3, x6, x7, x3, x4, x10, x11, x12, x13, x14

/*
 * Map memory for specified virtual address range. Each level of page table needed supports
 * multiple entries. If a level requires n entries the next page table level is assumed to be
 * formed from n pages.
 *
 *  tbl:    location of page table
 *  rtbl:   address to be used for first level page table entry (typically tbl + PAGE_SIZE)
 *  vstart: start address to map
 *  vend:   end address to map - we map [vstart, vend]
 *  flags:  flags to use to map last level entries
 *  phys:   physical address corresponding to vstart - physical memory is contiguous
 *  pgds:   the number of pgd entries
 *
 * Temporaries: istart, iend, tmp, count, sv - these need to be different registers
 * Preserves:   vstart, vend, flags
 * Corrupts:    tbl, rtbl, istart, iend, tmp, count, sv
 */
    .macro map_memory, tbl, rtbl, vstart, vend, flags, phys, pgds, istart, iend, tmp, count, sv
```

What actually gets mapped is the range from __idmap_text_start to __idmap_text_end. So what does that range contain?

```
#define IDMAP_TEXT                  \
    . = ALIGN(SZ_4K);               \
    __idmap_text_start = .;         \
    *(.idmap.text)                  \
    __idmap_text_end = .;
```

arch/arm64/kernel/vmlinux.lds.S

```
    ...........
    .text : ALIGN(SEGMENT_ALIGN) {  /* Real text segment */
        _stext = .;                 /* Text and read-only data */
            IRQENTRY_TEXT
            SOFTIRQENTRY_TEXT
            ENTRY_TEXT
            TEXT_TEXT
            SCHED_TEXT
            CPUIDLE_TEXT
            LOCK_TEXT
            KPROBES_TEXT
            HYPERVISOR_TEXT
            IDMAP_TEXT
            HIBERNATE_TEXT
            TRAMP_TEXT
            *(.fixup)
            *(.gnu.warning)
        . = ALIGN(16);
        *(.got)                     /* Global offset table */
    }
```

As you can see, .idmap.text is placed inside the .text section:

```
$ nm vmlinux | grep __idmap_text_end
ffff800010d8b760 T __idmap_text_end
$ nm vmlinux | grep __idmap_text_start
ffff800010d8b000 T __idmap_text_start
```

arch/arm64/kernel/head.S

```
    .section ".idmap.text","awx"

/*
 * Starting from EL2 or EL1, configure the CPU to execute at the highest
 * reachable EL supported by the kernel in a chosen default state. If dropping
 * from EL2 to EL1, configure EL2 before configuring EL1.
 *
 * Since we cannot always rely on ERET synchronizing writes to sysregs (e.g. if
 * SCTLR_ELx.EOS is clear), we place an ISB prior to ERET.
 *
 * Returns either BOOT_CPU_MODE_EL1 or BOOT_CPU_MODE_EL2 in w0 if
 * booted in EL1 or EL2 respectively.
```

head.S defines this section, and much of the memory-initialization code is placed in it, for example __enable_mmu and __primary_switch:

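```
$ nm vmlinux | grep __enable_mmu
ffff800010d8b268 T __enable_mmu
$ nm vmlinux | grep __primary_switch
ffff800010d8b330 t __primary_switch
ffff800011530330 t __primary_switched
```

This shows that the code involved in enabling the MMU (__enable_mmu at ffff800010d8b268 and __primary_switch at ffff800010d8b330) lies inside the __idmap_text range [ffff800010d8b000, ffff800010d8b760), which guarantees a smooth transition when the MMU is switched on. __primary_switched, by contrast, lies outside this range: it is reached only after the MMU is on, through the kernel's virtual mapping.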
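The nm output can be checked with a little arithmetic. The C sketch below hard-codes the addresses printed above (they are specific to the test machine's vmlinux) and shows that the idmap text is only 0x760 = 1888 bytes, fits in a single 4 KB page, and contains __enable_mmu and __primary_switch but not __primary_switched. The macro and function names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Addresses copied from the nm output of the test machine's vmlinux. */
#define IDMAP_TEXT_START UINT64_C(0xffff800010d8b000)
#define IDMAP_TEXT_END   UINT64_C(0xffff800010d8b760)
#define SZ_4K            UINT64_C(0x1000)

/* True if a symbol address falls inside [__idmap_text_start, __idmap_text_end). */
static bool in_idmap_text(uint64_t addr)
{
    return addr >= IDMAP_TEXT_START && addr < IDMAP_TEXT_END;
}

/* Number of 4 KB pages touched by the non-empty range [start, end). */
static uint64_t pages_spanned(uint64_t start, uint64_t end)
{
    uint64_t first = start & ~(SZ_4K - 1);
    uint64_t last  = (end - 1) & ~(SZ_4K - 1);
    return (last - first) / SZ_4K + 1;
}
```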

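The idmap code is therefore mapped twice: once at its kernel-text virtual address (through init_pg_dir, since .idmap.text is part of .text) and once at its physical address (through idmap_pg_dir).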
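The two addresses of one piece of idmap code can be computed side by side. This C sketch assumes a hypothetical physical load address of 0x40000000 for _text, KASLR off, and a link-time KIMAGE_VADDR of 0xffff800010000000; that value is consistent with the nm output above but is an assumption, not something the output proves. Under those assumptions __enable_mmu has physical address 0x40d8b268: idmap_pg_dir maps it at that same value, and init_pg_dir maps it at ffff800010d8b268.

```c
#include <stdint.h>

/* Hypothetical physical load address of _text (KASLR off, so x23 == 0). */
#define PHYS_TEXT    UINT64_C(0x40000000)
/* Assumed link-time KIMAGE_VADDR; consistent with, not proven by, nm. */
#define KIMAGE_VADDR UINT64_C(0xffff800010000000)

/* idmap_pg_dir (used via TTBR0_EL1): virtual address equals physical address. */
static uint64_t idmap_va(uint64_t pa)
{
    return pa;
}

/* init_pg_dir (used via TTBR1_EL1): same offset from _text in both spaces. */
static uint64_t kimage_va(uint64_t pa, uint64_t kaslr_offset)
{
    return KIMAGE_VADDR + kaslr_offset + (pa - PHYS_TEXT);
}
```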

Mapping the kernel

After the IDMAP is created, the kernel text and related contents are mapped as well, so that the kernel can run normally:

arch/arm64/kernel/head.S

```
SYM_FUNC_START_LOCAL(__create_page_tables)
    /*
     * Map the kernel image (starting with PHYS_OFFSET).
     */
    adrp    x0, init_pg_dir
    mov_q   x5, KIMAGE_VADDR        // compile time __va(_text)
    add     x5, x5, x23             // add KASLR displacement
    mov     x4, PTRS_PER_PGD
    adrp    x6, _end                // runtime __pa(_end)
    adrp    x3, _text               // runtime __pa(_text)
    sub     x6, x6, x3              // _end - _text
    add     x6, x6, x5              // runtime __va(_end)

    map_memory x0, x1, x5, x6, x7, x3, x4, x10, x11, x12, x13, x14
```

Here the physical range from _text to _end is mapped to the corresponding virtual addresses, starting at KIMAGE_VADDR plus the KASLR displacement held in x23.
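The register setup above, plus the per-level index arithmetic that map_memory needs, can be sketched in C. Assumptions, all labeled in the code: MIN_KIMG_ALIGN is 2 MB, KIMAGE_VADDR is 0xffff800010000000, pages are 4 KB with 48-bit VAs (so table levels index with shifts 39, 30 and 21, the last level using 2 MB blocks), and the physical load address and image size are hypothetical. entries_needed() is a simplified stand-in for the real index computation: it ignores ranges whose entries spill over into more than one table at a level.

```c
#include <stdint.h>

#define MIN_KIMG_ALIGN UINT64_C(0x200000)          /* assumed: 2 MB */
#define KIMAGE_VADDR   UINT64_C(0xffff800010000000) /* assumed value */

/* x23 in primary_entry: offset of _text within its 2 MB-aligned region. */
static uint64_t kaslr_displacement(uint64_t pa_text)
{
    return pa_text & (MIN_KIMG_ALIGN - 1);
}

/* x5: virtual start of the kernel mapping. */
static uint64_t kernel_vstart(uint64_t pa_text)
{
    return KIMAGE_VADDR + kaslr_displacement(pa_text);
}

/*
 * Simplified index computation for one table level over [vstart, vend]:
 * index = (va >> shift) & (ptrs - 1), entries = iend - istart + 1.
 * Assumes the range stays within a single table at this level.
 */
static uint64_t entries_needed(uint64_t vstart, uint64_t vend,
                               unsigned shift, uint64_t ptrs)
{
    uint64_t istart = (vstart >> shift) & (ptrs - 1);
    uint64_t iend   = (vend >> shift) & (ptrs - 1);
    return iend - istart + 1;
}
```

With a hypothetical image at physical 0x40000000 and _end - _text = 0x1d8a000, the displacement is 0, vstart is 0xffff800010000000, and the range needs one entry at each of the two upper levels plus fifteen 2 MB block entries, matching the rule in the map_memory comment that n entries at one level imply n table pages at the next.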

Enabling the MMU

arch/arm64/kernel/head.S

```
SYM_FUNC_START(__enable_mmu)
    mrs     x2, ID_AA64MMFR0_EL1
    ubfx    x2, x2, #ID_AA64MMFR0_TGRAN_SHIFT, 4
    cmp     x2, #ID_AA64MMFR0_TGRAN_SUPPORTED
    b.ne    __no_granule_support
    update_early_cpu_boot_status 0, x2, x3
    adrp    x2, idmap_pg_dir                // (1)
    phys_to_ttbr x1, x1
    phys_to_ttbr x2, x2
    msr     ttbr0_el1, x2                   // load TTBR0  (2)
    offset_ttbr1 x1, x3
    msr     ttbr1_el1, x1                   // load TTBR1  (3)
    isb
    msr     sctlr_el1, x0                   // (4)
    isb
    /*
     * Invalidate the local I-cache so that any instructions fetched
     * speculatively from the PoC are discarded, since they may have
     * been dynamically patched at the PoU.
     */
    ic      iallu
    dsb     nsh
    isb
    ret
SYM_FUNC_END(__enable_mmu)
```

(1)(2) The address of idmap_pg_dir is loaded into TTBR0_EL1. Once the MMU is on, arm64 translates addresses in 0x0000_0000_0000_0000 ~ 0x0000_FFFF_FFFF_FFFF (assuming 48-bit virtual addresses) through TTBR0_EL1. At this point the code is running at its physical address, which falls in this lower range, so all IDMAP translations go through TTBR0_EL1.

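(3) The value of x1 is written to TTBR1_EL1. Addresses in 0xFFFF_0000_0000_0000 ~ 0xFFFF_FFFF_FFFF_FFFF (again assuming 48-bit virtual addresses), that is, kernel-space addresses, are translated through this register. When __enable_mmu is called during boot, x1 holds the address of init_pg_dir: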
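The TTBR0/TTBR1 split can be written as a small C function. This is a sketch assuming 48-bit virtual addresses for both halves (TCR_EL1.T0SZ = T1SZ = 16) and ignoring top-byte-ignore tagging: under those assumptions bits [63:48] must be all zeros (TTBR0_EL1) or all ones (TTBR1_EL1), and any other address faults. The enum and function names are hypothetical.

```c
#include <stdint.h>

enum ttbr_sel { SEL_TTBR0, SEL_TTBR1, SEL_FAULT };

/* Assumes VA_BITS = 48 for both halves and no top-byte-ignore. */
static enum ttbr_sel select_ttbr(uint64_t va)
{
    uint64_t top = va >> 48;   /* bits [63:48] */
    if (top == 0)
        return SEL_TTBR0;      /* 0x0000_0000_0000_0000 .. 0x0000_FFFF_FFFF_FFFF */
    if (top == 0xffff)
        return SEL_TTBR1;      /* 0xFFFF_0000_0000_0000 .. 0xFFFF_FFFF_FFFF_FFFF */
    return SEL_FAULT;          /* address in neither range */
}
```

For example, a physical-looking PC such as 0x40d8b268 selects TTBR0_EL1 (idmap_pg_dir), while the kernel symbol address ffff800010d8b268 selects TTBR1_EL1 (init_pg_dir).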

arch/arm64/kernel/head.S

```
SYM_FUNC_START_LOCAL(__primary_switch)
#ifdef CONFIG_RANDOMIZE_BASE
    mov     x19, x0                 // preserve new SCTLR_EL1 value
    mrs     x20, sctlr_el1          // preserve old SCTLR_EL1 value
#endif

    adrp    x1, init_pg_dir
    bl      __enable_mmu
```

(4) The MMU is enabled by writing x0 to SCTLR_EL1. The value in x0 was prepared before __enable_mmu is called, by the __cpu_setup function.
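Both the boot requirement quoted from head.S (MMU = off, D-cache = off) and step (4) come down to bits in SCTLR_EL1. The C sketch below checks the architectural M (bit 0), C (bit 2) and I (bit 12) bits. The macro and function names and the example register values are hypothetical, and the real value that __cpu_setup leaves in x0 sets other bits as well.

```c
#include <stdbool.h>
#include <stdint.h>

/* Architectural SCTLR_EL1 bits (ARMv8-A); macro names chosen for this sketch. */
#define SCTLR_M (UINT64_C(1) << 0)  /* M: stage 1 address translation (MMU) enable */
#define SCTLR_C (UINT64_C(1) << 2)  /* C: data cache enable */
#define SCTLR_I (UINT64_C(1) << 12) /* I: instruction cache enable */

/* Entry requirements from head.S: MMU off, D-cache off, I-cache either way. */
static bool meets_boot_requirements(uint64_t sctlr)
{
    return (sctlr & (SCTLR_M | SCTLR_C)) == 0;
}

/* After "msr sctlr_el1, x0", stage 1 translation is on iff M is set in x0. */
static bool mmu_enabled(uint64_t sctlr)
{
    return (sctlr & SCTLR_M) != 0;
}
```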