Highly Available Rancher on k3s: Offline Intranet Installation and Deployment

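Contents

- 1. Overall Architecture
  - 1.1 Node Planning
  - 1.2 Architecture Design
- 2. Node Preparation
  - 2.1 NTP Time Synchronization Service
    - 2.1.1 Downloading the NTP Packages
    - 2.1.2 Installing the NTP Server
    - 2.1.3 Installing the NTP Client
  - 2.2 System Environment Configuration
- 3. k3s Installation
  - 3.1 Preparing the Database
  - 3.2 Downloading k3s
  - 3.3 Installing k3s
  - 3.4 Using kubectl
- 4. Fetching the Rancher Chart with Helm (Internet-connected host)
- 5. Generating the TLS Certificate (Internet-connected host)
- 6. Installing Rancher
- 7. Configuring the Downstream Cluster
  - 7.1 Node Environment Configuration
  - 7.2 Installing Docker
  - 7.3 Configuring the Private Image Registry
  - 7.4 Configuring the TLS Certificate
  - 7.5 Creating a Custom Cluster
  - 7.6 Resolving the cattle-cluster-agent Error
- 8. Installing Longhorn
  - 8.1 Installing iSCSI on the Host Nodes
  - 8.2 Installing from the App Catalog
- 9. Nginx Load Balancer Configuration
- 10. Rancher Node Cleanup Script
- 11. Error Summary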

1. Overall Architecture

1.1 Node Planning

| Node IP | Installed services | Role | Notes |
|---|---|---|---|
| 192.168.100.31 | Harbor, Nginx, NTP, MySQL | Image registry, load balancer, time synchronization, k3s datastore | Nginx and MySQL run in Docker |
| 192.168.100.32 | k3s | Rancher Server node | Connects to the external MySQL database |
| 192.168.100.146 | k3s | Rancher Server node | Connects to the external MySQL database |
| 192.168.100.33 | Docker | Downstream cluster Rancher Agent node | etcd, control plane, worker |
| 192.168.100.34 | Docker | Downstream cluster Rancher Agent node | etcd, worker |
| 192.168.100.35 | Docker | Downstream cluster Rancher Agent node | worker |
| 192.168.100.147 | Docker | Downstream cluster Rancher Agent node | etcd, worker |

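1.2 Architecture Design

- Rancher Server (local cluster)

  Following the official high-availability deployment guide, a Rancher Server deployed on k3s needs at least two server nodes. Here 192.168.100.32 and 192.168.100.146 form the highly available pair.

  MySQL runs on 192.168.100.31 as the external k3s datastore; sharing it is what lets the two k3s servers operate as one highly available cluster.

  Nginx also runs on 192.168.100.31 and, through its configuration file, load-balances requests across the Rancher Server nodes.

- Rancher Agent (downstream cluster)

  The downstream cluster is deployed as a Rancher custom cluster. For high availability it needs at least three etcd nodes, so that the cluster stays available when a single etcd node fails.

- Domain name

  High availability depends on reaching Rancher Server through a domain name. This deployment uses xxyf.rancher.com.

- Offline package download

  All required rpm packages are downloaded with repotrack, which fetches a package together with all of its dependencies so that it installs cleanly in the offline environment.

      # On an Internet-connected host, install repotrack
      yum -y install yum-utils
      # Example: download nginx and its dependencies into /home/nginx-rpms
      repotrack -p /home/nginx-rpms nginx
      # Archive all packages
      tar -zcvf nginx-rpms.tar.gz /home/nginx-rpms/

- Other

  Because this is an intranet environment, an NTP server must be installed to keep time consistent across all cluster nodes.

  Network security policy does not allow the firewall to be turned off, so firewall rules have to be configured, opening as few ports as possible.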
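The three-etcd-node requirement for the downstream cluster follows from etcd's majority quorum rule. The sketch below is only an illustration of that arithmetic; the function names `etcd_quorum` and `etcd_fault_tolerance` are made up for this example and are not part of any tool.

```shell
#!/usr/bin/env bash
# Majority quorum for an etcd cluster with N members: floor(N/2) + 1.
etcd_quorum() {
  local members=$1
  echo $(( members / 2 + 1 ))
}

# Number of members that may fail while the remaining ones still form a quorum.
etcd_fault_tolerance() {
  local members=$1
  echo $(( members - (members / 2 + 1) ))
}

for n in 1 2 3 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

With two members the tolerated failure count is still zero, which is why the plan above assigns the etcd role to three nodes (192.168.100.33, 192.168.100.34 and 192.168.100.147).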

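2. Node Preparation

2.1 NTP Time Synchronization Service

2.1.1 Downloading the NTP Packages

- Download on an Internet-connected host

      yum -y install ntp --downloadonly --downloaddir /root/ntp-rpms

- Archive the packages as ntp-rpms.tar.gz and copy the archive to every intranet node

      cd /root/
      tar -zcvf ntp-rpms.tar.gz ntp-rpms/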

2.1.2 Installing the NTP Server

- Set the host time

      timedatectl set-timezone Asia/Shanghai
      date -s "2022-07-29 10:13:00"
      hwclock --set --date "2022-07-29 10:13:00"
      hwclock --hctosys
      hwclock -w
      init 6

- Extract and install the NTP packages

      tar -zxvf ntp-rpms.tar.gz
      rpm -Uvh --nodeps --force ntp-rpms/*.rpm

- Edit the configuration file

      vi /etc/ntp.conf

      # Add
      restrict -4 default kod notrap nomodify
      restrict -6 default kod notrap nomodify
      # Comment out
      #server 0.centos.pool.ntp.org iburst
      #server 1.centos.pool.ntp.org iburst
      #server 2.centos.pool.ntp.org iburst
      #server 3.centos.pool.ntp.org iburst
      # Add (use the local clock as the time source)
      server 127.127.1.0
      fudge 127.127.1.0 stratum 8

- Start the service

      systemctl restart ntpd
      systemctl disable chronyd.service
      systemctl enable ntpd.service

- Check the status

      ntpstat
      netstat -tunlp | grep 123

- Open the firewall port

      firewall-cmd --permanent --add-port 123/udp
      firewall-cmd --reload

2.1.3 Installing the NTP Client

- Extract and install the NTP packages

      tar -zxvf ntp-rpms.tar.gz
      rpm -Uvh --nodeps --force ntp-rpms/*.rpm

- Edit the configuration file

      vi /etc/ntp.conf

      # Add
      restrict -4 default kod notrap nomodify
      restrict -6 default kod notrap nomodify
      # Comment out
      #server 0.centos.pool.ntp.org iburst
      #server 1.centos.pool.ntp.org iburst
      #server 2.centos.pool.ntp.org iburst
      #server 3.centos.pool.ntp.org iburst
      # Add (the IP address is that of the NTP server; fudge applies only to
      # local reference clocks such as 127.127.1.0, so it is not used here)
      server 192.168.100.31

- Start the service

      systemctl restart ntpd
      systemctl disable chronyd.service
      systemctl enable ntpd.service

- Check the status

      ntpstat

- Synchronize the time from the server

      ntpdate -u 192.168.100.31
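Editing /etc/ntp.conf by hand on every client is repetitive, so the server-line part of the change could be scripted. The following is a minimal sketch, not the author's procedure: `configure_ntp_client` is a hypothetical helper, it relies on GNU sed, it only touches `server` lines (the `restrict` lines are left to the manual step), and it is not idempotent.

```shell
#!/usr/bin/env bash
# Point an ntp.conf at an internal NTP server.
# Usage: configure_ntp_client <conf-file> <server-ip>
configure_ntp_client() {
  local conf=$1 server=$2
  # Comment out every active "server ..." line (for example the CentOS pool entries).
  sed -i -E 's/^[[:space:]]*server[[:space:]]+/#&/' "$conf"
  # Append the internal NTP server.
  printf 'server %s\n' "$server" >> "$conf"
}

# Demo on a scratch file, so the real /etc/ntp.conf is not modified.
demo_conf=$(mktemp)
printf 'server 0.centos.pool.ntp.org iburst\nserver 1.centos.pool.ntp.org iburst\n' > "$demo_conf"
configure_ntp_client "$demo_conf" 192.168.100.31
cat "$demo_conf"
rm -f "$demo_conf"
```

On a real client the call would be `configure_ntp_client /etc/ntp.conf 192.168.100.31`, followed by the service restart shown above.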

2.2 System Environment Configuration

- Disable SELinux

      sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config
      grep "SELINUX=disabled" /etc/selinux/config
      setenforce 0

- Disable the swap partition

      swapoff -a
      sed -i 's$/dev/mapper/centos-swap$#/dev/mapper/centos-swap$g' /etc/fstab
      echo "vm.swappiness=0" >> /etc/sysctl.conf
      sysctl -p /etc/sysctl.conf

- Adjust kernel parameters

      echo """
      net.bridge.bridge-nf-call-ip6tables=1
      net.bridge.bridge-nf-call-iptables=1
      net.ipv4.ip_forward=1
      """ >> /etc/sysctl.conf
      modprobe br_netfilter
      sysctl -p

- Configure DNS

  This setting has no practical effect in the offline environment, but the k3s installation reports an error without it.

      echo "nameserver 114.114.114.114" > /etc/resolv.conf
      systemctl daemon-reload
      systemctl restart network

- Configure hosts

  Resolve the domain xxyf.rancher.com to the server running nginx, which load-balances the traffic.

      cat << EOF >> /etc/hosts
      192.168.100.31 xxyf.rancher.com
      192.168.100.32 server032
      192.168.100.33 server033
      192.168.100.34 server034
      192.168.100.35 server035
      192.168.100.146 server146
      192.168.100.147 server147
      EOF

- Configure the firewall

  The port list below covers most Rancher deployment types and can be trimmed later according to actual usage.

      firewall-cmd --permanent --add-port 22/tcp
      firewall-cmd --permanent --add-port 80/tcp
      firewall-cmd --permanent --add-port 443/tcp
      firewall-cmd --permanent --add-port 2379/tcp
      firewall-cmd --permanent --add-port 2380/tcp
      firewall-cmd --permanent --add-port 6443/tcp
      firewall-cmd --permanent --add-port 6444/tcp
      firewall-cmd --permanent --add-port 8472/udp
      firewall-cmd --permanent --add-port 9345/tcp
      firewall-cmd --permanent --add-port 10249/tcp
      firewall-cmd --permanent --add-port 10250/tcp
      firewall-cmd --permanent --add-port 10256/tcp
      firewall-cmd --permanent --add-port 30935/tcp
      firewall-cmd --permanent --add-port 31477/tcp
      # These two lines are essential: without them the Rancher nodes cannot
      # communicate with each other and later steps fail
      firewall-cmd --permanent --zone=trusted --add-source=10.42.0.0/16
      firewall-cmd --permanent --zone=trusted --add-source=10.43.0.0/16
      firewall-cmd --reload

- Install k3s-selinux (only on the two k3s nodes)

      tar -zxvf container-selinux-rpms.tar.gz
      rpm -Uvh --force --nodeps container-selinux-rpms/*.rpm
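Since the port list is meant to be trimmed later, it may help to keep it in one variable and generate the firewall-cmd calls from it. This is a sketch under that assumption; `print_firewall_cmds` and the two variable names are invented for the example, and the function only prints commands (a dry run) rather than changing the firewall.

```shell
#!/usr/bin/env bash
# Ports from the firewall step, as port/protocol pairs.
K3S_PORTS="22/tcp 80/tcp 443/tcp 2379/tcp 2380/tcp 6443/tcp 6444/tcp 8472/udp 9345/tcp 10249/tcp 10250/tcp 10256/tcp 30935/tcp 31477/tcp"
# Source networks added to the trusted zone (the k3s default pod and service CIDRs).
TRUSTED_SOURCES="10.42.0.0/16 10.43.0.0/16"

# Print the firewall-cmd commands instead of executing them.
print_firewall_cmds() {
  local p s
  for p in $K3S_PORTS; do
    echo "firewall-cmd --permanent --add-port $p"
  done
  for s in $TRUSTED_SOURCES; do
    echo "firewall-cmd --permanent --zone=trusted --add-source=$s"
  done
  echo "firewall-cmd --reload"
}

print_firewall_cmds
# To apply the rules for real, run as root:  print_firewall_cmds | sh
```

Removing a port from `K3S_PORTS` is then the only change needed when the list is tightened.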

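3. k3s Installation

3.1 Preparing the Database

Deploy MySQL 5.7 on 192.168.100.31 as the external datastore of the k3s cluster; sharing it is what allows the two k3s servers to form a highly available pair.

docker run -d -p 13306:3306 --restart=unless-stopped --name mysql-k3s \
  -v /home/mysql-k3s:/var/lib/mysql \
  -e MYSQL_ROOT_PASSWORD=xxx 192.168.100.31:18888/library/mysql:5.7.38

For security, do not let k3s connect as root. Create a dedicated k3s user instead. The connection details are: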

IP: 192.168.100.31

PORT: 13306

DB: k3s

USERNAME: k3s

PASSWORD: K3s_12345AA
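The text does not show how the k3s database and user are created. One possible way, sketched here as an assumption rather than the author's exact commands, is to render the SQL with a small helper and pipe it into the MySQL container. `render_k3s_db_sql` is a hypothetical function, and the `'%'` host pattern is an assumption that could be narrowed to the two k3s server addresses.

```shell
#!/usr/bin/env bash
# Print SQL that creates a dedicated database and account for k3s.
# Usage: render_k3s_db_sql <database> <user> <password>
render_k3s_db_sql() {
  local db=$1 user=$2 pass=$3
  cat <<SQL
CREATE DATABASE IF NOT EXISTS \`${db}\`;
CREATE USER IF NOT EXISTS '${user}'@'%' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON \`${db}\`.* TO '${user}'@'%';
SQL
}

render_k3s_db_sql k3s k3s 'K3s_12345AA'
# To apply, pipe the output into the mysql client inside the container, e.g.:
#   render_k3s_db_sql k3s k3s 'K3s_12345AA' | docker exec -i mysql-k3s mysql -uroot -p'<root password>'
```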

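3.2 Downloading k3s

Following the official documentation, download these files from GitHub or from a mirror in China:

- k3s (v1.18.20+k3s1)

- k3s-airgap-images-amd64.tar (air-gap image bundle)

- install.sh (installation script)

3.3 Installing k3s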

mkdir -p /var/lib/rancher/k3s/agent/images/

cp ./k3s-airgap-images-amd64.tar /var/lib/rancher/k3s/agent/images/

cp ./k3s /usr/local/bin/

chmod a+x /usr/local/bin/k3s

chmod +x ./install.sh

# Server (master) installation

K3S_DATASTORE_ENDPOINT='mysql://k3s:K3s_12345AA@tcp(192.168.100.31:13306)/k3s' INSTALL_K3S_SKIP_DOWNLOAD=true ./install.sh

# Agent (node) installation (do NOT run: this deployment uses two k3s servers for high availability and no agent nodes)

K3S_URL=https://xxyf.rancher.com:6443 INSTALL_K3S_SKIP_DOWNLOAD=true K3S_TOKEN=xxx ./install.sh

# K3S_TOKEN is obtained by running the following command on a server node

cat /var/lib/rancher/k3s/server/node-token
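The datastore endpoint in the server installation command is assembled from the connection details in section 3.1. As a small sketch, the string can be built from its parts so that the same value is used on both server nodes; `k3s_mysql_endpoint` is a hypothetical helper, not something shipped with k3s.

```shell
#!/usr/bin/env bash
# Build the K3S_DATASTORE_ENDPOINT value for an external MySQL datastore.
# Usage: k3s_mysql_endpoint <user> <password> <host> <port> <database>
k3s_mysql_endpoint() {
  printf 'mysql://%s:%s@tcp(%s:%s)/%s\n' "$1" "$2" "$3" "$4" "$5"
}

endpoint=$(k3s_mysql_endpoint k3s K3s_12345AA 192.168.100.31 13306 k3s)
echo "$endpoint"
# On each k3s server node the value would then be passed to the installer (not run here):
#   K3S_DATASTORE_ENDPOINT="$endpoint" INSTALL_K3S_SKIP_DOWNLOAD=true ./install.sh
```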

3.4 Using kubectl

Install the kubectl tool

# Download the kubectl binary as described in the official documentation, copy it to the k3s server node, and install it

install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

# The k3s installation generates the file /etc/rancher/k3s/k3s.yaml

mkdir -p ~/.kube/config/

cp /etc/rancher/k3s/k3s.yaml ~/.kube/config/

vi ~/.kube/config/k3s.yaml

# Change server to the domain name; keep port 6443, do not drop it

server: https://xxyf.rancher.com:6443

Check the cluster status

export KUBECONFIG=~/.kube/config/k3s.yaml

kubectl get pods --all-namespaces

kubectl get nodes

If a node's ROLES column shows <none>, label the node as a worker:

[root@server032 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

server032 Ready master 24m v1.18.20+k3s1

server033 Ready <none> 106s v1.18.20+k3s1

[root@server032 ~]#

[root@server032 ~]# kubectl label node server033 node-role.kubernetes.io/worker=worker

node/server033 labeled

[root@server032 ~]#

[root@server032 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

server032 Ready master 24m v1.18.20+k3s1

server033 Ready worker 2m13s v1.18.20+k3s1

4. Fetching the Rancher Chart with Helm (Internet-connected host)

- Download the Helm release helm-v3.9.1-linux-amd64.tar.gz from https://github.com/helm/helm/releases/tag/v3.9.1

- Extract the archive and move the binary into place

      tar -zxvf helm-v3.9.1-linux-amd64.tar.gz
      mv linux-amd64/helm /usr/local/bin/helm

- Fetch the Rancher chart

      helm repo add rancher-stable https://releases.rancher.com/server-charts/stable
      helm fetch rancher-stable/rancher --version=v2.5.14

- Render the Rancher chart, which produces a directory named rancher

      helm template rancher ./rancher-2.5.14.tgz --output-dir . \
        --no-hooks \
        --namespace cattle-system \
        --set hostname=xxyf.rancher.com \
        --set rancherImage=192.168.100.31:18888/rancher/rancher \
        --set ingress.tls.source=secret \
        --set systemDefaultRegistry=192.168.100.31:18888 \
        --set useBundledSystemChart=true \
        --set rancherImageTag=v2.5.14
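Before carrying the rendered manifests into the intranet, it can be worth checking that every image reference points at the private registry, because any other reference cannot be pulled offline. The helper below is a rough sketch of such a check; `find_external_images` is a hypothetical name, and the pattern only looks at plain `image:` keys in the YAML files.

```shell
#!/usr/bin/env bash
# Print every distinct image reference under <manifest-dir> that does not
# start with "<registry>/".
# Usage: find_external_images <manifest-dir> <registry>
find_external_images() {
  local dir=$1 registry=$2
  grep -rhoE 'image:[[:space:]]*"?[^"[:space:]]+' "$dir" \
    | sed -E 's/^image:[[:space:]]*"?//' \
    | sort -u \
    | awk -v prefix="${registry}/" 'index($0, prefix) != 1'
}

# Check the directory produced by `helm template` (skipped if it does not exist yet).
if [ -d ./rancher ]; then
  find_external_images ./rancher 192.168.100.31:18888
fi
```

An empty result suggests that all rendered images use the 192.168.100.31:18888 registry.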

5. Generating the TLS Certificate (Internet-connected host)

- One-step certificate generation script create_self-signed-cert.sh

      #!/bin/bash -e

      help ()
      {
          echo  ' ================================================================ '
          echo  ' --ssl-domain: primary domain for the SSL certificate; defaults to www.rancher.local if not given, and can be ignored if the service is accessed by IP;'
          echo  ' --ssl-trusted-ip: SSL certificates normally trust only requests made by domain name; to access the server by IP, add the IPs to the certificate as extensions, separated by commas;'
          echo  ' --ssl-trusted-domain: to allow access through additional domains, add them as extension domains (SSL_TRUSTED_DOMAIN), separated by commas;'
          echo  ' --ssl-size: SSL key size in bits, default 2048;'
          echo  ' --ssl-cn: country code (2-letter code), default CN;'
          echo  ' Usage example:'
          echo  ' ./create_self-signed-cert.sh --ssl-domain=www.test.com --ssl-trusted-domain=www.test2.com \ '
          echo  ' --ssl-trusted-ip=1.1.1.1,2.2.2.2,3.3.3.3 --ssl-size=2048 --ssl-date=3650'
          echo  ' ================================================================'
      }

      case "$1" in
          -h|--help) help; exit;;
      esac

      if [[ $1 == '' ]];then
          help;
          exit;
      fi

      CMDOPTS="$*"
      for OPTS in $CMDOPTS;
      do
          key=$(echo ${OPTS} | awk -F"=" '{print $1}' )
          value=$(echo ${OPTS} | awk -F"=" '{print $2}' )
          case "$key" in
              --ssl-domain) SSL_DOMAIN=$value ;;
              --ssl-trusted-ip) SSL_TRUSTED_IP=$value ;;
              --ssl-trusted-domain) SSL_TRUSTED_DOMAIN=$value ;;
              --ssl-size) SSL_SIZE=$value ;;
              --ssl-date) SSL_DATE=$value ;;
              --ca-date) CA_DATE=$value ;;
              --ssl-cn) CN=$value ;;
          esac
      done

      # CA settings
      CA_DATE=${CA_DATE:-3650}
      CA_KEY=${CA_KEY:-cakey.pem}
      CA_CERT=${CA_CERT:-cacerts.pem}
      CA_DOMAIN=cattle-ca

      # SSL settings
      SSL_CONFIG=${SSL_CONFIG:-$PWD/openssl.cnf}
      SSL_DOMAIN=${SSL_DOMAIN:-'www.rancher.local'}
      SSL_DATE=${SSL_DATE:-3650}
      SSL_SIZE=${SSL_SIZE:-2048}

      ## Country code (2-letter code), default CN
      CN=${CN:-CN}

      SSL_KEY=$SSL_DOMAIN.key
      SSL_CSR=$SSL_DOMAIN.csr
      SSL_CERT=$SSL_DOMAIN.crt

      echo -e "\033[32m ---------------------------- \033[0m"
      echo -e "\033[32m       | Generate SSL Cert |       \033[0m"
      echo -e "\033[32m ---------------------------- \033[0m"

      if [[ -e ./${CA_KEY} ]]; then
          echo -e "\033[32m ====> 1. Found an existing CA private key; backing up "${CA_KEY}" as "${CA_KEY}"-bak, then recreating it \033[0m"
          mv ${CA_KEY} "${CA_KEY}"-bak
          openssl genrsa -out ${CA_KEY} ${SSL_SIZE}
      else
          echo -e "\033[32m ====> 1. Generating a new CA private key ${CA_KEY} \033[0m"
          openssl genrsa -out ${CA_KEY} ${SSL_SIZE}
      fi

      if [[ -e ./${CA_CERT} ]]; then
          echo -e "\033[32m ====> 2. Found an existing CA certificate; backing up "${CA_CERT}" as "${CA_CERT}"-bak, then recreating it \033[0m"
          mv ${CA_CERT} "${CA_CERT}"-bak
          openssl req -x509 -sha256 -new -nodes -key ${CA_KEY} -days ${CA_DATE} -out ${CA_CERT} -subj "/C=${CN}/CN=${CA_DOMAIN}"
      else
          echo -e "\033[32m ====> 2. Generating a new CA certificate ${CA_CERT} \033[0m"
          openssl req -x509 -sha256 -new -nodes -key ${CA_KEY} -days ${CA_DATE} -out ${CA_CERT} -subj "/C=${CN}/CN=${CA_DOMAIN}"
      fi

      echo -e "\033[32m ====> 3. Generating the OpenSSL configuration file ${SSL_CONFIG} \033[0m"
      cat > ${SSL_CONFIG} <<EOM
      [req]
      req_extensions = v3_req
      distinguished_name = req_distinguished_name
      [req_distinguished_name]
      [ v3_req ]
      basicConstraints = CA:FALSE
      keyUsage = nonRepudiation, digitalSignature, keyEncipherment
      extendedKeyUsage = clientAuth, serverAuth
      EOM

      if [[ -n ${SSL_TRUSTED_IP} || -n ${SSL_TRUSTED_DOMAIN} || -n ${SSL_DOMAIN} ]]; then
          cat >> ${SSL_CONFIG} <<EOM
      subjectAltName = @alt_names
      [alt_names]
      EOM
          IFS=","
          dns=(${SSL_TRUSTED_DOMAIN})
          dns+=(${SSL_DOMAIN})
          for i in "${!dns[@]}"; do
            echo DNS.$((i+1)) = ${dns[$i]} >> ${SSL_CONFIG}
          done

          if [[ -n ${SSL_TRUSTED_IP} ]]; then
            ip=(${SSL_TRUSTED_IP})
            for i in "${!ip[@]}"; do
              echo IP.$((i+1)) = ${ip[$i]} >> ${SSL_CONFIG}
            done
          fi
      fi

      echo -e "\033[32m ====> 4. Generating the service SSL KEY ${SSL_KEY} \033[0m"
      openssl genrsa -out ${SSL_KEY} ${SSL_SIZE}

      echo -e "\033[32m ====> 5. Generating the service SSL CSR ${SSL_CSR} \033[0m"
      openssl req -sha256 -new -key ${SSL_KEY} -out ${SSL_CSR} -subj "/C=${CN}/CN=${SSL_DOMAIN}" -config ${SSL_CONFIG}

      echo -e "\033[32m ====> 6. Generating the service SSL CERT ${SSL_CERT} \033[0m"
      openssl x509 -sha256 -req -in ${SSL_CSR} -CA ${CA_CERT} \
          -CAkey ${CA_KEY} -CAcreateserial -out ${SSL_CERT} \
          -days ${SSL_DATE} -extensions v3_req \
          -extfile ${SSL_CONFIG}

      echo -e "\033[32m ====> 7. Certificate generation complete \033[0m"
      echo
      echo -e "\033[32m ====> 8. Printing the results in YAML format \033[0m"
      echo "----------------------------------------------------------"
      echo "ca_key: |"
      cat $CA_KEY | sed 's/^/  /'
      echo
      echo "ca_cert: |"
      cat $CA_CERT | sed 's/^/  /'
      echo
      echo "ssl_key: |"
      cat $SSL_KEY | sed 's/^/  /'
      echo
      echo "ssl_csr: |"
      cat $SSL_CSR | sed 's/^/  /'
      echo
      echo "ssl_cert: |"
      cat $SSL_CERT | sed 's/^/  /'
      echo

      echo -e "\033[32m ====> 9. Appending the CA certificate to the cert file \033[0m"
      cat ${CA_CERT} >> ${SSL_CERT}
      echo "ssl_cert: |"
      cat $SSL_CERT | sed 's/^/  /'
      echo

      echo -e "\033[32m ====> 10. Renaming the service certificate \033[0m"
      echo "cp ${SSL_DOMAIN}.key tls.key"
      cp ${SSL_DOMAIN}.key tls.key
      echo "cp ${SSL_DOMAIN}.crt tls.crt"
      cp ${SSL_DOMAIN}.crt tls.crt

- Generate the certificate, which produces tls.crt and tls.key

      ./create_self-signed-cert.sh \
        --ssl-domain=xxyf.rancher.com \
        --ssl-trusted-ip=192.168.100.32,192.168.100.146 \
        --ssl-trusted-domain=xxyf.rancher.com \
        --ssl-size=2048 \
        --ssl-date=3650
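The part of the script that matters most for Rancher is the subjectAltName list, which is built from the comma-separated domain and IP options. The sketch below isolates that step in a standalone, simplified form; `render_alt_names` is a hypothetical function written for this illustration, not an excerpt from the script.

```shell
#!/usr/bin/env bash
# Print an OpenSSL [alt_names] section from comma-separated domain and IP lists.
# The body runs in a subshell so the IFS change does not leak to the caller.
# Usage: render_alt_names <domains> <ips>
render_alt_names() (
  domains=$1
  ips=$2
  IFS=','
  echo "[alt_names]"
  i=1
  for d in $domains; do
    echo "DNS.$i = $d"
    i=$((i + 1))
  done
  i=1
  for a in $ips; do
    echo "IP.$i = $a"
    i=$((i + 1))
  done
)

render_alt_names "xxyf.rancher.com" "192.168.100.32,192.168.100.146"
```

Because the script appends `--ssl-domain` to the `--ssl-trusted-domain` list, passing the same name to both options, as in the invocation above, yields two identical DNS entries. That is harmless, and it matches the duplicated name visible in the agent log in section 7.6.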

6. Rancher 安装

所有步骤在 k3s server 节点执行(任选一个节点即可)

-

配置

k3s私有镜像仓库mkdir -p /etc/rancher/k3s/ vi /etc/rancher/k3s/registries.yamlmirrors: docker.io: endpoint: - "http://192.168.100.31:18888" "192.168.100.31:18888": endpoint: - "http://192.168.100.31:18888" configs: "192.168.100.31:18888": auth: username: admin password: xxx# k3s server 节点执行 systemctl restart k3s # k3s agent 节点执行(本例无) systemctl restart k3s-agent -

安装 tls 证书

kubectl create namespace cattle-system kubectl -n cattle-system create secret tls tls-rancher-ingress --cert=./tls.crt --key=./tls.key -

修改 Rancher 实例数

vi ./rancher/templates/deployment.yaml kind: Deployment apiVersion: apps/v1 metadata: name: rancher labels: app: rancher chart: rancher-2.5.14 heritage: Helm release: rancher spec: # 由默认 3 修改为 2(只有两个 k3s server 节点) replicas: 2 -

安装 Rancher

kubectl -n cattle-system apply -R -f ./rancher -

防火墙(若网络不通可临时配置测试)iptables -P INPUT ACCEPT iptables -P FORWARD ACCEPT iptables -P OUTPUT ACCEPT iptables -F -

日志查看

kubectl get pods -A -o wide kubectl logs -n cattle-system helm-operation-xxx # crictl 命令替代 docker 命令 k3s crictl ps -a k3s crictl images k3s crictl logs xxx -

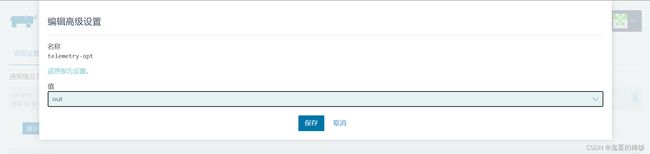

关闭遥测(离线环境需关闭)

7. Configuring the Downstream Cluster

7.1 Node Environment Configuration

- Disable SELinux

      sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config
      grep "SELINUX=disabled" /etc/selinux/config
      setenforce 0

- Disable the swap partition

      swapoff -a
      echo "vm.swappiness=0" >> /etc/sysctl.conf
      sysctl -p /etc/sysctl.conf
      sed -i 's$/dev/mapper/centos-swap$#/dev/mapper/centos-swap$g' /etc/fstab

- Configure system parameters

      echo """
      net.bridge.bridge-nf-call-ip6tables=1
      net.bridge.bridge-nf-call-iptables=1
      net.ipv4.ip_forward=1
      """ >> /etc/sysctl.conf
      modprobe br_netfilter
      sysctl -p

- Set the host time zone (do NOT run: time is synchronized through NTP)

      timedatectl set-timezone Asia/Shanghai
      date -s "2022-07-29 11:09:00"
      hwclock --set --date "2022-07-29 11:09:00"
      hwclock --hctosys
      hwclock -w
      init 6

- Configure DNS

      echo "nameserver 114.114.114.114" > /etc/resolv.conf
      systemctl daemon-reload
      systemctl restart network

- Configure hosts

      cat << EOF >> /etc/hosts
      192.168.100.32 server032 xxyf.rancher.com
      192.168.100.33 server033
      192.168.100.34 server034
      192.168.100.35 server035
      192.168.100.146 server146 xxyf.rancher.com
      192.168.100.147 server147
      EOF

- Configure the firewall

      firewall-cmd --permanent --add-port 22/tcp
      firewall-cmd --permanent --add-port 80/tcp
      firewall-cmd --permanent --add-port 443/tcp
      firewall-cmd --permanent --add-port 2376/tcp
      firewall-cmd --permanent --add-port 2379/tcp
      firewall-cmd --permanent --add-port 2380/tcp
      firewall-cmd --permanent --add-port 6443/tcp
      firewall-cmd --permanent --add-port 6444/tcp
      firewall-cmd --permanent --add-port 8472/udp
      firewall-cmd --permanent --add-port 9099/tcp
      firewall-cmd --permanent --add-port 9345/tcp
      firewall-cmd --permanent --add-port 10249/tcp
      firewall-cmd --permanent --add-port 10250/tcp
      firewall-cmd --permanent --add-port 10254/tcp
      firewall-cmd --permanent --add-port 10256/tcp
      firewall-cmd --permanent --add-port 30935/tcp
      firewall-cmd --permanent --add-port 31477/tcp
      firewall-cmd --permanent --zone=trusted --add-source=10.42.0.0/16
      firewall-cmd --permanent --zone=trusted --add-source=10.43.0.0/16
      firewall-cmd --reload

7.2 Installing Docker

- Copy the offline packages and their dependencies to any directory on the host and install them

      tar -zxvf docker-rpms.tar.gz
      rpm -ivh docker-rpms/*.rpm --nodeps --force

- Start Docker and enable it at boot

      systemctl start docker
      systemctl enable docker

- Check that Docker is running

      $ systemctl status docker
      ● docker.service - Docker Application Container Engine
         Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)
         Active: active (running) since Fri 2022-06-24 01:49:42 EDT; 24min ago
           Docs: https://docs.docker.com
       Main PID: 9949 (dockerd)
         CGroup: /system.slice/docker.service
                 └─9949 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

- Check the Docker version

      $ docker -v
      Docker version 20.10.17, build 100c701

7.3 私有镜像仓库配置

-

配置 docker 私有仓库地址

mkdir -p /etc/docker tee /etc/docker/daemon.json << EOF { "insecure-registries": ["192.168.100.31:18888"] } EOF -

重启 docker 使生效

systemctl daemon-reload systemctl restart docker systemctl enable docker -

登录私有仓库

$ docker login 192.168.100.31:18888 Username: admin Password: WARNING! Your password will be stored unencrypted in /root/.docker/config.json. Configure a credential helper to remove this warning. See https://docs.docker.com/engine/reference/commandline/login/#credentials-store Login Succeeded

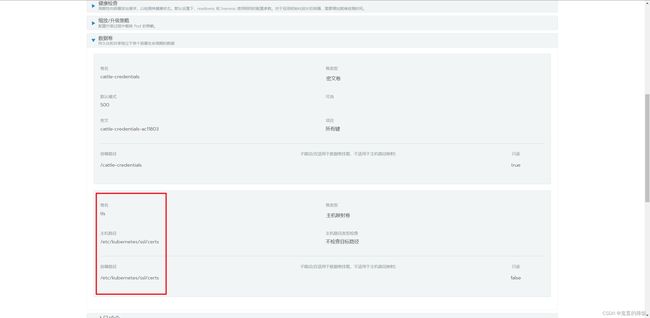

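7.4 Configuring the TLS Certificate

- Create the directory

      mkdir -p /etc/kubernetes/ssl/certs

- Copy the previously generated tls.crt certificate file to every cluster node

      cp tls.crt /etc/kubernetes/ssl/certs/

7.5 Creating a Custom Cluster

Open https://xxyf.rancher.com to enter the Rancher UI, then create a custom cluster.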

7.6 cattle-cluster-agent 报错解决

-

报错信息

INFO: Arguments: --server https://xxyf.rancher.com --token REDACTED --etcd --controlplane --worker INFO: Environment: CATTLE_ADDRESS=192.168.100.34 CATTLE_INTERNAL_ADDRESS= CATTLE_NODE_NAME=server034 CATTLE_ROLE=,etcd,worker,controlplane CATTLE_SERVER=https://xxyf.rancher.com CATTLE_TOKEN=REDACTED INFO: Using resolv.conf: nameserver 114.114.114.114 INFO: https://xxyf.rancher.com/ping is accessible INFO: xxyf.rancher.com resolves to 192.168.100.32 time="2022-07-27T23:34:26Z" level=info msg="Listening on /tmp/log.sock" time="2022-07-27T23:34:26Z" level=info msg="Rancher agent version 52a8de7b6-dirty is starting" time="2022-07-27T23:34:26Z" level=info msg="Option requestedHostname=server034" time="2022-07-27T23:34:26Z" level=info msg="Option customConfig=map[address:192.168.100.34 internalAddress: label:map[] roles:[etcd worker controlplane] taints:[]]" time="2022-07-27T23:34:26Z" level=info msg="Option etcd=true" time="2022-07-27T23:34:26Z" level=info msg="Option controlPlane=true" time="2022-07-27T23:34:26Z" level=info msg="Option worker=true" time="2022-07-27T23:34:26Z" level=info msg="Certificate details from https://xxyf.rancher.com" time="2022-07-27T23:34:26Z" level=info msg="Certificate #0 (https://xxyf.rancher.com)" time="2022-07-27T23:34:26Z" level=info msg="Subject: CN=xxyf.rancher.com,C=CN" time="2022-07-27T23:34:26Z" level=info msg="Issuer: CN=cattle-ca,C=CN" time="2022-07-27T23:34:26Z" level=info msg="IsCA: false" time="2022-07-27T23:34:26Z" level=info msg="DNS Names: [xxyf.rancher.com xxyf.rancher.com]" time="2022-07-27T23:34:26Z" level=info msg="IPAddresses: [192.168.100.32 192.168.100.33]" time="2022-07-27T23:34:26Z" level=info msg="NotBefore: 2022-07-26 04:52:29 +0000 UTC" time="2022-07-27T23:34:26Z" level=info msg="NotAfter: 2032-07-23 04:52:29 +0000 UTC" time="2022-07-27T23:34:26Z" level=info msg="SignatureAlgorithm: SHA256-RSA" time="2022-07-27T23:34:26Z" level=info msg="PublicKeyAlgorithm: RSA" time="2022-07-27T23:34:26Z" level=info 
msg="Certificate #1 (https://xxyf.rancher.com)" time="2022-07-27T23:34:26Z" level=info msg="Subject: CN=cattle-ca,C=CN" time="2022-07-27T23:34:26Z" level=info msg="Issuer: CN=cattle-ca,C=CN" time="2022-07-27T23:34:26Z" level=info msg="IsCA: true" time="2022-07-27T23:34:26Z" level=info msg="DNS Names:" time="2022-07-27T23:34:26Z" level=info msg="IPAddresses:" time="2022-07-27T23:34:26Z" level=info msg="NotBefore: 2022-07-26 04:52:29 +0000 UTC" time="2022-07-27T23:34:26Z" level=info msg="NotAfter: 2032-07-23 04:52:29 +0000 UTC" time="2022-07-27T23:34:26Z" level=info msg="SignatureAlgorithm: SHA256-RSA" time="2022-07-27T23:34:26Z" level=info msg="PublicKeyAlgorithm: RSA" time="2022-07-27T23:34:26Z" level=fatal msg="Certificate chain is not complete, please check if all needed intermediate certificates are included in the server certificate (in the correct order) and if the cacerts setting in Rancher either contains the correct CA certificate (in the case of using self signed certificates) or is empty (in the case of using a certificate signed by a recognized CA). Certificate information is displayed above. error: Get \"https://xxyf.rancher.com\": x509: certificate signed by unknown authority" -

添加主机域名解析

- 添加主机目录映射

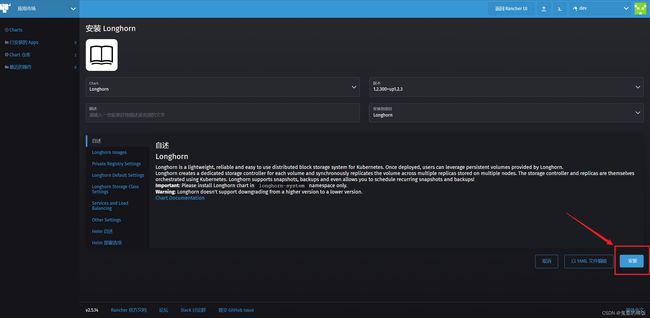

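8. Installing Longhorn

Longhorn provides a storage class for clusters managed by Rancher. Container data can be persisted by creating a PVC that uses it.

8.1 Installing iSCSI on the Host Nodes

- On a machine with Internet access, download iscsi-initiator-utils and its dependencies into the iscsi directory

      repotrack -p iscsi iscsi-initiator-utils

- Pack the directory into a tar archive

      tar zcvf iscsi-rpms.tar.gz iscsi/

- Copy the archive to every Rancher worker node and extract it

      tar -zxvf iscsi-rpms.tar.gz

- Install the packages

      rpm -ivh iscsi/*.rpm --nodeps --force

8.2 Installing from the App Catalog

Install with the default options. It is recommended to create a project named Longhorn first and install into that project.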

9. Nginx Load Balancer Configuration

- Pull the nginx image

      docker pull 192.168.100.31:18888/library/nginx:latest

- Create the nginx.conf configuration file

      vi /etc/nginx.conf

      worker_processes 4;
      worker_rlimit_nofile 40000;

      events {
          worker_connections 8192;
      }

      stream {
          upstream rancher_servers_http {
              least_conn;
              server 192.168.100.32:80 max_fails=3 fail_timeout=5s;
              server 192.168.100.146:80 max_fails=3 fail_timeout=5s;
          }
          server {
              listen 80;
              proxy_pass rancher_servers_http;
          }

          upstream rancher_servers_https {
              least_conn;
              server 192.168.100.32:443 max_fails=3 fail_timeout=5s;
              server 192.168.100.146:443 max_fails=3 fail_timeout=5s;
          }
          server {
              listen 443;
              proxy_pass rancher_servers_https;
          }
      }

- Start the container with the configuration file mounted

      docker run -d --privileged --restart=unless-stopped \
        -p 80:80 -p 443:443 \
        -v /etc/nginx.conf:/etc/nginx/nginx.conf \
        192.168.100.31:18888/library/nginx:latest
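If Rancher Server nodes are added or replaced later, the two upstream blocks have to be edited in step. One option, shown here only as a sketch, is to generate the stream configuration from the list of backend addresses; `render_nginx_conf` is a hypothetical helper, and the tuning values are simply copied from the configuration above.

```shell
#!/usr/bin/env bash
# Print an nginx stream configuration that balances ports 80 and 443
# across the given Rancher Server addresses.
# Usage: render_nginx_conf <backend-ip>...
render_nginx_conf() {
  local port name ip
  echo "worker_processes 4;"
  echo "worker_rlimit_nofile 40000;"
  echo
  echo "events {"
  echo "    worker_connections 8192;"
  echo "}"
  echo
  echo "stream {"
  for port in 80 443; do
    if [ "$port" = 80 ]; then name=rancher_servers_http; else name=rancher_servers_https; fi
    echo "    upstream $name {"
    echo "        least_conn;"
    for ip in "$@"; do
      echo "        server $ip:$port max_fails=3 fail_timeout=5s;"
    done
    echo "    }"
    echo "    server {"
    echo "        listen $port;"
    echo "        proxy_pass $name;"
    echo "    }"
  done
  echo "}"
}

render_nginx_conf 192.168.100.32 192.168.100.146
```

Redirecting the output to /etc/nginx.conf and restarting the container would apply a changed backend list.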

10. Rancher Node Cleanup Script

#!/bin/bash

KUBE_SVC='

kubelet

kube-scheduler

kube-proxy

kube-controller-manager

kube-apiserver

'

for kube_svc in ${KUBE_SVC};

do

# Stop the service

if [[ `systemctl is-active ${kube_svc}` == 'active' ]]; then

systemctl stop ${kube_svc}

fi

# Disable the service at boot

if [[ `systemctl is-enabled ${kube_svc}` == 'enabled' ]]; then

systemctl disable ${kube_svc}

fi

done

# Stop all containers

docker stop $(docker ps -aq)

# Remove all containers

docker rm -f $(docker ps -qa)

# Remove all container volumes

docker volume rm $(docker volume ls -q)

# Unmount mounted directories

for mount in $(mount | grep tmpfs | grep '/var/lib/kubelet' | awk '{ print $3 }') /var/lib/kubelet /var/lib/rancher;

do

umount $mount;

done

# Back up directories

mv /etc/kubernetes /etc/kubernetes-bak-$(date +"%Y%m%d%H%M")

mv /var/lib/etcd /var/lib/etcd-bak-$(date +"%Y%m%d%H%M")

mv /var/lib/rancher /var/lib/rancher-bak-$(date +"%Y%m%d%H%M")

mv /opt/rke /opt/rke-bak-$(date +"%Y%m%d%H%M")

# Remove leftover paths

rm -rf /etc/ceph \
  /etc/cni \
  /opt/cni \
  /run/secrets/kubernetes.io \
  /run/calico \
  /run/flannel \
  /var/lib/calico \
  /var/lib/cni \
  /var/lib/kubelet \
  /var/log/containers \
  /var/log/kube-audit \
  /var/log/pods \
  /var/run/calico \
  /usr/libexec/kubernetes

# Clean up network interfaces

no_del_net_inter='

lo

docker0

eth

ens

bond

'

network_interface=`ls /sys/class/net`

for net_inter in $network_interface;

do

if ! echo "${no_del_net_inter}" | grep -qE ${net_inter:0:3}; then

ip link delete $net_inter

fi

done

# Kill leftover processes

port_list='

80

443

6443

2376

2379

2380

8472

9099

10250

10254

'

for port in $port_list;

do

pid=`netstat -atlnup | grep $port | awk '{print $7}' | awk -F '/' '{print $1}' | grep -v - | sort -rnk2 | uniq`

if [[ -n $pid ]]; then

kill -9 $pid

fi

done

kube_pid=`ps -ef | grep -v grep | grep kube | awk '{print $2}'`

if [[ -n $kube_pid ]]; then

kill -9 $kube_pid

fi

# Flush the iptables tables

## Note: if the node has custom iptables rules, run the following commands with caution

sudo iptables --flush

sudo iptables --flush --table nat

sudo iptables --flush --table filter

sudo iptables --table nat --delete-chain

sudo iptables --table filter --delete-chain

systemctl restart docker
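One caveat about the process cleanup: filtering netstat output with a plain `grep $port` also matches longer port numbers (80 matches 8080 and 2380), so unrelated processes could be killed. A stricter filter might look like the sketch below. `pids_on_port` is a hypothetical helper and the sample lines are made up to show the expected input layout.

```shell
#!/usr/bin/env bash
# Read `netstat -tulnp`-style lines on stdin and print the PIDs bound to
# exactly the given local port.
# Usage: netstat -tulnp | pids_on_port <port>
pids_on_port() {
  awk -v port="$1" '
    {
      # Field 4 is the local address (0.0.0.0:80, :::443, ...); the port follows the last colon.
      n = split($4, addr, ":")
      if (addr[n] != port) next
      # The PID/Program column is the first later field that looks like "<digits>/".
      for (i = 5; i <= NF; i++) {
        if ($i ~ /^[0-9]+\//) {
          split($i, proc, "/")
          print proc[1]
          break
        }
      }
    }' | sort -un
}

# Demo with made-up sample lines instead of live netstat output.
pids_on_port 80 <<'EOF'
tcp        0      0 0.0.0.0:80          0.0.0.0:*     LISTEN      1201/nginx: master
tcp        0      0 0.0.0.0:8080        0.0.0.0:*     LISTEN      3344/java
tcp6       0      0 :::80               :::*          LISTEN      1201/nginx: master
udp        0      0 0.0.0.0:8472        0.0.0.0:*                 -
EOF
```

In the loop above, the pipeline assigned to `pid` could then be replaced by `netstat -tulnp | pids_on_port "$port"`.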

11. Error Summary

- iptables error

2022/07/29 03:56:17 [INFO] kontainerdriver rancherkubernetesengine stopped

2022/07/29 03:56:17 [ERROR] error syncing 'c-q8krf': handler cluster-provisioner-controller: [Failed to start [rke-etcd-port-listener] container on host [192.168.100.34]: Error response from daemon: driver failed programming external connectivity on endpoint rke-etcd-port-listener (f50d03f943d38a0b5eb75c08481b16fdf3b7025a953f3242c005fc7461b05c64): (COMMAND_FAILED: '/usr/sbin/iptables -w10 -t nat -A DOCKER -p tcp -d 0/0 --dport 2380 -j DNAT --to-destination 172.17.0.2:1337 ! -i docker0' failed: Another app is currently holding the xtables lock; still 1s 0us time ahead to have a chance to grab the lock...

Another app is currently holding the xtables lock. Stopped waiting after 10s.

)], requeuing

Fix: restart the firewall first, then restart Docker

systemctl restart firewalld

systemctl restart docker

- Certificate error

2022-07-29 04:04:08.025220 I | embed: rejected connection from "192.168.100.34:42768" (error "tls: failed to verify client's certificate: x509: certificate signed by unknown authority (possibly because of \"crypto/rsa: verification error\" while trying to verify candidate authority certificate \"kube-ca\")", ServerName "")

Fix: copy the certificate to all nodes, including the downstream cluster nodes; see the steps in section 7.4