多机多卡技术测试-单节点多DCU(任务划分型-无数据传输矩阵乘法)

#include Makefile

SOURCE =$(wildcard *.cpp)

OBJS =$(patsubst %.cpp,%,$(SOURCE))

HIPCC = /opt/rocm/bin/hipcc

GCC=/opt/rh/devtoolset-7/root/usr/bin/gcc

all:$(OBJS)

$(OBJS):%:%.cpp

$(HIPCC) $^ -o $@

run:

./matrixMaxDCU 2 4 4 4 1

clean:

-rm $(OBJS)

matrixMaxDCU.cpp

#include "common.h"

#include > > (d_A[i], d_B[i], d_C[i], m, k, k, n, m, n);

hipLaunchKernelGGL(matrixMultiplyShared, grid, block, 0, stream[i], d_A[i], d_B[i], d_C[i], m, k, k, n, m, n);

CHECK(hipMemcpyAsync(gpuRef[i], d_C[i], Cxy * sizeof(float), hipMemcpyDeviceToHost,

stream[i]));

}

// synchronize streams

for (int i = 0; i < ngpus; i++)

{

CHECK(hipSetDevice(i));

CHECK(hipStreamSynchronize(stream[i]));

}

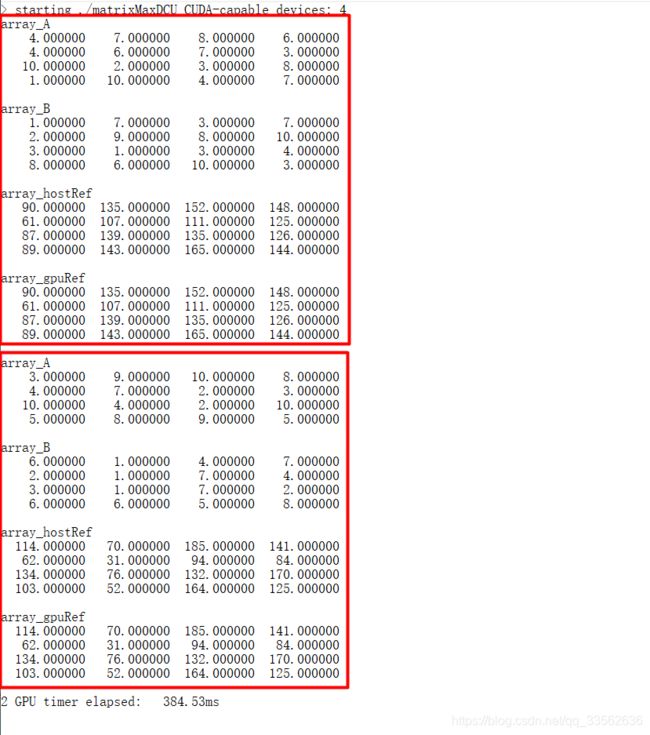

const char* array_A = "array_A";

const char* array_B = "array_B";

const char* array_hostRef = "array_hostRef";

const char* array_gpuRef = "array_gpuRef";

if (ifprint)

for (int i = 0; i < ngpus; i++)

{

CHECK(hipSetDevice(i));

// printData(h_A[i], iSize);

printMatrix(array_A, h_A[i], m, k);

printMatrix(array_B, h_B[i], k, n);

printMatrix(array_hostRef, hostRef[i], m, n);

printMatrix(array_gpuRef, gpuRef[i], m, n);

}

double iElaps = seconds() - iStart;

printf("%d GPU timer elapsed: %8.2fms \n", ngpus, iElaps * 1000.0);

for (int i = 0; i < ngpus; i++)

{

CHECK(hipSetDevice(i));

checkResult(hostRef[i], gpuRef[i], Cxy);

}

for (int i = 0; i < ngpus; i++)

{

CHECK(hipSetDevice(i));

CHECK(hipFree(d_A[i]));

CHECK(hipFree(d_B[i]));

CHECK(hipFree(d_C[i]));

CHECK(hipHostFree(h_A[i]));

CHECK(hipHostFree(h_B[i]));

CHECK(hipHostFree(hostRef[i]));

CHECK(hipHostFree(gpuRef[i]));

CHECK(hipStreamDestroy(stream[i]));

CHECK(hipDeviceReset());

}

free(d_A);

free(d_B);

free(d_C);

free(h_A);

free(h_B);

free(hostRef);

free(gpuRef);

free(stream);

return 0;

}