Handwritten Digit Recognition

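Machine learning models: run with the third-party library scikit-learn (sklearn), with the dataset split into a training set and a test set at a 7:3 ratio.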
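Below is a minimal sketch of the 7:3 split on scikit-learn's bundled digits dataset. The `random_state=0` and `stratify` arguments are assumptions that the original code does not use. Fixing the seed makes the scores reproducible between runs. Stratifying keeps the ten digit classes in the same proportions in both sets.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split

digits = load_digits()  # 1797 8x8 grayscale digit images, flattened to 64 features
x_train, x_test, y_train, y_test = train_test_split(
    digits.data,
    digits.target,
    test_size=0.3,           # 30% test, 70% training
    random_state=0,          # assumption: fixed seed so the split is reproducible
    stratify=digits.target,  # assumption: same class proportions in both sets
)
print(x_train.shape, x_test.shape)  # (1257, 64) (540, 64)
```

scikit-learn rounds the test size up (ceil(0.3 × 1797) = 540), and the remaining 1257 samples go to training.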

1. Decision tree

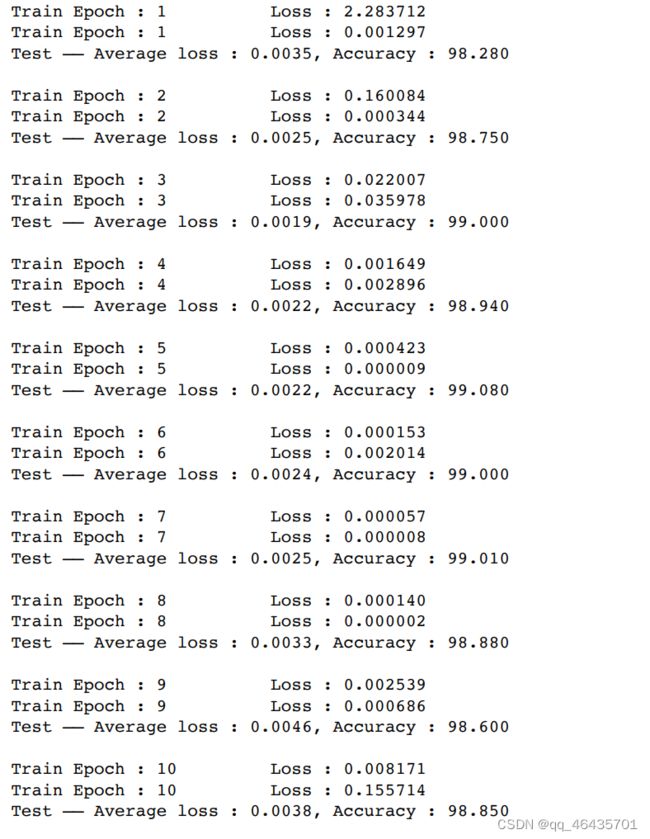

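Neural network model: built with PyTorch. The classic CNN model from the official website works very well, reaching over 99% accuracy. I also built a new network structure with two convolutional layers. The first has 10 output channels and a kernel size of 5. The second takes those 10 channels as input and produces 20 output channels. Two fully connected layers follow. The first maps 2000 input features (20 channels × 10 × 10 feature map) to 500 outputs, and the second maps those 500 inputs to 10 outputs, one per digit. This network also reaches an accuracy of about 98% to 99%.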
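The 2000 input features of the first fully connected layer follow from the feature-map sizes. The sketch below works through that arithmetic under these assumptions:

- inputs are 28×28 MNIST images;
- the convolutions use stride 1 and no padding;
- a single 2×2 max pooling with stride 2 follows the first convolution, matching the `Digit` class in the code.

The helper `conv_out` is hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution or pooling window (floor division, as in PyTorch)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 28                    # MNIST images are 28x28
size = conv_out(size, 5)     # conv1, 5x5 kernel: 28 -> 24
size = conv_out(size, 2, 2)  # 2x2 max pooling, stride 2: 24 -> 12
size = conv_out(size, 3)     # conv2, 3x3 kernel: 12 -> 10
fc1_in = 20 * size * size    # conv2 produces 20 channels
print(size, fc1_in)  # 10 2000
```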

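Summary: among the classical machine learning models, the support vector machine classifies best and the decision tree worst. The neural network outperforms all of them, with accuracy above 99%.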

Code:

Machine learning models:

```python
from sklearn import preprocessing
from sklearn.tree import DecisionTreeRegressor
from sklearn import svm
from sklearn import tree
from sklearn.linear_model import LogisticRegression
import matplotlib.pyplot as plt
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score, make_scorer
from sklearn.datasets import load_digits
from sklearn.preprocessing import StandardScaler
from sklearn.model_selection import GridSearchCV

# Split the dataset: 70% training, 30% test
digits = load_digits()
x_train, x_test, y_train, y_test = train_test_split(digits.data, digits.target, test_size=0.3)

# Decision tree
clf = tree.DecisionTreeClassifier(criterion="entropy")

# Fit on the training set and compute the accuracy on the test set
clf = clf.fit(x_train, y_train)
score = clf.score(x_test, y_test)
print(score)
```

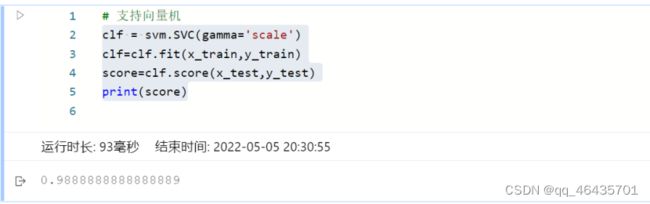
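The decision tree above uses `criterion="entropy"`. It chooses splits that maximize information gain, which is the drop in Shannon entropy of the class labels from a node to its children. Below is a minimal pure-Python sketch of that quantity. The helper names and toy labels are illustrative only; they are not from the original code.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(parent, left, right):
    """Entropy of the parent node minus the size-weighted entropy of its two children."""
    n = len(parent)
    children = len(left) / n * entropy(left) + len(right) / n * entropy(right)
    return entropy(parent) - children


parent = [0, 0, 1, 1]
print(entropy(parent))                           # 1.0
print(information_gain(parent, [0, 0], [1, 1]))  # 1.0: a perfect split
print(information_gain(parent, [0, 1], [0, 1]))  # 0.0: the split separates nothing
```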

```python
# Support vector machine
clf = svm.SVC(gamma="scale")
clf = clf.fit(x_train, y_train)
score = clf.score(x_test, y_test)
print(score)
```

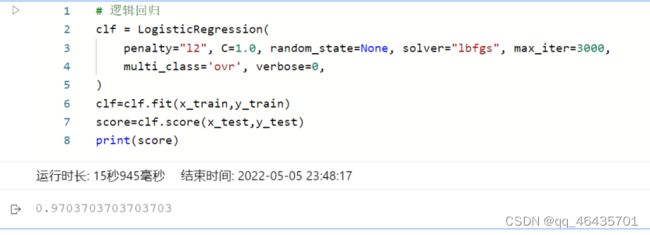
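`SVC` uses the RBF kernel by default, `K(x, x') = exp(-gamma * ||x - x'||^2)`. With `gamma="scale"`, scikit-learn sets `gamma = 1 / (n_features * X.var())`, where the variance is taken over all entries of the training matrix. Below is a minimal NumPy sketch of both formulas on a toy matrix. The data is made up for illustration, and `rbf_kernel` here is a hand-written helper rather than scikit-learn's function.

```python
import numpy as np

# Toy training matrix: 3 samples, 2 features (illustrative values only)
X = np.array([[0.0, 0.0],
              [1.0, 1.0],
              [2.0, 2.0]])

# gamma="scale": 1 / (n_features * variance of all entries of X)
gamma = 1.0 / (X.shape[1] * X.var())


def rbf_kernel(a, b, gamma):
    """RBF kernel value exp(-gamma * ||a - b||^2)."""
    return np.exp(-gamma * np.sum((a - b) ** 2))


print(gamma)                          # 0.75
print(rbf_kernel(X[0], X[0], gamma))  # 1.0: identical points
print(rbf_kernel(X[0], X[1], gamma))  # exp(-1.5), about 0.2231
```

Scaling gamma by the data's variance keeps the kernel width in proportion to the spread of the features. For the digits data, whose pixel values range from 0 to 16, that spread is quite different from unit scale.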

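```python
# Logistic regression (one-vs-rest)
# Recent scikit-learn releases deprecate LogisticRegression(multi_class="ovr"),
# so the same one-vs-rest scheme is expressed with OneVsRestClassifier here.
from sklearn.multiclass import OneVsRestClassifier

clf = OneVsRestClassifier(LogisticRegression(
    penalty="l2", C=1.0, random_state=None, solver="lbfgs", max_iter=3000, verbose=0,
))
clf = clf.fit(x_train, y_train)
score = clf.score(x_test, y_test)
print(score)
```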
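Because `train_test_split` is called without a seed, the three scores above change from run to run. The sketch below compares the same three classifiers with 5-fold cross-validation instead, using scikit-learn's `cross_val_score`. The fold count and the tree's `random_state` are choices made here, not taken from the original.

```python
from sklearn import svm, tree
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.multiclass import OneVsRestClassifier

digits = load_digits()
models = {
    "decision tree": tree.DecisionTreeClassifier(criterion="entropy", random_state=0),
    "SVM": svm.SVC(gamma="scale"),
    "logistic regression": OneVsRestClassifier(LogisticRegression(max_iter=3000)),
}

results = {}
for name, clf in models.items():
    # 5-fold cross-validated accuracy: mean and spread over the folds
    scores = cross_val_score(clf, digits.data, digits.target, cv=5)
    results[name] = scores.mean()
    print(f"{name}: {scores.mean():.3f} +/- {scores.std():.3f}")
```

Averaging over folds gives a steadier basis for ranking the models than a single random split.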

Neural network model:

```python
# Import libraries
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision import datasets, transforms, models
```

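```python
# Define hyperparameters
BATCH_SIZE = 32  # number of samples per batch
DEVICE = torch.device("cuda" if torch.cuda.is_available() else "cpu")  # train on the GPU if available, otherwise the CPU
EPOCHS = 10  # number of passes over the training set
```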
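With these settings, the number of optimizer steps is easy to work out. MNIST has 60,000 training images and 10,000 test images. The quick calculation below is plain Python and does not depend on PyTorch.

```python
import math

BATCH_SIZE = 32
EPOCHS = 10
N_TRAIN, N_TEST = 60_000, 10_000  # MNIST training / test set sizes

train_batches = math.ceil(N_TRAIN / BATCH_SIZE)  # the last batch may be smaller
test_batches = math.ceil(N_TEST / BATCH_SIZE)
print(train_batches, test_batches, train_batches * EPOCHS)  # 1875 313 18750
```

A logging condition of the form `batch_index % N == 0` inside the training loop therefore fires more than once per epoch only when N is smaller than 1875.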

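```python
# Build the preprocessing pipeline for the images
pipeline = transforms.Compose([
    transforms.ToTensor(),  # convert the image to a tensor with values in [0, 1]
    transforms.Normalize((0.1307,), (0.3081,))  # standardize with the MNIST mean (0.1307) and std (0.3081)
])
```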
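`ToTensor` maps 8-bit pixel values from the range 0 to 255 into [0, 1]. `Normalize(mean, std)` then computes `(x - mean) / std` for each channel. This is input standardization. It does not constrain the model the way regularization does. Below is a plain-Python sketch of what happens to a single pixel, using the MNIST mean and standard deviation from the pipeline. `normalize_pixel` is a hypothetical helper.

```python
MEAN, STD = 0.1307, 0.3081  # MNIST mean and standard deviation, as in the pipeline


def normalize_pixel(raw):
    """Mimic ToTensor + Normalize for one 8-bit grayscale pixel."""
    x = raw / 255.0          # ToTensor: 0..255 -> 0.0..1.0
    return (x - MEAN) / STD  # Normalize: roughly zero mean, unit std over MNIST


print(round(normalize_pixel(0), 4))    # -0.4242 (black background)
print(round(normalize_pixel(255), 4))  # 2.8215 (white stroke)
```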

```python
# Download and load the datasets
from torch.utils.data import DataLoader

train_set = datasets.MNIST("data", train=True, download=True, transform=pipeline)
test_set = datasets.MNIST("data", train=False, download=True, transform=pipeline)

# Wrap the datasets in data loaders
train_loader = DataLoader(train_set, batch_size=BATCH_SIZE, shuffle=True)
test_loader = DataLoader(test_set, batch_size=BATCH_SIZE, shuffle=False)  # evaluation does not need shuffling
```

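```python
# My own network model
class Digit(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 10, 5)  # 1 input channel (grayscale), 10 output channels, 5x5 kernel
        self.conv2 = nn.Conv2d(10, 20, 3)  # 10 input channels, 20 output channels, 3x3 kernel
        self.fc1 = nn.Linear(20 * 10 * 10, 500)  # input features, output features
        self.fc2 = nn.Linear(500, 10)  # input features, output features (10 digit classes)

    def forward(self, x):
        input_size = x.size(0)  # batch_size
        x = self.conv1(x)  # (batch, 1, 28, 28) -> (batch, 10, 24, 24)
        x = F.relu(x)
        x = F.max_pool2d(x, 2, 2)  # pooling layer: -> (batch, 10, 12, 12)
        x = self.conv2(x)  # -> (batch, 20, 10, 10)
        x = F.relu(x)
        x = x.view(input_size, -1)  # flatten: -> (batch, 2000)
        x = self.fc1(x)
        x = F.relu(x)
        x = self.fc2(x)
        output = F.log_softmax(x, dim=1)
        return output
```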
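`Digit` ends with `log_softmax`, while the training code uses `F.cross_entropy`, which applies `log_softmax` internally. The pairing still gives the correct loss because `log_softmax` is idempotent. The log-probabilities it returns already have a logsumexp of 0, so applying it a second time changes nothing. Below is a NumPy sketch that checks this on random logits. The `log_softmax` helper is written out by hand; it is not PyTorch's.

```python
import numpy as np


def log_softmax(z):
    """Numerically stable log-softmax along the last axis."""
    shifted = z - z.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 10))  # a batch of 4 samples, 10 classes
once = log_softmax(logits)
twice = log_softmax(once)  # what cross_entropy effectively computes on Digit's output

print(np.allclose(once, twice))  # True
```

With a model that already outputs log-probabilities, `F.nll_loss(output, target)` expresses the same loss more directly.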

```python
# Network model from the official website
class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        self.layer = nn.Sequential(
            nn.Conv2d(1, 16, 5),  # 16*24*24
            nn.ReLU(),
            nn.Conv2d(16, 32, 5),  # 32*20*20
            nn.ReLU(),
            nn.MaxPool2d(2, 2),  # 32*10*10
            nn.Conv2d(32, 64, 5),  # 64*6*6
            nn.ReLU(),
            nn.MaxPool2d(2, 2)  # 64*3*3
        )
        self.fc_layer = nn.Sequential(
            nn.Linear(64 * 3 * 3, 100),
            nn.ReLU(),
            nn.Linear(100, 10)  # raw scores (logits) for the 10 digits
        )

    def forward(self, x):
        out = self.layer(x)
        out = out.view(-1, 64 * 3 * 3)  # flatten
        out = self.fc_layer(out)
        return out
```
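The shape comments in `CNN` can be checked with the output-size rule for an unpadded layer, `(size - kernel) // stride + 1`. The sketch below assumes 28×28 inputs. The `LAYERS` list is a hypothetical transcription of the `nn.Sequential` in `CNN`.

```python
# (description, out_channels or None for pooling, kernel, stride) for each layer in CNN.layer
LAYERS = [
    ("conv 1->16, 5x5", 16, 5, 1),
    ("conv 16->32, 5x5", 32, 5, 1),
    ("max pool 2x2", None, 2, 2),
    ("conv 32->64, 5x5", 64, 5, 1),
    ("max pool 2x2", None, 2, 2),
]

size, channels = 28, 1
for name, out_channels, kernel, stride in LAYERS:
    size = (size - kernel) // stride + 1
    if out_channels is not None:
        channels = out_channels  # pooling keeps the channel count
    print(f"{name}: {channels}*{size}*{size}")

flat = channels * size * size
print(flat)  # 576 = 64 * 3 * 3, the input size of the first Linear layer
```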

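```python
# Define the model and the optimizer (use Digit() instead of CNN() to train the custom network)
model = CNN().to(DEVICE)  # move the model to the same device as the data (GPU if available)
print(model)
optimizer = optim.Adam(model.parameters(), lr=0.001)
```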
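`optim.Adam` keeps running averages of the gradient and of its square, and scales each step by their ratio. Below is a NumPy sketch of one update for a single parameter. It uses PyTorch's default betas (0.9, 0.999) and eps (1e-8), together with the lr of 0.001 set above. The helper `adam_step` is illustrative and is not PyTorch's implementation.

```python
import numpy as np


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; t is the 1-based step count. Returns (param, m, v)."""
    m = beta1 * m + (1 - beta1) * grad       # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2  # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)             # bias correction for the zero initialization
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v


param, m, v = np.array(1.0), 0.0, 0.0
param, m, v = adam_step(param, np.array(5.0), m, v, t=1)
print(param)  # about 0.999: the first step moves by roughly lr, whatever the gradient's size
```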

```python
# Define the training method
def train_model(model, device, train_loader, optimizer, epoch):
    # Put the model in training mode
    model.train()
    for batch_index, (data, target) in enumerate(train_loader):
        data, target = data.to(device), target.to(device)
        # Reset the gradients to zero
        optimizer.zero_grad()
        # Forward pass
        output = model(data)
        loss = F.cross_entropy(output, target)
        # Backpropagation
        loss.backward()
        # Update the parameters
        optimizer.step()
        # One epoch is 1875 batches at BATCH_SIZE = 32, so log every 500 batches
        if batch_index % 500 == 0:
            print("Train Epoch : {} \t Loss : {:.6f}".format(epoch, loss.item()))
```
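`F.cross_entropy(output, target)` takes raw scores (logits) and applies log-softmax. It then picks the log-probability of the correct class for each sample and, by default, averages the negated values over the batch. Below is a NumPy sketch of that computation on a made-up batch of two samples and three classes. The `cross_entropy` helper is hand-written, not PyTorch's.

```python
import numpy as np


def cross_entropy(logits, targets):
    """Mean negative log-likelihood of the target classes, like F.cross_entropy's default."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(targets)), targets].mean()


logits = np.array([[2.0, 0.0, 0.0],   # confident and correct for target 0
                   [0.0, 0.0, 0.0]])  # uniform guess over 3 classes
targets = np.array([0, 2])
print(cross_entropy(logits, targets))  # about 0.669
```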

```python
# Define the test method
def test_model(model, device, test_loader):
    # Put the model in evaluation mode
    model.eval()
    correct = 0.0
    # Test loss
    test_loss = 0.0
    with torch.no_grad():
        for data, target in test_loader:
            data, target = data.to(device), target.to(device)
            # Forward pass on the test data
            output = model(data)
            # Sum the per-sample losses so that dividing by the dataset size below gives the mean
            test_loss += F.cross_entropy(output, target, reduction="sum").item()
            # Index of the highest score = predicted digit
            pred = output.max(1, keepdim=True)[1]
            # Accumulate the number of correct predictions
            correct += pred.eq(target.view_as(pred)).sum().item()
    test_loss /= len(test_loader.dataset)
    print("Test : Average loss : {:.4f}, Accuracy : {:.3f}\n".format(
        test_loss, 100.0 * correct / len(test_loader.dataset)))
```
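`F.cross_entropy` returns the batch mean by default. Adding up one such value per batch and then dividing by the number of test images therefore gives roughly the true average loss divided by the batch size. Summing the per-sample losses (`reduction="sum"`) before dividing avoids this. Below is a NumPy sketch with made-up per-sample losses and equal-sized batches.

```python
import numpy as np

rng = np.random.default_rng(0)
losses = rng.uniform(0.0, 2.0, size=320)  # made-up per-sample losses for 320 test images
batch_size = 32
batches = losses.reshape(-1, batch_size)  # 10 equal batches of 32

true_avg = losses.mean()
sum_of_batch_means = batches.mean(axis=1).sum() / len(losses)  # default reduction="mean"
sum_of_batch_sums = batches.sum(axis=1).sum() / len(losses)    # reduction="sum"

print(np.isclose(sum_of_batch_sums, true_avg))                # True
print(np.isclose(sum_of_batch_means * batch_size, true_avg))  # True: off by a factor of batch_size
```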

```python
for epoch in range(1, EPOCHS + 1):
    train_model(model, DEVICE, train_loader, optimizer, epoch)
    test_model(model, DEVICE, test_loader)
```