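Summary of Underlay and Overlay networks, implementing an underlay network in a k8s cluster, the communication flow of the flannel VXLAN / Calico IPIP network components, and building a highly available K8S cluster from binaries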

1. Differences, advantages, and disadvantages of Underlay and Overlay networks

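Overlay network:

An overlay network (also called a covering network) is a new virtual network built on top of the physical network, which lets containers in that network communicate with each other.

Advantages: it is highly compatible with the existing physical network and lets pods communicate across hosts in different subnets. Network plugins such as Calico and Flannel both support overlay networks.

Disadvantages: encapsulation and decapsulation add extra performance overhead.

Overlay networks are currently widely used in private clouds.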

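VTEP (VXLAN Tunnel Endpoint): the VTEP is the edge device of a VXLAN network and the start and end point of a VXLAN tunnel. All encapsulation and decapsulation of the user's original data frames happens on the VTEP. The VTEP is connected to the physical network: in a VXLAN packet, the source IP is the local node's VTEP address and the destination IP is the peer node's VTEP address, and each pair of VTEP addresses corresponds to one VXLAN tunnel. On a server, the virtual switch acts as the VTEP (for example, flannel's tunnel interface flannel.1). If one virtual machine network contains several VXLANs, several VTEPs are needed to encapsulate and decapsulate packets for the different networks.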
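A simplified Python model may help make the VTEP's role concrete. It mimics the three entries that flannel's VXLAN backend programs on flannel.1 for each remote node (a route to the remote pod subnet, a neighbor/ARP entry resolving the gateway to the remote flannel.1 MAC, and an FDB entry mapping that MAC to the remote node's IP), then performs the lookup that yields the outer destination IP. This is a sketch under stated assumptions: the node IPs, MACs, and per-node /24 subnets are hypothetical, and the real entries live in the kernel, not in Python dicts.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class RemoteLease:
    """One remote node as flannel sees it (all values below are hypothetical)."""
    pod_subnet: str  # pod subnet leased to the remote node
    vtep_mac: str    # MAC address of the remote node's flannel.1 interface
    node_ip: str     # remote node's physical (underlay) IP, used as the outer destination


LEASES = [
    RemoteLease("10.200.1.0/24", "aa:bb:cc:00:00:01", "172.31.6.202"),
    RemoteLease("10.200.2.0/24", "aa:bb:cc:00:00:02", "172.31.6.203"),
]


def build_tables(leases):
    """Mimic the per-node entries flannel programs on flannel.1: route, ARP, FDB."""
    routes = {}  # remote pod subnet -> gateway (the subnet's network address)
    neigh = {}   # gateway IP -> remote VTEP MAC (ARP/neighbor table)
    fdb = {}     # remote VTEP MAC -> remote node IP (bridge forwarding database)
    for lease in leases:
        net = ipaddress.ip_network(lease.pod_subnet)
        gateway = str(net.network_address)
        routes[net] = gateway
        neigh[gateway] = lease.vtep_mac
        fdb[lease.vtep_mac] = lease.node_ip
    return routes, neigh, fdb


def vtep_lookup(dst_pod_ip, tables):
    """Return (inner destination MAC, outer destination IP) for a packet to dst_pod_ip."""
    routes, neigh, fdb = tables
    addr = ipaddress.ip_address(dst_pod_ip)
    for net, gateway in routes.items():
        if addr in net:
            inner_mac = neigh[gateway]         # ARP: gateway -> remote flannel.1 MAC
            return inner_mac, fdb[inner_mac]   # FDB: MAC -> remote node IP
    raise LookupError(f"no flannel.1 route for {dst_pod_ip}")


tables = build_tables(LEASES)
print(vtep_lookup("10.200.2.15", tables))  # ('aa:bb:cc:00:00:02', '172.31.6.203')
```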

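VNI (VXLAN Network Identifier): the VNI is similar to a VLAN ID and distinguishes VXLAN segments; virtual machines in different VXLAN segments cannot communicate with each other directly at Layer 2. One VNI represents one tenant: even if several end users belong to the same VNI, they still count as a single tenant. The VNI is 24 bits wide, so VXLAN supports 2^24 = 16,777,216 segments, compared with 4,096 for a 12-bit VLAN ID.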

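NVGRE (Network Virtualization using Generic Routing Encapsulation) is backed mainly by Microsoft. Unlike VXLAN, NVGRE does not use a standard transport protocol (TCP/UDP); it relies on Generic Routing Encapsulation (GRE) instead. NVGRE uses 24 bits of the GRE header (the upper 24 bits of the Key field) as the tenant network identifier (TNI, called the VSID in RFC 7637), so, like VXLAN, it supports 16,777,216 virtual networks.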
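For comparison with VXLAN, the sketch below packs the 8-byte GRE header that NVGRE uses, assuming the layout in RFC 7637: the K (key present) bit set, protocol type 0x6558 (Transparent Ethernet Bridging), and a 32-bit key whose upper 24 bits hold the VSID/TNI and lower 8 bits a FlowID. The packet travels directly over IP (protocol number 47) rather than over UDP. The function names are illustrative only.

```python
import struct

GRE_FLAGS_KEY_PRESENT = 0x2000  # K bit set; C (checksum) and S (sequence) bits clear
ETH_P_TEB = 0x6558              # protocol type: Transparent Ethernet Bridging (inner Ethernet frame)


def nvgre_header(vsid, flow_id=0):
    """Pack the 8-byte NVGRE header: flags/version, protocol type, then VSID(24) + FlowID(8)."""
    if not 0 <= vsid < 2**24:
        raise ValueError("VSID/TNI must fit in 24 bits")
    if not 0 <= flow_id < 2**8:
        raise ValueError("FlowID must fit in 8 bits")
    key = (vsid << 8) | flow_id
    return struct.pack("!HHI", GRE_FLAGS_KEY_PRESENT, ETH_P_TEB, key)


def nvgre_vsid(header):
    """Extract the 24-bit VSID/TNI from an NVGRE header."""
    flags, proto, key = struct.unpack("!HHI", header[:8])
    if not flags & GRE_FLAGS_KEY_PRESENT or proto != ETH_P_TEB:
        raise ValueError("not an NVGRE header")
    return key >> 8


hdr = nvgre_header(5000)
print(len(hdr), nvgre_vsid(hdr))  # 8 5000
print(2**24)                      # 16777216 possible tenant networks
```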

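VXLAN communication flow:

The source host's VTEP adds (encapsulates) the VXLAN, UDP, and IP headers.

Network-layer devices forward the encapsulated packet across the Layer 3 network as an ordinary packet to the destination host.

The destination host's VTEP removes (decapsulates) the VXLAN, UDP, and IP headers.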
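The encapsulation and decapsulation steps can be sketched in Python for the VXLAN header itself, assuming the RFC 7348 layout (an 8-bit flags field with the I bit set, 24 reserved bits, a 24-bit VNI, and 8 reserved bits). The outer UDP, IP, and Ethernet headers are left to the kernel and are not built here. Two details are recorded as constants: the IANA port is 4789, while flannel's VXLAN backend defaults to the Linux kernel's port 8472, and the extra headers are why flannel.1 typically gets an MTU of 1450 on a 1500-byte underlay.

```python
import struct

VXLAN_FLAG_I = 0x08     # "I" flag: the VNI field is valid
IANA_VXLAN_PORT = 4789  # flannel's VXLAN backend instead defaults to the kernel's 8472
# Bytes counted against the underlay MTU: outer IPv4 (20) + UDP (8) + VXLAN (8)
# + the inner Ethernet header (14) carried inside the tunnel.
VXLAN_MTU_OVERHEAD = 20 + 8 + 8 + 14


def vxlan_encapsulate(inner_frame, vni):
    """Source VTEP: prepend the VXLAN header (outer UDP/IP/Ethernet are added by the kernel)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    header = struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(udp_payload):
    """Destination VTEP: strip the VXLAN header, returning (vni, inner_frame)."""
    flags_word, vni_word = struct.unpack("!II", udp_payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("VXLAN I flag not set")
    return vni_word >> 8, udp_payload[8:]


inner = bytes(14) + b"payload"  # placeholder inner Ethernet frame
packet = vxlan_encapsulate(inner, vni=1)
print(vxlan_decapsulate(packet) == (1, inner))  # True
print(1500 - VXLAN_MTU_OVERHEAD)                # 1450
```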

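Underlay network:

An underlay network is the traditional IT infrastructure network, made up of devices such as switches and routers and driven by protocols such as Ethernet, routing protocols, and VLAN. It is also the network underneath an overlay network, providing data transport for it. In container networking, an underlay network is a technique in which a driver exposes the host's underlying network interface directly to containers; common solutions include MAC VLAN, IP VLAN, and direct routing.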

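MAC VLAN mode:

MAC VLAN virtualizes multiple network interfaces (sub-interfaces) on a single Ethernet interface; each virtual interface has its own unique MAC address and can be assigned its own IP address.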

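IP VLAN mode:

IP VLAN is similar to MAC VLAN: it also creates new virtual network interfaces and assigns each one a unique IP address. The difference is that all the virtual interfaces share the MAC address of the physical interface.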
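The MAC difference between the two modes can be illustrated with a small Python model (hypothetical helper names, not a kernel API). For macvlan it generates a random unicast, locally administered MAC per sub-interface, similar to what the kernel does when no address is given; for ipvlan every sub-interface reports the parent's MAC. In practice this is why ipvlan is often chosen where the upstream switch or a cloud VPC limits or filters unknown MAC addresses.

```python
import random


def random_local_mac(rng):
    """Random unicast, locally administered MAC (similar to the kernel default for new macvlan links)."""
    octets = [rng.randrange(256) for _ in range(6)]
    octets[0] = (octets[0] | 0x02) & 0xFE  # set "locally administered", clear "multicast"
    return ":".join(f"{o:02x}" for o in octets)


def sub_interface_macs(parent_mac, count, mode, seed=0):
    """MAC address each of `count` sub-interfaces would use on the wire."""
    if mode == "macvlan":
        rng = random.Random(seed)
        macs = set()
        while len(macs) < count:  # every macvlan sub-interface gets its own MAC
            macs.add(random_local_mac(rng))
        return sorted(macs)
    if mode == "ipvlan":
        return [parent_mac] * count  # ipvlan sub-interfaces share the parent's MAC
    raise ValueError(f"unknown mode {mode!r}")


print(sub_interface_macs("52:54:00:12:34:56", 2, "macvlan"))
print(sub_interface_macs("52:54:00:12:34:56", 2, "ipvlan"))
```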

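Network communication: MAC VLAN operating modes:

bridge mode:

In bridge mode, macvlan containers attached to the same host network (the same parent interface) can communicate with each other directly. This mode is recommended.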
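As a sketch of how bridge mode is typically wired into Kubernetes, the Python function below renders a network configuration for the CNI macvlan plugin with host-local IPAM, in the JSON format that would be placed under /etc/cni/net.d/. The network name, parent interface eth0, subnet, address range, and gateway are placeholders, not values from this cluster. Because host-local tracks allocations per node, each node would need its own non-overlapping rangeStart/rangeEnd. One known limitation of bridge mode: a macvlan container cannot reach its own host through the parent interface, so host-to-pod traffic needs a separate path.

```python
import json

MACVLAN_MODES = {"bridge", "private", "vepa", "passthru"}


def macvlan_cni_conf(name, master, subnet, gateway, range_start, range_end, mode="bridge"):
    """Return a CNI network config (as JSON text) for the macvlan plugin with host-local IPAM."""
    if mode not in MACVLAN_MODES:
        raise ValueError(f"unsupported macvlan mode {mode!r}")
    conf = {
        "cniVersion": "0.3.1",
        "name": name,
        "type": "macvlan",
        "master": master,  # parent NIC on the host
        "mode": mode,      # bridge: sub-interfaces of one parent talk to each other directly
        "ipam": {
            "type": "host-local",
            # Pod IPs come straight from the physical subnet, which is what makes this underlay.
            # host-local is per node, so each node needs its own slice of the subnet.
            "ranges": [[{
                "subnet": subnet,
                "rangeStart": range_start,
                "rangeEnd": range_end,
                "gateway": gateway,
            }]],
            "routes": [{"dst": "0.0.0.0/0"}],
        },
    }
    return json.dumps(conf, indent=2)


# Placeholder values for illustration only.
print(macvlan_cni_conf("underlay-net", "eth0", "172.31.0.0/16", "172.31.0.254",
                       "172.31.7.1", "172.31.7.254"))
```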

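Network communication summary:

Overlay: an overlay network built on encapsulation technologies such as VXLAN and NVGRE.

Underlay (macvlan): multiple network interfaces (sub-interfaces) are virtualized on the host's physical NIC; each virtual interface has its own unique MAC address and can be assigned its own IP address.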

2. Implementing an underlay network in a Kubernetes cluster

2.1 k8s environment preparation: installing the container runtime

##Install the Docker or containerd runtime on every node, masters and workers alike. First install the required system tools (only one node's output is shown)

root@k8s-master1:~# apt-get update

Get:1 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial InRelease [8,993 B]

Hit:2 http://cn.archive.ubuntu.com/ubuntu jammy InRelease

Get:3 http://cn.archive.ubuntu.com/ubuntu jammy-updates InRelease [119 kB]

Get:4 http://cn.archive.ubuntu.com/ubuntu jammy-backports InRelease [107 kB]

Get:5 http://cn.archive.ubuntu.com/ubuntu jammy-security InRelease [110 kB]

Get:6 http://cn.archive.ubuntu.com/ubuntu jammy-updates/main amd64 Packages [948 kB]

Get:7 http://cn.archive.ubuntu.com/ubuntu jammy-updates/universe amd64 Packages [890 kB]

Fetched 2,183 kB in 9s (248 kB/s)

Reading package lists... Done

W: https://mirrors.aliyun.com/kubernetes/apt/dists/kubernetes-xenial/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.

root@k8s-master1:~# apt -y install apt-transport-https ca-certificates curl software-properties-common

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

ca-certificates is already the newest version (20211016ubuntu0.22.04.1).

ca-certificates set to manually installed.

curl is already the newest version (7.81.0-1ubuntu1.8).

curl set to manually installed.

apt-transport-https is already the newest version (2.4.8).

The following additional packages will be installed:

python3-software-properties

The following packages will be upgraded:

python3-software-properties software-properties-common

2 upgraded, 0 newly installed, 0 to remove and 70 not upgraded.

Need to get 42.9 kB of archives.

After this operation, 0 B of additional disk space will be used.

Get:1 http://cn.archive.ubuntu.com/ubuntu jammy-updates/main amd64 software-properties-common all 0.99.22.6 [14.1 kB]

Get:2 http://cn.archive.ubuntu.com/ubuntu jammy-updates/main amd64 python3-software-properties all 0.99.22.6 [28.8 kB]

Fetched 42.9 kB in 1s (39.6 kB/s)

(Reading database ... 73785 files and directories currently installed.)

Preparing to unpack .../software-properties-common_0.99.22.6_all.deb ...

Unpacking software-properties-common (0.99.22.6) over (0.99.22.2) ...

Preparing to unpack .../python3-software-properties_0.99.22.6_all.deb ...

Unpacking python3-software-properties (0.99.22.6) over (0.99.22.2) ...

Setting up python3-software-properties (0.99.22.6) ...

Setting up software-properties-common (0.99.22.6) ...

Processing triggers for man-db (2.10.2-1) ...

Processing triggers for dbus (1.12.20-2ubuntu4.1) ...

Scanning processes...

Scanning linux images...

Running kernel seems to be up-to-date.

No services need to be restarted.

No containers need to be restarted.

No user sessions are running outdated binaries.

No VM guests are running outdated hypervisor (qemu) binaries on this host.

##Install the GPG key

root@k8s-master1:~# curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

Warning: apt-key is deprecated. Manage keyring files in trusted.gpg.d instead (see apt-key(8)).

OK

##Add the repository

root@k8s-master1:~# add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

Repository: 'deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy stable'

Description:

Archive for codename: jammy components: stable

More info: http://mirrors.aliyun.com/docker-ce/linux/ubuntu

Adding repository.

Press [ENTER] to continue or Ctrl-c to cancel.

Adding deb entry to /etc/apt/sources.list.d/archive_uri-http_mirrors_aliyun_com_docker-ce_linux_ubuntu-jammy.list

Adding disabled deb-src entry to /etc/apt/sources.list.d/archive_uri-http_mirrors_aliyun_com_docker-ce_linux_ubuntu-jammy.list

Get:1 http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy InRelease [48.9 kB]

Hit:2 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial InRelease

Hit:3 http://cn.archive.ubuntu.com/ubuntu jammy InRelease

Get:4 http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages [13.6 kB]

Hit:5 http://cn.archive.ubuntu.com/ubuntu jammy-updates InRelease

Hit:6 http://cn.archive.ubuntu.com/ubuntu jammy-backports InRelease

Hit:7 http://cn.archive.ubuntu.com/ubuntu jammy-security InRelease

Fetched 62.5 kB in 1s (53.7 kB/s)

Reading package lists... Done

W: https://mirrors.aliyun.com/kubernetes/apt/dists/kubernetes-xenial/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.

W: http://mirrors.aliyun.com/docker-ce/linux/ubuntu/dists/jammy/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.

##Refresh the package index

root@k8s-master1:~# apt-get -y update

Hit:1 http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy InRelease

Hit:2 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial InRelease

Hit:3 http://cn.archive.ubuntu.com/ubuntu jammy InRelease

Hit:4 http://cn.archive.ubuntu.com/ubuntu jammy-updates InRelease

Hit:5 http://cn.archive.ubuntu.com/ubuntu jammy-backports InRelease

Hit:6 http://cn.archive.ubuntu.com/ubuntu jammy-security InRelease

Reading package lists... Done

W: http://mirrors.aliyun.com/docker-ce/linux/ubuntu/dists/jammy/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.

W: https://mirrors.aliyun.com/kubernetes/apt/dists/kubernetes-xenial/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.

##List the Docker versions available for installation

root@k8s-master1:~# apt-cache madison docker-ce docker-ce-cli

docker-ce | 5:23.0.1-1~ubuntu.22.04~jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:23.0.0-1~ubuntu.22.04~jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.23~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.22~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.21~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.20~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.19~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.18~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.17~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.16~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.15~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.14~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.13~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce-cli | 5:23.0.1-1~ubuntu.22.04~jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce-cli | 5:23.0.0-1~ubuntu.22.04~jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce-cli | 5:20.10.23~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce-cli | 5:20.10.22~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce-cli | 5:20.10.21~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce-cli | 5:20.10.20~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce-cli | 5:20.10.19~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce-cli | 5:20.10.18~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce-cli | 5:20.10.17~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce-cli | 5:20.10.16~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce-cli | 5:20.10.15~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce-cli | 5:20.10.14~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce-cli | 5:20.10.13~3-0~ubuntu-jammy | http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

##Install Docker (pinned version)

root@k8s-master1:~# apt install -y docker-ce=5:20.10.23~3-0~ubuntu-jammy docker-ce-cli=5:20.10.23~3-0~ubuntu-jammy

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following additional packages will be installed:

containerd.io docker-ce-rootless-extras docker-scan-plugin libltdl7 libslirp0 pigz slirp4netns

Suggested packages:

aufs-tools cgroupfs-mount | cgroup-lite

The following NEW packages will be installed:

containerd.io docker-ce docker-ce-cli docker-ce-rootless-extras docker-scan-plugin libltdl7 libslirp0 pigz slirp4netns

0 upgraded, 9 newly installed, 0 to remove and 70 not upgraded.

Need to get 104 MB of archives.

After this operation, 389 MB of additional disk space will be used.

Get:1 http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 containerd.io amd64 1.6.18-1 [28.2 MB]

Get:2 http://cn.archive.ubuntu.com/ubuntu jammy/universe amd64 pigz amd64 2.6-1 [63.6 kB]

Get:3 http://cn.archive.ubuntu.com/ubuntu jammy/main amd64 libltdl7 amd64 2.4.6-15build2 [39.6 kB]

Get:4 http://cn.archive.ubuntu.com/ubuntu jammy/main amd64 libslirp0 amd64 4.6.1-1build1 [61.5 kB]

Get:5 http://cn.archive.ubuntu.com/ubuntu jammy/universe amd64 slirp4netns amd64 1.0.1-2 [28.2 kB]

Get:6 http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 docker-ce-cli amd64 5:20.10.23~3-0~ubuntu-jammy [42.6 MB]

Get:7 http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 docker-ce amd64 5:20.10.23~3-0~ubuntu-jammy [20.5 MB]

Get:8 http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 docker-ce-rootless-extras amd64 5:23.0.1-1~ubuntu.22.04~jammy [8,760 kB]

Get:9 http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 docker-scan-plugin amd64 0.23.0~ubuntu-jammy [3,623 kB]

Fetched 104 MB in 16min 33s (105 kB/s)

Selecting previously unselected package pigz.

(Reading database ... 73785 files and directories currently installed.)

Preparing to unpack .../0-pigz_2.6-1_amd64.deb ...

Unpacking pigz (2.6-1) ...

Selecting previously unselected package containerd.io.

Preparing to unpack .../1-containerd.io_1.6.18-1_amd64.deb ...

Unpacking containerd.io (1.6.18-1) ...

Selecting previously unselected package docker-ce-cli.

Preparing to unpack .../2-docker-ce-cli_5%3a20.10.23~3-0~ubuntu-jammy_amd64.deb ...

Unpacking docker-ce-cli (5:20.10.23~3-0~ubuntu-jammy) ...

Selecting previously unselected package docker-ce.

Preparing to unpack .../3-docker-ce_5%3a20.10.23~3-0~ubuntu-jammy_amd64.deb ...

Unpacking docker-ce (5:20.10.23~3-0~ubuntu-jammy) ...

Selecting previously unselected package docker-ce-rootless-extras.

Preparing to unpack .../4-docker-ce-rootless-extras_5%3a23.0.1-1~ubuntu.22.04~jammy_amd64.deb ...

Unpacking docker-ce-rootless-extras (5:23.0.1-1~ubuntu.22.04~jammy) ...

Selecting previously unselected package docker-scan-plugin.

Preparing to unpack .../5-docker-scan-plugin_0.23.0~ubuntu-jammy_amd64.deb ...

Unpacking docker-scan-plugin (0.23.0~ubuntu-jammy) ...

Selecting previously unselected package libltdl7:amd64.

Preparing to unpack .../6-libltdl7_2.4.6-15build2_amd64.deb ...

Unpacking libltdl7:amd64 (2.4.6-15build2) ...

Selecting previously unselected package libslirp0:amd64.

Preparing to unpack .../7-libslirp0_4.6.1-1build1_amd64.deb ...

Unpacking libslirp0:amd64 (4.6.1-1build1) ...

Selecting previously unselected package slirp4netns.

Preparing to unpack .../8-slirp4netns_1.0.1-2_amd64.deb ...

Unpacking slirp4netns (1.0.1-2) ...

Setting up docker-scan-plugin (0.23.0~ubuntu-jammy) ...

Setting up containerd.io (1.6.18-1) ...

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /lib/systemd/system/containerd.service.

Setting up libltdl7:amd64 (2.4.6-15build2) ...

Setting up docker-ce-cli (5:20.10.23~3-0~ubuntu-jammy) ...

Setting up libslirp0:amd64 (4.6.1-1build1) ...

Setting up pigz (2.6-1) ...

Setting up docker-ce-rootless-extras (5:23.0.1-1~ubuntu.22.04~jammy) ...

Setting up slirp4netns (1.0.1-2) ...

Setting up docker-ce (5:20.10.23~3-0~ubuntu-jammy) ...

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /lib/systemd/system/docker.service.

Created symlink /etc/systemd/system/sockets.target.wants/docker.socket → /lib/systemd/system/docker.socket.

Processing triggers for man-db (2.10.2-1) ...

Processing triggers for libc-bin (2.35-0ubuntu3.1) ...

Scanning processes...

Scanning linux images...

Running kernel seems to be up-to-date.

No services need to be restarted.

No containers need to be restarted.

No user sessions are running outdated binaries.

No VM guests are running outdated hypervisor (qemu) binaries on this host.

##Start Docker and enable it at boot

root@k8s-node1:~# systemctl start docker && systemctl enable docker

Synchronizing state of docker.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable docker

##Tuning: configure a registry mirror and use the systemd cgroup driver

root@k8s-node1:~# mkdir -p /etc/docker

root@k8s-node1:~# tee /etc/docker/daemon.json <<-'EOF'

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://9916w1ow.mirror.aliyuncs.com"]

}

EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://9916w1ow.mirror.aliyuncs.com"]

}

##Restart Docker so the configuration takes effect

root@k8s-node1:~# systemctl daemon-reload && sudo systemctl restart docker

root@k8s-node1:~# cat /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://9916w1ow.mirror.aliyuncs.com"]

}
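The key setting here is the cgroup driver: Docker and the kubelet must use the same one, and kubeadm (v1.22 and later) defaults the kubelet to systemd. The Python sketch below builds the same daemon.json content and checks which driver it declares; the helper names are hypothetical, and the mirror URL is the one used above.

```python
import json

KUBELET_CGROUP_DRIVER = "systemd"  # kubeadm >= 1.22 defaults the kubelet to systemd


def docker_daemon_config(registry_mirrors, cgroup_driver="systemd"):
    """Build the dict written to /etc/docker/daemon.json."""
    if cgroup_driver not in {"systemd", "cgroupfs"}:
        raise ValueError(f"unknown cgroup driver {cgroup_driver!r}")
    return {
        "exec-opts": [f"native.cgroupdriver={cgroup_driver}"],
        "registry-mirrors": list(registry_mirrors),
    }


def configured_cgroup_driver(config):
    """Return the cgroup driver set via exec-opts, or None if Docker's default applies."""
    for opt in config.get("exec-opts", []):
        key, _, value = opt.partition("=")
        if key == "native.cgroupdriver":
            return value
    return None


config = docker_daemon_config(["https://9916w1ow.mirror.aliyuncs.com"])
text = json.dumps(config, indent=2)  # the content `tee /etc/docker/daemon.json` writes
print(text)
print(configured_cgroup_driver(json.loads(text)) == KUBELET_CGROUP_DRIVER)  # True
```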

2.2 Install cri-dockerd (binary)

##Download the binary release

root@k8s-master1:~# wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.1/cri-dockerd-0.3.1.amd64.tgz

##Extract the archive

root@k8s-node1:~# tar xvf cri-dockerd-0.3.1.amd64.tgz

cri-dockerd/

cri-dockerd/cri-dockerd

##Copy the binary into a directory on PATH

root@k8s-node1:~# cp cri-dockerd/cri-dockerd /usr/local/bin/

##Write cri-docker.service

root@k8s-node1:~# vim /lib/systemd/system/cri-docker.service

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/local/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

##Write the cri-docker.socket file

root@k8s-node1:~# vim /etc/systemd/system/cri-docker.socket

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-docker.service

[Socket]

ListenStream=%t/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

##Start the service

root@k8s-node1:~# systemctl daemon-reload && systemctl restart cri-docker && systemctl enable cri-docker

root@k8s-node1:~# systemctl enable --now cri-docker.socket

Created symlink /etc/systemd/system/sockets.target.wants/cri-docker.socket → /etc/systemd/system/cri-docker.socket.

##Check the cri-docker service status

root@k8s-node1:~# systemctl status cri-docker.service

● cri-docker.service - CRI Interface for Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/cri-docker.service; enabled; vendor preset: enabled)

Active: active (running) since Sun 2023-03-19 05:09:25 UTC; 57s ago

TriggeredBy: ● cri-docker.socket

Docs: https://docs.mirantis.com

Main PID: 6443 (cri-dockerd)

Tasks: 7

Memory: 8.8M

CPU: 63ms

CGroup: /system.slice/cri-docker.service

└─6443 /usr/local/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9

Mar 19 05:09:25 k8s-node1 cri-dockerd[6443]: time="2023-03-19T05:09:25Z" level=info msg="The binary conntrack is not installed, this can cause fail>

Mar 19 05:09:25 k8s-node1 cri-dockerd[6443]: time="2023-03-19T05:09:25Z" level=info msg="The binary conntrack is not installed, this can cause fail>

Mar 19 05:09:25 k8s-node1 cri-dockerd[6443]: time="2023-03-19T05:09:25Z" level=info msg="Loaded network plugin cni"

Mar 19 05:09:25 k8s-node1 cri-dockerd[6443]: time="2023-03-19T05:09:25Z" level=info msg="Docker cri networking managed by network plugin cni"

Mar 19 05:09:25 k8s-node1 cri-dockerd[6443]: time="2023-03-19T05:09:25Z" level=info msg="Docker Info: &{ID:JKKP:U24V:NLTT:V37V:Y3BB:7L5O:4VTR:DMVC:>

Mar 19 05:09:25 k8s-node1 cri-dockerd[6443]: time="2023-03-19T05:09:25Z" level=info msg="Setting cgroupDriver systemd"

Mar 19 05:09:25 k8s-node1 cri-dockerd[6443]: time="2023-03-19T05:09:25Z" level=info msg="Docker cri received runtime config &RuntimeConfig{NetworkC>

Mar 19 05:09:25 k8s-node1 cri-dockerd[6443]: time="2023-03-19T05:09:25Z" level=info msg="Starting the GRPC backend for the Docker CRI interface."

Mar 19 05:09:25 k8s-node1 cri-dockerd[6443]: time="2023-03-19T05:09:25Z" level=info msg="Start cri-dockerd grpc backend"

Mar 19 05:09:25 k8s-node1 systemd[1]: Started CRI Interface for Docker Application Container Engine.

2.3 Install kubeadm

##Use the Aliyun mirror

root@k8s-node1:~# apt-get update && apt-get install -y apt-transport-https

Hit:1 http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy InRelease

Hit:2 http://cn.archive.ubuntu.com/ubuntu jammy InRelease

Get:3 http://cn.archive.ubuntu.com/ubuntu jammy-updates InRelease [119 kB]

Get:4 http://cn.archive.ubuntu.com/ubuntu jammy-backports InRelease [107 kB]

Get:5 http://cn.archive.ubuntu.com/ubuntu jammy-security InRelease [110 kB]

Fetched 336 kB in 2s (144 kB/s)

Reading package lists... Done

W: http://mirrors.aliyun.com/docker-ce/linux/ubuntu/dists/jammy/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

apt-transport-https is already the newest version (2.4.8).

0 upgraded, 0 newly installed, 0 to remove and 69 not upgraded.

root@k8s-node1:~# curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

Warning: apt-key is deprecated. Manage keyring files in trusted.gpg.d instead (see apt-key(8)).

100 1210 100 1210 0 0 3710 0 --:--:-- --:--:-- --:--:-- 3711

OK

root@k8s-node1:~# cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

##Update the package index

root@k8s-node1:~# apt-get update

Hit:1 http://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy InRelease

Get:2 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial InRelease [8,993 B]

Hit:3 http://cn.archive.ubuntu.com/ubuntu jammy InRelease

Ign:4 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages

Get:4 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages [64.5 kB]

Get:5 http://cn.archive.ubuntu.com/ubuntu jammy-updates InRelease [119 kB]

Get:6 http://cn.archive.ubuntu.com/ubuntu jammy-backports InRelease [107 kB]

Get:7 http://cn.archive.ubuntu.com/ubuntu jammy-security InRelease [110 kB]

Fetched 409 kB in 3s (143 kB/s)

Reading package lists... Done

W: http://mirrors.aliyun.com/docker-ce/linux/ubuntu/dists/jammy/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.

W: https://mirrors.aliyun.com/kubernetes/apt/dists/kubernetes-xenial/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.

##Install kubelet, kubeadm, and kubectl pinned to 1.24.10

root@k8s-node1:~# apt-get install kubelet=1.24.10-00 kubeadm=1.24.10-00 kubectl=1.24.10-00

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following additional packages will be installed:

conntrack cri-tools ebtables kubernetes-cni socat

The following NEW packages will be installed:

conntrack cri-tools ebtables kubeadm kubectl kubelet kubernetes-cni socat

0 upgraded, 8 newly installed, 0 to remove and 69 not upgraded.

Need to get 85.0 MB of archives.

After this operation, 332 MB of additional disk space will be used.

Do you want to continue? [Y/n] y

Get:1 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 cri-tools amd64 1.26.0-00 [18.9 MB]

Get:2 http://cn.archive.ubuntu.com/ubuntu jammy/main amd64 conntrack amd64 1:1.4.6-2build2 [33.5 kB]

Get:3 http://cn.archive.ubuntu.com/ubuntu jammy/main amd64 ebtables amd64 2.0.11-4build2 [84.9 kB]

Get:4 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 kubernetes-cni amd64 1.2.0-00 [27.6 MB]

Get:5 http://cn.archive.ubuntu.com/ubuntu jammy/main amd64 socat amd64 1.7.4.1-3ubuntu4 [349 kB]

Get:6 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 kubelet amd64 1.24.10-00 [19.4 MB]

Get:7 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 kubectl amd64 1.24.10-00 [9,432 kB]

Get:8 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 kubeadm amd64 1.24.10-00 [9,114 kB]

Ign:5 http://cn.archive.ubuntu.com/ubuntu jammy/main amd64 socat amd64 1.7.4.1-3ubuntu4

Get:5 http://cn.archive.ubuntu.com/ubuntu jammy/main amd64 socat amd64 1.7.4.1-3ubuntu4 [349 kB]

Fetched 84.9 MB in 1min 46s (803 kB/s)

Selecting previously unselected package conntrack.

(Reading database ... 73975 files and directories currently installed.)

Preparing to unpack .../0-conntrack_1%3a1.4.6-2build2_amd64.deb ...

Unpacking conntrack (1:1.4.6-2build2) ...

Selecting previously unselected package cri-tools.

Preparing to unpack .../1-cri-tools_1.26.0-00_amd64.deb ...

Unpacking cri-tools (1.26.0-00) ...

Selecting previously unselected package ebtables.

Preparing to unpack .../2-ebtables_2.0.11-4build2_amd64.deb ...

Unpacking ebtables (2.0.11-4build2) ...

Selecting previously unselected package kubernetes-cni.

Preparing to unpack .../3-kubernetes-cni_1.2.0-00_amd64.deb ...

Unpacking kubernetes-cni (1.2.0-00) ...

Selecting previously unselected package socat.

Preparing to unpack .../4-socat_1.7.4.1-3ubuntu4_amd64.deb ...

Unpacking socat (1.7.4.1-3ubuntu4) ...

Selecting previously unselected package kubelet.

Preparing to unpack .../5-kubelet_1.24.10-00_amd64.deb ...

Unpacking kubelet (1.24.10-00) ...

Selecting previously unselected package kubectl.

Preparing to unpack .../6-kubectl_1.24.10-00_amd64.deb ...

Unpacking kubectl (1.24.10-00) ...

Selecting previously unselected package kubeadm.

Preparing to unpack .../7-kubeadm_1.24.10-00_amd64.deb ...

Unpacking kubeadm (1.24.10-00) ...

Setting up conntrack (1:1.4.6-2build2) ...

Setting up kubectl (1.24.10-00) ...

Setting up ebtables (2.0.11-4build2) ...

Setting up socat (1.7.4.1-3ubuntu4) ...

Setting up cri-tools (1.26.0-00) ...

Setting up kubernetes-cni (1.2.0-00) ...

Setting up kubelet (1.24.10-00) ...

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /lib/systemd/system/kubelet.service.

Setting up kubeadm (1.24.10-00) ...

Processing triggers for man-db (2.10.2-1) ...

Scanning processes...

Scanning linux images...

Running kernel seems to be up-to-date.

No services need to be restarted.

No containers need to be restarted.

No user sessions are running outdated binaries.

No VM guests are running outdated hypervisor (qemu) binaries on this host.

2.4 Cluster initialization: preparing images
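The pull commands in this section hard-code image tags for v1.24.10. A safer source for the list is `kubeadm config images list --kubernetes-version v1.24.10 --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers`; in particular, its CoreDNS tag is worth comparing with the one pulled here. As a sketch of such a pre-pull script, this Python snippet generates the same `docker pull` commands from the tags used in this transcript (the function names are illustrative).

```python
REPO = "registry.cn-hangzhou.aliyuncs.com/google_containers"
K8S_VERSION = "v1.24.10"
# Tags copied from the pull commands in this transcript; verify them against
# `kubeadm config images list` for the version being installed.
EXTRA_TAGS = {"pause": "3.7", "etcd": "3.5.6-0", "coredns": "1.8.6"}
CORE_COMPONENTS = ["kube-apiserver", "kube-controller-manager", "kube-scheduler", "kube-proxy"]


def image_list(repo=REPO, version=K8S_VERSION, extra_tags=EXTRA_TAGS):
    """Fully qualified image references kubeadm init needs, in pull order."""
    images = [f"{repo}/{name}:{version}" for name in CORE_COMPONENTS]
    images += [f"{repo}/{name}:{tag}" for name, tag in extra_tags.items()]
    return images


def pull_commands(images, runtime="docker"):
    """One pull command per image; pipe the output to a shell to run them."""
    return [f"{runtime} pull {image}" for image in images]


if __name__ == "__main__":
    print("\n".join(pull_commands(image_list())))
```

Keeping the tags in one place means the next version bump only touches K8S_VERSION and EXTRA_TAGS.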

##Pre-pull the images with a script

root@k8s-node1:~# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.24.10

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.24.10

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.24.10

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.24.10

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.7

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.6-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.8.6

v1.24.10: Pulling from google_containers/kube-apiserver

fc251a6e7981: Pull complete

bffbec874b09: Pull complete

6374258c2a53: Pull complete

Digest: sha256:425a9728de960c4fe2d162669fb91e2b28061026d77bb48c8730c0ad9ee15149

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.24.10

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.24.10

v1.24.10: Pulling from google_containers/kube-controller-manager

fc251a6e7981: Already exists

bffbec874b09: Already exists

63cf3ce8a444: Pull complete

Digest: sha256:9d78dd19663d9eb3b9f660d3bae836c4cda685d0f1debc69f8a476cb9d796b66

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.24.10

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.24.10

v1.24.10: Pulling from google_containers/kube-scheduler

fc251a6e7981: Already exists

bffbec874b09: Already exists

a83371edc6c5: Pull complete

Digest: sha256:77f9414f4003e3c471a51dce48a6b2ef5c6753fd41ac336080d1ff562a3862a3

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.24.10

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.24.10

v1.24.10: Pulling from google_containers/kube-proxy

318b659927b4: Pull complete

dd22051c691b: Pull complete

294b864c5e01: Pull complete

Digest: sha256:5d5748d409be932fba3db18b86673e9b3542dff373d9c26ed65e4c89add6102b

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.24.10

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.24.10

3.7: Pulling from google_containers/pause

7582c2cc65ef: Pull complete

Digest: sha256:bb6ed397957e9ca7c65ada0db5c5d1c707c9c8afc80a94acbe69f3ae76988f0c

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.7

registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.7

3.5.6-0: Pulling from google_containers/etcd

8fdb1fc20e24: Pull complete

436b7dc2bc75: Pull complete

05135444fe12: Pull complete

e462d783cd7f: Pull complete

ecff8ef6851d: Pull complete

Digest: sha256:dd75ec974b0a2a6f6bb47001ba09207976e625db898d1b16735528c009cb171c

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.6-0

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.6-0

1.8.6: Pulling from google_containers/coredns

d92bdee79785: Pull complete

6e1b7c06e42d: Pull complete

Digest: sha256:5b6ec0d6de9baaf3e92d0f66cd96a25b9edbce8716f5f15dcd1a616b3abd590e

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.8.6

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.8.6
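Before running the kubeadm init command that follows, it can be worth sanity-checking the CIDRs it passes: the pod CIDR (10.200.0.0/16) and the service CIDR (172.31.5.0/24) should not overlap each other or the node address 172.31.6.201. The Python sketch below performs those checks and returns the first address of the service CIDR, which kubeadm uses as the ClusterIP of the default `kubernetes` Service and includes in the apiserver certificate. For the flags shown this is 172.31.5.1; the certificate line in the output lists 172.31.0.1 instead, which suggests that output may come from a run with a different --service-cidr. The helper name is hypothetical.

```python
import ipaddress


def check_kubeadm_networks(pod_cidr, service_cidr, node_ips):
    """Sanity-check kubeadm init CIDRs; return the expected apiserver Service IP."""
    pods = ipaddress.ip_network(pod_cidr)
    services = ipaddress.ip_network(service_cidr)
    if pods.overlaps(services):
        raise ValueError(f"pod CIDR {pods} overlaps service CIDR {services}")
    for ip in map(ipaddress.ip_address, node_ips):
        for label, net in (("pod", pods), ("service", services)):
            if ip in net:
                raise ValueError(f"node IP {ip} is inside the {label} CIDR {net}")
    # First usable address of the service CIDR: ClusterIP of the default "kubernetes" Service.
    return str(services[1])


print(check_kubeadm_networks("10.200.0.0/16", "172.31.5.0/24", ["172.31.6.201"]))  # 172.31.5.1
```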

##Initialize Kubernetes

root@k8s-master1:~# kubeadm init --apiserver-advertise-address=172.31.6.201 --apiserver-bind-port=6443 --kubernetes-version=v1.24.10 --pod-network-cidr=10.200.0.0/16 --service-cidr=172.31.5.0/24 --service-dns-domain=cluster.local --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --ignore-preflight-errors=swap --cri-socket unix:///var/run/cri-dockerd.sock

[init] Using Kubernetes version: v1.24.10

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [172.31.0.1 172.31.6.201]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master1 localhost] and IPs [172.31.6.201 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master1 localhost] and IPs [172.31.6.201 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 33.025391 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master1 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: zcpj0c.ynp9jbztcml2v55c

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.31.6.201:6443 --token zcpj0c.ynp9jbztcml2v55c \

--discovery-token-ca-cert-hash sha256:d311556d7062595e4b34cf19adbb39d2f193e4d2b7cb87e49f3c6bc1fbed25d9
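The value after --discovery-token-ca-cert-hash is the SHA-256 digest of the cluster CA's public key, DER-encoded as a SubjectPublicKeyInfo. If the printed join command is lost, you can recompute the hash on the master from /etc/kubernetes/pki/ca.crt with the openssl pipeline given in the kubeadm documentation. The default bootstrap token expires after 24 hours; `kubeadm token create --print-join-command` prints a fresh join command. The function name ca_cert_hash below is hypothetical. This is a sketch, not part of kubeadm.

```shell
# Hypothetical helper: print the hex value expected by
#   kubeadm join ... --discovery-token-ca-cert-hash sha256:<value>
# Usage: ca_cert_hash [path-to-ca.crt]   (defaults to kubeadm's CA path)
ca_cert_hash() {
  local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}"
  # 1. extract the CA public key (PEM)
  # 2. convert it to DER (SubjectPublicKeyInfo)
  # 3. hash it with SHA-256 and keep only the hex digest
  openssl x509 -pubkey -noout -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# On k8s-master1:
#   echo "sha256:$(ca_cert_hash)"
```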

## On each node, run kubeadm join (with the cri-dockerd socket) to join the cluster

root@k8s-node1:~# kubeadm join 172.31.6.201:6443 --token zcpj0c.ynp9jbztcml2v55c \

--discovery-token-ca-cert-hash sha256:d311556d7062595e4b34cf19adbb39d2f193e4d2b7cb87e49f3c6bc1fbed25d9 --cri-socket unix:///var/run/cri-dockerd.sock

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

root@k8s-node2:~# kubeadm join 172.31.6.201:6443 --token zcpj0c.ynp9jbztcml2v55c \

--discovery-token-ca-cert-hash sha256:d311556d7062595e4b34cf19adbb39d2f193e4d2b7cb87e49f3c6bc1fbed25d9 --cri-socket unix:///var/run/cri-dockerd.sock

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

root@k8s-node3:~# kubeadm join 172.31.6.201:6443 --token zcpj0c.ynp9jbztcml2v55c \

--discovery-token-ca-cert-hash sha256:d311556d7062595e4b34cf19adbb39d2f193e4d2b7cb87e49f3c6bc1fbed25d9 --cri-socket unix:///var/run/cri-dockerd.sock

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

## Distribute the kubeconfig credentials file

root@k8s-node1:~# mkdir /root/.kube -p

root@k8s-node2:~# mkdir /root/.kube -p

root@k8s-node3:~# mkdir /root/.kube -p

## Configure kubectl on the master

root@k8s-master1:~# mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

root@k8s-master1:~# export KUBECONFIG=/etc/kubernetes/admin.conf

root@k8s-master1:~# scp /root/.kube/config 172.31.6.202:/root/.kube/

root@k8s-master1:~# scp /root/.kube/config 172.31.6.203:/root/.kube/

root@k8s-master1:~# scp /root/.kube/config 172.31.6.204:/root/.kube/
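With more nodes, a loop saves repeating the mkdir and scp steps for each one. The sketch below assumes root SSH key access from k8s-master1 to every node, and its function name is hypothetical. admin.conf carries cluster-admin credentials, so copying it to worker nodes is a lab convenience rather than a production practice.

```shell
# Hypothetical helper: copy the master's kubeconfig to every node given as an argument.
# Assumes passwordless root SSH from the master to each node.
distribute_kubeconfig() {
  local src="${KUBECONFIG_SRC:-/root/.kube/config}"
  local node
  for node in "$@"; do
    # Create the target directory first, then copy the file into it.
    ssh "root@${node}" "mkdir -p /root/.kube" || return 1
    scp "$src" "root@${node}:/root/.kube/config" || return 1
  done
}

# Usage on k8s-master1:
#   distribute_kubeconfig 172.31.6.202 172.31.6.203 172.31.6.204
```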

2.5 Install Helm

## Download the binary package

root@k8s-master1:/usr/local/src# wget https://get.helm.sh/helm-v3.9.0-linux-amd64.tar.gz

--2023-03-19 06:28:09-- https://get.helm.sh/helm-v3.9.0-linux-amd64.tar.gz

## Extract

root@k8s-master1:/usr/local/src# tar xvf helm-v3.9.0-linux-amd64.tar.gz

linux-amd64/

linux-amd64/helm

linux-amd64/LICENSE

linux-amd64/README.md

root@k8s-master1:/usr/local/src# mv linux-amd64/helm /usr/local/bin/

2.6 Deploy the hybridnet network plugin

## Add the Helm repository

root@k8s-master1:/usr/local/bin# helm repo add hybridnet https://alibaba.github.io/hybridnet/

"hybridnet" has been added to your repositories

root@k8s-master1:/usr/local/bin# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "hybridnet" chart repository

Update Complete. ⎈Happy Helming!⎈

## Configure the overlay pod network with the pod CIDR given to kubeadm init; without --set init.cidr=10.200.0.0/16, hybridnet uses 100.64.0.0/16 by default

root@k8s-master1:/usr/local/bin# helm install hybridnet hybridnet/hybridnet -n kube-system --set init.cidr=10.200.0.0/16

W0319 06:36:24.544931 34262 warnings.go:70] spec.template.spec.nodeSelector[beta.kubernetes.io/os]: deprecated since v1.14; use "kubernetes.io/os" instead

W0319 06:36:24.545018 34262 warnings.go:70] spec.template.metadata.annotations[scheduler.alpha.kubernetes.io/critical-pod]: non-functional in v1.16+; use the "priorityClassName" field instead

NAME: hybridnet

LAST DEPLOYED: Sun Mar 19 06:36:21 2023

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

## Check the pod status

root@k8s-master1:/usr/local/bin# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-typha-59bf6c985c-c7tmk 0/1 ContainerCreating 0 33s

kube-system calico-typha-59bf6c985c-w7qsc 0/1 ContainerCreating 0 33s

kube-system calico-typha-59bf6c985c-xnh77 0/1 ContainerCreating 0 33s

kube-system coredns-7f74c56694-76kpr 0/1 Pending 0 21m

kube-system coredns-7f74c56694-gklzd 0/1 Pending 0 21m

kube-system etcd-k8s-master1 1/1 Running 0 21m

kube-system hybridnet-daemon-5tnd7 0/2 Init:0/1 0 33s

kube-system hybridnet-daemon-mkkx8 0/2 Init:0/1 0 33s

kube-system hybridnet-daemon-qgvzw 0/2 Init:0/1 0 33s

kube-system hybridnet-daemon-v98vj 0/2 Init:0/1 0 33s

kube-system hybridnet-manager-57f55874cf-94grf 0/1 Pending 0 33s

kube-system hybridnet-manager-57f55874cf-llzrc 0/1 Pending 0 33s

kube-system hybridnet-manager-57f55874cf-ng6h7 0/1 Pending 0 33s

kube-system hybridnet-webhook-7f4fcb5646-dj964 0/1 Pending 0 36s

kube-system hybridnet-webhook-7f4fcb5646-p5rrj 0/1 Pending 0 36s

kube-system hybridnet-webhook-7f4fcb5646-pmwvn 0/1 Pending 0 36s

kube-system kube-apiserver-k8s-master1 1/1 Running 0 21m

kube-system kube-controller-manager-k8s-master1 1/1 Running 0 21m

kube-system kube-proxy-49kkc 1/1 Running 0 21m

kube-system kube-proxy-q6m5f 1/1 Running 0 15m

kube-system kube-proxy-w8pl6 1/1 Running 0 15m

kube-system kube-proxy-xjfdv 1/1 Running 0 15m

kube-system kube-scheduler-k8s-master1 1/1 Running 0 21m

root@k8s-master1:/usr/local/bin# kubectl describe pod hybridnet-manager-57f55874cf-94grf -n kube-system

Name: hybridnet-manager-57f55874cf-94grf

Namespace: kube-system

Priority: 2000000000

Priority Class Name: system-cluster-critical

Node: <none>

Labels: app=hybridnet

component=manager

pod-template-hash=57f55874cf

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Controlled By: ReplicaSet/hybridnet-manager-57f55874cf

Containers:

hybridnet-manager:

Image: docker.io/hybridnetdev/hybridnet:v0.8.0

Port: 9899/TCP

Host Port: 9899/TCP

Command:

/hybridnet/hybridnet-manager

--default-ip-retain=true

--feature-gates=MultiCluster=false,VMIPRetain=false

--controller-concurrency=Pod=1,IPAM=1,IPInstance=1

--kube-client-qps=300

--kube-client-burst=600

--metrics-port=9899

Environment:

DEFAULT_NETWORK_TYPE: Overlay

DEFAULT_IP_FAMILY: IPv4

NAMESPACE: kube-system (v1:metadata.namespace)

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-mt2z4 (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

kube-api-access-mt2z4:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: node-role.kubernetes.io/master=

Tolerations: :NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 2m6s default-scheduler 0/4 nodes are available: 4 node(s) didn't match Pod's node affinity/selector. preemption: 0/4 nodes are available: 4 Preemption is not helpful for scheduling.

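## Label the nodes. hybridnet-manager stays Pending because it selects nodes labelled node-role.kubernetes.io/master=, and no node carries that label. kubeadm v1.24 labels the control-plane node with node-role.kubernetes.io/control-plane instead, as the init log shows. Adding the label to the worker nodes lets the pods be scheduled.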

root@k8s-master1:/usr/local/bin# kubectl label node k8s-node1 node-role.kubernetes.io/master=

node/k8s-node1 labeled

root@k8s-master1:/usr/local/bin# kubectl label node k8s-node2 node-role.kubernetes.io/master=

node/k8s-node2 labeled

root@k8s-master1:/usr/local/bin# kubectl label node k8s-node3 node-role.kubernetes.io/master=

node/k8s-node3 labeled

2.7 Create the underlay network and associate it with the nodes

## Add the underlay network label to the node hosts

root@k8s-master1:/usr/local/bin# kubectl label node k8s-node1 network=underlay-nethost

node/k8s-node1 labeled

root@k8s-master1:/usr/local/bin# kubectl label node k8s-node2 network=underlay-nethost

node/k8s-node2 labeled

root@k8s-master1:/usr/local/bin# kubectl label node k8s-node3 network=underlay-nethost

node/k8s-node3 labeled

## Create the underlay network

root@k8s-master1:~/underlay-cases-files# ls

1.create-underlay-network.yaml 2.tomcat-app1-overlay.yaml 3.tomcat-app1-underlay.yaml 4-pod-underlay.yaml

root@k8s-master1:~/underlay-cases-files# ll

total 24

drwxr-xr-x 2 root root 4096 Mar 19 06:56 ./

drwx------ 9 root root 4096 Mar 19 06:56 ../

-rw-r--r-- 1 root root 464 Mar 5 12:12 1.create-underlay-network.yaml

-rw-r--r-- 1 root root 1383 Mar 5 12:12 2.tomcat-app1-overlay.yaml

-rw-r--r-- 1 root root 1532 Mar 5 12:12 3.tomcat-app1-underlay.yaml

-rw-r--r-- 1 root root 219 Mar 5 12:12 4-pod-underlay.yaml

root@k8s-master1:~/underlay-cases-files# vim 1.create-underlay-network.yaml

root@k8s-master1:~/underlay-cases-files# kubectl apply -f 1.create-underlay-network.yaml

network.networking.alibaba.com/underlay-network1 created

subnet.networking.alibaba.com/underlay-network1 created

root@k8s-master1:~/underlay-cases-files# kubectl get network

NAME NETID TYPE MODE V4TOTAL V4USED V4AVAILABLE LASTALLOCATEDV4SUBNET V6TOTAL V6USED V6AVAILABLE LASTALLOCATEDV6SUBNET

init 4 Overlay 65534 2 65532 init 0 0 0

underlay-network1 0 Underlay 254 0 254 underlay-network1 0 0 0
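In the kubectl get network output above, the V4TOTAL value 65534 equals the number of usable host addresses in the /16 overlay range 10.200.0.0/16, which is 2^(32 - prefix) - 2 once the network and broadcast addresses are excluded. 254 is the corresponding count for a /24. This is an observation about the numbers shown, not a description of hybridnet's internal accounting. The function name is hypothetical.

```shell
# Usable IPv4 host addresses in a prefix of length $1:
# 2^(32 - prefix) minus the network and broadcast addresses.
# Meaningful for prefixes up to /30.
usable_hosts() {
  echo $(( (1 << (32 - $1)) - 2 ))
}

# usable_hosts 16   -> 65534   (10.200.0.0/16 overlay range)
# usable_hosts 24   -> 254
```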

2.8 Create a pod that uses the overlay network

## Create the namespace

root@k8s-master1:~/underlay-cases-files# kubectl create ns myserver

namespace/myserver created

root@k8s-master1:~/underlay-cases-files# ls

1.create-underlay-network.yaml 2.tomcat-app1-overlay.yaml 3.tomcat-app1-underlay.yaml 4-pod-underlay.yaml

root@k8s-master1:~/underlay-cases-files# vim 2.tomcat-app1-overlay.yaml

## Create the test pod and Service

root@k8s-master1:~/underlay-cases-files# kubectl apply -f 2.tomcat-app1-overlay.yaml

deployment.apps/myserver-tomcat-app1-deployment-overlay created

service/myserver-tomcat-app1-service-overlay created

## Check the pod and Service

root@k8s-master1:~/underlay-cases-files# kubectl get pod,svc -o wide -n myserver

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/myserver-tomcat-app1-deployment-overlay-f8dbf4964-hrhmg 0/1 ContainerCreating 0 28s <none> k8s-node2 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/myserver-tomcat-app1-service-overlay NodePort 172.31.5.64 <none> 80:30003/TCP 28s app=myserver-tomcat-app1-overlay-selector

## Verify overlay pod connectivity

root@k8s-master1:~/underlay-cases-files# kubectl exec -it myserver-tomcat-app1-service-overlay bash -n myserver

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

Error from server (NotFound): pods "myserver-tomcat-app1-service-overlay" not found

root@k8s-master1:~/underlay-cases-files# kubectl exec -it myserver-tomcat-app1-deployment-overlay-f8dbf4964-hrhmg bash -n myserver

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

[root@myserver-tomcat-app1-deployment-overlay-f8dbf4964-hrhmg /]# ping www.baidu.com

PING www.a.shifen.com (14.119.104.189) 56(84) bytes of data.

64 bytes from 14.119.104.189 (14.119.104.189): icmp_seq=1 ttl=127 time=35.7 ms

64 bytes from 14.119.104.189 (14.119.104.189): icmp_seq=2 ttl=127 time=33.3 ms

64 bytes from 14.119.104.189 (14.119.104.189): icmp_seq=3 ttl=127 time=40.1 ms

^C

--- www.a.shifen.com ping statistics ---

4 packets transmitted, 3 received, 25% packet loss, time 3004ms

rtt min/avg/max/mdev = 33.373/36.433/40.181/2.825 ms

2.9 Create a pod that uses the underlay network

## Underlay pods can coexist with overlay pods in the same cluster (mixed use)

root@k8s-master1:~/underlay-cases-files# kubectl apply -f 3.tomcat-app1-underlay.yaml

deployment.apps/myserver-tomcat-app1-deployment-underlay created

service/myserver-tomcat-app1-service-underlay created

## Check the created pods and Services

root@k8s-master1:~/underlay-cases-files# kubectl get pod,svc -o wide -n myserver

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/myserver-tomcat-app1-deployment-overlay-f8dbf4964-hrhmg 1/1 Running 0 14m 10.200.0.3 k8s-node2 <none> <none>

pod/myserver-tomcat-app1-deployment-underlay-5f7dd46d56-n7lcc 1/1 Running 0 86s 172.31.6.1 k8s-node3 <none> <none>

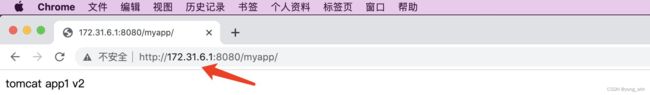

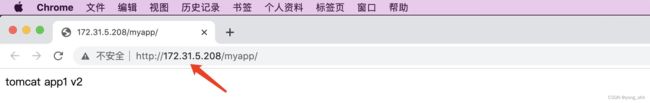

2.10 Access the Pod through its Service IP

# Check the Service information

root@k8s-master1:~/underlay-cases-files# kubectl get svc -o wide -n myserver

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

myserver-tomcat-app1-service-overlay NodePort 172.31.5.109 <none> 80:30003/TCP 20m app=myserver-tomcat-app1-overlay-selector

myserver-tomcat-app1-service-underlay ClusterIP 172.31.5.208 <none> 80/TCP 6m31s app=myserver-tomcat-app1-underlay-selector

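Note: to reach a Service from outside the cluster later, configure a static route on the network device (or on the client) that sends traffic for the Service CIDR to a specific Kubernetes node, which then answers the request. For example:

# Windows 10: run cmd as Administrator and add a persistent route

route -p add 172.31.5.0 MASK 255.255.255.0 172.31.6.204

# Linux

~# route add -net 172.31.5.0 netmask 255.255.255.0 gateway 172.31.6.204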
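The static route works because every ClusterIP is allocated from the --service-cidr passed to kubeadm init (172.31.5.0/24). The small POSIX shell sketch below, with hypothetical helper names, confirms that the Service IPs listed above fall inside that prefix and so will match the route. The underlay pod IP 172.31.6.1 does not.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
# The body runs in a subshell so the IFS change does not leak.
ip_to_int() (
  IFS=.
  set -- $1   # intentionally unquoted: split on "."
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed if address $1 lies inside CIDR $2 (e.g. 172.31.5.0/24).
ip_in_cidr() {
  local net=${2%/*} prefix=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# ip_in_cidr 172.31.5.208 172.31.5.0/24 && echo routed   # prints "routed"
```

On Linux hosts that ship iproute2 instead of net-tools, the equivalent route is `ip route add 172.31.5.0/24 via 172.31.6.204`.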

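3. Summary of the flannel VXLAN-mode communication flow

flannel communication flow - 1. pod -> 2. cni0 -> 3. eth0:

1: The source pod sends a request. In the packet, the source IP is the IP of the pod's eth0, the source MAC is the MAC of the pod's eth0, the destination IP is the destination pod's IP, and the destination MAC is the MAC of the gateway (cni0).

2: The packet travels through the veth pair to the gateway cni0. cni0 sees that the destination MAC is its own and checks the destination IP. If the destination is on the same bridge, the packet is forwarded directly. Otherwise it is sent to flannel.1. At this point the packet is:

Source IP: pod IP, 10.100.2.2

Destination IP: pod IP, 10.100.1.6

Source MAC: source pod MAC

Destination MAC: cni0 MAC

3: The packet reaches flannel.1 and the routing table is matched. The inner frame of the overlay packet is built first. The destination MAC becomes the MAC of the peer flannel.1 on the destination pod's host, and the source MAC becomes the MAC of flannel.1 on the local host:

Source IP: pod IP, 10.100.2.2

Destination IP: pod IP, 10.100.1.6

Source MAC: 9e:68:76:c4:64:0f, MAC of flannel.1 on the source pod's host

Destination MAC: de:34:a2:06:d3:56, MAC of flannel.1 on the destination pod's host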

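4. The source host encapsulates the frame in a VXLAN packet carried over UDP:

VXLAN ID (VNI): 1

UDP source port: chosen per flow (effectively random)

UDP destination port: 8472

Source IP: physical NIC IP of the source pod's host

Destination IP: physical NIC IP of the destination pod's host

Source MAC: 00:0c:29:bc:fe:d8 #physical NIC of the source pod's host

Destination MAC: 00:0c:29:56:e7:1b #physical NIC of the destination pod's host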
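RFC 7348 defines the 8-byte VXLAN header that step 4 adds. It consists of a flags byte (0x08, meaning "VNI present"), 24 reserved bits, the 24-bit VNI (1 here), and 8 more reserved bits. The outer IPv4 (20 bytes), UDP (8) and VXLAN (8) headers are added, and the inner Ethernet header (14) now travels as payload. Together they consume 50 bytes, which is why flannel.1 typically runs with an MTU of 1450 on a 1500-byte physical network. The function names below are hypothetical.

```shell
# Hypothetical helper: print the 8-byte VXLAN header (RFC 7348) for a VNI as hex.
# Byte 0: flags (0x08 = VNI present), bytes 1-3: reserved,
# bytes 4-6: 24-bit VNI, byte 7: reserved.
vxlan_header() {
  printf '08000000%06x00\n' "$1"
}

# Inner (overlay) MTU left after VXLAN encapsulation on a link with MTU $1:
# outer IPv4 (20) + UDP (8) + VXLAN (8) + inner Ethernet header (14) = 50 bytes.
vxlan_inner_mtu() {
  echo $(( $1 - 20 - 8 - 8 - 14 ))
}

# vxlan_header 1        -> 0800000000000100
# vxlan_inner_mtu 1500  -> 1450
```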

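5. The packet reaches the physical NIC of the destination host, which decapsulates it:

The outer destination IP is the local physical NIC. After removing the outer headers, the host finds another destination IP and destination MAC inside. The destination IP is 10.100.1.6 and the destination MAC is de:34:a2:06:d3:56 (the MAC of the destination host's flannel.1), so the packet is handed to flannel.1.

6. The packet reaches flannel.1 on the destination host:

flannel.1 checks the destination IP, finds that it belongs to the subnet of the local cni0, and forwards the request to cni0:

Destination IP: 10.100.1.6 #destination pod

Source IP: 10.100.2.2 #source pod

Destination MAC: de:34:a2:06:d3:56 #flannel.1 on the destination pod's host

Source MAC: 9e:68:76:c4:64:0f #MAC of flannel.1 on the source pod's host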

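7. The packet reaches cni0 on the destination host:

cni0 looks up the MAC address table for the destination IP, rewrites the destination MAC to the destination pod's MAC, and forwards the request to the pod:

Source IP: 10.100.2.2 #source pod

Destination IP: 10.100.1.6 #destination pod

Source MAC: MAC of cni0, b2:12:0d:e4:eb:46

Destination MAC: MAC of the destination pod, f2:50:98:b4:ea:01

8. The packet reaches the pod on the destination host:

cni0 sees that the packet is destined for 10.100.1.6. Its MAC address table shows that this is a local port, so it delivers the packet to the pod through that bridge port:

Destination IP: destination pod IP, 10.100.1.6

Source IP: source pod IP, 10.100.2.2

Destination MAC: destination pod MAC, f2:50:98:b4:ea:01

Source MAC: MAC of cni0, b2:12:0d:e4:eb:46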

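4. Summary of the calico IPIP-mode communication flow

calico communication flow - identify the pods' virtual NIC names

Identify the host-side NIC names of the source and destination pods. They are used for packet capture in the following steps.

Source pod:

# kubectl exec -it net-test2 -- bash   # enter the pod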

[root@net-test2 /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.200.218.62 netmask 255.255.255.255 broadcast 10.200.218.62

# ethtool -S eth0   # in the source pod: find the interface index of the veth peer

NIC statistics:

peer_ifindex: 8

rx_queue_0_xdp_packets: 0

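# On k8s-node1, ip link show lists interface index 8 (the peer_ifindex above) as the host end of the pair:

8: cali2b2e7c9e43e@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default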

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 3

root@k8s-node1:~# tcpdump -nn -vvv -i cali2b2e7c9e43e '! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53'
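The lookup above pairs the peer_ifindex reported by ethtool -S inside the pod with the host interface of the same index. The sketch below expresses that two-step lookup as text parsing, assuming the output formats shown above. The function names are hypothetical.

```shell
# Read `ethtool -S eth0` output on stdin and print the peer_ifindex value.
peer_ifindex() {
  awk -F': *' '/peer_ifindex/ { print $2 }'
}

# Read `ip link` / `ip -o link` output on stdin and print the name of the
# interface whose index is $1, without the "@ifN" suffix.
ifname_by_index() {
  awk -v idx="$1" -F': ' '$1 == idx { split($2, a, "@"); print a[1] }'
}

# In the pod:   ethtool -S eth0 | peer_ifindex       -> 8
# On the host:  ip -o link | ifname_by_index 8       -> cali2b2e7c9e43e
```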

Destination pod:

#ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.200.151.205 netmask 255.255.255.255 broadcast 10.200.151.205

/# ethtool -S eth0   # in the destination pod: find the interface index of the veth peer

NIC statistics:

peer_ifindex: 16

rx_queue_0_xdp_packets: 0

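# On k8s-node2, ip link show lists interface index 16 (the peer_ifindex above) as the host end of the pair:

16: cali32ecf57bfbe@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default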

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 7

root@k8s-node2:~# tcpdump -nn -vvv -i cali32ecf57bfbe '! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53'
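Every capture in this walkthrough repeats the same exclusion list: SSH, etcd, kube-apiserver, kubelet, ARP and DNS. Passing it unquoted also leaves the ! characters exposed to the shell. The hypothetical helper below builds the filter once and returns it as a single string that can be quoted on the tcpdump command line.

```shell
# Hypothetical helper: build a tcpdump filter that drops control-plane noise.
# Extra ports given as arguments (e.g. 2380 for etcd peers) are excluded too.
capture_filter() {
  local f='not arp' p
  for p in 22 2379 6443 10250 53 "$@"; do
    f="$f and not port $p"
  done
  printf '%s\n' "$f"
}

# root@k8s-node1:~# tcpdump -nn -vvv -i cali2b2e7c9e43e "$(capture_filter)"
# root@k8s-node1:~# tcpdump -nn -vvv -i eth0 -w 3.eth0.pcap "$(capture_filter 2380) and not host 172.31.7.101"
```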

calico communication flow - the pod's request reaches the host's virtual NIC:

1. The source pod sends a request:

[root@net-test2 /]# curl 10.200.151.205

2. The packet reaches the host NIC paired with the pod, cali2b2e7c9e43e:

root@k8s-node1:~# tcpdump -nn -vvv -i cali2b2e7c9e43e '! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53'

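calico communication flow - the packet reaches tunl0 on the source host:

3. The packet reaches tunl0 on the source host:

root@k8s-node1:~# tcpdump -nn -vvv -i tunl0 -w 2-tunl0.pcap '! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53'

At this point the source IP is the source pod IP and the destination IP is the destination pod IP. There is no MAC address, because tunl0 is a layer-3 IP-in-IP tunnel device.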

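calico communication flow - the packet reaches eth0 on the source host:

4. The packet reaches eth0 on the source host:

root@k8s-node1:~# tcpdump -nn -vvv -i eth0 -w 3.eth0.pcap '! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 and ! port 2380 and ! host 172.31.7.101'

At this point the packet is in IP-in-IP format. The outer headers carry the source and destination hosts' MAC and IP addresses, the inner IP header carries the source and destination pod IPs, and the pods' MAC addresses are not used.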
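IP-in-IP adds only one outer IPv4 header (20 bytes, IP protocol number 4) and no UDP or tunnel header, so its overhead is smaller than VXLAN's. The sketch below computes the MTU left for pod traffic, assuming a 1500-byte physical link. The function name is hypothetical.

```shell
# MTU available to pod traffic after IP-in-IP encapsulation on a link with MTU $1:
# only the outer IPv4 header (20 bytes) is added.
ipip_inner_mtu() {
  echo $(( $1 - 20 ))
}

# ipip_inner_mtu 1500  -> 1480
# ipip_inner_mtu 9000  -> 8980
```

To capture only the encapsulated traffic on the host NIC, filter on the protocol number, e.g. `tcpdump -nn -i eth0 ip proto 4`.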

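calico communication flow - the packet reaches eth0 on the destination host:

5. The packet reaches eth0 on the destination host:

The destination host receives the IP-in-IP packet from the source host. The outer headers carry the source and destination hosts' MAC and IP addresses, the inner header carries the source and destination pod IPs, and no pod MAC addresses are used.

After decapsulation, the host finds that the packet is destined for 10.200.151.205.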

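calico communication flow - the packet reaches tunl0 on the destination host:

6. The packet reaches tunl0 on the destination host:

root@k8s-node2:~# tcpdump -nn -vvv -i tunl0 -w 5-tunl0.pcap '! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 and ! port 2380 and ! host 172.31.7.101'

At tunl0 on the destination host, the source IP is the source pod IP and the destination IP is the destination pod IP. There is no MAC address.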

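calico communication flow - the packet reaches the virtual NIC on the destination host:

7. The packet reaches cali32ecf57bfbe, the host NIC paired with the destination pod:

root@k8s-node2:~# tcpdump -nn -vvv -i cali32ecf57bfbe -w 6-cali32ecf57bfbe.pcap '! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 and ! port 2380 and ! host 172.31.7.101'

The packet reaches the cali32ecf57bfbe NIC on the destination host. The source IP is now the source pod IP. The source MAC is the MAC of cali32ecf57bfbe (ee:ee:ee:ee:ee:ee, as ip link shows above), since tunl0 has no MAC. The destination IP is the destination pod IP, and the destination MAC is the destination pod's MAC. The packet is then delivered to the destination MAC, the destination pod.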

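calico communication flow - the packet reaches the destination pod:

8. The packet reaches the destination pod:

nginx-deployment-58f6c4967b-x959s:/# tcpdump -i eth0 -vvv -nn -w 7-dst-pod.pcap

nginx-deployment-58f6c4967b-x959s:/# apt-get install openssh-client   # provides scp

nginx-deployment-58f6c4967b-x959s:/# scp 7-dst-pod.pcap 172.31.7.112:/root/

The destination pod receives the request, builds the response, and sends it back to the source pod along the reverse path.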

5. Build a highly available K8s cluster from binaries

# Install Docker from the binary package

root@k8s-harbor1:/usr/local/src# tar xvf docker-20.10.19-binary-install.tar.gz

./

./docker.socket

./containerd.service

./docker.service

./sysctl.conf

./limits.conf

./docker-compose-Linux-x86_64_1.28.6

./daemon.json

./docker-20.10.19.tgz

./docker-install.sh

root@k8s-harbor1:/usr/local/src# ls

containerd.service docker-20.10.19-binary-install.tar.gz docker-compose-Linux-x86_64_1.28.6 docker.service limits.conf

daemon.json docker-20.10.19.tgz docker-install.sh docker.socket sysctl.conf

root@k8s-harbor1:/usr/local/src# bash docker-install.sh

The current system is Ubuntu 20.04.3 LTS \n \l; starting system initialization, docker-compose setup, and Docker installation

docker/

docker/docker-proxy

docker/docker-init

docker/containerd

docker/containerd-shim

docker/dockerd

docker/runc

docker/ctr

docker/docker

docker/containerd-shim-runc-v2

Starting docker server and enabling it at boot!

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /lib/systemd/system/containerd.service.

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /lib/systemd/system/docker.service.

Created symlink /etc/systemd/system/sockets.target.wants/docker.socket → /lib/systemd/system/docker.socket.

docker server installation complete. Welcome to the world of Docker!

# docker info output

root@k8s-harbor1:/usr/local/src# docker info

Client:

Context: default

Debug Mode: false

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 20.10.19

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: systemd

Cgroup Version: 1

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: io.containerd.runc.v2 io.containerd.runtime.v1.linux runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 9cd3357b7fd7218e4aec3eae239db1f68a5a6ec6

runc version: v1.1.4-0-g5fd4c4d1

init version: de40ad0

Security Options:

apparmor

seccomp

Profile: default

Kernel Version: 5.4.0-81-generic

Operating System: Ubuntu 20.04.3 LTS

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 1.913GiB

Name: k8s-harbor1.example.com

ID: XY4C:NFC5:ZLZM:GKFH:QW5O:HYAK:QYF4:XGMQ:DP22:QAGV:VUEM:O4P6

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

harbor.magedu.com

harbor.myserver.com

172.31.7.105

127.0.0.0/8

Registry Mirrors:

https://9916w1ow.mirror.aliyuncs.com/

Live Restore Enabled: false

Product License: Community Engine

WARNING: No swap limit support

# Generate a private key and issue a self-signed certificate with openssl

root@k8s-harbor1:/apps# tar xvf harbor-offline-installer-v2.6.3.tgz

harbor/harbor.v2.6.3.tar.gz

harbor/prepare

harbor/LICENSE

harbor/install.sh

harbor/common.sh

harbor/harbor.yml.tmpl

root@k8s-harbor1:/apps# cd harbor/

root@k8s-harbor1:/apps/harbor# mkdir certs

root@k8s-harbor1:/apps/harbor# cd certs/

root@k8s-harbor1:/apps/harbor/certs# openssl genrsa -out harbor-ca.key

Generating RSA private key, 2048 bit long modulus (2 primes)

..............+++++

.................................................................................................................................................................................................+++++

e is 65537 (0x010001)

root@k8s-harbor1:/apps/harbor/certs# ls

harbor-ca.key

root@k8s-harbor1:/apps/harbor/certs# openssl req -x509 -new -nodes -key harbor-ca.key -subj "/CN=harbor.linuxarchitect.io" -days 7120 -out harbor-ca.crt

root@k8s-harbor1:/apps/harbor/certs# ls

harbor-ca.crt harbor-ca.key
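Clients built with Go 1.15 or later, which includes recent Docker and containerd releases, reject by default a server certificate that names the host only in the CN. They report an error about the legacy Common Name field. Below is a variant of the command above that also writes a subjectAltName. It assumes OpenSSL 1.1.1 or later for -addext and keeps the file names used in harbor.yml. The function name is hypothetical.

```shell
# Hypothetical helper: create harbor-ca.key / harbor-ca.crt in directory $1 for host
# name $2, with the name in both CN and subjectAltName. Requires OpenSSL >= 1.1.1.
# Usage: make_registry_cert <dir> <hostname> [days]
make_registry_cert() {
  local dir=$1 host=$2 days=${3:-7120}
  openssl genrsa -out "$dir/harbor-ca.key" 2048 2>/dev/null || return 1
  openssl req -x509 -new -nodes -key "$dir/harbor-ca.key" \
    -subj "/CN=$host" \
    -addext "subjectAltName=DNS:$host" \
    -days "$days" -out "$dir/harbor-ca.crt"
}

# make_registry_cert /apps/harbor/certs harbor.linuxarchitect.io
```

Docker hosts can then trust the self-signed registry by copying harbor-ca.crt to /etc/docker/certs.d/harbor.linuxarchitect.io/ca.crt.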

# Create a filesystem for /data/harbor, used later as the Harbor data directory

root@k8s-harbor1:/apps/harbor/certs# mkfs.xfs /dev/sdb

meta-data=/dev/sdb isize=512 agcount=4, agsize=3276800 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1

data = bsize=4096 blocks=13107200, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=6400, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

root@k8s-harbor1:/apps/harbor/certs# mkdir /data/harbor -p

root@k8s-harbor1:/apps/harbor/certs# vi /etc/fstab

root@k8s-harbor1:/apps/harbor/certs# mount -a

root@k8s-harbor1:/apps/harbor/certs# df -h

Filesystem Size Used Avail Use% Mounted on

udev 936M 0 936M 0% /dev

tmpfs 196M 1.3M 195M 1% /run

/dev/mapper/ubuntu--vg-ubuntu--lv 119G 7.8G 112G 7% /

tmpfs 980M 0 980M 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 980M 0 980M 0% /sys/fs/cgroup

/dev/sda2 976M 107M 803M 12% /boot

/dev/loop1 68M 68M 0 100% /snap/lxd/22753

/dev/loop2 62M 62M 0 100% /snap/core20/1581

/dev/loop0 56M 56M 0 100% /snap/core18/2714

/dev/loop3 56M 56M 0 100% /snap/core18/2538

/dev/loop4 92M 92M 0 100% /snap/lxd/24061

/dev/loop5 50M 50M 0 100% /snap/snapd/18596

tmpfs 196M 0 196M 0% /run/user/0

/dev/sdb 50G 390M 50G 1% /data/harbor
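The /etc/fstab line added with vi is not shown above. One way to add an equivalent entry non-interactively and idempotently is sketched below. It assumes the xfs filesystem on /dev/sdb, refers to it by UUID so that device renaming does not break the mount, and uses "defaults 0 0" as mount options. The function name and the options are assumptions, not the author's actual entry.

```shell
# Hypothetical helper: append an fstab entry unless something is already
# mounted at the same mount point.
# Usage: add_fstab_entry <fstab-file> <source> <mountpoint> <fstype>
add_fstab_entry() {
  local fstab=$1 src=$2 mnt=$3 fstype=$4
  # awk exits 0 if a non-comment line already uses this mount point.
  if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
    return 0
  fi
  printf '%s %s %s defaults 0 0\n' "$src" "$mnt" "$fstype" >> "$fstab"
}

# add_fstab_entry /etc/fstab "UUID=$(blkid -s UUID -o value /dev/sdb)" /data/harbor xfs
# mount -a
```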

# Edit the Harbor configuration file

root@k8s-harbor1:/apps/harbor# grep -v "#" harbor.yml | grep -v "^$"

hostname: harbor.linuxarchitect.io
http:
  port: 80
https:
  port: 443
  certificate: /apps/harbor/certs/harbor-ca.crt
  private_key: /apps/harbor/certs/harbor-ca.key
harbor_admin_password: 123456
database:
  password: root123
  max_idle_conns: 100
  max_open_conns: 900
data_volume: /data/harbor

# Run the installer

root@k8s-harbor1:/apps/harbor# ./install.sh --with-trivy --with-chartmuseum

[Step 0]: checking if docker is installed ...

Note: docker version: 20.10.19

[Step 1]: checking docker-compose is installed ...

Note: docker-compose version: 1.28.6

[Step 2]: loading Harbor images ...

ed6c4a2423e8: Loading layer [==================================================>] 37.72MB/37.72MB

c8776c17f955: Loading layer [==================================================>] 119.1MB/119.1MB

5f120e4803a2: Loading layer [==================================================>] 7.538MB/7.538MB

58dbf2e2daf0: Loading layer [==================================================>] 1.185MB/1.185MB

Loaded image: goharbor/harbor-portal:v2.6.3

1c09f6f53666: Loading layer [==================================================>] 127MB/127MB

6f4e5282749c: Loading layer [==================================================>] 3.584kB/3.584kB

aeaec4bb0bba: Loading layer [==================================================>] 3.072kB/3.072kB

2429e67351ee: Loading layer [==================================================>] 2.56kB/2.56kB

b410f00133a5: Loading layer [==================================================>] 3.072kB/3.072kB

e8f4e26fb91d: Loading layer [==================================================>] 3.584kB/3.584kB

6223393fe2f5: Loading layer [==================================================>] 20.99kB/20.99kB

Loaded image: goharbor/harbor-log:v2.6.3

026a81c8f4b3: Loading layer [==================================================>] 5.759MB/5.759MB

f6b33ea5f36a: Loading layer [==================================================>] 4.096kB/4.096kB

2153df6352bf: Loading layer [==================================================>] 17.11MB/17.11MB

875409599e37: Loading layer [==================================================>] 3.072kB/3.072kB

81d6dfdd2e4a: Loading layer [==================================================>] 29.71MB/29.71MB

f2da68271a27: Loading layer [==================================================>] 47.61MB/47.61MB

Loaded image: goharbor/harbor-registryctl:v2.6.3

884a1f5c9843: Loading layer [==================================================>] 8.902MB/8.902MB

2ddf1b4d43bf: Loading layer [==================================================>] 25.08MB/25.08MB

81eb1b23bbb5: Loading layer [==================================================>] 4.608kB/4.608kB

9e58c43954b6: Loading layer [==================================================>] 25.88MB/25.88MB

Loaded image: goharbor/harbor-exporter:v2.6.3

bc0c522810c4: Loading layer [==================================================>] 8.902MB/8.902MB

9fbf2bb4023c: Loading layer [==================================================>] 3.584kB/3.584kB

98b4939c60c8: Loading layer [==================================================>] 2.56kB/2.56kB

045d575b759b: Loading layer [==================================================>] 102.3MB/102.3MB

1f466184225a: Loading layer [==================================================>] 103.1MB/103.1MB

Loaded image: goharbor/harbor-jobservice:v2.6.3

566336e05ba3: Loading layer [==================================================>] 119.9MB/119.9MB

44facf87cb8c: Loading layer [==================================================>] 3.072kB/3.072kB

d9d4a35201e9: Loading layer [==================================================>] 59.9kB/59.9kB

94110a561bc1: Loading layer [==================================================>] 61.95kB/61.95kB

Loaded image: goharbor/redis-photon:v2.6.3

cacdd58f45fd: Loading layer [==================================================>] 5.754MB/5.754MB

3bb8655c543f: Loading layer [==================================================>] 8.735MB/8.735MB

6a7367cd9912: Loading layer [==================================================>] 15.88MB/15.88MB

296e3d2fd99f: Loading layer [==================================================>] 29.29MB/29.29MB

ca60e55cc5ea: Loading layer [==================================================>] 22.02kB/22.02kB

dfcecee54d65: Loading layer [==================================================>] 15.88MB/15.88MB

Loaded image: goharbor/notary-server-photon:v2.6.3

89043341a274: Loading layer [==================================================>] 43.84MB/43.84MB

9d023d2a97b6: Loading layer [==================================================>] 66.03MB/66.03MB

61c36c0b0ac2: Loading layer [==================================================>] 18.21MB/18.21MB

91f5f58d0482: Loading layer [==================================================>] 65.54kB/65.54kB

4f6269635897: Loading layer [==================================================>] 2.56kB/2.56kB

a98564e3a1f8: Loading layer [==================================================>] 1.536kB/1.536kB

173bcb5d11b3: Loading layer [==================================================>] 12.29kB/12.29kB

bcf887d06d88: Loading layer [==================================================>] 2.613MB/2.613MB

f20d7a395ffa: Loading layer [==================================================>] 379.9kB/379.9kB

Loaded image: goharbor/prepare:v2.6.3

c08fb233db28: Loading layer [==================================================>] 8.902MB/8.902MB

7da1ae52a1a6: Loading layer [==================================================>] 3.584kB/3.584kB

83cf11f9e71b: Loading layer [==================================================>] 2.56kB/2.56kB

edda2f6a1e4c: Loading layer [==================================================>] 83.92MB/83.92MB

8742d75dc503: Loading layer [==================================================>] 5.632kB/5.632kB

c9b2016053d9: Loading layer [==================================================>] 106.5kB/106.5kB

b1803d4adaaa: Loading layer [==================================================>] 44.03kB/44.03kB

2f1a1ce6928d: Loading layer [==================================================>] 84.87MB/84.87MB

04316e994e93: Loading layer [==================================================>] 2.56kB/2.56kB

Loaded image: goharbor/harbor-core:v2.6.3

dab3848549c0: Loading layer [==================================================>] 119.1MB/119.1MB

Loaded image: goharbor/nginx-photon:v2.6.3

036fd2d1c3c4: Loading layer [==================================================>] 5.759MB/5.759MB

01b862c716d4: Loading layer [==================================================>] 4.096kB/4.096kB

2cee2bc0cc47: Loading layer [==================================================>] 3.072kB/3.072kB

998cc489ba67: Loading layer [==================================================>] 17.11MB/17.11MB

9ac4078c0b12: Loading layer [==================================================>] 17.9MB/17.9MB

Loaded image: goharbor/registry-photon:v2.6.3

659fadc582e7: Loading layer [==================================================>] 1.097MB/1.097MB

9f40d7d801f0: Loading layer [==================================================>] 5.889MB/5.889MB

2c2d126c3429: Loading layer [==================================================>] 169.1MB/169.1MB

79926d613579: Loading layer [==================================================>] 16.91MB/16.91MB

ddefe0eb652b: Loading layer [==================================================>] 4.096kB/4.096kB

6fc9e4dd18a4: Loading layer [==================================================>] 6.144kB/6.144kB

8ed4b870f096: Loading layer [==================================================>] 3.072kB/3.072kB

081c5440b79c: Loading layer [==================================================>] 2.048kB/2.048kB

0eff6e82f1ce: Loading layer [==================================================>] 2.56kB/2.56kB

8b5978d0cdd0: Loading layer [==================================================>] 2.56kB/2.56kB

3319997d6569: Loading layer [==================================================>] 2.56kB/2.56kB

1bf8f6ab8e25: Loading layer [==================================================>] 8.704kB/8.704kB

Loaded image: goharbor/harbor-db:v2.6.3

1db48763bb6c: Loading layer [==================================================>] 5.754MB/5.754MB

d051f6488df9: Loading layer [==================================================>] 8.735MB/8.735MB

7ea2b3f7e6f6: Loading layer [==================================================>] 14.47MB/14.47MB

1cb265dac032: Loading layer [==================================================>] 29.29MB/29.29MB

144284088cbf: Loading layer [==================================================>] 22.02kB/22.02kB

5ac59fccf6fb: Loading layer [==================================================>] 14.47MB/14.47MB

Loaded image: goharbor/notary-signer-photon:v2.6.3

2a92cdbfe831: Loading layer [==================================================>] 6.287MB/6.287MB

02ccf0420a48: Loading layer [==================================================>] 4.096kB/4.096kB

ade777038c1d: Loading layer [==================================================>] 3.072kB/3.072kB

46b542154bbd: Loading layer [==================================================>] 180.6MB/180.6MB

0f964f621fcb: Loading layer [==================================================>] 13.38MB/13.38MB

b884febe7cf8: Loading layer [==================================================>] 194.7MB/194.7MB

Loaded image: goharbor/trivy-adapter-photon:v2.6.3

1b69ea382f2d: Loading layer [==================================================>] 5.759MB/5.759MB

cd7f5f488cf0: Loading layer [==================================================>] 90.88MB/90.88MB

393f6387bb7a: Loading layer [==================================================>] 3.072kB/3.072kB

53997c89d1fd: Loading layer [==================================================>] 4.096kB/4.096kB

73b80a5cb9ac: Loading layer [==================================================>] 91.67MB/91.67MB

Loaded image: goharbor/chartmuseum-photon:v2.6.3

[Step 3]: preparing environment ...

[Step 4]: preparing harbor configs ...

prepare base dir is set to /apps/harbor

Generated configuration file: /config/portal/nginx.conf

Generated configuration file: /config/log/logrotate.conf

Generated configuration file: /config/log/rsyslog_docker.conf

Generated configuration file: /config/nginx/nginx.conf

Generated configuration file: /config/core/env

Generated configuration file: /config/core/app.conf

Generated configuration file: /config/registry/config.yml

Generated configuration file: /config/registryctl/env

Generated configuration file: /config/registryctl/config.yml

Generated configuration file: /config/db/env

Generated configuration file: /config/jobservice/env

Generated configuration file: /config/jobservice/config.yml

Generated and saved secret to file: /data/secret/keys/secretkey

Successfully called func: create_root_cert

Generated configuration file: /config/trivy-adapter/env

Generated configuration file: /config/chartserver/env

Generated configuration file: /compose_location/docker-compose.yml

Clean up the input dir

Note: stopping existing Harbor instance ...

Removing network harbor_harbor

WARNING: Network harbor_harbor not found.

Removing network harbor_harbor-chartmuseum

WARNING: Network harbor_harbor-chartmuseum not found.

[Step 5]: starting Harbor ...

➜

Chartmusuem will be deprecated as of Harbor v2.6.0 and start to be removed in v2.8.0 or later.

Please see discussion here for more details. https://github.com/goharbor/harbor/discussions/15057

Creating network "harbor_harbor" with the default driver

Creating network "harbor_harbor-chartmuseum" with the default driver

Creating harbor-log ... done

Creating redis ... done

Creating chartmuseum ... done

Creating registryctl ... done

Creating harbor-portal ... done

Creating harbor-db ... done

Creating registry ... done

Creating trivy-adapter ... done

Creating harbor-core ... done

Creating harbor-jobservice ... done

Creating nginx ... done

✔ ----Harbor has been installed and started successfully.----
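The success message does not prove that every container listed above is actually running, so a quick check can help. The following is a minimal POSIX sh sketch. `check_harbor_containers` is a hypothetical helper, not part of Harbor. It assumes its input comes from `docker ps -a --format '{{.Names}} {{.Status}}'`, where the status of a running container starts with `Up`.

```shell
# Hypothetical helper (assumption, not shipped with Harbor): report Harbor
# containers that are missing, not running, or marked unhealthy.
# Input on stdin: "<name> <status...>" lines, e.g. from
#   docker ps -a --format '{{.Names}} {{.Status}}'
# The names below are the containers created by install.sh in the log above.
HARBOR_CONTAINERS="harbor-log redis chartmuseum registryctl harbor-portal
harbor-db registry trivy-adapter harbor-core harbor-jobservice nginx"

check_harbor_containers() {
  ps_out=$(cat)
  rc=0
  for name in $HARBOR_CONTAINERS; do   # unquoted on purpose: split on whitespace
    # field 1 is the container name; the rest of the line is its status
    cstatus=$(printf '%s\n' "$ps_out" |
      awk -v n="$name" '$1 == n { $1 = ""; sub(/^ /, ""); print; exit }')
    case "$cstatus" in
      *"(unhealthy)"*) echo "${name}: ${cstatus}"; rc=1 ;;
      Up*) ;;                          # running (healthy or still starting)
      *) echo "${name}: ${cstatus:-not found}"; rc=1 ;;
    esac
  done
  return "$rc"
}
```

Usage on the Harbor host: `docker ps -a --format '{{.Names}} {{.Status}}' | check_harbor_containers`. A non-zero exit status means at least one container is absent, stopped, restarting, or reported as unhealthy.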

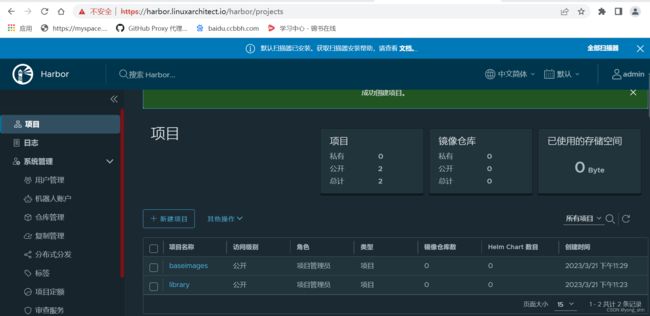

#Access Harbor and create a new project named baseimages
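The project can also be created through Harbor's v2.0 REST API instead of the web UI, which is useful when scripting the setup. This is a minimal sketch that rests on several assumptions. The URL is a placeholder. `admin` / `Harbor12345` is Harbor's default admin login unless it was changed in harbor.yml. Making the project public is a choice made here, not something stated in these notes. `create_harbor_project` is a hypothetical helper. `-k` skips TLS verification for a self-signed certificate and should be dropped once the certificate is trusted.

```shell
# Hypothetical values (assumptions): override via environment variables.
HARBOR_URL="${HARBOR_URL:-https://harbor.example.com}"
HARBOR_USER="${HARBOR_USER:-admin}"
HARBOR_PASS="${HARBOR_PASS:-Harbor12345}"

# Hypothetical helper: create a public project with the given name through
# POST /api/v2.0/projects and print only the HTTP status code.
create_harbor_project() {
  curl -sS -k -o /dev/null -w '%{http_code}\n' \
    -u "${HARBOR_USER}:${HARBOR_PASS}" \
    -H 'Content-Type: application/json' \
    -X POST "${HARBOR_URL}/api/v2.0/projects" \
    -d "{\"project_name\": \"$1\", \"metadata\": {\"public\": \"true\"}}"
}
```

For example, `create_harbor_project baseimages` prints the status code returned by Harbor. A value of 201 means the project was created.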

#Configure the high-availability load balancer

##Install keepalived and haproxy

root@k8s-ha1:~# apt update

root@k8s-ha1:~# apt install keepalived haproxy -y

Reading package lists... Done

Building dependency tree

Reading state information... Done

##Edit the configuration (only the virtual_ipaddress block is shown)

root@k8s-ha1:~# cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

root@k8s-ha1:~# vim /etc/keepalived/keepalived.conf

virtual_ipaddress {

192.168.7.188 dev eth1 label eth1:0

192.168.7.189 dev eth1 label eth1:1

192.168.7.190 dev eth1 label eth1:2

192.168.7.191 dev eth1 label eth1:3

192.168.7.192 dev eth1 label eth1:4

}
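The excerpt above shows only the `virtual_ipaddress` block. For reference, a complete file for this node could be generated as shown below. Only the interface `eth1` and the five VIPs come from these notes. Everything else is assumed: `gen_keepalived_conf` is a hypothetical helper, and the instance name `VI_1`, `virtual_router_id 51`, `advert_int 1` and the PASS password `1111` are meant to mirror the copied `keepalived.conf.vrrp` sample, so check them against your copy.

```shell
# Hypothetical helper: print a keepalived.conf for one node.
# Arguments: STATE PRIORITY INTERFACE VIP...
# Assumed values (from the keepalived.conf.vrrp sample, verify locally):
# VI_1, virtual_router_id 51, advert_int 1, auth_pass 1111.
gen_keepalived_conf() {
  state=$1 priority=$2 iface=$3
  shift 3                              # remaining arguments are the VIPs
  cat <<EOF
global_defs {
   router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
EOF
  i=0
  for vip in "$@"; do
    # one alias label per VIP: eth1:0, eth1:1, ... as shown by ifconfig
    echo "        ${vip} dev ${iface} label ${iface}:${i}"
    i=$((i + 1))
  done
  printf '    }\n}\n'
}
```

For example, on k8s-ha1: `gen_keepalived_conf MASTER 100 eth1 192.168.7.188 192.168.7.189 192.168.7.190 192.168.7.191 192.168.7.192 > /etc/keepalived/keepalived.conf`. A second HA node, if one is used, would take the same arguments with `BACKUP` and a lower priority, for example 80 (an arbitrary value). `virtual_router_id` and the authentication password must be identical on both nodes so that they belong to the same VRRP group.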

root@k8s-ha1:~# systemctl restart keepalived
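After the restart, the VIPs should be bound to eth1, and `ifconfig` lists them as the aliases eth1:0 to eth1:4. The check below is a scriptable alternative to reading that output by eye. `check_vips` is a hypothetical helper. It assumes one line per address from `ip -4 -o addr show dev eth1`, where the fourth field is `ADDRESS/PREFIX`.

```shell
# VIPs configured in keepalived.conf on this node.
VIPS="192.168.7.188 192.168.7.189 192.168.7.190 192.168.7.191 192.168.7.192"

# Hypothetical helper: read "ip -4 -o addr show dev eth1" output on stdin
# and report every VIP that is not bound.
check_vips() {
  # field 4 of "ip -o" output is ADDRESS/PREFIX; keep only the address
  addrs=$(awk '{ split($4, a, "/"); print a[1] }')
  rc=0
  for vip in $VIPS; do
    # -x: whole-line match, -F: fixed string, so .188 never matches .18
    if ! printf '%s\n' "$addrs" | grep -qxF "$vip"; then
      echo "VIP not bound: $vip"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `ip -4 -o addr show dev eth1 | check_vips && echo "all VIPs bound"`. On a BACKUP node this check is expected to fail while the MASTER is healthy, because VRRP binds the VIPs only on the current master.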

root@k8s-ha1:~# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.31.7.109 netmask 255.255.248.0 broadcast 172.31.7.255

inet6 fe80::20c:29ff:fe9f:ffb9 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:9f:ff:b9 txqueuelen 1000 (Ethernet)

RX packets 25920 bytes 38338087 (38.3 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3323 bytes 253165 (253.1 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.7.109 netmask 255.255.248.0 broadcast 192.168.7.255

inet6 fe80::20c:29ff:fe9f:ffc3 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:9f:ff:c3 txqueuelen 1000 (Ethernet)

RX packets 2247 bytes 172776 (172.7 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2420 bytes 358069 (358.0 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1:0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.7.188 netmask 255.255.255.255 broadcast 0.0.0.0

ether 00:0c:29:9f:ff:c3 txqueuelen 1000 (Ethernet)

eth1:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.7.189 netmask 255.255.255.255 broadcast 0.0.0.0

ether 00:0c:29:9f:ff:c3 txqueuelen 1000 (Ethernet)

eth1:2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.7.190 netmask 255.255.255.255 broadcast 0.0.0.0

ether 00:0c:29:9f:ff:c3 txqueuelen 1000 (Ethernet)

eth1:3: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.7.191 netmask 255.255.255.255 broadcast 0.0.0.0

ether 00:0c:29:9f:ff:c3 txqueuelen 1000 (Ethernet)

eth1:4: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.7.192 netmask 255.255.255.255 broadcast 0.0.0.0

ether 00:0c:29:9f:ff:c3 txqueuelen 1000 (Ethernet)

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0