Getting Started with Istio 1.7.1: Concepts and Walkthrough

Getting Started with Istio

What Is Istio

Reference link

- Concepts

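Istio is an open platform that provides a uniform way to integrate microservices, manage traffic between them, enforce policies, and aggregate telemetry data. Istio's control plane provides an abstraction layer on top of the underlying cluster management platform (such as Kubernetes), and this abstraction layer makes managing Kubernetes easier. As a well-known saying in IT goes: "All problems in computer science can be solved by another level of indirection, except for the problem of too many layers of indirection." Here, "indirection" is often deliberately read as "abstraction layer".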

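Istio helps reduce the complexity of deploying microservices and eases the load on DevOps teams. It is a fully open-source service mesh that layers transparently onto existing distributed applications. It is also a platform, with APIs that let it integrate with any logging platform, telemetry system, or policy system. Istio provides a uniform way to secure, connect, and monitor microservices.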

- Architecture

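- Envoy: labeled "Proxy" in the architecture diagram and sometimes called the sidecar. The sidecar proxy attached to each microservice handles inbound and outbound traffic between services inside the cluster, as well as traffic to and from external services. Together these proxies form a secure microservice mesh with a rich feature set: service discovery, rich layer-7 routing, circuit breaking, policy enforcement, and telemetry recording and reporting.

- Istiod: the Istio control plane. It provides service discovery, configuration, and certificate management, and is made up of the following parts:

  - Pilot: configures the proxies at runtime. It provides service discovery for the Envoy sidecars, and traffic management for intelligent routing (A/B testing, canary deployments, and so on) and resilience (timeouts, retries, circuit breakers, and so on). It translates high-level routing rules that control traffic behavior into Envoy-specific configuration and propagates that configuration to the sidecars at runtime.

  - Citadel: issues and rotates certificates. Its built-in identity and credential management enables strong service-to-service and end-user authentication. It can be used to upgrade unencrypted traffic in the mesh, and it lets operators enforce policies based on service identity rather than on network controls.

  - Galley: validates, ingests, aggregates, transforms, and distributes configuration within Istio.

- Operator: provides user-friendly options for operating the Istio service mesh.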
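To see the data-plane side of this architecture on a running cluster, a quick check is whether a pod actually carries the Envoy sidecar. The sketch below is a hypothetical helper (`has_sidecar` is not an Istio or kubectl command); it relies only on the fact that Istio injects the proxy as a container named `istio-proxy`.

```bash
# Hypothetical helper (not part of Istio): report whether a pod has the Envoy
# sidecar, which Istio injects as a container named "istio-proxy".
# Usage: has_sidecar POD [NAMESPACE]
has_sidecar() {
  local pod="$1" ns="${2:-default}" containers
  # Space-separated names of the pod's regular (non-init) containers.
  containers=$(kubectl get pod "$pod" -n "$ns" \
    -o jsonpath='{.spec.containers[*].name}') || return 2
  case " $containers " in
    *" istio-proxy "*) echo "$pod: sidecar injected"; return 0 ;;
    *)                 echo "$pod: no sidecar"; return 1 ;;
  esac
}
```

Once Bookinfo is deployed later in this walkthrough, `has_sidecar details-v1-5974b67c8-26swv` (a pod name from my environment) should report an injected sidecar.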

Istio Quick Start

- Installation

Before deploying Istio, we need a Kubernetes environment. If you only want to try Istio out, Minikube or Kind is a quick way to set one up.

Once Kubernetes is running and kubectl is configured, we can get started.

- Download and extract the latest Istio release (1.7.1 at the time of writing; to pin this exact version, run `curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.7.1 sh -`):

```
[root@k8s-master k8s]# curl -L https://istio.io/downloadIstio | sh -
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100   102  100   102    0     0     58      0  0:00:01  0:00:01 --:--:--    58
100  4277  100  4277    0     0   1720      0  0:00:02  0:00:02 --:--:--  596k
Downloading istio-1.7.1 from https://github.com/istio/istio/releases/download/1.7.1/istio-1.7.1-linux-amd64.tar.gz ...
Istio 1.7.1 Download Complete!

Istio has been successfully downloaded into the istio-1.7.1 folder on your system.

Next Steps:
See https://istio.io/latest/docs/setup/install/ to add Istio to your Kubernetes cluster.

To configure the istioctl client tool for your workstation,
add the /appsdata/k8s/istio-1.7.1/bin directory to your environment path variable with:
    export PATH="$PATH:/appsdata/k8s/istio-1.7.1/bin"

Begin the Istio pre-installation check by running:
    istioctl x precheck

Need more information? Visit https://istio.io/latest/docs/setup/install/
```

Note: the precheck and the installation need access to the Kubernetes API server, so it is best to run them on the k8s-master node. When I tried on a worker node that had no access to the API server, I got the error below. (If the node can reach the API server, the error should not occur.) The `localhost:8080` in the message means the client found no kubeconfig on that node and fell back to its default address.

```
[root@k8s-node1 istio-1.7.1]# istioctl x precheck

Checking the cluster to make sure it is ready for Istio installation...

#1. Kubernetes-api
-----------------------
Can initialize the Kubernetes client.
Failed to query the Kubernetes API Server: Get "http://localhost:8080/version?timeout=32s": dial tcp [::1]:8080: connect: connection refused.
Istio install NOT verified because the cluster is unreachable.
Error: 1 error occurred:
    * failed to query the Kubernetes API Server: Get "http://localhost:8080/version?timeout=32s": dial tcp [::1]:8080: connect: connection refused
```
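A minimal pre-flight check for this situation is to confirm that a kubeconfig exists before running istioctl. This is a sketch: `find_kubeconfig` is a hypothetical helper, it treats `KUBECONFIG` as a single path (kubectl also accepts a colon-separated list), and the `/etc/kubernetes/admin.conf` hint assumes a kubeadm-built cluster.

```bash
# Hypothetical helper: print the kubeconfig kubectl/istioctl would most likely
# use, or explain why the client will fall back to http://localhost:8080.
# Simplification: KUBECONFIG is treated as one path, not a colon-separated list.
find_kubeconfig() {
  local cfg="${KUBECONFIG:-$HOME/.kube/config}"
  if [ -r "$cfg" ]; then
    echo "$cfg"
    return 0
  fi
  echo "no readable kubeconfig at $cfg" >&2
  # Assumption: kubeadm clusters keep an admin kubeconfig at this path on the master.
  echo "hint: copy one from the master, e.g. /etc/kubernetes/admin.conf" >&2
  return 1
}
```

If the check fails on a worker node, copying the admin kubeconfig from the master (for example `mkdir -p ~/.kube && scp root@k8s-master:/etc/kubernetes/admin.conf ~/.kube/config`) lets istioctl reach the API server. Keep in mind that this gives the node full cluster-admin credentials.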

- Add the istioctl command-line tool to PATH and verify that the Kubernetes cluster meets Istio's deployment requirements:

```
[root@k8s-master k8s]# export PATH="$PATH:/appsdata/k8s/istio-1.7.1/bin"
[root@k8s-master k8s]# istioctl x precheck

Checking the cluster to make sure it is ready for Istio installation...

#1. Kubernetes-api
-----------------------
Can initialize the Kubernetes client.
Can query the Kubernetes API Server.

#2. Kubernetes-version
-----------------------
Istio is compatible with Kubernetes: v1.16.3.

#3. Istio-existence
-----------------------
Istio will be installed in the istio-system namespace.

#4. Kubernetes-setup
-----------------------
Can create necessary Kubernetes configurations: Namespace,ClusterRole,ClusterRoleBinding,CustomResourceDefinition,Role,ServiceAccount,Service,Deployments,ConfigMap.

#5. SideCar-Injector
-----------------------
This Kubernetes cluster supports automatic sidecar injection. To enable automatic sidecar injection see https://istio.io/docs/setup/kubernetes/additional-setup/sidecar-injection/#deploying-an-app

-----------------------
Install Pre-Check passed! The cluster is ready for Istio installation.
```
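The `export` above only lasts for the current shell session. A small sketch for making it permanent, assuming bash reads `~/.bashrc` and the install path used in this walkthrough; `persist_istioctl_path` is a hypothetical helper that appends the line only once, so running it repeatedly is harmless.

```bash
# Install path from this walkthrough; adjust to where you extracted Istio.
ISTIO_BIN=/appsdata/k8s/istio-1.7.1/bin

# Hypothetical helper: append the PATH export to a profile file exactly once.
persist_istioctl_path() {
  local profile="${1:-$HOME/.bashrc}"
  # \$PATH stays literal so it is expanded when the profile is sourced.
  local line="export PATH=\"\$PATH:$ISTIO_BIN\""
  touch "$profile"
  # -x whole-line match, -F fixed string, -q quiet: skip if already present.
  grep -qxF "$line" "$profile" || echo "$line" >> "$profile"
}
```

After `persist_istioctl_path`, run `source ~/.bashrc` (or open a new shell) to pick up the change.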

- Install Istio with the demo profile and enable sidecar injection. Automatic injection relies on istio-sidecar-injector, so the target namespace must be labeled `istio-injection=enabled` before the application is deployed:

```
[root@k8s-master k8s]# cd istio-1.7.1
[root@k8s-master istio-1.7.1]# istioctl install --set profile=demo
Detected that your cluster does not support third party JWT authentication. Falling back to less secure first party JWT. See https://istio.io/docs/ops/best-practices/security/#configure-third-party-service-account-tokens for details.
✔ Istio core installed
✔ Istiod installed
✔ Egress gateways installed
✔ Ingress gateways installed
✔ Installation complete
[root@k8s-master istio-1.7.1]# kubectl label namespace default istio-injection=enabled
namespace/default labeled
```
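Before deploying, the label can also be checked from a script. This sketch builds on the same `kubectl get namespace -L istio-injection` command used here; `injection_enabled` is a hypothetical name, and the awk column index assumes the default NAME, STATUS, AGE, ISTIO-INJECTION layout.

```bash
# Hypothetical helper: succeed only if the namespace has istio-injection=enabled.
# Assumes the column layout NAME STATUS AGE ISTIO-INJECTION.
injection_enabled() {
  local ns="${1:-default}" label
  # -L adds an ISTIO-INJECTION column, which is empty when the label is absent.
  label=$(kubectl get namespace "$ns" -L istio-injection --no-headers | awk '{print $4}')
  [ "$label" = "enabled" ]
}
```

For example: `injection_enabled default || kubectl label namespace default istio-injection=enabled --overwrite`. Pods that already existed before the label was added only get a sidecar once they are recreated, for instance with `kubectl rollout restart deployment -n default`.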

- First, make sure automatic injection is enabled in the default namespace:

```
[root@k8s-master istio-1.7.1]# kubectl get namespace -L istio-injection
NAME                   STATUS   AGE     ISTIO-INJECTION
default                Active   14d     enabled
istio-system           Active   3h15m   disabled
kube-node-lease        Active   23h
kube-public            Active   14d
kube-system            Active   14d
kubernetes-dashboard   Active   14d
```

- Deploy the Bookinfo application from the Istio directory:

```
[root@k8s-master istio-1.7.1]# kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml
service/details created
serviceaccount/bookinfo-details created
deployment.apps/details-v1 created
service/ratings created
serviceaccount/bookinfo-ratings created
deployment.apps/ratings-v1 created
service/reviews created
serviceaccount/bookinfo-reviews created
deployment.apps/reviews-v1 created
deployment.apps/reviews-v2 created
deployment.apps/reviews-v3 created
service/productpage created
serviceaccount/bookinfo-productpage created
deployment.apps/productpage-v1 created
```

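- Wait a few minutes (depending on your network) and confirm that all services and pods are running. Each pod should show READY 2/2: the application container plus the injected istio-proxy sidecar.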

```
[root@k8s-master istio-1.7.1]# kubectl get services
NAME          TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
details       ClusterIP   10.102.104.191   <none>        9080/TCP   5m18s
kubernetes    ClusterIP   10.96.0.1        <none>        443/TCP    14d
productpage   ClusterIP   10.106.155.91    <none>        9080/TCP   5m18s
ratings       ClusterIP   10.110.240.172   <none>        9080/TCP   5m18s
reviews       ClusterIP   10.102.154.134   <none>        9080/TCP   5m18s
[root@k8s-master istio-1.7.1]# kubectl get pods
NAME                              READY   STATUS    RESTARTS   AGE
details-v1-5974b67c8-26swv        2/2     Running   0          5m50s
productpage-v1-64794f5db4-bnkfv   2/2     Running   0          5m49s
ratings-v1-c6cdf8d98-vszfb        2/2     Running   0          5m49s
reviews-v1-7f6558b974-v77r2       2/2     Running   0          5m49s
reviews-v2-6cb6ccd848-2jr6l       2/2     Running   0          5m49s
reviews-v3-cc56b578-qnwkm         2/2     Running   0          5m50s
```
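Rather than eyeballing the READY column, the check can be scripted. A minimal sketch, assuming the default `kubectl get pods` column order (NAME, READY, STATUS, ...); `all_pods_ready` is a hypothetical helper that fails if any pod is not Running or shows fewer ready containers than total (for example 1/2 while the sidecar is still starting).

```bash
# Hypothetical helper: succeed only if every pod in the namespace is Running
# and its READY column is n/n. Prints each pod that is not ready.
# Note: Completed job pods would also be reported as not ready.
all_pods_ready() {
  kubectl get pods -n "${1:-default}" --no-headers | awk '
    {
      split($2, r, "/")   # READY column, e.g. "2/2"
      if (r[1] != r[2] || $3 != "Running") {
        print "not ready: " $1
        bad = 1
      }
    }
    END { exit bad }'
}
```

Typical use is a polling loop such as `until all_pods_ready; do sleep 10; done`. kubectl's built-in `kubectl wait --for=condition=ready pod --all -n default --timeout=300s` is an alternative that waits on each pod's Ready condition.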

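Note: I hit a problem here that was very hard to track down. The Deployments were all created, but their pods never appeared.

Looking at the Deployment details in the Kubernetes dashboard, I found the error `x509: certificate signed by unknown authority`. After a lot of searching, I discovered that the root cause was not Istio but my proxy. I reach the Internet through a proxy, and the injection webhook address https://istio-sidecar-injector.istio-system.svc:443/inject?timeout=30s, which should be reached over the internal network, was instead being sent through the proxy to the Internet.

Following the linked reference, you can edit the corresponding YAML files under /etc/kubernetes/manifests to update the configuration of these pods. I ended up using the Kubernetes dashboard to edit the no_proxy setting of kube-apiserver, kube-controller-manager, and kube-scheduler in the kube-system namespace, adding `.svc` so that every URL ending in .svc bypasses the proxy and goes over the internal network:

```yaml
- name: no_proxy
  value: >-
    127.0.0.1,
    localhost,
    192.168.0.0/16,
    10.96.0.0/12,
    10.244.0.0/16,
    .svc
```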
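To sanity-check a no_proxy list before editing the manifests, here is a deliberately simplified sketch. `bypasses_proxy` is hypothetical and models only exact host names and `.suffix` entries; it skips CIDR entries entirely, and real clients (Go's net/http, curl, and others) each apply additional rules of their own, so treat the result as a rough guide only.

```bash
# Hypothetical, simplified no_proxy matcher.
# Usage: bypasses_proxy HOST "comma,separated,no_proxy,list"
# Handles exact names and ".suffix" entries; CIDR entries are ignored.
bypasses_proxy() {
  local host="$1" entry
  # Commas become spaces, so surrounding whitespace is dropped as well.
  for entry in $(printf '%s' "$2" | tr ',' ' '); do
    case "$entry" in
      */*) continue ;;                                   # CIDR: not evaluated here
      .*)  case "$host" in *"$entry") return 0 ;; esac ;; # domain suffix
      *)   if [ "$host" = "$entry" ]; then return 0; fi ;; # exact name
    esac
  done
  return 1
}
```

With the list above, `bypasses_proxy istio-sidecar-injector.istio-system.svc "$no_proxy"` succeeds only once `.svc` is present.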

- Confirm that the Bookinfo application is running and reachable from inside the cluster:

```
[root@k8s-master istio-1.7.1]# kubectl exec "$(kubectl get pod -l app=ratings -o jsonpath='{.items[0].metadata.name}')" -c ratings -- curl -s productpage:9080/productpage | grep -o "<title>.*</title>"
<title>Simple Bookstore App</title>
```

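- Determine the ingress IP and port. First, apply the Bookinfo gateway, which creates an Istio Gateway and a VirtualService that routes the Bookinfo URLs to productpage:

```
[root@k8s-master istio-1.7.1]# kubectl apply -f samples/bookinfo/networking/bookinfo-gateway.yaml
gateway.networking.istio.io/bookinfo-gateway created
virtualservice.networking.istio.io/bookinfo created
[root@k8s-master istio-1.7.1]# kubectl get gateway
NAME               AGE
bookinfo-gateway   30s
```

Then determine the INGRESS_HOST and INGRESS_PORT variables as described in the reference. I have no external load balancer, so I access the application through a NodePort: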

```
[root@k8s-master istio-1.7.1]# export INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}')
[root@k8s-master istio-1.7.1]# export SECURE_INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="https")].nodePort}')
[root@k8s-master istio-1.7.1]# export TCP_INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="tcp")].nodePort}')
[root@k8s-master istio-1.7.1]# export INGRESS_HOST=$(kubectl get po -l istio=ingressgateway -n istio-system -o jsonpath='{.items[0].status.hostIP}')
[root@k8s-master istio-1.7.1]# echo $INGRESS_HOST:$INGRESS_PORT
10.21.154.224:31354
[root@k8s-master istio-1.7.1]# export GATEWAY_URL=$INGRESS_HOST:$INGRESS_PORT
[root@k8s-master istio-1.7.1]# curl -s "http://${GATEWAY_URL}/productpage" | grep -o "<title>.*</title>"
<title>Simple Bookstore App</title>
```

At this point the application is deployed and can also be opened in a browser. In my environment the URL is http://10.21.154.224:31354/productpage; your IP and port will certainly differ.
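The export sequence above can be wrapped in one function that fails loudly when a lookup comes back empty (for example if the ingress gateway pod has not been scheduled yet). This is a sketch for the NodePort setup described here; `gateway_url` is a hypothetical name, and the jsonpath expressions are the same ones used above.

```bash
# Hypothetical helper: print HOST:PORT of the Istio ingress gateway (NodePort setup).
gateway_url() {
  local ns=istio-system port host
  # NodePort assigned to the gateway's "http2" port.
  port=$(kubectl -n "$ns" get service istio-ingressgateway \
    -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}') || return 1
  # IP of the node that runs the ingress gateway pod.
  host=$(kubectl -n "$ns" get pod -l istio=ingressgateway \
    -o jsonpath='{.items[0].status.hostIP}') || return 1
  if [ -z "$port" ] || [ -z "$host" ]; then
    echo "could not resolve ingress host/port" >&2
    return 1
  fi
  echo "$host:$port"
}
```

Usage: `export GATEWAY_URL=$(gateway_url) && curl -s "http://$GATEWAY_URL/productpage" | grep -o "<title>.*</title>"`.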

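If everything works, refreshing the page several times shows the reviews section alternating among no stars, black stars, and red stars. Because we have not created any traffic rules yet, requests are spread across the reviews versions using the default round-robin load balancing.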
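To observe this without a browser, a sketch can classify each page load by its star color. The classifier rests on an assumption about Bookinfo's HTML: it expects v2 ratings to contain `color="black"` and v3 ratings `color="red"`, with v1 showing no ratings at all; check the page source of your version before relying on it. `review_version` is a hypothetical name.

```bash
# Hypothetical classifier: read productpage HTML on stdin, print v1/v2/v3.
# ASSUMPTION: v3 stars render with color="red", v2 with color="black",
# and v1 renders no star ratings.
review_version() {
  local html
  html=$(cat)
  case "$html" in
    *'color="red"'*)   echo v3 ;;
    *'color="black"'*) echo v2 ;;
    *)                 echo v1 ;;
  esac
}
```

For example, `for i in $(seq 30); do curl -s "http://$GATEWAY_URL/productpage" | review_version; done | sort | uniq -c` should show the three versions in roughly equal numbers while no routing rules are in place.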

Create the default destination rules. Before we can use traffic rules to control which reviews version is reached, we need a set of destination rules that define a subset for each version:

```
[root@k8s-master istio-1.7.1]# kubectl apply -f samples/bookinfo/networking/destination-rule-all.yaml
destinationrule.networking.istio.io/productpage created
destinationrule.networking.istio.io/reviews created
destinationrule.networking.istio.io/ratings created
destinationrule.networking.istio.io/details created
```
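For reference, the reviews entry in destination-rule-all.yaml defines one subset per `version` pod label. The sketch below emits that same shape for any service and list of versions; `destination_rule` is a hypothetical helper, and it assumes the pods carry `version: vN` labels, as the Bookinfo deployments do.

```bash
# Hypothetical generator: print a DestinationRule with one subset per version.
# Usage: destination_rule SERVICE VERSION...
# Assumes pods are labeled version=<VERSION>.
destination_rule() {
  local svc="$1" v
  shift
  printf 'apiVersion: networking.istio.io/v1alpha3\nkind: DestinationRule\n'
  printf 'metadata:\n  name: %s\nspec:\n  host: %s\n  subsets:\n' "$svc" "$svc"
  for v in "$@"; do
    printf '  - name: %s\n    labels:\n      version: %s\n' "$v" "$v"
  done
}
```

Usage: `destination_rule reviews v1 v2 v3 | kubectl apply -f -`.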

- Further exploration

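Try applying different policies and watch the effect. For example, with an 80/20 split no traffic reaches v3 (red stars): 80% of the traffic goes to v1 (no stars) and 20% to v2 (black stars). The v3 service keeps running but receives no traffic. This is how a canary release works: you can then take v3 offline and upgrade it to v4, or roll back to v2 or v1.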

```
[root@k8s-master istio-1.7.1]# cat samples/bookinfo/networking/virtual-service-reviews-80-20.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
  - reviews
  http:
  - route:
    - destination:
        host: reviews
        subset: v1
      weight: 80
    - destination:
        host: reviews
        subset: v2
      weight: 20
[root@k8s-master istio-1.7.1]# kubectl apply -f samples/bookinfo/networking/virtual-service-reviews-80-20.yaml
virtualservice.networking.istio.io/reviews created
```
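A canary rollout usually shifts the weight in steps. The sketch below renders the reviews VirtualService for a given canary percentage, following the structure of virtual-service-reviews-80-20.yaml; the stable weight is derived as 100 minus the canary weight, so the two always total 100, as in the sample files. `canary_vs` is a hypothetical helper.

```bash
# Hypothetical generator: reviews VirtualService splitting traffic between a
# stable and a canary subset. Usage: canary_vs STABLE CANARY CANARY_WEIGHT
canary_vs() {
  local stable="$1" canary="$2" w="$3"
  case "$w" in ''|*[!0-9]*) echo "weight must be an integer" >&2; return 1 ;; esac
  if [ "$w" -gt 100 ]; then echo "weight must be between 0 and 100" >&2; return 1; fi
  # Stable weight is 100 - canary weight, so the route weights total 100.
  cat <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
  - reviews
  http:
  - route:
    - destination:
        host: reviews
        subset: $stable
      weight: $((100 - $w))
    - destination:
        host: reviews
        subset: $canary
      weight: $w
EOF
}
```

`canary_vs v1 v2 20 | kubectl apply -f -` reproduces the 80/20 rule above; `canary_vs v1 v2 50` moves to an even split.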

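If everything works, refreshing the page repeatedly shows reviews with black stars about 20% of the time. The more times you refresh, the closer the observed ratio gets to 20%.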
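The ratio can also be measured from the command line. A minimal sketch, assuming v2 ratings render with `color="black"` in the page HTML (verify against your page source); `black_star_share` is a hypothetical helper that requests the page N times and prints the integer percentage of responses containing black stars.

```bash
# Hypothetical helper: estimate the share of productpage loads served by
# reviews v2. ASSUMPTION: v2 star ratings contain color="black" in the HTML.
# Usage: black_star_share URL [REQUESTS]
black_star_share() {
  local url="$1" n="${2:-100}" hits=0 i=0
  [ "$n" -gt 0 ] || return 1
  while [ "$i" -lt "$n" ]; do
    if curl -s "$url" | grep -q 'color="black"'; then
      hits=$((hits + 1))
    fi
    i=$((i + 1))
  done
  # Integer percentage, rounded down.
  echo "$((hits * 100 / n))%"
}
```

With the 80/20 rule applied, `black_star_share "http://$GATEWAY_URL/productpage" 100` should print a value near 20%.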

The YAML files in the networking directory contain many more rules, all worth trying:

```
[root@k8s-master istio-1.7.1]# ls samples/bookinfo/networking
bookinfo-gateway.yaml  egress-rule-google-apis.yaml  virtual-service-ratings-mysql-vm.yaml  virtual-service-reviews-80-20.yaml  virtual-service-reviews-v3.yaml
certmanager-gateway.yaml  fault-injection-details-v1.yaml  virtual-service-ratings-mysql.yaml  virtual-service-reviews-90-10.yaml
destination-rule-all-mtls.yaml  virtual-service-all-v1.yaml  virtual-service-ratings-test-abort.yaml  virtual-service-reviews-jason-v2-v3.yaml
destination-rule-all.yaml  virtual-service-details-v2.yaml  virtual-service-ratings-test-delay.yaml  virtual-service-reviews-test-v2.yaml
destination-rule-reviews.yaml  virtual-service-ratings-db.yaml  virtual-service-reviews-50-v3.yaml  virtual-service-reviews-v2-v3.yaml
```
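When trying these files one after another, it helps to reset routing between experiments. A sketch, assuming it is run from the istio-1.7.1 directory; `try_rule` and `reset_routing` are hypothetical helpers, and the reset uses virtual-service-all-v1.yaml from the listing above, which routes every Bookinfo service to its v1 subset.

```bash
# Directory of the sample rules, relative to the istio-1.7.1 directory.
RULES_DIR=samples/bookinfo/networking

# Hypothetical helper: apply a sample rule by its file name without ".yaml".
try_rule() {
  local f="$RULES_DIR/$1.yaml"
  [ -f "$f" ] || { echo "no such rule: $f" >&2; return 1; }
  kubectl apply -f "$f"
}

# Hypothetical helper: send all traffic back to v1 before the next experiment.
reset_routing() {
  kubectl apply -f "$RULES_DIR/virtual-service-all-v1.yaml"
}
```

For example: `try_rule virtual-service-reviews-90-10`, observe the result, then `reset_routing`.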

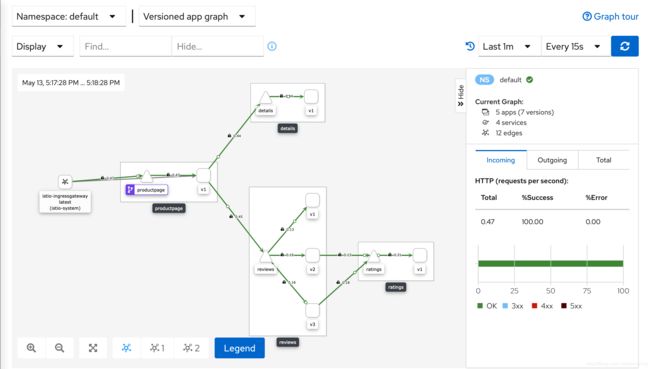

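Viewing the Dashboards

For details, see the reference; I made one small improvement on top of it.

Istio integrates with several different telemetry applications. They help you understand the structure of the service mesh, visualize its topology, and analyze its health.

Use the following steps to deploy the Kiali dashboard together with Prometheus, Grafana, and Jaeger.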

- Install Kiali (together with the other addons) and wait until it is deployed:

```
[root@k8s-master istio-1.7.1]# kubectl apply -f samples/addons
serviceaccount/grafana created
configmap/grafana created
service/grafana created
deployment.apps/grafana created
configmap/istio-grafana-dashboards created
configmap/istio-services-grafana-dashboards created
deployment.apps/jaeger created
service/tracing created
service/zipkin created
customresourcedefinition.apiextensions.k8s.io/monitoringdashboards.monitoring.kiali.io created
serviceaccount/kiali created
configmap/kiali created
clusterrole.rbac.authorization.k8s.io/kiali-viewer created
clusterrole.rbac.authorization.k8s.io/kiali created
clusterrolebinding.rbac.authorization.k8s.io/kiali created
service/kiali created
deployment.apps/kiali created
serviceaccount/prometheus created
configmap/prometheus created
clusterrole.rbac.authorization.k8s.io/prometheus created
clusterrolebinding.rbac.authorization.k8s.io/prometheus created
service/prometheus created
deployment.apps/prometheus created
unable to recognize "samples/addons/kiali.yaml": no matches for kind "MonitoringDashboard" in version "monitoring.kiali.io/v1alpha1"
... (the same message repeats many more times)
[root@k8s-master istio-1.7.1]# while ! kubectl wait --for=condition=available --timeout=600s deployment/kiali -n istio-system; do sleep 1; done
deployment.apps/kiali condition met
```

The `unable to recognize` errors are a timing issue: the MonitoringDashboard CRD is created by this same command and is not yet registered when the resources that use it are submitted. Running `kubectl apply -f samples/addons` a second time usually clears them.

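Since re-running the apply is the usual cure for those `MonitoringDashboard` errors, the step can be wrapped in a retry loop. A sketch with a capped number of attempts; `apply_with_retry` and the `RETRY_DELAY` variable are hypothetical names.

```bash
# Hypothetical helper: retry "kubectl apply -f PATH" up to TRIES times.
# RETRY_DELAY (seconds, default 5) controls the pause between attempts.
# Usage: apply_with_retry PATH [TRIES]
apply_with_retry() {
  local path="$1" tries="${2:-5}" delay="${RETRY_DELAY:-5}" i=1
  while [ "$i" -le "$tries" ]; do
    if kubectl apply -f "$path"; then
      return 0
    fi
    echo "apply attempt $i/$tries failed; retrying in ${delay}s" >&2
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}
```

Usage: `apply_with_retry samples/addons 3`, followed by the `kubectl wait` loop shown above.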
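- Open the dashboard:

```
[root@k8s-master istio-1.7.1]# istioctl dashboard kiali
Error: could not build port forwarder for Kiali: pod is not running. Status=Pending
[root@k8s-master istio-1.7.1]# istioctl dashboard kiali
http://localhost:20001/kiali
```

The first time I ran the command, the kiali pod had not finished deploying (it was still Pending). You can watch its status in the Kubernetes dashboard; once it is running, just run the command again.

Note: the service is only exposed on localhost, so it can be reached only from the k8s-master server itself, which is inconvenient. The command also launches a local Firefox to open the URL, but graphical access to a Linux server is usually awkward. The next step therefore modifies the kiali service so that it can be reached from any browser.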
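If you only need occasional access, an alternative to changing the service is to make a port-forward listen on all interfaces of the master. This sketch uses kubectl's own `port-forward --address` flag (`kiali_forward` is a hypothetical wrapper). It runs in the foreground until you press Ctrl+C, and it exposes Kiali to anyone who can reach the master, so use it only on a trusted network.

```bash
# Hypothetical wrapper: forward LOCAL_PORT on every interface of this host
# to port 20001 of the kiali service in istio-system.
# Usage: kiali_forward [LOCAL_PORT]
kiali_forward() {
  kubectl -n istio-system port-forward --address 0.0.0.0 svc/kiali "${1:-20001}:20001"
}
```

While it runs, Kiali should be reachable at `http://<master-ip>:20001/kiali`.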

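- Modify the kiali service

- Export the kiali service's YAML with kubectl and edit it,

- or find the service in the Kubernetes dashboard and choose Edit to change it directly.

```
kubectl get service kiali -n istio-system -o yaml > kiali-service.yaml
vim kiali-service.yaml
```

In the file, add a nodePort to the http port and change the service type from ClusterIP to NodePort, then apply the edited file (for example with `kubectl apply -f kiali-service.yaml`):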

```yaml
spec:
  ports:
  - name: http
    nodePort: 32123          # added
    protocol: TCP
    port: 20001
    targetPort: 20001
  - name: http-metrics
    protocol: TCP
    port: 9090
    targetPort: 9090
  selector:
    app.kubernetes.io/instance: kiali-server
    app.kubernetes.io/name: kiali
  clusterIP: 10.103.92.55
  type: NodePort             # changed from ClusterIP
  sessionAffinity: None
status:
  loadBalancer: {}
```
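The same change can be made without exporting and editing the file, using a strategic merge patch (kubectl patch's default type). Service ports are merged on their `port` field, so the patch below only adds a nodePort to the existing 20001 entry. `kiali_nodeport` is a hypothetical wrapper, and 32123 is simply the port chosen in this walkthrough; it must fall within the cluster's NodePort range (30000-32767 by default).

```bash
# Hypothetical wrapper: switch the kiali service to NodePort and pin the
# nodePort of its 20001 port. Usage: kiali_nodeport [NODE_PORT]
kiali_nodeport() {
  local np="${1:-32123}"
  case "$np" in ''|*[!0-9]*) echo "nodePort must be a number" >&2; return 1 ;; esac
  # 30000-32767 is the default --service-node-port-range.
  if [ "$np" -lt 30000 ] || [ "$np" -gt 32767 ]; then
    echo "nodePort $np is outside the default 30000-32767 range" >&2
    return 1
  fi
  # Strategic merge: the ports list is matched on "port", other fields are kept.
  kubectl -n istio-system patch service kiali \
    -p "{\"spec\":{\"type\":\"NodePort\",\"ports\":[{\"port\":20001,\"nodePort\":$np}]}}"
}
```

Afterwards Kiali should be reachable at `http://<node-ip>:32123/kiali`. Because the service type becomes NodePort, Kubernetes also allocates a random nodePort for the http-metrics port.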