"Hand Keypoint Detection in Single Images using Multiview Bootstrapping" and Model Inference

Paper: "Hand Keypoint Detection in Single Images using Multiview Bootstrapping" (2017)

Link: 1704.07809.pdf (arxiv.org)

Code: Hand Keypoint Detection using Deep Learning and OpenCV | LearnOpenCV

A quick read of the paper

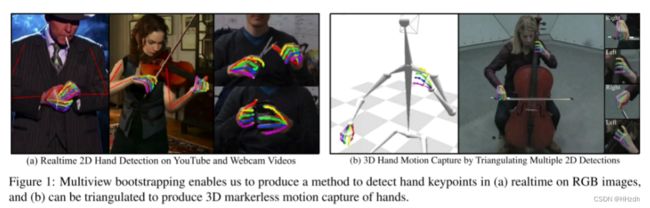

1. Introduction

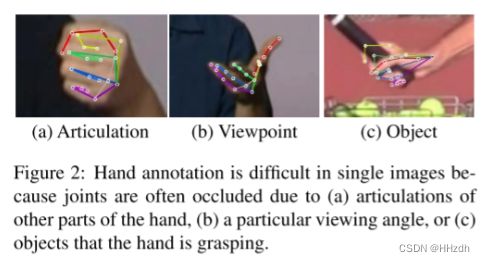

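The paper presents an approach that boosts the performance of a given keypoint detector by using a multi-camera setup. The authors call this approach multiview bootstrapping. It is based on the following observation: even when a particular image of the hand is heavily occluded, there are always other views in which the hand is not occluded. In a single view, occlusion also makes manual annotation very difficult, as the figure illustrates.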

2. Multiview Bootstrapped Training

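Multiview bootstrapping.

(a) A multiview system provides views of the hand in which keypoint detection is easy. These views are used to triangulate (b) the 3D positions of the keypoints.

Difficult views with (c) failed detections can then be (d) annotated using the reprojected 3D keypoints. These annotations are used to retrain (e) an improved detector that now works on the difficult views.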
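The loop in (a) to (e) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the paper's implementation.

- The names `bootstrap_labels` and `bootstrap` are hypothetical.
- The `detector`, `triangulate`, `project` and `train` callables are also hypothetical and are passed in by the caller.
- The defaults `conf_thresh=0.2` and `min_views=3` are arbitrary placeholders.
- A real pipeline would also need to reject outlier views (for example with RANSAC) and to filter out low-quality frames. Both steps are omitted here.

```python
from typing import Callable, List, Sequence, Tuple

import numpy as np

# One detection per view: (points of shape (K, 2), confidences of shape (K,))
Detection = Tuple[np.ndarray, np.ndarray]


def bootstrap_labels(
    detections: Sequence[Sequence[Detection]],
    triangulate: Callable[[List[int], np.ndarray], np.ndarray],  # hypothetical
    project: Callable[[int, np.ndarray], np.ndarray],  # hypothetical
    conf_thresh: float = 0.2,  # placeholder value
    min_views: int = 3,  # placeholder value
) -> List[Tuple[int, int, np.ndarray]]:
    """Steps (a)-(d): triangulate confident detections, reproject into all views.

    detections[f][v] is the detector output for frame f seen from camera v.
    Returns (frame, view, labels) tuples where labels has shape (K, 2).
    A frame is skipped if any keypoint is confidently seen in < min_views views.
    """
    labels = []
    for f, views in enumerate(detections):
        num_kp = len(views[0][1])
        points_3d = []
        for k in range(num_kp):
            # (a) keep only the views where keypoint k was detected confidently
            good = [v for v, (_, conf) in enumerate(views) if conf[k] > conf_thresh]
            if len(good) < min_views:
                break
            pts = np.array([views[v][0][k] for v in good], dtype=float)
            # (b) lift keypoint k to 3D from the confident views only
            points_3d.append(triangulate(good, pts))
        else:
            # (c)-(d) no break: reproject into every view, including failed ones
            for v in range(len(views)):
                labels.append((f, v, np.array([project(v, X) for X in points_3d])))
    return labels


def bootstrap(detector, train, images, triangulate, project, n_iters=3):
    """Step (e): retrain on the reprojected labels and repeat.

    images[f][v] is the image of frame f from camera v; detector(image) -> Detection;
    train(detector, samples) -> new detector, where samples are (image, labels) pairs.
    """
    for _ in range(n_iters):
        detections = [[detector(img) for img in frame] for frame in images]
        labels = bootstrap_labels(detections, triangulate, project)
        samples = [(images[f][v], lab) for f, v, lab in labels]
        detector = train(detector, samples)
    return detector
```

The key design point is that the views that feed the 3D estimate are only the confident ones. Labels, however, are produced for every view. That is how the hard views in (c) receive training data.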

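The method starts from a small set of labeled hand images. A neural network (Convolutional Pose Machines, similar to the body pose model) is used to get a rough estimate of the hand keypoints. The paper uses a large multiview capture system that photographs the hand from many different viewpoints. The system contains 31 HD cameras.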

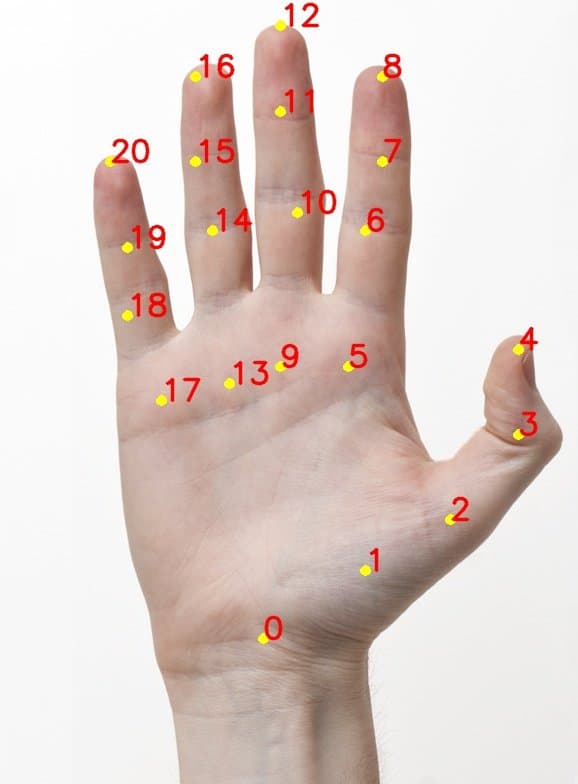

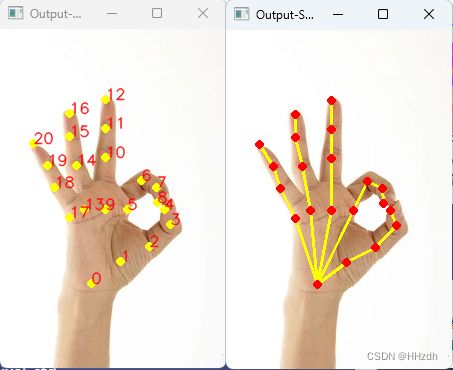

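These images are passed through the detector to obtain many rough keypoint predictions. Once the keypoints of the same hand have been detected in different views, keypoint triangulation gives their 3D positions. These 3D positions are then reprojected into each 2D view. This yields robust keypoint estimates, which matters most for images in which the keypoints are hard to predict. After a few iterations of this process, the authors obtain a detector with greatly improved performance.

The released model produces 22 outputs: 21 are hand keypoints, and the 22nd represents the background.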
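The triangulation and reprojection steps can be illustrated with standard linear (DLT) triangulation. This sketch makes several assumptions:

- The cameras are calibrated, with known 3x4 projection matrices.
- It is not necessarily the exact solver used in the paper.
- It has no outlier handling.
- `triangulate_dlt` and `reproject` are hypothetical helper names.

```python
import numpy as np


def triangulate_dlt(proj_mats, points_2d):
    """Linear (DLT) triangulation of one 3D point seen in n >= 2 calibrated views.

    proj_mats: sequence of 3x4 projection matrices P_i
    points_2d: (n, 2) array with the pixel coordinates (x_i, y_i) in each view
    """
    rows = []
    for P, (x, y) in zip(proj_mats, points_2d):
        # From x = (P[0] @ X) / (P[2] @ X) and y = (P[1] @ X) / (P[2] @ X),
        # each view contributes two linear equations in the homogeneous point X
        rows.append(x * P[2] - P[0])
        rows.append(y * P[2] - P[1])
    A = np.stack(rows)
    # Least-squares solution of A @ X = 0 with ||X|| = 1: the right singular
    # vector that belongs to the smallest singular value
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


def reproject(P, X):
    """Project a 3D point X (shape (3,)) into pixel coordinates with a 3x4 matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]
```

In the bootstrapping loop, the reprojected 2D points in the views where the detector failed become new training labels. For exactly two views, OpenCV's `cv2.triangulatePoints` offers linear two-view triangulation.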

Code test

1. Python inference

```python
import time

import cv2
import numpy as np

# Model files, number of output channels, and skeleton connections
protoFile = "./model/pose_deploy.prototxt"
weightsFile = "./model/pose_iter_102000.caffemodel"
nPoints = 22  # 21 hand keypoints + 1 background channel
POSE_PAIRS = [[0, 1], [1, 2], [2, 3], [3, 4], [0, 5], [5, 6], [6, 7], [7, 8], [0, 9], [9, 10], [10, 11], [11, 12],
              [0, 13], [13, 14], [14, 15], [15, 16], [0, 17], [17, 18], [18, 19], [19, 20]]

# Read the image and load the model
frame = cv2.imread("./image/1402.jpg")
if frame is None:
    raise FileNotFoundError("could not read ./image/1402.jpg")
net = cv2.dnn.readNetFromCaffe(protoFile, weightsFile)

# Prepare the input image
frameCopy = np.copy(frame)
frameWidth = frame.shape[1]
frameHeight = frame.shape[0]
aspect_ratio = frameWidth / frameHeight
threshold = 0.1

# Run inference and measure the time it takes
t = time.time()
# Input dimensions for the network: fixed height; the width keeps the aspect
# ratio and is rounded down to a multiple of 8 (the network downsamples by 8)
inHeight = 368
inWidth = int(aspect_ratio * inHeight) // 8 * 8
inpBlob = cv2.dnn.blobFromImage(frame, 1.0 / 255, (inWidth, inHeight), (0, 0, 0), swapRB=False, crop=False)
net.setInput(inpBlob)
output = net.forward()
print("time taken by network : {:.3f}".format(time.time() - t))

# Detected keypoints (None where the confidence is below the threshold)
points = []

# Draw each keypoint and label it with its index.
# The last output channel is the background map, so only nPoints - 1 channels are decoded.
for i in range(nPoints - 1):
    # Confidence map of the i-th hand keypoint, upscaled to the image size
    probMap = output[0, i, :, :]
    probMap = cv2.resize(probMap, (frameWidth, frameHeight))

    # Find the global maximum of the probMap
    minVal, prob, minLoc, point = cv2.minMaxLoc(probMap)

    if prob > threshold:
        cv2.circle(frameCopy, (int(point[0]), int(point[1])), 4, (0, 255, 255), thickness=-1,
                   lineType=cv2.FILLED)
        cv2.putText(frameCopy, "{}".format(i), (int(point[0]), int(point[1])), cv2.FONT_HERSHEY_SIMPLEX, 0.5,
                    (0, 0, 255), 1, lineType=cv2.LINE_AA)
        # Keep the point only if its confidence exceeds the threshold
        points.append((int(point[0]), int(point[1])))
    else:
        points.append(None)

# Draw the skeleton by connecting the keypoint pairs listed in POSE_PAIRS
for partA, partB in POSE_PAIRS:
    if points[partA] is not None and points[partB] is not None:
        cv2.line(frame, points[partA], points[partB], (0, 255, 255), 2)
        cv2.circle(frame, points[partA], 4, (0, 0, 255), thickness=-1, lineType=cv2.FILLED)
        cv2.circle(frame, points[partB], 4, (0, 0, 255), thickness=-1, lineType=cv2.FILLED)

# Show the inference results
cv2.imshow('Output-Keypoints', frameCopy)
cv2.waitKey(0)
cv2.imshow('Output-Skeleton', frame)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

2. C++ inference

```cpp
#include <opencv2/dnn.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/highgui.hpp>
#include <iostream>

using namespace std;
using namespace cv;
using namespace cv::dnn;

// Skeleton connections: e.g. {0,1} is the line between keypoint 0 and keypoint 1 (part of the thumb)
const int POSE_PAIRS[20][2] =
{
    {0,1}, {1,2}, {2,3}, {3,4},         // thumb
    {0,5}, {5,6}, {6,7}, {7,8},         // index
    {0,9}, {9,10}, {10,11}, {11,12},    // middle
    {0,13}, {13,14}, {14,15}, {15,16},  // ring
    {0,17}, {17,18}, {18,19}, {19,20}   // small
};

// 21 hand keypoints + 1 background channel
int nPoints = 22;

int main()
{
    // Model file locations
    string protoFile = "../model/pose_deploy.prototxt";
    string weightsFile = "../model/pose_iter_102000.caffemodel";

    // Read the image
    string imageFile = "../image/1402.jpg";
    Mat frame = imread(imageFile);
    if (frame.empty())
    {
        cerr << "Could not read image: " << imageFile << endl;
        return 1;
    }

    // Copy of the image used for drawing the keypoints
    Mat frameCopy = frame.clone();

    // Image width and height
    int frameWidth = frame.cols;
    int frameHeight = frame.rows;

    // Confidence threshold (the Python script uses 0.1)
    float thresh = 0.01;

    // Aspect ratio of the original image
    float aspect_ratio = frameWidth / (float)frameHeight;
    int inHeight = 368;
    // Input width: keep the aspect ratio, rounded down to a multiple of 8
    int inWidth = (int(aspect_ratio * inHeight) / 8) * 8;
    cout << "inWidth = " << inWidth << " ; inHeight = " << inHeight << endl;

    // Note: unlike the Python script, this timer also covers model loading and post-processing
    double t = (double)cv::getTickCount();

    // Load the Caffe model and run a forward pass
    cv::dnn::Net net = readNetFromCaffe(protoFile, weightsFile);
    Mat inpBlob = blobFromImage(frame, 1.0 / 255, Size(inWidth, inHeight), Scalar(0, 0, 0), false, false);
    net.setInput(inpBlob);
    Mat output = net.forward();

    int H = output.size[2];
    int W = output.size[3];

    // Position of each hand keypoint; (-1, -1) marks a keypoint below the threshold
    vector<Point> points(nPoints, Point(-1, -1));
    // The last channel is the background map, so only nPoints - 1 channels are decoded
    for (int n = 0; n < nPoints - 1; n++)
    {
        // Probability map of the n-th keypoint
        Mat probMap(H, W, CV_32F, output.ptr(0, n));
        // Upscale the probability map to the image size
        resize(probMap, probMap, Size(frameWidth, frameHeight));

        Point maxLoc;
        double prob;
        // Find the maximum probability in the map and its location
        minMaxLoc(probMap, 0, &prob, 0, &maxLoc);
        if (prob > thresh)
        {
            // Draw the keypoint and its index
            circle(frameCopy, maxLoc, 4, Scalar(0, 255, 255), -1);
            cv::putText(frameCopy, cv::format("%d", n), maxLoc, cv::FONT_HERSHEY_COMPLEX, 0.4, cv::Scalar(0, 0, 255), 1);
            // Store the keypoint coordinates
            points[n] = maxLoc;
        }
    }

    // Number of skeleton lines to draw
    int nPairs = sizeof(POSE_PAIRS) / sizeof(POSE_PAIRS[0]);

    // Connect the keypoints to draw the skeleton
    for (int n = 0; n < nPairs; n++)
    {
        // Look up the two connected hand parts
        Point partA = points[POSE_PAIRS[n][0]];
        Point partB = points[POSE_PAIRS[n][1]];
        // Skip the pair if either keypoint was not detected
        if (partA.x < 0 || partA.y < 0 || partB.x < 0 || partB.y < 0)
            continue;
        // Draw the bone line and its end points
        line(frame, partA, partB, Scalar(0, 255, 255), 2);
        circle(frame, partA, 4, Scalar(0, 0, 255), -1);
        circle(frame, partB, 4, Scalar(0, 0, 255), -1);
    }

    // Elapsed time in seconds
    t = ((double)cv::getTickCount() - t) / cv::getTickFrequency();
    cout << "Time Taken = " << t << endl;

    imshow("Output-Keypoints", frameCopy);
    imshow("Output-Skeleton", frame);
    // The ../image/result/ directory must already exist
    imwrite("../image/result/Output-Skeleton.jpg", frame);
    imwrite("../image/result/Output-Keypoints.jpg", frameCopy);
    waitKey();
    return 0;
}
```

Model files: available in my uploaded resources ("OpenPose hand keypoint estimation pretrained model").

They can also be downloaded from: https://github.com/CMU-Perceptual-Computing-Lab/openpose
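Both scripts decode the 22-channel output the same way. For each keypoint they take the arg-max of its confidence map and keep it if it exceeds a threshold.

The helper below is a hypothetical, NumPy-only variant (`heatmaps_to_keypoints` is not part of OpenCV or OpenPose). It maps the low-resolution peak back to image coordinates by scaling. The scripts instead resize every heatmap to full resolution. Scaling is cheaper, but the result is quantized to one heatmap cell. The helper assumes the `(1, C, H, W)` layout returned by `net.forward()`, with the background in the last channel.

```python
import numpy as np


def heatmaps_to_keypoints(output, frame_w, frame_h, threshold=0.1, n_hand=21):
    """Decode OpenPose-style hand heatmaps into pixel coordinates.

    output: array of shape (1, C, H, W); for this model C = 22, where channels
            0..20 are hand keypoints and channel 21 is the background.
    Returns n_hand entries, each an (x, y) pixel tuple or None.
    """
    _, _, H, W = output.shape
    points = []
    for i in range(n_hand):  # the background channel is never decoded
        prob_map = output[0, i]
        row, col = np.unravel_index(np.argmax(prob_map), prob_map.shape)
        if prob_map[row, col] > threshold:
            # Map the centre of the winning heatmap cell back to image pixels
            x = (col + 0.5) * frame_w / W
            y = (row + 0.5) * frame_h / H
            points.append((int(x), int(y)))
        else:
            points.append(None)
    return points
```

With the Python script's variables, call it as `points = heatmaps_to_keypoints(output, frameWidth, frameHeight, threshold)`. The result can then be drawn with the same `POSE_PAIRS` loop.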