[OpenCV-Python] 37. Face and Object Tracking with OpenCV: the dlib Library

Contents

- Preface

- 1. Detecting and tracking a face with dlib

- 2. Selecting and tracking a target object with dlib

- 3. OpenCV-Python resource downloads

- Summary

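Preface

dlib is a practical machine learning library. It is implemented in C++ and ships with a Python interface, so it fits a wide range of scenarios. This example only gives a brief demonstration of how to use dlib's face tracking and object tracking from Python.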

1. Detecting and tracking a face with dlib

The first program detects a face, then tracks it, and saves the resulting video.

import cv2
import dlib


def main():
    # Open the default camera
    capture = cv2.VideoCapture(0)
    # dlib's frontal face detector and correlation tracker
    detector = dlib.get_frontal_face_detector()
    tracker = dlib.correlation_tracker()
    tracking_state = False

    # Frame size and frame rate are needed to configure the video writer
    frame_width = int(capture.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_height = int(capture.get(cv2.CAP_PROP_FRAME_HEIGHT))
    # Some cameras report an FPS of 0; 30 is an assumed fallback
    frame_fps = int(capture.get(cv2.CAP_PROP_FPS)) or 30
    fourcc = cv2.VideoWriter_fourcc(*"XVID")
    output = cv2.VideoWriter("record.avi", fourcc, frame_fps, (frame_width, frame_height), True)

    while True:
        ret, frame = capture.read()
        # Stop when the camera delivers no frame
        if not ret:
            break

        if not tracking_state:
            # Detect faces on the grayscale image (second argument: upsample once)
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            dets = detector(gray, 1)
            if len(dets) > 0:
                # Start tracking the first detected face
                tracker.start_track(frame, dets[0])
                tracking_state = True

        if tracking_state:
            # Update the tracker and draw the current position
            tracker.update(frame)
            position = tracker.get_position()
            cv2.rectangle(frame, (int(position.left()), int(position.top())), (int(position.right()), int(position.bottom())), (0, 255, 0), 3)

        # Press 'q' to quit
        key = cv2.waitKey(1) & 0xFF
        if key == ord('q'):
            break

        cv2.imshow("face tracking", frame)
        output.write(frame)

    # Release the camera and finalize the video file
    capture.release()
    output.release()
    cv2.destroyAllWindows()


if __name__ == '__main__':
    main()

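The second program also detects a face and then tracks it. In addition, it overlays status hints on the video and lets you reset tracking by pressing '1'; the video is saved as before.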

import cv2
import dlib


def show_info(frame, tracking_state):
    # Draw the key hint and the current tracking status on the frame
    pos1 = (20, 40)
    pos2 = (20, 80)
    cv2.putText(frame, "'1' : reset ", pos1, cv2.FONT_HERSHEY_COMPLEX, 0.5, (255, 255, 255))
    if tracking_state:
        cv2.putText(frame, "tracking now ...", pos2, cv2.FONT_HERSHEY_COMPLEX, 0.5, (255, 0, 0))
    else:
        cv2.putText(frame, "no tracking ...", pos2, cv2.FONT_HERSHEY_COMPLEX, 0.5, (0, 255, 0))


def main():
    # Open the default camera
    capture = cv2.VideoCapture(0)
    # dlib's frontal face detector and correlation tracker
    detector = dlib.get_frontal_face_detector()
    tracker = dlib.correlation_tracker()
    tracking_state = False

    # Frame size and frame rate are needed to configure the video writer
    frame_width = int(capture.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_height = int(capture.get(cv2.CAP_PROP_FRAME_HEIGHT))
    # Some cameras report an FPS of 0; 30 is an assumed fallback
    frame_fps = int(capture.get(cv2.CAP_PROP_FPS)) or 30
    fourcc = cv2.VideoWriter_fourcc(*"XVID")
    output = cv2.VideoWriter("record.avi", fourcc, frame_fps, (frame_width, frame_height), True)

    while True:
        ret, frame = capture.read()
        # Stop when the camera delivers no frame
        if not ret:
            break

        if not tracking_state:
            # Detect faces on the grayscale image (second argument: upsample once)
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            dets = detector(gray, 1)
            if len(dets) > 0:
                # Start tracking the first detected face
                tracker.start_track(frame, dets[0])
                tracking_state = True

        if tracking_state:
            # Update the tracker and draw the current position
            tracker.update(frame)
            position = tracker.get_position()
            cv2.rectangle(frame, (int(position.left()), int(position.top())), (int(position.right()), int(position.bottom())), (0, 255, 0), 3)

        # Draw the overlay last so the text is not fed to the detector or tracker
        show_info(frame, tracking_state)

        key = cv2.waitKey(1) & 0xFF
        # Press 'q' to quit
        if key == ord('q'):
            break
        # Press '1' to drop the current target and detect again
        if key == ord('1'):
            tracking_state = False

        cv2.imshow("face tracking", frame)
        output.write(frame)

    # Release the camera and finalize the video file
    capture.release()
    output.release()
    cv2.destroyAllWindows()


if __name__ == '__main__':
    main()

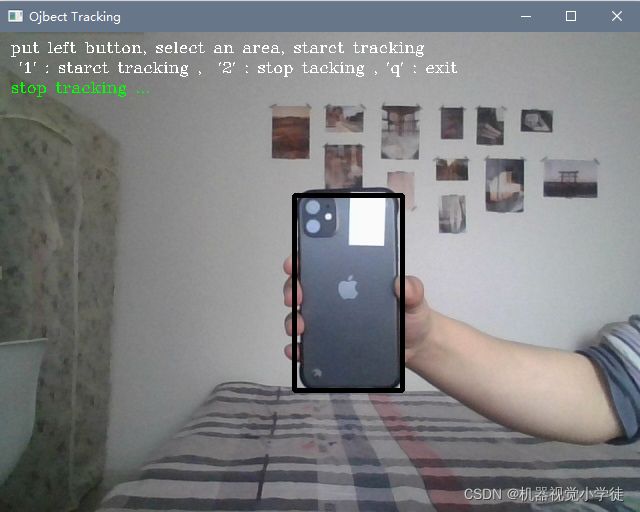
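The reset on key '1' is manual. dlib's `correlation_tracker.update()` also returns a confidence score (the peak to side-lobe ratio), which could be used to drop a lost target automatically so that face detection runs again. The sketch below is one possible way to do that. The class name `LostTargetMonitor`, the threshold of 7.0, and the patience of a few frames are illustrative assumptions, not values taken from dlib or from this article, and would need tuning for a real scene.

```python
class LostTargetMonitor:
    """Decide when a tracker has probably lost its target.

    Hypothetical helper: feed it the float returned by
    dlib.correlation_tracker.update() on every frame.
    """

    def __init__(self, threshold: float = 7.0, patience: int = 5):
        self.threshold = threshold  # assumed value, tune per scene
        self.patience = patience    # consecutive weak frames tolerated
        self._weak_frames = 0

    def is_lost(self, score: float) -> bool:
        """Record one score; return True once the target looks lost."""
        if score < self.threshold:
            self._weak_frames += 1
        else:
            # A single confident frame clears the streak
            self._weak_frames = 0
        return self._weak_frames >= self.patience

    def reset(self) -> None:
        """Call after tracking has been restarted on a new detection."""
        self._weak_frames = 0


if __name__ == "__main__":
    monitor = LostTargetMonitor(threshold=7.0, patience=3)
    # Made-up scores standing in for the values update() would return
    for score in [12.1, 9.4, 6.0, 5.2, 8.3, 4.0, 3.9, 3.5]:
        print(score, monitor.is_lost(score))
```

In the capture loop, the value returned by `update(frame)` would be passed to `is_lost()`; when it returns `True`, setting `tracking_state = False` has the same effect as pressing '1'.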

2. Selecting and tracking a target object with dlib

import cv2
import dlib


def show_info(frame, tracking_state):
    # Draw usage hints and the current tracking status on the frame
    pos1 = (10, 20)
    pos2 = (10, 40)
    pos3 = (10, 60)
    info1 = "hold left button and drag to select an area"
    info2 = " '1' : start tracking , '2' : stop tracking , 'q' : exit "
    cv2.putText(frame, info1, pos1, cv2.FONT_HERSHEY_COMPLEX, 0.5, (255, 255, 255))
    cv2.putText(frame, info2, pos2, cv2.FONT_HERSHEY_COMPLEX, 0.5, (255, 255, 255))
    if tracking_state:
        cv2.putText(frame, "tracking now ...", pos3, cv2.FONT_HERSHEY_COMPLEX, 0.5, (255, 0, 0))
    else:
        cv2.putText(frame, "stop tracking ...", pos3, cv2.FONT_HERSHEY_COMPLEX, 0.5, (0, 255, 0))


# Corner points of the selection, filled in by the mouse callback
points = []


def mouse_event_handler(event, x, y, flags, params):
    global points  # the selection is shared with the main loop
    if event == cv2.EVENT_LBUTTONDOWN:
        # Button pressed: start a new selection
        points = [(x, y)]
    elif event == cv2.EVENT_LBUTTONUP:
        # Button released: store the opposite corner
        points.append((x, y))


capture = cv2.VideoCapture(0)
nameWindow = "Object Tracking"
cv2.namedWindow(nameWindow)
cv2.setMouseCallback(nameWindow, mouse_event_handler)
tracker = dlib.correlation_tracker()
tracking_state = False
dlib_rect = None

while True:
    ret, frame = capture.read()
    # Stop when the camera delivers no frame
    if not ret:
        break
    # Draw on a copy so text and boxes never leak into the tracker's input
    display = frame.copy()
    show_info(display, tracking_state)

    if len(points) == 2:
        # Order the corners so the rectangle is valid for any drag direction
        left, right = sorted((points[0][0], points[1][0]))
        top, bottom = sorted((points[0][1], points[1][1]))
        cv2.rectangle(display, (left, top), (right, bottom), (0, 0, 0), 3)
        dlib_rect = dlib.rectangle(left, top, right, bottom)

    if tracking_state:
        # Update the tracker and draw the current position
        tracker.update(frame)
        pos = tracker.get_position()
        cv2.rectangle(display, (int(pos.left()), int(pos.top())), (int(pos.right()), int(pos.bottom())), (255, 255, 255), 3)

    key = cv2.waitKey(1) & 0xFF
    # '1': start tracking the selected area
    if key == ord('1'):
        if len(points) == 2 and dlib_rect is not None:
            tracker.start_track(frame, dlib_rect)
            tracking_state = True
            points = []
    # '2': stop tracking and clear the selection
    if key == ord('2'):
        points = []
        tracking_state = False
    # 'q': quit
    if key == ord('q'):
        break

    cv2.imshow(nameWindow, display)

capture.release()
cv2.destroyAllWindows()
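A mouse selection can be dragged in any direction, can run past the frame edge, or can be an accidental click with almost no area. The sketch below shows one way to turn the two recorded points into corner coordinates that are safe to hand to `dlib.rectangle`. The function name `selection_to_box` and the 10-pixel minimum size are assumptions made for illustration, not part of dlib or OpenCV.

```python
from typing import Optional, Tuple

Point = Tuple[int, int]
Box = Tuple[int, int, int, int]  # left, top, right, bottom


def selection_to_box(p0: Point, p1: Point, frame_w: int, frame_h: int,
                     min_size: int = 10) -> Optional[Box]:
    """Normalize two drag points into (left, top, right, bottom).

    Hypothetical helper. Returns None when the selection is smaller than
    min_size pixels on either side after clamping to the frame.
    """
    # Order the corners so left <= right and top <= bottom
    left, right = sorted((p0[0], p1[0]))
    top, bottom = sorted((p0[1], p1[1]))

    # Clamp to the valid pixel range of the frame
    left, right = max(0, left), min(frame_w - 1, right)
    top, bottom = max(0, top), min(frame_h - 1, bottom)

    # Reject selections too small to track meaningfully
    if right - left < min_size or bottom - top < min_size:
        return None
    return left, top, right, bottom


if __name__ == "__main__":
    # Dragged from bottom-right to top-left, partly outside a 640x480 frame
    print(selection_to_box((700, 300), (200, 100), 640, 480))
    # An accidental click without a real drag
    print(selection_to_box((50, 50), (52, 51), 640, 480))
```

When the function returns a box, it can be unpacked as `dlib.rectangle(*box)` before calling `start_track`; a `None` result means the selection should be ignored.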

3. OpenCV-Python resource downloads

OpenCV-Python test images, the official documentation in Chinese, and the opencv-4.5.4 source code

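Summary

This article gave a brief introduction to face tracking and object tracking with OpenCV-Python and dlib. More articles on Python, data science, and artificial intelligence will be published from time to time. Please follow along, and a like, comment, and bookmark would be much appreciated (●'◡'●).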