Hive SQL DML

Hive SQL DML

本节所需数据集

数据集 提取码:rkun

⛵加载数据

Load

- 加载,装载

- 将数据文件移动到与Hive表对应位置,移动时是纯复制,移动操作。

- 纯复制移动指数据load加载到表中,hive不会对表中数据内容进行任何变换,任何操作

LOAD DATA [LOCAL] INPATH 'filepath' [OVERWRITE] INTO TABLE tablename;

本地文件系统LOCAL指的是Hiveserver2服务所在机器的本地Linux文件系统,不是Hive客户端所在的本地文件系统。

首先准备数据

students.txt

95001,李勇,男,20,CS

95002,刘晨,女,19,IS

95003,王敏,女,22,MA

95004,张立,男,19,IS

95005,刘刚,男,18,MA

95006,孙庆,男,23,CS

95007,易思玲,女,19,MA

95008,李娜,女,18,CS

95009,梦圆圆,女,18,MA

95010,孔小涛,男,19,CS

95011,包小柏,男,18,MA

95012,孙花,女,20,CS

95013,冯伟,男,21,CS

95014,王小丽,女,19,CS

95015,王君,男,18,MA

95016,钱国,男,21,MA

95017,王风娟,女,18,IS

95018,王一,女,19,IS

95019,邢小丽,女,19,IS

95020,赵钱,男,21,IS

95021,周二,男,17,MA

95022,郑明,男,20,MA

Hiveserver2服务所在机器的本地Linux上传数据

[root@node1 ~]# cd hivedata/

[root@node1 hivedata]# ls

archer.txt students.txt team_ace_player.txt

[root@node1 hivedata]# pwd

/root/hivedata

在db1下创建两张表

--建表student_local 用于演示从本地加载数据

create table student_local(num int,name string,sex string,age int,dept string) row format delimited fields terminated by ',';

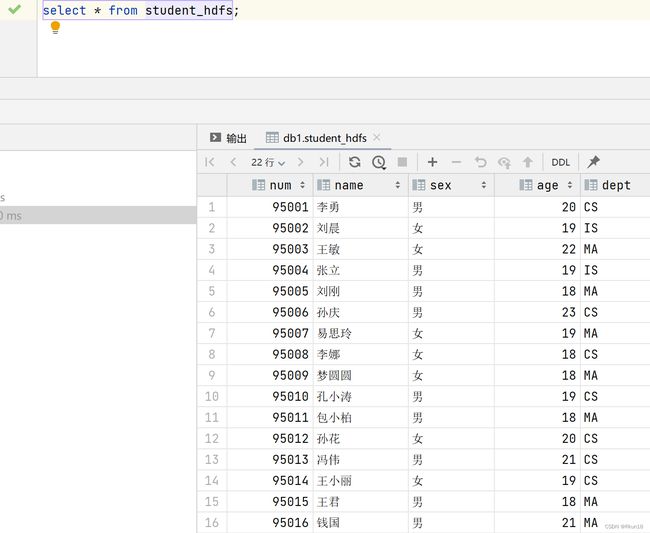

--建表student_HDFS 用于演示从HDFS加载数据

create table student_HDFS(num int,name string,sex string,age int,dept string) row format delimited fields terminated by ',';

-- 从本地加载数据 数据位于HS2(node1)本地文件系统 本质是hadoop fs -put上传操作

LOAD DATA LOCAL INPATH '/root/hivedata/students.txt' INTO TABLE student_local;

将数据上传到hdfs根目录下

[root@node1 hivedata]# hadoop fs -put students.txt /

[root@node1 hivedata]# hadoop fs -ls /

Found 3 items

-rw-r--r-- 3 root supergroup 526 2023-06-13 15:26 /students.txt

drwx-w---- - root supergroup 0 2023-06-13 09:28 /tmp

drwxr-xr-x - root supergroup 0 2023-06-13 09:12 /user

--从HDFS加载数据 数据位于HDFS文件系统根目录下 本质是hadoop fs -mv 移动操作

--先把数据上传到HDFS上 hadoop fs -put /root/hivedata/students.txt /

LOAD DATA INPATH '/students.txt' INTO TABLE student_hdfs;

️插入数据

- insert+select:将后面查询返回的结果作为内容插入到指定表中

INSERT INTO TABLE tablename select_statement1 FROM from_statement;

底层使用MapReduce,会经历一系列操作,所以会很慢,不要急。

--step1:创建一张源表student使用之前的数据

drop table if exists student;

create table student(num int,name string,sex string,age int,dept string)

row format delimited fields terminated by ',';

--step2:加载数据

load data local inpath '/root/hivedata/students.txt' into table student;

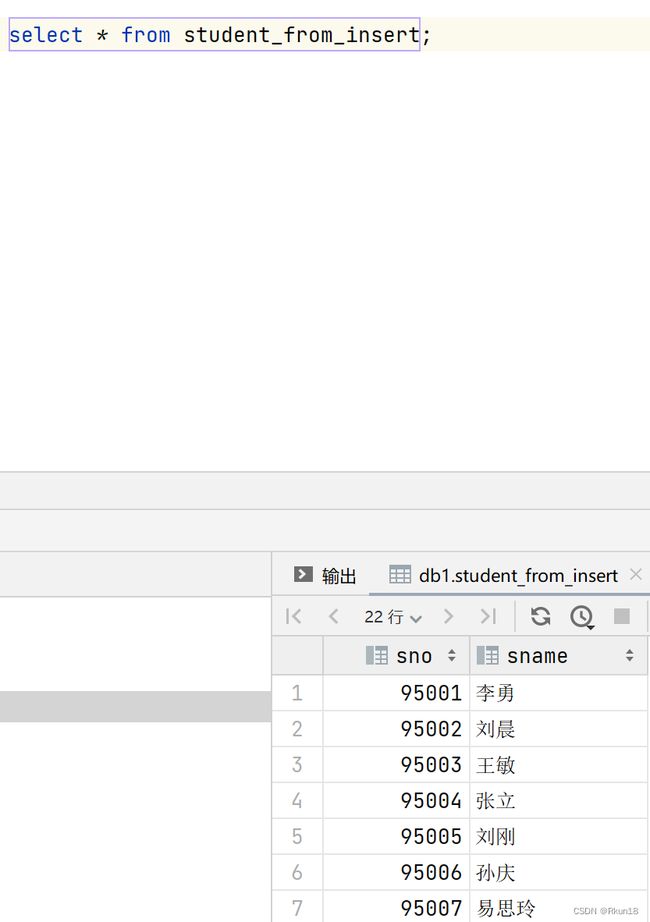

--step3:创建一张目标表 只有两个字段

create table student_from_insert(sno int,sname string);

--使用insert+select插入数据到新表中

insert into table student_from_insert select num,name from student;

查询数据

语法结构

SELECT [ALL | DISTINCT] select_expr, select_expr, ...

FROM table_reference

[WHERE where_condition]

[GROUP BY col_list]

[ORDER BY col_list]

[LIMIT [offset,] rows]

-

从哪里查询取决于FROM后面的参数

-

表名和列名不区分大小写

练习

数据准备:

us-covid19-counties.dat

美国2021-01-28各个县累计新冠确诊病例数和累计死亡病例数

上传文件至LOCAL

#上传文件至node1

[root@node1 ~]# cd hivedata/

[root@node1 hivedata]# ls

archer.txt students.txt team_ace_player.txt

[root@node1 hivedata]# ls

archer.txt students.txt team_ace_player.txt us-covid19-counties.dat

[root@node1 hivedata]# pwd

/root/hivedata

创建表

--创建表t_usa_covid19

drop table if exists t_usa_covid19;

CREATE TABLE t_usa_covid19(

count_date string,

county string,

state string,

fips int,

cases int,

deaths int)

row format delimited fields terminated by ",";

--将数据load加载到t_usa_covid19表对应的路径下

load data local inpath '/root/hivedata/us-covid19-counties.dat' into table t_usa_covid19;

虽然数据导入较慢,但数据查询很快。

select_expr

表示检索查询返回的列,必须至少有一个select_expr。

SELECT [ALL | DISTINCT] select_expr, select_expr, ...

FROM table_reference

[WHERE where_condition]

[GROUP BY col_list]

[ORDER BY col_list]

[LIMIT [offset,] rows];

select county, cases, deaths from t_usa_covid19;

--查询当前数据库

select current_database(); --省去from关键字

ALL DISTINCT

- 用户查询指定返回结构中重复的行如何处理

- 没有给出这些选择,默认ALL(匹配所有行)

- DISTINCT指定从结果集中删除重复的行

--返回所有匹配的行

select state from t_usa_covid19;

--相当于

select all state from t_usa_covid19;

--返回所有匹配的行 去除重复的结果

select distinct state from t_usa_covid19;

--多个字段distinct 整体去重

select distinct county,state from t_usa_covid19;

建议给Hive服务器内存和核数给多一点,要不然可能会很慢。

WHERE

- 后跟布尔表达式(true/false),用于查询过滤,当布尔表达式为true,返回select后expr表达是结果,否则返回空

- 可以使用Hive正常的任何函数和运算符,聚合函数除外

select * from t_usa_covid19 where 1 > 2; -- 1 > 2 返回false 返回为空

select * from t_usa_covid19 where 1 = 1; -- 1 = 1 返回true 查询所有

--找出来自于California州的疫情数据

select * from t_usa_covid19 where state = 'California';

--where条件中使用函数 找出州名字母长度超过10位的有哪些

select * from t_usa_covid19 where length(state) >10 ;

聚合

- SQL用于计数和计算的内建函数

- 聚合操作函数:COUNT ,SUM,MAX ,MIN,AVG等函数

- 聚合不管袁术数据有多少行记录,经聚合只返回一行

AVG(column) 返回某列的平均值

COUNT(column) 返回某列的行数(不包括 NULL 值)

COUNT(*) 返回被选行数

MAX(column) 返回某列的最高值

MIN(column) 返回某列的最低值

SUM(column) 返回某列的总和

--学会使用as 给查询返回的结果起个别名

select count(county) as county_cnts from t_usa_covid19;

--去重distinct

select count(distinct county) as county_cnts from t_usa_covid19;

--统计美国加州有多少个县

select count(county) from t_usa_covid19 where state = "California";

--统计德州总死亡病例数

select sum(deaths) from t_usa_covid19 where state = "Texas";

--统计出美国最高确诊病例数是哪个县

select max(cases) from t_usa_covid19;

GROUP BY

- 用于结合聚合函数,根据一个或多个列对结果集进行分组

--根据state州进行分组 统计每个州有多少个县county

select count(county) from t_usa_covid19 where count_date = "2021-01-28" group by state;

--统计的结果是属于哪一个州的

select state,count(county) as county_nums from t_usa_covid19 where count_date = "2021-01-28" group by state;

--每个县的死亡病例数 把deaths字段加上返回

select state,count(county),sum(deaths) from t_usa_covid19 where count_date = "2021-01-28" group by state;

-- sql报错了org.apache.hadoop.hive.ql.parse.SemanticException:Line 1:27 Expression not in GROUP BY key 'deaths'

--group by的语法限制

--结论:出现在GROUP BY中select_expr的字段:要么是GROUP BY分组的字段;要么是被聚合函数应用的字段。

--deaths不是分组字段 报错

--state是分组字段 可以直接出现在select_expr中

--被聚合函数应用

select state,count(county),sum(deaths) from t_usa_covid19 where count_date = "2021-01-28" group by state;

--避免出现歧义

HAVING

- Having让我们筛选分组后各组数据,可以在Havin中使用聚合函数,此时where,group by执行结束,结果集以及确定(在确定的结果集上进行操作)

--在group by的时候聚合函数已经作用得出结果 having直接引用结果过滤 不需要再单独计算一次了

select state,sum(deaths) as cnts from t_usa_covid19 where count_date = "2021-01-28" group by state having cnts> 10000;

ORDER BY

- 用于指定列对结果集进行排序

- 默认升序(ASC)对记录进行排序,降序(DESC)

SELECT [ALL | DISTINCT] select_expr, select_expr, ...

FROM table_reference

[WHERE where_condition]

[GROUP BY col_list]

[ORDER BY col_list]

[LIMIT [offset,] rows]

--不写排序规则 默认就是asc升序

select * from t_usa_covid19 order by cases asc;

--根据死亡病例数倒序排序 查询返回加州每个县的结果

select * from t_usa_covid19 where state = "California" order by cases desc;

LIMIT

- 限制SELECT语句返回行数

- 接受一个或两个数字参数 ,必须都是非负整数

- 第一个参数代表返回第一行的偏移量,第二个参数代表返回的最大行数。

- 给出单个参数,代表最大行数,偏移量默认为0

--返回结果集的前5条

select * from t_usa_covid19 where count_date = "2021-01-28" and state ="California" limit 5;

--返回结果集从第3行开始 共3行

select * from t_usa_covid19 where count_date = "2021-01-28" and state ="California" limit 2,3;

--注意 第一个参数偏移量是从0开始的 0 1 2 从第三条数据开始(包含第三条数据)

select state,sum(deaths) as cnts from t_usa_covid19

where count_date = "2021-01-28"

group by state

having cnts> 10000

limit 2;

️JOIN

- 根据两个或多个表中列之间的关系,从这些表中共同查询数据。

- inner join(内连接) left join(左连接)

join_table:

table_reference [INNER] JOIN table_factor [join_condition]

| table_reference {LEFT} [OUTER] JOIN table_reference join_condition

join_condition:

ON expression

- table_reference:join查询使用的表名

- table_factor:链接查询使用的表名

- join_condition:join查询关联条件

数据准备:

数据集中hive join

- 员工表

employee.txt - 地址信息

employee_address.txt - 联系方式

employee_connection.txt

#上传表数据

[root@node1 ~]# cd hivedata/

[root@node1 hivedata]# ls

archer.txt students.txt team_ace_player.txt us-covid19-counties.dat

[root@node1 hivedata]# rm -rf *

[root@node1 hivedata]# ls

employee_address.txt employee_connection.txt employee.txt

-- 员工表

CREATE TABLE employee(

id int,

name string,

deg string,

salary int,

dept string

) row format delimited

fields terminated by ',';

--住址信息表

CREATE TABLE employee_address (

id int,

hno string,

street string,

city string

) row format delimited

fields terminated by ',';

--联系方式信息表

CREATE TABLE employee_connection (

id int,

phno string,

email string

) row format delimited

fields terminated by ',';

--加载数据到表中

load data local inpath '/root/hivedata/employee.txt' into table employee;

load data local inpath '/root/hivedata/employee_address.txt' into table employee_address;

load data local inpath '/root/hivedata/employee_connection.txt' into table employee_connection;

⭐inner join

- 只有进行连接的两个表都存在与连接条件相匹配的数据才会被留下来

- inner join == join

select e.id ,e.name,e_a.city,e_a.street

from employee e

join employee_address e_a

on e.id =e_a.id;

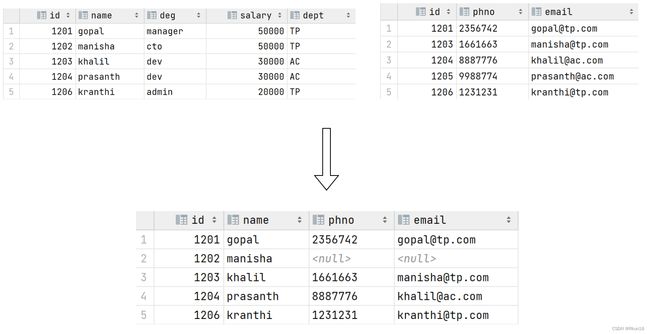

left join

- join时以左表数据为准,右表与之管理,左边数据全部返回,右表关联数据返回,关联不上使用null返回。

select e.id,e.name,e_conn.phno,e_conn.email

from employee e

left join employee_connection e_conn

on e.id =e_conn.id;