Flink Learning, Part 6: Flink Window Computation API


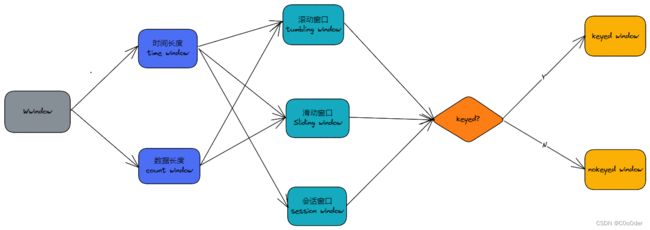

1. Window concepts and classification

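A window is the core mechanism for processing an unbounded stream: the unbounded stream is cut, according to some rule, into a series of bounded chunks (buckets), and the computation is then performed inside each bounded chunk.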

2. Classification

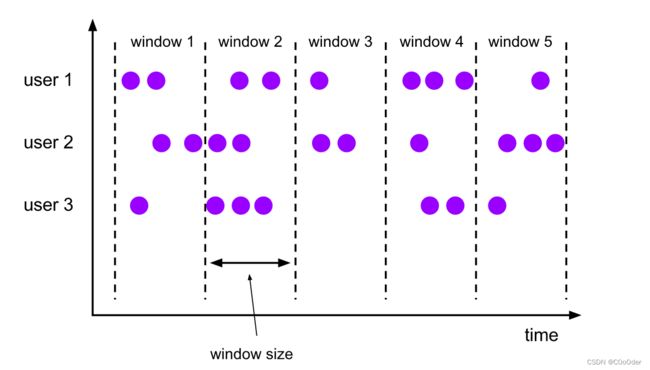

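2.1 Tumbling windows

Adjacent windows do not overlap, so every record belongs to exactly one window.

- The window size can be a time span or a number of records.

- Windows are further divided by whether the stream is keyed. A non-keyed window (windowAll), also called a "global window" here, always runs with parallelism 1; it is not the same thing as Flink's GlobalWindows assigner.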

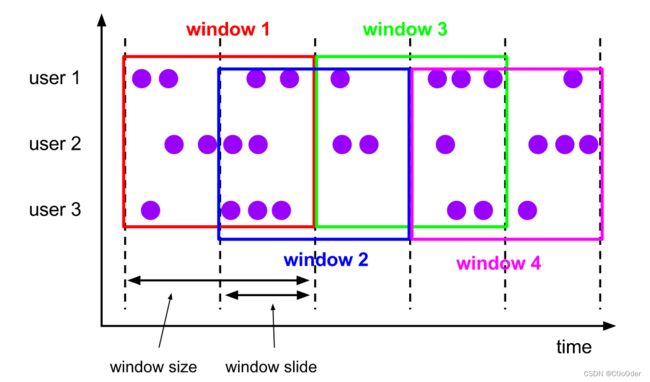
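Which tumbling window a record falls into is plain arithmetic on its timestamp. The sketch below is plain Java, not Flink code, and the class and method names are hypothetical; as far as I know it follows the same remainder arithmetic as Flink's TimeWindow.getWindowStartWithOffset.

```java
// Plain-Java sketch (not Flink code): locate the tumbling window that contains a timestamp.
final class TumblingWindowMath {

    // Start of the window of `size` ms (shifted by `offset` ms) that contains `timestamp`.
    static long windowStart(long timestamp, long offset, long size) {
        long remainder = (timestamp - offset) % size;
        // Java's % keeps the sign of the dividend, so shift negative remainders into [0, size)
        return remainder < 0 ? timestamp - (remainder + size) : timestamp - remainder;
    }

    // Windows are half-open [start, start + size); Flink's window.maxTimestamp() is end - 1.
    static long windowEnd(long timestamp, long offset, long size) {
        return windowStart(timestamp, offset, size) + size;
    }

    public static void main(String[] args) {
        long size = 5_000L; // 5 s tumbling windows, as in the examples in section 3
        for (long ts : new long[] {0L, 4_999L, 5_000L, 12_345L}) {
            System.out.println(ts + " -> [" + windowStart(ts, 0L, size) + ", " + windowEnd(ts, 0L, size) + ")");
        }
    }
}
```

Because every timestamp maps to exactly one start value, tumbling windows can never overlap.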

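2.2 Sliding windows

Windows advance by a slide step. When the slide is smaller than the window size (the usual case), adjacent windows overlap, so one record can belong to several windows.

- The window size can be a time span or a number of records.

- Keyed or non-keyed, exactly as for tumbling windows (non-keyed windows run with parallelism 1).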

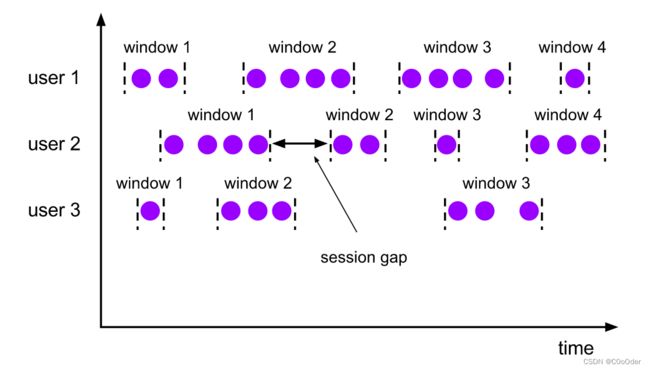
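To see the overlap concretely, the plain-Java sketch below (hypothetical names, window offset assumed to be 0) lists every sliding window that contains one timestamp. It reproduces, as I understand it, the assignment loop of Flink's sliding window assigners: start at the latest window start and step back one slide at a time.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch (not Flink code): all sliding windows that contain one timestamp, offset 0.
final class SlidingWindowMath {

    // Start of the most recent window containing `timestamp` (a new window starts every `slide` ms).
    static long lastStart(long timestamp, long slide) {
        long remainder = timestamp % slide;
        return remainder < 0 ? timestamp - (remainder + slide) : timestamp - remainder;
    }

    // Returns {start, end} pairs, newest window first; stops once a window would end
    // at or before the timestamp.
    static List<long[]> assign(long timestamp, long size, long slide) {
        List<long[]> windows = new ArrayList<>();
        for (long start = lastStart(timestamp, slide); start > timestamp - size; start -= slide) {
            windows.add(new long[] {start, start + size});
        }
        return windows;
    }

    public static void main(String[] args) {
        // 30 s windows sliding every 10 s, as in the 3.3.3 example
        for (long[] w : assign(12_000L, 30_000L, 10_000L)) {
            System.out.println("[" + w[0] + ", " + w[1] + ")");
        }
    }
}
```

With 30 s windows and a 10 s slide, each record lands in 30 / 10 = 3 windows; if the slide is larger than the size, some timestamps fall into no window at all.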

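2.3 Session windows

A time-based category: when no data arrives for a certain period (the session gap), the current window closes and the next record opens a new one.

- Session windows are time-based only; their length is not fixed in advance but follows the gaps between records.

- Keyed or non-keyed, exactly as for tumbling windows (non-keyed windows run with parallelism 1).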
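A session window can be pictured as each record opening a window [t, t + gap) and overlapping or touching windows being merged (in Flink the assigners are EventTimeSessionWindows.withGap and ProcessingTimeSessionWindows.withGap). The plain-Java sketch below, with hypothetical names and timestamps in ms, mimics that merging on a sorted list of timestamps.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java sketch (not Flink code): split event timestamps (ms) into sessions separated by a gap.
final class SessionWindowMath {

    // Each timestamp t opens a window [t, t + gap); windows that touch or overlap are merged.
    static List<long[]> sessions(long[] timestamps, long gap) {
        long[] ts = timestamps.clone();
        Arrays.sort(ts);
        List<long[]> result = new ArrayList<>();
        if (ts.length == 0) {
            return result;
        }
        long start = ts[0];
        long end = ts[0] + gap;
        for (int i = 1; i < ts.length; i++) {
            if (ts[i] <= end) {
                end = ts[i] + gap;                   // still inside the session: extend it
            } else {
                result.add(new long[] {start, end}); // silence longer than the gap: close the session
                start = ts[i];
                end = ts[i] + gap;
            }
        }
        result.add(new long[] {start, end});
        return result;
    }

    public static void main(String[] args) {
        for (long[] s : sessions(new long[] {1_000L, 9_000L, 2_000L, 9_500L}, 5_000L)) {
            System.out.println("[" + s[0] + ", " + s[1] + ")");
        }
    }
}
```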

3. Window computation API

The window API differs mainly depending on whether the window is keyed or non-keyed:

3.1 KeyedWindow

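Upstream records are hash-partitioned by key and each key's windows are computed independently, so the parallelism is configurable.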

```
stream
       .keyBy(...)               <- keyed versus non-keyed windows   // group by key
       .window(...)              <- required: "assigner"               // window type: tumbling / sliding / session
      [.trigger(...)]            <- optional: "trigger" (else default trigger)   // decides when the window (bucket) fires
      [.evictor(...)]            <- optional: "evictor" (else no evictor)        // policy for removing elements from the bucket
      [.allowedLateness(...)]    <- optional: "lateness" (else zero)             // how long late data is still accepted
      [.sideOutputLateData(...)] <- optional: "output tag" (else no side output for late data)  // fallback: route data that is too late to a side output
       .reduce/aggregate/apply() <- required: "function"               // window function, usually an aggregation
      [.getSideOutput(...)]      <- optional: "output tag"             // read the late-data side output from the result
```

```java
// tumbling window, processing time
keyedStream.window(TumblingProcessingTimeWindows.of(Time.seconds(5)));

// sliding window, processing time (size 5 s, slide 1 s)
keyedStream.window(SlidingProcessingTimeWindows.of(Time.seconds(5), Time.seconds(1)));

// tumbling window, event time
keyedStream.window(TumblingEventTimeWindows.of(Time.seconds(5)));

// sliding window, event time
keyedStream.window(SlidingEventTimeWindows.of(Time.seconds(5), Time.seconds(1)));

// tumbling count window: 100 records per key
keyedStream.countWindow(100);

// sliding count window: last 100 records per key, evaluated every 20 records
keyedStream.countWindow(100, 20);
```
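How "hash-partitioned by key" decides which parallel subtask computes a key's windows can be sketched in two steps: key to key group, then key group to subtask. The plain-Java sketch below (hypothetical names) uses the key-group-to-subtask formula that, to my understanding, Flink's KeyGroupRangeAssignment applies, but replaces Flink's murmur-hash scrambling of key.hashCode() with plain hashCode() for simplicity; 128 is only an example maxParallelism.

```java
// Plain-Java sketch (not Flink code): route a key to one of `parallelism` window subtasks.
// Simplification: Flink first scrambles key.hashCode() with a murmur hash; plain hashCode() is used here.
final class KeyRouting {

    // Step 1: key -> key group in [0, maxParallelism)
    static int keyGroup(Object key, int maxParallelism) {
        return Math.floorMod(key.hashCode(), maxParallelism);
    }

    // Step 2: key group -> subtask index in [0, parallelism)
    static int subtaskIndex(Object key, int maxParallelism, int parallelism) {
        return keyGroup(key, maxParallelism) * parallelism / maxParallelism;
    }

    public static void main(String[] args) {
        int maxParallelism = 128; // example value
        int parallelism = 4;
        for (long guid : new long[] {3L, 42L, 90L, 127L}) {
            System.out.println("guid " + guid + " -> subtask " + subtaskIndex(guid, maxParallelism, parallelism));
        }
    }
}
```

The same key always maps to the same subtask, which is what lets each subtask keep the window state for its keys locally. A non-keyed windowAll has no such routing: every record goes to its single instance.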

3.2 NonKeyedWindow

All upstream records are gathered into a single task for computation; the parallelism is always 1.

```
stream
       .windowAll(...)           <- required: "assigner"   // windowAll instead of keyBy + window: this is the difference; window type: tumbling / sliding / session
      [.trigger(...)]            <- optional: "trigger" (else default trigger)
      [.evictor(...)]            <- optional: "evictor" (else no evictor)
      [.allowedLateness(...)]    <- optional: "lateness" (else zero)
      [.sideOutputLateData(...)] <- optional: "output tag" (else no side output for late data)
       .reduce/aggregate/apply() <- required: "function"
      [.getSideOutput(...)]      <- optional: "output tag"
```

```java
// tumbling window, processing time
dataStream.windowAll(TumblingProcessingTimeWindows.of(Time.seconds(5)));

// sliding window, processing time (size 5 s, slide 1 s)
dataStream.windowAll(SlidingProcessingTimeWindows.of(Time.seconds(5), Time.seconds(1)));

// tumbling window, event time
dataStream.windowAll(TumblingEventTimeWindows.of(Time.seconds(5)));

// sliding window, event time
dataStream.windowAll(SlidingEventTimeWindows.of(Time.seconds(5), Time.seconds(1)));

// tumbling count window: 100 records
dataStream.countWindowAll(100);

// sliding count window: last 100 records, evaluated every 20 records
dataStream.countWindowAll(100, 20);
```

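3.3 Window aggregation operators

3.3.1 Incremental window aggregation

Each record is folded into the window's result as soon as it arrives (for example, a running sum), so only the aggregate is kept instead of all records. Operators: min, max, minBy, maxBy, sum, reduce, aggregate.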

min,max,minBy,maxBy,sum

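```java
KeyedStream<EventLog, Long> keyedStream = streamOperator.keyBy(EventLog::getGuid);

// sliding count window: last 10 records per key, evaluated every 2 records.
// Call exactly ONE of the aggregations below on the windowed stream.
SingleOutputStreamOperator<EventLog> serviceTime = keyedStream
        .countWindow(10, 2)
        .min("serviceTime");      // serviceTime = the minimum; the other fields come from the window's first record
     // .max("serviceTime");      // serviceTime = the maximum; the other fields come from the window's first record
     // .minBy("serviceTime");    // the whole record that holds the minimum serviceTime
     // .maxBy("serviceTime");    // the whole record that holds the maximum serviceTime
     // .sum("serviceTime");      // serviceTime = the sum of serviceTime; the other fields come from the window's first record
```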
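The difference between min and minBy is easy to miss, so here is a plain-Java sketch of the two behaviours described above (not Flink's implementation; Rec is a hypothetical stand-in for EventLog with only the fields needed).

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Plain-Java sketch (not Flink code) of min vs minBy on the records of one window.
final class MinVsMinBy {

    // Stand-in for EventLog with only the fields needed here.
    static final class Rec {
        final long guid;
        final String event;
        final int serviceTime;

        Rec(long guid, String event, int serviceTime) {
            this.guid = guid;
            this.event = event;
            this.serviceTime = serviceTime;
        }

        @Override
        public String toString() {
            return "Rec(" + guid + ", " + event + ", " + serviceTime + ")";
        }
    }

    // min: the window's FIRST record, with serviceTime replaced by the minimum value
    static Rec min(List<Rec> window) {
        int minTime = window.stream().mapToInt(r -> r.serviceTime).min().getAsInt();
        Rec first = window.get(0);
        return new Rec(first.guid, first.event, minTime);
    }

    // minBy: the complete record that holds the minimum serviceTime
    static Rec minBy(List<Rec> window) {
        return window.stream().min(Comparator.comparingInt((Rec r) -> r.serviceTime)).get();
    }

    public static void main(String[] args) {
        List<Rec> window = Arrays.asList(new Rec(1, "xw0", 300), new Rec(1, "xw1", 100), new Rec(1, "xw2", 200));
        System.out.println("min   = " + min(window));
        System.out.println("minBy = " + minBy(window));
    }
}
```

Running main prints min = Rec(1, xw0, 100) and minBy = Rec(1, xw1, 100): both report the same minimum, but only minBy returns the event that actually had it.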

reduce

The output type of reduce is fixed: it must be the same as the element type of the stream.

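```java
keyedStream.countWindow(10, 2).reduce(new ReduceFunction<EventLog>() {
    @Override
    public EventLog reduce(EventLog value1, EventLog value2) throws Exception {
        // combine two records into one record of the SAME type
        // (example logic: keep value1's other fields and add up serviceTime)
        return new EventLog(value1.getGuid(), value1.getEvent(), value1.getTimeStamp(),
                value1.getServiceTime() + value2.getServiceTime());
    }
});
```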

aggregate

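The output type of aggregate can be chosen freely (the accumulator and result types may differ from the input type); see the example in 3.3.3 below.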

```java
keyedStream.countWindow(10, 2).aggregate(new AggregateFunction<EventLog, Object, Object>() {
    ......
});
```
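The four methods of an aggregate function form a small lifecycle: create one accumulator, add each record to it, and read the result when the window fires. The plain-Java sketch below illustrates it with an average, where input, accumulator and output are three different types. AggLike is a hypothetical interface that only mirrors the method names of Flink's AggregateFunction; in a real job you would implement AggregateFunction itself.

```java
// Plain-Java sketch: the AggregateFunction lifecycle when input, accumulator and output types differ.
// AggLike is a hypothetical stand-in that mirrors the four methods of Flink's AggregateFunction.
interface AggLike<IN, ACC, OUT> {
    ACC createAccumulator();
    ACC add(IN value, ACC accumulator);
    OUT getResult(ACC accumulator);
    ACC merge(ACC a, ACC b);
}

// Average serviceTime: IN = Integer, ACC = long[]{sum, count}, OUT = Double
final class AvgServiceTime implements AggLike<Integer, long[], Double> {
    @Override
    public long[] createAccumulator() {
        return new long[] {0L, 0L};
    }

    @Override
    public long[] add(Integer value, long[] acc) {
        acc[0] += value; // running sum
        acc[1]++;        // running count
        return acc;
    }

    @Override
    public Double getResult(long[] acc) {
        return acc[1] == 0 ? 0.0 : (double) acc[0] / acc[1];
    }

    @Override
    public long[] merge(long[] a, long[] b) {
        return new long[] {a[0] + b[0], a[1] + b[1]};
    }

    // Drives the lifecycle the way a window does: one accumulator, one add() per record, getResult() on fire.
    static double run(int... serviceTimes) {
        AvgServiceTime f = new AvgServiceTime();
        long[] acc = f.createAccumulator();
        for (int t : serviceTimes) {
            acc = f.add(t, acc);
        }
        return f.getResult(acc);
    }
}
```

An average cannot be written as reduce, because the output (a Double) differs from the input type; this is exactly the case aggregate is for.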

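3.3.2 Full-window functions

process

See the example in 3.3.3 below.

More powerful than apply: besides all elements of the window it receives a Context, which exposes the window, the current watermark and processing time, per-window and global state, and side outputs.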

apply

```java
keyedStream.countWindow(10, 2).apply(new WindowFunction<EventLog, Object, Long, GlobalWindow>() {
    /**
     * Evaluates the window and outputs none or several elements.
     *
     * @param key    the key of this window
     * @param window the window; a TimeWindow exposes getStart()/getEnd(), but the GlobalWindow
     *               used by count windows has no start or end
     * @param input  The elements in the window being evaluated (all records of the window).
     * @param out    collector for the output records
     */
    @Override
    public void apply(Long key, GlobalWindow window, Iterable<EventLog> input, Collector<Object> out) throws Exception {
    }
});
```
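The practical trade-off between 3.3.1 and 3.3.2 is state size. The simplified plain-Java model below (hypothetical class names, not Flink's internals) computes the same sum both ways: the incremental version keeps one accumulator, the full-window version buffers every record until the window fires.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model (not Flink code): per-window state of incremental vs full-window functions.
final class WindowStateModel {

    // reduce/aggregate style: one accumulator, updated as each record arrives
    static final class Incremental {
        private long sum;

        void onElement(int value) { sum += value; }
        long fire() { return sum; }
        int storedItems() { return 1; } // only the accumulator is kept
    }

    // apply/process style: every record is buffered and the logic runs when the window fires
    static final class FullWindow {
        private final List<Integer> buffer = new ArrayList<>();

        void onElement(int value) { buffer.add(value); }

        long fire() {
            long sum = 0;
            for (int v : buffer) {
                sum += v;
            }
            return sum;
        }

        int storedItems() { return buffer.size(); }
    }

    public static void main(String[] args) {
        Incremental inc = new Incremental();
        FullWindow full = new FullWindow();
        for (int i = 1; i <= 1_000; i++) {
            inc.onElement(i);
            full.onElement(i);
        }
        System.out.println("same result: " + (inc.fire() == full.fire()));
        System.out.println("stored: " + inc.storedItems() + " vs " + full.storedItems());
    }
}
```

Flink also lets you combine both: WindowedStream.aggregate(AggregateFunction, ProcessWindowFunction) aggregates incrementally and passes only the final result, together with the Context, to the ProcessWindowFunction.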

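3.3.3 Examples

The demos use keyed windows, which are the more common case; non-keyed windows differ little.

Original requirement:

Within each time window, count the EventLog records per guid.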

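```java
import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

@Data
@AllArgsConstructor
@NoArgsConstructor
public class EventLog {
    private Long guid;           // user id
    private String event;        // user event
    private String timeStamp;    // time the event occurred
    private Integer serviceTime; // interface (API) time
}
```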

A custom source used to generate test data:

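```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Random;

import org.apache.flink.streaming.api.functions.source.SourceFunction;

public class CustomEventLogSourceFunction implements SourceFunction<EventLog> {

    volatile boolean runFlag = true;

    // HH = 24-hour clock (hh would be the 12-hour clock and lose AM/PM)
    SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

    @Override
    public void run(SourceContext<EventLog> sourceContext) throws Exception {
        Random random = new Random();
        while (runFlag) {
            // guid 0-4, event xw0-xw2, current time; EventLog has four fields, so a random
            // serviceTime (0-999, demo data only) is passed as well
            EventLog eventLog = new EventLog(Long.valueOf(random.nextInt(5)), "xw" + random.nextInt(3),
                    simpleDateFormat.format(new Date()), random.nextInt(1000));
            Thread.sleep(random.nextInt(5) * 1000); // wait 0-4 s between records
            sourceContext.collect(eventLog);
        }
    }

    @Override
    public void cancel() {
        runFlag = false;
    }
}
```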

aggregate

The function **receives each record of the window** as it arrives and updates the aggregate incrementally:

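```java
import java.text.SimpleDateFormat;
import java.time.Duration;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.functions.AggregateFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.windowing.assigners.SlidingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;

public class _03_Window {

    public static void main(String[] args) throws Exception {
        // must match the format used by the source (24-hour clock)
        SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

        // set up the environment
        Configuration configuration = new Configuration();
        configuration.setInteger("rest.port", 8822);
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment(configuration)
                .setParallelism(1); // parallelism 1 makes the output easier to follow

        //DataStream dataStreamSource = env.socketTextStream("192.168.141.141", 9000);
        DataStreamSource<EventLog> dataStream = env.addSource(new CustomEventLogSourceFunction());

        // watermark strategy: how event time advances (no out-of-orderness tolerated)
        WatermarkStrategy<EventLog> watermarkStrategy = WatermarkStrategy
                .<EventLog>forBoundedOutOfOrderness(Duration.ofMillis(0))
                .withTimestampAssigner(new SerializableTimestampAssigner<EventLog>() {
                    @Override
                    public long extractTimestamp(EventLog element, long recordTimestamp) {
                        try {
                            return sdf.parse(element.getTimeStamp()).getTime();
                        } catch (Exception e) {
                            return 0; // unparsable timestamp: fall back to epoch 0
                        }
                    }
                });

        // assign timestamps and watermarks: use event time
        DataStream<EventLog> streamOperator = dataStream.assignTimestampsAndWatermarks(watermarkStrategy).disableChaining();

        // requirement: every 10 s, count each user's events over the last 30 s
        streamOperator.keyBy(EventLog::getGuid)
                .window(SlidingEventTimeWindows.of(Time.seconds(30), Time.seconds(10))) // sliding window
                // .apply()   // receives all window data; custom full-window logic, more flexible
                // .process() // like apply, but also receives a Context with more information
                .aggregate(new AggregateFunction<EventLog, Tuple2<Long, Integer>, Tuple2<Long, Integer>>() { // incremental aggregation

                    // initial accumulator (guid, count); 0L instead of null, since Flink's tuple serializer does not accept null fields
                    @Override
                    public Tuple2<Long, Integer> createAccumulator() {
                        return Tuple2.of(0L, 0);
                    }

                    // called once per record: remember the guid and increment the count
                    @Override
                    public Tuple2<Long, Integer> add(EventLog value, Tuple2<Long, Integer> accumulator) {
                        return Tuple2.of(value.getGuid(), accumulator.f1 + 1);
                    }

                    // called when the window fires
                    @Override
                    public Tuple2<Long, Integer> getResult(Tuple2<Long, Integer> accumulator) {
                        return accumulator;
                    }

                    // merges two accumulators; used when windows are merged (e.g. session windows)
                    @Override
                    public Tuple2<Long, Integer> merge(Tuple2<Long, Integer> a, Tuple2<Long, Integer> b) {
                        return Tuple2.of(a.f0, a.f1 + b.f1);
                    }
                }).print();

        env.execute();
    }
}
```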
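The watermark strategy above uses forBoundedOutOfOrderness(Duration.ofMillis(0)). The plain-Java sketch below (hypothetical names) mirrors, to my understanding, the arithmetic of Flink's bounded-out-of-orderness watermark generator and the point at which an event-time window fires. One difference to keep in mind: Flink emits this watermark periodically rather than after every single event.

```java
// Plain-Java sketch (not Flink code): bounded-out-of-orderness watermarks and when an event-time window fires.
final class WatermarkMath {
    private final long outOfOrdernessMillis;
    private long maxTimestamp;

    WatermarkMath(long outOfOrdernessMillis) {
        this.outOfOrdernessMillis = outOfOrdernessMillis;
        // start so that the first watermark is Long.MIN_VALUE instead of underflowing
        this.maxTimestamp = Long.MIN_VALUE + outOfOrdernessMillis + 1;
    }

    // Events can only push the highest seen timestamp forward; older events never move it back.
    void onEvent(long eventTimestamp) {
        maxTimestamp = Math.max(maxTimestamp, eventTimestamp);
    }

    // watermark = highest timestamp seen - allowed out-of-orderness - 1
    long currentWatermark() {
        return maxTimestamp - outOfOrdernessMillis - 1;
    }

    // An event-time window [start, end) fires once the watermark reaches end - 1 (window.maxTimestamp()).
    static boolean windowFires(long windowEnd, long watermark) {
        return watermark >= windowEnd - 1;
    }

    public static void main(String[] args) {
        WatermarkMath wm = new WatermarkMath(0L); // Duration.ofMillis(0), as in _03_Window
        for (long ts : new long[] {29_999L, 10_000L, 30_000L}) {
            wm.onEvent(ts);
            System.out.println("event " + ts + " -> watermark " + wm.currentWatermark()
                    + ", window [0, 30000) fires: " + windowFires(30_000L, wm.currentWatermark()));
        }
    }
}
```

A larger out-of-orderness bound delays every window by that amount, in exchange for accepting more disordered records without treating them as late.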

process

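Aggregation when the window ends: the function receives every record of the window plus a Context, so arbitrary custom logic can be applied. More flexible than aggregate.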

```java
keyedStream.window(TumblingProcessingTimeWindows.of(Time.seconds(20)))
        .process(new ProcessWindowFunction<EventLog, Tuple2<Long, Integer>, Long, TimeWindow>() {
            @Override
            public void process(Long aLong,
                                ProcessWindowFunction<EventLog, Tuple2<Long, Integer>, Long, TimeWindow>.Context context,
                                Iterable<EventLog> elements, Collector<Tuple2<Long, Integer>> out) throws Exception {
                TimeWindow window = context.window();
                String start = sdf.format(new Date(window.getStart()));
                String end = sdf.format(new Date(window.getEnd()));
                Iterator<EventLog> iterator = elements.iterator();
                int count = 0;
                while (iterator.hasNext()) {
                    iterator.next();
                    count++;
                }
                System.out.println("start:" + start + ";end:" + end + ";count:" + count);
                out.collect(Tuple2.of(aLong, count));
            }
        }).print();
```

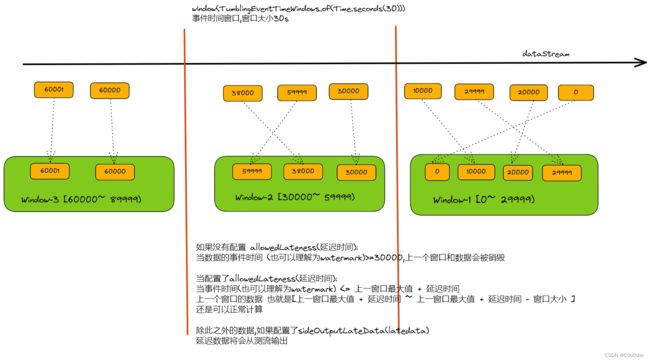

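3.4 Handling late data

3.4.1 allowedLateness

Allows records to arrive after the watermark has passed the end of their window and still update that window; the figure in 3.5 may help.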

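```java
import java.text.SimpleDateFormat;
import java.time.Duration;
import java.util.Date;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.windowing.WindowFunction;
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.streaming.api.windowing.windows.TimeWindow;
import org.apache.flink.util.Collector;
import org.apache.flink.util.OutputTag;

public class _05_Window_allowedLateness {

    public static void main(String[] args) throws Exception {
        SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss"); // only used to print window bounds

        // set up the environment
        Configuration configuration = new Configuration();
        configuration.setInteger("rest.port", 8822);
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment(configuration).setParallelism(1);

        // each input line: guid,event,timestamp (epoch milliseconds)
        DataStream<String> dataStreamSource = env.socketTextStream("192.168.141.141", 9000);

        // string stream ==> object stream
        SingleOutputStreamOperator<EventLog> outputStreamOperator = dataStreamSource.map(new MapFunction<String, EventLog>() {
            @Override
            public EventLog map(String value) throws Exception {
                String[] split = value.split(",");
                // serviceTime is not part of the input, so it stays null
                return new EventLog(Long.valueOf(split[0]), split[1], split[2], null);
            }
        });

        // watermark strategy: how event time advances
        WatermarkStrategy<EventLog> watermarkStrategy = WatermarkStrategy
                .<EventLog>forBoundedOutOfOrderness(Duration.ofMillis(0))
                .withTimestampAssigner(new SerializableTimestampAssigner<EventLog>() {
                    @Override
                    public long extractTimestamp(EventLog element, long recordTimestamp) {
                        return Long.valueOf(element.getTimeStamp());
                    }
                });

        // assign timestamps and watermarks: use event time
        DataStream<EventLog> streamOperator = outputStreamOperator.assignTimestampsAndWatermarks(watermarkStrategy)
                .disableChaining();

        OutputTag<EventLog> latedata = new OutputTag<EventLog>("latedata", TypeInformation.of(EventLog.class));
        KeyedStream<EventLog, Long> keyedStream = streamOperator.keyBy(EventLog::getGuid);

        SingleOutputStreamOperator<EventLog> guid = keyedStream
                .window(TumblingEventTimeWindows.of(Time.seconds(30)))
                .allowedLateness(Time.seconds(3)) // accept data up to 3 s late
                .sideOutputLateData(latedata)    // data later than that goes to the side output
                .apply(new WindowFunction<EventLog, EventLog, Long, TimeWindow>() {
                    @Override
                    public void apply(Long aLong, TimeWindow window, Iterable<EventLog> input, Collector<EventLog> out) throws Exception {
                        // local variable: a field would be shared by all keys and windows and never reset
                        long count = 0;
                        for (EventLog eventLog : input) {
                            count += eventLog.getGuid(); // note: this adds up the guid values of the window's records
                        }
                        EventLog eventLog = new EventLog();
                        eventLog.setGuid(count);
                        out.collect(eventLog);
                        String start = sdf.format(new Date(window.getStart()));
                        String end = sdf.format(new Date(window.getEnd()));
                        System.out.println("==> start:" + start + ";end:" + end + ";count:" + count);
                    }
                });

        guid.print("main output");
        DataStream<EventLog> sideOutput = guid.getSideOutput(latedata);
        sideOutput.print("side output");

        env.execute();
    }
}
```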
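What happens to a record in the example above depends on where the watermark stands relative to its window. The simplified plain-Java model below (hypothetical names, tumbling windows with offset 0, all values in ms) spells out the three cases as I understand Flink's rules: buffered on time, late but within allowedLateness (the window fires again immediately), or too late (side output, or dropped if no side output is configured).

```java
// Plain-Java model (not Flink code): fate of a record in a tumbling event-time window
// given the current watermark and allowedLateness (all values in ms, window offset 0).
final class LatenessModel {

    enum Outcome { BUFFERED, FIRES_AGAIN, SIDE_OUTPUT }

    static Outcome classify(long timestamp, long size, long allowedLateness, long watermark) {
        long start = timestamp - Math.floorMod(timestamp, size); // tumbling window start
        long maxTimestamp = start + size - 1;                    // last millisecond of the window
        if (maxTimestamp + allowedLateness <= watermark) {
            // window state already cleaned up: with sideOutputLateData the record goes to
            // the side output, otherwise it is dropped
            return Outcome.SIDE_OUTPUT;
        }
        if (maxTimestamp <= watermark) {
            // late, but within allowedLateness: EventTimeTrigger fires the window again right away
            return Outcome.FIRES_AGAIN;
        }
        // on time: the record waits in the window until the watermark passes its end
        return Outcome.BUFFERED;
    }

    public static void main(String[] args) {
        long size = 30_000L;
        long lateness = 3_000L; // the settings of _05_Window_allowedLateness
        for (long wm : new long[] {20_000L, 30_999L, 32_999L}) {
            System.out.println("record 10000, watermark " + wm + " -> " + classify(10_000L, size, lateness, wm));
        }
    }
}
```

With zero out-of-orderness, a record stamped 31000 moves the watermark to 30999 and one stamped 33000 moves it to 32999 (once the watermark has been emitted). So a record stamped 10000 typed in after 31000 re-fires the [0, 30000) window, while the same record typed in after 33000 ends up in the side output.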

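3.4.2 Window time & watermark

3.5 Window triggering

3.5.1 Trigger: when a window computation fires

When a window computation fires is decided by its Trigger. Each WindowAssigner comes with its own default trigger (for example, event-time assigners use EventTimeTrigger and processing-time assigners use ProcessingTimeTrigger). Trigger and its implementations: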

Trigger (org.apache.flink.streaming.api.windowing.triggers)

ProcessingTimeoutTrigger (org.apache.flink.streaming.api.windowing.triggers)

EventTimeTrigger (org.apache.flink.streaming.api.windowing.triggers)

CountTrigger (org.apache.flink.streaming.api.windowing.triggers)

DeltaTrigger (org.apache.flink.streaming.api.windowing.triggers)

NeverTrigger in GlobalWindows (org.apache.flink.streaming.api.windowing.assigners)

ContinuousEventTimeTrigger (org.apache.flink.streaming.api.windowing.triggers)

PurgingTrigger (org.apache.flink.streaming.api.windowing.triggers)

ContinuousProcessingTimeTrigger (org.apache.flink.streaming.api.windowing.triggers)

ProcessingTimeTrigger (org.apache.flink.streaming.api.windowing.triggers)
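Every trigger method returns a TriggerResult. The simplified plain-Java model below (hypothetical class names, not Flink's WindowOperator) mirrors the four TriggerResult values and their fire/purge flags. It shows why FIRE keeps the window contents, so a later firing sees them again, while FIRE_AND_PURGE clears them; count windows wrap CountTrigger in a PurgingTrigger for this reason.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model (not Flink's WindowOperator): how a window reacts to each trigger result.
final class TriggerResultModel {

    // Mirrors the four values of Flink's TriggerResult and their fire/purge flags.
    enum Result {
        CONTINUE(false, false), FIRE(true, false), PURGE(false, true), FIRE_AND_PURGE(true, true);

        final boolean fire;
        final boolean purge;

        Result(boolean fire, boolean purge) {
            this.fire = fire;
            this.purge = purge;
        }
    }

    final List<Integer> contents = new ArrayList<>();
    final List<Integer> emitted = new ArrayList<>(); // "window function" output: element count at fire time

    void onElement(int value, Result result) {
        contents.add(value);
        if (result.fire) {
            emitted.add(contents.size()); // evaluate the window over everything it currently holds
        }
        if (result.purge) {
            contents.clear();             // discard the window contents
        }
    }
}
```

The early FIRE in the custom trigger further down therefore does not lose data: the regular firing at the window end still sees every record.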

EventTimeTrigger

Event-time trigger (Flink source, with comments added):

```java
@PublicEvolving
public class EventTimeTrigger extends Trigger<Object, TimeWindow> {

    private static final long serialVersionUID = 1L;

    private EventTimeTrigger() {}

    /** Called for every element that is added to the window. */
    @Override
    public TriggerResult onElement(
            Object element, long timestamp, TimeWindow window, TriggerContext ctx)
            throws Exception {
        // the window's max timestamp is already <= the current watermark (a late element
        // within allowedLateness): fire the window right away
        if (window.maxTimestamp() <= ctx.getCurrentWatermark()) {
            // if the watermark is already past the window fire immediately
            return TriggerResult.FIRE;
        } else { // otherwise register a timer for the window end and keep waiting
            ctx.registerEventTimeTimer(window.maxTimestamp());
            return TriggerResult.CONTINUE;
        }
    }

    // called when an event-time timer fires as the watermark advances: fire if it is this window's end timer
    @Override
    public TriggerResult onEventTime(long time, TimeWindow window, TriggerContext ctx) {
        return time == window.maxTimestamp() ? TriggerResult.FIRE : TriggerResult.CONTINUE;
    }

    // this trigger handles event time only; processing-time timers are ignored
    @Override
    public TriggerResult onProcessingTime(long time, TimeWindow window, TriggerContext ctx)
            throws Exception {
        return TriggerResult.CONTINUE;
    }

    @Override
    public void clear(TimeWindow window, TriggerContext ctx) throws Exception {
        ctx.deleteEventTimeTimer(window.maxTimestamp());
    }

    @Override
    public boolean canMerge() {
        return true;
    }

    @Override
    public void onMerge(TimeWindow window, OnMergeContext ctx) {
        // only register a timer if the watermark is not yet past the end of the merged window
        // this is in line with the logic in onElement(). If the watermark is past the end of
        // the window onElement() will fire and setting a timer here would fire the window twice.
        long windowMaxTimestamp = window.maxTimestamp();
        if (windowMaxTimestamp > ctx.getCurrentWatermark()) {
            ctx.registerEventTimeTimer(windowMaxTimestamp);
        }
    }

    @Override
    public String toString() {
        return "EventTimeTrigger()";
    }

    /**
     * Creates an event-time trigger that fires once the watermark passes the end of the window.
     *
     * Once the trigger fires all elements are discarded. Elements that arrive late immediately
     * trigger window evaluation with just this one element.
     */
    public static EventTimeTrigger create() {
        return new EventTimeTrigger();
    }
}
```

CountTrigger

Count trigger (Flink source, with comments added):

```java
public class CountTrigger<W extends Window> extends Trigger<Object, W> {

    private static final long serialVersionUID = 1L;

    private final long maxCount;

    private final ReducingStateDescriptor<Long> stateDesc =
            new ReducingStateDescriptor<>("count", new Sum(), LongSerializer.INSTANCE);

    private CountTrigger(long maxCount) {
        this.maxCount = maxCount;
    }

    // fire once the element count reaches maxCount, then reset the count
    @Override
    public TriggerResult onElement(Object element, long timestamp, W window, TriggerContext ctx)
            throws Exception {
        ReducingState<Long> count = ctx.getPartitionedState(stateDesc);
        count.add(1L);
        if (count.get() >= maxCount) {
            count.clear();
            return TriggerResult.FIRE;
        }
        return TriggerResult.CONTINUE;
    }

    // no event-time logic
    @Override
    public TriggerResult onEventTime(long time, W window, TriggerContext ctx) {
        return TriggerResult.CONTINUE;
    }

    // no processing-time logic
    @Override
    public TriggerResult onProcessingTime(long time, W window, TriggerContext ctx)
            throws Exception {
        return TriggerResult.CONTINUE;
    }

    @Override
    public void clear(W window, TriggerContext ctx) throws Exception {
        ctx.getPartitionedState(stateDesc).clear();
    }

    @Override
    public boolean canMerge() {
        return true;
    }

    @Override
    public void onMerge(W window, OnMergeContext ctx) throws Exception {
        ctx.mergePartitionedState(stateDesc);
    }

    @Override
    public String toString() {
        return "CountTrigger(" + maxCount + ")";
    }

    /**
     * Creates a trigger that fires once the number of elements in a pane reaches the given count.
     *
     * @param maxCount The count of elements at which to fire.
     * @param <W> The type of {@link Window Windows} on which this trigger can operate.
     */
    public static <W extends Window> CountTrigger<W> of(long maxCount) {
        return new CountTrigger<>(maxCount);
    }

    private static class Sum implements ReduceFunction<Long> {
        private static final long serialVersionUID = 1L;

        @Override
        public Long reduce(Long value1, Long value2) throws Exception {
            return value1 + value2;
        }
    }
}
```

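Building on the example above, here is a custom trigger that fires the window computation immediately when a particular value appears in the stream, instead of waiting for the window to end: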

```java
import org.apache.flink.streaming.api.windowing.triggers.Trigger;
import org.apache.flink.streaming.api.windowing.triggers.TriggerResult;
import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

class IEventTimeTrigger extends Trigger<EventLog, TimeWindow> {

    private static final long serialVersionUID = 1L;

    /**
     * Fires immediately if the watermark is already past the window end; otherwise registers a
     * timer for the window end and additionally fires early when a record with guid 1111111 arrives.
     */
    @Override
    public TriggerResult onElement(
            EventLog element, long timestamp, TimeWindow window, TriggerContext ctx)
            throws Exception {
        if (window.maxTimestamp() <= ctx.getCurrentWatermark()) {
            // if the watermark is already past the window fire immediately
            return TriggerResult.FIRE;
        } else {
            ctx.registerEventTimeTimer(window.maxTimestamp());
            // guid 1111111: fire the window computation early
            // (guid is a Long, so compare with a long literal; equals(1111111) would always be false)
            if (Long.valueOf(1111111L).equals(element.getGuid())) {
                return TriggerResult.FIRE;
            }
            return TriggerResult.CONTINUE;
        }
    }

    @Override
    public TriggerResult onEventTime(long time, TimeWindow window, TriggerContext ctx) {
        return time == window.maxTimestamp() ? TriggerResult.FIRE : TriggerResult.CONTINUE;
    }

    @Override
    public TriggerResult onProcessingTime(long time, TimeWindow window, TriggerContext ctx)
            throws Exception {
        return TriggerResult.CONTINUE;
    }

    @Override
    public void clear(TimeWindow window, TriggerContext ctx) throws Exception {
        ctx.deleteEventTimeTimer(window.maxTimestamp());
    }

    @Override
    public boolean canMerge() {
        return true;
    }

    @Override
    public void onMerge(TimeWindow window, OnMergeContext ctx) {
        long windowMaxTimestamp = window.maxTimestamp();
        if (windowMaxTimestamp > ctx.getCurrentWatermark()) {
            ctx.registerEventTimeTimer(windowMaxTimestamp);
        }
    }

    @Override
    public String toString() {
        return "IEventTimeTrigger()";
    }
}
```
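The guid check in a trigger like this is easy to get wrong because of boxing. This tiny plain-Java check (class name hypothetical) shows that Long.equals only matches another Long, so comparing a Long guid with an int literal never succeeds; the same pitfall applies to the 222222 check in the evictor below.

```java
// Plain-Java check: Long.equals only matches another Long, so an int literal never compares equal.
final class BoxedEqualsPitfall {

    static boolean isMarkerWrong(Long guid) {
        return guid.equals(1111111);                // 1111111 is boxed to Integer: always false
    }

    static boolean isMarkerFixed(Long guid) {
        return Long.valueOf(1111111L).equals(guid); // Long vs Long; also safe when guid is null
    }

    public static void main(String[] args) {
        Long guid = 1111111L;
        System.out.println(isMarkerWrong(guid) + " " + isMarkerFixed(guid));
    }
}
```

Running main prints "false true".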

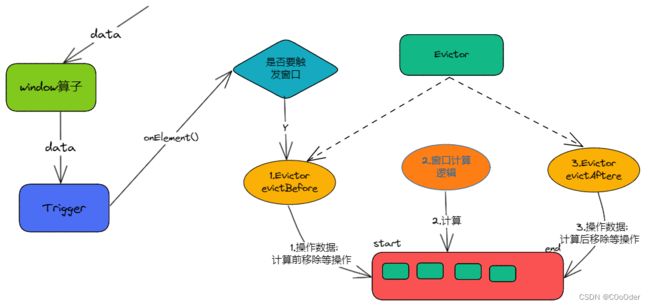

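3.5.2 Evictor: removing data from a window

Removes elements from a window before and/or after the window function runs. Evictor and its implementations:

Evictor (org.apache.flink.streaming.api.windowing.evictors)

CountEvictor (org.apache.flink.streaming.api.windowing.evictors) // keeps at most a given number of elements

DeltaEvictor (org.apache.flink.streaming.api.windowing.evictors) // evicts elements whose delta to the last element, computed by a DeltaFunction, reaches a threshold

TimeEvictor (org.apache.flink.streaming.api.windowing.evictors) // keeps only elements within a given time span of the newest element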
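The time- and count-based strategies can be sketched in plain Java on a buffer of timestamps (hypothetical names; Flink's evictors work on TimestampedValue elements and also skip elements without a timestamp). Note that the time cutoff is relative to the newest element in the buffer, not to the window end: this is the getMaxTimestamp(elements) call visible in ITimeEvictor below.

```java
import java.util.Iterator;
import java.util.List;

// Plain-Java sketch (not Flink code) of TimeEvictor- and CountEvictor-style eviction on a window buffer.
final class EvictorModel {

    // Time-based: keep only elements within windowSize ms of the NEWEST timestamp in the buffer.
    static void evictByTime(List<Long> timestamps, long windowSize) {
        if (timestamps.isEmpty()) {
            return;
        }
        long max = Long.MIN_VALUE;
        for (long t : timestamps) {
            max = Math.max(max, t);
        }
        long cutoff = max - windowSize;
        for (Iterator<Long> it = timestamps.iterator(); it.hasNext(); ) {
            if (it.next() <= cutoff) {
                it.remove();
            }
        }
    }

    // Count-based: keep at most maxCount elements, evicting from the beginning of the buffer.
    static void evictByCount(List<Long> timestamps, int maxCount) {
        int toEvict = timestamps.size() - maxCount;
        for (Iterator<Long> it = timestamps.iterator(); it.hasNext() && toEvict > 0; toEvict--) {
            it.next();
            it.remove();
        }
    }
}
```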

```java
public interface Evictor<T, W extends Window> extends Serializable {

    // called before the window function
    void evictBefore(
            Iterable<TimestampedValue<T>> elements,
            int size,
            W window,
            EvictorContext evictorContext);

    // called after the window function
    void evictAfter(
            Iterable<TimestampedValue<T>> elements,
            int size,
            W window,
            EvictorContext evictorContext);

    // ...............
}
```

A custom ITimeEvictor; only the change relative to TimeEvictor is shown:

```java
class ITimeEvictor<W extends Window> implements Evictor<EventLog, W> {

    // ...................... (unchanged from TimeEvictor)

    private void evict(Iterable<TimestampedValue<EventLog>> elements, int size, EvictorContext ctx) {
        if (!hasTimestamp(elements)) {
            return;
        }
        long currentTime = getMaxTimestamp(elements);
        long evictCutoff = currentTime - windowSize;
        for (Iterator<TimestampedValue<EventLog>> iterator = elements.iterator(); iterator.hasNext(); ) {
            TimestampedValue<EventLog> record = iterator.next();
            // original TimeEvictor condition: if (record.getTimestamp() <= evictCutoff)
            // added condition: also evict records whose guid is 222222
            // (guid is a Long, so compare with a long literal)
            if (record.getTimestamp() <= evictCutoff
                    || Long.valueOf(222222L).equals(record.getValue().getGuid())) {
                iterator.remove();
            }
        }
    }

    // .................................
}
```

3.5.3 Usage example

```java
keyedStream.window(TumblingEventTimeWindows.of(Time.seconds(30)))
        .trigger(new IEventTimeTrigger())                  // fire early when a record's guid is 1111111
        .evictor(ITimeEvictor.of(Time.seconds(30), false)) // doEvictAfter = false: evict before the computation, removing guid 222222
        .apply(.....)
```

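3.5.4 Call order

- When an element arrives in a window, the Trigger's onElement() method is called.

- The return value of onElement() decides whether the window computation fires.

- If it fires, the Evictor removes selected elements: evictBefore() runs before the window function and evictAfter() runs after it.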
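The order above can be traced with a simplified plain-Java model (hypothetical names, not Flink's WindowOperator, and covering only the onElement path, not timer-driven firing). The evictBefore predicate drops the 222222 marker like ITimeEvictor does, and the log records each step.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Plain-Java model (not Flink's WindowOperator) of what happens when an element reaches a window
// that has both a trigger and an evictor.
final class WindowCallOrder {
    final List<Integer> buffer = new ArrayList<>();
    final List<String> log = new ArrayList<>();

    void onElement(int value, boolean triggerFires, Predicate<Integer> evictBefore, Predicate<Integer> evictAfter) {
        buffer.add(value);
        log.add("onElement(" + value + ")");   // 1. Trigger.onElement() is called
        if (!triggerFires) {
            return;                            // 2. CONTINUE: nothing is computed
        }
        buffer.removeIf(evictBefore);          // 3a. Evictor.evictBefore()
        log.add("evictBefore");
        int sum = 0;
        for (int v : buffer) {
            sum += v;                          // 3b. window function over the remaining elements
        }
        log.add("apply -> " + sum);
        buffer.removeIf(evictAfter);           // 3c. Evictor.evictAfter()
        log.add("evictAfter");
    }

    public static void main(String[] args) {
        WindowCallOrder w = new WindowCallOrder();
        Predicate<Integer> dropMarker = v -> v == 222222; // like ITimeEvictor's guid check
        Predicate<Integer> keepAll = v -> false;
        w.onElement(1, false, dropMarker, keepAll);
        w.onElement(222222, false, dropMarker, keepAll);
        w.onElement(3, true, dropMarker, keepAll);
        System.out.println(w.log);
    }
}
```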