Deploying a Kubernetes v1.22.1 Cluster


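Host environment

The Kubernetes cluster in this example is deployed on the following environment.

OS: CentOS 7.9

Kubernetes: v1.22.1

Container Runtime: Docker CE 20.10.8

Each machine has 2 GB of RAM and 2 CPU cores.

Hostname IP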

master 192.168.1.21

node1 192.168.1.22

node2 192.168.1.23

I. Basic configuration

1: Set the hostname on each of the three hosts (run the matching command on each one)

hostnamectl set-hostname master

hostnamectl set-hostname node1

hostnamectl set-hostname node2

2: Configure host name mappings

cat <<EOF > /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.21 master

192.168.1.22 node1

192.168.1.23 node2

EOF
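Overwriting /etc/hosts wholesale is fine on a fresh machine. On a host that already carries custom entries, a gentler option is to append only the mappings that are missing. The following is a minimal sketch of that idea; add_host_entry is a hypothetical helper name, not part of any tool, and it assumes plain host names without regex metacharacters.

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless NAME is already mapped on a
# non-comment line, so running it twice does not duplicate entries.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # Match NAME as a whole word (followed by whitespace or end of line).
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

On each host it could be called once per node, for example add_host_entry /etc/hosts 192.168.1.21 master, and likewise for node1 and node2.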

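3: On all three hosts, stop the firewall, disable swap, disable SELinux, raise the resource limits, and configure time synchronization (a reboot is recommended once everything is configured)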

systemctl stop firewalld

systemctl disable firewalld

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab

setenforce 0

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config

echo "* soft nofile 65536" >> /etc/security/limits.conf

echo "* hard nofile 65536" >> /etc/security/limits.conf

echo "* soft nproc 65536" >> /etc/security/limits.conf

echo "* hard nproc 65536" >> /etc/security/limits.conf

echo "* soft memlock unlimited" >> /etc/security/limits.conf

echo "* hard memlock unlimited" >> /etc/security/limits.conf
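Since kubeadm refuses to start with swap enabled, it can be worth confirming that the settings above actually took effect, especially after the recommended reboot. The helpers below are a rough sketch for that; the function names are made up for illustration, and each one takes the file to inspect as an argument so it can also be tried against a copy.

```shell
# active_swap_count [FILE]: number of active swap devices in a
# /proc/swaps-style file (the first line is a header).
active_swap_count() {
    local file=${1:-/proc/swaps}
    awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$file"
}

# uncommented_swap_entries [FILE]: fstab lines whose filesystem type
# (third field) is "swap" and that are not commented out.
uncommented_swap_entries() {
    local file=${1:-/etc/fstab}
    awk '$1 !~ /^#/ && $3 == "swap" { n++ } END { print n + 0 }' "$file"
}

# selinux_config_mode [FILE]: the SELINUX= value from the config file.
selinux_config_mode() {
    local file=${1:-/etc/selinux/config}
    sed -n 's/^SELINUX=\([a-z]*\).*/\1/p' "$file" | tail -n 1
}
```

With no arguments the functions read the real system files; on a correctly prepared host the two counters should print 0 and selinux_config_mode should print disabled.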

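Server side (master)

1) Install the time synchronization service on the control node

yum install chrony -y

2) Edit the configuration file and make sure it contains the following settings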

vim /etc/chrony.conf

--------------------------------

server ntp1.aliyun.com iburst

server ntp2.aliyun.com iburst

server 192.168.1.21 iburst

allow 192.168.1.0/24

--------------------------------

3) Restart the chronyd service and enable it at boot

systemctl restart chronyd.service

systemctl status chronyd.service

systemctl enable chronyd.service

systemctl list-unit-files |grep chronyd.service

4) Set the time zone and check that time is synchronized

timedatectl set-timezone Asia/Shanghai

chronyc sources

timedatectl status

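Client side (node1 and node2)

1) Install the package

yum install chrony -y

2) Edit the configuration file and make sure it contains the following settings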

vim /etc/chrony.conf

-------------------------------------

# Point the client at the control node

server 192.168.1.21 iburst

-------------------------------------

3) Restart the chronyd service and enable it at boot

systemctl restart chronyd.service

systemctl status chronyd.service

systemctl enable chronyd.service

systemctl list-unit-files |grep chronyd.service

4) Set the time zone and run the first synchronization check

timedatectl set-timezone Asia/Shanghai

chronyc sources

timedatectl status
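In the chronyc sources listing, the source that chronyd is currently synchronized to is marked with ^* at the start of its line. A small sketch of how that check could be scripted follows; has_selected_source and wait_for_sync are hypothetical helper names, and the retry count and 5-second pause are arbitrary choices.

```shell
# has_selected_source: reads `chronyc sources` output on stdin and
# succeeds if some line starts with "^*" (the selected source).
has_selected_source() {
    grep -q '^\^\*'
}

# wait_for_sync [TRIES]: poll chronyc until a source is selected,
# pausing 5 seconds between attempts; fails after TRIES attempts.
wait_for_sync() {
    local tries=${1:-10} i=0
    while [ "$i" -lt "$tries" ]; do
        chronyc sources | has_selected_source && return 0
        i=$((i + 1))
        sleep 5
    done
    return 1
}
```

Running wait_for_sync on a node right after restarting chronyd gives a simple pass or fail result instead of reading the table by eye.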

II. Install Docker

1. Common packages

yum install vim bash-completion net-tools gcc -y

2. Install docker-ce from the Aliyun repository

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum -y install docker-ce

systemctl enable docker.service

systemctl start docker.service

3. Add the Aliyun Docker registry mirror

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": [

"https://fl791z1h.mirror.aliyuncs.com"

],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

systemctl daemon-reload

systemctl restart docker.service
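The exec-opts line matters because kubeadm v1.22 configures the kubelet with the systemd cgroup driver by default, and Docker has to use the same one. As a hedged sketch, the configured value can be read back from daemon.json like this; configured_cgroup_driver is an illustrative name, and the function relies on simple text matching rather than real JSON parsing.

```shell
# configured_cgroup_driver [FILE]: print the value of the
# native.cgroupdriver exec-opt found in a Docker daemon.json.
configured_cgroup_driver() {
    local file=${1:-/etc/docker/daemon.json}
    grep -o 'native\.cgroupdriver=[a-z]*' "$file" | head -n 1 | cut -d= -f2
}
```

The driver the running daemon actually uses can be compared against it with docker info --format '{{.CgroupDriver}}'; both should report systemd.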

III. Install kubectl, kubelet, and kubeadm

1. Add the Aliyun Kubernetes repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

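2. Install (pinned to 1.22.1 so the packages match the cluster version used in this guide)

yum install -y kubectl-1.22.1 kubelet-1.22.1 kubeadm-1.22.1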

systemctl enable kubelet

IV. Initialize the Kubernetes cluster

1. Initialize the master node (run the following on master)

[root@master ~]# kubeadm config images list

k8s.gcr.io/kube-apiserver:v1.22.1

k8s.gcr.io/kube-controller-manager:v1.22.1

k8s.gcr.io/kube-scheduler:v1.22.1

k8s.gcr.io/kube-proxy:v1.22.1

k8s.gcr.io/pause:3.5

k8s.gcr.io/etcd:3.5.0-0

k8s.gcr.io/coredns/coredns:v1.8.4

The command lists the images that have to be pulled.

I pulled them ahead of time from the Aliyun mirror:

docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.1

docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.22.1

docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.22.1

docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.22.1

docker pull registry.aliyuncs.com/google_containers/pause:3.5

docker pull registry.aliyuncs.com/google_containers/etcd:3.5.0-0

docker pull registry.aliyuncs.com/google_containers/coredns:1.8.4

The coredns image has to be retagged with the v prefix that kubeadm expects, otherwise it will not start properly:

docker tag registry.aliyuncs.com/google_containers/coredns:1.8.4 registry.aliyuncs.com/google_containers/coredns:v1.8.4
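The pull and retag steps can also be derived from the kubeadm image list instead of being typed out one by one. The sketch below is one way to do that, under the assumption stated in this guide that the mirror publishes CoreDNS without the leading v in its tag; MIRROR, mirror_target, mirror_source, and pull_all are names chosen for this illustration.

```shell
MIRROR=registry.aliyuncs.com/google_containers

# mirror_target IMAGE: the name kubeadm looks for when
# --image-repository points at $MIRROR (registry and sub-path dropped).
mirror_target() {
    printf '%s/%s\n' "$MIRROR" "${1##*/}"
}

# mirror_source IMAGE: the name to pull from the mirror.
# Assumption: the mirror tags CoreDNS as 1.8.4 rather than v1.8.4.
mirror_source() {
    local name=${1##*/}
    case $name in
        coredns:v*) name="coredns:${name#coredns:v}" ;;
    esac
    printf '%s/%s\n' "$MIRROR" "$name"
}

# pull_all: pull every image kubeadm lists, retagging when the
# pulled name differs from the name kubeadm expects.
pull_all() {
    local image src dst
    kubeadm config images list --kubernetes-version=1.22.1 |
    while read -r image; do
        src=$(mirror_source "$image")
        dst=$(mirror_target "$image")
        docker pull "$src"
        [ "$src" = "$dst" ] || docker tag "$src" "$dst"
    done
}
```

Calling pull_all on master, or on a node that cannot reach k8s.gcr.io, would reproduce the manual commands above.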

Once the images have been pulled, the cluster can be initialized:

kubeadm init --kubernetes-version=1.22.1 \

--apiserver-advertise-address=192.168.1.21 \

--image-repository registry.aliyuncs.com/google_containers \

--service-cidr=10.10.0.0/16 --pod-network-cidr=10.122.0.0/16

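When initialization finishes, it prints a join command like this one:

kubeadm join 192.168.1.21:6443 --token v2r5a4.veazy2xhzetpktfz \

--discovery-token-ca-cert-hash sha256:daded8514c8350f7c238204979039ff9884d5b595ca950ba8bbce80724fd65d4

Run it on node1 and node2 (if the images cannot be pulled there, pre-pull them the same way as above).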
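The token in the join command expires (after 24 hours by default); running kubeadm token create --print-join-command on master prints a fresh command. Before pasting a join command onto a node, its two credentials can be sanity-checked for copy and paste damage. This is only a format check under the documented token and hash shapes, and the function names are hypothetical.

```shell
# valid_bootstrap_token TOKEN: bootstrap tokens look like
# <6 chars>.<16 chars>, using lowercase letters and digits only.
valid_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# valid_ca_cert_hash HASH: the discovery hash is "sha256:" followed
# by 64 lowercase hexadecimal digits.
valid_ca_cert_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}
```

A failed check usually means a character was lost or replaced in transit, such as a double hyphen turned into a dash by a text editor.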

Back on master, set up kubectl access for the current user:

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run the following command to enable kubectl auto-completion:

[root@master ~]# source <(kubectl completion bash)

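V. Install a network plugin (pick whichever you prefer)

I use flannel here. Note that the stock kube-flannel.yml sets Network to 10.244.0.0/16 in its net-conf.json; because this cluster was initialized with --pod-network-cidr=10.122.0.0/16, that value has to be changed to match before the manifest is applied.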

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
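The flannel manifest ships with a pod network of 10.244.0.0/16 in its net-conf.json, while this cluster was initialized with 10.122.0.0/16, so applying the file unmodified leaves the two out of step. One possible way to align them is to download the manifest, rewrite the Network value, and apply the local copy. The helper below is a sketch of the rewrite step only; set_flannel_network is a hypothetical name, and it assumes GNU sed and the "Network": "..." layout used by the upstream manifest.

```shell
# set_flannel_network MANIFEST CIDR: replace the "Network" value in
# the manifest's net-conf.json section with CIDR, editing in place.
set_flannel_network() {
    local manifest=$1 cidr=$2
    sed -i "s#\"Network\": \"[0-9./]*\"#\"Network\": \"${cidr}\"#" "$manifest"
}
```

After saving the manifest locally as kube-flannel.yml, the sequence would be set_flannel_network kube-flannel.yml 10.122.0.0/16 followed by kubectl apply -f kube-flannel.yml.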

Calico is another option:

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

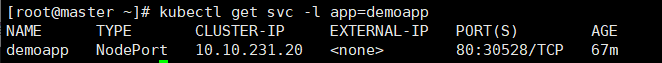

VI. A quick test

kubectl create deployment demoapp --image=ikubernetes/demoapp:v1.0

kubectl scale deployment/demoapp --replicas=3

kubectl create service nodeport demoapp --tcp=80:80

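demoapp is a web application, so it can be reached from outside the cluster at "http://NodeIP:NodePort". The NodePort is allocated automatically when the service is created (30528 in this example; kubectl get service demoapp shows the actual value), so from a browser outside the cluster the URL is, for example, "http://192.168.1.22:30528".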
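Because the port differs from cluster to cluster, the test URL is better built from the live service than copied from this page. A small sketch, assuming the default NodePort range of 30000-32767; nodeport_url is an illustrative helper name.

```shell
# nodeport_url NODE_IP PORT: print the external URL for a NodePort
# service, rejecting ports outside the default range 30000-32767.
nodeport_url() {
    local ip=$1 port=$2
    if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
        echo "port $port is outside the default NodePort range" >&2
        return 1
    fi
    printf 'http://%s:%s\n' "$ip" "$port"
}
```

The port itself can be read with kubectl get service demoapp -o jsonpath='{.spec.ports[0].nodePort}' and passed to nodeport_url together with any node IP, for instance 192.168.1.22, and the resulting URL handed to curl.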