Druid原理浅析

1、流程解析

根据源码包下的

com.alibaba.druid.pool.mysql.MySqlTest作为探析入口

new DruidDataSource()、dataSource.getConnection()、connection.close()

public class MySqlTest extends TestCase {

private DruidDataSource dataSource;

protected void setUp() throws Exception {

dataSource = new DruidDataSource();

dataSource.setUrl("jdbc:mysql://10.1.74.210:3306/db1?allowMultiQueries=true");

dataSource.setUsername("root");

dataSource.setPassword("abc123");

dataSource.setFilters("log4j");

dataSource.setValidationQuery("SELECT 1");

dataSource.setTestOnBorrow(true);

dataSource.setTestWhileIdle(true);

dataSource.setMaxActive(10);

dataSource.setInitialSize(5);

dataSource.setMinIdle(5);

dataSource.setMaxWait(3000);

....

}

protected void tearDown() throws Exception {

dataSource.close();

}

public void test_mysql() throws Exception {

Connection connection = dataSource.getConnection();

...

Statement stmt = connection.createStatement();

stmt.execute("select 1;select 1");

stmt.close();

connection.close();

}

}

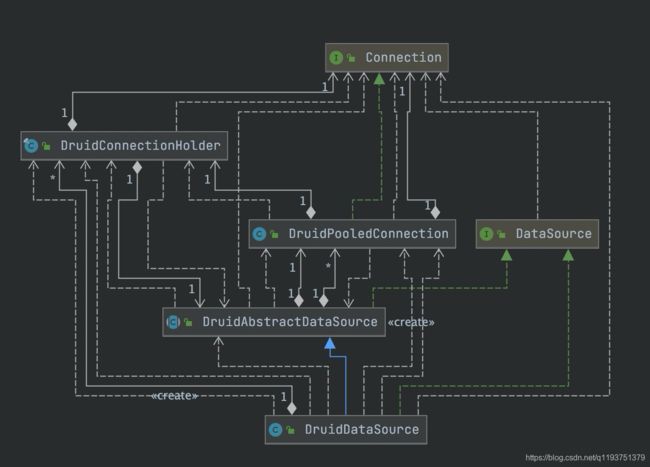

2、初始化

DruidConnectionHolder封装了一个Connection的基本信息,连接时长、隔离级别、是否只读、自动提交、局部变量、全局变量等等

DruidDataSource连接池的内部的维护代表,关于连接池的核心操作都在这个类中进行操作

2.1 DataSource初始化

关于公平简而言之,就是多个请求从连接池获取对象时,是否要按照先来后到去获取连接,false的性能较高

new DruidDataSource()总体来说就是把完成配置属性的初始化

//DruidDataSource

public DruidDataSource(){

this(false);

}

public DruidDataSource(boolean fairLock){

super(fairLock);//设置重入锁的公平性

//根据配置文件的kv属性对初始化DruidDataSource属性

configFromPropety(System.getProperties());

}

//DruidAbstractDataSource

public DruidAbstractDataSource(boolean lockFair){

lock = new ReentrantLock(lockFair);//创建非公平锁

notEmpty = lock.newCondition();//消费者等待队列(唤醒后就从池子connections中获取数据库连接)

empty = lock.newCondition();//生产者等待队列(唤醒后就去创建物理数据库连接)

}

2.1 连接池初始化

2.1.1 前置工作

- 设置

DataSourceId

this.id = DruidDriver.createDataSourceId();

- 过滤器初始化

for (Filter filter : filters) {

filter.init(this);

}

//实现类加载

initFromSPIServiceLoader();

- 数据库类型设置(mysql、mariadb、oceanbase、ads)下

cacheServerConfiguration设置为false

if (this.dbTypeName == null || this.dbTypeName.length() == 0) {

this.dbTypeName = JdbcUtils.getDbType(jdbcUrl, null);

}

DbType dbType = DbType.of(this.dbTypeName);

- 驱动加载

resolveDriver();//直接写jdbcUrl就可以,不用再设置驱动加载实现在这里

- 初始化queryCheck的检测方式(哪种数据库的实现),检测

testOnBorrow\testOnReturn\testWhileIdle参数下validationQuery是否为null

initExceptionSorter();

initValidConnectionChecker();//检测方式的具体实现类,策略模式

validationQueryCheck();//检测validationQuery的sql语句是否为空

2.2.2 核心初始化

connections = new DruidConnectionHolder[maxActive];//数据库连接池容器

evictConnections = new DruidConnectionHolder[maxActive];//存储将要释放的连接

keepAliveConnections = new DruidConnectionHolder[maxActive];//keepalive参数开启后存活检测的容器

初始化有两种方式

- 同步:默认同步去创建

initialSize放入connections中 - 异步:需要设置

createScheduler并设置asyncInit为true

if (createScheduler != null && asyncInit) {//异步初始化

for (int i = 0; i < initialSize; ++i) {

submitCreateTask(true);

}

} else if (!asyncInit) {//同步化初始化

// 配置了属性文件的化,此处会做初始化连接 poolingCount和初始化连接进行比较

while (poolingCount < initialSize) {

try {

//真正的创建物理连接

PhysicalConnectionInfo pyConnectInfo = createPhysicalConnection();

DruidConnectionHolder holder = new DruidConnectionHolder(this, pyConnectInfo);

//添加到连接池当中

connections[poolingCount++] = holder;

} catch (SQLException ex) {

LOG.error("init datasource error, url: " + this.getUrl(), ex);

if (initExceptionThrow) {

connectError = ex;

break;

} else {

Thread.sleep(3000);

}

}

}

2.2.3 日志/生产-创建

//创建日志记录线程,timeBetweenLogStatsMillis控制sleep时间,主要是打印druid的连接池状态信息

createAndLogThread();

createAndStartCreatorThread();//初始化创建连接线程(生产者生产连接)

createAndStartDestroyThread();//初始化销毁线程,间隔1s执行一次

//阻塞等待上述2个线程任务完成

initedLatch.await();

init = true;//初始化传教完成

3、获取连接

dataSource.getConnection()

//getConnectionDirect(long maxWaitMillis)

for (;;) {

//从连接池中获取连接

poolableConnection = getConnectionInternal(maxWaitMillis);

}

3.1 是否等待获取

//getConnectionInternal(long maxWait)

connectCount++;

if (maxWait > 0) {

//有时间的去获取连接

holder = pollLast(nanos);

} else {

holder = takeLast();

}

if (holder != null) {

if (holder.discard) {

continue;

}

//在池子外的活跃连接数+1

activeCount++;

holder.active = true;

if (activeCount > activePeak) {//判断内存泄漏

activePeak = activeCount;

activePeakTime = System.currentTimeMillis();

}

}

//表明这个有效连接被多少个人用过usecount+1

holder.incrementUseCount();

//封装到DruidPooledConnection这个对象中去

DruidPooledConnection poolalbeConnection = new DruidPooledConnection(holder);

return poolalbeConnection;

4、释放连接

connection.close()

主要在recycle()中进行归还数据库连接,最终是DruidDataSource进行归还

//DruidPooledConnection.close()

DruidConnectionHolder holder = this.holder;

DruidAbstractDataSource dataSource = holder.getDataSource();

try {//连接的监听事件关联

for (ConnectionEventListener listener : holder.getConnectionEventListeners()) {

listener.connectionClosed(new ConnectionEvent(this));

}

List<Filter> filters = dataSource.getProxyFilters();

if (filters.size() > 0) {//过滤事件

FilterChainImpl filterChain = new FilterChainImpl(dataSource);

filterChain.dataSource_recycle(this);

} else {

recycle();

}

} finally {

CLOSING_UPDATER.set(this, 0);

}

this.disable = true;

DruidConnectionHolder holder = this.holder;

if (!this.abandoned) {

DruidAbstractDataSource dataSource = holder.getDataSource();

dataSource.recycle(this);

}

4.1 真正归还

//每个连接大于phyMaxUseCount的话,将彻底释放该连接

if (phyMaxUseCount > 0 && holder.useCount >= phyMaxUseCount) {

discardConnection(holder);

return;

}

if (testOnReturn) {

//归还时的检测逻辑在此处生效

//此处检测失败的话会彻底释放

//lastPacketReceivedTimeMs > 0 && mysqlIdleMillis >= timeBetweenEvictionRunsMillis

//validConnectionChecker.isValidConnection(conn, validationQuery, validationQueryTimeout)

...

}

if (phyTimeoutMillis > 0) {

//物理连接时长设置,超过之后释放

//phyConnectTimeMillis > phyTimeoutMillis

}

lock.lock();//完成初始化后,放入到connnections中去

try {

if (holder.active) {

activeCount--;

holder.active = false;

}

closeCount++;

//放入connections末尾,并将活跃时间进行更新

result = putLast(holder, currentTimeMillis);

recycleCount++;

} finally {

lock.unlock();

}

5 生产/消费者

5.1 生产者

empt.await -> 生产者阻塞

empt.signal -> 生产者被唤醒

对于以上代码生效的前置条件需要关注(文本未能列举全部)

//DruidDataSource#createAndStartCreatorThread()

protected void createAndStartCreatorThread() {

if (createScheduler == null) {

String threadName = "Druid-ConnectionPool-Create-" + System.identityHashCode(this);

createConnectionThread = new CreateConnectionThread(threadName);

createConnectionThread.start();//创建连接的线程启动

return;

}

initedLatch.countDown();

}

5.1.1 核心逻辑

//DruidDataSource$CreateConnectionThread#run()

for(;;)

if (emptyWait) {

// 必须存在线程等待,才创建连接

// 在初始连接被用完的情况和大于最小连接数的时候,会被唤醒,去创建新的连接

if (poolingCount >= notEmptyWaitThreadCount //

&& (!(keepAlive && activeCount + poolingCount < minIdle))

&& !isFailContinuous()

) {//这里生产者将会自己阻塞

empty.await();

}

// 防止创建超过maxActive数量的连接,继续阻塞

if (activeCount + poolingCount >= maxActive) {

empty.await();

continue;

}

}

PhysicalConnectionInfo connection = null;

try {//唤醒后就执行下边的物理创建

connection = createPhysicalConnection();

}catch{

//创建失败后的一些确保机制

...

}

if (connection == null) {

continue;

}

//将创建好的连接放入connections,注意不能大于maxActive数量,否则返回失败

boolean result = put(connection);

}

//DruidDataSource#put

if (poolingCount >= maxActive) {//创建的线程不能大于maxActive

if (createScheduler != null) {

clearCreateTask(createTaskId);

}

return false;

}

connections[poolingCount] = holder;

incrementPoolingCount();

//唤醒消费者

notEmpty.signal();//此处唤醒消费线程pollLast#中的notEmpty.await

notEmptySignalCount++;//唤醒的次数

5.2 消费者

notEmpty.await -> 消费者等待

notEmpty.signal -> 消费者唤醒

对于以上代码生效的前置条件需要关注(文本未能列举全部)

//DruidDataSource#pollLast

for (;;) {

if (poolingCount == 0) {

//当连接池中无可借连接时,唤醒创建线程去创建连接

emptySignal(); // send signal to CreateThread create connection

try {

long startEstimate = estimate;

//在次数等待生产者生产数据库连接

estimate = notEmpty.awaitNanos(estimate); // signal by recycle or creator

}

//从connections获取连接,并将槽位清理

decrementPoolingCount();

DruidConnectionHolder last = connections[poolingCount];

connections[poolingCount] = null;

}

5.2.1 异步增加连接

for (boolean createDirect = false;;) {

if (createDirect) {

//异步提交任务创建物理连接

}

if (createScheduler != null

&& poolingCount == 0

&& activeCount < maxActive

&& creatingCountUpdater.get(this) == 0

&& createScheduler instanceof ScheduledThreadPoolExecutor) {

ScheduledThreadPoolExecutor executor = (ScheduledThreadPoolExecutor) createScheduler;

if (executor.getQueue().size() > 0) {

createDirect = true;

continue;

}

}

6 空闲检测

空闲的检测主要由这个线程进行实现DestroyTask

protected void createAndStartDestroyThread() {

destroyTask = new DestroyTask();

if (destroyScheduler != null) {

//如果destroyScheduler不为空,用配置间隔去检测线程

long period = timeBetweenEvictionRunsMillis;

if (period <= 0) {

period = 1000;

}

destroySchedulerFuture = destroyScheduler.scheduleAtFixedRate(destroyTask, period, period,TimeUnit.MILLISECONDS);

initedLatch.countDown();

return;

}

String threadName = "Druid-ConnectionPool-Destroy-" + System.identityHashCode(this);

destroyConnectionThread = new DestroyConnectionThread(threadName);

destroyConnectionThread.start();

}

//DestroyConnectionThread#run

for (;;) {

// 从前面开始进行检测,前面的是最开始放入的

try {

if (timeBetweenEvictionRunsMillis > 0) {

Thread.sleep(timeBetweenEvictionRunsMillis);

} else {

Thread.sleep(1000); //

}

if (Thread.interrupted()) {

break;

}

//处理逻辑

destroyTask.run();

} catch (InterruptedException e) {

break;

}

}

//DestroyTask.run

@Override

public void run() {

shrink(true, keepAlive);

if (isRemoveAbandoned()) {

removeAbandoned();

}

}

6.1 核心逻辑

这块主要构建evictConnections和keepAliveConnections,重新构建connections,并对evictConnections进行物理释放,对keepAliveConnections进行有效性检测,最终维持线程池的数量在minIdle之类

6.2 构建检测容器

evictConnections

从connections头进行检测

//DruidDataSource#shrink

if (checkTime) {

if (phyTimeoutMillis > 0) {

long phyConnectTimeMillis = currentTimeMillis - connection.connectTimeMillis;

if (phyConnectTimeMillis > phyTimeoutMillis) {

//比较连接时间是否大于设置的phyTimeoutMillis时间,大于放入evictConnections数组中

evictConnections[evictCount++] = connection;

continue;

}

}

//最小存活时间

if (idleMillis >= minEvictableIdleTimeMillis) {//将其放入evictConnections

if (checkTime && i < checkCount) {

evictConnections[evictCount++] = connection;

continue;

//最大存活时间

} else if (idleMillis > maxEvictableIdleTimeMillis) {

evictConnections[evictCount++] = connection;

continue;

}

}

}else {//如果checkTime为false则直接放入evictConnections

if (i < checkCount) {

evictConnections[evictCount++] = connection;

} else {

break;

}

}

keepAliveConnections

if ((onFatalError || fatalErrorIncrement > 0) && (lastFatalErrorTimeMillis > connection.connectTimeMillis)) {

keepAliveConnections[keepAliveCount++] = connection;//将此放入到keepAliveConnections数组中

continue;

}

if (keepAlive && idleMillis >= keepAliveBetweenTimeMillis) {

keepAliveConnections[keepAliveCount++] = connection;

}

6.3 释放/检测

evictConnections释放

if (evictCount > 0) {

//evictConnections是后续将要释放掉的数量

for (int i = 0; i < evictCount; ++i) {

DruidConnectionHolder item = evictConnections[i];

Connection connection = item.getConnection();

//物理释放

JdbcUtils.close(connection);

destroyCountUpdater.incrementAndGet(this);

}

//填充

Arrays.fill(evictConnections, null);

}

keepAliveConnections

此处存在

notEmpty.signal

if (keepAliveCount > 0) {//存在的去检测连接的有效性

for (int i = keepAliveCount - 1; i >= 0; --i) {

DruidConnectionHolder holer = keepAliveConnections[i];

Connection connection = holer.getConnection();

holer.incrementKeepAliveCheckCount();

boolean validate = false;

try {

this.validateConnection(connection);

validate = true;

}

if (validate) {//更新连接的上次操作时间,又放入connections队尾

//此处存在唤醒消费者的操作

holer.lastKeepTimeMillis = System.currentTimeMillis();

boolean putOk = put(holer, 0L);//放入connections队列中

if (!putOk) {

discard = true;

}

}

}

}

7 日志实现

日志入口 ->

DruidDataSource#init{createAndLogThread}

String threadName = "Druid-ConnectionPool-Log-" + System.identityHashCode(this);

logStatsThread = new LogStatsThread(threadName);

logStatsThread.start();

7.1 日志配置

//LogStatsThread

public void run() {

try {

for (;;) {

try {

logStats();

} catch (Exception e) {

LOG.error("logStats error", e);

}

//timeBetweenLogStatsMillis这个参数控制着日志记录间隔多久记录一次

Thread.sleep(timeBetweenLogStatsMillis);

}

} catch (InterruptedException e) {

// skip

}

}

public void logStats() {

//此处为日志策略

final DruidDataSourceStatLogger statLogger = this.statLogger;

if (statLogger == null) {

return;

}

//日志记录的信息,主要包装在DruidDataSourceStatValue这个类中

DruidDataSourceStatValue statValue = getStatValueAndReset();

statLogger.log(statValue);

}

//具体的实现

protected DruidDataSourceStatLogger statLogger = new DruidDataSourceStatLoggerImpl();

public interface DruidDataSourceStatLogger {

void log(DruidDataSourceStatValue statValue);

/**

* @param properties

* @since 0.2.21

*/

void configFromProperties(Properties properties);

void setLogger(Log logger);

void setLoggerName(String loggerName);

}

@Override

public void log(DruidDataSourceStatValue statValue) {

if (!isLogEnable()) {

return;

}

Map<String, Object> map = new LinkedHashMap<String, Object>();

ArrayList<Map<String, Object>> sqlList = new ArrayList<Map<String, Object>>();

map.put("sqlList", sqlList);

String text = JSONUtils.toJSONString(map);

log(text);

}

//日志打印

public void log(String value) {

logger.info(value);

}

private static Log LOG = LogFactory.getLog(DruidDataSourceStatLoggerImpl.class);

private Log logger = LOG;

7.2 日志策略

private static Constructor logConstructor;

public static Log getLog(String loggerName) {

try {

return (Log) logConstructor.newInstance(loggerName);

}

}

static {

//druid.logType属性控制,此处为null

String logType= System.getProperty("druid.logType");

if(logType != null){

if(logType.equalsIgnoreCase("slf4j")){

tryImplementation("org.slf4j.Logger", "com.alibaba.druid.support.logging.SLF4JImpl");

}else if(logType.equalsIgnoreCase("log4j")){

tryImplementation("org.apache.log4j.Logger", "com.alibaba.druid.support.logging.Log4jImpl");

}else if(logType.equalsIgnoreCase("log4j2")){

tryImplementation("org.apache.logging.log4j.Logger", "com.alibaba.druid.support.logging.Log4j2Impl");

}else if(logType.equalsIgnoreCase("commonsLog")){

tryImplementation("org.apache.commons.logging.LogFactory",

"com.alibaba.druid.support.logging.JakartaCommonsLoggingImpl");

}else if(logType.equalsIgnoreCase("jdkLog")){

tryImplementation("java.util.logging.Logger", "com.alibaba.druid.support.logging.Jdk14LoggingImpl");

}

}

// 优先选择log4j,而非Apache Common Logging. 因为后者无法设置真实Log调用者的信息

tryImplementation("org.slf4j.Logger", "com.alibaba.druid.support.logging.SLF4JImpl");

tryImplementation("org.apache.log4j.Logger", "com.alibaba.druid.support.logging.Log4jImpl");

tryImplementation("org.apache.logging.log4j.Logger", "com.alibaba.druid.support.logging.Log4j2Impl");

tryImplementation("org.apache.commons.logging.LogFactory",

"com.alibaba.druid.support.logging.JakartaCommonsLoggingImpl");

tryImplementation("java.util.logging.Logger", "com.alibaba.druid.support.logging.Jdk14LoggingImpl");

if (logConstructor == null) {

try {

logConstructor = NoLoggingImpl.class.getConstructor(String.class);

} catch (Exception e) {

throw new IllegalStateException(e.getMessage(), e);

}

}

//默认为com.alibaba.druid.support.logging.SLF4JImpl实现

private static void tryImplementation(String testClassName, String implClassName) {

if (logConstructor != null) {//单例的确保,确保logConstructor只有一种实现

return;

}

try {

Resources.classForName(testClassName);

//类加载

Class implClass = Resources.classForName(implClassName);

//获取指定的构造器

logConstructor = implClass.getConstructor(new Class[] { String.class });

Class<?> declareClass = logConstructor.getDeclaringClass();

if (!Log.class.isAssignableFrom(declareClass)) {

logConstructor = null;

}

try {

if (null != logConstructor) {

//实例化Log日志 -> com.alibaba.druid.support.logging.SLF4JImpl

logConstructor.newInstance(LogFactory.class.getName());

}

} catch (Throwable t) {

logConstructor = null;

}

} catch (Throwable t) {

// skip

}

}

7.3 实现代表

public class SLF4JImpl implements Log {

private static final String callerFQCN = SLF4JImpl.class.getName();

private int errorCount;

private int warnCount;

private int infoCount;

private int debugCount;

private LocationAwareLogger log;

public SLF4JImpl(String loggerName){

this.log = (LocationAwareLogger) LoggerFactory.getLogger(loggerName);

}

@Override

public void info(String msg) {

infoCount++;

log.log(null, callerFQCN, LocationAwareLogger.INFO_INT, msg, null, null);

}

}

//上述与DruidDataSource关联上

public static Log getLog(String loggerName) {

try {

return (Log) logConstructor.newInstance(loggerName);

} catch (Throwable t) {

throw new RuntimeException("Error creating logger for logger '" + loggerName + "'. Cause: " + t, t);

}

}

8 查询逻辑

入口:connection.createStatement();

//DruidPooledConnection

@Override

public Statement createStatement() throws SQLException {

checkState();

Statement stmt = null;

try {

//原始数据库创建的state对象

stmt = conn.createStatement();

} catch (SQLException ex) {

handleException(ex, null);

}

//设置查询时长queryTimeout属性

holder.getDataSource().initStatement(this, stmt);

//包装

DruidPooledStatement poolableStatement = new DruidPooledStatement(this, stmt);

holder.addTrace(poolableStatement);

return poolableStatement;

}

public class DruidPooledStatement extends PoolableWrapper implements Statement {

public DruidPooledStatement(DruidPooledConnection conn, Statement stmt){

super(stmt);

this.conn = conn;

this.stmt = stmt;

}

}

//super(stmt)

public class PoolableWrapper implements Wrapper {

private final Wrapper wrapper;

public PoolableWrapper(Wrapper wraaper){

this.wrapper = wraaper;

}

}

protected final List<Statement> statementTrace = new ArrayList<Statement>(2);

public void addTrace(DruidPooledStatement stmt) {

lock.lock();

try {

statementTrace.add(stmt);

} finally {

lock.unlock();

}

}

8.1 DruidPooledPreparedStatement

connection.prepareStatement("")

@Override

public PreparedStatement prepareStatement(String sql) throws SQLException {

checkState();

//增强的对象

PreparedStatementHolder stmtHolder = null;

//构建key

PreparedStatementKey key = new PreparedStatementKey(sql, getCatalog(), MethodType.M1);

boolean poolPreparedStatements = holder.isPoolPreparedStatements();

if (poolPreparedStatements) {

stmtHolder = holder.getStatementPool().get(key);

}

if (stmtHolder == null) {

try {

stmtHolder = new PreparedStatementHolder(key, conn.prepareStatement(sql));

holder.getDataSource().incrementPreparedStatementCount();

} catch (SQLException ex) {

handleException(ex, sql);

}

}

//queryTimeout设置

initStatement(stmtHolder);

DruidPooledPreparedStatement rtnVal = new DruidPooledPreparedStatement(this, stmtHolder);

holder.addTrace(rtnVal);

return rtnVal;

}

8.2 executeQuery

@Override

public ResultSet executeQuery() throws SQLException {

//连接状态检测

checkOpen();

//增加查询次数

incrementExecuteQueryCount();

//AutoCommit为false的话,这里包装事物sqlList,并更新conn状态时间

transactionRecord(sql);

oracleSetRowPrefetch();

//查询前操作

conn.beforeExecute();

try {

//真正查询

ResultSet rs = stmt.executeQuery();

if (rs == null) {

return null;

}

//包装结果集对象

DruidPooledResultSet poolableResultSet = new DruidPooledResultSet(this, rs);

addResultSetTrace(poolableResultSet);

return poolableResultSet;

} catch (Throwable t) {

errorCheck(t);

throw checkException(t);

} finally {

//查询后操作

conn.afterExecute();

}

}

final void beforeExecute() {

final DruidConnectionHolder holder = this.holder;

//如果removeAbandoned为true的话,设置running属性

if (holder != null && holder.dataSource.removeAbandoned) {

running = true;

}

}

final void afterExecute() {

final DruidConnectionHolder holder = this.holder;

if (holder != null) {

DruidAbstractDataSource dataSource = holder.dataSource;

if (dataSource.removeAbandoned) {

//更改running属性

running = false;

//更新活跃时间节点

holder.lastActiveTimeMillis = System.currentTimeMillis();

}

dataSource.onFatalError = false;

}

}

8.3 running 属性

此属性主要在DestroyTask任务的时候进行检测,对于设置

removeAbandonedTimeoutMillis的进行剔除,如下所示

//DruidDataSource

public int removeAbandoned() {

int removeCount = 0;

long currrentNanos = System.nanoTime();

List<DruidPooledConnection> abandonedList = new ArrayList<DruidPooledConnection>();

activeConnectionLock.lock();

Iterator<DruidPooledConnection> iter = activeConnections.keySet().iterator();

for (; iter.hasNext();) {

DruidPooledConnection pooledConnection = iter.next();

if (pooledConnection.isRunning()) {

continue;

}

long timeMillis = (currrentNanos - pooledConnection.getConnectedTimeNano()) / (1000 * 1000);

//当连接时间大于参数时添加

if (timeMillis >= removeAbandonedTimeoutMillis) {

iter.remove();

pooledConnection.setTraceEnable(false);

//添加到abandonedList

abandonedList.add(pooledConnection);

}

}

activeConnectionLock.unlock();

//剔除逻辑

if (abandonedList.size() > 0) {

for (DruidPooledConnection pooledConnection : abandonedList) {

JdbcUtils.close(pooledConnection);

pooledConnection.abandond();

removeAbandonedCount++;

removeCount++;

}

if (isLogAbandoned()) {

StringBuilder buf = new StringBuilder();

StringBuilder buf = new StringBuilder();

buf.append("abandon connection, owner thread: ");

buf.append(pooledConnection.getOwnerThread().getName());

buf.append(", connected at : ");

buf.append(pooledConnection.getConnectedTimeMillis());

buf.append(", open stackTrace\n");

//pooledConnection的调用链

//这个stackTrace是在获取连接的时候进行创建的

//poolableConnection.connectStackTrace = Thread.currentThread().getStackTrace()

//poolableConnection.setConnectedTimeNano();

//poolableConnection.traceEnable = true;

//activeConnections.put(poolableConnection, PRESENT);

StackTraceElement[] trace = pooledConnection.getConnectStackTrace();

for (int i = 0; i < trace.length; i++) {

buf.append("\tat ");

buf.append(trace[i].toString());

buf.append("\n");

}

buf.append("ownerThread current state is " + pooledConnection.getOwnerThread().getState()

+ ", current stackTrace\n");

trace = pooledConnection.getOwnerThread().getStackTrace();

for (int i = 0; i < trace.length; i++) {

buf.append("\tat ");

buf.append(trace[i].toString());

buf.append("\n");

}

LOG.error(buf.toString());

}

}

}

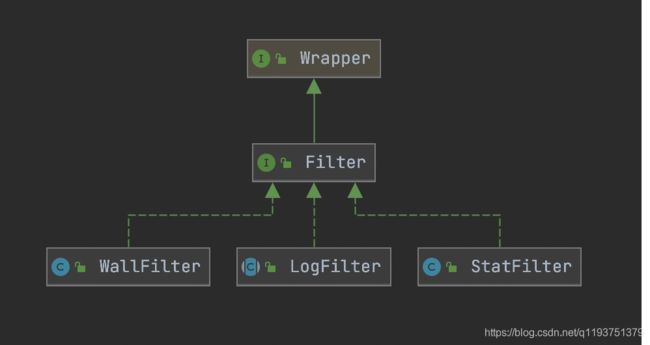

9 监控过滤

过滤器是在DataSource初始化的过程中,通过属性

druid.filters注入进来的,多个属性用’,'逗号进行分割

9.1 过滤器的初始化

//FilterManager#loadFilter

//入参filters就是DruidDataSource的filters

public static void loadFilter(List<Filter> filters, String filterName) throws SQLException {

if (filterName.length() == 0) {

return;

}

//获取全类名,可能存在别名

String filterClassNames = getFilter(filterName);

if (filterClassNames != null) {

for (String filterClassName : filterClassNames.split(",")) {

if (existsFilter(filters, filterClassName)) {

continue;

}

//加载filter类实例

Class<?> filterClass = Utils.loadClass(filterClassName);

if (filterClass == null) {

LOG.error("load filter error, filter not found : " + filterClassName);

continue;

}

Filter filter;

try {

//实例化过滤器

filter = (Filter) filterClass.newInstance();

}

filters.add(filter);

}

return;

}

9.2 ConnectionProxy的创建

ConnectionProxy最终包装到DruidConnectionHolder,对外提供的为DruidPooledConnection

//入口

conn = createPhysicalConnection(url, physicalConnectProperties);

Connection conn;

//如果有过滤器的话就创建ConnectionProxy

if (getProxyFilters().size() == 0) {

conn = getDriver().connect(url, info);

} else {

conn = new FilterChainImpl(this).connection_connect(info);

}

public ConnectionProxy connection_connect(Properties info) throws SQLException {

if (this.pos < filterSize) {

return nextFilter()

.connection_connect(this, info);

}

//

Driver driver = dataSource.getRawDriver();

String url = dataSource.getRawJdbcUrl();

Connection nativeConnection = driver.connect(url, info);

if (nativeConnection == null) {

return null;

}

return new ConnectionProxyImpl(dataSource, nativeConnection, info, dataSource.createConnectionId());

}

9.3 举例StatFilter

- 获取连接

public DruidPooledConnection getConnection(long maxWaitMillis) throws SQLException {

init();//初始化

if (filters.size() > 0) {

FilterChainImpl filterChain = new FilterChainImpl(this);

//这里会先构造filterChain然后顺序调用,最后一个去调取DruidDataSource对应的方法

return filterChain.dataSource_connect(this, maxWaitMillis);

} else {

return getConnectionDirect(maxWaitMillis);

}

}

- 获取连接前设置统计

@Override

public DruidPooledConnection dataSource_getConnection(FilterChain chain, DruidDataSource dataSource,long maxWaitMillis) throws SQLException {

//this.pos < filterSize ->

//nextFilter().dataSource_getConnection(this, dataSource, maxWaitMillis)

//dataSource.getConnectionDirect(maxWaitMillis)

DruidPooledConnection conn = chain.dataSource_connect(dataSource, maxWaitMillis);

if (conn != null) {

//在返回前设置统计信息

conn.setConnectedTimeNano();

StatFilterContext.getInstance().pool_connection_open();

}

return conn;

}

9.4 列举WallFilter

connection.prepareStatement最终调用的是Proxy中的

//应用类

connection.prepareStatement

//ConnectionProxyImpl

@Override

public PreparedStatement prepareStatement(String sql) throws SQLException {

FilterChainImpl chain = createChain();

PreparedStatement stmt = chain.connection_prepareStatement(this, sql);

recycleFilterChain(chain);

return stmt;

}

//WallFilter

@Override

public PreparedStatementProxy connection_prepareStatement(FilterChain chain, ConnectionProxy connection,String sql, int[] columnIndexes) throws SQLException {

String dbType = connection.getDirectDataSource().getDbType();

WallContext context = WallContext.create(dbType);

try {

//slq的防火墙实现入口

WallCheckResult result = checkInternal(sql);

context.setWallUpdateCheckItems(result.getUpdateCheckItems());

sql = result.getSql();

PreparedStatementProxy stmt = chain.connection_prepareStatement(connection, sql, columnIndexes);

setSqlStatAttribute(stmt);

return stmt;

} finally {

WallContext.clearContext();

}

}

private WallCheckResult checkInternal(String sql) throws SQLException {

//检测sql

WallCheckResult checkResult = provider.check(sql);

List<Violation> violations = checkResult.getViolations();

if (violations.size() > 0) {

Violation firstViolation = violations.get(0);

if (isLogViolation()) {

LOG.error("sql injection violation, dbType "

+ getDbType()

+ ", druid-version "

+ VERSION.getVersionNumber()

+ ", "

+ firstViolation.getMessage() + " : " + sql);

}

if (throwException) {

if (violations.get(0) instanceof SyntaxErrorViolation) {

SyntaxErrorViolation violation = (SyntaxErrorViolation) violations.get(0);

throw new SQLException("sql injection violation, dbType "

+ getDbType() + ", "

+ ", druid-version "

+ VERSION.getVersionNumber()

+ ", "

+ firstViolation.getMessage() + " : " + sql,

violation.getException());

} else {

throw new SQLException("sql injection violation, dbType "

+ getDbType()

+ ", druid-version "

+ VERSION.getVersionNumber()

+ ", "

+ firstViolation.getMessage()

+ " : " + sql);

}

}

}

return checkResult;

}

WallProvider提供检测

private WallCheckResult checkInternal(String sql) {

//sql黑白名单检测

WallCheckResult checkResult = checkWhiteAndBlackList(sql);

Set<String> updateCheckColumns = config.getUpdateCheckTable(tableName);

WallCheckResult result;

result = new WallCheckResult(sqlStat, statementList);

result.setSql(resultSql);

result.setUpdateCheckItems(visitor.getUpdateCheckItems());

}

附录

1、参数列表

| 配置 | 缺省值 | 说明 |

|---|---|---|

| name | 配置这个属性的意义在于,如果存在多个数据源,监控的时候可以通过名字来区分开来。如果没有配置,将会生成一个名字,格式是:“DataSource-” + System.identityHashCode(this). 另外配置此属性至少在1.0.5版本中是不起作用的,强行设置name会出错。详情-点此处。 | |

| url | 连接数据库的url,不同数据库不一样。例如: mysql : jdbc:mysql://10.20.153.104:3306/druid2 oracle : jdbc:oracle:thin:@10.20.149.85:1521:ocnauto | |

| username | 连接数据库的用户名 | |

| password | 连接数据库的密码。如果你不希望密码直接写在配置文件中,可以使用ConfigFilter。详细看这里 | |

| driverClassName | 根据url自动识别 | 这一项可配可不配,如果不配置druid会根据url自动识别dbType,然后选择相应的driverClassName |

| initialSize | 0 | 初始化时建立物理连接的个数。初始化发生在显示调用init方法,或者第一次getConnection时 |

| maxActive | 8 | 最大连接池数量 |

| maxIdle | 8 | 已经不再使用,配置了也没效果 |

| minIdle | 最小连接池数量 | |

| maxWait | 获取连接时最大等待时间,单位毫秒。配置了maxWait之后,缺省启用公平锁,并发效率会有所下降,如果需要可以通过配置useUnfairLock属性为true使用非公平锁。 | |

| poolPreparedStatements | false | 是否缓存preparedStatement,也就是PSCache。PSCache对支持游标的数据库性能提升巨大,比如说oracle。在mysql下建议关闭。 |

| maxPoolPreparedStatementPerConnectionSize | -1 | 要启用PSCache,必须配置大于0,当大于0时,poolPreparedStatements自动触发修改为true。在Druid中,不会存在Oracle下PSCache占用内存过多的问题,可以把这个数值配置大一些,比如说100 |

| validationQuery | 用来检测连接是否有效的sql,要求是一个查询语句,常用select ‘x’。如果validationQuery为null,testOnBorrow、testOnReturn、testWhileIdle都不会起作用。 | |

| validationQueryTimeout | 单位:秒,检测连接是否有效的超时时间。底层调用jdbc Statement对象的void setQueryTimeout(int seconds)方法 | |

| testOnBorrow | true | 申请连接时执行validationQuery检测连接是否有效,做了这个配置会降低性能。 |

| testOnReturn | false | 归还连接时执行validationQuery检测连接是否有效,做了这个配置会降低性能。 |

| testWhileIdle | false | 建议配置为true,不影响性能,并且保证安全性。申请连接的时候检测,如果空闲时间大于timeBetweenEvictionRunsMillis,执行validationQuery检测连接是否有效。 |

| keepAlive | false (1.0.28) | 连接池中的minIdle数量以内的连接,空闲时间超过minEvictableIdleTimeMillis,则会执行keepAlive操作。 |

| timeBetweenEvictionRunsMillis | 1分钟(1.0.14) | 有两个含义: 1) Destroy线程会检测连接的间隔时间,如果连接空闲时间大于等于minEvictableIdleTimeMillis则关闭物理连接。 2) testWhileIdle的判断依据,详细看testWhileIdle属性的说明 |

| numTestsPerEvictionRun | 30分钟(1.0.14) | 不再使用,一个DruidDataSource只支持一个EvictionRun |

| minEvictableIdleTimeMillis | 连接保持空闲而不被驱逐的最小时间 | |

| connectionInitSqls | 物理连接初始化的时候执行的sql | |

| exceptionSorter | 根据dbType自动识别 | 当数据库抛出一些不可恢复的异常时,抛弃连接 |

| filters | 属性类型是字符串,通过别名的方式配置扩展插件,常用的插件有: 监控统计用的filter:stat 日志用的filter:log4j 防御sql注入的filter:wall | |

| proxyFilters | 类型是List |