Parsing audio_policy_configuration.xml in Android 9.0

Reference: https://segmentfault.com/a/1190000020109354

Source code from frameworks/av/services/audiopolicy/managerdefault/AudioPolicyManager.cpp:

AudioPolicyManager::AudioPolicyManager(AudioPolicyClientInterface *clientInterface): AudioPolicyManager(clientInterface, false /*forTesting*/)

{

loadConfig();

initialize();

}

void AudioPolicyManager::loadConfig() {

// This macro has been in use since Android 7.0

#ifdef USE_XML_AUDIO_POLICY_CONF

if (deserializeAudioPolicyXmlConfig(getConfig()) != NO_ERROR) {

#else

if ((ConfigParsingUtils::loadConfig(AUDIO_POLICY_VENDOR_CONFIG_FILE, getConfig()) != NO_ERROR)

&& (ConfigParsingUtils::loadConfig(AUDIO_POLICY_CONFIG_FILE, getConfig()) != NO_ERROR)) {

#endif

ALOGE("could not load audio policy configuration file, setting defaults");

getConfig().setDefault();

}

}

Since Android 7, the build flag USE_XML_AUDIO_POLICY_CONF controls whether the XML policy file or the legacy conf-format file is used. The flag can be set in the device makefile, device.mk.

The argument passed to deserializeAudioPolicyXmlConfig, getConfig(), is the AudioPolicyConfig. It returns the member variable mConfig, which is declared and initialized as follows:

AudioPolicyConfig& getConfig() { return mConfig; }

mConfig(mHwModulesAll, mAvailableOutputDevices, mAvailableInputDevices, mDefaultOutputDevice,

static_cast<VolumeCurvesCollection*>(mVolumeCurves.get()))

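These members are filled in while the configuration file (XML or conf format) is parsed. This matters because the HAL shared libraries (.so) are later loaded according to the module names found in the configuration.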

#define AUDIO_POLICY_XML_CONFIG_FILE_NAME "audio_policy_configuration.xml"

// Directories searched for the XML on the device, in priority order: /odm/etc > /vendor/etc > /system/etc

static const char *kConfigLocationList[] =

{"/odm/etc", "/vendor/etc", "/system/etc"};

static const int kConfigLocationListSize =

(sizeof(kConfigLocationList) / sizeof(kConfigLocationList[0]));

static status_t deserializeAudioPolicyXmlConfig(AudioPolicyConfig &config) {

char audioPolicyXmlConfigFile[AUDIO_POLICY_XML_CONFIG_FILE_PATH_MAX_LENGTH];

std::vector<const char*> fileNames;

status_t ret;

// In the source tree, "audio_policy_configuration.xml" lives under frameworks/av/services/audiopolicy/config/.

fileNames.push_back(AUDIO_POLICY_XML_CONFIG_FILE_NAME);

for (const char* fileName : fileNames) {

for (int i = 0; i < kConfigLocationListSize; i++) {

PolicySerializer serializer;

snprintf(audioPolicyXmlConfigFile, sizeof(audioPolicyXmlConfigFile),

"%s/%s", kConfigLocationList[i], fileName);

ret = serializer.deserialize(audioPolicyXmlConfigFile, config);

if (ret == NO_ERROR) {

return ret;

}

}

}

return ret;

}

The focus here is the for loop in deserializeAudioPolicyXmlConfig, and in particular the call serializer.deserialize(audioPolicyXmlConfigFile, config).
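The search order used by that loop can be tried in isolation. The following is a minimal sketch, not the AOSP code: `findFirstLoadable` and its `tryLoad` callback are hypothetical names that stand in for the loop and for `serializer.deserialize()`.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical helper (not an AOSP API): builds "<dir>/<fileName>" for each
// directory in priority order and returns the first path that tryLoad accepts.
std::string findFirstLoadable(
        const std::vector<std::string>& dirs,
        const std::string& fileName,
        const std::function<bool(const std::string&)>& tryLoad) {
    for (const std::string& dir : dirs) {
        const std::string path = dir + "/" + fileName;
        if (tryLoad(path)) {
            return path;  // first hit wins; lower-priority dirs are not tried
        }
    }
    return "";  // nothing loaded; the caller would fall back to defaults
}
```

With the directory list {"/odm/etc", "/vendor/etc", "/system/etc"}, a file present only under /vendor/etc is found on the second attempt and /system/etc is never consulted.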

First, note that PolicySerializer is declared under /frameworks/av/services/audiopolicy/common/managerdefinitions/include/.

All tags used as examples below are taken from the first occurrence of the corresponding tag in audio_policy_configuration.xml.

status_t PolicySerializer::deserialize(const char *configFile, AudioPolicyConfig &config)

{

xmlDocPtr doc;

doc = xmlParseFile(configFile);

if (doc == NULL) {

ALOGE("%s: Could not parse %s document.", __FUNCTION__, configFile);

return BAD_VALUE;

}

......

// The elided code above validates the XML document and its attributes; the real loading starts here, beginning with the modules

// Lets deserialize children

// Modules

ModuleTraits::Collection modules;

deserializeCollection<ModuleTraits>(doc, cur, modules, &config);

config.setHwModules(modules);

// deserialize volume section

VolumeTraits::Collection volumes;

deserializeCollection<VolumeTraits>(doc, cur, volumes, &config);

config.setVolumes(volumes);

// Global Configuration

GlobalConfigTraits::deserialize(cur, config);

xmlFreeDoc(doc);

return android::OK;

}

These two lines are where the real parsing begins:

deserializeCollection<ModuleTraits>(doc, cur, modules, &config);

config.setHwModules(modules);

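deserializeCollection is a generic helper whose job is to call the deserialize() method of the traits class passed as its template argument. In the call above, that is ModuleTraits::deserialize().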
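The traits pattern behind this helper can be illustrated without libxml2. The sketch below is an assumption-laden simplification: `Node` is a toy stand-in for `xmlNode`, `ItemTraits` is a hypothetical traits class, and the signatures are reduced compared with the real serializer.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Toy stand-in for an XML node; the real code walks libxml2 xmlNode objects.
struct Node {
    std::string name;
    std::string text;
    std::vector<Node> children;
};

// Hypothetical traits class: names the tags it handles and knows how to
// turn one matching node into an element.
struct ItemTraits {
    using Element = std::string;
    using Collection = std::vector<Element>;
    static const char* tag() { return "item"; }
    static const char* collectionTag() { return "items"; }
    static bool deserialize(const Node& node, Element& out) {
        if (node.text.empty()) return false;  // reject empty entries
        out = node.text;
        return true;
    }
};

// Generic driver: for every <collectionTag> child of root, pass each <tag>
// child to Trait::deserialize() and keep the elements that parsed cleanly.
template <class Trait>
void deserializeCollection(const Node& root, typename Trait::Collection& out) {
    for (const Node& section : root.children) {
        if (section.name != Trait::collectionTag()) continue;
        for (const Node& child : section.children) {
            if (child.name != Trait::tag()) continue;
            typename Trait::Element element;
            if (Trait::deserialize(child, element)) out.push_back(element);
        }
    }
}
```

The driver never needs to know what an element is; adding a new tag type only requires a new traits class, which is why the same helper can serve modules, mixPorts, devicePorts, routes, and volumes.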

const char *const ModuleTraits::childAttachedDevicesTag = "attachedDevices";

const char *const ModuleTraits::childAttachedDeviceTag = "item";

const char *const ModuleTraits::childDefaultOutputDeviceTag = "defaultOutputDevice";

const char *const ModuleTraits::tag = "module";

const char *const ModuleTraits::collectionTag = "modules";

const char ModuleTraits::Attributes::name[] = "name";

const char ModuleTraits::Attributes::version[] = "halVersion";

status_t ModuleTraits::deserialize(xmlDocPtr doc, const xmlNode *root, PtrElement &module,

PtrSerializingCtx ctx)

{

// Parse a <module> tag under <modules>; in audio_policy_configuration.xml the first module is named primary

string name = getXmlAttribute(root, Attributes::name);

if (name.empty()) {

ALOGE("%s: No %s found", __FUNCTION__, Attributes::name);

return BAD_VALUE;

}

uint32_t versionMajor = 0, versionMinor = 0;

string versionLiteral = getXmlAttribute(root, Attributes::version);

if (!versionLiteral.empty()) {

sscanf(versionLiteral.c_str(), "%u.%u", &versionMajor, &versionMinor);

ALOGV("%s: mHalVersion = major %u minor %u", __FUNCTION__,

versionMajor, versionMajor); // note: this logs versionMajor twice; versionMinor was presumably intended

}

ALOGV("%s: %s %s=%s", __FUNCTION__, tag, Attributes::name, name.c_str());

// In effect, the name and halVersion attributes of <module> are parsed and used to construct the HwModule

module = new Element(name.c_str(), versionMajor, versionMinor);

// Deserialize childrens: Audio Mix Port, Audio Device Ports (Source/Sink), Audio Routes

MixPortTraits::Collection mixPorts;

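// A <module> contains <mixPorts>, <devicePorts>, and <routes> tags, and they are parsed in that order.

// By now the parsing of audio_policy_configuration.xml should be getting clearer. So where does the parsed data end up?

// Start parsing the contents of <mixPorts> by calling MixPortTraits::deserialize(), which is covered further below.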

deserializeCollection<MixPortTraits>(doc, root, mixPorts, NULL);

// module is the HwModule; the parsed mixPorts (IOProfiles) are stored on it through setProfiles()

module->setProfiles(mixPorts); // calls HwModule::setProfiles()

// Parse the <devicePorts> tag. The mechanism is the same each time, so from here on only the result of each step is described.

DevicePortTraits::Collection devicePorts;

// Calls DevicePortTraits::deserialize(),

// which parses the attributes of each <devicePort> tag

deserializeCollection<DevicePortTraits>(doc, root, devicePorts, NULL);

// Once the device tags are parsed, they are likewise stored on the HwModule

module->setDeclaredDevices(devicePorts);

// Parse the <routes> tag. Routes are important: they connect sources to sinks.

RouteTraits::Collection routes;

// Calls RouteTraits::deserialize()

deserializeCollection<RouteTraits>(doc, root, routes, module.get());

module->setRoutes(routes);

// Not finished yet: the <attachedDevices> and <defaultOutputDevice> tags at the top of <module> have not been parsed

const xmlNode *children = root->xmlChildrenNode;

while (children != NULL) {

if (!xmlStrcmp(children->name, (const xmlChar *)childAttachedDevicesTag)) {

ALOGV("%s: %s %s found", __FUNCTION__, tag, childAttachedDevicesTag);

const xmlNode *child = children->xmlChildrenNode;

while (child != NULL) {

if (!xmlStrcmp(child->name, (const xmlChar *)childAttachedDeviceTag)) {

xmlChar *attachedDevice = xmlNodeListGetString(doc, child->xmlChildrenNode, 1);

if (attachedDevice != NULL) {

ALOGV("%s: %s %s=%s", __FUNCTION__, tag, childAttachedDeviceTag,

(const char*)attachedDevice);

// Take the text of the <item> tag and find the DeviceDescriptor whose devicePort has the same tagName

sp<DeviceDescriptor> device =

module->getDeclaredDevices().getDeviceFromTagName(String8((const char*)attachedDevice));

// ctx is the AudioPolicyConfig

ctx->addAvailableDevice(device);

xmlFree(attachedDevice);

}

}

child = child->next;

}

}

// In the same way, the default output device (mDefaultOutputDevice) is set through AudioPolicyConfig

if (!xmlStrcmp(children->name, (const xmlChar *)childDefaultOutputDeviceTag)) {

xmlChar *defaultOutputDevice = xmlNodeListGetString(doc, children->xmlChildrenNode, 1);

if (defaultOutputDevice != NULL) {

ALOGV("%s: %s %s=%s", __FUNCTION__, tag, childDefaultOutputDeviceTag,

(const char*)defaultOutputDevice);

sp<DeviceDescriptor> device =

module->getDeclaredDevices().getDeviceFromTagName(String8((const char*)defaultOutputDevice));

if (device != 0 && ctx->getDefaultOutputDevice() == 0) {

ctx->setDefaultOutputDevice(device);

ALOGV("%s: default is %08x", __FUNCTION__, ctx->getDefaultOutputDevice()->type());

}

xmlFree(defaultOutputDevice);

}

}

children = children->next;

}

return NO_ERROR;

}
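The halVersion handling near the top of this function is easy to check on its own. This is a minimal sketch under stated assumptions: `HalVersion` and `parseHalVersion` are hypothetical names, and only the sscanf pattern "%u.%u" is taken from the code above.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>

// Hypothetical value type used only in this sketch.
struct HalVersion {
    uint32_t versionMajor = 0;
    uint32_t versionMinor = 0;
};

// Parses "major.minor" with the same sscanf pattern as the quoted code.
// Any field that sscanf cannot match keeps its default of 0.
HalVersion parseHalVersion(const std::string& literal) {
    HalVersion v;
    if (!literal.empty()) {
        unsigned int major = 0;
        unsigned int minor = 0;
        std::sscanf(literal.c_str(), "%u.%u", &major, &minor);
        v.versionMajor = major;
        v.versionMinor = minor;
    }
    return v;
}
```

Because the return value of sscanf is not checked, a malformed string such as "3" silently yields minor version 0 rather than an error, which matches the behavior of the quoted code.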

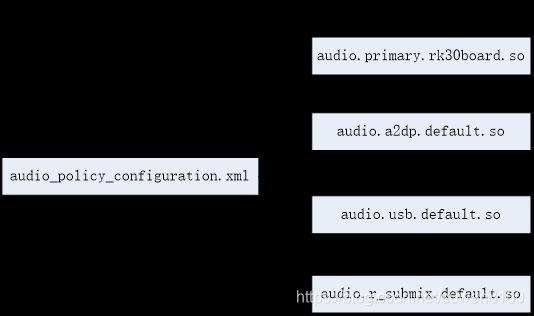

The XML shows four modules in total, each corresponding to one shared library (.so):

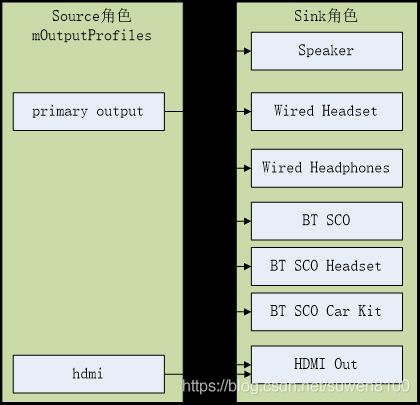

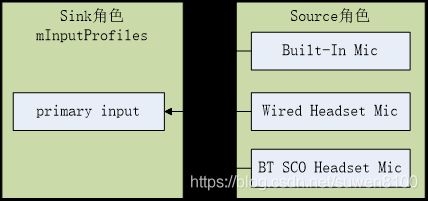

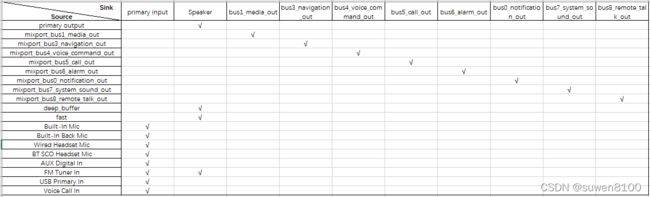

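Each module has mixPorts, devicePorts, and routes sections, which in turn contain multiple mixPort, devicePort, and route entries. Ports are marked with one of two roles, source or sink:

devicePorts (source): physical hardware input devices;

devicePorts (sink): physical hardware output devices;

mixPorts (source): stream types leaving AudioFlinger, also called "output stream devices". These are logical rather than physical devices, and each corresponds to a PlaybackThread in AudioFlinger;

mixPorts (sink): stream types entering AudioFlinger, also called "input stream devices". These are logical rather than physical devices, and each corresponds to a RecordThread in AudioFlinger;

routes: define the routing between devicePorts and mixPorts.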

The module named "primary" additionally has attachedDevices and defaultOutputDevice. Next, MixPortTraits::deserialize() and RouteTraits::deserialize() are examined. Each mixPort entry is parsed by MixPortTraits::deserialize():

const char *const MixPortTraits::collectionTag = "mixPorts";

const char *const MixPortTraits::tag = "mixPort";

const char MixPortTraits::Attributes::name[] = "name";

const char MixPortTraits::Attributes::role[] = "role";

const char MixPortTraits::Attributes::flags[] = "flags";

const char MixPortTraits::Attributes::maxOpenCount[] = "maxOpenCount";

const char MixPortTraits::Attributes::maxActiveCount[] = "maxActiveCount";

status_t MixPortTraits::deserialize(_xmlDoc *doc, const _xmlNode *child, PtrElement &mixPort,

PtrSerializingCtx /*serializingContext*/)

{

string name = getXmlAttribute(child, Attributes::name);

if (name.empty()) {

ALOGE("%s: No %s found", __FUNCTION__, Attributes::name);

return BAD_VALUE;

}

ALOGV("%s: %s %s=%s", __FUNCTION__, tag, Attributes::name, name.c_str());

string role = getXmlAttribute(child, Attributes::role);

if (role.empty()) {

ALOGE("%s: No %s found", __FUNCTION__, Attributes::role);

return BAD_VALUE;

}

ALOGV("%s: Role=%s", __FUNCTION__, role.c_str());

// portRole is either source or sink

audio_port_role_t portRole = role == "source" ? AUDIO_PORT_ROLE_SOURCE : AUDIO_PORT_ROLE_SINK;

// Serializer.h contains "typedef IOProfile Element;", so this actually constructs an IOProfile, which derives from AudioPort.

mixPort = new Element(String8(name.c_str()), portRole);

AudioProfileTraits::Collection profiles;

// Runs AudioProfileTraits::deserialize()

deserializeCollection<AudioProfileTraits>(doc, child, profiles, NULL);

// If profiles is empty, a default dynamic profile is created, so every <mixPort> ends up with at least one profile

if (profiles.isEmpty()) {

sp <AudioProfile> dynamicProfile = new AudioProfile(gDynamicFormat,

ChannelsVector(), SampleRateVector());

dynamicProfile->setDynamicFormat(true);

dynamicProfile->setDynamicChannels(true);

dynamicProfile->setDynamicRate(true);

profiles.add(dynamicProfile);

}

// mixPort is the IOProfile and profiles are the AudioProfiles; the AudioProfiles are assigned to the IOProfile

mixPort->setAudioProfiles(profiles);

// The attributes below are rarely set. They are parsed and stored on the mixPort, but unless there is a special need the defaults apply

string flags = getXmlAttribute(child, Attributes::flags);

// If the flags attribute is present, set the flags

if (!flags.empty()) {

// Source role

if (portRole == AUDIO_PORT_ROLE_SOURCE) {

mixPort->setFlags(OutputFlagConverter::maskFromString(flags));

} else {

// Sink role

mixPort->setFlags(InputFlagConverter::maskFromString(flags));

}

}

string maxOpenCount = getXmlAttribute(child, Attributes::maxOpenCount);

if (!maxOpenCount.empty()) {

convertTo(maxOpenCount, mixPort->maxOpenCount);

}

string maxActiveCount = getXmlAttribute(child, Attributes::maxActiveCount);

if (!maxActiveCount.empty()) {

convertTo(maxActiveCount, mixPort->maxActiveCount);

}

// Deserialize children

// Parse the <gain> entries under <gains>; a mixPort usually has none

AudioGainTraits::Collection gains;

deserializeCollection<AudioGainTraits>(doc, child, gains, NULL);

mixPort->setGains(gains);

return NO_ERROR;

}

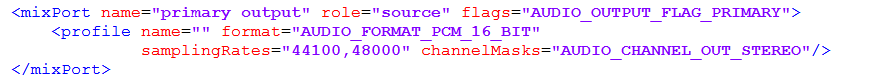

As the code above shows, a mixPort is really an instance of the IOProfile class, which derives from AudioPort. Here is one mixPort taken from the XML:

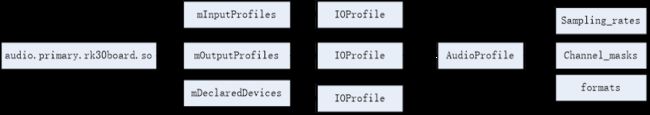

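The profile element carries stream parameters such as bit depth (sample format), sampling rate, and channel count. It is built into an AudioProfile object and stored on the mixPort, which is then stored on the module. A devicePort in the XML usually has no profile element, in which case a default profile is created. When a mixPort is added to the module, it is classified by role: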

status_t HwModule::addProfile(const sp<IOProfile> &profile)

{

// Dispatches to addOutputProfile or addInputProfile, which in the end

// simply fill the two collections mOutputProfiles and mInputProfiles.

switch (profile->getRole()) {

case AUDIO_PORT_ROLE_SOURCE:

return addOutputProfile(profile);

case AUDIO_PORT_ROLE_SINK:

return addInputProfile(profile);

case AUDIO_PORT_ROLE_NONE:

return BAD_VALUE;

}

return BAD_VALUE;

}

That is, source-role ports are stored in OutputProfileCollection mOutputProfiles, and sink-role ports are stored in InputProfileCollection mInputProfiles.
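The role-based split can be sketched in a few lines. This is an illustration only: `PortRole`, `Profile`, and `Module` are simplified, hypothetical stand-ins for audio_port_role_t, IOProfile, and HwModule.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Simplified stand-ins for the AOSP types; names are hypothetical.
enum class PortRole { None, Source, Sink };

struct Profile {
    std::string name;
    PortRole role;
};

struct Module {
    std::vector<Profile> outputProfiles;  // source-role mixPorts (playback)
    std::vector<Profile> inputProfiles;   // sink-role mixPorts (capture)

    // Mirrors the switch in HwModule::addProfile(): the role alone decides
    // which collection receives the profile. Returns false for role None.
    bool addProfile(const Profile& profile) {
        switch (profile.role) {
            case PortRole::Source:
                outputProfiles.push_back(profile);
                return true;
            case PortRole::Sink:
                inputProfiles.push_back(profile);
                return true;
            case PortRole::None:
                break;
        }
        return false;
    }
};
```

The naming can look inverted at first: a mixPort with role source produces audio that flows toward a device, so it lands in the output collection.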

devicePorts, by contrast, are stored in the module's mDeclaredDevices through HwModule::setDeclaredDevices():

void HwModule::setDeclaredDevices(const DeviceVector &devices)

{

mDeclaredDevices = devices;

for (size_t i = 0; i < devices.size(); i++) {

mPorts.add(devices[i]);

}

}

Note: one IOProfile (which is an AudioPort) can hold several AudioProfile objects, kept in its member variable AudioProfileVector mProfiles.

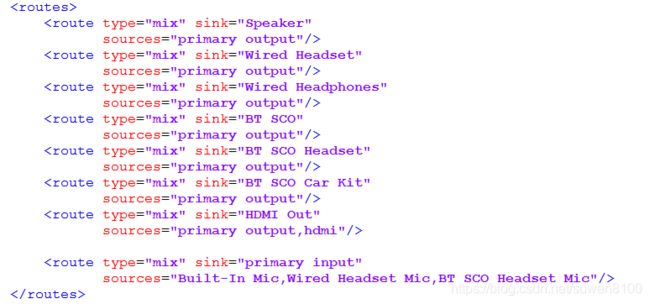

Continuing with the XML, each route entry is parsed by RouteTraits::deserialize():

const char *const RouteTraits::tag = "route";

const char *const RouteTraits::collectionTag = "routes";

const char RouteTraits::Attributes::type[] = "type";

const char RouteTraits::Attributes::typeMix[] = "mix";

const char RouteTraits::Attributes::sink[] = "sink";

const char RouteTraits::Attributes::sources[] = "sources";

status_t RouteTraits::deserialize(_xmlDoc */*doc*/, const _xmlNode *root, PtrElement &element,

PtrSerializingCtx ctx)

{

string type = getXmlAttribute(root, Attributes::type);

if (type.empty()) {

ALOGE("%s: No %s found", __FUNCTION__, Attributes::type);

return BAD_VALUE;

}

// First, look at the type attribute

audio_route_type_t routeType = (type == Attributes::typeMix) ?

AUDIO_ROUTE_MIX : AUDIO_ROUTE_MUX;

ALOGV("%s: %s %s=%s", __FUNCTION__, tag, Attributes::type, type.c_str());

// Create an AudioRoute, passing in routeType

element = new Element(routeType);

string sinkAttr = getXmlAttribute(root, Attributes::sink);

if (sinkAttr.empty()) {

ALOGE("%s: No %s found", __FUNCTION__, Attributes::sink);

return BAD_VALUE;

}

// Convert Sink name to port pointer

// ctx is the HwModule being parsed; findPortByTagName looks up a port already registered on the module (a mixPort IOProfile or a devicePort) by its name attribute

sp<AudioPort> sink = ctx->findPortByTagName(String8(sinkAttr.c_str()));

if (sink == NULL) {

ALOGE("%s: no sink found with name=%s", __FUNCTION__, sinkAttr.c_str());

return BAD_VALUE;

}

// The sink was found: store the port (for example Earpiece) on the AudioRoute through setSink()

element->setSink(sink);

// Parse the sources attribute (the excerpt of this function ends here)

What routes are for:

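When an AudioTrack is created, the Android audio stack calls AudioSystem::getOutputForAttr(). Starting from the audio parameters supplied by the application, the audio policy first selects a device, and then selects the output stream (output) and PlaybackThread that serve that device. Choosing the input or output stream depends on the routes in the XML configuration file: one output may feed several devices, and given the flags and the chosen output device, the routes determine the matching output, which corresponds to one PlaybackThread.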
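The way routes link a device back to candidate outputs can be sketched as a simple lookup. This is not how AudioPolicyManager is implemented; `Route`, `outputsReaching`, and the port names in the usage note are hypothetical and only illustrate the relationship the routes encode.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Simplified route: one sink fed by a list of sources, as in
// <route type="mix" sink="..." sources="a,b"/>.
struct Route {
    std::string sink;
    std::vector<std::string> sources;
};

// Hypothetical helper: returns the mixPorts (output streams) that have a
// route to the given device. Sources that are not mixPorts are skipped.
std::vector<std::string> outputsReaching(const std::vector<Route>& routes,
                                         const std::set<std::string>& mixPorts,
                                         const std::string& device) {
    std::vector<std::string> result;
    for (const Route& route : routes) {
        if (route.sink != device) continue;
        for (const std::string& source : route.sources) {
            if (mixPorts.count(source) != 0) result.push_back(source);
        }
    }
    return result;
}
```

For example, with a route whose sink is "Speaker" and whose sources are "primary output" and "deep_buffer", both mixPorts are candidates for audio sent to the speaker; the flags requested by the application would then narrow the choice to one of them.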

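Once all modules are parsed, they are handed over with config.setHwModules(modules). That covers most of the process; the remaining steps below work the same way and are not discussed further.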

// deserialize volume section

deserializeCollection<VolumeTraits>(doc, cur, volumes, &config);

// Global Configuration

GlobalConfigTraits::deserialize(cur, config);

audio_policy_configuration.xml is located under frameworks/av/services/audiopolicy/config/. One thing to note is that it contains the following includes:

<xi:include href="a2dp_audio_policy_configuration.xml"/>

<xi:include href="usb_audio_policy_configuration.xml"/>

<xi:include href="r_submix_audio_policy_configuration.xml"/>

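Using the XInclude mechanism avoids copying the configuration of the standard Android Open Source Project (AOSP) audio HAL modules into every audio policy configuration file, which would be error-prone. Google provides standard audio policy configuration XML files for the following audio HALs: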

A2DP: a2dp_audio_policy_configuration.xml

Reroute submix: r_submix_audio_policy_configuration.xml

USB: usb_audio_policy_configuration.xml