- Solving Cross-Origin Issues (Allow CORS): 3 Methods

RainbowSea15

problems encountered & solutions server operations java backend springboot

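Contents: Supplement: four ways to configure CORS in Spring Boot (Method 1: return a new CorsFilter; Method 2: override WebMvcConfigurer; Method 3: use the annotation (@CrossOrigin); Method 4: set the response headers manually (HttpServletResponse)); Finally. The cross-origin problem: for the user's security, the browser only allows requests to the same domain and the same port as the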
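The snippet describes the browser's same-origin restriction. The sketch below shows the comparison a browser makes before CORS rules apply. It is written in Java to match the Spring Boot context, and the class and method names are hypothetical. Note that an origin is the triple of scheme, host, and port, so `http` and `https` on the same host are different origins even though the domain matches.

```java
import java.net.URI;

// Sketch: do two URLs share an origin (scheme + host + port)?
// If they do not, the browser only accepts the response when the server
// sends suitable CORS headers (e.g. Access-Control-Allow-Origin).
final class SameOriginCheck {

    // URI.getPort() returns -1 when the URL omits the port, so fill in
    // the default port for http/https before comparing.
    static int effectivePort(URI uri) {
        if (uri.getPort() != -1) {
            return uri.getPort();
        }
        switch (uri.getScheme().toLowerCase()) {
            case "http":  return 80;
            case "https": return 443;
            default:      return -1;
        }
    }

    static boolean sameOrigin(String a, String b) {
        URI ua = URI.create(a);
        URI ub = URI.create(b);
        return ua.getScheme().equalsIgnoreCase(ub.getScheme())
                && ua.getHost().equalsIgnoreCase(ub.getHost())
                && effectivePort(ua) == effectivePort(ub);
    }

    public static void main(String[] args) {
        // Explicit default port: still the same origin.
        System.out.println(sameOrigin("http://example.com/a", "http://example.com:80/b")); // true
        // Typical dev setup: front end on 3000, Spring Boot on 8080 -> cross-origin.
        System.out.println(sameOrigin("http://localhost:8080", "http://localhost:3000"));  // false
    }
}
```

In the typical local setup shown in `main`, the front end and the back end differ only by port, and that is already enough to trigger the cross-origin check.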

- go Lock Sleep

贵哥的编程之路(热爱分享 为后来者)

golang

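package main

import (
	"fmt"
	"sync"
	"time"
)

// Goods holds a map[int]int from product ID to stock quantity, plus a mutex
type Goods struct {
	v map[int]int // product ID -> stock quantity
	m sync.Mutex  // mutex that keeps concurrent access safe
}

// Inc increases the stock quantity of the given product ID
func (g *Goods) Inc(key int, num int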
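The Go snippet protects a stock map with `sync.Mutex`. The sketch below is a Java analogue of that idea, not the original author's code. `ReentrantLock` stands in for `sync.Mutex`, `try/finally` plays the role of Go's `defer Unlock()`, and the method names are illustrative.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantLock;

// Java analogue of the Go Goods struct: a stock map guarded by a lock.
final class Goods {
    private final Map<Integer, Integer> stock = new HashMap<>(); // product ID -> quantity
    private final ReentrantLock lock = new ReentrantLock();      // role of sync.Mutex

    // Increase the stock of product `key` by `num` while holding the lock.
    void inc(int key, int num) {
        lock.lock();
        try {
            stock.merge(key, num, Integer::sum);
        } finally {
            lock.unlock(); // always released, like defer g.m.Unlock()
        }
    }

    int get(int key) {
        lock.lock();
        try {
            return stock.getOrDefault(key, 0);
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        Goods g = new Goods();
        Thread[] workers = new Thread[10];
        for (int i = 0; i < workers.length; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < 1000; j++) {
                    g.inc(1, 1);
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            t.join();
        }
        System.out.println(g.get(1)); // 10000: no increments are lost
    }
}
```

Without the lock, concurrent `merge` calls on a plain `HashMap` could lose updates or corrupt the map. That is the problem the mutex in the Go version addresses.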

- element-ui: Notes on the el-tree Tree Component

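I first tried lazy loading, but found that it sometimes failed to load data or reloaded data unexpectedly, so I switched to loading everything at once. First, how to use one-time loading: set the tree's props config to label: 'name', children: 'children', isLeaf: 'leaf'. According to the official docs, label is the value displayed in the UI, children holds all the data of the next level, and isLeaf names the field that marks whether a node is a leaf; when it is true, the node has no children. So the data structure is dat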

- Tree-Style Filtering in a Vue 3 Project with a Two-Level el-tree (Personal Notes)

齐露

notes vue.js frontend

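First, the filtering requirements: multi-select, select all, deselect all (clear), and keyword filtering (the example below only covers a two-level tree; I have not tried deeper trees). Screenshots below (buttons: Select All, Clear). Without further ado, here is the code:

let form = reactive({
  certificationCategory: [],
  ...
})
let certificate_list = ref([ // contents of el-tree
  { click: "AUTO", name: '电力业务许可证', value: '电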

- [Source Power Awakening Creator Program] ERNIE Large Model Goes Open Source: Opening the Door to a New Era of AI

小黄编程快乐屋

artificial intelligence

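In the vast starry sky of artificial intelligence, large-model technology shines like a brilliant star, lighting the way forward for countless industries. Since their emergence, large models, with their powerful language understanding and generation capabilities, have set off a global wave of technological change and innovation. Baidu announced that it would open-source the ERNIE 4.5 model series on June 30. The news landed like a bombshell in the AI field, with an impact far-reaching enough to reshape the industry's trajectory. With this big move, Baidu open-sourced ERNIE 4.5 outright, like airdropping a super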

- Notes on Resolving Circular Dependencies in Spring Boot (Based on an E-Commerce System Example)

Chen-Edward

SpringBoot springboot notes backend java ide intellij-idea spring

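1. Background: Taking an e-commerce system as an example, a Spring Boot application threw a circular dependency (CircularDependency) exception at startup, with the following error message:

***************************
APPLICATION FAILED TO START
***************************

Description:

The dependencies of some of the beans in the appli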
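To show the underlying object-construction problem without Spring, here is a plain-Java sketch. `OrderService` and `UserService` are hypothetical names for the e-commerce example. If each class required the other in its constructor, neither object could ever be built first. Creating both and wiring them afterwards through setters breaks the cycle. Which cycles Spring itself tolerates depends on the Spring Boot version and configuration, so treat this only as an illustration of the mechanics.

```java
// Plain-Java sketch (no Spring): setter injection lets two mutually
// dependent objects be created first and wired afterwards.
final class CircularDependencyDemo {

    static final class OrderService {
        private UserService userService;

        // Setter injection: the object exists before its dependency is set.
        void setUserService(UserService userService) {
            this.userService = userService;
        }

        String describeOrder(long orderId) {
            return "order " + orderId + " for " + userService.userName(orderId);
        }
    }

    static final class UserService {
        private OrderService orderService;

        void setOrderService(OrderService orderService) {
            this.orderService = orderService;
        }

        String userName(long id) {
            return "user-" + id;
        }

        // Uses the reverse dependency, closing the cycle.
        String latestOrderFor(long userId) {
            return orderService.describeOrder(userId);
        }
    }

    // With constructor injection in both directions, no call order could
    // ever satisfy both constructors. Instantiating first and wiring
    // second is the step a container performs for setter-based cycles.
    static OrderService wire() {
        OrderService orders = new OrderService();
        UserService users = new UserService();
        orders.setUserService(users);
        users.setOrderService(orders);
        return orders;
    }

    public static void main(String[] args) {
        System.out.println(wire().describeOrder(42)); // order 42 for user-42
    }
}
```

Restructuring the code so that the cycle disappears, for example by extracting the shared logic into a third service, is usually cleaner than relying on setter wiring.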

- Four Fine-Tuning Techniques Explained: SFT Supervised Fine-Tuning, LoRA, P-tuning v2, and Freeze Supervised Fine-Tuning

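When it comes to fine-tuning techniques for large language models, we enter an exciting field. Large pretrained models such as GPT-3, BERT, and T5 have excellent natural language processing capabilities, but to perform well on specific tasks they need to be fine-tuned to adapt to the specific data and task requirements. In this article, we take an in-depth look at four different fine-tuning techniques for large language models: SFT supervised fine-tuning, LoRA fine-tuning, P-tuning v2 fine-tuning, and Freeze supervised fine-tuning. Part 1: SFT supervised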

- Django 5.1 (91): How to Delete a Django App

小天的铁蛋儿

django Python django python backend

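How to delete a Django application. Django lets you organize a set of features into Python packages called applications. When requirements change, an application may become obsolete or no longer needed. The following steps will help you delete an application safely. Remove all references to the application (imports, foreign keys, etc.). Remove all models from the corresponding models.py file. Create the relevant migrations by running makemigrations. This step generates a migration that deletes the tables of the removed models, as well as those related to these models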

- A 2023 Guide to Technical Certifications and Career Development in Search

搜索引擎技术

search engine ai

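Keywords: search, technical certification, career development, search engine technology, AI search. Abstract: This guide aims to give practitioners in the search field, and people who want to enter it, a comprehensive reference on technical certifications and career development. It first introduces the conceptual foundations of search, including its history and key problems. It then lays out the relevant theoretical frameworks and analyzes the principles behind different certifications. The architecture design section presents the components of a search system and how they interact. The implementation section discusses algorithmic complexity and code optimization. The practical application section gives implementation and deployment strategies.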

- Exploring the Architecture of an AI Medical NLP Entity Recognition System

AI学长带你学AI

artificial intelligence natural language processing easyui ai

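Keywords: artificial intelligence, medical NLP, entity recognition, system architecture, deep learning, natural language processing, healthcare informatization. Abstract: This article takes an in-depth look at the architecture of NLP entity recognition systems in the medical domain. Starting from basic concepts, we analyze step by step what makes medical text processing special, explain the core principles of entity recognition in detail, and use a practical case to show how to build an efficient and reliable medical entity recognition system. The article also discusses the challenges current technology faces and future directions, giving practitioners in medical AI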

- AI Agents in Principle and Practice: A Full-Chain Analysis from Concept to Deployment

you的日常

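artificial intelligence large language models artificial intelligence machine learning deep learning neural networks natural language processing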

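AI agents are moving from the lab into the real world, becoming a bridge between people and the digital world. They represent a qualitative leap in AI from "knowing" to "doing": software systems that can autonomously perceive their environment, make decisions, carry out tasks, and keep learning. In 2025, AI agents have spread into smart homes, enterprise services, healthcare, education, content creation, and other fields, showing strong productivity and creativity. However, their development also brings technical challenges, ethical dilemmas, and security risks, which call for careful thought and balance across the whole chain from architecture design to deployment. 1. Core definition and tech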

- Teleport Open-Source Bastion Host (Recommended Tool)

小政同学

operations bastion host

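1. What is a bastion host? A bastion host is a tool that lets us connect to and operate servers remotely in a more secure way. You deploy it on a server and then restrict external access to the other servers, so that all operations go through the bastion host. A bastion host also records login information and monitors operations: it raises alerts when certain dangerous commands are run, and when someone logs in, administrators can review what that person did on the server as a video-style replay, so any failure can be traced back to a specific person. 2. The Teleport open-source bastion host: it is a lightweight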

- Analysis of the Key Technical Points of Drone Payload Modules

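I. Technical highlights. 1. Structural design innovations. Dual-motor winch system: a main motor (tension control) and a secondary motor (winch control) work together to prevent rope entanglement, supporting high-speed reeling at 1.2 m/s on a 30 m rope with improved stability under heavy loads. Coaxial twin-rotor layout: the drone uses an 8-axis, 16-propeller coaxial twin-rotor structure, raising per-axis thrust by 40% and pushing payload capacity beyond 200 kg; the redundant design keeps flight stable if a single axis fails. Modular quick release: carbon fiber plus aviation aluminum cuts weight by 20% while increasing strength by 50%, and propellers can be replaced within 5 minutes, improving field maintenance efficiency. 2. Safety and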

- C# Installation and Usage Tutorial

小奇JAVA面试

installation tutorial c# programming language

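1. Introduction to C#. C# (pronounced "C-Sharp") is a modern, object-oriented programming language developed by Microsoft that runs on the .NET platform. Its syntax is concise and safe, and it is widely used for desktop applications, web development, game development (Unity), and cross-platform development. 2. C# use cases: Windows desktop applications (WinForms, WPF); web applications (ASP.NET); game development (Unity3D); mobile development (Xamarin, MAUI); cloud services and API development; console programs and automation tools. 3. Installing the development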

- Golang Study Notes: Coroutines

夜以冀北

golang learning

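Golang study notes. Reference document 1 link: https Contents: 1. Where are coroutines used, and what problem do they solve? 2. The coroutine framework (a Linux example) 3. How to switch efficiently among multiple states? 4. How processes, threads, and coroutines relate 5. How do coroutines work? 6. Coroutines and Go. 1. Where are coroutines used, and what problem do they solve? For developers, clients and servers are familiar objects, and coroutines can be applied on both. When a client requests data from the server, if this process is implemented with threads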

- AI Animation That Shows Human Characteristics

AGI大模型与大数据研究院

AI large model application development in practice java python javascript kotlin golang architecture artificial intelligence

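Artificial intelligence, animation, human characteristics, emotion recognition, behavior simulation, machine learning, deep learning, natural language processing. 1. Background. Artificial intelligence (AI) has developed rapidly in recent years and has permeated every aspect of life. From intelligent voice assistants to self-driving cars, AI is changing our world. Yet despite its remarkable achievements, AI still struggles to fully simulate complex human behavior and characteristics. Human characteristics are multifaceted, including emotion, cognition, social interaction, and creativity. These characteristics are an important mark that distinguishes humans from other living beings, and they are also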

- Fuzzy Queries on Encrypted Fields: An Implementation Based on a Tokenized Ciphertext Mapping Table

大三小小小白

database

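Introduction. As data security grows ever more important, encrypting database fields has become a common way to protect sensitive information. However, encrypted data makes fuzzy queries very difficult. This article presents a solution based on a tokenized ciphertext mapping table that enables efficient fuzzy queries on encrypted fields. 1. Problem background. Consider a user management system that holds sensitive information such as phone numbers, ID card numbers, and addresses. These fields must be stored encrypted for security, yet the business also needs fuzzy queries on them (for example, finding users by the first few digits of their phone number). Traditional encryption directly prevents fuzzy queries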
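A minimal sketch of the mapping-table idea follows, under stated assumptions that may differ from the article's actual scheme. The plaintext is cut into fixed 4-character sliding-window tokens. HMAC-SHA256 serves as the deterministic keyed transform, so the same token always maps to the same protected value. Only these protected tokens go into the mapping table. The class, method, and constant names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.util.Base64;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

// Tokenized ciphertext mapping table (sketch). Assumed: 4-char sliding
// windows and HMAC-SHA256 as the deterministic keyed transform.
final class EncryptedFuzzyIndex {
    static final int TOKEN_LEN = 4; // assumed token length

    private final Mac mac;
    // protected token -> IDs of rows whose plaintext contains that token
    private final Map<String, Set<Long>> mapping = new HashMap<>();

    EncryptedFuzzyIndex(byte[] key) throws GeneralSecurityException {
        mac = Mac.getInstance("HmacSHA256");
        mac.init(new SecretKeySpec(key, "HmacSHA256"));
    }

    // Same token + same key -> same output, which is what makes lookup possible.
    private String protect(String token) {
        byte[] digest = mac.doFinal(token.getBytes(StandardCharsets.UTF_8));
        return Base64.getEncoder().encodeToString(digest);
    }

    // On write: cut the plaintext into overlapping tokens and store only
    // their protected form (the field itself is assumed to be stored
    // separately with ordinary encryption).
    void index(long rowId, String plaintext) {
        for (int i = 0; i + TOKEN_LEN <= plaintext.length(); i++) {
            String token = plaintext.substring(i, i + TOKEN_LEN);
            mapping.computeIfAbsent(protect(token), k -> new HashSet<>()).add(rowId);
        }
    }

    // On query: protect the keyword the same way and look it up.
    // This sketch only accepts keywords of exactly TOKEN_LEN characters.
    Set<Long> search(String keyword) {
        if (keyword.length() != TOKEN_LEN) {
            throw new IllegalArgumentException("keyword must have " + TOKEN_LEN + " characters");
        }
        return new HashSet<>(mapping.getOrDefault(protect(keyword), Collections.emptySet()));
    }

    public static void main(String[] args) throws GeneralSecurityException {
        EncryptedFuzzyIndex idx = new EncryptedFuzzyIndex("demo-key-only".getBytes(StandardCharsets.UTF_8));
        idx.index(1L, "13812345678");
        idx.index(2L, "13998765432");
        System.out.println(idx.search("1381")); // [1]
        System.out.println(idx.search("8765")); // [2]
    }
}
```

The token length is a trade-off. Shorter tokens allow shorter search keywords but make the table larger and leak more about the plaintext. Longer keywords could be supported by intersecting the row sets of their tokens and then decrypting the candidates to confirm each match.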

- Common Python Magic Methods: A Guide to Python Magic Methods

weixin_39603505

python magic methods common

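Many people say they don't know what to do after learning the Python basics. Whether you learned from w3c or from Liao Xuefeng's tutorial, these tutorials share one trait: they only get you started quickly and leave out a lot of fundamental Python material, and the parts you never learned will confuse you later when you work on projects. Take the article I want to recommend below: set aside a day to read it patiently and type out all the code. --Update at 11:33-- Many people asked for my study notes, so before the magic methods guide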

- Unity Notes 32: UI Framework (Implementation)

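Centralized resource allocation. Singleton template:

public class Singleton<T> where T : class // "class" constrains T to a reference type
{
    private static T _singleton; // the singleton instance
    public static T Instance
    {
        get
        {
            if (_singleton == null)
            {
                // As a singleton it needs a constructor, but a public one won't do; it must be private
                // But if the constructor is private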

- Unity Advanced Study Notes: Message Framework

Raine_Yang

unity study notes unity game engine c# singleton pattern generics

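1. Why use a message framework. A small game may not need any framework at all; its scripts can simply talk to each other directly. For example, to reduce the player's health when they are attacked, the player controller sends a message to the health-bar script. But direct communication makes the relationships between scripts extremely complex, with every script tied to several others, which makes maintenance and updates very difficult. With the manager classes from the previous lesson, we can put each category of scripts under one manager: the player's features under a player manager, and UI components such as the health bar and inventory under a UI manager. This way, to reduce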
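The original framework is C# for Unity. The Java sketch below illustrates only the decoupling idea the paragraph describes: senders and receivers share a message name, not references to each other. The class name and the `"PlayerHurt"` message are hypothetical. A real Unity version would also deal with typed payloads and unsubscribing when objects are destroyed.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Minimal message center: scripts communicate through message names
// instead of holding references to each other.
final class MessageCenter {
    private final Map<String, List<Consumer<Object>>> listeners = new HashMap<>();

    void subscribe(String message, Consumer<Object> handler) {
        listeners.computeIfAbsent(message, k -> new ArrayList<>()).add(handler);
    }

    void unsubscribe(String message, Consumer<Object> handler) {
        List<Consumer<Object>> handlers = listeners.get(message);
        if (handlers != null) {
            handlers.remove(handler);
        }
    }

    // Deliver the payload to every handler registered for this message.
    void send(String message, Object payload) {
        List<Consumer<Object>> registered = listeners.getOrDefault(message, Collections.emptyList());
        // Iterate over a copy so a handler may unsubscribe while being called.
        List<Consumer<Object>> handlers = new ArrayList<>(registered);
        for (Consumer<Object> handler : handlers) {
            handler.accept(payload);
        }
    }

    public static void main(String[] args) {
        MessageCenter center = new MessageCenter();
        int[] health = {100};
        // The "health bar" listens; it never references the player class.
        center.subscribe("PlayerHurt", damage -> health[0] -= (Integer) damage);
        // The "player" sends; it never references the health bar.
        center.send("PlayerHurt", 10);
        System.out.println(health[0]); // 90
    }
}
```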

- [Unity Notes 01] A Simple UI Framework Based on the Singleton Pattern

UIManager using the singleton pattern:

using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class UIManager
{
    private static UIManager _instance;
    public Dictionary pathDict;
    public Dictionary prefabDict;
    public Di

- The History of Android

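Personal study notes. Android is an open-source operating system based on the Linux kernel. It is used mainly in mobile devices such as smartphones, tablets, and TVs, and its development is led by Google and the Open Handset Alliance. In August 2005 Google acquired and funded it; HTC manufactured the first Android phone. In Q1 2011, Android's global market share surpassed Symbian, making it number one worldwide. By Q4 2013, Android phones held 78.1% of the global market. In 2019, Google officially announced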

- Features of noexcept in C++ with Code Examples

码事漫谈

c++ c++ programming language

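Contents: 1. As an exception specifier 2. As an operator 3. Performance optimization 4. Exception safety; Summary. 1. As an exception specifier: noexcept can be placed after a function declaration or definition to state that the function does not throw any exceptions. If an exception does escape the function at runtime, std::terminate() is called and the program ends immediately. Characteristics: No compile-time enforcement: the compiler does not verify the promise, so a noexcept function that calls code which may throw still compiles (at most, a compiler may warn about a throw that is guaranteed to reach the noexcept boundary). Runtime termination: if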

- [Linux Command Encyclopedia] The Ultimate Guide to Linux Security Modules (LSM): SELinux and AppArmor in Practice

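Security alert: 90% of Linux systems do not configure mandatory access control correctly! Mastering these techniques can defend against 95% of privilege-escalation attacks! This article includes 100+ policy examples, 25 permission flowcharts, and complete enterprise-grade security solutions. Preface: Why is LSM the last line of defense for system security? In an increasingly complex attack environment, these are the core security challenges we face: emergency protection against zero-day vulnerabilities, defense against container-escape attacks, limiting lateral movement, and compliance audit requirements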

- Notes on Front-End Performance Optimization

星辰大海1412

notes

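1. How to make the first load faster: first-screen load optimization 2. How to make subsequent loads faster: cache optimization 3. How to make interactions smooth: rendering optimization 4. How to keep animations fluid: splitting long tasks. 2.1 First-screen load metrics in detail: 1. FP (First Paint) 2. FCP (First Contentful Paint); between FP and FCP it is mainly the SPA's JavaScript executing, and if that is too slow, the blank-screen time gets too long 3. FMP (First Meaningful Paint), mainly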

- A Personal Tech Showcase Website on GitHub Pages

Rubix-Kai

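Summary: "weirufish.github.io" is a personal tech website hosted on GitHub Pages, which may include a personal profile, project showcases, blog posts, and more. The site may be built with Markdown, HTML, and CSS, providing a platform to demonstrate technical skills and share study notes and insights. In addition, "weirufish.github.io-master" may be the project's main branch or version. The site pays special attention to style design, making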

- [ESP32-IDF Notes] 08: SD (SDMMC) Card Configuration and Usage

@Hwang

ESP32-IDF notes #ESP32 #ESP32-IDF #ESP32S3 SD card SDMMC

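Contents: Configuring the environment; SDMMC host driver; Overview; Supported speed modes; Using the SDMMC host driver; Configuring bus width and frequency; Configuring GPIOs; DDR mode for eMMC chips; Related documents; API reference; Header files; Functions: initialize the SDMMC host peripheral; initialize the given slot of the SDMMC peripheral; select the bus width used for data transfer; get the bus width configured for data transfer; set the card clock frequency; enable or disable DDR mode for the SD interface; enable or disable the always-on card clock; send data; enable IO interrupts; wait for an IO interrupt; disable the SDMMC host and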

- AWS Database Migration: Database Week at the AWS Loft

dnc8371

database big data mysql java python

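aws database migration. These are my notes: https://databaseweekoctober2019sf.splashthat.com Databases on AWS: the right tool for the right job. I did not take in-depth notes in many of these talks; I focused on the key points. PostgreSQL ranks after MySQL. 8 types of databases on AWS: relational, key-value, document, in-memory, graph, search, time series, ledger. Search: AWS Database Services. For relational, they have Ama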

- Modern C++ Containers: In-Depth Analysis and Practice

mxpan

c++ c++ programming language

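1. Linear containers: std::array and std::forward_list. 1. std::array: an efficient fixed-size container. In traditional C++, choosing between a raw array and vector is often a dilemma: raw arrays lack safety checks, while vector carries dynamic-resizing overhead. std::array, introduced in C++11, combines the strengths of both. Features: its size is fixed at compile time and its memory is contiguous, with access as efficient as a C array; it provides iterators, size(), empty(), and other standard interfaces, and it works with STL algorithms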

- Deep Learning: A Simple Fruit Classification Network

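Below is a complete pipeline for implementing a fruit classification model from scratch in Python, including data preparation, model construction, training, and deployment. It does not rely on any deep learning framework and uses only NumPy for the numerical computation. 1. Data preparation and preprocessing: first prepare a fruit image dataset, split it into two classes, good fruit and bad fruit, and preprocess it:

import os
import numpy as np
from PIL import Image
from sklearn.model_selection import train_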

- Matrix Inversion (Java) via Elementary Row Operations

qiuwanchi

matrix inversion (JAVA)

package gaodai.matrix;

import gaodai.determinant.DeterminantCalculation;

import java.util.ArrayList;

import java.util.List;

import java.util.Scanner;

/**

* Matrix inversion (elementary row operations)

* @author 邱万迟

*
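The original class is cut off after its header. Here is a separate, self-contained sketch of the technique its title names: Gauss-Jordan elimination on the augmented matrix [A | I], using only the three elementary row operations (swap, scale, add a multiple of one row to another). It does not reproduce the original `gaodai.matrix` code, and the class and method names are hypothetical.

```java
// Matrix inversion by elementary row operations (Gauss-Jordan on [A | I]).
final class GaussJordanInverse {

    static double[][] invert(double[][] a) {
        int n = a.length;
        // Build the augmented matrix [A | I].
        double[][] aug = new double[n][2 * n];
        for (int i = 0; i < n; i++) {
            if (a[i].length != n) {
                throw new IllegalArgumentException("matrix must be square");
            }
            System.arraycopy(a[i], 0, aug[i], 0, n);
            aug[i][n + i] = 1.0;
        }
        for (int col = 0; col < n; col++) {
            // Partial pivoting: choose the row with the largest |entry| in this column.
            int pivot = col;
            for (int r = col + 1; r < n; r++) {
                if (Math.abs(aug[r][col]) > Math.abs(aug[pivot][col])) {
                    pivot = r;
                }
            }
            if (Math.abs(aug[pivot][col]) < 1e-12) {
                throw new ArithmeticException("matrix is singular");
            }
            // Row operation 1: swap two rows.
            double[] tmp = aug[col];
            aug[col] = aug[pivot];
            aug[pivot] = tmp;
            // Row operation 2: scale the pivot row so the pivot becomes 1.
            double p = aug[col][col];
            for (int j = 0; j < 2 * n; j++) {
                aug[col][j] /= p;
            }
            // Row operation 3: subtract multiples of the pivot row from every other row.
            for (int r = 0; r < n; r++) {
                if (r != col && aug[r][col] != 0.0) {
                    double factor = aug[r][col];
                    for (int j = 0; j < 2 * n; j++) {
                        aug[r][j] -= factor * aug[col][j];
                    }
                }
            }
        }
        // The left half is now I, so the right half holds A^{-1}.
        double[][] inv = new double[n][n];
        for (int i = 0; i < n; i++) {
            System.arraycopy(aug[i], n, inv[i], 0, n);
        }
        return inv;
    }

    public static void main(String[] args) {
        double[][] inv = invert(new double[][] {{4, 7}, {2, 6}});
        // Expected (up to rounding): [[0.6, -0.7], [-0.2, 0.4]]
        System.out.println(java.util.Arrays.deepToString(inv));
    }
}
```

Partial pivoting is not strictly required for exact arithmetic, but with `double` it avoids dividing by tiny pivots. A pivot below the tolerance means the matrix is singular and has no inverse.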

- JDK timer

antlove

java jdk schedule code timer

1. java.util.Timer.schedule(TimerTask task, long delay): run the task once after the given delay (in milliseconds)

2. java.util.Timer.schedule(TimerTask task, Date time): run the task at the specified time

3. java.util.Timer.schedule(TimerTask task, long delay, long period
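The following sketch exercises the three `schedule` overloads listed above. Each task counts down a `CountDownLatch`, so the runs can be observed. The class name and the 5-second safety timeout are illustrative choices.

```java
import java.util.Date;
import java.util.Timer;
import java.util.TimerTask;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Exercises Timer.schedule(task, delay), schedule(task, time),
// and schedule(task, delay, period).
final class TimerDemo {

    // A TimerTask whose only job is to count down the given latch.
    static TimerTask countDown(CountDownLatch latch) {
        return new TimerTask() {
            @Override
            public void run() {
                latch.countDown();
            }
        };
    }

    // Returns true if all expected runs happened within 5 seconds each.
    static boolean demo(long delayMs, long periodMs, int repeats) {
        Timer timer = new Timer("demo-timer", true); // daemon thread: won't keep the JVM alive
        CountDownLatch afterDelay = new CountDownLatch(1);
        CountDownLatch atTime = new CountDownLatch(1);
        CountDownLatch periodic = new CountDownLatch(repeats);

        // 1. schedule(task, delay): run once after delayMs milliseconds.
        timer.schedule(countDown(afterDelay), delayMs);
        // 2. schedule(task, time): run once at the given Date.
        timer.schedule(countDown(atTime), new Date(System.currentTimeMillis() + delayMs));
        // 3. schedule(task, delay, period): first run after delayMs, then every periodMs.
        timer.schedule(countDown(periodic), delayMs, periodMs);

        try {
            return afterDelay.await(5, TimeUnit.SECONDS)
                    && atTime.await(5, TimeUnit.SECONDS)
                    && periodic.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            timer.cancel(); // discard any remaining scheduled executions
        }
    }

    public static void main(String[] args) {
        System.out.println(demo(100, 200, 3)); // true once every run has happened
    }
}
```

All tasks of one `Timer` share a single background thread. A slow task therefore delays the others, and an uncaught exception in any task kills the timer. `ScheduledExecutorService` is the usual alternative when that matters.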

- JVM Tuning Summary: -Xms -Xmx -Xmn -Xss

coder_xpf

jvm application server

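Heap size settings. The maximum heap size in the JVM is limited by three things: the data model of the operating system (32-bit or 64-bit), the system's available virtual memory, and the system's available physical memory. On 32-bit systems the limit is generally 1.5 GB to 2 GB; 64-bit operating systems do not impose this data-model limit. On Windows Server 2003 with 3.5 GB of physical memory and JDK 5.0, the largest value I could set in testing was 1478m.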

Typical settings:

java -Xmx
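To check which heap limits a running JVM actually received from `-Xms`/`-Xmx`, you can ask `Runtime` directly. The sketch below is illustrative, and the class name is hypothetical. Run it with, for example, `java -Xms256m -Xmx1024m HeapSettings`.

```java
// Prints the heap limits the running JVM actually got.
final class HeapSettings {

    static long toMiB(long bytes) {
        return bytes / (1024 * 1024);
    }

    public static void main(String[] args) {
        Runtime rt = Runtime.getRuntime();
        // maxMemory(): the most the heap may grow to (roughly -Xmx);
        // it can be a little lower than -Xmx with some collectors.
        System.out.println("max heap   (MiB): " + toMiB(rt.maxMemory()));
        // totalMemory(): heap currently reserved (starts near -Xms).
        System.out.println("total heap (MiB): " + toMiB(rt.totalMemory()));
        // freeMemory(): unused part of the currently reserved heap.
        System.out.println("free heap  (MiB): " + toMiB(rt.freeMemory()));
    }
}
```

This is a quick way to confirm whether a value such as the 1478m mentioned above was accepted, without reading JVM logs.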

- Connecting to a Database with JDBC

Array_06

jdbc

package Util;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.sql.Statement;

public class JDBCUtil {

// complete

- Unsupported major.minor version 51.0 (JDK Version Error)

oloz

java

java.lang.UnsupportedClassVersionError: cn/support/cache/CacheType : Unsupported major.minor version 51.0 (unable to load class cn.support.cache.CacheType)

at org.apache.catalina.loader.WebappClassL
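Major version 51 means the class file was compiled for Java 7. The error appears when that class is loaded by an older JVM, for example the one running Tomcat here. The sketch below reads the version straight from a class file's header: the 4-byte magic number `0xCAFEBABE`, followed by `minor_version` and `major_version` as unsigned 16-bit values. It also maps the major version to a Java release. The class and method names are hypothetical.

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;

// Reads the class-file version that UnsupportedClassVersionError reports.
final class ClassVersion {

    // For Java 5 and later: Java version = major - 44
    // (49 -> 5, 50 -> 6, 51 -> 7, 52 -> 8, ..., 61 -> 17).
    static int javaVersionFor(int major) {
        return major - 44;
    }

    // Class file header: u4 magic (0xCAFEBABE), u2 minor_version, u2 major_version.
    static int majorVersion(InputStream in) throws IOException {
        DataInputStream data = new DataInputStream(in);
        if (data.readInt() != 0xCAFEBABE) {
            throw new IOException("not a class file");
        }
        data.readUnsignedShort();        // minor_version (ignored here)
        return data.readUnsignedShort(); // major_version
    }

    public static void main(String[] args) throws IOException {
        // Read this class's own .class file when it is on the classpath.
        try (InputStream in = ClassVersion.class.getResourceAsStream("ClassVersion.class")) {
            if (in == null) {
                System.out.println("class file not found on the classpath");
                return;
            }
            int major = majorVersion(in);
            System.out.println("major " + major + " -> Java " + javaVersionFor(major));
        }
    }
}
```

The usual fixes are to run the server on a JDK at least as new as the compiler, or to compile for the older target (for example `javac --release 6` on newer JDKs, or `-source`/`-target` on older ones).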

- Processing One List with Multiple Threads

362217990

multithreading thread list collection

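Yesterday I posted a question about starting 5 threads to process the contents of one List and then concatenating the results of the 5 threads. Since I was short on time, I wrote a demo myself; I hope it is a useful reference for beginners.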

import java.util.ArrayList;

import java.util.List;

import java.util.concurrent.CountDownLatch;

public c
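The original demo is cut off. Here is an independent sketch of the same idea, using the `CountDownLatch` the original imports. The list is split into one contiguous chunk per thread, and each thread writes its piece into its own array slot, so no locking is needed. The main thread waits on the latch and then joins the pieces in order. "Processing" is plain concatenation here, and the names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;

// Split one List across N threads, then join the partial results in order.
final class ParallelListJoin {

    static String process(List<String> items, int threads) throws InterruptedException {
        String[] parts = new String[threads];              // one slot per thread, no sharing
        CountDownLatch done = new CountDownLatch(threads);
        int chunk = (items.size() + threads - 1) / threads; // ceiling division

        for (int t = 0; t < threads; t++) {
            final int index = t;
            final int from = Math.min(t * chunk, items.size());
            final int to = Math.min(from + chunk, items.size());
            new Thread(() -> {
                try {
                    StringBuilder sb = new StringBuilder();
                    for (String s : items.subList(from, to)) {
                        sb.append(s);                      // the per-item "work"
                    }
                    parts[index] = sb.toString();
                } finally {
                    done.countDown();                      // signal even if the work failed
                }
            }).start();
        }

        // await() also makes the workers' writes to parts[] visible here.
        done.await();
        StringBuilder result = new StringBuilder();
        for (String part : parts) {
            result.append(part);
        }
        return result.toString();
    }

    public static void main(String[] args) throws InterruptedException {
        List<String> items = new ArrayList<>();
        for (int i = 1; i <= 10; i++) {
            items.add(String.valueOf(i));
        }
        System.out.println(process(items, 5)); // 12345678910
    }
}
```

Because each thread owns a contiguous chunk and its own slot, the result keeps the original order no matter which thread finishes first.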

- Simple Database Access from JSP

香水浓

sql mysql jsp

Learning to use JavaBeans; the code is rough and is only here as a record.

public class DBHelper {

private String driverName;

private String url;

private String user;

private String password;

private Connection connection;

privat

- Adding Bar Charts, Pie Charts, and Other Charts with Components in Flex 4

AdyZhang

Flex

1. Add the simplest bar chart


<?xml version="1.0"

- Android 5.0: ProgressBar Cannot Be Displayed in Front of a Button

aijuans

android

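On versions below SDK 21, a ProgressBar can be displayed in front of a button, centered on it. But after switching to Android 5.0, the display order changed: the button is now in front and the ProgressBar behind it. My XML layout is as follows: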


<RelativeLa

- An SQL Query for Aggregation

baalwolf

sql

select list.listname, list.createtime,listcount from dream_list as list , (select listid,count(listid) as listcount from dream_list_user group by listid order by count(

- The Difference Between the Linux du and df Commands

BigBird2012

linux

1. The difference between the two

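du (disk usage) calculates the size of each file by walking the files and then adds the sizes up. The files du can see are only those that currently exist and have not been deleted; the size it reports is the sum of the sizes of all files it currently considers to exist.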
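The two viewpoints can be reproduced in Java as a rough illustration. The du-like side walks the tree and sums the sizes of the files it finds. The df-like side asks the file system how much of its space is allocated. Note one simplification: this sketch sums apparent sizes, which is closer to `du --apparent-size`, whereas plain `du` counts allocated blocks. The class and method names are hypothetical.

```java
import java.io.IOException;
import java.nio.file.FileStore;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.stream.Stream;

// du-like: walk the tree and add up the sizes of files that exist now.
// df-like: ask the file system itself how much space is in use.
final class DuVsDf {

    static long duBytes(Path dir) throws IOException {
        try (Stream<Path> paths = Files.walk(dir)) {
            return paths.filter(Files::isRegularFile)
                        .mapToLong(p -> p.toFile().length()) // apparent size in bytes
                        .sum();
        }
    }

    static long dfUsedBytes(Path anyPathOnFs) throws IOException {
        FileStore store = Files.getFileStore(anyPathOnFs);
        return store.getTotalSpace() - store.getUnallocatedSpace();
    }

    public static void main(String[] args) throws IOException {
        Path dir = Paths.get(args.length > 0 ? args[0] : ".");
        System.out.println("du-style sum of file sizes: " + duBytes(dir));
        System.out.println("df-style used space on fs:  " + dfUsedBytes(dir));
    }
}
```

A classic reason the two disagree follows from the text above. A file that has been deleted while a process still holds it open is invisible to a directory walk, but its blocks stay allocated, so df still counts them.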

- $apply in AngularJS: To Use or Not to Use?

bijian1013

JavaScript AngularJS $apply

In AngularJS development, when should you call $scope.$apply(), and when should you not? Below we explain this question thoroughly.

But first, let's reduce $apply to a simplified form.

scope.$apply is like a lazy worker. It needs to be given ord

- [Zookeeper Study Notes 10] Zookeeper Source Code Analysis: ClientCnxn Data Serialization and Deserialization

bit1129

zookeeper

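ClientCnxn is the main class handling communication and event notification between the Zookeeper client and server. It contains two inner classes: 1. SendThread 2. EventThread. SendThread handles data communication between the client and the server, including the transmission of event information; EventThread mainly invokes the Watchers registered on the client to process notifications.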

ClientCnxn constructor

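On a TCP stream, the receiving side needs to know where one packet ends and the next begins. A common answer, which the ZooKeeper client also uses, is a 4-byte length prefix in front of each serialized packet. The sketch below shows that framing with plain `DataOutputStream`/`DataInputStream`. It does not use ZooKeeper's jute serialization classes, and the `Request` fields (`xid`, `type`, `path`) are a hypothetical layout chosen for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;

// Length-prefixed framing: [int length][int xid][int type][UTF path].
// The record layout is hypothetical; only the framing idea matters.
final class LengthPrefixedFrame {

    static final class Request {
        final int xid;
        final int type;
        final String path;

        Request(int xid, int type, String path) {
            this.xid = xid;
            this.type = type;
            this.path = path;
        }
    }

    static byte[] serialize(Request r) throws IOException {
        // Serialize the body first so its length is known.
        ByteArrayOutputStream body = new ByteArrayOutputStream();
        DataOutputStream out = new DataOutputStream(body);
        out.writeInt(r.xid);
        out.writeInt(r.type);
        out.writeUTF(r.path); // 2-byte length + modified UTF-8 bytes
        out.flush();

        ByteArrayOutputStream frame = new ByteArrayOutputStream();
        DataOutputStream framed = new DataOutputStream(frame);
        framed.writeInt(body.size()); // length prefix: where this packet ends
        body.writeTo(framed);
        framed.flush();
        return frame.toByteArray();
    }

    static Request deserialize(byte[] frame) throws IOException {
        DataInputStream in = new DataInputStream(new ByteArrayInputStream(frame));
        int length = in.readInt();
        byte[] body = new byte[length];
        in.readFully(body); // read exactly one packet
        DataInputStream fields = new DataInputStream(new ByteArrayInputStream(body));
        // Java evaluates arguments left to right, matching the write order.
        return new Request(fields.readInt(), fields.readInt(), fields.readUTF());
    }

    public static void main(String[] args) throws IOException {
        byte[] frame = serialize(new Request(7, 1, "/app"));
        Request back = deserialize(frame);
        System.out.println(frame.length + " bytes -> xid=" + back.xid + " path=" + back.path);
    }
}
```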

- [Java Commands 1] jmap

bit1129

Java commands

Usage of the jmap command:

[hadoop@hadoop sbin]$ jmap

Usage:

jmap [option] <pid>

(to connect to running process)

jmap [option] <executable <core>

(to connect to a

- Apache Server Security Hardening in Practice

ronin47

This article is reposted from IBM.

Introduction to the Apache Service

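A web server, also called a WWW server or HTTP server, is one of the most common and most heavily used kinds of server on the Internet. Web servers provide users with services such as web browsing and forum access.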

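Because users accessing information through a web browser no longer need to care about technical details, and the interface is very friendly, the Web, as soon as it launched on the Internet, gained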

- Unity 3D: Problems with the Position of Instantiated Objects?

brotherlamp

unity tutorial unity unity resources unity video unity self-study

Q: In Unity 3D, instantiated objects end up in the wrong position?

A: You can specify the position of the object at the moment you instantiate it, via the position parameter:

Instantiate (original : Object, position : Vector3, rotation : Quaternion) : Object

This way you no longer need to set transform.position afterwards.

If you omit the second parameter (

- "Refactoring: Improving the Design of Existing Code", Chapter 8: Duplicate Observed Data

bylijinnan

java refactoring

import java.awt.Color;

import java.awt.Container;

import java.awt.FlowLayout;

import java.awt.Label;

import java.awt.TextField;

import java.awt.event.FocusAdapter;

import java.awt.event.FocusE

- Changing the struts.xml Configuration Directory in Struts 2

chiangfai

struts.xml

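By default, Struts 2 reads its configuration file from the classes directory. To move the configuration file elsewhere, for example under WEB-INF, write the path as ../struts.xml (not /WEB-INF/struts.xml).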

Modify web.xml as follows:

<filter>

<filter-name>struts2</filter-name>

<filter-class>

- A Small Optimization When Using Redis as a Cache

chenchao051

redis hadoop pipeline

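Recently a job on our cluster needed to hit the cache frequently within a short time, more than 700 million times in total. Our cache is built on Redis, and that is where the problem started.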

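First, Redis held plain key-value pairs, with no use of structures such as hashes, which meant the job had to access Redis more than 700 million times. This made it slow and caused many redi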
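The cost described here is mostly network round trips, one per key. The sketch below counts round trips against an in-memory stand-in to show why batching helps. Redis offers real batching mechanisms such as `MGET` and pipelining. `FakeCache` and its methods, however, are hypothetical and are not the API of any Redis client.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Counts round trips to a fake cache: one per single GET vs one per batch.
// FakeCache is a hypothetical stand-in, not a real Redis client API.
final class BatchedCacheLookup {

    static final class FakeCache {
        private final Map<String, String> data = new HashMap<>();
        int roundTrips = 0;

        void put(String k, String v) {
            data.put(k, v);
        }

        // One network round trip per single-key GET.
        String get(String key) {
            roundTrips++;
            return data.get(key);
        }

        // One round trip for a whole batch, like MGET or a pipeline.
        List<String> multiGet(List<String> keys) {
            roundTrips++;
            List<String> values = new ArrayList<>(keys.size());
            for (String k : keys) {
                values.add(data.get(k));
            }
            return values;
        }
    }

    // Look keys up in fixed-size batches instead of one by one.
    static Map<String, String> lookupAll(FakeCache cache, List<String> keys, int batchSize) {
        Map<String, String> result = new HashMap<>();
        for (int from = 0; from < keys.size(); from += batchSize) {
            List<String> batch = keys.subList(from, Math.min(from + batchSize, keys.size()));
            List<String> values = cache.multiGet(batch);
            for (int i = 0; i < batch.size(); i++) {
                result.put(batch.get(i), values.get(i));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        FakeCache cache = new FakeCache();
        List<String> keys = new ArrayList<>();
        for (int i = 0; i < 1000; i++) {
            cache.put("k" + i, "v" + i);
            keys.add("k" + i);
        }
        for (String k : keys) {
            cache.get(k); // one by one
        }
        System.out.println("single GETs: " + cache.roundTrips + " round trips"); // 1000
        cache.roundTrips = 0;
        lookupAll(cache, keys, 100);
        System.out.println("batched:     " + cache.roundTrips + " round trips"); // 10
    }
}
```

The total work on the server is about the same. What shrinks is the number of network waits, which dominate when a job issues hundreds of millions of tiny requests.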

- Exporting MySQL Data Without the Header Row

daizj

mysql data export remove first line remove header

When you want to export some data from the database into a file without the column-name header on the first line, add the -N option.

For example, export the data with the following command:

mysql -uuserName -ppasswd -hhost -Pport -Ddatabase -e " select * from tableName" > exportResult.txt

The result is:

studentid

- PHPExcel: A Simple Getting-Started Example for Exporting Excel Sheets

dcj3sjt126com

PHPExcel phpexcel

First download the PHPExcel class files and put them in the class directory, then create an index.php file with the following content:

<?php

error_reporting(E_ALL);

ini_set('display_errors', TRUE);

ini_set('display_startup_errors', TRUE);

if (PHP_SAPI == 'cli')

die('

- Love Quotes

dcj3sjt126com

quotes

1) I love you not because of who you are, but because of who I am when I am with you. 2) No man or woman is worth your tears, and the one who is, won't

- [Repost] Activity Explained: A Translation of the Activity Documentation

e200702084

android UI sqlite configuration management network application

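An activity is usually presented to the user as a full-screen window, but you can also use it as a floating window (with a theme that sets windowIsFloating) or embed it inside another activity (using ActivityGroup). When the user leaves an activity, you can do the corresponding work in onPause(). More importantly, any changes the user made should be committed at that point (usually to a ContentPro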

- Installing the MongoDB Service on Windows 7

geeksun

mongodb

1. Download the Windows build of MongoDB, mongodb-win32-x86_64-2008plus-ssl-3.0.4.zip (the Linux build is downloaded from the same page): http://www.mongodb.org/downloads

2. Extract MongoDB to D:\server\mongodb, and under D:\server\mongodb create d

- JavaScript Magic Methods: __defineGetter__, __defineSetter__

hongtoushizi

js

Reposted from: http://www.blackglory.me/javascript-magic-method-definegetter-definesetter/

In JavaScript classes, you can use __defineGetter__ and __defineSetter__ to control how member variables are read and written.

For example, in a book class, we automatically wrap the Book's name in Chinese title marks (《》):

function Book(name){

- An Incorrect Date Format Can Prevent 304 Responses Through the nginx Proxy Cache

jinnianshilongnian

cache

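Yesterday, while consolidating the nginx configuration of several systems, I ran into a case where nginx could not return a 304 response when the nginx cache was in use. The problematic response headers: Content-Type:text/html; charset=gb2312 Date:Mon, 05 Jan 2015 01:58:05 GMT Expires:Mon , 05 Jan 15 02:03:00 GMT Last-Modified:Mon, 05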
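The headers above contrast a well-formed `Date` value with a malformed `Expires` value: it has a space before the comma and a two-digit year. HTTP/1.1 (RFC 7231) specifies the IMF-fixdate form, e.g. `Mon, 05 Jan 2015 01:58:05 GMT`. The sketch below formats and validates that form with `java.time`. It only checks the header's shape. Exactly how nginx reacts to the bad value is the author's observation and is not modeled here. The class name is hypothetical.

```java
import java.time.Instant;
import java.time.LocalDateTime;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;
import java.util.Locale;

// Formats and validates HTTP dates in IMF-fixdate form.
final class HttpDates {
    private static final DateTimeFormatter IMF_FIXDATE =
            DateTimeFormatter.ofPattern("EEE, dd MMM yyyy HH:mm:ss 'GMT'", Locale.US);

    // Always render in UTC, since HTTP dates are expressed in GMT.
    static String format(Instant instant) {
        return IMF_FIXDATE.format(instant.atOffset(ZoneOffset.UTC));
    }

    // True if the value parses as IMF-fixdate; the weekday must also
    // agree with the date, or parsing fails.
    static boolean isValid(String headerValue) {
        try {
            LocalDateTime.parse(headerValue, IMF_FIXDATE);
            return true;
        } catch (DateTimeParseException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(isValid("Mon, 05 Jan 2015 01:58:05 GMT")); // true  (the Date header)
        System.out.println(isValid("Mon , 05 Jan 15 02:03:00 GMT"));  // false (the Expires header)
        System.out.println(format(Instant.parse("2015-01-05T02:03:00Z")));
        // Mon, 05 Jan 2015 02:03:00 GMT
    }
}
```

Generating header dates from a formatter like this, instead of building the strings by hand, avoids the kind of malformed `Expires` value shown above.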

- Data Source Architectural Patterns: Row Data Gateway

home198979

PHP architecture row data gateway

Note: if this doesn't make sense to you, please don't downvote it. This article is not aimed at Java, and Java fans can skip it.

1. Concept

Row Data Gateway: an object that acts as a gateway to a single record in a data source, with one instance per row.

2. A simple Row Data Gateway implementation

To keep it easy to follow, let's start with a simple implementation:

<?php

/**

* Row data gateway class

*/

class OrderGateway {

/* Define metadata
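The PHP class above is cut off. For readers following this collection's Java examples, here is a hedged Java sketch of the same pattern, not a translation of the author's PHP. One `OrderGateway` instance wraps one row and knows how to insert, update, and load itself. A static `HashMap` stands in for the orders table, and the `customer`/`amount` columns are hypothetical. In Fowler's original presentation, finder methods often live in a separate finder class; here `find` is kept static for brevity.

```java
import java.util.HashMap;
import java.util.Map;

// Row Data Gateway sketch: one instance per row; the object knows how to
// persist itself. A HashMap stands in for the (hypothetical) orders table.
final class OrderGateway {
    private static final Map<Long, Object[]> TABLE = new HashMap<>(); // id -> {customer, amount}
    private static long nextId = 1;

    private Long id; // null until the row is inserted
    private final String customer;
    private double amount;

    OrderGateway(String customer, double amount) {
        this.customer = customer;
        this.amount = amount;
    }

    Long getId() { return id; }
    String getCustomer() { return customer; }
    double getAmount() { return amount; }
    void setAmount(double amount) { this.amount = amount; }

    // INSERT: assign a new id and store this row.
    void insert() {
        id = nextId++;
        TABLE.put(id, new Object[] {customer, amount});
    }

    // UPDATE: write the current field values back to the same row.
    void update() {
        if (id == null) {
            throw new IllegalStateException("row not inserted yet");
        }
        TABLE.put(id, new Object[] {customer, amount});
    }

    // Finder: load one row into a new gateway instance, or null if absent.
    static OrderGateway find(long id) {
        Object[] row = TABLE.get(id);
        if (row == null) {
            return null;
        }
        OrderGateway g = new OrderGateway((String) row[0], (Double) row[1]);
        g.id = id;
        return g;
    }

    public static void main(String[] args) {
        OrderGateway order = new OrderGateway("alice", 10.0);
        order.insert();
        order.setAmount(12.5);
        order.update();
        OrderGateway loaded = find(order.getId());
        System.out.println(loaded.getCustomer() + " " + loaded.getAmount()); // alice 12.5
    }
}
```

The gateway holds only data-access code. Business rules belong elsewhere; putting them into the gateway would turn it into the Active Record pattern instead.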

- The Purpose and Contents of Each Linux Directory

pda158

linux scripts

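1) The root directory "/": the root directory sits at the very top of the directory tree and is written as a slash (/), similar to "C:\" on the Windows operating system. It contains all the directories and files of the Fedora operating system.
2) /bin: the /bin directory, also called the binary directory, contains the binary images of important Linux commands used by both system administrators and ordinary users. It holds various executables as well as symbolic links to some executables. Common commands include cp, d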

- Building OpenJDK 7 on Ubuntu 12.04

ol_beta

HotSpot jvm jdk OpenJDK

Getting the source code

From the OpenJDK code repository (rather slow)

Install Mercurial, a version control tool: sudo apt-get install mercurial

Add the following to $HOME/.hgrc (create the file if it does not exist): [extensions] forest=/home/lichengwu/hgforest-crew/forest.py fe

- Converting Database Fields into the Fields Required by Design Documents

vipbooks

design patterns work regular expressions

Haha, I'm finally back after such a long business trip. It feels great to be home!

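As soon as the physical database model comes out of PowerDesigner, there are countless fields to change in the design document. Copying the fields from PowerDesigner into the design document one by one would be extremely painful.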

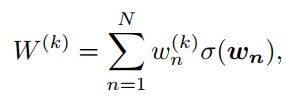

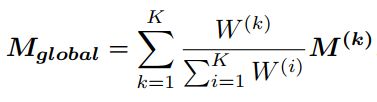

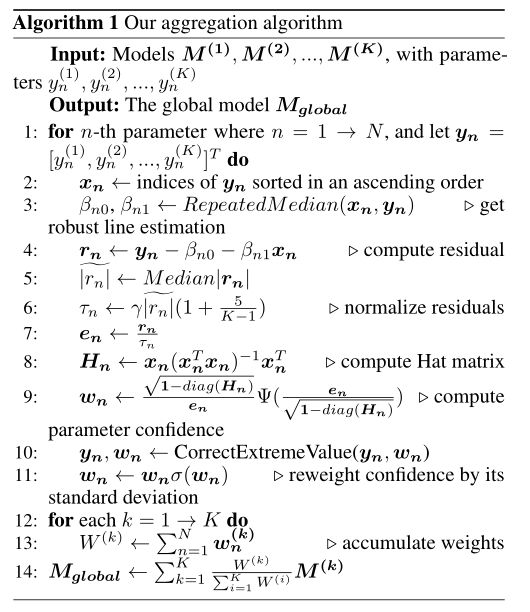

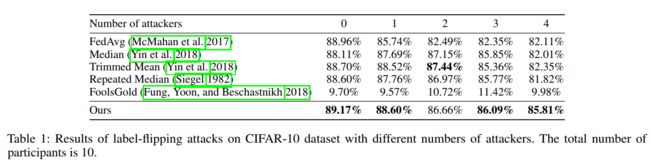


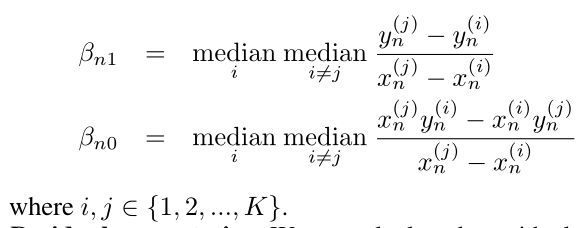

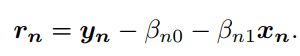

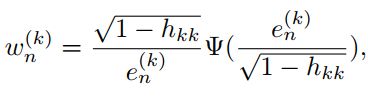

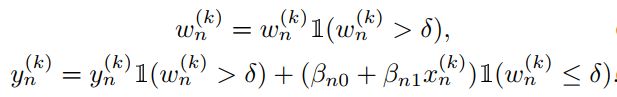

…-th parameter's residual. Because … is hard to compare across different parameters, … is standardized, where … is the …-th parameter in …; …, …; … is a hyperparameter, set to 2 in this paper; … is the confidence space, adjusted by …; and … is the …-th diagonal matrix in …. If the confidence value falls below …, it is corrected with the following formula: